Learn the key differences between generative AI and LLMs, and select the right AI tools for your business.

Introduction

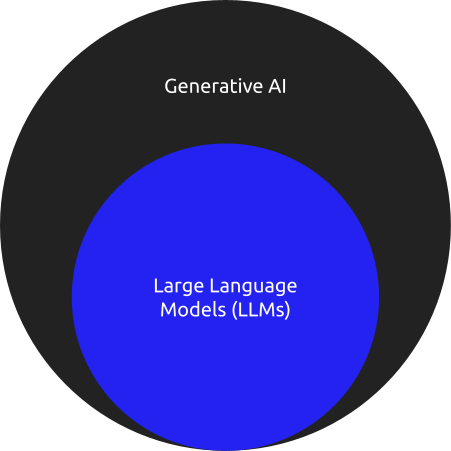

When people think of generative AI, they often immediately associate it with large language models such as OpenAI’s ChatGPT. However, while these models are significant, they represent just a portion of the broader generative AI spectrum.

LLMs are the subset of generative AI models designed specifically for linguistic tasks like text generation, question answering, and summarization. Generative AI is a more expansive category that includes diverse model architectures and data types. In short, all LLMs are generative AI, but not all generative AI models are LLMs.

What is generative AI?

Generative AI encompasses AI systems capable of producing new content across media such as text, images, audio, video, and code.

These models learn from extensive training datasets using machine learning (ML) techniques. For instance, a generative AI model built to compose music would learn from a large collection of music data. Using ML and deep learning methods to recognize patterns in that data, the system would then produce music according to user specifications.
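
To make the "learn patterns, then generate" idea concrete, here is a deliberately tiny sketch. It is not how production music models work: it assumes a simple first-order Markov chain in place of deep learning, and the training melodies and function names are made up for illustration.

```python
import random
from collections import defaultdict

def learn_transitions(melodies):
    """Record which note follows which in the training melodies."""
    transitions = defaultdict(list)
    for melody in melodies:
        for current, following in zip(melody, melody[1:]):
            transitions[current].append(following)
    return transitions

def generate(transitions, start, length, seed=0):
    """Build a new melody by repeatedly sampling a note seen after the last one."""
    rng = random.Random(seed)
    melody = [start]
    while len(melody) < length:
        options = transitions.get(melody[-1])
        if not options:  # no known continuation for this note
            break
        melody.append(rng.choice(options))
    return melody

# Hypothetical training data: three short melodies written as note names.
training_melodies = [
    ["C", "D", "E", "C"],
    ["C", "E", "G", "E"],
    ["D", "E", "G", "C"],
]
transitions = learn_transitions(training_melodies)
new_melody = generate(transitions, start="C", length=8)
print(new_melody)
```

Every step in the generated melody is a note-to-note move that appeared somewhere in the training data, which is the same principle, at a vastly smaller scale, behind models trained on large music collections.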

Types of generative AI models

Generative AI models leverage various types of ML algorithms, each possessing distinct capabilities and characteristics. Below are some of the most prevalent:

- Generative adversarial networks (GANs): first introduced in 2014, GANs pair two neural networks that compete with each other. One network, the generator, produces synthetic data, while the other, the discriminator, assesses whether a sample is real or generated. Each network is penalized for its own mistakes, the discriminator for misclassifying samples and the generator for failing to fool it, and through this feedback loop GANs learn to generate increasingly lifelike content.

- Variational autoencoders (VAEs): also introduced in 2014, VAEs use neural networks to encode and decode data. The encoder compresses input data into a compact latent representation, while the decoder reconstructs the input from that representation. Once trained, the decoder can generate new data from points sampled in the latent space, which makes VAEs useful for a variety of content generation tasks.

- Diffusion models: developed in 2015, they are widely used for image generation. During training, these models progressively add noise to input data over many steps until only random noise remains, and learn to reverse that process. To generate new data, they start from random noise and denoise it step by step. Many image generation services, like OpenAI’s DALL-E and Midjourney, combine diffusion techniques with other ML algorithms to produce highly detailed outputs.

- Transformers: introduced in 2017 to enhance language translation, they revolutionized natural language processing (NLP) by employing self-attention mechanisms. These mechanisms enable transformers to analyze large volumes of unlabeled text, identifying patterns and relationships among words or sub-words in the dataset. Transformers have facilitated the development of large-scale generative AI models, particularly LLMs, many of which rely on transformers to generate contextually relevant text.

- Neural radiance fields (NeRFs): introduced in 2020, NeRFs use neural networks to generate 3D content from 2D images. By analyzing 2D images of a scene from various perspectives, NeRFs can infer the scene’s 3D structure, enabling them to produce photorealistic 3D content. NeRFs hold promise for advancing fields such as robotics and virtual reality.
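
The noise-adding step described for diffusion models in the list above can be sketched in a few lines. This is a minimal illustration, not any particular product's implementation: it assumes a linear noise schedule and the closed-form noising formula used in later denoising diffusion work, and the function names and the four-value toy "image" are hypothetical. Only the forward (noising) direction is shown, since the reverse direction requires a trained neural network.

```python
import math
import random

def linear_beta_schedule(num_steps, beta_start=1e-4, beta_end=0.02):
    """Per-step noise variances that grow linearly (an assumed schedule)."""
    if num_steps == 1:
        return [beta_start]
    step = (beta_end - beta_start) / (num_steps - 1)
    return [beta_start + i * step for i in range(num_steps)]

def cumulative_signal(betas):
    """Running product of (1 - beta): how much of the original signal survives."""
    kept, result = 1.0, []
    for beta in betas:
        kept *= 1.0 - beta
        result.append(kept)
    return result

def add_noise(x0, alpha_bar_t, rng):
    """Jump straight to a noised sample: sqrt(a) * x0 + sqrt(1 - a) * noise."""
    signal_scale = math.sqrt(alpha_bar_t)
    noise_scale = math.sqrt(1.0 - alpha_bar_t)
    return [signal_scale * v + noise_scale * rng.gauss(0.0, 1.0) for v in x0]

rng = random.Random(0)
betas = linear_beta_schedule(1000)
alpha_bars = cumulative_signal(betas)

x0 = [1.0, -1.0, 0.5, 0.0]  # toy "image" with four pixel values
slightly_noisy = add_noise(x0, alpha_bars[0], rng)   # early step: almost unchanged
mostly_noise = add_noise(x0, alpha_bars[-1], rng)    # final step: nearly pure noise
print(slightly_noisy)
print(mostly_noise)
```

After the first step the sample is nearly identical to the input, while after the last step almost none of the original signal remains. A trained diffusion model learns to walk this path backwards, from noise to data.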

Use cases of Generative AI

Well-known generative AI tools include versatile chatbots such as OpenAI’s ChatGPT and Google Gemini (formerly Bard), image-generating platforms like Midjourney and DALL-E, code generation tools like GitHub Copilot and Amazon CodeWhisperer, and audio generation models like Google’s AudioPaLM and Microsoft’s VALL-E.

With its wide array of models and tools, generative AI finds application in numerous scenarios. Organizations leverage generative AI to craft marketing and promotional visuals, tailor output for individual users, facilitate language translation, compile research findings, summarize meeting notes, and much more. Selecting the appropriate generative AI tool hinges on aligning its capabilities with the organization’s specific objectives.

What are large language models?

LLMs, a subset of generative AI, are specialized in handling text-based content. They utilize deep learning algorithms and depend on extensive datasets to comprehend text input and generate new textual output, spanning song lyrics, social media snippets, short stories, and summaries.

As foundation models, LLMs serve as the base for much of today’s AI language comprehension and generation. Numerous generative AI platforms, such as ChatGPT, rely on LLMs to generate natural-sounding output.

To go deeper into large language models, see our blog post: What Are Large Language Models (LLMs)?

The LLM evolution

In 1966, MIT introduced the ELIZA chatbot, an early example of natural language processing (NLP). Although not a modern language model, ELIZA engaged users in dialogue by recognizing keywords in their natural language input and selecting responses from a predefined set.
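
The keyword-and-canned-response approach is simple enough to sketch. The rules below are invented for illustration and are not taken from the original ELIZA script, which used more elaborate pattern matching and response templates.

```python
# Hypothetical keyword rules, checked in order; not the original ELIZA script.
RULES = [
    ("mother", "Tell me more about your family."),
    ("sad", "I am sorry to hear you are sad."),
    ("always", "Can you think of a specific example?"),
]
DEFAULT_REPLY = "Please go on."

def reply(user_input):
    """Return the canned response for the first keyword found in the input."""
    words = {word.strip(".,!?") for word in user_input.lower().split()}
    for keyword, response in RULES:
        if keyword in words:
            return response
    return DEFAULT_REPLY

print(reply("I feel sad today."))
print(reply("Hello there"))
```

Nothing here is learned from data: every possible reply is written in advance, which is the key difference from the language models discussed in the rest of this section.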

After the first AI winter, spanning from 1974 to 1980, interest in NLP resurged in the 1980s. Progress in areas like part-of-speech tagging and machine translation improved researchers’ understanding of language structure, laying the groundwork for the development of small language models. Advancements in machine learning techniques, GPUs, and other AI-related technologies in subsequent years allowed for the creation of more sophisticated language models capable of handling complex tasks.

In the 2010s, researchers explored the potential of generative AI models in earnest. Deep learning, GANs, and transformers expanded what generative AI, including LLMs, could learn from extensive training data and improved its content generation abilities. By 2018, major tech companies had begun releasing transformer-based language models trained on vast amounts of data, hence the name large language models.

Google’s BERT and OpenAI’s GPT-1 were among the first LLMs. Since then, there has been a continuous stream of updates and new versions of LLMs, particularly since the public launch of ChatGPT in late 2022. Recent LLMs like GPT-4 offer multimodal capabilities, allowing them to work with media such as images and audio in addition to language.

Use cases of LLMs

LLMs offer a multitude of use cases and advantages. Traditional LLMs find applications in text generation, translation, summarization, content classification, text rephrasing, sentiment analysis, and conversational chatbots. Newer multimodal LLMs, such as GPT-4, expand this range further by handling tasks that involve images as well as text.

LLMs vs. generative AI: How are they different?

LLMs stand apart from other varieties of generative AI due to distinctions in their capabilities, model architectures, training data, and limitations.

Capabilities

Common capabilities of LLMs include:

- Text generation: LLMs can craft coherent and contextually relevant text across various domains, from marketing materials to fictional narratives to software code.

- Translation: While LLMs can translate text between languages, their performance may be inferior to dedicated translation models, especially for less common languages.

- Question answering: LLMs can offer explanations, simplify complex concepts, provide advice, and respond to a wide range of natural language questions, although their factual accuracy may be limited.

- Summarization: LLMs excel at condensing lengthy passages of text, identifying key arguments and information. For instance, Google’s Gemini 1.5 Pro can analyze extensive text inputs equivalent to multiple novels.

- Dialogue: LLMs simulate conversation effectively, making them suitable for applications like chatbots and virtual assistants.

Generative AI, on the other hand, encompasses a broader range of capabilities, including:

- Image generation: Models like Midjourney and DALL-E create images based on textual prompts, with some, like Adobe Firefly, capable of editing existing images by generating new elements.

- Video generation: Emerging models such as OpenAI’s Sora generate realistic or animated video clips in response to user prompts.

- Audio generation: These models produce music, speech, and other audio forms. For example, ElevenLabs’ voice generator produces spoken audio from text inputs, while Google’s Lyria model creates instrumental and vocal music.

- Data synthesis: Generative models produce artificial data that resembles real-world data, which is useful for training ML models when real data is scarce or sensitive. Synthetic data can inherit biases from its source, so caution is required, but it helps in scenarios like medical model training by reducing reliance on personal health information.
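
As a rough sketch of the data synthesis idea from the list above, the example below fits a very simple statistical model to a handful of values and samples new ones from it. A single Gaussian distribution stands in for a real generative model here, and the heart-rate values and function names are made up.

```python
import random
import statistics

def fit_gaussian(values):
    """Summarize the real data by its mean and sample standard deviation."""
    return statistics.mean(values), statistics.stdev(values)

def synthesize(values, count, seed=0):
    """Draw artificial values from the fitted distribution instead of copying real ones."""
    mean, stdev = fit_gaussian(values)
    rng = random.Random(seed)
    return [rng.gauss(mean, stdev) for _ in range(count)]

# Made-up resting heart rates standing in for sensitive patient data.
real_heart_rates = [62, 71, 68, 75, 80, 66, 73, 69]
synthetic = synthesize(real_heart_rates, count=1000)

print(statistics.mean(real_heart_rates), statistics.mean(synthetic))
```

The synthetic values follow the overall distribution of the originals without reproducing any individual record. They also inherit whatever skew the small original sample had, which is the bias concern raised above.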

Model Architecture

Today’s LLMs predominantly utilize transformers as their core architecture. Transformers leverage attention mechanisms, which excel at comprehending lengthy text passages by discerning word relationships and relative importance. It’s worth noting that transformers aren’t exclusive to LLMs; they’re also employed in other generative AI models, including image generators.
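
To show what "discerning word relationships and relative importance" means in practice, here is a minimal sketch of scaled dot-product attention in plain Python. It makes simplifying assumptions: the two-dimensional token embeddings are invented, and they are used directly as queries, keys, and values, whereas a real transformer applies learned projections and runs many attention heads in parallel.

```python
import math

def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    highest = max(scores)
    exps = [math.exp(s - highest) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def attention(queries, keys, values):
    """Scaled dot-product attention over lists of equal-length vectors."""
    scale = math.sqrt(len(keys[0]))
    outputs, all_weights = [], []
    for q in queries:
        # How strongly this token attends to every token in the sequence.
        weights = softmax([dot(q, k) / scale for k in keys])
        # Weighted mix of the value vectors.
        mixed = [
            sum(w * v[i] for w, v in zip(weights, values))
            for i in range(len(values[0]))
        ]
        outputs.append(mixed)
        all_weights.append(weights)
    return outputs, all_weights

tokens = ["the", "cat", "sat"]
embeddings = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]  # made-up toy embeddings
outputs, weights = attention(embeddings, embeddings, embeddings)

for token, row in zip(tokens, weights):
    print(token, [round(w, 3) for w in row])
```

Each row of weights says how much one token draws on every other token when its representation is updated. Because every token is compared with every other token directly, distant words can influence each other as easily as adjacent ones.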

However, some architectures common in non-language generative AI models are rarely used in LLMs. A notable example is convolutional neural networks (CNNs), primarily employed in image processing. CNNs specialize in analyzing images to identify prominent features such as edges, textures, objects, and scenes.
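
The core operation of a CNN layer, sliding a small filter across an image, can be sketched as follows. The example assumes a hand-written vertical-edge filter and a toy 4x6 image; in a trained CNN the filter values are learned from data rather than written by hand.

```python
def convolve2d(image, kernel):
    """Slide the kernel over the image (no padding) and sum the products at each position."""
    kernel_h, kernel_w = len(kernel), len(kernel[0])
    out_h = len(image) - kernel_h + 1
    out_w = len(image[0]) - kernel_w + 1
    return [
        [
            sum(
                image[r + i][c + j] * kernel[i][j]
                for i in range(kernel_h)
                for j in range(kernel_w)
            )
            for c in range(out_w)
        ]
        for r in range(out_h)
    ]

# Toy image: dark (0) on the left half, bright (1) on the right half.
image = [[0, 0, 0, 1, 1, 1] for _ in range(4)]

# Hand-written filter that responds to dark-to-bright transitions from left to right.
vertical_edge = [
    [-1, 0, 1],
    [-1, 0, 1],
    [-1, 0, 1],
]

feature_map = convolve2d(image, vertical_edge)
print(feature_map)  # [[0, 3, 3, 0], [0, 3, 3, 0]]
```

The output is zero over the flat regions and large where the filter window straddles the boundary, which is how a convolutional layer flags an edge.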

Model Training

Training data and model architecture are closely linked: the type of data a model must learn from influences which architecture and training algorithm are appropriate.

LLMs undergo training on extensive language datasets sourced from diverse origins, spanning novels, news articles, and online forums. Conversely, training data for other generative AI models can encompass various formats, such as images, audio files, or video clips, contingent on the model’s intended application.

These differences in data types lead to different training processes for LLMs and other generative AI models. For instance, data preprocessing and normalization techniques vary between an LLM and an image generator. The breadth of training data differs as well: while LLMs require comprehensive datasets to grasp fundamental language patterns, more specialized generative models need targeted training sets aligned with their specific objectives.
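
The contrast in preprocessing can be illustrated with a short sketch. It assumes whitespace tokenization and scaling pixels to the range [-1, 1], both common simplifications; production LLMs typically use subword tokenizers, and image pipelines vary in their normalization. All names below are hypothetical.

```python
def build_vocab(texts):
    """Assign an integer id to every distinct word, reserving 0 for unknown words."""
    vocab = {"<unk>": 0}
    for text in texts:
        for word in text.lower().split():
            vocab.setdefault(word, len(vocab))
    return vocab

def encode_text(text, vocab):
    """Text preprocessing: turn a sentence into a list of token ids."""
    return [vocab.get(word, vocab["<unk>"]) for word in text.lower().split()]

def normalize_pixels(pixels):
    """Image preprocessing: scale 8-bit pixel values from [0, 255] to [-1.0, 1.0]."""
    return [[value / 127.5 - 1.0 for value in row] for row in pixels]

corpus = ["the cat sat", "the dog sat"]
vocab = build_vocab(corpus)

token_ids = encode_text("the bird sat", vocab)
print(token_ids)  # [1, 0, 3]  ("bird" is not in the vocabulary)

scaled = normalize_pixels([[0, 255], [51, 204]])
print(scaled)
```

Text becomes a sequence of discrete ids drawn from a fixed vocabulary, while image data becomes a grid of continuous values in a fixed range. The two inputs call for different model input layers and different data pipelines.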

Challenges and limitations

Training any generative AI model, including LLMs, presents certain challenges, such as addressing bias and acquiring sufficiently large datasets. However, LLMs encounter some unique problems and limitations.

One significant challenge arises from the intricacy of textual data compared to other data types. Consider the vast spectrum of human language available online, ranging from technical documentation to poetic works to social media captions. This diversity poses a challenge even for advanced LLMs, as they may struggle to comprehend nuances like unfamiliar idioms or words with context-dependent meanings, leading to instances of inappropriate responses or hallucinations.

Another hurdle is maintaining coherence over extended passages. LLMs are often tasked with analyzing lengthy prompts and generating complex responses, making it challenging to ensure logical consistency throughout. While LLMs can adeptly generate high-quality short texts and understand concise prompts, they may encounter difficulties with longer inputs and outputs, risking lapses in internal logic.

These limitations are particularly concerning because errors and hallucinations in LLM output may not be immediately evident. Unlike other generative AI models, where obvious visual discrepancies may signal inaccuracies, LLM output often appears fluent and confident, potentially masking factual errors. For instance, while an image generator producing an unrealistic scene might raise immediate red flags, an LLM’s well-articulated summary of a complex scientific concept could contain subtle inaccuracies that go unnoticed, especially by individuals lacking expertise in the subject matter.

Select the Right Approach: LLM vs. Generative AI

When deciding between an LLM and another type of generative AI model, consider the following factors to find the most suitable approach for your project:

Content Type

Generative AI as a whole can produce diverse content types like images, music, and video, while LLMs are tailored for text-based tasks such as understanding language, generating text and code, translation, and textual analysis.

Data Availability

Generative AI requires specific and varied datasets relevant to the content type, whereas LLMs are optimized for extensive text data, making them ideal for projects with abundant textual resources.

Task Complexity

Generative AI is suited for complex, creative content generation tasks or scenarios requiring varied outputs. In contrast, LLMs are proficient in tasks focused on language understanding and text generation, providing accurate and coherent responses.

Model Size and Resources

Larger generative AI models demand significant computational resources and storage capacity, whereas LLMs may be more efficient for text-focused tasks due to their specialization in language processing.

Training Data Quality

Generative AI relies on high-quality and diverse training data to produce meaningful outputs, while LLMs depend on large, clean text corpora for effective language understanding and generation.

Application Domain

Generative AI finds its niche in creative fields like art, music, and content creation, while LLMs excel in natural language processing applications such as chatbots, content summarization, and language translation.

Development Expertise

Developing and fine-tuning generative AI models require expertise in machine learning and domain-specific knowledge, whereas LLMs, particularly pre-trained models, are more accessible and user-friendly for text-based tasks, requiring less specialized expertise.

Ethical and Privacy Considerations

Considering ethical implications is crucial when using AI models, especially for sensitive content. LLMs are often fine-tuned to adhere to specific ethical guidelines, offering control over model behavior.

Ultimately, your choice between Generative AI and LLMs should align with your project objectives, the content involved, and available resources. In some cases, a hybrid approach combining both Generative AI and LLMs may offer the most comprehensive solution to meet diverse project requirements.

Conclusion

To conclude, while both Generative AI and Large Language Models share the common goal of content generation, they vary significantly in their approaches, capabilities, and applications.

It’s essential to grasp these differences to leverage the appropriate technology for specific tasks and domains effectively. As AI continues to progress, both Generative AI and Large Language Models will remain essential in fostering innovation and creativity across diverse industries.

novita.ai is a one-stop platform for limitless creativity that gives you access to 100+ APIs. From image generation and language processing to audio enhancement and video manipulation, with cheap pay-as-you-go pricing, it frees you from GPU maintenance hassles while you build your own products. Try it for free.
