Novita AI LLM Inference Engine: the highest throughput and cheapest inference available

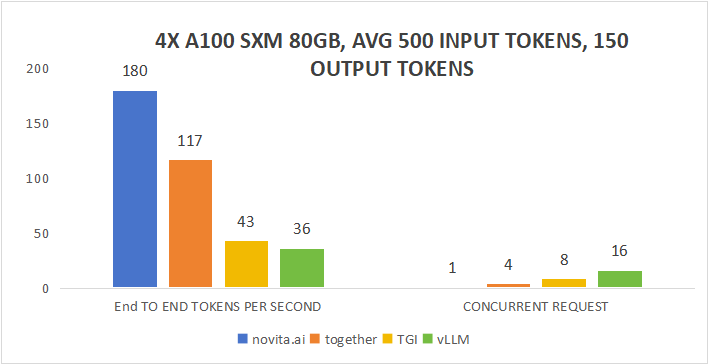

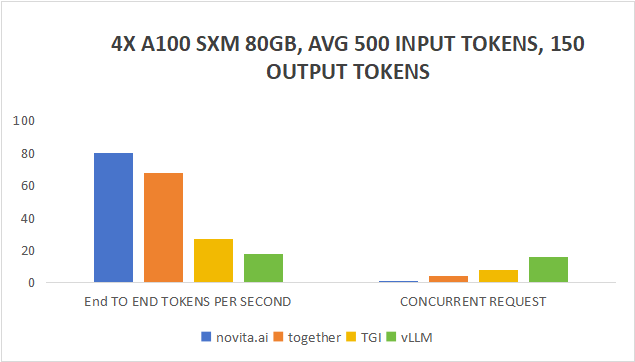

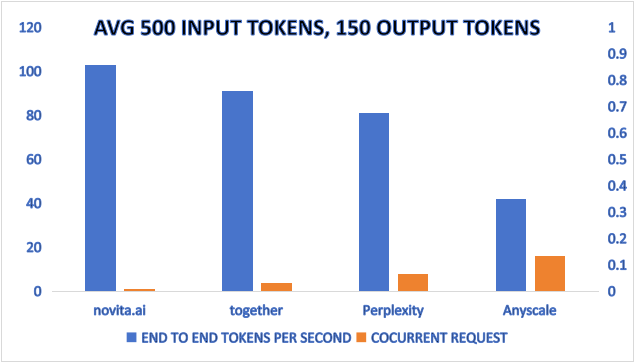

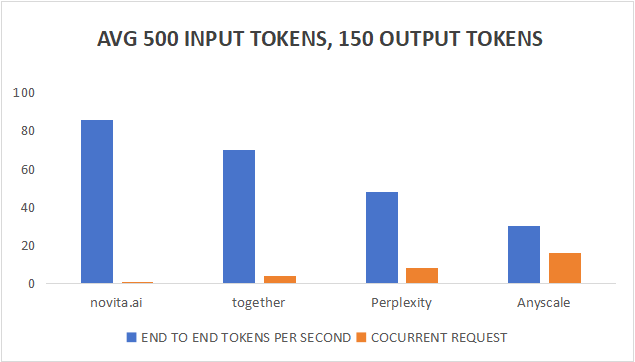

The Novita AI Inference Engine is an exceptionally fast inference service. It processes 130 tokens per second with the Llama-2-70B-Chat model and 180 tokens per second with the Llama-2-13B-Chat model. These figures indicate that the engine executes inference tasks significantly faster than the alternative services it is compared against in this post.

Introduction

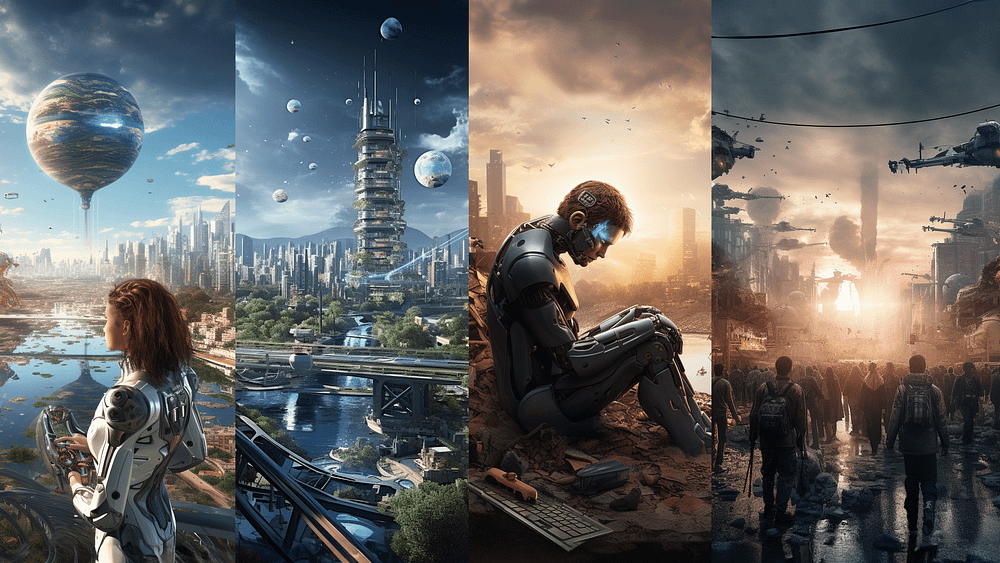

Novita AI, the promising AI engine company, is excited to announce the launch of our LLM Inference Engine, a groundbreaking advancement in generative AI technology. Designed to offer the highest throughput and the most cost-effective inference on the market, this engine is tailored to businesses and developers who want to scale their AI applications without compromising on performance or breaking the budget.

Standing at the forefront of artificial intelligence technology, novita.ai pursues innovation with its state-of-the-art LLM Inference Engine. Founded with the vision to democratize access to advanced AI capabilities, novita.ai is committed to delivering high-performance, cost-effective solutions for a diverse array of industries, including tech startups, creative agencies, and educational institutions.

Performance

novita.ai’s LLM Inference Engine delivers leading-edge performance in generative AI and is optimized for handling large volumes of data with precision. Under standard conditions, the engine handles inputs of up to 500 tokens and outputs of 180 tokens, and it can scale to a maximum output of 4096 tokens to accommodate more complex interactions.
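As a rough illustration of what the throughput figures quoted in this post mean in practice, the sketch below estimates how long a response takes to generate at a steady token rate. The function name `generation_time_seconds` is hypothetical, and the estimate assumes a constant decoding rate while ignoring network latency, queueing, and time to first token, so treat the results as approximate lower bounds rather than measured values.

```python
# Throughput figures quoted in this post, in output tokens per second.
THROUGHPUT_TOKENS_PER_SECOND = {
    "Llama-2-70B-Chat": 130.0,
    "Llama-2-13B-Chat": 180.0,
}


def generation_time_seconds(output_tokens: int, tokens_per_second: float) -> float:
    """Estimate generation time assuming a constant decoding rate.

    Hypothetical helper for illustration only. It ignores time to first
    token, network latency, and queueing.
    """
    if output_tokens < 0:
        raise ValueError("output_tokens must be non-negative")
    if tokens_per_second <= 0:
        raise ValueError("tokens_per_second must be positive")
    return output_tokens / tokens_per_second


if __name__ == "__main__":
    # Compare a typical 180-token reply with the 4096-token maximum output.
    for model, rate in THROUGHPUT_TOKENS_PER_SECOND.items():
        for tokens in (180, 4096):
            seconds = generation_time_seconds(tokens, rate)
            print(f"{model}: {tokens} tokens in about {seconds:.1f} s")
```

The same helper can be fed throughput numbers from your own benchmark runs to compare providers on the response lengths your application actually produces.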

The novita.ai Inference API compared with the Together, Perplexity, and Anyscale APIs using default LLMPerf settings

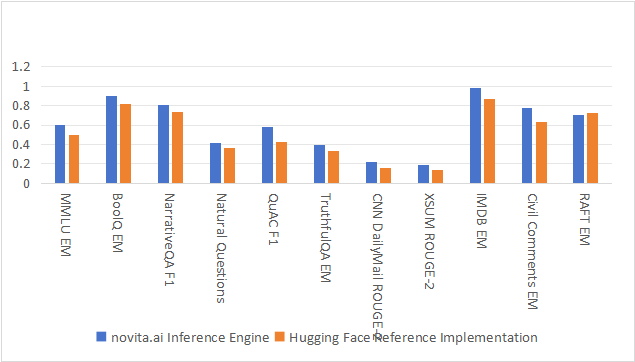

Quality

Enhancements to the novita.ai LLM Inference Engine are achieved without sacrificing the quality of output. Our optimization process eschews techniques such as quantization, which, although potentially beneficial for computational efficiency, can subtly alter the model’s behavior.

The following charts show the results of several accuracy benchmarks. novita.ai Inference achieves results in line with the reference Hugging Face implementation.

Key Features of novita.ai’s LLM Inference Engine

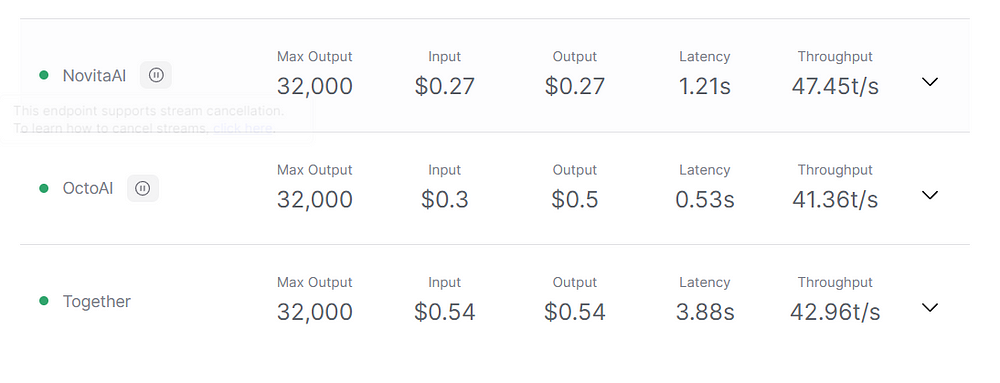

- Unprecedented Throughput: With a capability of up to 47.45 tokens per second, our LLM Inference Engine supports high-demand applications, enabling rapid response times and smoother user interactions, even during peak usage.

- Cost-Effectiveness: At just $0.20 per million tokens for both input and output, the novita.ai LLM Inference Engine stands as the most affordable option in the industry, allowing for extensive scalability at minimal cost.

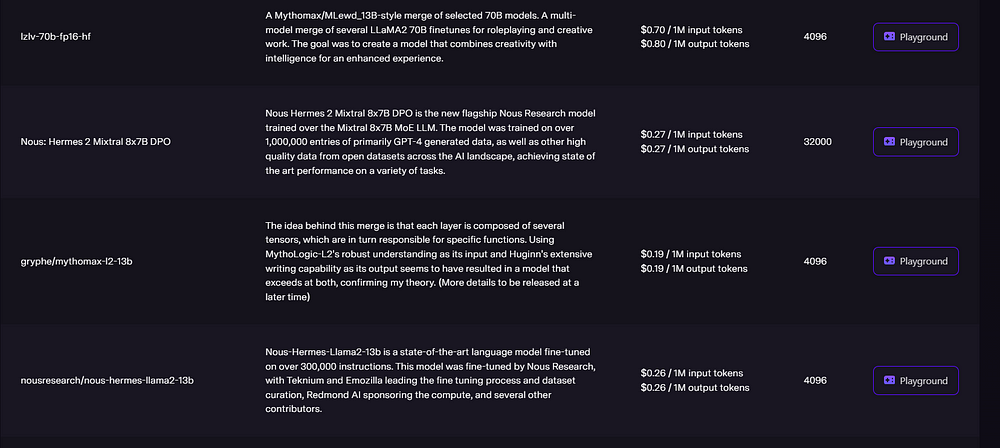

- State-of-the-Art AI Models: Incorporating advanced models like Llama 2, Nous Hermes 2 Mixtral 8x7B DPO, and MythoLogic-L2, the engine offers superior versatility and accuracy across a broad range of applications.

- Serverless Integration: Users can integrate these powerful capabilities into their systems with ease, thanks to our serverless infrastructure that eliminates the complexity of setup and maintenance.

- Ultra-Low Latency: Facilitates smooth and efficient user interactions with response times significantly lower than the industry average.

Competitive Advantages of novita.ai’s LLM Inference Engine

The Novita AI LLM Inference Engine is not only the most affordable but also the most powerful tool in its class, distinguishing itself from competitors by:

- Offering the highest throughput available today, which is critical for applications requiring instant processing and real-time analytics.

- Maintaining low costs, which democratizes access to state-of-the-art AI technologies and makes it possible for startups and smaller developers to use advanced AI tools.

- Ensuring ease of use with our plug-and-play infrastructure, which allows businesses of any size to implement our engine without prior AI deployment expertise.

Pricing Policy

In line with our commitment to accessibility and innovation, novita.ai has structured a pricing policy that reflects our dedication to providing value:

- Transparent, low-cost pricing: $0.20 per million tokens, with no hidden fees or escalating costs.

- Volume discounts: We offer competitive discounts for high-volume users, enhancing affordability for large-scale deployments.
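To make the flat rate concrete, here is a minimal sketch of a cost estimate at $0.20 per million tokens, applied equally to input and output as stated above. The function name `estimate_cost_usd` and the example workload are hypothetical, and the calculation does not model volume discounts because their terms are not given here.

```python
# Flat rate quoted in this post: the same price for input and output tokens.
PRICE_PER_MILLION_TOKENS_USD = 0.20


def estimate_cost_usd(
    input_tokens: int,
    output_tokens: int,
    price_per_million: float = PRICE_PER_MILLION_TOKENS_USD,
) -> float:
    """Estimate spend in USD for a given token volume.

    Hypothetical helper for illustration only. Volume discounts are
    not modeled.
    """
    if input_tokens < 0 or output_tokens < 0:
        raise ValueError("token counts must be non-negative")
    total_tokens = input_tokens + output_tokens
    return total_tokens * price_per_million / 1_000_000


if __name__ == "__main__":
    # Assumed workload: 10,000 requests per day, each with
    # 500 input tokens and 180 output tokens.
    requests_per_day = 10_000
    daily_cost = estimate_cost_usd(requests_per_day * 500, requests_per_day * 180)
    print(f"Estimated daily cost: ${daily_cost:.2f}")
```

Because input and output share one rate, only the total token count matters, so the estimate scales linearly with traffic.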

Get to know our pricing policy

Applications and Target Audience

The novita.ai LLM Inference Engine is ideal for a variety of applications:

- Tech Companies and Developers: Incorporate advanced AI functionalities into apps and services swiftly and affordably.

- Creative Agencies: Employ AI to generate dynamic content and engage in meaningful consumer interactions.

- Educational Institutions and Researchers: Utilize cutting-edge AI for educational tools and academic research, pushing the boundaries of innovation.

Conclusion

With the novita.ai LLM Inference Engine, we are setting new standards for affordability and performance in the AI industry. Our engine is designed to empower businesses and developers to harness the full potential of AI without the usual cost and complexity barriers. Join us as we drive forward the future of AI applications. The future is generative. With novita.ai, it’s more accessible than ever.

novita.ai is a one-stop platform for limitless creativity that gives you access to 100+ APIs, from image generation and language processing to audio enhancement and video manipulation. With cheap pay-as-you-go pricing, it frees you from GPU maintenance hassles while you build your own products. Try it for free.
