Unveiling Examples of Roles in Prompt Engineering for Developers

Explore examples of using roles in prompt engineering and see why they matter in the field.

Key Highlights

- Prompt engineering means creating and improving questions or commands to help AI models produce the results we want.

- A key prompt engineering technique is to give the AI a specific role or job, which can change how it responds and what information it provides.

- By using roles like “teacher,” “historian,” or “marketing expert,” users can get more precise and suitable answers from the AI.

- To use roles well, it is important to know what the AI model can do, make prompts clear, and improve them based on the responses we get.

- Prompt engineering with roles can be used in many fields, including education, marketing, and technical writing, and can be put into practice through LLM APIs such as those provided by Novita AI.

Introduction

Prompt engineering has become very important in the fast-changing field of artificial intelligence (AI). It involves choosing words carefully when instructing AI models so that they produce the results we want. The practice draws on ideas from natural language processing (NLP), the branch of AI, now largely driven by machine learning, that helps computers understand and work with human language. In short, prompt engineering connects what humans mean with how AI interprets it. In this blog, you will learn what using roles in prompt engineering looks like, with concrete examples.

Exploring the Essence of Prompt Engineering

Prompt engineering is crucial for getting good results from AI models. Crafting detailed instructions, or “prompts,” helps the AI interpret the nuances of a request accurately. Combining a command of language with an understanding of how the model behaves lets you get strong performance across diverse scenarios.

What is Prompt Engineering in AI Development

To get precise responses from an AI model, prompt engineering is essential. By providing clear instructions, you guide the AI effectively, improving its output quality. Understanding the AI’s capabilities and your task is crucial. Tailoring prompts based on whether you need creative text, facts, or code ensures the AI meets your requirements accurately.

The Significance of Prompt Engineering

Prompt engineering is crucial as AI plays a larger role in content creation and data analysis. Clear communication is essential to steer AI towards desired results, minimizing errors and ensuring content meets objectives. This method allows for valuable insights from AI models beyond basic responses.


What are “Roles” in Prompt Engineering

One interesting technique in prompt engineering is “role prompting,” in which users assign a specific role or persona to the AI model. This goes further than simply asking a question: it gives the AI context about what you want, which leads to better and more detailed answers.

For example, if you ask an AI for marketing advice, you can tell it to respond as “a seasoned marketing director.” This way, you are not only asking for information but also steering the AI toward a particular tone and style.
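In chat-style LLM APIs, a role is commonly set in the system message, while the user message carries the actual question. The sketch below builds such a message list for the marketing example. The helper name `role_messages`, the system prompt wording, and the sample question are illustrative assumptions; the list uses the OpenAI-style `{"role": ..., "content": ...}` format that the sample later in this post also uses.

```python
def role_messages(persona: str, question: str) -> list[dict[str, str]]:
    """Build an OpenAI-style message list that assigns a persona via the system message.

    `role_messages` is a hypothetical helper for illustration, not part of any SDK.
    """
    return [
        # The system message sets who the model should act as.
        {"role": "system", "content": f"You are {persona}. Answer in that role."},
        # The user message carries the actual request.
        {"role": "user", "content": question},
    ]


messages = role_messages(
    "a seasoned marketing director",
    "How should a small bakery promote its new seasonal menu?",
)
for m in messages:
    print(f"{m['role']}: {m['content']}")
```

Keeping the persona in the system message means the same role applies to every later user turn in the conversation, instead of being repeated in each question.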

Why Integrate Roles in Prompt Engineering?

Prompt engineering improves conversations with AI by accounting for how people communicate and process information. Setting a role in the prompt gives the AI context, which improves its responses and makes it a more effective tool for users.

Enhancing AI’s Understanding Through Role Specialization

AI interactions such as customer service benefit from assigned roles. A designated role helps the AI focus on the relevant topics and tailor its responses accordingly. For instance, an AI acting as a “museum tour guide” will offer different information than one acting as a “financial advisor.” Assigning roles this way tends to make responses more accurate and better suited to the user’s query.

Streamlining Development Processes

Integrating roles into prompts improves AI output and can also simplify development. Defining the role and context up front can reduce the time spent fine-tuning a model for each specific task, which matters especially in fast-evolving fields like generative AI. Role prompting also makes it quick to adapt to new areas: you tailor the prompt to the expertise and communication style the task requires. This flexibility helps teams build AI-powered products and services across industries faster.

Diving Deeper: Examples of Roles in Action

To demonstrate role prompting, consider this example: when writing a blog post on quantum physics, instead of asking the AI to “explain quantum physics,” prompt it to “explain quantum physics as a seasoned physics professor would to beginners.” This approach helps the AI adopt a specific role, enhancing clarity and engagement.

Real-life Examples of Roles in Prompts

Example 1. Role: Chef

- Prompt: “As a chef, please give me a simple pasta recipe.”

- Output: The model provides a detailed pasta recipe, including ingredients and steps.

Example 2. Role: Doctor

- Prompt: “As a doctor, please explain the common symptoms of high blood pressure and how to prevent it.”

- Output: The model outlines the symptoms of high blood pressure, risk factors, and prevention suggestions.

Example 3. Role: Business Consultant

- Prompt: “As a business consultant, please analyze a startup’s market entry strategy.”

- Output: The model provides market analysis, competitor assessment, and suggested entry strategies.

Example 4. Role: History Teacher

- Prompt: “As a history teacher, please explain the main causes of World War II.”

- Output: The model details the background, causes, and impacts of World War II.

Example 5. Role: Novelist

- Prompt: “As a novelist, please write the opening of a short story about time travel.”

- Output: The model generates an engaging story opening involving a time travel plot.
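All five prompts follow the same pattern, “As a {role}, please {task}.”, so they can be generated from (role, task) pairs. The helper below is a hypothetical sketch; its optional `audience` argument is an assumption modeled on the quantum physics example earlier in this section, which names both a role and an audience.

```python
from typing import Optional


def role_prompt(role: str, task: str, audience: Optional[str] = None) -> str:
    """Build a prompt in the "As a <role>, please <task>." pattern (hypothetical helper)."""
    prompt = f"As a {role}, please {task}"
    if audience:
        # Naming the audience tells the model how deep and how technical to go.
        prompt += f" for {audience}"
    return prompt + "."


examples = [
    ("chef", "give me a simple pasta recipe"),
    ("doctor", "explain the common symptoms of high blood pressure and how to prevent it"),
    ("business consultant", "analyze a startup’s market entry strategy"),
    ("history teacher", "explain the main causes of World War II"),
    ("novelist", "write the opening of a short story about time travel"),
]

for role, task in examples:
    print(role_prompt(role, task))

print(role_prompt("seasoned physics professor", "explain quantum physics", audience="beginners"))
```

Keeping the role and the task as separate fields makes it easy to swap one while holding the other fixed, which is useful when comparing how different roles change the answer.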

The Art of Crafting Effective Prompts

Crafting good prompts is crucial. Prompts should be clear and specific enough to guide the AI, yet flexible enough to allow creative and detailed responses. A skilled prompt engineer combines an understanding of language, context, and model behavior to write prompts that yield excellent answers and a better user experience.

Balancing Specificity with Flexibility

Balancing specificity and flexibility is key in prompt design. A good prompt offers clear directions and allows room for creative exploration by mixing specific instructions with open-ended questions.

For example, instead of asking for a “500-word essay on climate change,” you could prompt, “Imagine you are a climate scientist at a conference. Deliver a compelling 500-word speech on the urgent need to address climate change.” This prompt sets a role, a scenario, an objective, and a length, while leaving the AI free to decide the content and how to express it.
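One way to keep this balance explicit is to assemble the prompt from separate parts: the fixed elements (role, scenario, task, length) are spelled out, and everything else is left to the model. The `PromptSpec` class and `within_length` check below are hypothetical names for illustration, and the ±10% word-count tolerance is an assumed value. The sketch reproduces the climate scientist prompt above and shows that the specific part of a prompt is also the part you can verify mechanically.

```python
from dataclasses import dataclass


@dataclass
class PromptSpec:
    """Hypothetical container for the parts of a scenario-based role prompt."""
    role: str      # who the model should be
    scenario: str  # where it is speaking
    task: str      # what it should produce (the open-ended part)
    words: int     # the one hard constraint

    def render(self) -> str:
        # Only the fixed elements are specified; content and wording are left to the model.
        return (
            f"Imagine you are {self.role} {self.scenario}. "
            f"Deliver a compelling {self.words}-word {self.task}."
        )


def within_length(text: str, target: int, tolerance: float = 0.1) -> bool:
    """Check a response against the word-count constraint (±10% by default, an assumed tolerance)."""
    n = len(text.split())
    return abs(n - target) <= target * tolerance


spec = PromptSpec(
    role="a climate scientist",
    scenario="at a conference",
    task="speech on the urgent need to address climate change",
    words=500,
)
print(spec.render())
```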

Techniques for Iterative Prompt Refinement

Prompt engineering is a complex process that involves continuous adjustments. Testing, evaluating, and refining prompts based on AI responses is crucial for clarity and effectiveness.

- Start with a Clear Objective: Setting clear goals, tracking changes, and regularly assessing the AI’s performance against objectives are key to improving prompt quality for optimal outputs.

- Gather Feedback: Use responses from the AI to assess the effectiveness of your prompts. Analyze what works and what doesn’t.

- Test Variations: Experiment with different phrasings, structures, and levels of specificity to see which combinations yield the best results. A/B testing and analyzing response patterns help refine prompts.
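A small harness makes the “test variations” step repeatable: send each prompt variant to the model several times, score the responses against your objective, and compare the averages. In this sketch, `generate` and `score` are placeholders you would supply (for example, a call to your LLM API and a rubric check). The stand-in functions in the usage part are purely illustrative and do not call any model.

```python
from statistics import mean
from typing import Callable


def compare_variants(
    variants: dict[str, str],
    generate: Callable[[str], str],
    score: Callable[[str], float],
    trials: int = 3,
) -> dict[str, float]:
    """Return the mean score of each prompt variant over several trials.

    Repeating each prompt matters because LLM output can vary between calls.
    """
    results = {}
    for name, prompt in variants.items():
        scores = [score(generate(prompt)) for _ in range(trials)]
        results[name] = mean(scores)
    return results


# Illustrative stand-ins: a fake "model" that echoes the prompt, and a scorer that
# rewards responses aimed at the target audience. Replace both with real logic.
def fake_generate(prompt: str) -> str:
    return f"Response to: {prompt}"


def mentions_beginners(response: str) -> float:
    return 1.0 if "beginners" in response else 0.0


variants = {
    "plain": "Explain quantum physics.",
    "role": "Explain quantum physics as a seasoned physics professor would to beginners.",
}
results = compare_variants(variants, fake_generate, mentions_beginners)
best = max(results, key=results.get)
print(results, "best:", best)
```

With a real model, a larger `trials` value gives a more reliable comparison. The scorer is the part that encodes your objective, so it deserves as much care as the prompts themselves.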

Use LLM API to Gain Efficiency with Role in Prompt

You now know what role prompting is and have examples and tips to apply it. An AI API service platform can speed up your workflow. Novita AI is an AI API platform that offers a range of LLMs and related services, so you can focus on growing your application and serving your customers while the Novita team manages the LLM infrastructure.

Step-by-Step Guide to Using Novita AI LLM API

- Step 1: Create an account on Novita AI and sign in.

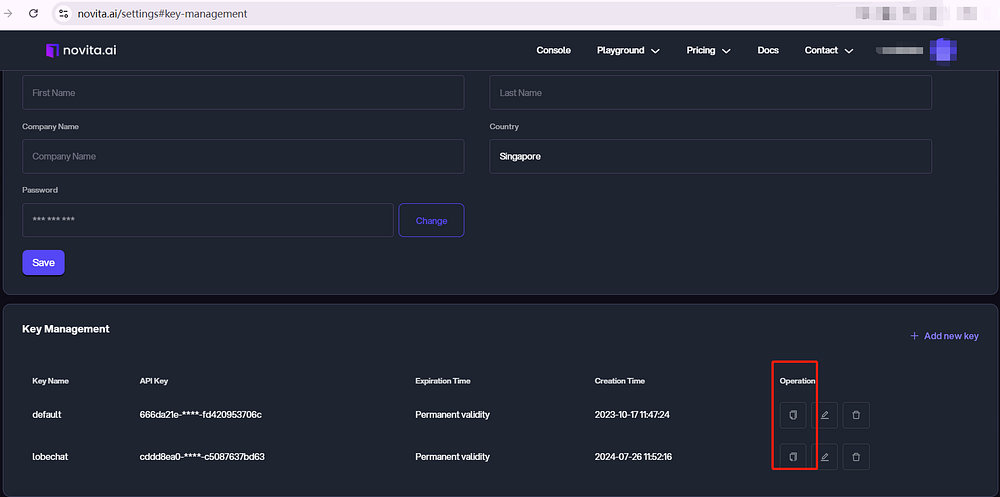

- Step 2: Obtain the API key under the Novita AI dashboard.

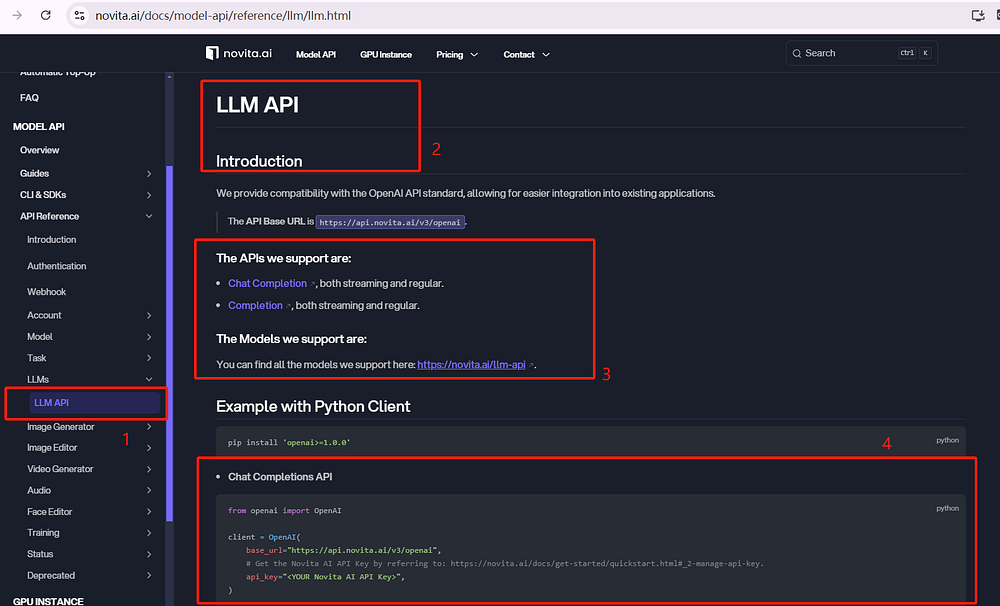

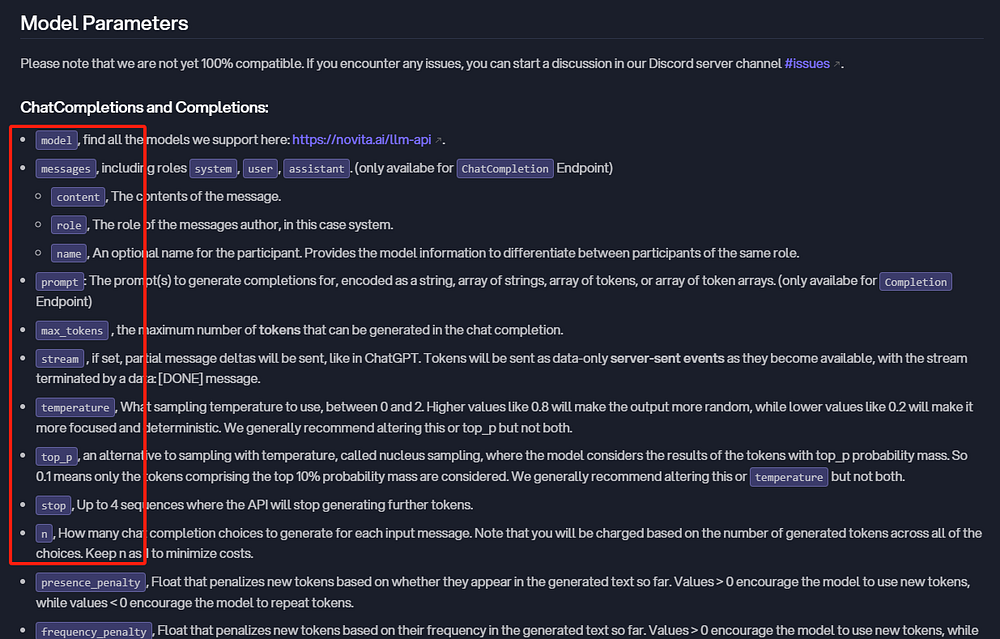

- Step 3: Navigate to the Novita AI Documentation Page and find “LLM API” under the “LLMs” tab.

- Step 4: Integrate the API key into your existing project backend to start building with the LLM API. Check the LLM API Reference to find the “APIs” and “Models” supported by Novita AI.

- Step 5: Click the models link under “the models we support are” to see the available models at the end of the page.

- Step 6: Choose the model that meets your needs. Set up your development environment and adjust the request parameters, such as each message’s role, content, and name, and write a detailed prompt.

- Step 7: Test the integration several times until it works reliably.
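For steps 2, 4, and 7 above, a common pattern is to read the API key from an environment variable instead of hard-coding it, and to retry transient failures while testing. The sketch below assumes an environment variable named `NOVITA_API_KEY` (a name chosen here for illustration, not one Novita AI requires) and uses a generic retry helper with exponential backoff; it does not call the API itself.

```python
import os
import time
from typing import Callable, TypeVar

T = TypeVar("T")


def get_api_key(var: str = "NOVITA_API_KEY") -> str:
    """Read the API key from the environment (the variable name is an assumption)."""
    key = os.environ.get(var)
    if not key:
        raise RuntimeError(f"Set the {var} environment variable to your Novita AI API key.")
    return key


def with_retries(call: Callable[[], T], attempts: int = 3, base_delay: float = 1.0) -> T:
    """Run `call`, retrying on exceptions with exponential backoff (1s, 2s, 4s, ...).

    This retries on any exception for simplicity; in practice, retry only on
    transient errors such as timeouts or rate limits.
    """
    if attempts < 1:
        raise ValueError("attempts must be at least 1")
    for attempt in range(attempts):
        try:
            return call()
        except Exception:
            if attempt == attempts - 1:
                raise  # out of attempts: surface the last error
            time.sleep(base_delay * 2 ** attempt)
    raise AssertionError("unreachable")  # the loop always returns or raises


# Usage with the client from the sample below (not executed here):
# client = OpenAI(base_url="https://api.novita.ai/v3/openai", api_key=get_api_key())
# reply = with_retries(lambda: client.chat.completions.create(model=model, messages=messages))
```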

Sample Chat Completions API

```shell
pip install 'openai>=1.0.0'
```

```python
from openai import OpenAI

client = OpenAI(
    base_url="https://api.novita.ai/v3/openai",
    # Get the Novita AI API Key by referring to: https://novita.ai/docs/get-started/quickstart.html#_2-manage-api-key.
    api_key="<YOUR Novita AI API Key>",
)

model = "Nous-Hermes-2-Mixtral-8x7B-DPO"
stream = True  # or False
max_tokens = 512

chat_completion_res = client.chat.completions.create(
    model=model,
    messages=[
        {
            # The system message is where a role or persona is assigned.
            "role": "system",
            "content": "Act like you are a helpful assistant.",
        },
        {
            "role": "user",
            "content": "Hi there!",
        },
    ],
    stream=stream,
    max_tokens=max_tokens,
)

if stream:
    # A streamed response arrives as chunks, each carrying an incremental delta.
    for chunk in chat_completion_res:
        print(chunk.choices[0].delta.content or "", end="")
else:
    print(chat_completion_res.choices[0].message.content)
```

With the API key, you can generate high-quality content with large language models. Novita AI also offers a playground for testing models.

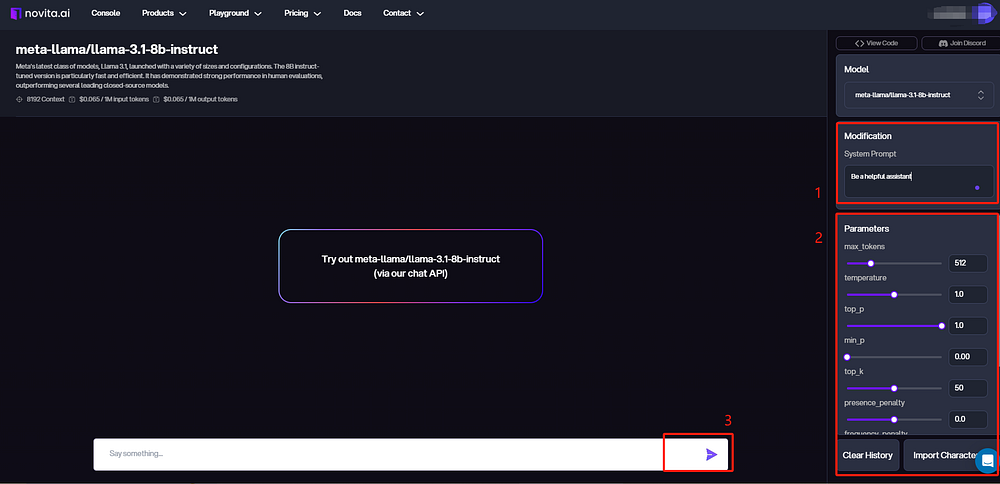

Try it on the playground.

- Step 1: Visit Novita AI and create an account.

- Step 2: After logging in, navigate to “LLM Playground” under the “Playground” tab.

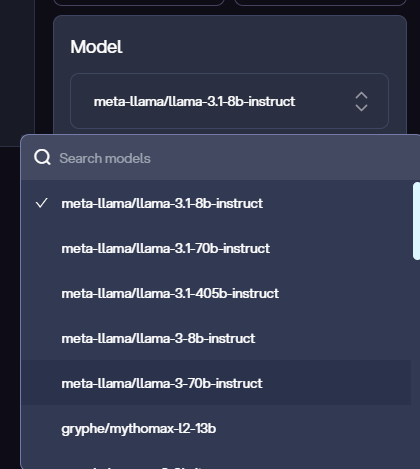

- Step 3: Select a model from the list, such as one of the Llama 3.1 models.

- Step 4: Enter the role in the “Modification” field, and set parameters such as temperature and max tokens according to your needs.

- Step 5: Click the button on the right to generate content; the response appears within a few seconds.
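The playground settings correspond to fields of a chat completions request: the role text becomes the system message, and temperature and max tokens become request parameters. The sketch below only builds the keyword arguments; passing them to `client.chat.completions.create(**kwargs)` from the sample above would send the request. The helper name is hypothetical, the model ID is copied from that sample, and the temperature value is an illustrative assumption; check the Novita AI model list for current IDs and supported ranges.

```python
def playground_to_request(
    model: str,
    role_text: str,
    user_prompt: str,
    temperature: float = 0.7,
    max_tokens: int = 512,
) -> dict:
    """Map playground-style settings onto OpenAI-compatible chat completion arguments."""
    if not 0.0 <= temperature <= 2.0:
        # 0-2 is the range used by the OpenAI API; other providers may differ.
        raise ValueError("temperature should be between 0 and 2")
    return {
        "model": model,
        "messages": [
            {"role": "system", "content": role_text},  # the role entered in the playground
            {"role": "user", "content": user_prompt},
        ],
        "temperature": temperature,  # lower values give more deterministic output
        "max_tokens": max_tokens,    # upper bound on the length of the reply
    }


kwargs = playground_to_request(
    model="Nous-Hermes-2-Mixtral-8x7B-DPO",
    role_text="You are a history teacher explaining events to high school students.",
    user_prompt="What were the main causes of World War II?",
    temperature=0.3,
)
print(kwargs["messages"][0]["content"], kwargs["temperature"])
```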

Navigating Challenges in Prompt Engineering

Prompt engineering is powerful, but it has challenges. One is the complexity of human language: it is not always easy to convey meaning precisely through text. Another is bias: AI models can pick up biases present in their training data and reproduce them in their responses.

Addressing the Complexity of Human Language

One way to address this is to use NLP techniques. Sentiment analysis and named entity recognition, for example, help reveal the tone of a text and the people, places, and organizations it mentions. Another helpful strategy is to test and refine prompts iteratively: by checking how the AI responds to different prompts, engineers can find and fix misunderstandings.

Overcoming Constraints in AI Interpretation

AI models can misinterpret vague or convoluted instructions. One way to reduce this is to use clear, simple prompts: giving explicit instructions and avoiding unnecessarily difficult wording helps the AI understand the request. Adding examples to prompts also helps, because showing the AI what a good answer looks like gives it useful context.
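In a chat API, one way to show the model what a good answer looks like is few-shot prompting: include example user/assistant exchanges before the real question. The helper below is a hypothetical sketch, and the role text and example pair are illustrative rather than taken from any model's documentation.

```python
def few_shot_messages(
    role_text: str,
    examples: list[tuple[str, str]],
    question: str,
) -> list[dict[str, str]]:
    """Build a message list: a role, then worked examples, then the real question."""
    messages = [{"role": "system", "content": role_text}]
    for user_text, ideal_answer in examples:
        # Each pair demonstrates the expected format and level of detail.
        messages.append({"role": "user", "content": user_text})
        messages.append({"role": "assistant", "content": ideal_answer})
    messages.append({"role": "user", "content": question})
    return messages


msgs = few_shot_messages(
    "You are a chef who writes short, numbered recipes.",
    [(
        "Give me a simple omelette recipe.",
        "1. Whisk 2 eggs with a pinch of salt.\n"
        "2. Cook in a buttered pan over medium heat.\n"
        "3. Fold and serve.",
    )],
    "Give me a simple pasta recipe.",
)
print([m["role"] for m in msgs])
```

Combining a role with one or two examples tends to constrain both the voice and the format of the answer, while the real question is still left open.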

Conclusion

In conclusion, prompt engineering is essential for optimizing AI interactions. In this blog, we looked at examples of using roles in prompt engineering. By crafting thoughtful prompts and assigning specific roles, users can improve the accuracy and relevance of AI responses. Refining prompts iteratively further improves output quality and communication between humans and AI. As the technology evolves, mastering prompt engineering will remain important for putting AI to work across many fields.

FAQs

What is the primary role of a prompt engineer?

A prompt engineer designs and refines the questions or instructions given to AI models, with the goal of getting the desired output from AI interactions.

How do roles in prompt engineering improve AI interactions?

Role specialization in prompt engineering gives direction and clarity to the AI model, similar to how a job title guides a person’s work. It makes the responses more relevant to what the user really needs.

Can someone without a technical background enter prompt engineering?

You don't need a technical background to start a career in prompt engineering. While technical skills can help, creativity, good language skills, and a desire to learn are just as important.

What are the challenges faced by prompt engineers today?

Engineers face challenges in balancing technical expertise and understanding human communication while considering the limits of current AI models.

Novita AI is the All-in-one cloud platform that empowers your AI ambitions. Integrated APIs, serverless, GPU Instance — the cost-effective tools you need. Eliminate infrastructure, start free, and make your AI vision a reality.
