Simplify LLM Quantization Process for Success

Simplify the LLM quantization process for success with our expert tips and guidance. Explore our blog for more insights.

Key Highlights

- Quantization makes large language models smaller by converting their weights and activations to lower-precision data types.

- This lets the models run on consumer-grade hardware without losing much performance.

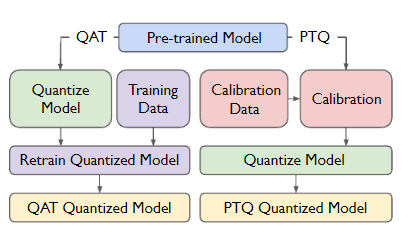

- With quantization, we see two main kinds: Post-Training Quantization (PTQ) and Quantization-Aware Training (QAT).

- Because this compression technique shrinks the model, quantized models are easier to deploy widely and can complete tasks faster.

- Related methods such as QLoRA and PRILoRA build on quantization and low-rank adaptation to make fine-tuning more efficient.

- Novita AI, an AI API platform featuring various LLMs, offers an LLM API service. Developers can also deploy models on the platform to serve them more reliably and at scale.

Introduction

In machine learning, especially with large language models (LLMs), quantization is a key step in reducing model size and increasing speed. As LLMs have evolved, their complexity has grown rapidly, and so has their number of parameters. To get smaller, faster models working well in practice, it is crucial to understand the various quantization methods and how they fit into model compression. This article explains what quantization means, why it matters, its upsides and downsides, and the common hurdles people run into while doing it, including how different data types are used to reduce the size of large language models.

Understanding the Basics of Quantization in AI

In AI, quantization reduces the precision of a neural network's parameters and calculations, enabling faster operation and lower memory use while largely preserving effectiveness. It is akin to packing efficiently for a trip: fitting the essentials into a smaller suitcase. By converting data to lower-precision formats, we shrink the model while trying to keep the resulting error small. Quantization methods such as post-training quantization and quantization-aware training let AI systems run with less computational power and memory by reducing the number of bits needed to store each value.

What is Quantization

Quantization in machine learning reduces the computational and memory demands of models so they can be deployed efficiently. Model weights and activations are represented with lower-precision data types, such as 16-bit float (FP16), 16-bit brain float (bfloat16), 8-bit integer (INT8), or even lower. Benefits include smaller model sizes, faster fine-tuning, and quicker inference, which makes quantization well suited to resource-constrained environments.
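
To make the size reduction concrete, the sketch below estimates weight storage at each precision. It is back-of-the-envelope arithmetic only: the 8-billion parameter count is an illustrative assumption, and the helper `weight_memory_gb` is a hypothetical name, not part of any library.

```python
# Bytes needed to store one parameter at each precision.
BYTES_PER_PARAM = {
    "fp32": 4.0,
    "fp16": 2.0,
    "bf16": 2.0,
    "int8": 1.0,
    "int4": 0.5,  # two 4-bit values packed into one byte
}


def weight_memory_gb(num_params: int, dtype: str) -> float:
    """Approximate weight storage in gigabytes (1 GB = 10**9 bytes).

    Ignores activations, the KV cache, and any scale or zero-point
    metadata, so real footprints are somewhat larger.
    """
    return num_params * BYTES_PER_PARAM[dtype] / 1e9


if __name__ == "__main__":
    params = 8_000_000_000  # assumed, illustrative 8B-parameter model
    for dtype in BYTES_PER_PARAM:
        print(f"{dtype}: {weight_memory_gb(params, dtype):.1f} GB")
```

Under these assumptions, moving from FP16 to INT4 cuts weight storage to a quarter, which is often the difference between needing a data-center GPU and fitting on a consumer card.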

The Role of Quantization in LLMs

Quantization is crucial to making LLMs run efficiently. Reducing the precision of a model's weights and activations lowers its computational requirements, so it can run on less powerful hardware while still delivering adequate performance. This makes it easier to use advanced language models, including larger ones, on a wider range of devices, opening up new possibilities for everyday use.

What Are the Advantages and Disadvantages of LLM Quantization?

Quantized LLMs save memory and usually run faster, but there is a catch: they may be less accurate, and some quantization schemes add overhead that can offset part of the speed gain. Finding the right balance between these upsides and downsides is key to using these models effectively.

Advantages

- Smaller models: Quantization reduces model size, so large models can be deployed on devices with more modest hardware.

- Reduced memory consumption: A lower bit width means each value takes less memory, which lowers overall memory requirements.

- Fast inference: Using lower bit widths for weights reduces memory bandwidth requirements, leading to more efficient computations.

- Increased scalability: Quantized models have a lower memory footprint, making them more scalable. This lets organizations support wider use of the models without a proportional expansion of their IT infrastructure.

Disadvantages

- Potential loss in accuracy: Quantization’s main drawback is reduced output accuracy. Converting model weights to lower precision can harm performance.

- Complex and time-consuming: Implementing model quantization requires a deep understanding of the model and its architecture, and tuning the result can take considerable time.

Exploring Different Quantization Techniques

Quantization techniques differ in how they map numbers onto a smaller set of values. Linear methods use evenly spaced levels across a fixed range, while non-linear methods allow the spacing between levels to vary. They also differ in when quantization is applied: quantization-aware training accounts for quantization during training to preserve accuracy, while post-training quantization converts the weights of an already trained model without retraining it.

Linear vs Non-Linear Quantization Methods

Quantization methods are categorized as linear or non-linear, and which one fits best depends on whether the original data is uniformly distributed. Linear quantization spaces its levels evenly, while non-linear quantization spaces them unevenly to follow the data. Model weights and activation values are usually unevenly distributed, so non-linear quantization generally loses less precision. Linear quantization is nevertheless more commonly used, because it is generally more efficient at inference time.
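
The difference can be sketched in a few lines of plain Python. This is an illustration under stated assumptions, not a production scheme: the quantile-based codebook is just one possible non-linear method, chosen here because it is easy to read, and all function names and sample weights are hypothetical.

```python
def uniform_levels(lo: float, hi: float, n_levels: int) -> list[float]:
    """Linear quantization: evenly spaced levels between lo and hi."""
    step = (hi - lo) / (n_levels - 1)
    return [lo + i * step for i in range(n_levels)]


def quantile_levels(values: list[float], n_levels: int) -> list[float]:
    """Non-linear quantization: levels placed at evenly spaced quantiles,
    so dense regions of the data receive more levels."""
    ordered = sorted(values)
    last = len(ordered) - 1
    return [ordered[round(i * last / (n_levels - 1))] for i in range(n_levels)]


def quantize_to_levels(values: list[float], levels: list[float]) -> list[float]:
    """Map every value to its nearest level."""
    return [min(levels, key=lambda q: abs(q - v)) for v in values]


def mean_squared_error(a: list[float], b: list[float]) -> float:
    return sum((x - y) ** 2 for x, y in zip(a, b)) / len(a)


if __name__ == "__main__":
    # Assumed toy weights: clustered near zero, with two large outliers.
    weights = [-1.0, -0.12, -0.1, -0.08, -0.05, -0.02, 0.0,
               0.01, 0.03, 0.06, 0.09, 0.11, 1.0]
    linear = quantize_to_levels(weights, uniform_levels(-1.0, 1.0, 4))
    nonlinear = quantize_to_levels(weights, quantile_levels(weights, 4))
    print("linear MSE:    ", mean_squared_error(weights, linear))
    print("non-linear MSE:", mean_squared_error(weights, nonlinear))
```

On clustered data like this, the non-linear codebook reproduces the values more closely. The price is a table lookup per value instead of a single multiply, which is one reason linear schemes tend to be cheaper at inference time.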

PTQ vs QAT: Two Types of LLM Quantization

Quantization techniques like PTQ and QAT can reduce LLM size and resource needs, balancing precision and performance for smooth operation on different platforms.

- Post-training quantization (PTQ) is a method that quantizes a trained model after training, reducing weights and activations from high to low precision. PTQ compresses trained weights through a weight conversion process to save memory. It’s simple to implement but doesn’t consider quantization impact during training.

- Quantization-aware training (QAT) takes the impact of quantization into account during training. The model is trained with quantization-aware operations that simulate the quantization process, which typically yields higher accuracy than PTQ.
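
The contrast between the two can be sketched for a single weight. The code below is a simplified illustration, not a training recipe: it assumes symmetric 8-bit quantization with a fixed scale, a squared-error loss, and the commonly used straight-through estimator for the gradient. All function names are hypothetical.

```python
def quantize(x: float, scale: float, qmin: int = -128, qmax: int = 127) -> int:
    """Round x / scale to the nearest integer and clamp to the integer range."""
    return max(qmin, min(qmax, round(x / scale)))


def fake_quantize(x: float, scale: float) -> float:
    """Quantize, then immediately dequantize.

    QAT-style training uses this in the forward pass so the model
    experiences rounding error while the weights stay in floating point.
    """
    return quantize(x, scale) * scale


def qat_step(w: float, x: float, y: float, scale: float, lr: float) -> float:
    """One gradient step on loss = (fake_quantize(w) * x - y) ** 2.

    Straight-through estimator: rounding is treated as the identity when
    differentiating, so the gradient flows to the float weight w.
    """
    w_q = fake_quantize(w, scale)
    grad = 2.0 * (w_q * x - y) * x
    return w - lr * grad


if __name__ == "__main__":
    # PTQ: weights are trained in float, then converted once afterwards.
    trained = [0.37, -0.81, 0.05]
    print("PTQ codes:", [quantize(w, scale=0.01) for w in trained])

    # QAT: the rounding is already present inside every training step.
    print("QAT step:", qat_step(w=0.3, x=1.0, y=0.5, scale=0.25, lr=0.1))
```

In PTQ the rounding error appears only after training has finished, so the model never gets a chance to adapt to it. In QAT every update is computed from the quantized forward pass.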

How to Quantize Your LLM

By following these steps closely and leveraging existing frameworks, you can optimize models for a range of devices while maintaining good performance and keeping model size under control.

1. Preparing Your Model for Quantization

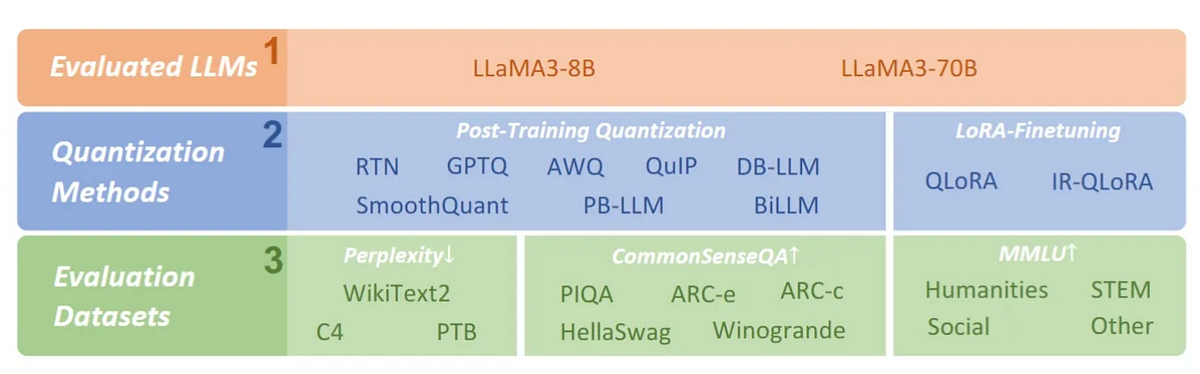

Before quantizing your large language model, make sure it is well trained on relevant data. Identify the weight tensors, which encode the strength of the connections between neurons, and reduce their size through weight quantization without compromising effectiveness. Converting weight tensors to quantized tensors saves space on resource-constrained devices. Given how widely low-bit quantization is applied to LLMs in resource-limited scenarios, the llama-3 model provided by Novita AI is a good option.

2. Choosing the Right Quantization Strategy

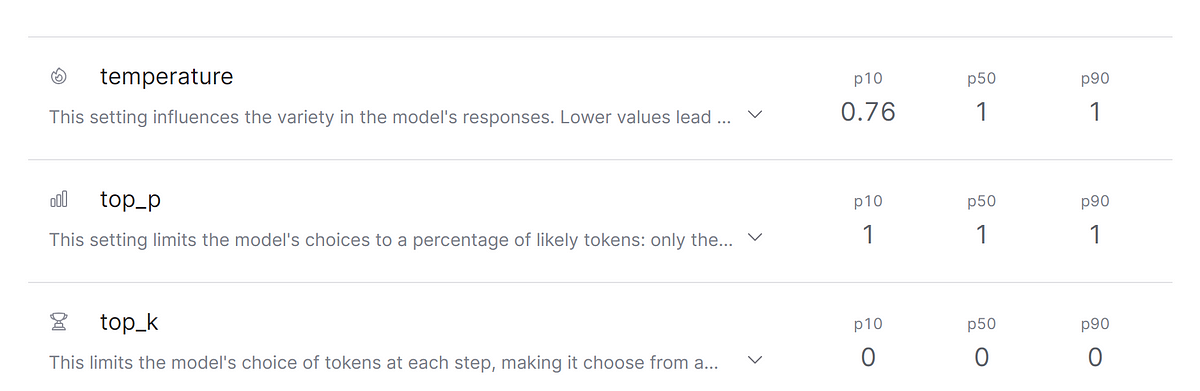

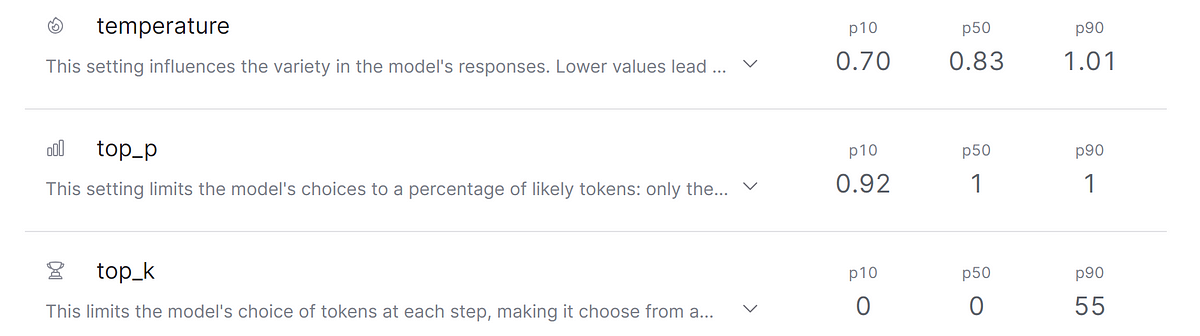

Choosing the right quantization method is crucial to optimizing your LLM. Each method has its own scheme for quantizing activations and weights. For weight quantization, llama-3 offers 8-bit and 4-bit options. The 4-bit option includes GPTQ support, which uses calibration to improve accuracy and keep performance degradation minimal. For dynamic quantization, it supports 8-bit activation quantization together with 8-bit weight quantization. Monitor performance closely to confirm that accuracy holds up and that memory usage stays within budget.
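
With only 16 levels available, many 4-bit weight schemes keep a separate scale for each small group of weights rather than one scale for the whole tensor. The sketch below shows that idea with plain round-to-nearest quantization. It is not GPTQ, which additionally uses calibration data to compensate for rounding error, and the function names and group size are illustrative assumptions.

```python
def quantize_int4_groupwise(weights: list[float], group_size: int):
    """Symmetric 4-bit quantization with one scale per group of weights.

    Returns (codes, scales); every code is an integer in [-8, 7].
    """
    codes: list[int] = []
    scales: list[float] = []
    for start in range(0, len(weights), group_size):
        group = weights[start:start + group_size]
        max_abs = max(abs(w) for w in group)
        # Map the largest magnitude in the group to the code 7.
        scale = max_abs / 7 if max_abs > 0 else 1.0
        scales.append(scale)
        codes.extend(max(-8, min(7, round(w / scale))) for w in group)
    return codes, scales


def dequantize_groupwise(codes: list[int], scales: list[float],
                         group_size: int) -> list[float]:
    """Recover approximate weights using each group's own scale."""
    return [c * scales[i // group_size] for i, c in enumerate(codes)]


if __name__ == "__main__":
    # Assumed toy tensor: the second group is 100x larger than the first.
    weights = [0.7, -0.3, 0.0, 0.1, 70.0, -30.0, 0.0, 10.0]
    codes, scales = quantize_int4_groupwise(weights, group_size=4)
    print("codes: ", codes)
    print("scales:", scales)
    print("approx:", dequantize_groupwise(codes, scales, group_size=4))
```

Smaller groups track the weights more closely but store more scales, so group size is itself a trade-off between accuracy and memory.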

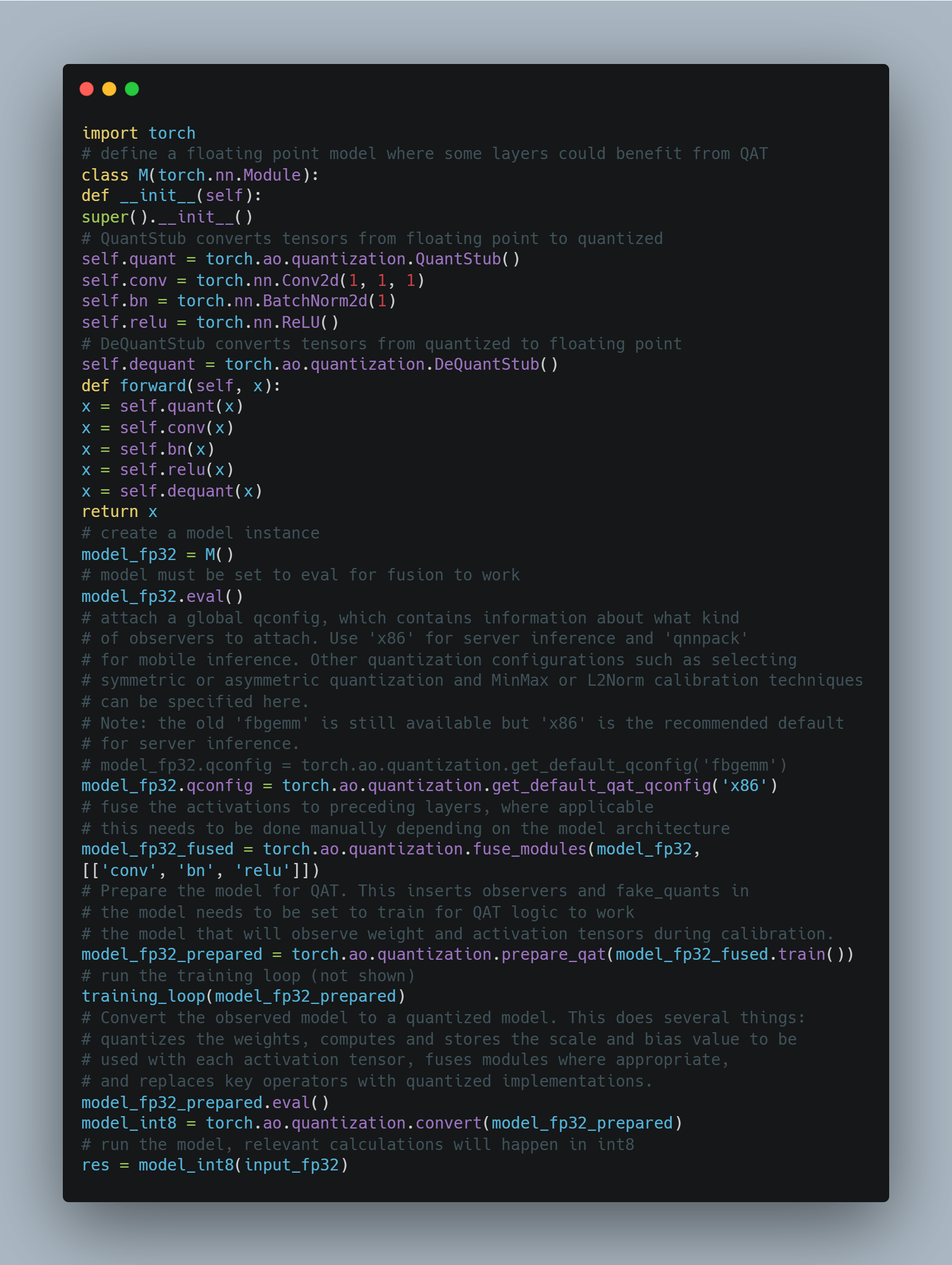

3. Preparing Necessary Data

Install the necessary libraries, such as TorchAO. Then quantize the model parameters into low-precision formats, such as INT8 or INT4, to reduce model size and inference latency. Here is some sample Python code.
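
A minimal sketch of the INT8 case, written with the Python standard library rather than TorchAO's own API so that the arithmetic stays visible. It assumes symmetric per-tensor quantization. The names `quantize_int8` and `dequantize_int8` are hypothetical, and a real library would operate on tensors and use optimized kernels.

```python
from array import array


def quantize_int8(weights: list[float]):
    """Symmetric per-tensor INT8 quantization.

    Returns (codes, scale), where codes is a compact array of signed
    bytes and codes[i] * scale approximates weights[i].
    """
    max_abs = max((abs(w) for w in weights), default=0.0)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    codes = array("b", (max(-127, min(127, round(w / scale))) for w in weights))
    return codes, scale


def dequantize_int8(codes, scale: float) -> list[float]:
    """Convert the stored integer codes back to approximate floats."""
    return [c * scale for c in codes]


if __name__ == "__main__":
    weights = [0.5, -1.27, 0.0, 1.0]  # assumed toy values
    codes, scale = quantize_int8(weights)
    as_float32 = array("f", weights)
    print("codes:", list(codes), "scale:", scale)
    print("bytes as 32-bit floats:", as_float32.itemsize * len(as_float32))
    print("bytes as int8 codes:   ", codes.itemsize * len(codes))
    print("restored:", dequantize_int8(codes, scale))
```

Each restored value differs from the original by at most half a quantization step (`scale / 2`), which is the rounding error the rest of this article refers to as accuracy loss.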

4. Implementing Quantization Using Frameworks

Finally, using a framework simplifies adding quantization to your LLM, because its tools and libraries handle much of the quantization process for llama-3 models. Platforms like Novita AI can further streamline working with quantized LLMs.

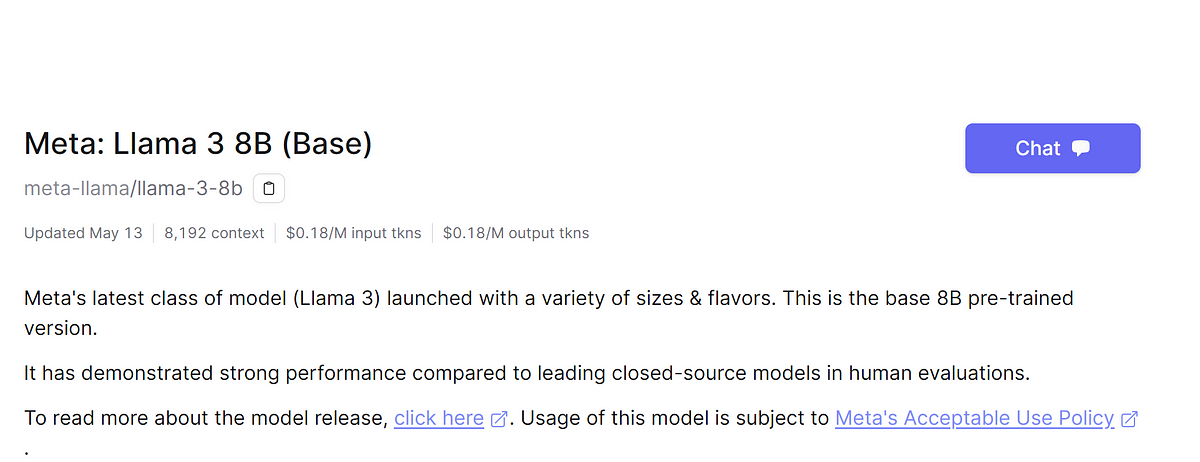

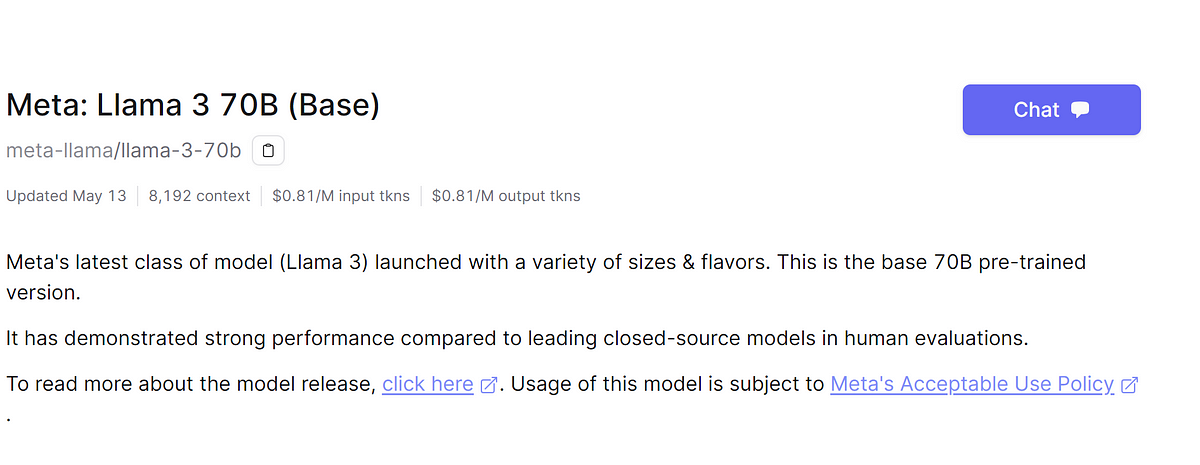

How to Use Novita AI LLM with llama-3 model

Novita AI, a user-friendly and cost-effective platform designed to cater to various AI API requirements, offers an LLM API service. Novita AI is compatible with the OpenAI API standard, making it easier to integrate into existing applications. If you would rather not handle quantization yourself, you can integrate llama-3 into your application directly through the Novita AI API.

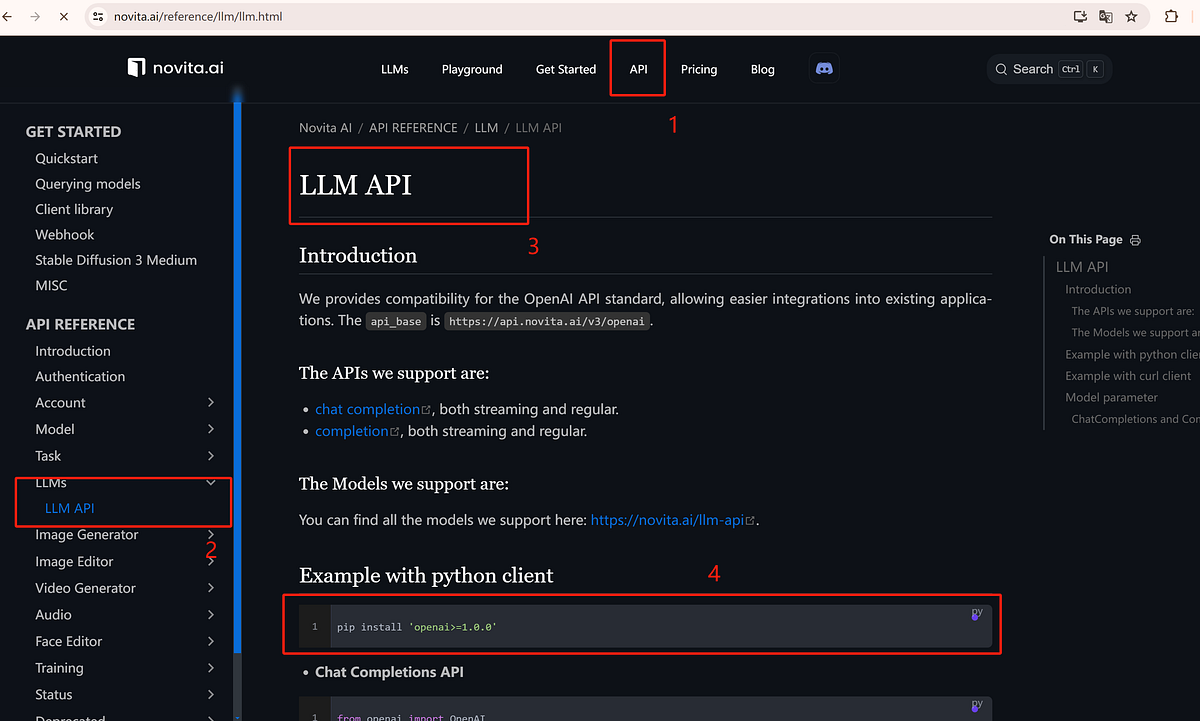

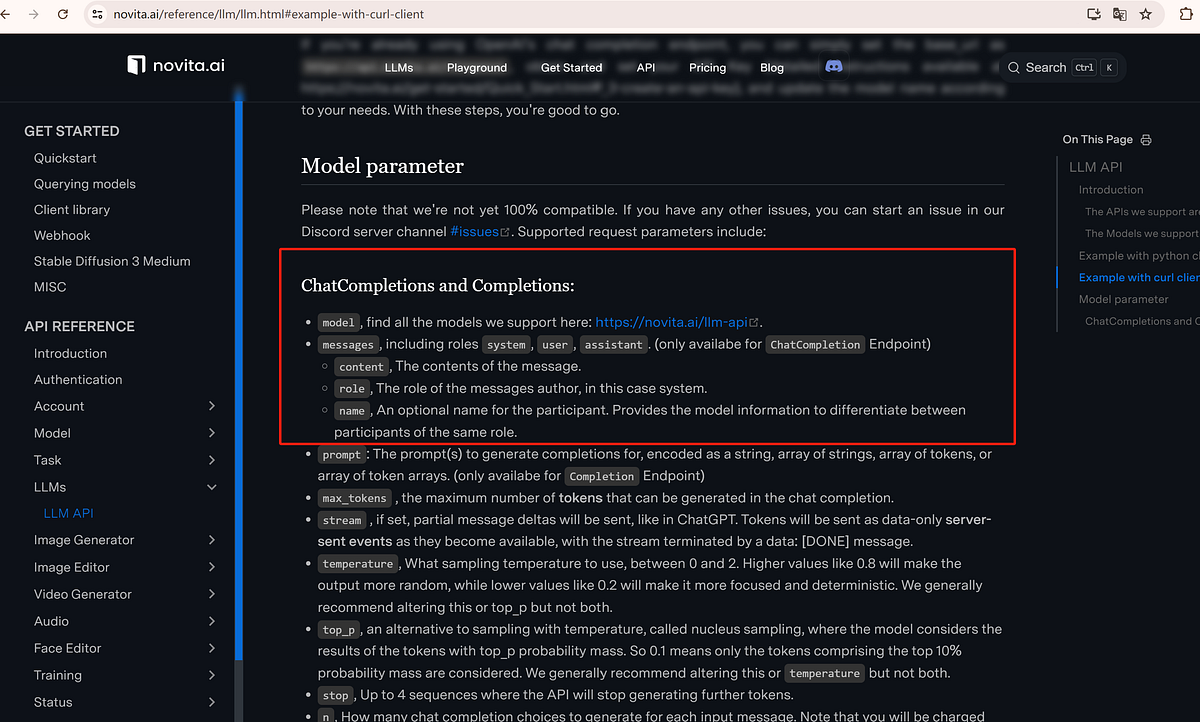

A Guide to Using LLM API with Novita AI

- Step 1: Visit Novita AI and create an account. We offer $0.5 in credits for free.

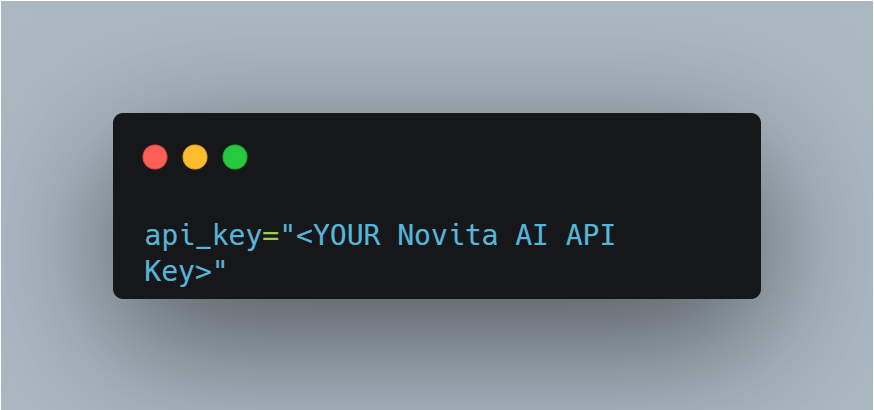

- Step 2: Create and copy your API key from your Novita AI account.

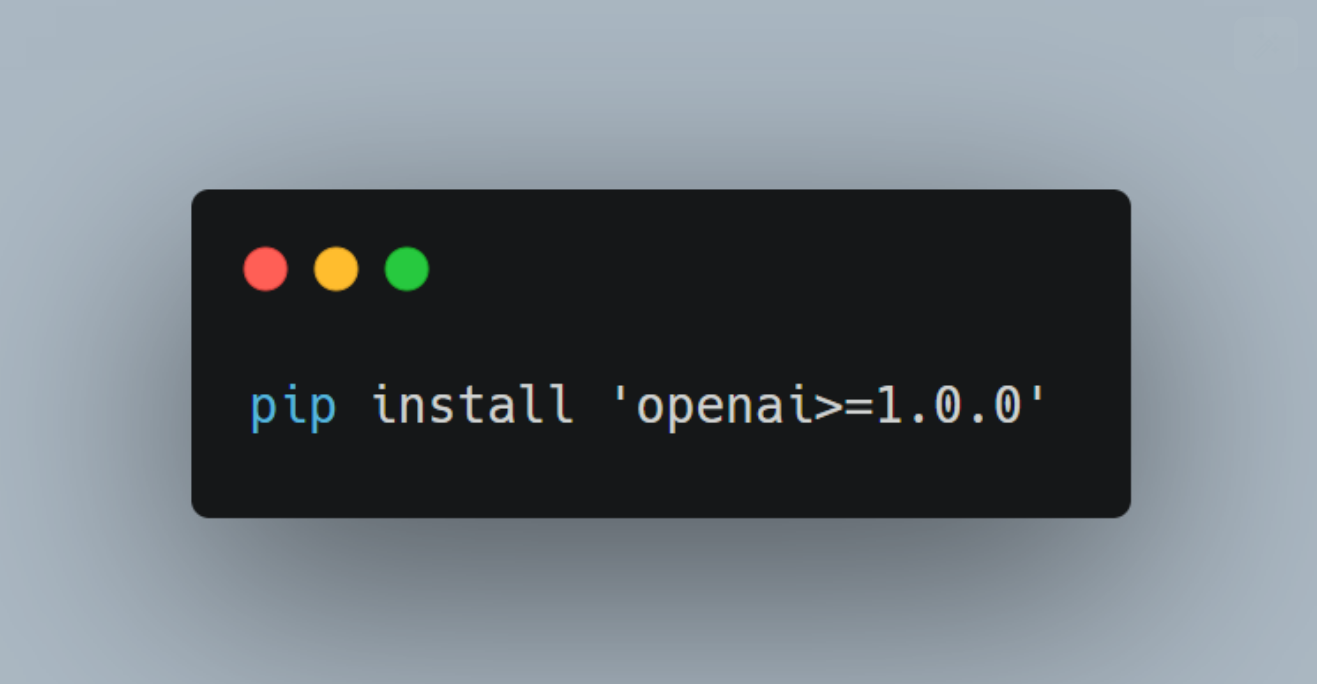

- Step 3: LLM API Installation: Navigate to API and find the “LLM” under the “LLMs” tab. Install the Novita AI API using your programming language’s package manager. For Python users, this could be a simple command like

- Step 4: After installation, import the necessary libraries into your development environment. Initialize the API with your API key to start interacting with Novita AI LLM.

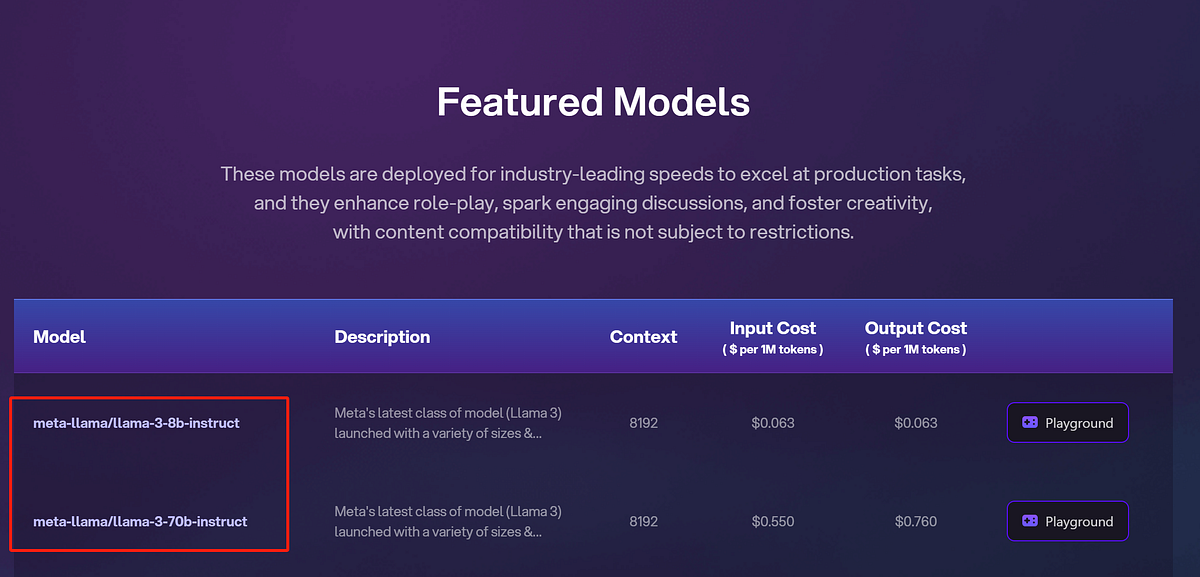

- Step 5: Deploy the llama-3 model with Novita AI API by clicking the link after “the models we support are”. We provide two llama-3 models: llama-3-8b-instruct and llama-3-70b-instruct.

- Step 6: Adjust request parameters such as messages, prompt, and max tokens to shape the model's responses. You can now use the Novita AI LLM API.

- Step 7: Test the LLM API extensively until it is ready for full implementation.

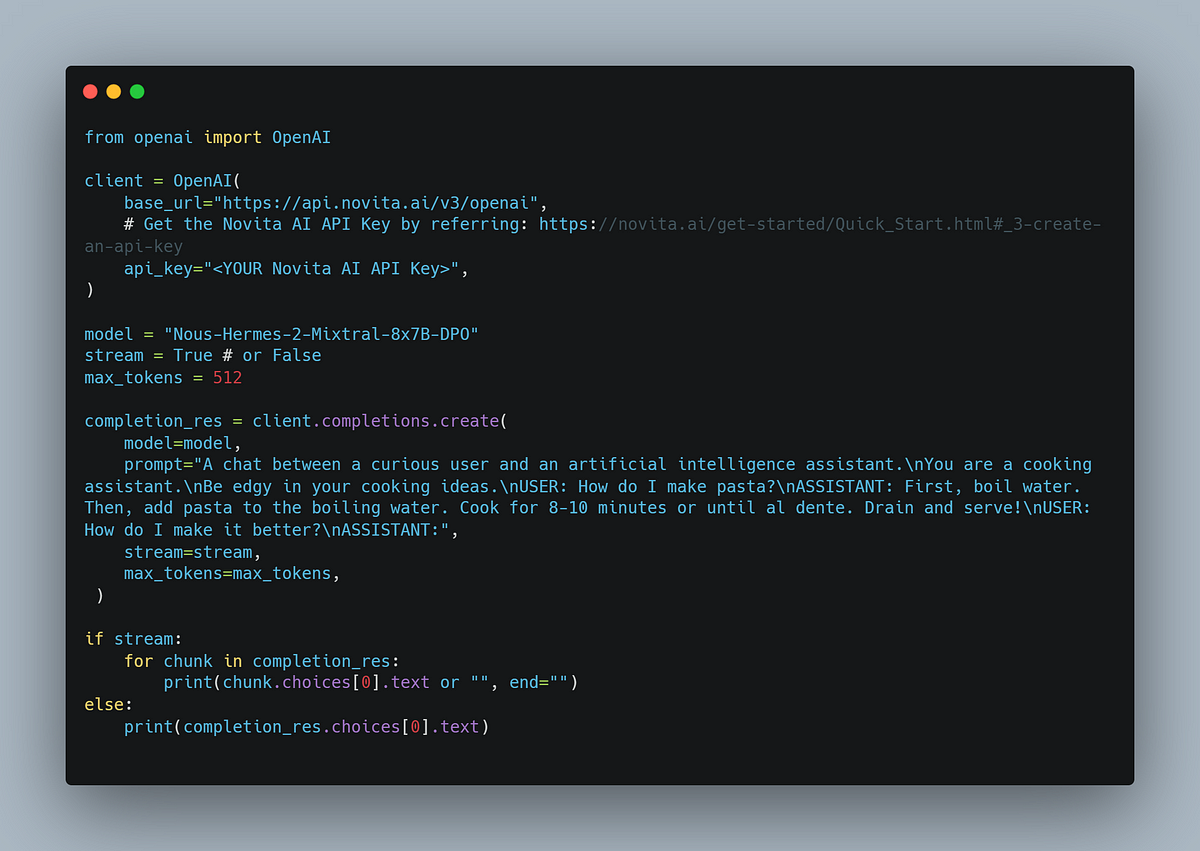

Sample Completions API
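
Since the service is described above as compatible with the OpenAI API standard, a chat completion request body would plausibly look like the sketch below. The helper name `build_chat_request` and the default token limit are hypothetical. The endpoint URL and authentication header are deliberately left out, and the exact model identifier should be checked against the Novita AI documentation.

```python
import json


def build_chat_request(model: str, user_message: str, max_tokens: int = 256) -> str:
    """Build a JSON request body in the OpenAI chat completions format.

    This only constructs the payload; sending it requires the provider's
    endpoint and an API key, which are not shown here.
    """
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": user_message}],
        "max_tokens": max_tokens,
    }
    return json.dumps(payload, indent=2)


if __name__ == "__main__":
    # Model name as listed in Step 5; the exact identifier is an assumption.
    print(build_chat_request("llama-3-8b-instruct", "What is quantization?"))
```

Because the format follows the OpenAI standard, an existing OpenAI-compatible client pointed at the provider's base URL should be able to send the same payload.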

Addressing Common Challenges in LLM Quantization

Quantization can benefit LLMs but comes with challenges. Making weights less precise may reduce model accuracy, and latency issues can still arise on low-power devices. Overcoming these challenges involves planning, selecting suitable methods, and applying techniques that preserve accuracy and prevent slowdowns.

Dealing with Accuracy Loss Post-Quantization

Quantizing an LLM can lead to accuracy loss, because rounding the model's weights to fewer bits discards information. To mitigate this, choose a quantization method suited to the model, monitor the model's performance, and use calibration techniques. Keeping the rounding error introduced by the lower-precision format as small as possible is crucial for maintaining accuracy. Strategic quantization, proper calibration, and error reduction are key to preserving a quantized model's effectiveness.
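
One simple form of calibration is to pick the clipping range that minimizes rounding error on a set of sample values. The sketch below tries several candidate ranges and keeps the best one. It assumes symmetric quantization and mean squared error as the metric, and every name in it is hypothetical. Real calibration tools typically measure error on layer outputs rather than on raw values.

```python
def quantize_dequantize(values: list[float], clip: float, n_bits: int = 8) -> list[float]:
    """Symmetric quantization to n_bits with range [-clip, clip], then back."""
    qmax = 2 ** (n_bits - 1) - 1
    scale = clip / qmax
    return [max(-qmax, min(qmax, round(v / scale))) * scale for v in values]


def mse(a: list[float], b: list[float]) -> float:
    return sum((x - y) ** 2 for x, y in zip(a, b)) / len(a)


def calibrate_clip(samples: list[float], n_bits: int = 8, n_candidates: int = 20):
    """Return (clip, error) for the candidate range with the lowest MSE.

    Candidates are fractions of the largest magnitude in the samples. The
    full range is always among them, so the result is never worse than
    plain max-abs scaling on these samples.
    """
    max_abs = max(abs(v) for v in samples)
    if max_abs == 0:
        raise ValueError("samples must contain a non-zero value")
    best_clip = max_abs
    best_err = mse(samples, quantize_dequantize(samples, max_abs, n_bits))
    for i in range(1, n_candidates):
        clip = max_abs * i / n_candidates
        err = mse(samples, quantize_dequantize(samples, clip, n_bits))
        if err < best_err:
            best_clip, best_err = clip, err
    return best_clip, best_err


if __name__ == "__main__":
    # Assumed calibration samples: many small values plus one outlier.
    samples = [0.01 * i for i in range(-50, 51)] + [2.0]
    clip, err = calibrate_clip(samples, n_bits=4)
    print(f"chosen clip: {clip:.3f}, MSE: {err:.6f}")
```

Clipping trades a larger error on rare outliers for finer resolution on the bulk of the values. Whether that trade pays off depends on the data, which is why the search may also return the full range.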

Overcoming Quantization-Induced Latency Issues

Quantized models can still suffer delays on low-power devices, for example when weights must be converted back to a higher precision before each computation or when the hardware lacks fast low-precision operations. Optimizing the quantized model through pruning and efficient memory usage is crucial for overcoming these latency issues. Reducing memory bandwidth demands in particular helps the model run more smoothly on resource-constrained devices.

Conclusion

To wrap things up, understanding how quantization works in AI, and for LLMs in particular, is key to making models smaller and faster. Those are significant benefits, but quantized LLMs can also be less accurate, and some schemes add overhead that slows them down. Picking the quantization approach that best fits your specific model helps keep it running smoothly, and established methods such as QAT and PTQ simplify the process. By exploring the different quantization techniques and tackling the usual hurdles head-on, you set yourself up for a well-performing LLM.

Frequently Asked Questions

What is the Difference Between Quantization and Sampling?

Sampling pertains to time or space intervals, whereas quantization focuses on amplitude or value resolution.

Can Quantization Be Reversed or Adjusted Post-Implementation?

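Quantization reduces the precision of model weights, and the discarded information cannot be recovered from the quantized weights alone. If the original higher-precision weights are kept, however, the model can be re-quantized later with different parameters, such as bit width or scale, to tune its performance.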

How Does Quantization Affect Model Training and Inference Time?

Quantization can speed up both fine-tuning and inference. The reduced memory footprint and computational demands of quantized models enable faster inference and better performance on resource-constrained mobile devices and embedded systems.

Are There Any Specific Models That Benefit More From Quantization?

Quantization is most useful for large models with many parameters, such as llama-3. It reduces their size, making them more manageable on everyday devices.

