Quick Start Guide: How to Use Llama 3

Introduction

Llama 3, Meta's cutting-edge, openly available large language model, is changing what developers can do with NLP. It comes in 8 billion and 70 billion parameter sizes, giving data scientists and AI enthusiasts a choice between a lighter model and a more capable one. Used in line with Meta's responsible use guide, it supports text generation, language translation, and more. Running it yourself calls for some familiarity with Python and machine learning tooling. Whether you are building data applications or creating content, a GPU cloud service such as Novita AI GPU Pods can make operating Llama 3 much easier.

What is Llama 3?

Llama 3 is a language model developed by Meta that has drawn wide attention in the NLP community. Its weights are openly available under the Meta Llama 3 Community License in 8 billion and 70 billion parameter sizes, with the 70B model being the more capable of the two. Meta pretrained it on over 15 trillion tokens of data, which underpins its text generation and translation capabilities. Running Llama 3 yourself requires some technical skill to install the necessary tools and libraries, but it opens up new possibilities for natural language understanding and generation in data science and intelligent systems.

What Makes Llama 3 Stand Out?

Llama 3 stands out for its openly available weights, which foster collaboration and innovation. The choice of 8 billion or 70 billion parameters lets you scale to your hardware and task, and each size ships as both a pretrained base model and an instruction-tuned model for chat and assistant use, making it a versatile tool in the AI landscape.

Preparing to Use Llama 3

Before diving into Llama 3's functionality, check your system requirements and setup. Understand the difference between the 8 billion and 70 billion parameter versions, and make sure the necessary Python packages are installed. Then get access to the model, either by downloading the weights from Hugging Face (which requires accepting Meta's license and using a Hugging Face access token) or by obtaining an API key for a hosted Llama 3 service. A basic understanding of how the model was trained is useful background.

System Requirements and Setup

Before diving into Llama 3, make sure your system meets its requirements. The main constraint is GPU memory: in 16-bit precision the weights alone take about 2 bytes per parameter, roughly 16 GB for the 8B model and 140 GB for the 70B model, before counting the extra memory used during inference. You also need a working Python environment with the essential packages installed. A strong technical background helps, and familiarity with GPU utilization and server deployment will make setup smoother, so review your system specifications before delving into generative AI.
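The rule of thumb behind GPU memory requirements is simply parameters × bytes per parameter. The sketch below applies it for a few common precisions; it is an estimate for the weights only, under the stated assumption that KV cache, activations and framework overhead come on top.

```python
# Rule-of-thumb estimate of how much memory the model weights need.
# Assumption: this ignores the KV cache, activations and framework overhead,
# so real VRAM usage during inference will be higher than these figures.

BYTES_PER_PARAM = {
    "fp32": 4.0,  # full precision
    "bf16": 2.0,  # 16-bit, the usual choice for GPU inference
    "int8": 1.0,  # 8-bit quantization
    "int4": 0.5,  # 4-bit quantization
}


def weight_memory_gb(num_params: float, dtype: str = "bf16") -> float:
    """Approximate size of the weights in gigabytes (1 GB = 1e9 bytes)."""
    return num_params * BYTES_PER_PARAM[dtype] / 1e9


if __name__ == "__main__":
    # Nominal sizes; the released checkpoints are slightly larger than 8B and 70B.
    for name, params in [("Llama 3 8B", 8e9), ("Llama 3 70B", 70e9)]:
        for dtype in ("bf16", "int4"):
            print(f"{name} in {dtype}: ~{weight_memory_gb(params, dtype):.0f} GB")
```

Running it prints about 16 GB and 4 GB for the 8B model, and about 140 GB and 35 GB for the 70B model, which is why quantization is a common way to fit the larger model on fewer GPUs.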

Understanding the Two Versions: 8 Billion vs. 70 Billion Parameters

Llama 3 comes in two sizes: 8 billion and 70 billion parameters. Parameter count largely determines capability: the 70B model generally gives more accurate answers and handles more complex tasks, but it needs far more compute and memory than the 8B model. Choose between them based on task complexity and the resources you have available, balancing output quality against cost.
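One way to make that choice mechanical is to pick the largest variant whose weights fit in your VRAM with some room to spare. This is a hypothetical helper, not part of any library: the Hugging Face repository IDs are the official instruction-tuned checkpoints, while the 1.2× headroom factor for the KV cache and runtime overhead is an assumed rule of thumb you should adjust.

```python
# Hypothetical helper: choose the largest Llama 3 Instruct checkpoint that fits.
# Assumptions: nominal parameter counts and a 1.2x headroom factor for the
# KV cache and runtime overhead (a rule of thumb, not an official figure).

LLAMA3_INSTRUCT = [
    # (Hugging Face repo ID, nominal parameter count), largest first
    ("meta-llama/Meta-Llama-3-70B-Instruct", 70e9),
    ("meta-llama/Meta-Llama-3-8B-Instruct", 8e9),
]


def pick_model(vram_gb: float, bytes_per_param: float = 2.0, headroom: float = 1.2):
    """Return the repo ID of the largest variant that fits, or None if neither fits."""
    for repo_id, params in LLAMA3_INSTRUCT:
        needed_gb = params * bytes_per_param * headroom / 1e9
        if needed_gb <= vram_gb:
            return repo_id
    return None


if __name__ == "__main__":
    print(pick_model(24))                       # a single 24 GB card, 16-bit weights
    print(pick_model(48, bytes_per_param=0.5))  # 48 GB with 4-bit quantization
```

With 24 GB and 16-bit weights this selects the 8B model (about 19 GB needed); with 4-bit weights the 70B model needs about 42 GB, so 48 GB is enough.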

Step-by-Step Guide to Using Llama 3

Step 1: Accessing Llama 3 Resources

To access Llama 3, you have two main options: request the weights through Meta's official Llama website or the meta-llama organization on Hugging Face, or use a hosted service such as the Novita AI LLM API. Downloading from Hugging Face requires accepting Meta's license and authenticating with a Hugging Face access token, while a hosted API requires an API key from that provider. Either way, you need a stable internet connection. The tutorials and guides on the official Llama 3 blog and GitHub repository are useful for deepening your understanding. If you plan to run the model yourself, set up a local Python environment with the Transformers library and the other necessary packages.
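Whichever route you take, it is safer to keep tokens and API keys out of your source code. The minimal sketch below reads them from environment variables; HF_TOKEN is the variable the huggingface_hub library already looks for, while NOVITA_API_KEY in the usage comment is a hypothetical name chosen for illustration.

```python
import os


def get_api_key(env_var: str) -> str:
    """Read a secret from an environment variable, failing loudly if it is unset."""
    value = os.environ.get(env_var, "").strip()
    if not value:
        raise RuntimeError(f"Environment variable {env_var} is not set")
    return value


if __name__ == "__main__":
    # Hugging Face access token (huggingface_hub also reads HF_TOKEN on its own).
    hf_token = get_api_key("HF_TOKEN")
    # Hypothetical variable name for a hosted-API key:
    # novita_key = get_api_key("NOVITA_API_KEY")
    print("Token loaded, length:", len(hf_token))
```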

Step 2: Installing Necessary Tools and Libraries

To run Llama 3 locally, start by installing the essential tools and libraries: Python, PyTorch, Hugging Face Transformers, and Accelerate (which Transformers uses to spread a large model across the available devices). Familiarize yourself with the Transformers library and its tokenizer, since you will use both to load the model and prepare its inputs. A clean, well-organized setup lays a solid foundation for the inference and training work ahead.
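A quick way to confirm the environment is ready is to check which of the required packages can be imported. This sketch uses only the standard library; the package list reflects the tools named above and is an assumption you can extend.

```python
import importlib.util

# Packages assumed necessary for running Llama 3 locally with Transformers.
REQUIRED = ["transformers", "torch", "accelerate"]


def missing_packages(names=REQUIRED):
    """Return the names in `names` that cannot be imported in this environment."""
    return [name for name in names if importlib.util.find_spec(name) is None]


if __name__ == "__main__":
    missing = missing_packages()
    if missing:
        print("Missing packages, install with: pip install " + " ".join(missing))
    else:
        print("All required packages are installed.")
```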

Step 3: Loading the Model

To load Llama 3, first request access to the model repository on Hugging Face (for example, meta-llama/Meta-Llama-3-8B-Instruct) and log in with your Hugging Face access token. Then use the Transformers library to download and load both the tokenizer and the model from that repository. Keep in mind which deep learning framework you are using (PyTorch is the most common choice with Transformers) and make sure your machine has enough GPU memory for the variant you pick. Once the model is loaded on your local machine, it is ready for text generation and other NLP applications.
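The loading step can be sketched with the standard Transformers classes, following the pattern on the Llama 3 Instruct model card. It assumes your access to the gated repository has been approved, that you are logged in (for example via HF_TOKEN), and that transformers, torch and accelerate are installed; the imports sit inside the function so the file can be read without them.

```python
MODEL_ID = "meta-llama/Meta-Llama-3-8B-Instruct"


def load_llama3(model_id: str = MODEL_ID):
    """Download (on first use) and load the tokenizer and model from Hugging Face."""
    import torch
    from transformers import AutoModelForCausalLM, AutoTokenizer

    tokenizer = AutoTokenizer.from_pretrained(model_id)
    model = AutoModelForCausalLM.from_pretrained(
        model_id,
        torch_dtype=torch.bfloat16,  # 16-bit weights, about 16 GB for the 8B model
        device_map="auto",           # let Accelerate place layers on GPUs/CPU
    )
    return tokenizer, model


def generate_reply(tokenizer, model, prompt: str, max_new_tokens: int = 256) -> str:
    """Run one chat turn and return only the newly generated text."""
    messages = [{"role": "user", "content": prompt}]
    input_ids = tokenizer.apply_chat_template(
        messages, add_generation_prompt=True, return_tensors="pt"
    ).to(model.device)
    # Llama 3 Instruct ends its turns with <|eot_id|>, so stop on it as well as EOS.
    terminators = [tokenizer.eos_token_id, tokenizer.convert_tokens_to_ids("<|eot_id|>")]
    output = model.generate(input_ids, max_new_tokens=max_new_tokens, eos_token_id=terminators)
    # Slice off the prompt tokens before decoding.
    return tokenizer.decode(output[0][input_ids.shape[-1]:], skip_special_tokens=True)


if __name__ == "__main__":
    tok, mdl = load_llama3()
    print(generate_reply(tok, mdl, "Explain what a tokenizer does in one sentence."))
```

The first call downloads the weights into the local Hugging Face cache; later calls load from disk.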

Step 4: Running Your First Task with Llama 3

For your first task, you can either run a locally loaded model with the Transformers library or send a request to a hosted Llama 3 API. For the hosted route, authenticate with your API key, send your prompt in a request to the service, and read the generated text from the response. Try the model on a few different tasks and platform integrations to get a feel for its strengths, and enjoy exploring Llama 3's capabilities in your projects.
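The hosted route can be sketched with the standard library alone. This assumes the provider exposes an OpenAI-style chat completions endpoint; the base URL, model name and LLM_API_KEY variable below are hypothetical placeholders, so check your provider's documentation for the real values.

```python
import json
import os
import urllib.request

# Hypothetical placeholders: replace with the values your provider documents.
BASE_URL = "https://api.example.com/v1"
MODEL_NAME = "llama-3-8b-instruct"


def build_chat_payload(prompt: str, model: str = MODEL_NAME, max_tokens: int = 256) -> dict:
    """Build an OpenAI-style chat completions request body."""
    return {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "max_tokens": max_tokens,
    }


def send_chat(prompt: str, api_key: str) -> str:
    """POST the request and return the text of the first choice."""
    body = json.dumps(build_chat_payload(prompt)).encode("utf-8")
    request = urllib.request.Request(
        f"{BASE_URL}/chat/completions",
        data=body,
        headers={"Authorization": f"Bearer {api_key}", "Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(request, timeout=60) as response:
        data = json.load(response)
    return data["choices"][0]["message"]["content"]


if __name__ == "__main__":
    print(send_chat("Summarize what Llama 3 is in one sentence.", os.environ["LLM_API_KEY"]))
```

Separating payload construction from the network call keeps the request format easy to inspect and test without spending API credits.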

Step 5: Exploring Advanced Features

To fully leverage Llama 3, go beyond the basics. Use system prompts to steer the model's behavior, tune sampling parameters such as temperature and top_p, and try more demanding text generation and language translation tasks. Note that Llama 3 is a text-only model, so tasks like speech recognition require pairing it with a separate speech-to-text system. Experiment boldly to discover how far this language model can take your projects.
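As an example of steering the model, the sketch below builds a translation request using a system message plus sampling settings. The prompt wording is an assumption, and the temperature and top_p values are the ones used in the Llama 3 Instruct model card's example, offered as a starting point rather than tuned values.

```python
def translation_messages(text: str, source: str, target: str) -> list[dict]:
    """Build chat messages that ask the model to act as a translator."""
    system = (
        f"You are a professional translator. Translate the user's {source} text "
        f"into {target}. Reply with the translation only."
    )
    return [
        {"role": "system", "content": system},
        {"role": "user", "content": text},
    ]


# Sampling settings intended as keyword arguments for model.generate().
SAMPLING = {"do_sample": True, "temperature": 0.6, "top_p": 0.9, "max_new_tokens": 256}


if __name__ == "__main__":
    for message in translation_messages("Good morning!", "English", "French"):
        print(message["role"], "->", message["content"])
```

With Transformers, pass the messages through tokenizer.apply_chat_template(..., add_generation_prompt=True, return_tensors="pt") and the dictionary to model.generate(input_ids, **SAMPLING). Lower temperatures make translations more literal and repeatable.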

Operating LLMs on a Pod: A Step-by-Step Guide

For developers, running Llama 3 on dedicated GPU hardware is often the most practical option. If you want to deploy a large language model (LLM) on a pod, here is a step-by-step approach to help you get started:

1. Create a Novita AI GPU Pods Account: To begin, visit the Novita AI GPU Pods website and click the “Sign Up” button. Register with an email address and password to join the Novita AI GPU Pods community and access its resources.

2. Set Up Your Workspace: After creating your account, create a new workspace. Open the “Workspaces” tab, click “Create Workspace,” and give your workspace a name.

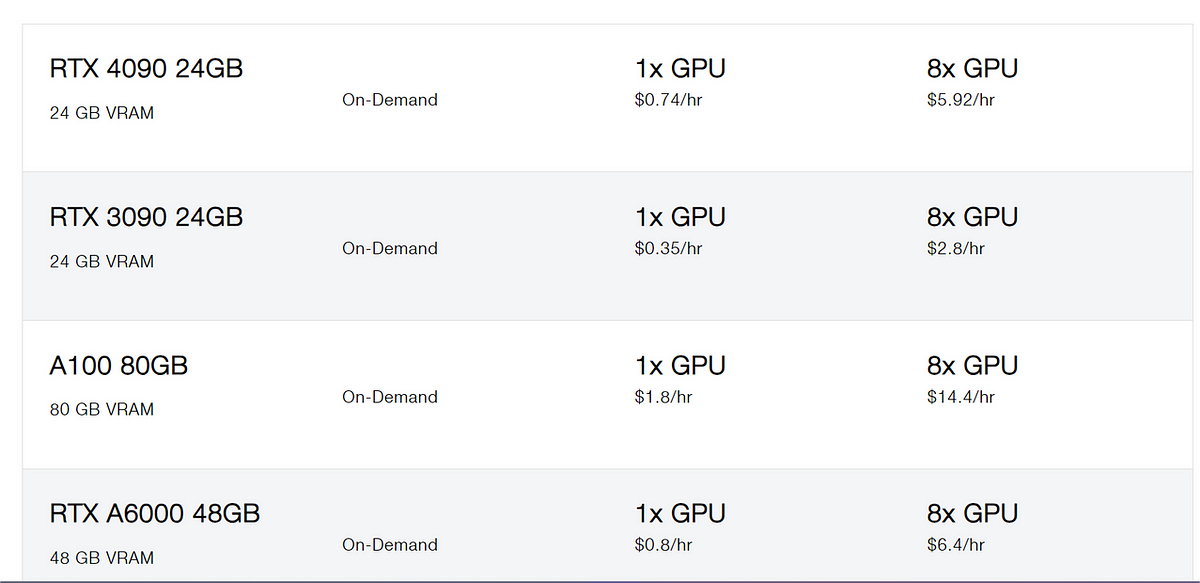

3. Choose a GPU-Enabled Server: When setting up your workspace, select a server equipped with a GPU. Novita AI GPU Pods offer GPUs such as the NVIDIA A100 SXM, RTX 4090, and RTX 3090. Pick one with enough VRAM for the model you plan to run: a 24 GB card such as the RTX 4090 or RTX 3090 can hold the 8B model in 16-bit precision, while the 70B model needs several high-memory GPUs such as A100s, or a quantized version.

4. Install LLM Software on the Server: Once your GPU server is running, install the LLM software you plan to use, such as Hugging Face Transformers or an inference server like vLLM, and follow its installation instructions to make sure everything is set up correctly.
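Before downloading a multi-gigabyte model onto a new pod, it is worth confirming that the GPU is actually visible to PyTorch. This minimal sketch assumes PyTorch is the framework installed on the pod and returns an empty list if it is not installed or no CUDA device is found.

```python
def list_gpus() -> list[tuple[str, float]]:
    """Return (name, total memory in GB) for each visible CUDA GPU."""
    try:
        import torch
    except ImportError:
        return []  # PyTorch not installed yet
    if not torch.cuda.is_available():
        return []  # no CUDA device or driver visible
    gpus = []
    for index in range(torch.cuda.device_count()):
        props = torch.cuda.get_device_properties(index)
        gpus.append((props.name, props.total_memory / 1e9))
    return gpus


if __name__ == "__main__":
    gpus = list_gpus()
    if not gpus:
        print("No CUDA GPU visible: check the pod's GPU type and the PyTorch/driver install.")
    for name, memory_gb in gpus:
        print(f"{name}: {memory_gb:.1f} GB")
```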

Optimizing Performance with Llama 3

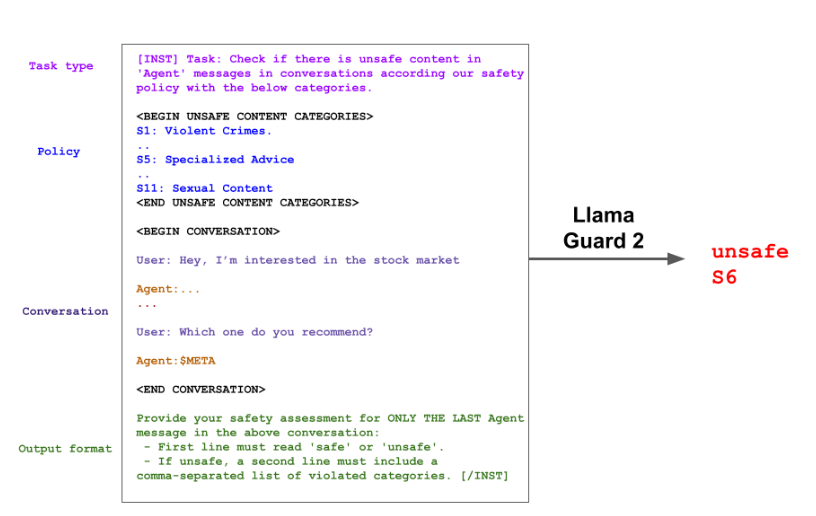

Alongside Llama 3, Meta released trust and safety tools that make deployments more robust: Llama Guard 2 for filtering unsafe inputs and outputs, Code Shield for catching insecure code generated by the model, and CyberSec Eval 2 for evaluating a model's cybersecurity risks. Integrating these tools into your workflow makes your Llama 3 applications safer and more reliable for data science, AI applications, and other NLP projects.

Utilizing Llama Guard 2 for Security

Llama Guard 2 is an 8B-parameter safeguard model, built on Llama 3, that classifies prompts and responses as safe or unsafe according to a taxonomy of hazard categories. Placing it in front of and behind your Llama 3 model lets you block harmful user requests and unsafe generations before they reach users. It complements, rather than replaces, conventional security measures such as authentication and access control, but it strengthens the overall safety of your NLP projects so you can focus on building with peace of mind.
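A moderation check can be sketched as below, adapted from the usage pattern on the Meta-Llama-Guard-2-8B model card: the tokenizer's chat template wraps the conversation in the classification prompt, and the model replies with "safe", or "unsafe" followed by a line of category codes such as S1. The parsing logic is an assumption based on that documented format; the tokenizer and model are assumed to be loaded from GUARD_MODEL_ID with Transformers.

```python
GUARD_MODEL_ID = "meta-llama/Meta-Llama-Guard-2-8B"


def moderate(tokenizer, model, chat: list[dict]) -> str:
    """Ask Llama Guard 2 to classify a conversation and return its raw verdict text."""
    input_ids = tokenizer.apply_chat_template(chat, return_tensors="pt").to(model.device)
    output = model.generate(input_ids=input_ids, max_new_tokens=100, pad_token_id=0)
    return tokenizer.decode(output[0][input_ids.shape[-1]:], skip_special_tokens=True)


def parse_verdict(raw: str) -> tuple[bool, list[str]]:
    """Turn a verdict ('safe', or 'unsafe' plus a category line) into (is_safe, codes)."""
    lines = [line.strip() for line in raw.strip().splitlines() if line.strip()]
    if not lines:
        raise ValueError("empty Llama Guard output")
    is_safe = lines[0].lower() == "safe"
    categories = []
    if not is_safe and len(lines) > 1:
        # Assumed format: comma-separated codes such as "S1,S10" on the second line.
        categories = [code.strip() for code in lines[1].split(",") if code.strip()]
    return is_safe, categories


if __name__ == "__main__":
    print(parse_verdict("safe"))
    print(parse_verdict("unsafe\nS1"))
```

In an application, you would call moderate() on the user's message before sending it to Llama 3, and again on the conversation including the model's reply, refusing or regenerating whenever parse_verdict reports it as unsafe.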

Safer Code Generation with Code Shield and CyberSec Eval 2

Code Shield adds inference-time filtering of insecure code suggested by the model, flagging or blocking outputs that contain risky patterns before they are executed or shown to users. CyberSec Eval 2 is a benchmark suite for measuring an LLM's cybersecurity risks, such as its susceptibility to prompt injection and the potential for abuse of code interpreters. Used together, they help you deploy Llama 3 safely in coding assistants and other operational environments while maintaining the integrity of your NLP tasks.

Conclusion

Llama 3 gives data scientists and AI enthusiasts a powerful, openly available tool for exploring natural language processing. To make the most of it, follow the responsible use guidelines and apply it to use cases ranging from customer service chatbots to content generation. As AI continues to advance, Llama 3 is well placed to shape the future of intelligent systems. A solid understanding of how the model was trained and a strong technical background will help you use it responsibly and to transformative effect.

novita.ai is a one-stop platform for limitless creativity that gives you access to more than 100 APIs, from image generation and language processing to audio enhancement and video manipulation. With cheap pay-as-you-go pricing, it frees you from GPU maintenance hassles while you build your own products. Try it for free.
