Quick and Easy Guide to Fine-Tuning Llama

Key Highlights

- Fine-tuning makes Llama models better at specific tasks or domains. You start with a model that has already been pre-trained and continue training it on new data so it can do something new.

- Through fine-tuning, Llama models get better at the language tasks you care about, because they are optimized on data that reflects real-world use.

- With the help of a GPU cloud, developers can fine-tune their LLMs more cheaply.

Introduction

Llama models are some of the most interesting models in NLP, and they hold a lot of promise for new applications. By fine-tuning these models, we can make them work better and fit more specific jobs. With techniques like reinforcement learning from human feedback (RLHF), we can push what Llama models can do even further. Getting the basics of fine-tuning right matters if you want to go deeper into advanced machine learning. In this guide, let’s dig into how fine-tuning Llama models can unlock their full capabilities.

Understanding Llama Models and Fine-Tuning Basics

Llama models, just like other large language models, need some adaptation before they are ready for specific jobs. This means taking a model that has already been pre-trained and training it further on new data. By doing this, the model gets better at its job and can be adjusted to meet specific goals. Understanding how Llama models work and how fine-tuning changes them is important if you want to get the most out of them across different language tasks. Through fine-tuning, each model can be customized by learning from examples of the domain or task it will be dealing with.

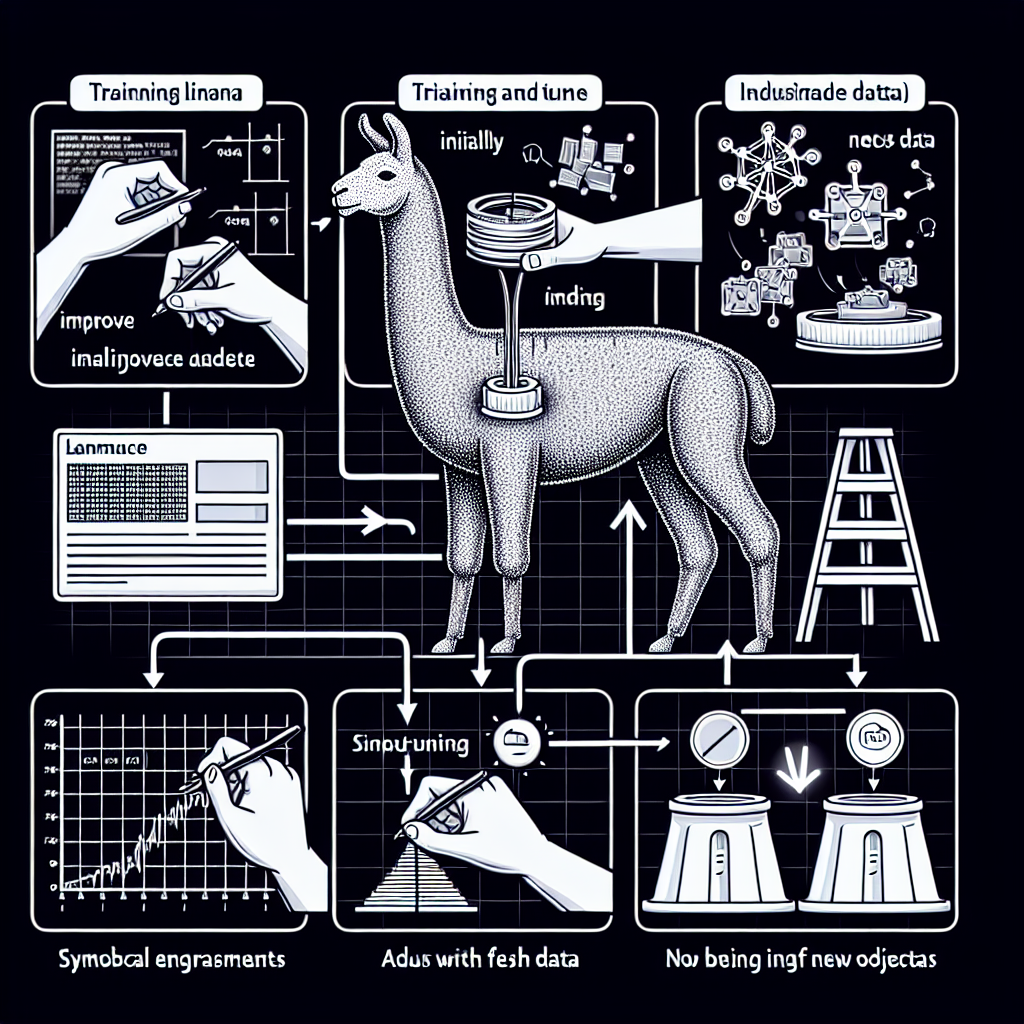

What is Llama Model Fine-Tuning?

Fine-tuning a Llama model means making small adjustments to its pre-trained weights so it works better for certain tasks or with specific kinds of data. During fine-tuning, training updates the model’s parameters to fit the new data, which helps it respond more accurately in tasks that need specialized knowledge.

The Benefits of Using a Fine-Tuned Llama

There are several benefits to using a fine-tuned language model:

Improved Accuracy and Relevance

Fine-tuning a language model on a specific dataset or task can significantly improve the accuracy and relevance of the text it generates. The model learns the vocabulary and concepts of that dataset or task, so its output is more closely aligned with the user’s intent.

Reduced Bias

Fine-tuning a language model on a diverse dataset can help reduce bias in the text it generates. The model is exposed to a wider range of perspectives and viewpoints, which makes it less likely to generate text that favors a particular group or perspective.

Increased Creativity and Variety

Fine-tuning a language model on a creative dataset or task can increase the creativity and variety of the text it generates. The model learns to produce text that is more varied and interesting, and it is less likely to stick to a single pattern or style.

Step-by-Step Guide to Fine-Tuning Your Llama Model

Now that we’ve covered the basics of fine-tuning Llama models, let’s walk through how to do it yourself.

Step 1: Selecting Your Model and Dataset

Start by choosing a base model and a training dataset. The base model serves as the foundation and determines the system’s basic capabilities. Choose among the available Llama models based on your needs: larger models are generally more capable, but they need more GPU memory and training time.
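To make the size trade-off concrete, here is a rough back-of-the-envelope sketch. It assumes 2 bytes per parameter for 16-bit weights and about 16 bytes per parameter for full fine-tuning with the Adam optimizer in mixed precision; both figures are rules of thumb rather than exact numbers, and the 8B and 70B entries are just nominal example sizes. Activations and framework overhead are ignored.

```python
# Rough GPU memory estimate for picking a base model size.
# Assumptions (rules of thumb, not exact figures):
#   - 2 bytes per parameter to hold fp16/bf16 weights
#   - ~16 bytes per parameter for full fine-tuning with Adam in mixed
#     precision (16-bit weights and gradients, fp32 master weights,
#     and two fp32 optimizer moments)
# Activations and framework overhead are ignored.

GB = 1e9  # decimal gigabytes


def weights_gb(num_params: float, bytes_per_param: float = 2.0) -> float:
    """Memory needed just to hold the model weights."""
    return num_params * bytes_per_param / GB


def full_finetune_gb(num_params: float, bytes_per_param: float = 16.0) -> float:
    """Rule-of-thumb memory for weights, gradients, and optimizer state."""
    return num_params * bytes_per_param / GB


# Nominal parameter counts for two example model sizes.
candidates = {"8B": 8e9, "70B": 70e9}

for name, num_params in candidates.items():
    print(
        f"{name}: ~{weights_gb(num_params):.0f} GB to load, "
        f"~{full_finetune_gb(num_params):.0f} GB to fully fine-tune"
    )
```

Numbers like these are only a starting point, but they help you decide early whether a given model size fits the GPUs you plan to rent.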

Step 2: Configuring Your Model for Fine-Tuning

Set up your model for fine-tuning by adjusting settings like the learning rate and batch size. The learning rate determines the size of each update step during training, while the batch size dictates how many data examples are processed at once. Additionally, configure model-specific options, such as the maximum sequence length, to optimize performance.
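To see why the learning rate is described as a step size, here is a tiny sketch that has nothing to do with Llama itself: plain gradient descent on the toy function f(w) = (w - 3)^2. The function, the starting point, and the three learning rates are all made up for illustration.

```python
def gradient_descent(learning_rate: float, steps: int = 50, start: float = 0.0) -> float:
    """Minimize the toy function f(w) = (w - 3)^2 and return the final w."""
    w = start
    for _ in range(steps):
        grad = 2.0 * (w - 3.0)        # derivative of (w - 3)^2
        w = w - learning_rate * grad  # the learning rate scales every step
    return w


# Too small: slow progress. Reasonable: converges. Too large: diverges.
for lr in (0.01, 0.1, 1.1):
    print(f"learning_rate={lr}: final w = {gradient_descent(lr):.4g}")
```

With the smallest rate the run is still far from the minimum at w = 3 after 50 steps, the middle rate lands on it, and the largest rate overshoots further on every step. Real fine-tuning runs show the same three regimes, just with many more parameters.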

Step 3: Training Your Model

Train your model on the chosen dataset, which contains labeled examples of the behavior you want. The dataset is divided into batches, and the number of epochs controls how many times training passes over the full dataset. Adjust the batch size to balance training speed against memory use. Optionally, apply reinforcement learning from human feedback (RLHF) afterward for further improvement.
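The relationship between batches and epochs can be sketched with a toy training loop. This is not a Llama training script: it fits a single weight `w` in y = w * x with mini-batch gradient descent, and the data, the `iter_batches` helper, and the hyperparameter values are all illustrative assumptions.

```python
import math
import random


def iter_batches(examples, batch_size):
    """Yield consecutive slices of `examples` with at most `batch_size` items."""
    for i in range(0, len(examples), batch_size):
        yield examples[i:i + batch_size]


def train(examples, epochs=20, batch_size=4, learning_rate=0.5, seed=0):
    """Fit y = w * x with mini-batch SGD; return (w, number of updates)."""
    rng = random.Random(seed)
    data = list(examples)
    w, updates = 0.0, 0
    for _ in range(epochs):          # one epoch = one full pass over the data
        rng.shuffle(data)            # new batch composition each epoch
        for batch in iter_batches(data, batch_size):
            # Gradient of the mean squared error over this batch.
            grad = sum(2.0 * (w * x - y) * x for x, y in batch) / len(batch)
            w -= learning_rate * grad
            updates += 1
    return w, updates


# Toy dataset where the true relationship is y = 2x.
examples = [(x / 10, 2.0 * x / 10) for x in range(1, 11)]
w, updates = train(examples)
expected_updates = 20 * math.ceil(len(examples) / 4)
print(f"w = {w:.4f} after {updates} updates (expected {expected_updates})")
```

The number of weight updates is the number of epochs times the number of batches per epoch, so halving the batch size doubles the updates per epoch while each update sees less data.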

Step 4: Evaluating Model Performance

After training, evaluate your model’s performance using metrics like accuracy, precision, recall, and F1 score, which suit classification-style tasks. Test on a separate held-out dataset that the model did not see during training to gauge its ability to handle new data. Focus on specific tasks to ensure the model’s responses align with intended use cases.
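For a classification-style task, the four metrics above can be computed by hand in a few lines. The sketch below assumes binary labels where 1 is the positive class; the `y_true` and `y_pred` lists are invented sample data, not real model output.

```python
def classification_metrics(y_true, y_pred, positive=1):
    """Compute accuracy, precision, recall, and F1 for binary labels."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == positive and p == positive)
    fp = sum(1 for t, p in pairs if t != positive and p == positive)
    fn = sum(1 for t, p in pairs if t == positive and p != positive)
    correct = sum(1 for t, p in pairs if t == p)

    accuracy = correct / len(pairs)
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    f1 = (2 * precision * recall / (precision + recall)
          if (precision + recall) else 0.0)
    return {"accuracy": accuracy, "precision": precision,
            "recall": recall, "f1": f1}


# Invented held-out labels and model predictions.
y_true = [1, 0, 1, 1, 0, 1]
y_pred = [1, 0, 0, 1, 1, 1]
metrics = classification_metrics(y_true, y_pred)
print(metrics)
```

Here the model gets 4 of 6 examples right, with 3 true positives, 1 false positive, and 1 false negative, so precision, recall, and F1 all come out to 0.75.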

Step 5: Iteration and Optimization

Refine your model based on the evaluation results. Adjust the learning rate to find the right balance of speed and accuracy, and tweak the batch size to improve training efficiency. Improve the diversity and focus of your training data, then retrain and re-evaluate to confirm that overall model performance got better.
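One simple way to organize this iteration is a small grid search: try each combination of learning rate and batch size, score it on validation data, and keep the best. In the sketch below, `train_and_validate` is a hypothetical stand-in that fits a toy one-weight model on synthetic data; in a real project it would launch a fine-tuning run and return its validation loss. The grid values are arbitrary examples.

```python
import itertools
import random


def train_and_validate(learning_rate, batch_size, epochs=5, seed=0):
    """Toy stand-in for one fine-tuning run: fit y = w * x, return validation MSE."""
    rng = random.Random(seed)
    xs = [rng.uniform(-1, 1) for _ in range(120)]
    data = [(x, 2.0 * x + rng.gauss(0, 0.1)) for x in xs]  # noisy y = 2x
    train, val = data[:100], data[100:]                    # hold out 20 examples

    w = 0.0
    for _ in range(epochs):
        rng.shuffle(train)
        for i in range(0, len(train), batch_size):
            batch = train[i:i + batch_size]
            grad = sum(2.0 * (w * x - y) * x for x, y in batch) / len(batch)
            w -= learning_rate * grad
    return sum((w * x - y) ** 2 for x, y in val) / len(val)


# Hypothetical search grid; pick values that make sense for your own run.
grid = {"learning_rate": [0.001, 0.01, 0.1], "batch_size": [4, 16]}

results = []
for lr, bs in itertools.product(grid["learning_rate"], grid["batch_size"]):
    results.append({"learning_rate": lr, "batch_size": bs,
                    "val_loss": train_and_validate(lr, bs)})

best = min(results, key=lambda r: r["val_loss"])
print("best configuration:", best)
```

Each fine-tuning run is expensive, so in practice the grid stays small and every configuration is judged on the same held-out data.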

How to Fine-Tune Llama with the Help of a GPU Cloud

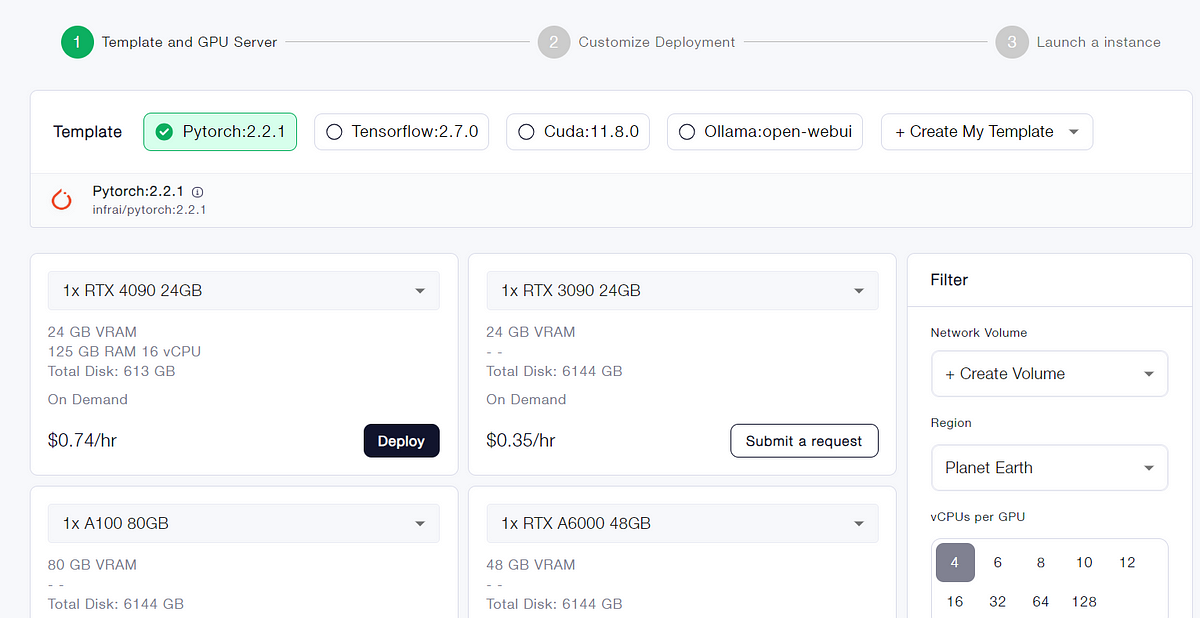

Fine-tuning a sophisticated AI model like Llama can be a complex and resource-intensive task, but with the right tools and infrastructure, it becomes more manageable. Here’s a step-by-step guide on how to fine-tune Llama using Novita AI GPU Pods:

Step 1: Environment Setup with Novita AI Pods Infrastructure

The journey begins with setting up the environment using Novita AI’s scalable GPU cloud infrastructure. This infrastructure is designed to be both cost-effective and conducive to AI innovations. By utilizing the on-demand GPU capabilities provided by Novita AI Pods, you can ensure high computational power while keeping cloud costs in check. This foundational step is crucial for the success of the fine-tuning process.
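Once a pod is running, a quick sanity check is to confirm which GPUs it actually exposes. The sketch below assumes the pod has the NVIDIA driver installed so that the `nvidia-smi` command is available; it is a generic check, not something specific to Novita AI, and it returns an empty list on machines without `nvidia-smi`.

```python
import shutil
import subprocess


def list_gpus():
    """Return (name, total_memory) pairs reported by nvidia-smi.

    Returns an empty list when nvidia-smi is missing or fails.
    """
    if shutil.which("nvidia-smi") is None:
        return []
    try:
        result = subprocess.run(
            ["nvidia-smi", "--query-gpu=name,memory.total",
             "--format=csv,noheader"],
            capture_output=True, text=True, check=True, timeout=30,
        )
    except (subprocess.SubprocessError, OSError):
        return []

    gpus = []
    for line in result.stdout.splitlines():
        if line.strip():
            # Each line looks like "<gpu name>, <memory> MiB".
            name, _, memory = line.partition(",")
            gpus.append((name.strip(), memory.strip()))
    return gpus


gpus = list_gpus()
if gpus:
    for name, memory in gpus:
        print(f"{name}: {memory}")
else:
    print("No NVIDIA GPU detected (nvidia-smi unavailable or failed).")
```

Comparing the reported memory against your model's needs before starting a long run can save both time and cloud spend.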

Step 2: Incorporating Llama 3 into the Existing Ollama Framework

With Ollama (a tool for running large language models locally) installed and ready, the process moves on to bringing Llama 3 into the existing Ollama setup. This integration is a pivotal step because it makes the capabilities of Llama 3 available through Ollama. The result is a more powerful AI setup that is ready for fine-tuning.
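As a rough sketch of what talking to Llama 3 through Ollama can look like, the snippet below sends a prompt to Ollama's local REST endpoint using only the Python standard library. It assumes an Ollama server is running on its default port 11434 and that the model has already been downloaded under the tag `llama3` (for example with `ollama pull llama3`); the `build_request` and `generate` helper names are made up for this example.

```python
import json
import urllib.request

# Default address of a locally running Ollama server (assumption: default port).
OLLAMA_URL = "http://localhost:11434/api/generate"


def build_request(prompt, model="llama3"):
    """Build a non-streaming generate request for the Ollama REST API."""
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        OLLAMA_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def generate(prompt, model="llama3", timeout=120):
    """Send the prompt to Ollama and return the generated text."""
    request = build_request(prompt, model)
    with urllib.request.urlopen(request, timeout=timeout) as response:
        body = json.loads(response.read().decode("utf-8"))
    return body["response"]
```

With the server running, calling `generate("Explain fine-tuning in one sentence.")` returns the model's reply as a string. A quick call like this confirms the base model responds before you invest in any further work.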

Step 3: Testing the Integrated System for Performance and Reliability

After the integration, the final step is to thoroughly test the integrated system for performance and reliability. This involves running a series of benchmarks and real-world use cases to ensure that the system operates optimally. The testing phase is critical to validate the success of the fine-tuning process and to confirm that the integrated AI ecosystem is robust and efficient.
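A benchmark for this step does not need to be elaborate. The sketch below times repeated calls to a generation function and reports basic latency statistics. The `fake_generate` function is a placeholder that only sleeps briefly; swap in your real model call. The prompts and the number of runs are arbitrary.

```python
import statistics
import time


def benchmark(generate_fn, prompts, runs_per_prompt=3):
    """Time each call to `generate_fn` and summarize the latencies in seconds."""
    latencies = []
    for prompt in prompts:
        for _ in range(runs_per_prompt):
            start = time.perf_counter()
            generate_fn(prompt)
            latencies.append(time.perf_counter() - start)
    return {
        "runs": len(latencies),
        "mean_s": statistics.mean(latencies),
        "median_s": statistics.median(latencies),
        "max_s": max(latencies),
    }


def fake_generate(prompt):
    """Placeholder for a real model call; replace with your own client."""
    time.sleep(0.01)
    return prompt.upper()


report = benchmark(fake_generate, ["What is fine-tuning?", "Define batch size."])
print(report)
```

Latency is only half of the picture, so pair numbers like these with a check of answer quality on prompts taken from your real use case.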

Conclusion

Wrapping things up, fine-tuning your Llama models can really step up their game for different kinds of language tasks. By building on the transformer architecture and taking advantage of Hugging Face’s open-source tools, you’ll find it pretty straightforward to adapt these models to data they haven’t seen before. Don’t forget to tune the hyperparameters that control how the model learns, such as the learning rate and batch size, and to keep improving your model gradually with reinforcement learning from human feedback. Keeping an eye on what’s new in NLP will help you keep improving what language models like Llama can do.

Frequently Asked Questions

How Much Data Do I Need to Fine-Tune a Llama Model?

The amount of data you need to fine-tune a Llama model depends on how hard the task is and what kind of results you’re looking for. Usually, the more high-quality training data you have, the better your fine-tuned model will do. But don’t worry if your dataset isn’t huge: supervised fine-tuning on a small, well-curated dataset, combined with human feedback, can still make a big difference in performance.

Can I Fine-Tune a Llama Model Without Deep Learning Experience?

Even if you’re new to deep learning, fine-tuning a Llama model is totally doable. Thanks to the open-source community, there’s no shortage of tutorials and guides out there. They walk you through each step of fine-tuning Llama models with tools like Hugging Face. This way, even people who are just starting out can tailor these Llama models to whatever they need.

