Introducing Meta-Llama Models: What They Are and How to Set Them Up

Introduction

Meta-Llama models have emerged as a game-changer in the field of generative AI. Developed by Meta, these openly available large language models (LLMs) give developers, researchers, and businesses a powerful tool to build, experiment with, and scale their generative AI applications.

Generative AI refers to the ability of AI systems to generate new content, such as text, images, or videos, based on patterns and data they have learned. Language models, in particular, focus on generating human-like text based on the training data they have been exposed to. Meta-Llama models take this concept to the next level by providing highly advanced and versatile language models for various applications.

The development of Meta-Llama models represents a significant advancement in language understanding and generation. These models have been trained on massive amounts of data, enabling them to comprehend complex language structures and generate coherent and contextually relevant text.

Understanding Meta-Llama Models

Meta-Llama models are a collection of pre-trained and instruction-tuned large language models (LLMs) developed by Meta. These models excel in language understanding and generation, making them ideal for a wide range of applications.

Under the hood, Meta-Llama models are decoder-only transformers. Given a sequence of tokens, they predict a probability distribution over the next token, and they generate text by repeatedly choosing a token from that distribution and appending it to the sequence. Training on massive amounts of data is what teaches them the structures and patterns of language.
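To make the next-token idea concrete, here is a deliberately tiny sketch of autoregressive generation. It is a toy, not how Llama computes anything: the hand-written `TOY_MODEL` table conditions only on the previous token (a bigram model), whereas a Llama model computes its distribution with a transformer over the whole context, and the loop uses greedy selection, whereas chat deployments usually sample with a temperature and top-p.

```python
from typing import Dict, List

# Toy next-token table: for each token, a probability distribution over the next one.
# A real LLM computes this distribution with a transformer over the whole context.
TOY_MODEL: Dict[str, Dict[str, float]] = {
    "<s>": {"Llama": 0.7, "The": 0.3},
    "The": {"Llama": 1.0},
    "Llama": {"models": 0.8, "is": 0.2},
    "is": {"</s>": 1.0},
    "models": {"generate": 0.6, "</s>": 0.4},
    "generate": {"text": 0.9, "</s>": 0.1},
    "text": {"</s>": 1.0},
}


def greedy_decode(model: Dict[str, Dict[str, float]], max_tokens: int = 10) -> List[str]:
    """Repeatedly append the most probable next token until </s> or the length limit."""
    tokens = ["<s>"]
    for _ in range(max_tokens):
        distribution = model[tokens[-1]]
        next_token = max(distribution, key=distribution.get)
        if next_token == "</s>":
            break  # end-of-sequence token: stop generating
        tokens.append(next_token)
    return tokens[1:]  # drop the start-of-sequence marker


print(" ".join(greedy_decode(TOY_MODEL)))  # prints: Llama models generate text
```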

The large size of Meta-Llama models enables them to handle complex and nuanced language tasks, such as text summarization, sentiment analysis, code generation, and dialogue systems. These models represent the cutting edge of language modeling technology and provide developers with a powerful tool for their AI projects.

The Emergence of the Meta-Llama 3 Family

Meta-Llama 3, released by Meta in April 2024, is the latest generation of the Meta-Llama model family at the time of writing and a significant milestone in AI research and development.

Compared with Llama 2, it uses a tokenizer with a 128K-token vocabulary, grouped-query attention in both model sizes, a context window of 8,192 tokens (up from 4,096), and a pretraining dataset roughly seven times larger. Meta credits these changes for the improved performance over its predecessors, which makes the models versatile across a wide range of applications.

Developers can use the Meta-Llama 3 models to build AI applications for tasks such as text summarization, sentiment analysis, code generation, and dialogue systems. At release, Meta described Llama 3 as the most capable openly available LLM to date, opening up new possibilities for AI development.

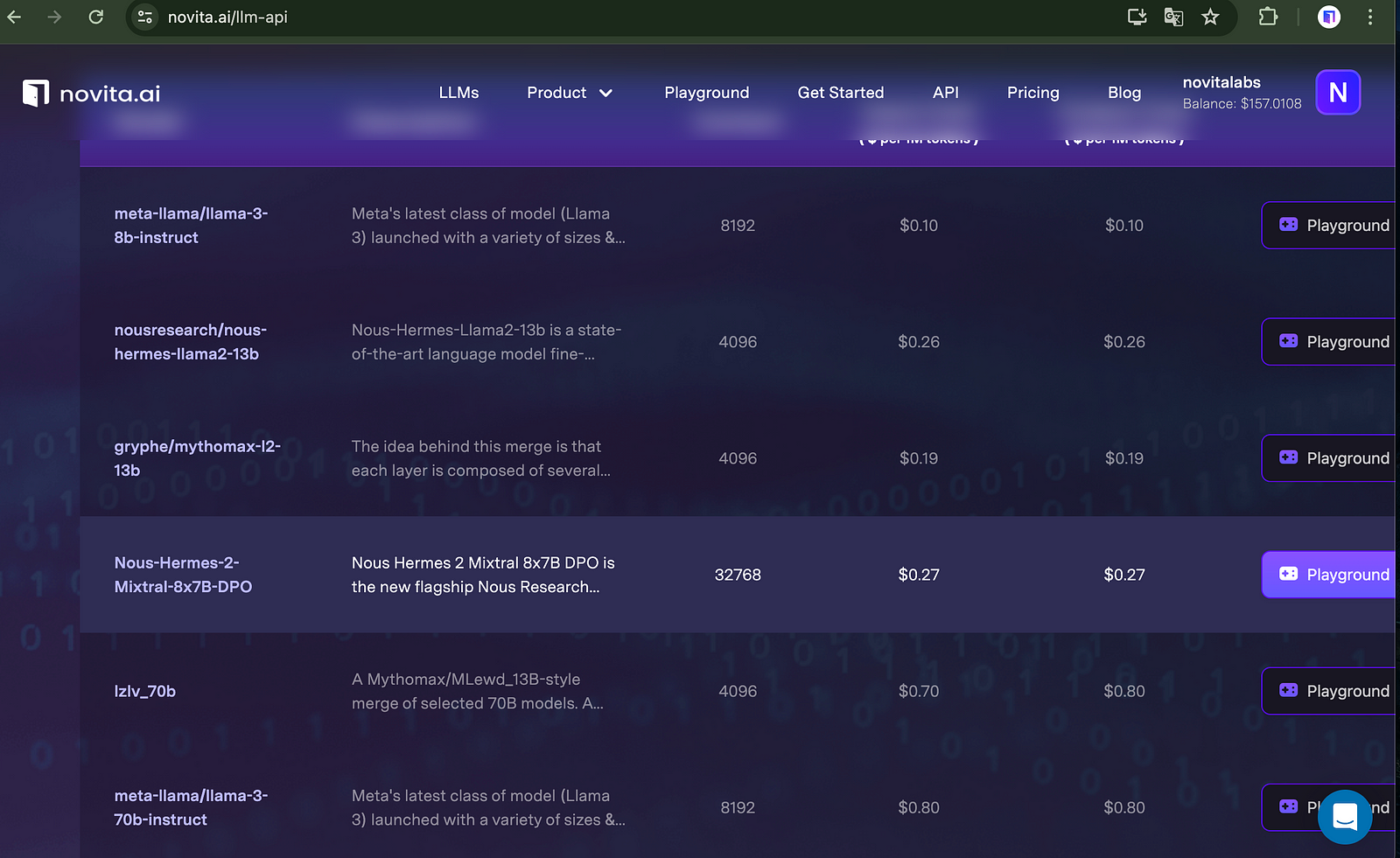

Exploring the Different Sizes: 8B and 70B

Meta-Llama 3 is available in two sizes, 8B and 70B parameters, and each size is released both as a pretrained base model and as an instruction-tuned variant. Each size offers different advantages and suits different computational budgets and use cases.

The 8B model is designed for situations where computational power and resources are limited. It is much cheaper to fine-tune and serve than the 70B model, and with quantization it can run on a single consumer-grade GPU. It works well for tasks such as text summarization, text classification, sentiment analysis, and language translation.

On the other hand, the 70B model suits more resource-intensive applications and enterprise-level projects. With almost nine times as many parameters, it offers better performance and accuracy on tasks such as text summarization, code generation, dialogue systems, and language understanding, at the cost of much higher memory and compute requirements.
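For a sense of where the "8B" and "70B" labels come from, the sketch below counts parameters from the architecture hyperparameters. The values in `LLAMA3_8B` and `LLAMA3_70B` are taken from the config.json files published with the models on Hugging Face; treat them as assumptions and check the current configs. The count assumes the standard Llama layout: no bias terms, SwiGLU feed-forward blocks, grouped-query attention with 8 key-value heads, and separate (untied) input and output embedding matrices.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class LlamaShape:
    """Architecture hyperparameters (assumed from the published configs)."""
    vocab_size: int
    hidden_size: int
    intermediate_size: int
    num_layers: int
    num_heads: int
    num_kv_heads: int


def count_parameters(cfg: LlamaShape) -> int:
    head_dim = cfg.hidden_size // cfg.num_heads
    kv_dim = cfg.num_kv_heads * head_dim  # grouped-query attention: fewer K/V heads
    # q_proj and o_proj are hidden x hidden; k_proj and v_proj are hidden x kv_dim
    attention = 2 * cfg.hidden_size * cfg.hidden_size + 2 * cfg.hidden_size * kv_dim
    # SwiGLU feed-forward block: gate_proj, up_proj and down_proj
    mlp = 3 * cfg.hidden_size * cfg.intermediate_size
    norms = 2 * cfg.hidden_size  # two RMSNorm weight vectors per layer
    per_layer = attention + mlp + norms
    # input embedding table and output head are separate matrices
    embeddings = 2 * cfg.vocab_size * cfg.hidden_size
    final_norm = cfg.hidden_size
    return cfg.num_layers * per_layer + embeddings + final_norm


LLAMA3_8B = LlamaShape(128256, 4096, 14336, 32, 32, 8)
LLAMA3_70B = LlamaShape(128256, 8192, 28672, 80, 64, 8)

for name, cfg in [("8B", LLAMA3_8B), ("70B", LLAMA3_70B)]:
    # prints 8.03 for the 8B model and 70.55 for the 70B model
    print(f"{name}: {count_parameters(cfg) / 1e9:.2f} billion parameters")
```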

The table below provides a comparison of the key parameters and capabilities of the 8B and 70B Meta-Llama models:

How to Access Meta-Llama Models

The model weights are distributed under the Meta Llama 3 Community License. You can request access directly from Meta or through Hugging Face, which hosts the official repositories under the meta-llama organization. The Hugging Face repositories are gated: you accept the license on the model page, then authenticate with a personal access token to download the files.

Because the weights are openly available, developers can experiment with them, fine-tune them, and integrate them into their applications, including commercial ones. That is not the same as having no restrictions: use is subject to the license terms and Meta's Acceptable Use Policy, and companies whose products exceeded 700 million monthly active users must request a separate license from Meta.

Distributing the models through platforms like Hugging Face democratizes AI development, putting the latest advances in language understanding and generation within reach of a much wider audience and encouraging collaboration and innovation within the AI community.
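Here is a minimal sketch of the Hugging Face route, assuming you have already accepted the license on the model page and stored a read token in the `HF_TOKEN` environment variable. The repository IDs are the official `meta-llama` ones and `snapshot_download` comes from the `huggingface_hub` package; the helper names `repo_id` and `download_weights` are our own.

```python
import os
from typing import Optional

# Official (gated) repositories on the Hugging Face Hub: (size, instruct) -> repo ID
REPO_IDS = {
    ("8B", False): "meta-llama/Meta-Llama-3-8B",
    ("8B", True): "meta-llama/Meta-Llama-3-8B-Instruct",
    ("70B", False): "meta-llama/Meta-Llama-3-70B",
    ("70B", True): "meta-llama/Meta-Llama-3-70B-Instruct",
}


def repo_id(size: str, instruct: bool = True) -> str:
    """Map a model size and variant to its Hugging Face repository ID."""
    key = (size.upper(), instruct)
    if key not in REPO_IDS:
        raise ValueError(f"unknown Llama 3 size: {size!r}")
    return REPO_IDS[key]


def download_weights(size: str, instruct: bool = True,
                     local_dir: Optional[str] = None) -> str:
    """Download a gated Llama 3 repository and return the local folder path."""
    from huggingface_hub import snapshot_download  # pip install huggingface_hub

    token = os.environ.get("HF_TOKEN")  # read token from your Hugging Face account
    if not token:
        raise RuntimeError("set HF_TOKEN to a Hugging Face access token first")
    return snapshot_download(
        repo_id=repo_id(size, instruct),
        token=token,
        local_dir=local_dir,
        # skip original/, which holds Meta's native checkpoint format
        ignore_patterns=["original/*"],
    )
```

For example, `download_weights("8B")` fetches the 8B Instruct model (roughly 16 GB of weights) and returns where it was stored. The `original/` folder is skipped because transformers loads the Hugging Face-format files instead.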

Setting Up Your Meta-Llama Environment

You can run Meta-Llama models on your own hardware, which means installing and configuring the software stack yourself, or use a managed cloud service that handles the infrastructure for you. On AWS, Llama 3 is available through Amazon Bedrock, a serverless API, and through Amazon SageMaker JumpStart, which deploys the models to endpoints in your own account.

AWS provides documentation, tutorials, and community forums that cover both services and help developers navigate the setup process.

By following the setup steps in the AWS documentation, developers can quickly establish their Meta-Llama environment and begin using the models in their AI projects.

Necessary Hardware and Software Requirements

To effectively set up and utilize Meta-Llama models, developers need to ensure that they meet the necessary hardware and software requirements.

In terms of hardware, the main constraint is usually GPU memory. At 16-bit precision the weights alone take about 2 bytes per parameter, roughly 16 GB for the 8B model and roughly 141 GB for the 70B model, and the key-value cache and activations need more on top. The exact requirements therefore depend on the model size, the numeric precision, and how much text you process at once.
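The arithmetic for sizing GPU memory fits in a few lines. This is a rough estimate, not a sizing guarantee: it assumes 2 bytes per value (bf16/fp16), approximate parameter counts of 8.03B and 70.55B, and, for the key-value cache, the 8B model's published shape of 32 layers with 8 key-value heads of dimension 128. Real usage is higher because of activations, framework overhead, and memory fragmentation.

```python
def weight_memory_gb(num_params: float, bytes_per_param: float = 2.0) -> float:
    """Memory for the weights alone, in decimal gigabytes (bf16/fp16 = 2 bytes each)."""
    return num_params * bytes_per_param / 1e9


def kv_cache_bytes(num_layers: int, num_kv_heads: int, head_dim: int,
                   seq_len: int, bytes_per_value: int = 2, batch_size: int = 1) -> int:
    """Key-value cache: one key and one value vector per layer, KV head and token."""
    return 2 * num_layers * num_kv_heads * head_dim * seq_len * bytes_per_value * batch_size


# Approximate parameter counts, assumed from the published model configurations
print(f"8B weights:  {weight_memory_gb(8.03e9):.1f} GB")
print(f"70B weights: {weight_memory_gb(70.55e9):.1f} GB")
# One full 8,192-token context on the 8B model (32 layers, 8 KV heads of dim 128)
print(f"8B KV cache: {kv_cache_bytes(32, 8, 128, 8192) / 2**30:.1f} GiB per sequence")
```

The key-value cache grows linearly with both context length and batch size, so serving many long conversations at once can need several extra gigabytes even for the 8B model.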

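On the software side, developers need to install and configure the necessary components and dependencies. If you self-host, that usually means Python, PyTorch, and Hugging Face's transformers library. If you use Amazon Bedrock instead, you need an AWS account with access to the Llama 3 models enabled in the Bedrock console, plus the AWS SDK (boto3 for Python).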
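If you choose Amazon Bedrock, a call to Llama 3 can look like the following sketch. It assumes boto3 is installed, your AWS credentials are configured, Llama 3 model access has been granted in your account, and the models are offered in the chosen region (`us-east-1` is only an example). The model IDs and the `prompt`, `max_gen_len`, `temperature` and `top_p` request fields follow the Bedrock documentation for Meta models at the time of writing; check the current documentation before relying on them.

```python
import json

# Bedrock model IDs for the instruction-tuned Llama 3 models
BEDROCK_MODEL_IDS = {
    "8B": "meta.llama3-8b-instruct-v1:0",
    "70B": "meta.llama3-70b-instruct-v1:0",
}


def build_request_body(prompt: str, max_gen_len: int = 512,
                       temperature: float = 0.5, top_p: float = 0.9) -> str:
    """JSON body in the request format Bedrock uses for Meta Llama models."""
    return json.dumps({
        "prompt": prompt,
        "max_gen_len": max_gen_len,
        "temperature": temperature,
        "top_p": top_p,
    })


def invoke_llama3(prompt: str, size: str = "8B", region: str = "us-east-1") -> str:
    """Call Llama 3 on Amazon Bedrock; needs AWS credentials and model access."""
    import boto3  # pip install boto3

    client = boto3.client("bedrock-runtime", region_name=region)
    response = client.invoke_model(
        modelId=BEDROCK_MODEL_IDS[size],
        body=build_request_body(prompt),
        contentType="application/json",
        accept="application/json",
    )
    payload = json.loads(response["body"].read())
    return payload["generation"]  # the generated text
```

Because these are instruction-tuned models, the `prompt` string should be wrapped in the Llama 3 chat format (role headers and end-of-turn tokens) rather than passed as bare text.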

By ensuring that the hardware and software requirements are met, developers can create a stable and efficient environment for utilizing Meta-Llama models. This sets the foundation for successful AI development and enables the models to perform at their fullest potential.

Installation and Configuration Steps

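To set up a self-hosted Meta-Llama environment, developers need to follow a series of installation and configuration steps. There are two common ways to get the weights: request a download link on Meta's website and run the download script from Meta's llama3 GitHub repository, or download them from Hugging Face with the huggingface-cli command-line tool or the huggingface_hub Python library.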

The installation process involves retrieving the required software components and dependencies and configuring them on the development system. For the Hugging Face route, this typically means installing PyTorch, the transformers library, and accelerate, which transformers uses to spread a large model across the available devices.
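Before loading a model, it can help to confirm that the stack is actually installed. The sketch below uses only the standard library (`importlib.util.find_spec` and `importlib.metadata.version`); the package list in `REQUIRED` is an assumption matching a typical transformers-based setup, so adjust it to yours.

```python
import importlib.util
from importlib import metadata
from typing import Dict, Iterable, Optional

# Packages a typical self-hosted transformers setup relies on (an assumption)
REQUIRED = ("torch", "transformers", "accelerate")


def check_dependencies(packages: Iterable[str] = REQUIRED) -> Dict[str, Optional[str]]:
    """Return {package: installed version, or None if it cannot be imported}."""
    report: Dict[str, Optional[str]] = {}
    for name in packages:
        if importlib.util.find_spec(name) is None:
            report[name] = None  # not importable in this environment
            continue
        try:
            report[name] = metadata.version(name)
        except metadata.PackageNotFoundError:
            report[name] = "unknown"  # importable, but no distribution metadata
    return report


if __name__ == "__main__":
    for pkg, version in check_dependencies().items():
        print(f"{pkg:>12}: {version or 'MISSING'}")
```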

Once the software components are installed, developers can move on to configuration. This may involve choosing which model to load, setting generation parameters such as the maximum output length, temperature, and top-p, setting environment variables such as an access token, and configuring any additional dependencies required by the specific use case.
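A minimal sketch of reading configuration from environment variables, with defaults and basic validation. The `LLAMA_*` variable names are hypothetical, chosen for this example; the default temperature of 0.6 and top-p of 0.9 mirror the values in Meta's own example code, and the default model ID is the official Hugging Face repository for the 8B Instruct model.

```python
import os
from dataclasses import dataclass
from typing import Mapping


@dataclass(frozen=True)
class GenerationConfig:
    model_id: str
    max_new_tokens: int
    temperature: float
    top_p: float


def load_config(env: Mapping[str, str] = os.environ) -> GenerationConfig:
    """Read settings from (hypothetical) LLAMA_* variables, falling back to defaults."""
    config = GenerationConfig(
        model_id=env.get("LLAMA_MODEL_ID", "meta-llama/Meta-Llama-3-8B-Instruct"),
        max_new_tokens=int(env.get("LLAMA_MAX_NEW_TOKENS", "256")),
        temperature=float(env.get("LLAMA_TEMPERATURE", "0.6")),
        top_p=float(env.get("LLAMA_TOP_P", "0.9")),
    )
    # fail fast on values the sampler would reject or misinterpret
    if not 0.0 < config.top_p <= 1.0:
        raise ValueError("LLAMA_TOP_P must be in (0, 1]")
    if config.temperature < 0:
        raise ValueError("LLAMA_TEMPERATURE must be non-negative")
    if config.max_new_tokens <= 0:
        raise ValueError("LLAMA_MAX_NEW_TOKENS must be positive")
    return config
```

Passing a plain dictionary instead of `os.environ`, as in `load_config({"LLAMA_MAX_NEW_TOKENS": "64"})`, keeps the function easy to test.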

By following the installation and configuration steps provided by the Meta-Llama documentation, developers can ensure a smooth setup process. This sets the stage for effectively utilizing Meta-Llama models in their AI projects.

Applications of Meta-Llama

Meta-Llama models offer a wide range of applications and integration possibilities for developers. These models can be seamlessly integrated into existing systems or used as standalone components in various projects.

Instruction-Tuned Generative Text Models

Meta-Llama models, particularly the instruction-tuned generative text models, offer powerful capabilities for text generation and dialogue systems.

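These instruct models are designed to generate contextually relevant and coherent text based on specific instructions or prompts provided by users. Meta produced them by fine-tuning the pretrained models with a combination of supervised fine-tuning, rejection sampling, proximal policy optimization (PPO), and direct preference optimization (DPO), so they follow instructions and answer user queries much more reliably than the base models. To get the best results, prompts should follow the chat format the models were tuned on, in which special tokens mark where each message begins and ends.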
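Instruct models expect prompts in the chat format they were tuned on. For Llama 3 Instruct, each message starts with a role header and ends with an `<|eot_id|>` token, and the prompt ends with an open assistant header so the model writes the next reply. The sketch below builds that string by hand, following the format documented in Meta's model card; in practice the tokenizer's chat template, or a hosted API, usually does this for you.

```python
from typing import Dict, List

BOS = "<|begin_of_text|>"
EOT = "<|eot_id|>"  # marks the end of each message turn


def header(role: str) -> str:
    """Role header, followed by the blank line Llama 3 expects before content."""
    return f"<|start_header_id|>{role}<|end_header_id|>\n\n"


def format_llama3_chat(messages: List[Dict[str, str]]) -> str:
    """Render role/content messages as a Llama 3 Instruct prompt string."""
    parts = [BOS]
    for message in messages:
        parts.append(header(message["role"]) + message["content"].strip() + EOT)
    parts.append(header("assistant"))  # open turn for the model's reply
    return "".join(parts)


prompt = format_llama3_chat([
    {"role": "system", "content": "You are a concise assistant."},
    {"role": "user", "content": "Summarize what Llama 3 is in one sentence."},
])
print(prompt)
```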

By leveraging the instruction-tuned generative text models of Meta-Llama, developers can build AI systems that generate high-quality text responses, engage in meaningful conversations, and provide valuable insights to users.

Integrating with Transformers and the Llama 3 Libraries

The two most common ways to integrate Meta-Llama models into existing AI projects are Hugging Face's transformers library and Meta's own Llama 3 repositories.

The transformers library supports Llama 3 directly. It downloads the tokenizer and weights from the Hugging Face Hub, applies the model's chat template to turn a list of messages into a prompt, and runs generation either through the high-level pipeline API or through lower-level generate calls.

Meta's llama3 repository on GitHub contains minimal reference code for downloading the weights and running inference, and the companion llama-recipes repository collects examples for fine-tuning, inference, and deployment. Together they make it easier for developers to work with Meta-Llama models.

By leveraging transformers and Llama3 libraries, developers can streamline the integration process and unlock the full potential of Meta-Llama models in their AI applications. These libraries provide the necessary infrastructure and support to effectively utilize the capabilities of Meta-Llama models.
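A sketch of one chat turn with the transformers library, adapted from the pattern in Meta's model card for the 8B Instruct model and written against the transformers API as of Llama 3's release. It assumes torch, transformers, and accelerate are installed, that you have access to the gated repository, and that a GPU with enough memory is available. The heavy imports sit inside `generate` so that the `build_messages` helper can be used on its own.

```python
from typing import Dict, List


def build_messages(system: str, user: str) -> List[Dict[str, str]]:
    """Chat messages in the role/content format transformers chat templates expect."""
    return [
        {"role": "system", "content": system},
        {"role": "user", "content": user},
    ]


def generate(messages: List[Dict[str, str]],
             model_id: str = "meta-llama/Meta-Llama-3-8B-Instruct",
             max_new_tokens: int = 256) -> str:
    """Run one chat turn locally; needs torch, transformers, accelerate and a GPU."""
    import torch
    from transformers import AutoModelForCausalLM, AutoTokenizer

    tokenizer = AutoTokenizer.from_pretrained(model_id)
    model = AutoModelForCausalLM.from_pretrained(
        model_id, torch_dtype=torch.bfloat16, device_map="auto"
    )
    # apply_chat_template inserts Llama 3's role headers and end-of-turn tokens
    input_ids = tokenizer.apply_chat_template(
        messages, add_generation_prompt=True, return_tensors="pt"
    ).to(model.device)
    output = model.generate(
        input_ids,
        max_new_tokens=max_new_tokens,
        # stop at either the end-of-text token or the end-of-turn token
        eos_token_id=[tokenizer.eos_token_id,
                      tokenizer.convert_tokens_to_ids("<|eot_id|>")],
        do_sample=True,
        temperature=0.6,
        top_p=0.9,
    )
    # decode only the newly generated tokens, not the prompt
    return tokenizer.decode(output[0][input_ids.shape[-1]:], skip_special_tokens=True)
```

For the sake of a self-contained example, this reloads the model on every call; in a real application you would load the tokenizer and model once and reuse them.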

You can learn more about the Llama 3 models in our blog post: Meta Llama 3: The Most Powerful Openly Available LLM To Date

Training Data and Model Performance

Training data plays a crucial role in the performance of Meta-Llama models. According to Meta, Llama 3 was pretrained on more than 15 trillion tokens, about seven times as much data as Llama 2, including four times as much code.

All of that data comes from publicly available sources, which exposes the models to a wide variety of language patterns and helps them generate coherent and contextually relevant text.

Overview of Training Data Used

Meta states that neither the pretraining nor the fine-tuning data includes Meta user data. More than 5% of the pretraining set is high-quality non-English text covering over 30 languages, although Meta notes that performance in those languages is not expected to match English.

The data is also filtered for quality. Meta built data-filtering pipelines that combine heuristic filters, NSFW filters, semantic deduplication, and text classifiers that predict data quality, so that the models learn from cleaner and more useful text.
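To illustrate what filtering and deduplication mean in practice, here is a toy curation pass. It is much simpler than a production pipeline such as Meta's, which also relies on semantic deduplication and learned quality classifiers: this sketch only applies a word-count heuristic and exact-hash deduplication after whitespace and case normalization, and the threshold `min_words=5` is arbitrary.

```python
import hashlib
import re
from typing import Iterable, Iterator


def normalize(text: str) -> str:
    """Lowercase and collapse runs of whitespace."""
    return re.sub(r"\s+", " ", text).strip().lower()


def curate(documents: Iterable[str], min_words: int = 5) -> Iterator[str]:
    """Yield documents that pass a length heuristic and are not exact duplicates."""
    seen = set()
    for doc in documents:
        norm = normalize(doc)
        if len(norm.split()) < min_words:
            continue  # heuristic filter: drop very short fragments
        digest = hashlib.sha256(norm.encode("utf-8")).hexdigest()
        if digest in seen:
            continue  # exact duplicate after normalization
        seen.add(digest)
        yield doc


docs = [
    "Hello world this is a test",
    "hello   WORLD this is a test",  # duplicate once normalized
    "too short",                     # fails the length heuristic
    "Another distinct document with words",
]
print(list(curate(docs)))
```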

Benchmarks and Performance Insights

Benchmarks and performance metrics provide important insights into the capabilities and limitations of Meta-Llama models.

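Meta's published results for Llama 3 cover standard benchmarks such as MMLU (general knowledge), GPQA (expert-level questions), HumanEval (code generation), and GSM-8K and MATH (mathematical reasoning). These scores are useful for comparing models, but they do not guarantee performance on a specific task, so it is worth evaluating the models on a sample of your own data before committing to one.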
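One common way to score answers, both on benchmarks and in your own evaluations, is exact match after light normalization. The sketch below implements that metric; the normalization rules (lowercasing, stripping punctuation, collapsing whitespace) are a typical choice, not the exact procedure behind any particular benchmark.

```python
import string
from typing import Sequence

_PUNCTUATION = str.maketrans("", "", string.punctuation)


def normalize_answer(text: str) -> str:
    """Lowercase, drop ASCII punctuation and collapse whitespace before comparing."""
    return " ".join(text.lower().translate(_PUNCTUATION).split())


def exact_match_accuracy(predictions: Sequence[str], references: Sequence[str]) -> float:
    """Fraction of predictions that equal their reference after normalization."""
    if len(predictions) != len(references):
        raise ValueError("predictions and references must have the same length")
    if not references:
        return 0.0
    hits = sum(normalize_answer(p) == normalize_answer(r)
               for p, r in zip(predictions, references))
    return hits / len(references)


print(exact_match_accuracy(["Paris.", "4", "blue"], ["paris", "5", "Blue"]))
```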

Ethical Use and Safety Considerations

Ethical use and safety considerations are vital when working with Meta-Llama models. It is essential to ensure that these models are used responsibly and in a manner that aligns with ethical guidelines and best practices.

Cybersecurity measures should be implemented to protect user data and ensure the safety and privacy of individuals interacting with AI systems powered by Meta-Llama models. This includes safeguarding against potential data breaches and ensuring that user information is handled securely.
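As one small, concrete safeguard, prompts can be scrubbed of obvious personal data before they are written to logs. This regex-based sketch masks email addresses and phone-like numbers. The patterns are illustrative assumptions: they will miss many formats and can also flag long digit runs such as dates, so a production system would need a dedicated PII-detection tool.

```python
import re

# Illustrative patterns only; real deployments need much broader coverage
EMAIL = re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")
# phone-like: optional +, then at least 9 digits/separators, starting and ending with a digit
PHONE = re.compile(r"\+?\d[\d\s().-]{7,}\d")


def redact(text: str) -> str:
    """Mask email addresses and phone-like numbers before a prompt is logged."""
    text = EMAIL.sub("[EMAIL]", text)
    return PHONE.sub("[PHONE]", text)


print(redact("Contact jane.doe@example.com or +1 555-123-4567."))
```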

By prioritizing ethical use and safety considerations, developers can promote responsible AI development and enhance the trustworthiness of AI systems powered by Meta-Llama models.

Addressing Responsibility and Cybersecurity

Addressing responsibility and cybersecurity is of paramount importance when working with Meta-Llama models.

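Developers should follow Meta's Responsible Use Guide and the Llama 3 Acceptable Use Policy when building with these models, so that they are used in an ethical and accountable manner. This includes avoiding activities that may infringe upon user privacy, data protection, or legal requirements. Alongside Llama 3, Meta also released safety tools such as Llama Guard 2, which classifies prompts and responses as safe or unsafe, and CyberSecEval 2, which assesses the cybersecurity risks of LLMs.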

Ensuring Community Welfare

By prioritizing child safety and community welfare, developers can contribute to the responsible development and use of AI technology. This involves actively monitoring and addressing potential ethical concerns and taking proactive steps to mitigate risks associated with the utilization of Meta-Llama models.
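One proactive step is to screen both the user's prompt and the model's response with a safety classifier. The sketch below shows that wrapper pattern. `keyword_check` and `echo_model` are hypothetical toy stand-ins so the example runs on its own; in a real system you would plug in an actual classifier, for instance Meta's Llama Guard 2, and a real model call.

```python
from typing import Callable

# A safety check returns True when the text is judged safe.
SafetyCheck = Callable[[str], bool]
TextGenerator = Callable[[str], str]

REFUSAL = "Sorry, I can't help with that request."


def guarded_generate(prompt: str, generate: TextGenerator, is_safe: SafetyCheck) -> str:
    """Screen the prompt before generation and the response after it."""
    if not is_safe(prompt):
        return REFUSAL  # block unsafe requests before they reach the model
    response = generate(prompt)
    if not is_safe(response):
        return REFUSAL  # block unsafe output before it reaches the user
    return response


# Hypothetical toy components, for illustration only
BLOCKLIST = ("make a weapon",)


def keyword_check(text: str) -> bool:
    return not any(term in text.lower() for term in BLOCKLIST)


def echo_model(prompt: str) -> str:
    return f"Echo: {prompt}"


print(guarded_generate("Summarize this article.", echo_model, keyword_check))
```

Checking the output as well as the input matters because a harmless-looking prompt can still produce an unsafe response.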

Navigating Through Challenges and Limitations

Technical limitations may arise in terms of computational requirements, model performance, or specific use case constraints. By acknowledging and addressing these limitations, developers can optimize the utilization of Meta-Llama models.

Ethical considerations also play a significant role when working with AI technology. It is important to ensure responsible use and address potential ethical challenges, such as bias, privacy, and fairness, associated with the utilization of Meta-Llama models.

Technical Limitations and How to Overcome Them

Technical limitations may present challenges when working with Meta-Llama models, but they can be overcome with careful consideration and implementation of appropriate strategies.

For example, memory limits can be eased by quantizing the weights to 8-bit or 4-bit precision, which cuts their footprint by roughly half or three-quarters compared with 16-bit, at some cost in accuracy. Developers can also rent computational power from cloud providers such as AWS to run Meta-Llama models efficiently without buying hardware.
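The effect of quantization on weight memory is simple arithmetic: memory scales with the number of bits stored per parameter. This sketch assumes approximate parameter counts of 8.03B and 70.55B and ignores the small overhead of quantization scale factors. The actual quantization is done by libraries such as bitsandbytes, which transformers exposes through `BitsAndBytesConfig(load_in_4bit=True)`.

```python
# Approximate parameter counts, assumed from the published model configurations
PARAMS = {"8B": 8.03e9, "70B": 70.55e9}
BITS = {"bf16": 16, "int8": 8, "int4": 4}


def quantized_weight_gb(num_params: float, bits: int) -> float:
    """Weight memory in decimal GB at a given bit width.

    Ignores quantization overhead such as per-group scale factors.
    """
    return num_params * bits / 8 / 1e9


for size, n in PARAMS.items():
    row = ", ".join(f"{fmt}: {quantized_weight_gb(n, b):.1f} GB" for fmt, b in BITS.items())
    print(f"{size}: {row}")
```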

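Model performance limitations can be mitigated through fine-tuning on task-specific data. Parameter-efficient methods such as LoRA (low-rank adaptation) train only a small set of added weights, which makes fine-tuning feasible on modest hardware. By iteratively refining the models and incorporating human feedback, developers can enhance their performance and address specific use case requirements.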
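To show why LoRA is cheap, this sketch counts the trainable parameters when rank-8 adapters are attached to the query and value projections of every layer, a common default in examples rather than a recommendation. The shapes (32 layers, hidden size 4096, 8 key-value heads of dimension 128) are assumed from the 8B model's published configuration, and 8,030,261,248 is the total parameter count implied by that configuration.

```python
def lora_params(d_in: int, d_out: int, rank: int) -> int:
    """LoRA learns W's update as B @ A, with A: rank x d_in and B: d_out x rank."""
    return rank * (d_in + d_out)


# Shapes assumed from the Llama 3 8B configuration
HIDDEN = 4096
KV_DIM = 8 * 128  # 8 key-value heads of dimension 128
LAYERS = 32
TOTAL_PARAMS = 8_030_261_248


def llama3_8b_lora_params(rank: int = 8) -> int:
    """Adapters on q_proj (hidden -> hidden) and v_proj (hidden -> KV_DIM) in every layer."""
    per_layer = lora_params(HIDDEN, HIDDEN, rank) + lora_params(HIDDEN, KV_DIM, rank)
    return LAYERS * per_layer


trainable = llama3_8b_lora_params()
print(f"{trainable:,} trainable parameters "
      f"({100 * trainable / TOTAL_PARAMS:.3f}% of the model)")
```

Only the adapters receive gradients and optimizer state, which is where most of the memory savings over full fine-tuning come from.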

By proactively identifying and addressing technical limitations, developers can optimize the utilization of Meta-Llama models and overcome challenges to achieve the desired outcomes in their AI projects.

Conclusion

In conclusion, understanding Meta-Llama models opens up a world of possibilities in AI and text generation. Meta-Llama 3, available in 8B and 70B sizes, can enhance a wide range of projects. Setting up your Meta-Llama environment requires attention to detail, including hardware, software, and ethical considerations. By navigating challenges and limitations while prioritizing ethical use and safety, you can harness the power of Meta-Llama models effectively. Stay informed, explore the training data, and ensure responsible application to leverage the full potential of Meta-Llama technology for innovative solutions.

novita.ai is the one-stop platform for limitless creativity, giving you access to 100+ APIs. From image generation and language processing to audio enhancement and video manipulation, with cheap pay-as-you-go pricing, it frees you from GPU maintenance hassles while you build your own products. Try it for free.
