How to Use Llama 3 on Novita AI GPU Instance

Learn how to use Llama 3 on a Novita AI GPU Instance. Unleash the power of Llama 3 with our step-by-step guide. Dive in now!

Introduction

Llama 3, a cutting-edge open-source language model, is transforming the field of NLP. Available in 8-billion and 70-billion parameter versions, Llama 3 offers rich opportunities for data scientists and AI enthusiasts. By following Meta’s responsible use guide, users can explore text generation, language translation, and more with this versatile tool. Running Llama 3 yourself requires technical expertise and a solid background in machine learning. Join the NLP revolution and unleash the power of Llama 3 for intelligent data frameworks and content creation. With the help of a GPU cloud such as Novita AI GPU Pods, running Llama 3 becomes much easier.

What is Llama 3?

Llama 3, a language model developed by Meta, is making waves in the NLP community. This open-source model stands out for its scale, with up to 70 billion parameters, and for its advanced capabilities. Thanks to its extensive training, Llama 3 offers cutting-edge text generation and language translation. Running Llama 3 yourself requires the technical expertise to install the necessary tools and libraries. Meta’s model promises groundbreaking advancements in data science and intelligent systems. Embrace Llama 3 for new possibilities in natural language understanding and generation.

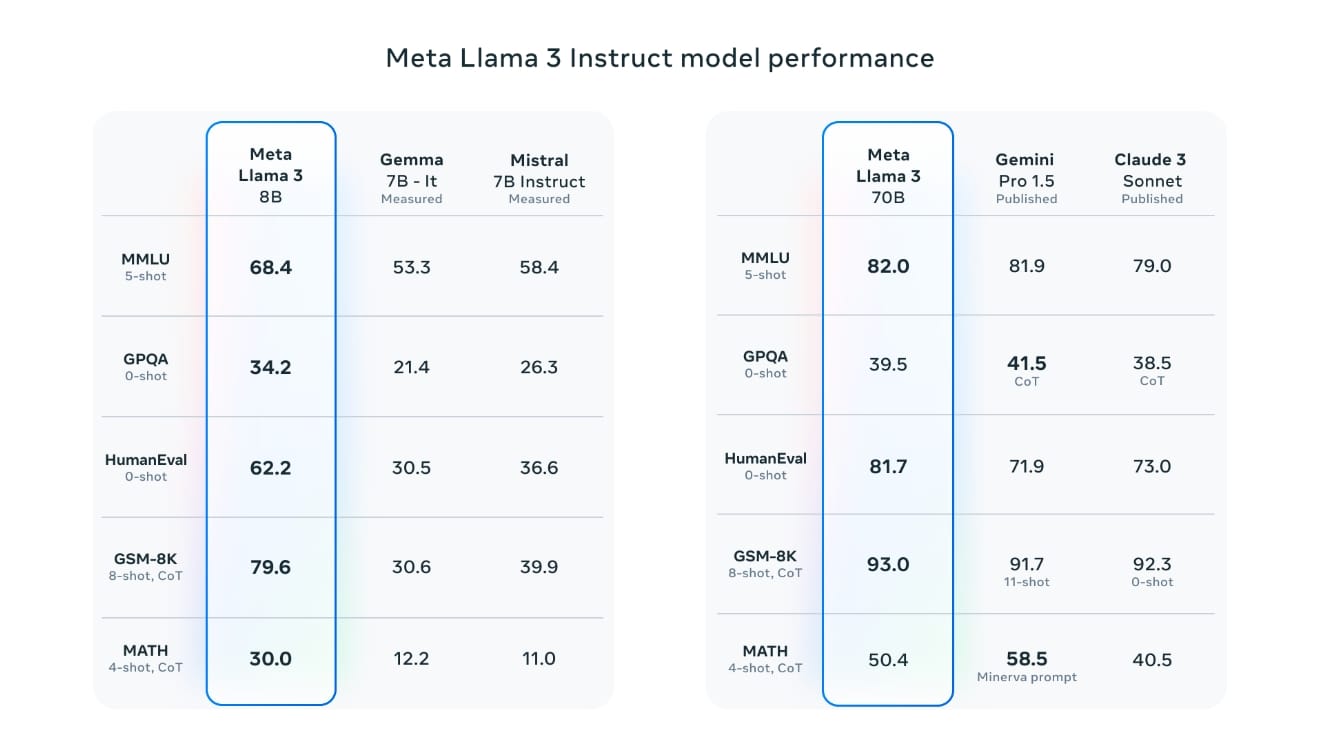

What Makes Llama 3 Stand Out?

Llama 3 stands out due to its open-source nature, fostering collaboration and innovation. With options of 8 billion or 70 billion parameters, it offers scalability. Its advanced features cater to diverse needs, making it a versatile tool in the AI landscape.


Key Features of vLLM-Supported Models

- Scale and Complexity: Trained on massive datasets of terabytes of text, these models learn from diverse sources to gain a nuanced understanding of language.

- Sequence Handling: These models excel at managing sequences, from generating paragraphs to translating languages. Their strength lies in handling complex dependencies through transformer-based architectures.

- Versatility Across Domains: These models extend beyond text generation to tasks like sentiment analysis, question answering, and summarization. Their adaptability makes them valuable in fields from healthcare to finance.

- Memory Efficiency: vLLM serves these models with PagedAttention, which stores each sequence's attention key-value (KV) cache in fixed-size blocks allocated on demand instead of one large pre-reserved buffer. This avoids wasted memory and lets more requests run concurrently.
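To make the paging idea concrete, here is a toy Python sketch of block-based KV-cache bookkeeping. It is a simplified illustration under our own assumptions, not vLLM's implementation: the `BlockPool` and `Sequence` classes and the block sizes are hypothetical, and no attention math is performed. Each sequence maps its logical blocks to physical block IDs from a shared pool and claims a new block only when its last one fills up.

```python
from dataclasses import dataclass, field


class BlockPool:
    """Hypothetical shared pool of fixed-size physical KV-cache blocks."""

    def __init__(self, num_blocks: int, block_size: int):
        self.block_size = block_size  # tokens stored per block
        self.free_blocks = list(range(num_blocks))

    def allocate(self) -> int:
        if not self.free_blocks:
            raise MemoryError("KV-cache block pool exhausted")
        return self.free_blocks.pop()

    def release(self, block_ids: list[int]) -> None:
        self.free_blocks.extend(block_ids)


@dataclass
class Sequence:
    """Tracks one sequence's block table: logical block i -> physical block id."""
    pool: BlockPool
    num_tokens: int = 0
    block_table: list[int] = field(default_factory=list)

    def append_token(self) -> None:
        # A new physical block is claimed only when the last one is full.
        if self.num_tokens % self.pool.block_size == 0:
            self.block_table.append(self.pool.allocate())
        self.num_tokens += 1

    def release(self) -> None:
        # Finished sequences hand their blocks back for other requests to reuse.
        self.pool.release(self.block_table)
        self.block_table = []
        self.num_tokens = 0


pool = BlockPool(num_blocks=8, block_size=16)
seq = Sequence(pool)
for _ in range(20):
    seq.append_token()
print(len(seq.block_table), "blocks in use,", len(pool.free_blocks), "free")
```

With a block size of 16, a sequence that has generated 20 tokens holds just 2 blocks, whereas reserving space up front for a 128-token maximum would tie up 8 blocks whether or not they are used.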

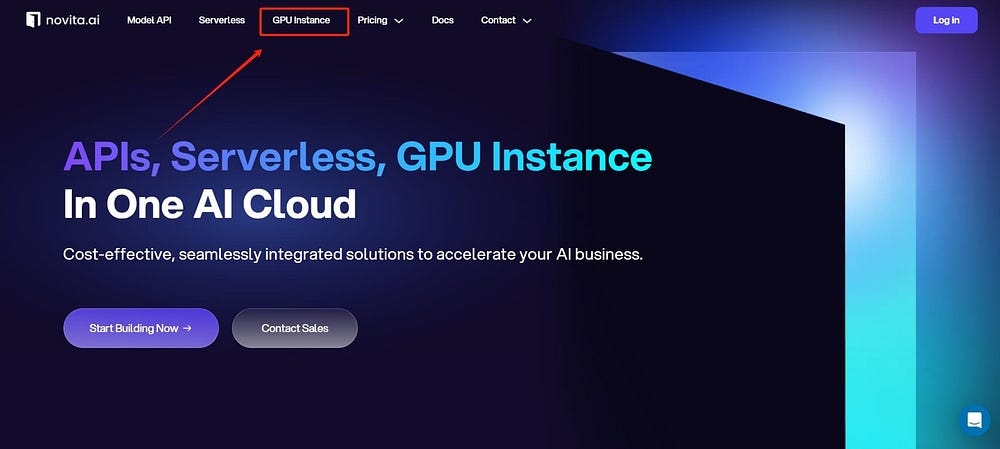

Operating LLMs on Novita AI GPU Instance: A Step-by-Step Guide

Running an LLM such as Llama 3 requires substantial GPU compute, so for developers a suitable GPU server is essential. If you want to deploy a Large Language Model (LLM) on a pod, here’s a systematic approach to help you get started:

1. Create a Novita AI GPU Instance Account

To begin, visit the Novita AI GPU Instance website and click on the “Log in” button. You’ll need to provide an email address and password to register.

2. Set Up Your Workspace

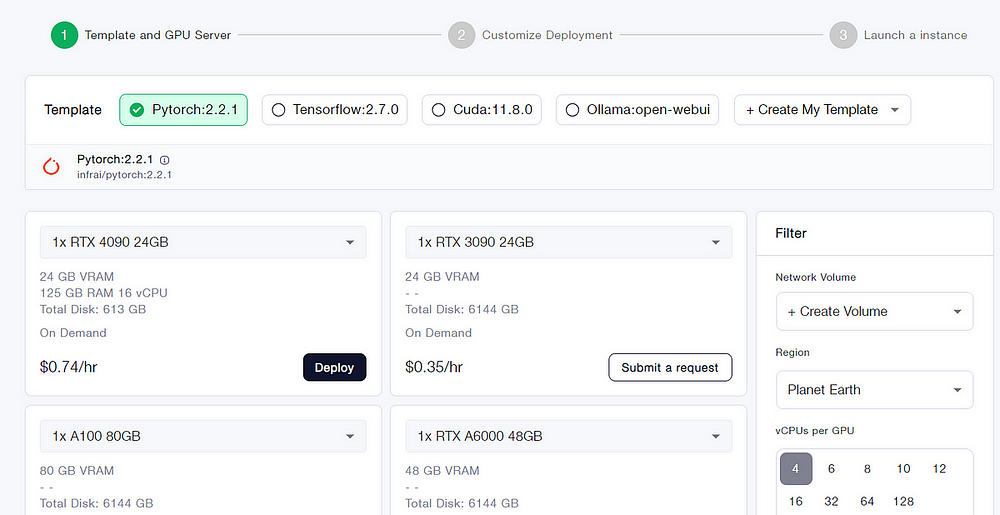

Click the template link, then pick your instance template, such as PyTorch, TensorFlow, CUDA, or Ollama. You can also create your own template by clicking the last button.

3. Choose a GPU-Enabled Server

Novita AI GPU Pods offer access to powerful GPUs such as the NVIDIA A100 SXM, RTX 4090, and RTX 3090. These servers come with substantial VRAM and RAM, making them suitable for efficiently training even the most complex AI models.
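Before picking a GPU, it helps to check whether the model weights fit in its VRAM. The sketch below is a back-of-the-envelope estimate assuming 16-bit (FP16/BF16) weights at 2 bytes per parameter; it ignores the KV cache, activations, and framework overhead, so treat the result as a lower bound. The VRAM figures are the standard capacities of the cards listed above (the A100 SXM also comes in a 40 GB variant).

```python
import math

# Assumption: 16-bit (FP16/BF16) weights take 2 bytes per parameter.
BYTES_PER_PARAM_16BIT = 2

# Standard VRAM (GB) of the GPUs mentioned above.
GPU_VRAM_GB = {"A100 SXM 80GB": 80, "RTX 4090": 24, "RTX 3090": 24}


def weight_memory_gb(num_params: float, bytes_per_param: float = BYTES_PER_PARAM_16BIT) -> float:
    """Memory for the weights alone, in GB (1 GB = 1e9 bytes)."""
    return num_params * bytes_per_param / 1e9


def gpus_needed(num_params: float, vram_gb: float) -> int:
    """Minimum count of identical GPUs whose combined VRAM holds the weights.

    Lower bound only: KV cache, activations and runtime overhead are ignored.
    """
    return math.ceil(weight_memory_gb(num_params) / vram_gb)


for name, vram in GPU_VRAM_GB.items():
    for label, params in (("Llama 3 8B", 8e9), ("Llama 3 70B", 70e9)):
        print(f"{label} on {name}: {weight_memory_gb(params):.0f} GB of weights, "
              f"at least {gpus_needed(params, vram)} GPU(s)")
```

By this estimate, Llama 3 8B needs about 16 GB for its weights and fits on a single 24 GB RTX 4090 or RTX 3090 with room left for the KV cache, while Llama 3 70B needs about 140 GB and must be split across at least two 80 GB A100s (for example with vLLM's `tensor_parallel_size` option).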

Click “Select” to continue.

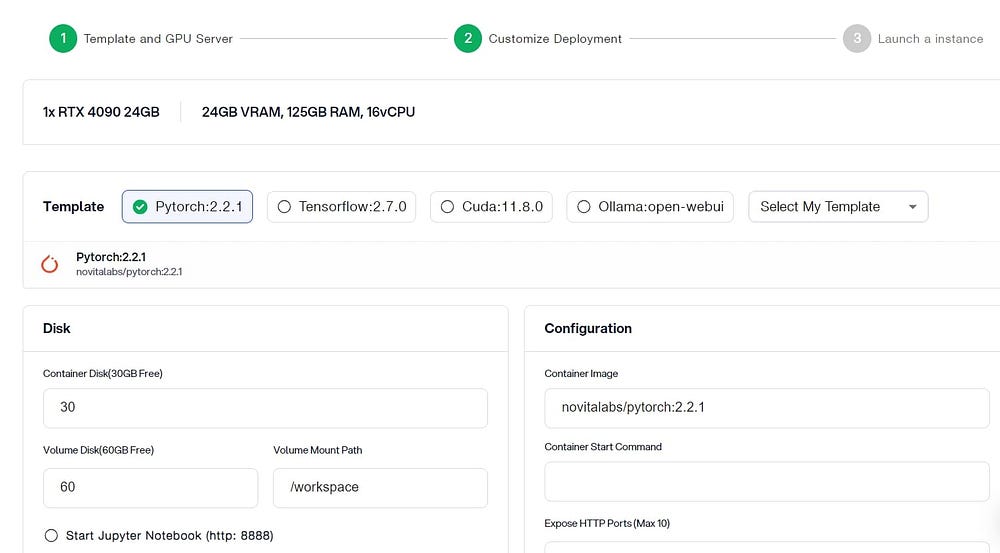

You can customize this data according to your own needs. There are 30GB free in the Container Disk and 60GB free in the Volume Disk, and if the free limit is exceeded, additional charges will be incurred.
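A quick way to see whether a disk configuration goes beyond the free allowance is to subtract the free quotas stated above. This hypothetical helper only computes the billable gigabytes; it does not compute a price, since per-GB rates are not given here.

```python
# Free disk allowances stated in this guide, in GB.
FREE_CONTAINER_DISK_GB = 30
FREE_VOLUME_DISK_GB = 60


def billable_disk_gb(container_gb: float, volume_gb: float) -> dict[str, float]:
    """Hypothetical helper: GB of each disk that exceed the free allowance."""
    return {
        "container": float(max(0, container_gb - FREE_CONTAINER_DISK_GB)),
        "volume": float(max(0, volume_gb - FREE_VOLUME_DISK_GB)),
    }


# Example: default 30 GB container disk plus a 150 GB volume for large weights.
print(billable_disk_gb(container_gb=30, volume_gb=150))
```

For instance, storing Llama 3 70B weights in 16-bit precision takes roughly 140 GB, so a 150 GB volume disk would put 90 GB beyond the free 60 GB.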

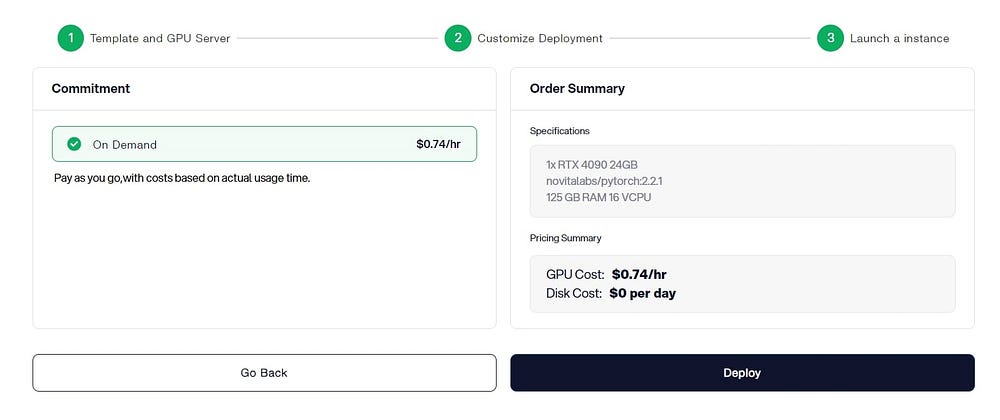

Launch an Instance

Whether it’s for research, development, or deployment of AI applications, Novita AI GPU Instance equipped with CUDA 12 delivers a powerful and efficient GPU computing experience in the cloud.
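Once the instance is running, you can confirm from a terminal or notebook on the pod that the GPU is visible. The sketch below assumes the NVIDIA driver's `nvidia-smi` tool is on the PATH (it normally is on CUDA images) and uses its CSV query mode; the parsing helper is our own and is kept separate so it can be tried without a GPU.

```python
import shutil
import subprocess

# nvidia-smi CSV query: one "name, memory_total_MiB" line per GPU.
QUERY = ["nvidia-smi", "--query-gpu=name,memory.total", "--format=csv,noheader,nounits"]


def parse_gpu_list(output: str) -> list[tuple[str, int]]:
    """Parse lines like 'NVIDIA GeForce RTX 4090, 24564' into (name, MiB) tuples."""
    gpus = []
    for line in output.strip().splitlines():
        if not line.strip():
            continue
        # Split on the last comma so the memory column is always the final field.
        name, mem = (part.strip() for part in line.rsplit(",", 1))
        gpus.append((name, int(mem)))
    return gpus


def list_gpus() -> list[tuple[str, int]]:
    """Run nvidia-smi on the instance; returns [] if the tool is not installed."""
    if shutil.which("nvidia-smi") is None:
        return []
    # check=True raises CalledProcessError if the driver query fails.
    result = subprocess.run(QUERY, capture_output=True, text=True, check=True)
    return parse_gpu_list(result.stdout)
```

Calling `list_gpus()` on the pod should return one `(name, memory_in_MiB)` tuple per GPU; an empty list means no NVIDIA driver tooling is visible in that environment.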

4. Install LLM Software on the Server

Once your instance is running, install the LLM software, for example an inference engine such as vLLM, or use the Ollama template mentioned above. Follow the installation instructions provided by the software package to ensure a correct setup.
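As one possible setup, the sketch below uses vLLM's offline Python API to chat with Llama 3 8B Instruct. It assumes you have run `pip install vllm` on the instance, that your Hugging Face account has been granted access to the gated `meta-llama/Meta-Llama-3-8B-Instruct` repository, and that your vLLM release provides `LLM.chat` (recent releases do). The sampling values are illustrative, not recommendations.

```python
# Sketch: chat with Llama 3 8B Instruct through vLLM's offline API.
# Assumptions: vLLM is installed, the GPU has enough VRAM (~16 GB for
# 16-bit weights plus KV cache), and you have access to the gated repo.

MODEL_ID = "meta-llama/Meta-Llama-3-8B-Instruct"


def build_messages(user_prompt: str,
                   system_prompt: str = "You are a helpful assistant.") -> list[dict[str, str]]:
    """Build an OpenAI-style message list; vLLM applies Llama 3's chat template."""
    return [
        {"role": "system", "content": system_prompt},
        {"role": "user", "content": user_prompt},
    ]


def chat_with_llama3(user_prompt: str, max_tokens: int = 256) -> str:
    # Imported lazily so this file can be loaded on machines without vLLM.
    from vllm import LLM, SamplingParams

    # Loading the model is slow; in a real service create the LLM once and reuse it.
    llm = LLM(model=MODEL_ID)
    params = SamplingParams(temperature=0.7, top_p=0.9, max_tokens=max_tokens)
    outputs = llm.chat(build_messages(user_prompt), params)
    # One RequestOutput per conversation; take the first completion's text.
    return outputs[0].outputs[0].text
```

On the pod, `print(chat_with_llama3("Summarize what PagedAttention does."))` would download the weights on first run and print the model's reply.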

What You Get by Renting a Novita AI GPU Instance for Llama 3

1. GPU Cloud Access: Novita AI provides a GPU cloud with cost-efficient, flexible GPU resources that can be accessed on demand.

2. Cost-Efficiency: Users can expect significant cost savings, with the potential to reduce cloud costs by up to 50%. This is particularly beneficial for startups and research institutions with budget constraints.

3. Instant Deployment: Users can quickly deploy a Pod, which is a containerized environment tailored for AI workloads. This streamlined deployment process ensures developers can start training their models without any significant setup time.

4. Customizable Templates: Novita AI GPU Instance comes with customizable templates for popular frameworks like PyTorch, allowing users to choose the right configuration for their specific needs.

5. High-Performance Hardware: The service provides access to high-performance GPUs such as the NVIDIA A100 SXM, RTX 4090, and A6000, each with substantial VRAM and RAM, ensuring that even the most demanding AI models can be trained efficiently.

Step-by-Step Guide to Use Novita AI LLM API

Besides renting a GPU in the cloud and deploying models yourself, you have another option: Novita AI’s LLM API service. With this approach, the models are already deployed and you integrate them into your application through an API. This gives you fast and scalable AI capabilities without managing servers.

- Step 1: Visit the website and create/log in to your account.

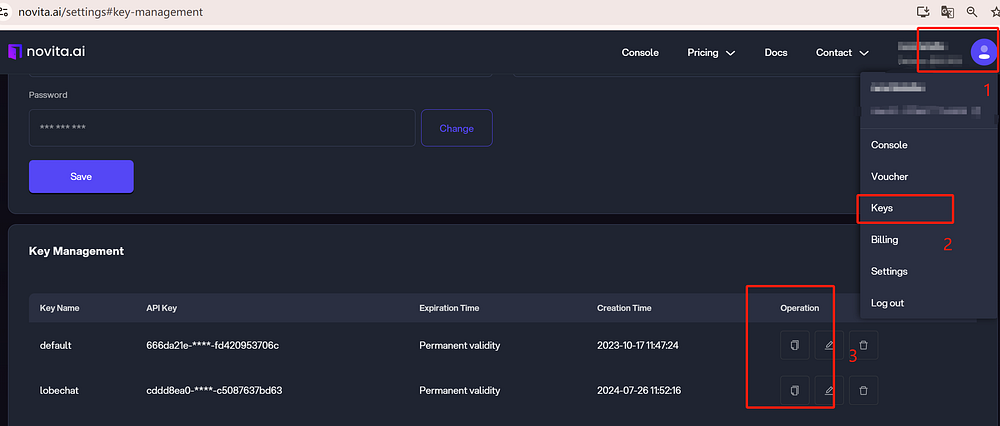

- Step 2: Navigate to “LLM API Key” and obtain the API key you want, like in the following image.

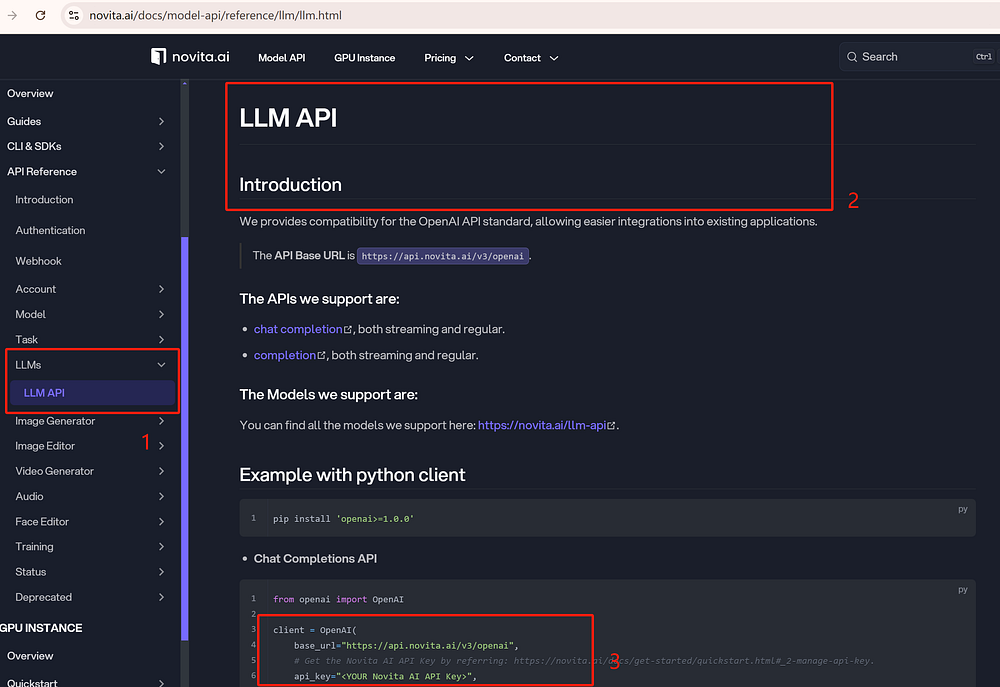

- Step 3: Navigate to API Reference. Find LLM API under the “LLMs”. Use the API key to make the API request.

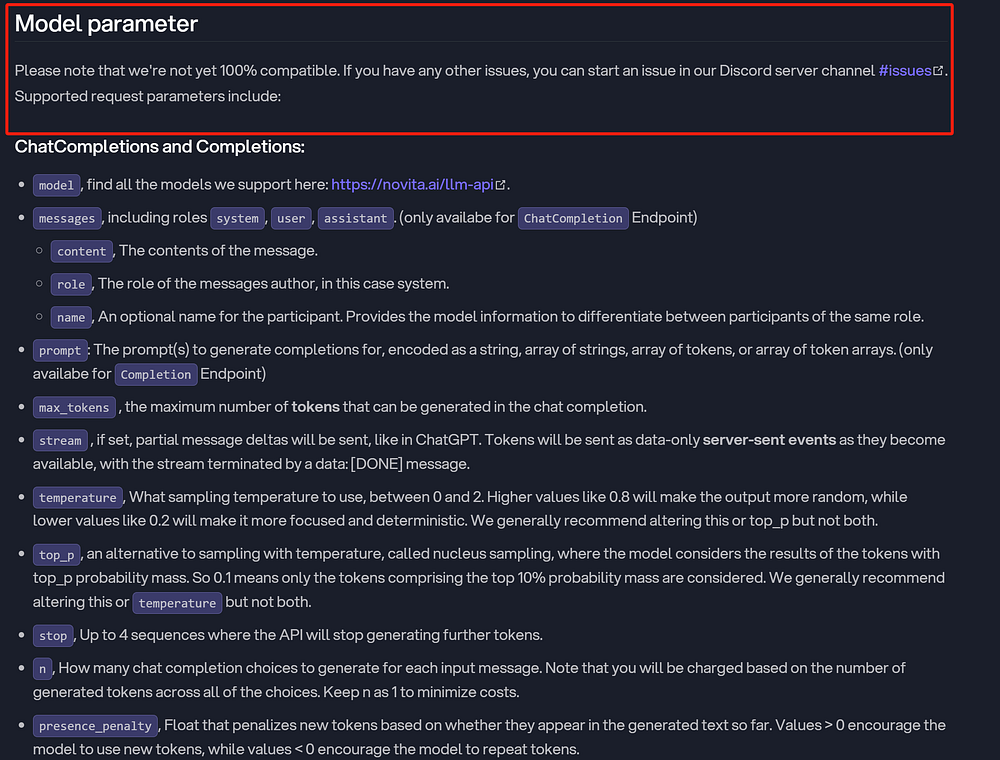

- Step 4: You can adjust the parameters according to your needs.

- Step 5: Integrate it into your existing project backend and wait for the response. Here is a code example for reference.

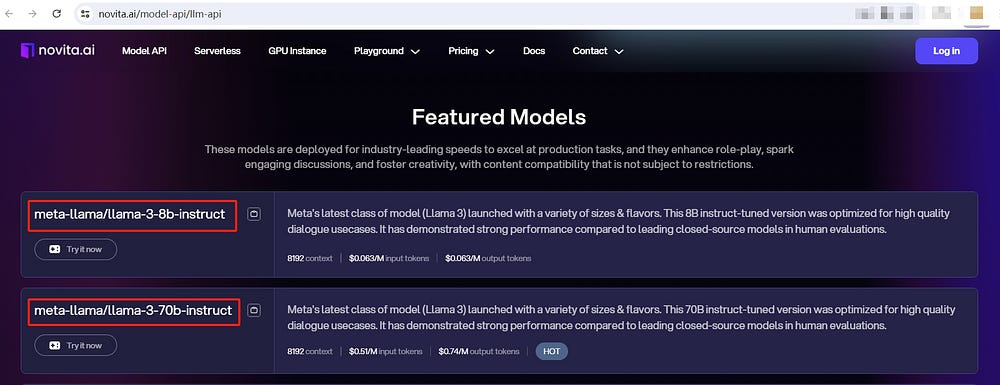
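The same request can be sketched in Python using only the standard library. This sketch assumes Novita AI’s LLM API exposes an OpenAI-compatible chat completions endpoint at `https://api.novita.ai/v3/openai`; confirm the exact URL and parameters in the API Reference before relying on it. The environment variable name `NOVITA_API_KEY` is hypothetical, and the model ID is one of the featured models listed below.

```python
import json
import os
import urllib.request

# Assumption: OpenAI-compatible base URL; verify it in the API Reference (Step 3).
BASE_URL = "https://api.novita.ai/v3/openai"
MODEL = "meta-llama/llama-3-8b-instruct"


def build_request(prompt: str, api_key: str,
                  temperature: float = 0.7, max_tokens: int = 512) -> urllib.request.Request:
    """Build a POST request for a single-turn chat completion."""
    payload = {
        "model": MODEL,
        "messages": [{"role": "user", "content": prompt}],
        "temperature": temperature,  # Step 4: adjust sampling parameters here
        "max_tokens": max_tokens,
    }
    return urllib.request.Request(
        url=f"{BASE_URL}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_key}",  # Step 2: your LLM API key
        },
        method="POST",
    )


def ask(prompt: str) -> str:
    """Step 5: send the request and return the reply text (needs network access)."""
    api_key = os.environ["NOVITA_API_KEY"]  # hypothetical variable name
    with urllib.request.urlopen(build_request(prompt, api_key), timeout=60) as resp:
        body = json.load(resp)
    # Assumes the OpenAI-style response shape.
    return body["choices"][0]["message"]["content"]
```

Step 4 corresponds to the `temperature` and `max_tokens` arguments; other OpenAI-style sampling fields can be added to `payload` in the same way if the API Reference lists them.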

Featured Models

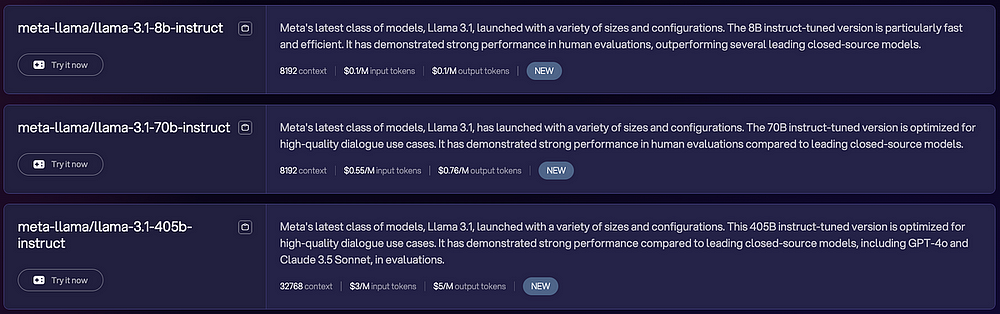

Novita AI LLM API offers three models: meta-llama/llama-3-8b-instruct, meta-llama/llama-3-70b-instruct, and meta-llama/llama-3.1-405b-instruct. These models are deployed for industry-leading speeds to excel at production tasks; they enhance role-play, spark engaging discussions, and foster creativity, with content compatibility that is not subject to restrictions. You can choose the one that fits your needs.

Recently we launched Meta’s latest models, including the advanced meta-llama/llama-3.1-405b-instruct. You can try them in the LLM Playground.

Future Trend of Generative AI in Ecommerce

Conversational Shopping Experience

Consumers can interact with platforms in natural language, asking for product information, seeking advice, or making purchases as if talking to a salesperson. Generative AI tailors recommendations and content based on user behaviour and preferences, enhancing the shopping experience. By analyzing user data, AI can recommend products in real-time conversations, boosting conversion rates.

Visual Content

Generative AI can create personalized images and visuals for e-commerce platforms, product pages, and marketing initiatives. This encompasses a range of elements such as distinctive product images, interactive banners, and social media graphics that adjust based on specific user tastes.

Inventory Management and Demand Forecasting

- Predictive Analytics: Generative AI models can analyze vast amounts of data, including historical sales, customer behaviour, market trends, and external factors, to generate highly accurate demand forecasts at the product, category, or even individual customer level.

- Supply Chain Optimization: By understanding product demand patterns, order management, and customer preferences, Generative AI can help allocate inventory across multiple distribution channels and warehouses, maximizing availability and minimizing stockouts.

Conclusion

Llama 3 is a revolutionary tool for data scientists and AI enthusiasts, offering a rich landscape for exploration in natural language processing. To maximize its potential, follow responsible use guidelines and apply its capabilities to use cases ranging from customer service chatbots to content generation. With continued advancements in AI, Llama 3 is poised to shape the future of intelligent systems. A deep understanding of the training process and a strong technical background are key to harnessing its power responsibly for transformative results.

Frequently Asked Questions

Does vLLM support quantized models?

Yes, vLLM supports quantized models, including formats such as AWQ, GPTQ, and FP8. Quantization reduces the memory footprint and computational cost of a model, thereby improving inference efficiency.
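To show where the memory savings come from, here is a toy symmetric per-tensor int8 quantizer in plain Python. It is a deliberately simple illustration of the general idea, not the algorithm vLLM uses: production formats such as AWQ and GPTQ quantize in groups, often to 4 bits, and calibrate the scales more carefully.

```python
def quantize_int8(weights: list[float]) -> tuple[list[int], float]:
    """Map floats to int8 codes so that w ~= scale * q with q in [-127, 127]."""
    max_abs = max((abs(w) for w in weights), default=0.0)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    # Round to the nearest code and clamp to the symmetric int8 range.
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize(q: list[int], scale: float) -> list[float]:
    """Recover approximate float weights from int8 codes."""
    return [scale * v for v in q]


def weight_bytes(num_params: int, bits: int) -> int:
    """Storage needed for num_params weights at the given bit width."""
    return num_params * bits // 8


weights = [0.42, -1.27, 0.0, 0.9, -0.05]
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)
max_error = max(abs(a - b) for a, b in zip(weights, restored))
print(q, f"max error = {max_error:.4f}")
# Llama 3 8B weights: 16-bit vs 4-bit storage, in GB.
print(weight_bytes(8_000_000_000, 16) / 1e9, weight_bytes(8_000_000_000, 4) / 1e9)
```

Lowering the bits per weight shrinks storage proportionally (about 16 GB versus 4 GB for 8 billion weights), at the cost of a small rounding error. With vLLM itself you would instead load an already-quantized checkpoint, for example passing `quantization="awq"` to `LLM` for an AWQ model.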

Does vLLM require GPU?

Yes, for its standard CUDA build: vLLM requires a GPU with compute capability 7.0 or higher (e.g., V100, T4, RTX 20xx, A100, L4, H100).
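The requirement boils down to a tuple comparison on the GPU's (major, minor) compute capability. The sketch below hard-codes the capabilities of the example GPUs named above plus the RTX 3090 and RTX 4090 offered on Novita AI; the optional `local_capability` helper assumes PyTorch is installed (for example via the PyTorch template) and uses `torch.cuda.get_device_capability`.

```python
# Minimum compute capability for vLLM's CUDA build, as stated above.
MIN_COMPUTE_CAPABILITY = (7, 0)

# (major, minor) compute capabilities of GPUs mentioned in this guide.
KNOWN_GPUS = {
    "V100": (7, 0),
    "T4": (7, 5),
    "RTX 2080": (7, 5),
    "A100": (8, 0),
    "RTX 3090": (8, 6),
    "L4": (8, 9),
    "RTX 4090": (8, 9),
    "H100": (9, 0),
}


def supports_vllm(capability: tuple[int, int]) -> bool:
    """Tuples compare lexicographically, so (7, 5) >= (7, 0) and (6, 1) < (7, 0)."""
    return tuple(capability) >= MIN_COMPUTE_CAPABILITY


def local_capability():
    """Return the first visible GPU's capability via PyTorch, or None without CUDA."""
    import torch  # assumed to be installed on the instance

    if not torch.cuda.is_available():
        return None
    return torch.cuda.get_device_capability(0)


for name, cc in KNOWN_GPUS.items():
    print(f"{name}: compute capability {cc[0]}.{cc[1]}, vLLM OK = {supports_vllm(cc)}")
```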

What is the best binary classifier model?

The best binary classifier model varies based on the use case, dataset, and requirements. Popular models include Logistic Regression, Support Vector Machines (SVM), and Random Forest.

Novita AI is the one-stop platform for limitless creativity that gives you access to 100+ APIs, from image generation and language processing to audio enhancement and video manipulation. With cheap pay-as-you-go pricing, it frees you from GPU maintenance hassles while you build your own products. Try it for free.
