Advanced AI Development with Llama 3 400B

Key Highlights

- Advanced Specifications: Llama 3 400B, with 400 billion parameters, is the largest model in the Llama 3 series and is designed for the most demanding language tasks.

- Model Comparison: Llama 3 400B emphasizes capability, while the smaller Llama models are faster and cheaper to serve for real-time applications.

- Cost-Effective: Competitive pricing for the Llama 3.1 70B model balances performance with budget constraints.

- Impact on AI Research: Marks a significant milestone, with potential for major advancements in language processing and conversational AI.

Introduction

Meta AI is making significant advances in language models with its latest initiative, Llama 3. Most of the excitement centers on its flagship model, which has 400 billion parameters and could change how people engage with and use AI.

Overview of Llama 3 400B

The Llama 3 400B model, the flagship of the Llama 3 series, boasts an impressive 400 billion parameters. Its strong Transformer architecture allows it to handle diverse AI tasks by discerning complex patterns and relationships within data. Pre-trained on a vast multilingual dataset of around 15 trillion tokens, it possesses comprehensive knowledge across languages and domains.

The Llama 3 400B performs comparably to top models like GPT-4, excelling in multilinguality, coding, reasoning, and tool usage. While its full capabilities are still evolving, especially in multimodal functions, it represents a significant advancement in AI and language processing technology.

The Llama 3 400B Model: A Giant Leap Forward

The Llama 3 400B model stands out as the largest and most potent in the Llama 3 series, with a staggering 400 billion parameters. Although still under development, early results hint that it will exceed the performance of its smaller counterparts.

The Llama 3 400B model is anticipated to add multimodal capabilities, hold conversations in multiple languages, handle extended context windows, and deliver stronger overall performance.

Llama 3 400B: Capabilities and Features

The Llama 3 400B model is anticipated to feature a suite of advanced capabilities, including:

- Multimodality: The capacity to process and generate diverse types of data, such as text, images, and audio.

- Multilingual Support: The ability to interact and comprehend multiple languages, thereby eliminating language barriers and facilitating global communication.

- Longer Context Window: The capability to process and understand extended text sequences, resulting in more accurate and contextually relevant responses.

- Stronger Overall Capabilities: The Llama 3 400B model is expected to excel in overall performance, showcasing enhanced accuracy, fluency, and coherence.

Speed and Performance: A Quantum Leap

One of the standout features of the Llama 3 400B model is its potential for exceptional performance. Its vast parameter count is expected to make it considerably more capable than the existing 70B model. A model of this size also needs more compute for every generated token, so its generation speed depends heavily on the serving hardware.

To give you an idea of the scale, the 70B model is already a notable accomplishment; however, the 400B model is projected to be about 5.7 times larger. This substantial increase in size and complexity should enhance accuracy rather than raw processing speed. Consequently, the Llama 3 400B model will likely manage more complex tasks, handle larger datasets, and produce more coherent and precise text.
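The size comparison above is plain arithmetic on the two parameter counts. As a minimal sketch (the function name `size_ratio` is only illustrative), it can be reproduced like this:

```python
def size_ratio(larger_params_b: float, smaller_params_b: float) -> float:
    """Return how many times larger one model is than another, by parameter count."""
    if smaller_params_b <= 0:
        raise ValueError("parameter count must be positive")
    return larger_params_b / smaller_params_b


# Parameter counts in billions, as quoted in the article.
ratio = size_ratio(400, 70)
print(f"The 400B model is about {ratio:.1f}x the size of the 70B model")
```

The ratio only compares parameter counts; it says nothing by itself about speed or benchmark quality.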

Open-source advantage

Another important reason people are so excited about Llama 3 is that it has been released under an open license for research and commercial use. Because the models are openly available, researchers and developers can access these state-of-the-art language capabilities across multiple cloud platforms and ecosystems, which accelerates innovation and enables novel applications of the technology. The new 400B model is powerful enough to compete with GPT-4, which offers great potential for researchers.

Llama 3 400B’s Impact on the AI Industry

The arrival of Llama 3 400B is set to change the AI industry in many areas, from better chatbots and virtual assistants to easier content creation and new forms of creative expression. The model’s advanced language skills should enable more natural conversations and a better user experience.

Its uses go beyond just social media and entertainment. In healthcare, it can help analyze medical data and support diagnoses. In finance, it can improve fraud detection systems. In education, it can tailor learning to fit each person. The possibilities are as wide as creativity itself.

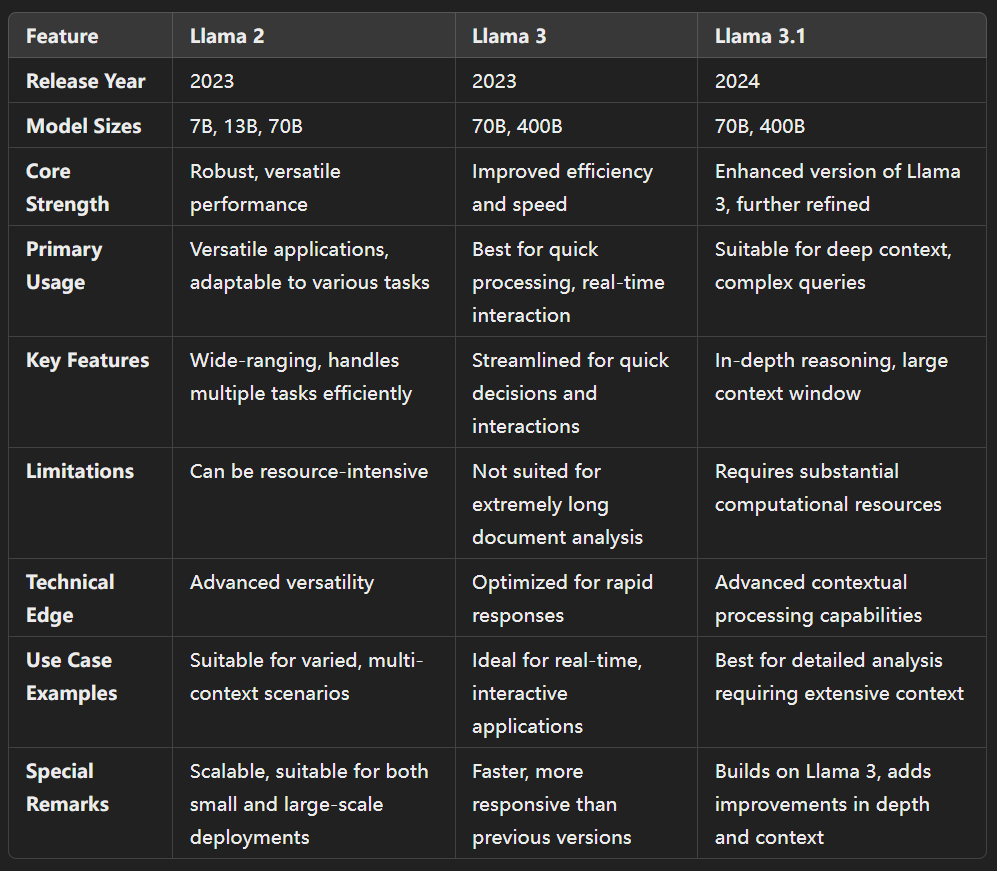

Llama 3 400B compared to other Llama models

The Llama 3 400B model, announced in 2024, is the largest member of the Llama family. Compared with Llama 2 and the smaller Llama 3 models, it prioritizes capability over computational speed: it is more resource-intensive to serve, so the smaller models remain the better fit for real-time engagements and tasks requiring quick processing.

In practice, Llama 3 400B is primarily utilized for model training, involving evaluation, synthetic data generation, and various forms of distillation. The main purpose of Llama 3 400B is to assist AI developers in building robust AI systems. However, when it comes to deploying AI in real-world applications, most developers opt to fine-tune smaller models for practicality. If you’re concerned about cost-effectiveness, ease of use, and scalability, opting for an API-driven approach might be the preferable choice.

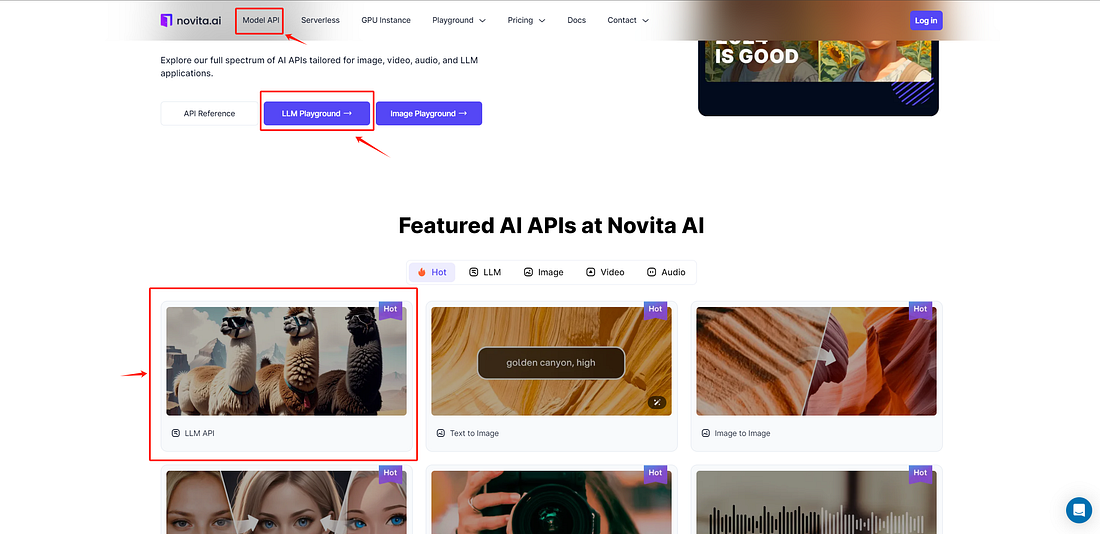

For that approach, you can use the newer Llama models through Novita AI’s LLM API.

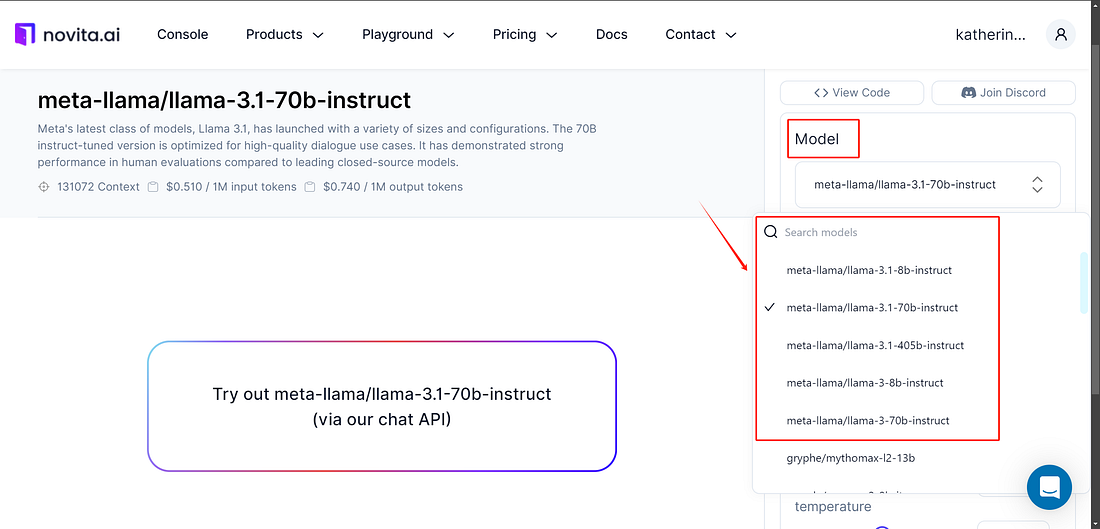

You can also try the newer Llama models in the Novita AI LLM Playground before the Llama 3 API is officially deployed.

- Step 1: To access the Playground, navigate to the “Model API” tab and select “LLM Playground” to begin experimenting with the Llama models.

- Step 2: Choose a model from the Llama family in the Playground.

- Step 3: Enter your prompt and generate: Type the text or question you want the model to respond to into the designated input field.

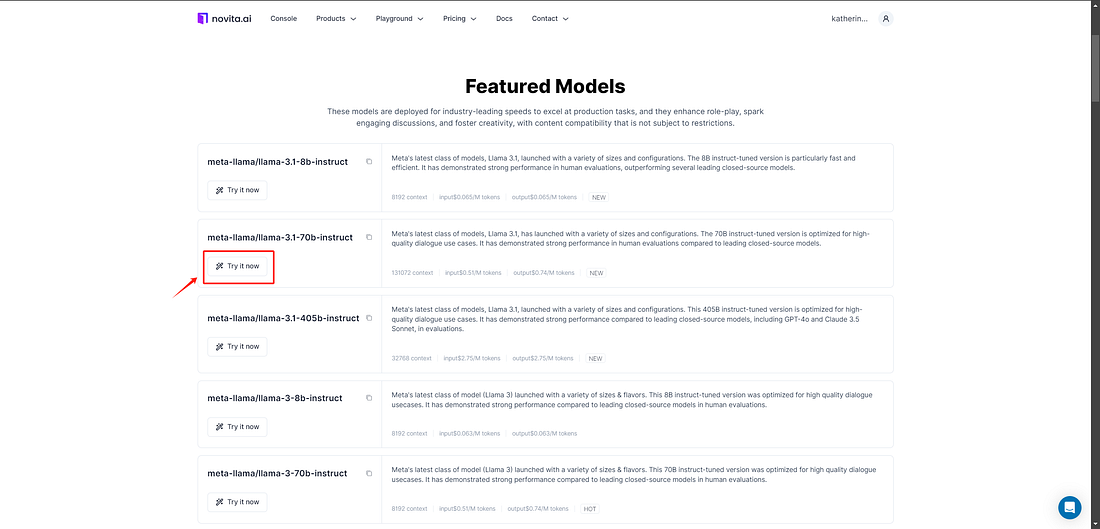

How to deploy LLM API on Novita.AI

Follow these steps to build a language processing application using the Llama model API on Novita AI. This guide is designed to ensure a smooth and efficient development process for developers who need an advanced AI platform.

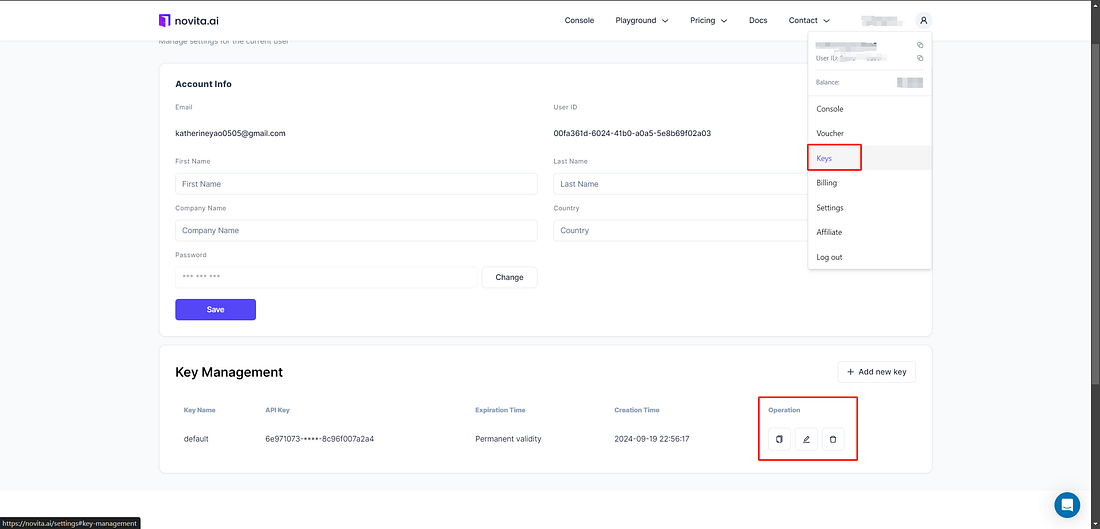

- Step 1: Sign up to Get API Access: Visit the official Novita AI website and create an account. Then, go to the API key management section to obtain your API key.

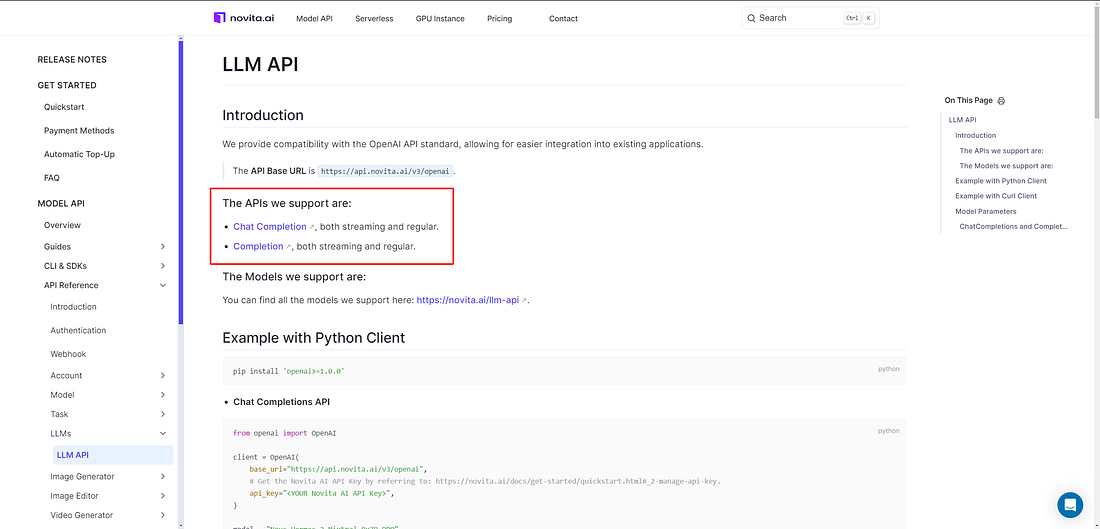

- Step 2: Review the Documentation: Thoroughly read through the Novita AI API documentation.

- Step 3: Integrate the Novita LLM API: Use your API key to authenticate requests to Novita AI’s LLM API, for example to generate concise summaries.

- Step 4: Test and Add Optional Features: Process the API response and present it in a user-friendly format. Consider adding features such as topic extraction or keyword highlighting.
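The integration and post-processing steps above can be sketched in Python using only the standard library. This is a rough illustration, not Novita AI’s official client: the endpoint URL, the model identifier, the OpenAI-style request and response shape, and the `NOVITA_API_KEY` environment variable are all assumptions, so check them against the Novita AI API documentation before use.

```python
import json
import os
import re
import urllib.request

# Assumptions (not from the article): take the real values from the Novita AI docs.
API_URL = "https://api.novita.ai/v3/openai/chat/completions"  # assumed endpoint
MODEL = "meta-llama/llama-3.1-70b-instruct"  # assumed model identifier


def build_summary_request(text: str, api_key: str) -> urllib.request.Request:
    """Build (but do not send) a chat-completion request asking for a summary."""
    payload = {
        "model": MODEL,
        "messages": [
            {"role": "system", "content": "Summarize the user's text in two sentences."},
            {"role": "user", "content": text},
        ],
        "max_tokens": 256,
    }
    return urllib.request.Request(
        API_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


def extract_summary(response_body: dict) -> str:
    """Pull the generated text out of an OpenAI-style response body (assumed shape)."""
    return response_body["choices"][0]["message"]["content"].strip()


def highlight_keywords(summary: str, keywords: list[str]) -> str:
    """Wrap each keyword occurrence in ** ** markers, ignoring case."""
    if not keywords:
        return summary
    pattern = re.compile("|".join(re.escape(k) for k in keywords), re.IGNORECASE)
    return pattern.sub(lambda match: f"**{match.group(0)}**", summary)


def summarize(text: str) -> str:
    """Send the request; needs network access and NOVITA_API_KEY to be set."""
    request = build_summary_request(text, os.environ["NOVITA_API_KEY"])
    with urllib.request.urlopen(request, timeout=30) as response:
        return extract_summary(json.load(response))
```

Calling `highlight_keywords(summarize(article_text), ["Llama"])` would then cover Steps 3 and 4 in one pass. Keeping request building, response parsing, and highlighting in separate functions lets you test each piece without spending API credits.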

The price of the Llama 3.1 70B model

The Novita AI Llama 3.1 70B model, featured in the chart, is priced competitively at $0.51 per input and output, handling up to 8,192 outputs. This makes it an appealing choice for projects that demand significant processing capabilities but are sensitive to budget constraints. Additionally, the model offers a competitive latency of 0.99 seconds and a throughput of 22.09 transactions per second, ensuring it can efficiently manage large data volumes.
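To turn those figures into a budget, a back-of-the-envelope estimate can help. The sketch below rests on two assumptions that the text above does not state: that the $0.51 price applies per one million tokens, and that the throughput figure counts output tokens per second. Confirm both units against the provider’s pricing page.

```python
def estimate_cost_usd(
    input_tokens: int,
    output_tokens: int,
    price_per_million_usd: float = 0.51,  # ASSUMED unit: USD per 1M tokens
) -> float:
    """Estimate request cost when input and output share one per-token price."""
    return (input_tokens + output_tokens) * price_per_million_usd / 1_000_000


def estimate_response_seconds(
    output_tokens: int,
    latency_s: float = 0.99,  # latency figure quoted in the article
    throughput_per_s: float = 22.09,  # ASSUMED unit: output tokens per second
) -> float:
    """Estimate wall-clock time: initial latency plus generation time."""
    return latency_s + output_tokens / throughput_per_s


# Hypothetical workload: 1,500 prompt tokens and 500 generated tokens.
print(f"cost: ${estimate_cost_usd(1_500, 500):.6f}")
print(f"time: {estimate_response_seconds(500):.1f} s")
```

If the real units differ, only the default parameter values need to change.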

The image offers detailed information about various suppliers of the Llama 3.1 70B model, enabling you to compare and choose the service that best meets your performance and pricing needs.

The Future of AI Research

The Llama 3 400B model marks a significant milestone in AI language model development. Its release is poised to profoundly influence the field of natural language processing, empowering researchers and developers to craft more sophisticated and precise AI systems.

As training and enhancements progress for the Llama 3 400B, we anticipate significant breakthroughs in language translation, text generation, and conversational AI. The potential applications for this technology are extensive, offering promising opportunities in customer service, language education, and content creation.

Conclusion

The Llama 3 400B model is a breakthrough in AI technology, offering speed, performance, and open-source advantages. It’s set to revolutionize the sector and set new benchmarks for research and development. Despite cost concerns, the future of AI research looks bright with models like Llama 3 400B leading the way. Embrace this innovative technology and keep up with AI advancements.

Frequently Asked Questions

Can Llama 3 400B be considered a turning point for AI accessibility?

Llama 3 400B could revolutionize AI accessibility with its open license and advancements in generative AI. Its state-of-the-art performance and new capabilities promote widespread use and foster innovation.

What are the hardware requirements for Llama 3 400B?

Running the released Llama 3.1 405B model at 16-bit precision requires more than 800GB of GPU memory for the weights alone. No single GPU offers that much VRAM, so the model must be split across multiple high-end GPUs, which makes cloud-based solutions a practical necessity.
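The 800GB figure follows from the parameter count: each parameter stored at 16-bit precision takes 2 bytes. Here is a minimal sketch of that arithmetic, counting weights only (no KV cache or activations) and assuming hypothetical 80GB GPUs for the device count:

```python
import math

# Bytes needed to store one parameter at common precisions.
BYTES_PER_PARAM = {"fp16": 2.0, "int8": 1.0, "int4": 0.5}


def weight_memory_gb(params_billions: float, precision: str = "fp16") -> float:
    """Memory for the weights alone, in GB (1 GB = 10^9 bytes)."""
    return params_billions * BYTES_PER_PARAM[precision]


def gpus_needed(
    params_billions: float,
    gpu_memory_gb: float = 80.0,  # ASSUMED per-GPU memory, for illustration
    precision: str = "fp16",
) -> int:
    """Lower bound on GPU count; real deployments need extra headroom."""
    return math.ceil(weight_memory_gb(params_billions, precision) / gpu_memory_gb)


print(weight_memory_gb(405))  # 810.0 GB at 16-bit precision
print(gpus_needed(405))       # 11 GPUs of 80 GB each, weights only
```

Lower-precision formats shrink the footprint proportionally, which is why quantized variants are common for models of this size.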

Can Llama 3 run locally?

Yes, the smaller Llama 3 models can run on local hardware. Running a local server allows you to integrate Llama 3 into other applications and build your own application for specific tasks.

Is Llama 3.1 better than Llama 3?

Llama 3.1 improves on Llama 3 in data quality, model scale, and complexity management. Whether it performs better for you depends on the specific use case and benchmark.

Is Llama 3 better than GPT-4?

Benchmark tests show Llama 3 excels in specific tasks, while GPT-4 leads in creative generation and coherence in long dialogues, so each model has its own strengths.

Originally published at Novita AI

