Introducing Code Llama: A State-of-the-art large language model for code generation.

Introduction

Generative AI is on the brink of fully automating code generation, though it hasn’t reached that milestone yet. However, the next best tool is Code Llama! Released in 2023, Meta’s latest code generator, Code Llama, is designed to aid coders in various programming tasks. Code Llama focuses on enhancing developer workflows, generating and completing code, and assisting with testing. Let’s explore Code Llama as a standalone asset and then compare it to other generative AI tools specialized in coding.

How Code Llama works

Code Llama is a code-specialized variant of Llama 2, developed by further training Llama 2 on code-specific datasets and sampling more data from these datasets for extended periods. This process has endowed Code Llama with enhanced coding capabilities, building on the foundation of Llama 2. It can generate code and natural language descriptions of code from both code and natural language prompts (e.g., “Write me a function that outputs the Fibonacci sequence.”). Additionally, it can be used for code completion and debugging. Code Llama supports many popular programming languages, including Python, C++, Java, PHP, TypeScript (JavaScript), C#, and Bash.

Meta is releasing four sizes of Code Llama, featuring models with 7B, 13B, 34B, and 70B parameters respectively. Each of these models, except the 70B version, is trained on 500B tokens of code and code-related data, while the 70B model is trained on 1T tokens. The 7B and 13B base and instruct models also include fill-in-the-middle (FIM) capability, allowing them to insert code into existing code and support tasks like code completion right out of the box.

These models cater to different serving and latency requirements. The 7B model, for instance, can be served on a single GPU. While the 34B and 70B models deliver the best results and offer superior coding assistance, the smaller 7B and 13B models are faster and more suitable for tasks requiring low latency, such as real-time code completion.

Code Llama’s Capabilities

The Code Llama models provide stable generations with up to 100,000 tokens of context. All models are trained on sequences of 16,000 tokens and demonstrate improvements with inputs up to 100,000 tokens.

This capability to handle longer input sequences not only facilitates the generation of longer programs but also unlocks new use cases for a code language model (LLM). For instance, users can provide the model with more context from their codebase, making the generated code more relevant. Additionally, it is beneficial in debugging scenarios within larger codebases, where keeping track of all code related to a specific issue can be challenging for developers. By allowing developers to input an entire length of code, the model can assist in debugging extensive code sections.

Additionally, Meta has further fine-tuned two additional variations of Code Llama: Code Llama — Python and Code Llama — Instruct.

Code Llama — Python is a language-specialized version, further fine-tuned on 100B tokens of Python code. Given that Python is the most benchmarked language for code generation and plays a significant role in the AI community alongside PyTorch, this specialized model offers added utility.

Code Llama — Instruct is an instruction fine-tuned and aligned variation. Instruction tuning continues the training process with a different objective, where the model is provided with a “natural language instruction” input and the expected output. This enhances its ability to understand human expectations from prompts. It is recommended to use Code Llama — Instruct variants for code generation, as they are fine-tuned to produce helpful and safe answers in natural language.

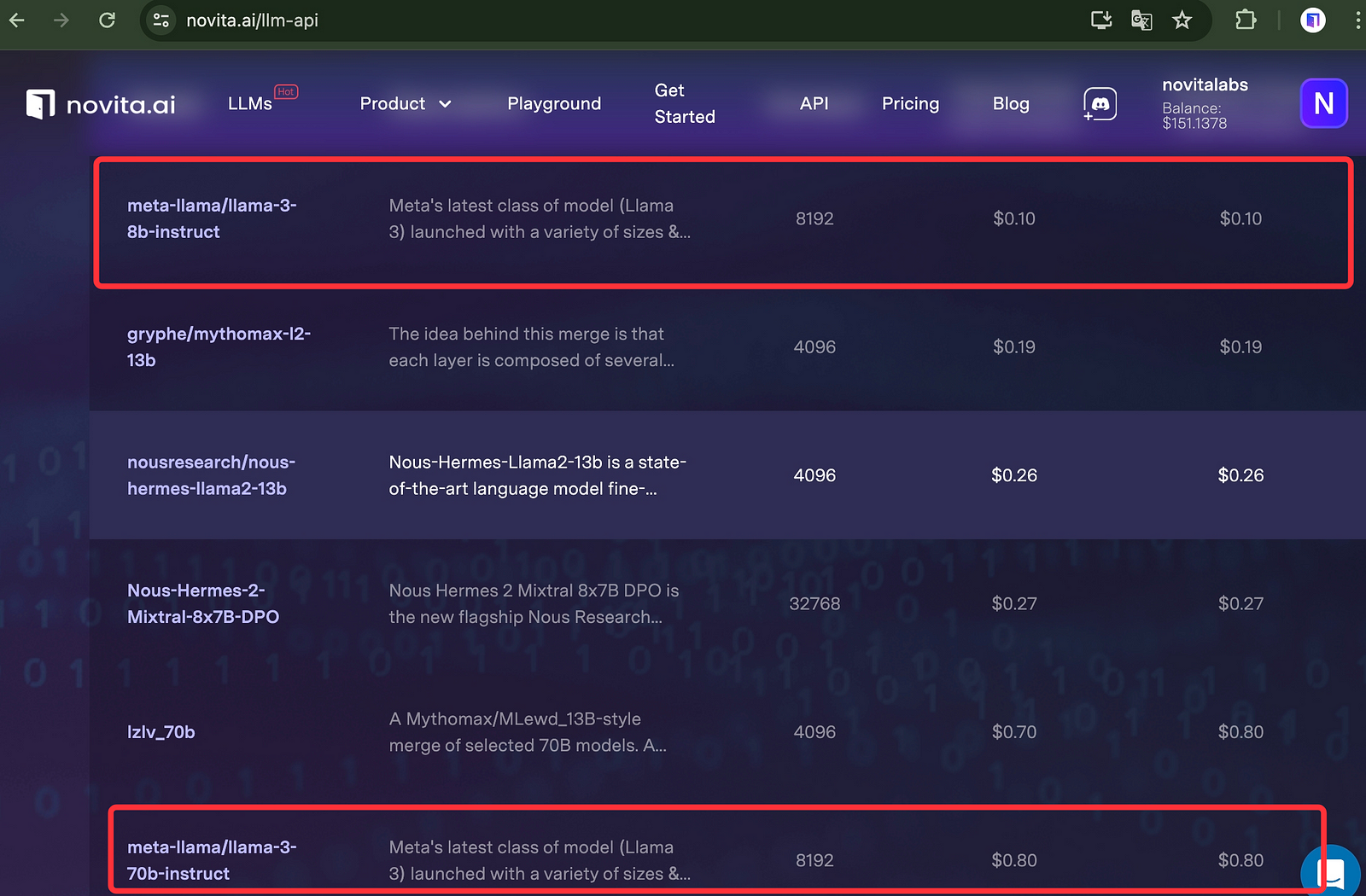

It is not recommended to use Code Llama or Code Llama — Python for general natural language tasks, as these models are not designed to follow natural language instructions. If you want to finish NLP tasks, you can choose Llama2 or Llama3 models, where you can get access by integrating with novita.ai LLM API:

With Cheapest Pricing and scalable models, Novita AI LLM Inference API empowers your LLM incredible stability and rather low latency in less than 2 seconds. LLM performance can be highly enhanced with Novita AI LLM Inference API.

Code Llama is specialized for code-specific tasks and is not suitable as a foundation model for other applications.

Users of the Code Llama models must comply with Meta’s license and acceptable use policy.

Evaluating Code Llama’s performance

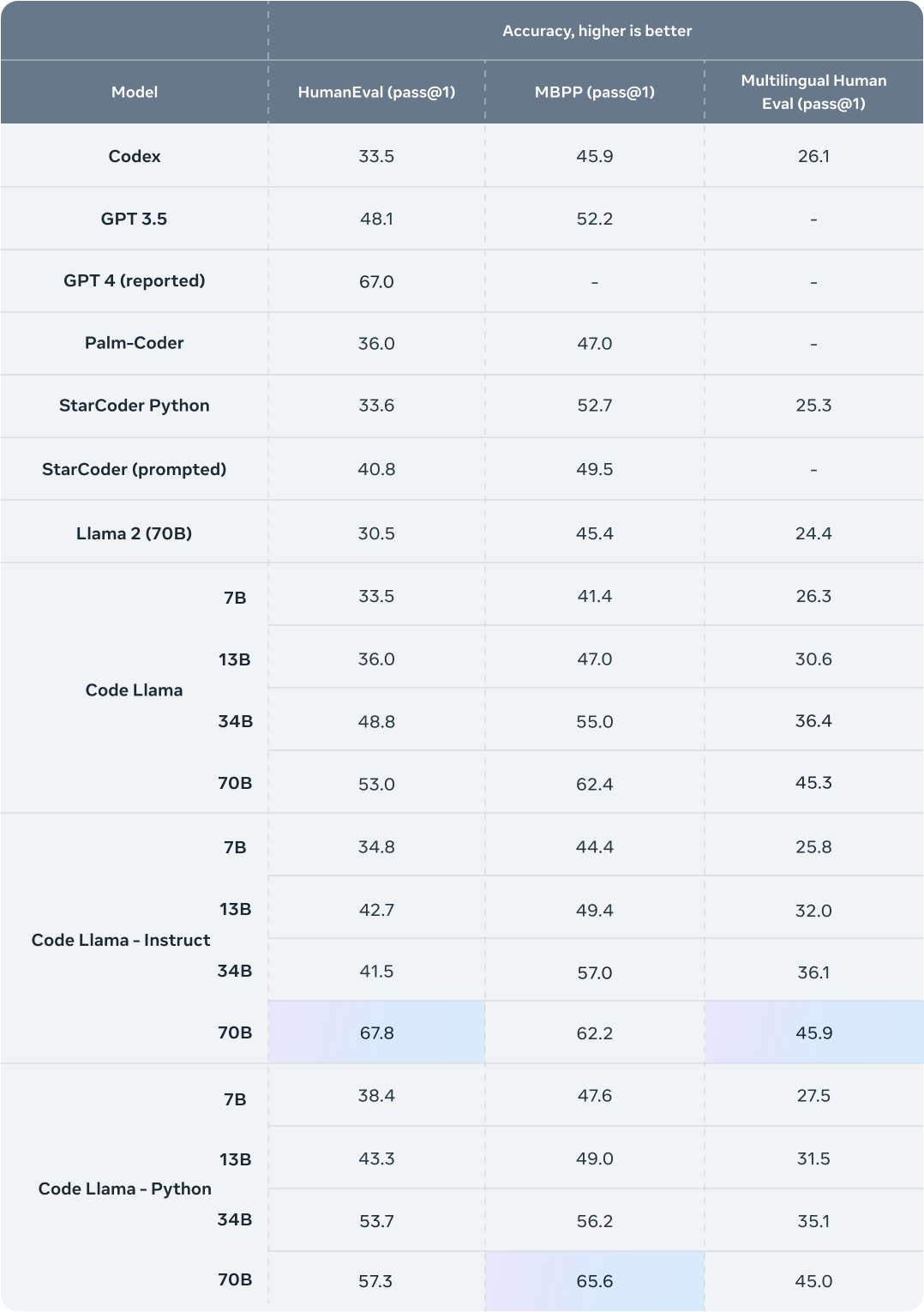

To evaluate Code Llama’s performance against existing solutions, Meta used two popular coding benchmarks: HumanEval and Mostly Basic Python Programming (MBPP). HumanEval assesses the model’s ability to complete code based on docstrings, while MBPP tests the model’s capability to write code based on a description.

Benchmark testing demonstrated that Code Llama outperformed other open-source, code-specific language models and exceeded the performance of Llama 2. For instance, Code Llama 34B achieved a score of 53.7% on HumanEval and 56.2% on MBPP, the highest among state-of-the-art open solutions and comparable to ChatGPT.

As with all cutting-edge technology, Code Llama comes with certain risks. Building AI models responsibly is crucial, so numerous safety measures were implemented before releasing Code Llama. As part of the red teaming efforts, a quantitative evaluation was conducted to assess the risk of Code Llama generating malicious code. Prompts designed to solicit malicious code with clear intent were used, and Code Llama’s responses were compared to those of ChatGPT (GPT-3.5 Turbo). The results indicated that Code Llama provided safer responses.

How to use Code Llama

Code Llama is not directly accessible through a website or platform. Instead, it is available on GitHub and can be downloaded for local use. Here are some ways Code Llama can be accessed:

1. Chatbot Integration: Perplexity-AI, a text-based AI similar to ChatGPT, has integrated Code Llama’s 34B parameter version. This allows users to generate code through text-based prompting. Perplexity-AI offers multiple variants focused on specific programming languages such as Python, Java, C++, and JavaScript.

2. Model Integration: Hugging Face, an open-source platform, provides default models that can be used to generate code easily.

3. Integrated Development Environment (IDE): Ollama is a library of Code Llama that can be downloaded and integrated directly into IDEs. This enables users to utilize large language models locally.

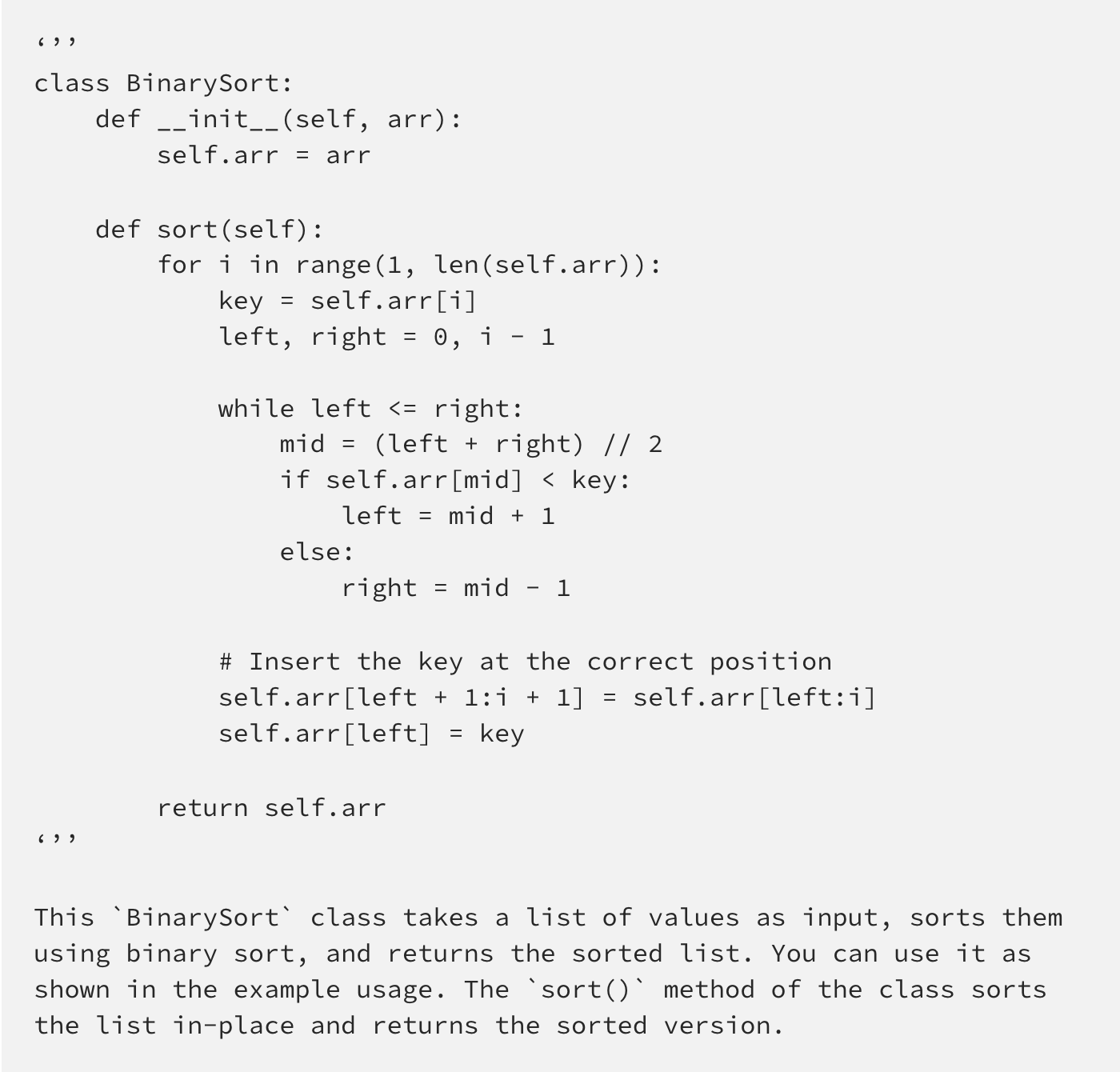

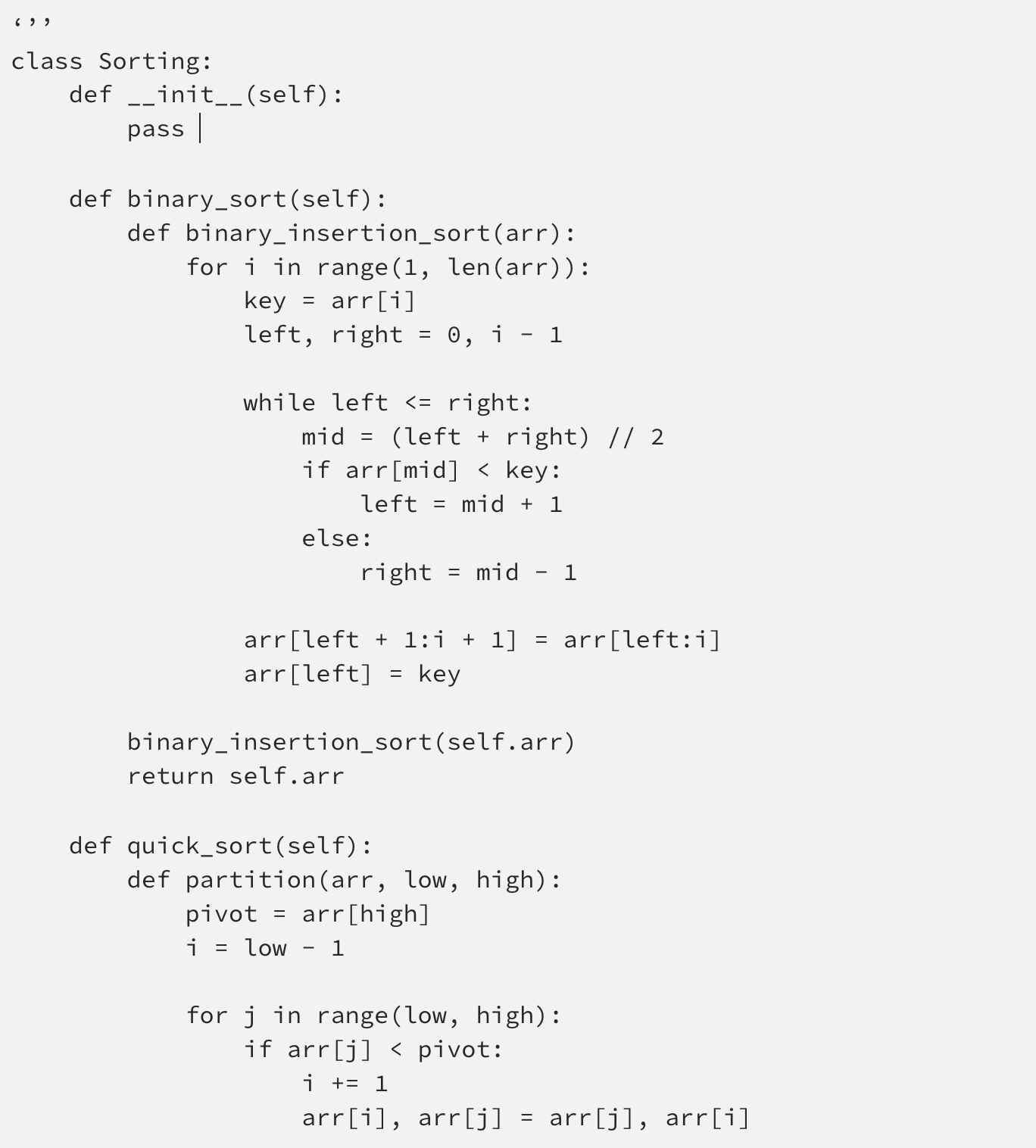

In this tutorial, we’ll showcase Code Llama’s capabilities using Perplexity AI. We’ll start by defining and creating sorting functions. First, we’ll create a binary sort function, which is akin to insertion sort but employs binary search to determine the element’s position. Following that, we’ll inquire with Perplexity if any other algorithms are comparable to binary sort. Lastly, we’ll request Perplexity to generate a Sorting class containing some of those functions.

Prompt:

I have a Python class that would benefit from a binary sorting algorithm. Could you please create a Python class that takes in a list of values, sorts them using binary sort, and returns the sorted list.

Response:

Prompt:

Is there another algorithm comparable or better than binary sort?

Response:

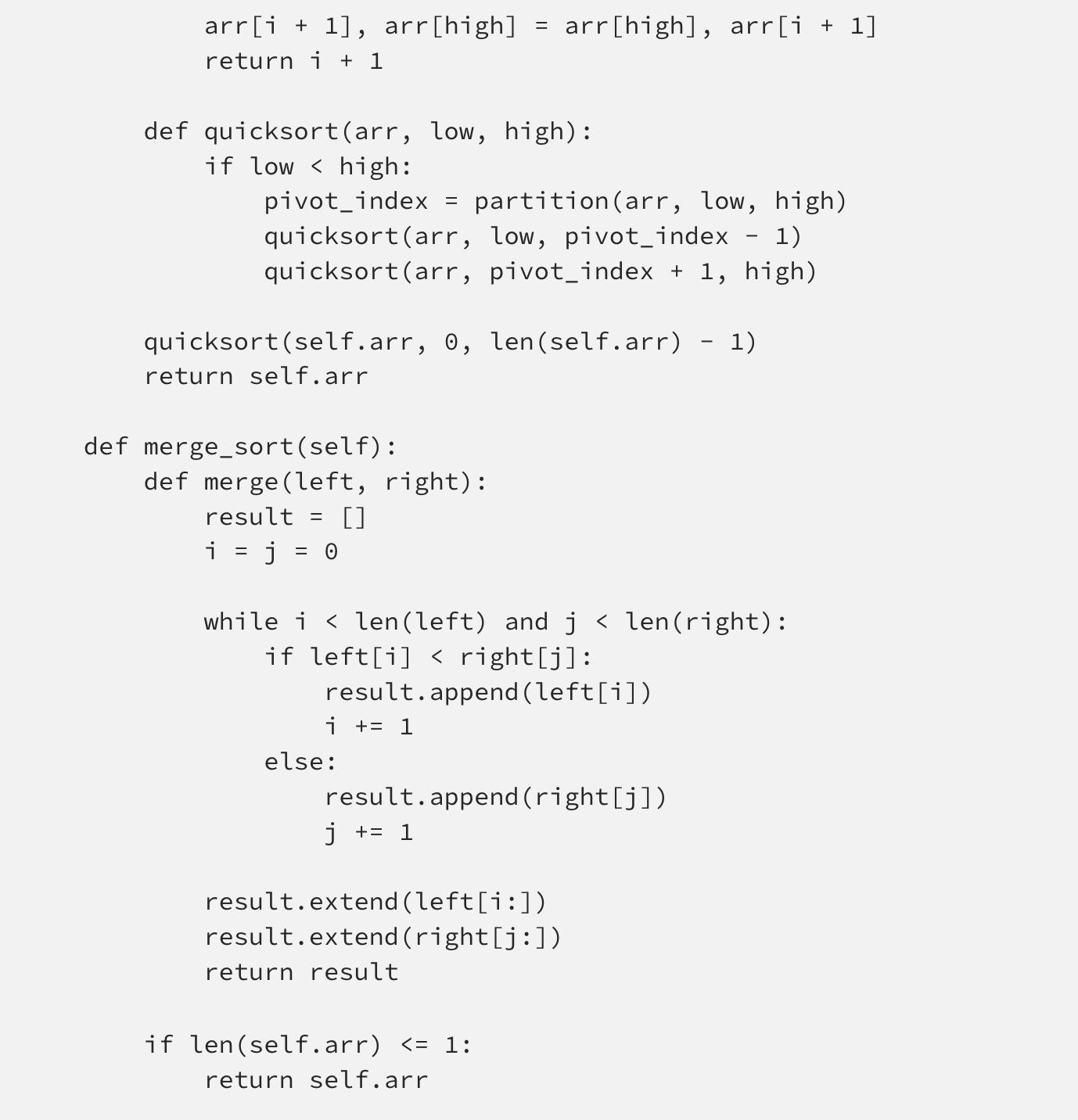

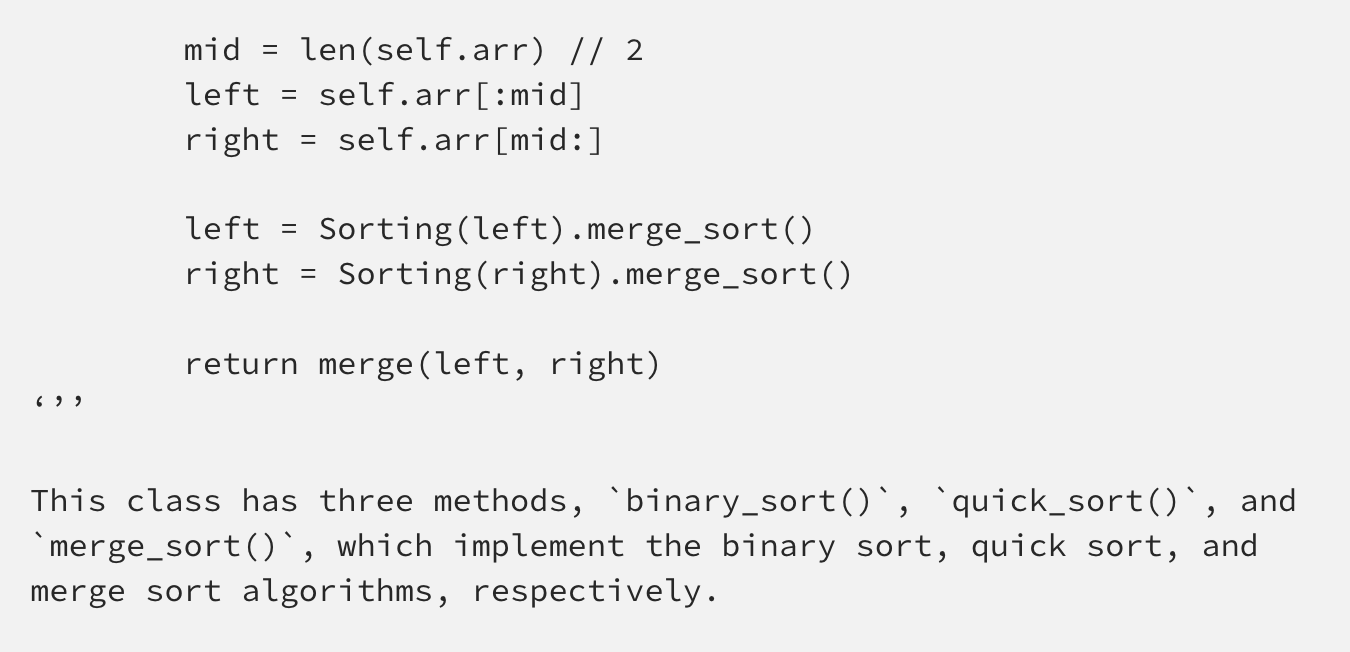

Prompt:

I'd actually like to have sorting options for my needs. Can you create a Sorting class with binary sort, quick sort, and merge sort functionality?

Response:

Who Benefits from Code Llama

Programmers are already using large language models (LLMs) to assist with various tasks, from writing new software to debugging existing code. The objective is to enhance developer workflows, allowing them to focus on the more human-centric aspects of their jobs rather than repetitive tasks.

At Meta, it is believed that AI models, especially LLMs for coding, benefit significantly from an open approach, fostering both innovation and safety. Publicly available, code-specific models can drive the development of new technologies that improve people’s lives. By releasing models like Code Llama, the entire community can assess their capabilities, identify issues, and address vulnerabilities.

Responsible use of Code Llama

Meta’s research paper provides detailed information on Code Llama’s development, the benchmarking tests conducted, the model’s limitations, and the challenges encountered, along with the mitigations taken and future challenges to be addressed.

Additionally, the Responsible Use Guide has been updated to include guidance on developing downstream models responsibly, covering aspects such as:

- Defining content policies and mitigations

- Preparing data

- Fine-tuning the model

- Evaluating and improving performance

- Addressing input- and output-level risks

- Building transparency and reporting mechanisms in user interactions

Developers are advised to evaluate their models using code-specific evaluation benchmarks and to perform safety studies on code-specific use cases, such as generating malware, computer viruses, or malicious code. It is also recommended to use safety datasets for automatic and human evaluations, and to conduct red teaming with adversarial prompts.

The future of generative AI for coding

Code Llama is designed to support software engineers across all sectors, including research, industry, open source projects, NGOs, and businesses. However, there are still many use cases beyond what our base and instruct models can currently support.

We hope that Code Llama will inspire others to leverage Llama 2 to create new, innovative tools for research and commercial products.

Conclusion

Code Llama is a unique tool designed to assist with programming projects, allowing developers to shift their focus from code generation to project objectives. It supports code completion, writes human-readable comments, and generates code.

As an AI programming tool, Code Llama stands out for its offline capability. It can be imported into an IDE, eliminating the need for an internet connection. Trained on 100GB of data, it excels in code completion and generation. There are several versions of Code Llama available, including 7B, 13B, and 34B parameter versions, as well as specialized versions like -Python and -Instruct. Choose the one that best fits your needs, and good luck with your programming endeavors!

novita.ai, the one-stop platform for limitless creativity that gives you access to 100+ APIs. From image generation and language processing to audio enhancement and video manipulation,cheap pay-as-you-go , it frees you from GPU maintenance hassles while building your own products. Try it for free.

Recommended reading

What is the difference between LLM and GPT

LLM Leaderboard 2024 Predictions Revealed

Novita AI LLM Inference Engine: the largest throughput and cheapest inference available