Mastering Text Generation Inference Toolkit for LLMs on Hugging Face

Introduction

Text generation has emerged as a revolutionary capability, transforming how machines interpret and produce human-like text. This surge in popularity has led to the development of numerous tools designed to streamline and enhance the process of working with LLMs.

The swift expansion of Large Language Models, with new versions appearing almost weekly, has driven a concurrent increase in hosting options to support this technology. Among the many tools available, Hugging Face’s Text Generation Inference (TGI) stands out, enabling us to run an LLM as a service on our local machines.

This guide will delve into why Hugging Face TGI is a significant innovation and how you can use it to develop sophisticated AI models capable of generating text that is increasingly indistinguishable from human writing.

What is Hugging Face Text Generation Inference?

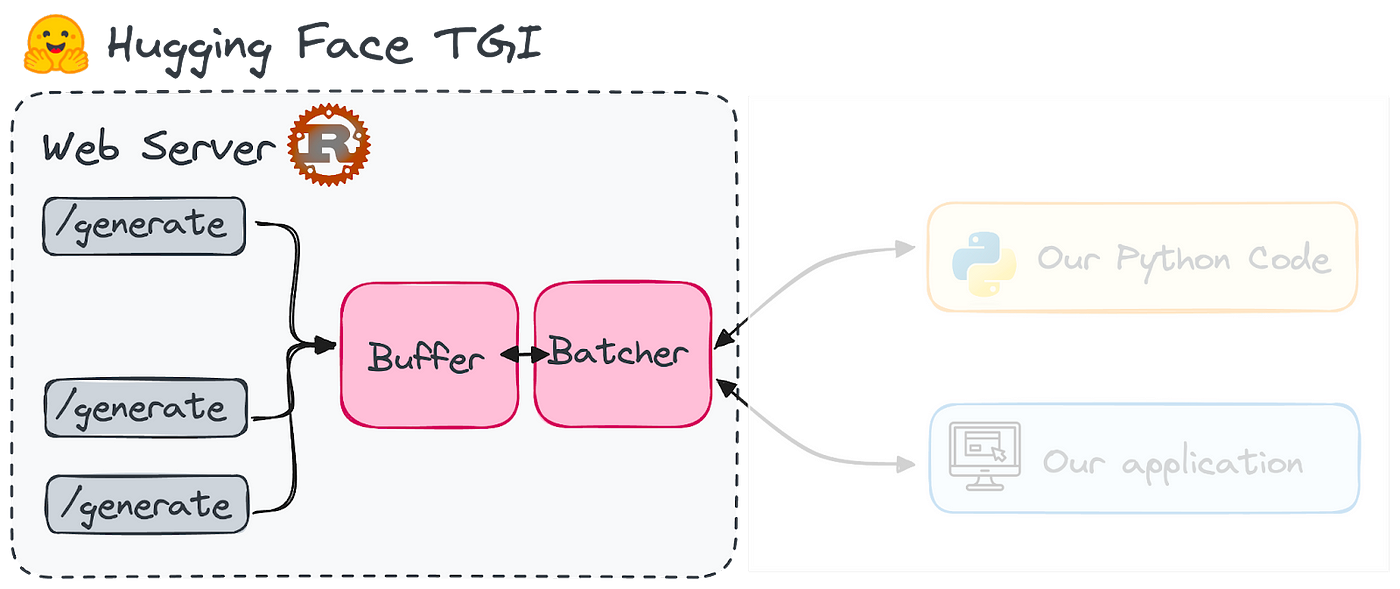

Hugging Face Text Generation Inference (TGI) is a production-ready toolkit, written in Rust and Python, for deploying and serving Large Language Models.

Hugging Face develops and distributes TGI under the HFOILv1.0 license, which allows commercial use as long as TGI serves as an auxiliary tool within the product or service rather than being its primary feature. The key features below show the main challenges it addresses.

Key Features of Hugging Face Text Generation Inference (TGI)

1. High-Performance Text Generation

— TGI employs techniques like Tensor Parallelism, which distributes large models across multiple GPUs, and dynamic batching, which groups prompts together on-the-fly within the server, to optimize the performance of popular open-source LLMs, including StarCoder, BLOOM, GPT-NeoX, Llama, and T5.

2. Efficient Resource Usage

— TGI uses continuous batching, optimized code, and Tensor Parallelism to handle multiple requests simultaneously while minimizing resource usage.

3. Flexibility and Safety

— TGI supports various safety and security features, such as watermarking, logit warping (modifying the logits of specific tokens by infusing a bias value), and stop sequences to ensure responsible and controlled LLM usage.
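As a rough illustration of two of these controls, the sketch below adds a bias to selected logits and cuts generated text at a stop sequence. It is a toy in plain Python, not TGI’s implementation; the function names apply_logit_bias and truncate_at_stop and the sample values are hypothetical.

```python
def apply_logit_bias(logits, bias):
    """Return a copy of logits with bias[token_id] added to the chosen tokens."""
    warped = list(logits)
    for token_id, value in bias.items():
        warped[token_id] += value
    return warped


def truncate_at_stop(text, stop_sequences):
    """Cut text at the earliest occurrence of any stop sequence."""
    cut = len(text)
    for stop in stop_sequences:
        index = text.find(stop)
        if index != -1:
            cut = min(cut, index)
    return text[:cut]


# Toy vocabulary of three tokens; token 0 is boosted by the bias.
print(apply_logit_bias([1.0, 2.0, 3.0], {0: 5.0}))
# The text is cut where the stop sequence begins.
print(truncate_at_stop("Translated text.\nUser: next question", ["\nUser:"]))
```

A positive bias makes a token more likely to be picked and a large negative bias effectively bans it, while stop sequences keep the model from running past the end of its answer.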

Hugging Face has optimized the architecture of some LLMs to run faster with TGI, including popular models like LLaMA, Falcon-7B, and Mistral. A complete list of supported models can be found in the TGI documentation.

Why Use Hugging Face TGI?

Hugging Face has become the go-to platform for developing AI with natural language capabilities. It hosts a plethora of leading open-source models, making it a powerhouse for NLP innovation. Traditionally, these models were too large to run locally, necessitating reliance on cloud-based services.

However, recent advancements in quantization techniques like QLoRA and GPTQ have made some LLMs manageable on everyday devices, including local computers.
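To see why quantization matters, here is a back-of-the-envelope estimate of the memory needed just to hold a model’s weights at different precisions. It is a simplification that ignores activations, the attention cache, and framework overhead; the helper name weight_memory_gb and the 7-billion-parameter figure are illustrative assumptions.

```python
def weight_memory_gb(num_params: float, bits_per_param: int) -> float:
    """Approximate memory for the weights alone, in gigabytes (10^9 bytes)."""
    total_bytes = num_params * bits_per_param / 8
    return total_bytes / 1e9


# A hypothetical 7-billion-parameter model at three precisions.
for bits in (16, 8, 4):
    print(f"{bits}-bit weights: {weight_memory_gb(7e9, bits):.1f} GB")
```

Going from 16-bit to 4-bit weights cuts the estimate by a factor of four, which is what brings such a model within reach of a single consumer GPU.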

Hugging Face TGI is specifically designed to enable LLMs as a service on local machines. This approach addresses the challenge of booting up an LLM by keeping the model ready in the background for quick responses, avoiding long waits for each prompt. Imagine having an endpoint with a collection of top language models ready to generate text on demand.

What stands out about TGI is its straightforward implementation. It supports various streamlined model architectures, making deployment quick and easy.

Moreover, TGI is already powering several live projects. Some examples include:

- Hugging Chat, an open-source interface for open-access models.

- OpenAssistant, an open-source community initiative for training LLMs.

- nat.dev, a platform for exploring and comparing LLMs.

However, there is a notable limitation: TGI is not yet compatible with the GPUs in ARM-based Macs, that is, the M1 series and later models.

Setting Up Hugging Face TGI

The first step is to set up a Hugging Face TGI endpoint.

To install and run Hugging Face TGI locally, begin by installing Rust. Afterward, set up a Python virtual environment with Python version 3.9 or higher, for instance, by using conda:

#Installing Rust

curl --proto '=https' --tlsv1.2 -sSf https://sh.rustup.rs | sh

#Creating a python virtual environment

conda create -n text-generation-inference python=3.9

conda activate text-generation-inference

Moreover, it’s essential to install Protoc. Hugging Face recommends using version 21.12 for the best compatibility. Please note that you will need sudo privileges to carry out this installation.

PROTOC_ZIP=protoc-21.12-linux-x86_64.zip

curl -OL https://github.com/protocolbuffers/protobuf/releases/download/v21.12/$PROTOC_ZIP

sudo unzip -o $PROTOC_ZIP -d /usr/local bin/protoc

sudo unzip -o $PROTOC_ZIP -d /usr/local 'include/*'

rm -f $PROTOC_ZIP

The steps that follow install TGI from scratch, which may not be straightforward. If you encounter any issues during this process, you can skip ahead and use a Docker image directly, which is simpler. Both scenarios will be covered.

Once all the prerequisites are fulfilled, we can proceed to set up TGI. Start by cloning the GitHub repository:

git clone https://github.com/huggingface/text-generation-inference.git

Next, navigate to the TGI directory on your local machine and install it using the following commands:

cd text-generation-inference/

BUILD_EXTENSIONS=False make install

Now, let’s explore how to utilize TGI, both with and without Docker. For demonstration, I’ll be employing the Falcon-7B model, which is licensed under Apache 2.0.

Launching a model without Docker

The installation process has generated a new command, “text-generation-launcher,” which initiates the TGI server. To activate an endpoint using the Falcon-7B model, simply execute the following command:

text-generation-launcher --model-id tiiuae/falcon-7b-instruct --num-shard 1 --port 8080 --quantize bitsandbytes

Here’s a breakdown of each input parameter:

- model-id: This refers to the specific name of the model as listed on the Hugging Face Hub. In our case, for Falcon-7B, it is “tiiuae/falcon-7b-instruct”.

- num-shard: Set this parameter to the number of GPUs to use. This guide uses 1; if the flag is omitted, the launcher uses all visible GPUs.

- port: This specifies the port on which the server listens for requests. The launcher’s own default is 3000, so pass --port 8080 explicitly to match the examples in this guide.

- quantize: For users with GPUs having VRAM under 24 GB, model quantization is necessary to avoid running out of memory. In the preceding command, “bitsandbytes” is selected, which quantizes the weights on the fly as the model loads. Another option, GPTQ (“gptq”), is also available, but it expects a model whose weights were already quantized with GPTQ.
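If you start the server from a script, the same parameters can be assembled programmatically. The sketch below only builds the command line from the four flags described above; the helper name build_launcher_command is hypothetical, and the defaults are this guide’s values rather than the launcher’s.

```python
import shlex


def build_launcher_command(model_id, num_shard=1, port=8080, quantize=None):
    """Assemble the argument list for text-generation-launcher."""
    command = [
        "text-generation-launcher",
        "--model-id", model_id,
        "--num-shard", str(num_shard),
        "--port", str(port),
    ]
    if quantize is not None:
        # Only add the flag when a quantization scheme is requested.
        command += ["--quantize", quantize]
    return command


command = build_launcher_command("tiiuae/falcon-7b-instruct", quantize="bitsandbytes")
print(shlex.join(command))
```

Passing the resulting list to subprocess.run(command) would start the server, provided text-generation-launcher is installed and on your PATH.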

TGI Applications

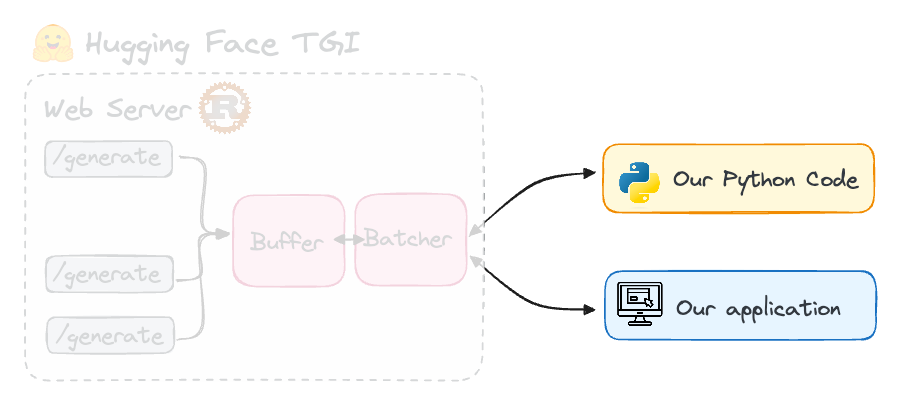

There are several ways to integrate the Text Generation Inference (TGI) server into your applications. Once it’s operational, you can interact with it by sending a POST request to the /generate endpoint to obtain results.

If you want continuous token streaming, use the /generate_stream endpoint instead, as demonstrated below. These requests can be made with your preferred tool, such as curl, Python, or TypeScript. To query the model served by TGI from a Python script, install the text-generation library with the following pip command:

pip install text-generation

After launching the TGI server, create an instance of Client() with the URL pointing to the model-serving endpoint. Then call generate() to send requests to the endpoint from Python.

from text_generation import Client

client = Client("http://127.0.0.1:8080")

print(client.generate("Your prompt here!", max_new_tokens=20).generated_text)

To stream with the Client, call generate_stream() instead. This lets you receive tokens in real time as they are generated on the server:

for response in client.generate_stream("Your prompt here!", max_new_tokens=12):
    # Skip special tokens such as end-of-sequence markers
    if not response.token.special:
        print(response.token.text, end="")
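If you would rather consume the stream without the client library, the streaming endpoint sends server-sent events, one JSON object per “data:” line. The sketch below parses such lines into token text. The sample lines are hand-written stand-ins for server output, and their exact field layout is an assumption to check against your TGI version.

```python
import json


def iter_token_texts(lines):
    """Yield the text of each non-special token from server-sent event lines."""
    for line in lines:
        line = line.strip()
        if not line.startswith("data:"):
            continue  # skip blank separator lines and other SSE fields
        event = json.loads(line[len("data:"):])
        token = event["token"]
        if not token.get("special", False):
            yield token["text"]


# Hypothetical sample of what the stream might contain.
sample_lines = [
    'data:{"token":{"id":1,"text":"Hello","logprob":-0.1,"special":false}}',
    "",
    'data:{"token":{"id":2,"text":" world","logprob":-0.2,"special":false}}',
    'data:{"token":{"id":3,"text":"</s>","logprob":-0.3,"special":true}}',
]
print("".join(iter_token_texts(sample_lines)))
```

In a real application, the lines would come from iterating over the HTTP response body instead of a list.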

Prompting a Model Through TGI

Keep in mind that you’ll be interacting with the deployed model on your endpoint, which in our case is Falcon-7B. Through TGI, we establish a live endpoint that facilitates retrieving responses from the chosen LLM.

As a result, with Python, prompts can be directly sent to the LLM via the client hosted on our local device, accessible through port 8080. Therefore, the corresponding Python script would resemble this:

from text_generation import Client

client = Client("http://127.0.0.1:8080")

print(client.generate("Translate the following sentence into spanish: 'What does Large Language Model mean?'", max_new_tokens=500).generated_text)

The script receives a response from the model without loading the model itself. Instead of Python, we can also query the LLM with curl, as in the following example:

curl 127.0.0.1:8080/generate \
    -X POST \
    -d '{"inputs":"Code in Javascript a function to remove all spaces in a string and then print the string twice.","parameters":{"max_new_tokens":500}}' \
    -H 'Content-Type: application/json'
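The same request can be made from Python with only the standard library, which is handy when you cannot install extra packages. This is a minimal sketch: the helper names build_generate_request and generate are hypothetical, and the server address is the one used throughout this guide.

```python
import json
import urllib.request


def build_generate_request(prompt, max_new_tokens=500, base_url="http://127.0.0.1:8080"):
    """Build (but do not send) a POST request for the /generate endpoint."""
    payload = {"inputs": prompt, "parameters": {"max_new_tokens": max_new_tokens}}
    return urllib.request.Request(
        url=f"{base_url}/generate",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def generate(prompt, **kwargs):
    """Send the request and return the generated text; needs a running TGI server."""
    with urllib.request.urlopen(build_generate_request(prompt, **kwargs)) as response:
        return json.loads(response.read())["generated_text"]


request = build_generate_request("Your prompt here!", max_new_tokens=20)
print(request.get_method(), request.full_url)
```

Keeping request construction separate from sending makes the payload easy to inspect before anything goes over the network.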

In my experiments, TGI was impressively fast: with Falcon-7B and a limit of 500 new tokens, generation takes only a few seconds. In contrast, the typical inference approach using the transformers library requires approximately 30 seconds.

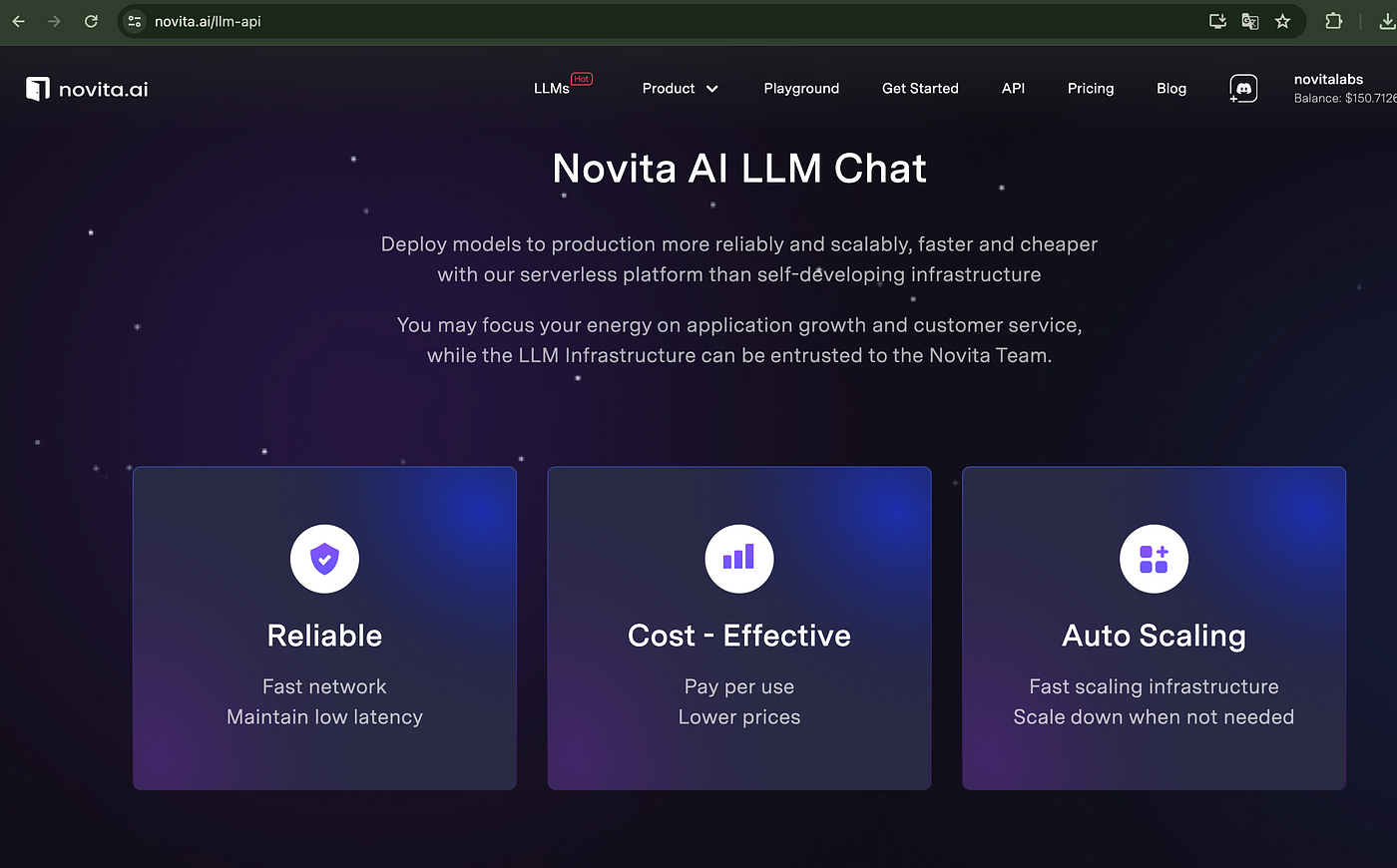

Integration with novita.ai’s LLM API

Starting from version 1.4.0, TGI offers a Messages API that is compatible with the OpenAI Chat Completions API, the same interface that novita.ai’s LLM API exposes. This enables a seamless transition for customers and users from novita.ai models to open-source LLMs served by TGI. The API can be used directly with OpenAI-compatible client libraries or with third-party tools such as LangChain or LlamaIndex.

Because both sides speak the same protocol, existing scripts that use novita.ai models can be pointed at open LLMs hosted on TGI endpoints with minimal changes.

This upgrade facilitates effortless switching, granting immediate access to the extensive advantages of open-source models, including:

- Complete control and transparency regarding models and data.

- Elimination of concerns over rate limits.

- Flexibility to tailor systems to meet unique requirements.

The example below shows the chat completion protocol in use, with the OpenAI Python client pointed at novita.ai’s endpoint:

from openai import OpenAI

client = OpenAI(
    base_url="https://api.novita.ai/v3/openai",
    # Get the NovitaAI API Key by referring: https://novita.ai/get-started/Quick_Start.html#_3-create-an-api-key
    api_key="<YOUR NovitaAI API Key>",
)

model = "meta-llama/llama-3-8b-instruct"
stream = True  # or False
max_tokens = 512

chat_completion_res = client.chat.completions.create(
    model=model,
    messages=[
        {
            "role": "system",
            "content": "Act like you are a helpful assistant.",
        },
        {
            "role": "user",
            "content": "Hi there!",
        },
    ],
    stream=stream,
    max_tokens=max_tokens,
)

if stream:
    for chunk in chat_completion_res:
        print(chunk.choices[0].delta.content or "", end="")
else:
    print(chat_completion_res.choices[0].message.content)

As shown, the OpenAI client library lets us specify the endpoint (base_url) and the API key. For further insight into this integration, refer to the Hugging Face TGI documentation.
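To send the same kind of chat request to a locally running TGI server, only the base URL needs to change. The sketch below builds the request with the standard library so that the payload is visible. It assumes the Messages API is served under /v1/chat/completions on the port used earlier in this guide; the helper name build_chat_request and the model value "tgi" are placeholders.

```python
import json
import urllib.request


def build_chat_request(messages, base_url="http://127.0.0.1:8080", max_tokens=512):
    """Build (but do not send) a chat completion request for a local TGI server."""
    payload = {
        "model": "tgi",  # placeholder; the server answers with the model it was launched with
        "messages": messages,
        "max_tokens": max_tokens,
        "stream": False,
    }
    return urllib.request.Request(
        url=f"{base_url}/v1/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


messages = [
    {"role": "system", "content": "Act like you are a helpful assistant."},
    {"role": "user", "content": "Hi there!"},
]
request = build_chat_request(messages)
print(request.full_url)
```

With the OpenAI client, the equivalent change would be to set base_url to the local server’s /v1 path and keep the rest of the script as it is.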

Practical Tips When Using TGI

Core concepts of LLMs

Before diving into Hugging Face TGI, it’s important to have a good understanding of Large Language Models. Get acquainted with key concepts such as tokenization, attention mechanisms, and the Transformer architecture. These fundamentals are essential for tailoring models and enhancing text generation results.

Model preparation and optimization — understanding Hugging Face

Learn how to prepare and optimize models for your specific needs. This involves selecting the appropriate model, customizing tokenizers, and applying techniques to improve performance without compromising quality.

Familiarize yourself with how Hugging Face operates and how to use the Hugging Face Hub for NLP development. A good starting point is the tutorial “An Introduction to Using Transformers and Hugging Face.”

Understanding the concept of fine-tuning is also essential, as models often need optimization for specific objectives. You can learn how to fine-tune a model with the guide “An Introductory Guide to Fine-Tuning LLMs.”

Generation strategies

Investigate different approaches to text generation, including greedy search, beam search, and top-k sampling. Each method has its advantages and disadvantages, affecting the coherence, creativity, and relevance of the generated text.
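To make the difference concrete, the toy sketch below picks the next token from a small list of logits in two ways: greedy search always takes the highest-scoring token, while top-k sampling draws at random among the k best. The logits and function names are invented for illustration and are not tied to any particular model or to TGI’s internals.

```python
import math
import random


def softmax(logits):
    """Convert logits to probabilities, subtracting the max for numerical stability."""
    peak = max(logits)
    exps = [math.exp(x - peak) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def greedy_pick(logits):
    """Return the index of the highest logit."""
    return max(range(len(logits)), key=lambda i: logits[i])


def top_k_sample(logits, k, rng):
    """Sample an index from the k highest logits, weighted by their softmax."""
    top = sorted(range(len(logits)), key=lambda i: logits[i], reverse=True)[:k]
    probs = softmax([logits[i] for i in top])
    return rng.choices(top, weights=probs, k=1)[0]


logits = [2.0, 0.5, 1.5, -1.0]  # toy scores for a four-token vocabulary
rng = random.Random(0)          # seeded so that runs are repeatable
print("greedy:", greedy_pick(logits))
samples = {top_k_sample(logits, 2, rng) for _ in range(50)}
print("top-2 samples drawn from:", sorted(samples))
```

Greedy search is deterministic and tends toward safe, repetitive text, whereas sampling trades some predictability for variety; beam search, not shown here, keeps several candidate sequences alive instead of a single one.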

Conclusion

Hugging Face’s TGI enables individuals to delve into AI text generation. It provides a user-friendly toolkit for deploying and hosting LLMs for sophisticated NLP applications that demand human-like text.

Although self-hosting large language models offers data privacy and cost control, it necessitates robust hardware. Nevertheless, recent advancements in the field enable smaller models to be utilized directly on our local machines.

