Introducing Mistral's Mixtral 8x7B Model: Everything You Need to Know

Introduction

Mistral AI, a prominent player in the AI industry, has recently introduced its latest model, Mixtral 8x7B. This new model, part of the Mixtral series, builds on the previous models and provides significant improvements in conversation quality, knowledge, and capabilities. With a focus on open technology, Mistral AI aims to make advanced AI models more accessible to the developer community.

Marking a significant milestone, Mistral AI secured roughly €400 million in Series A funding, bringing its valuation to about $2 billion and strengthening its position in the competitive AI sector. The round, led by Andreessen Horowitz, attracted investors including Lightspeed Venture Partners, Salesforce, and BNP Paribas.

Mistral models

Mistral-tiny and Mistral-small currently serve Mistral AI's two released open models, while Mistral-medium uses a higher-performing prototype model that is being tested in a deployed environment. Mistral-large is their flagship model, which the company ranks as the second-best model generally available through an API.

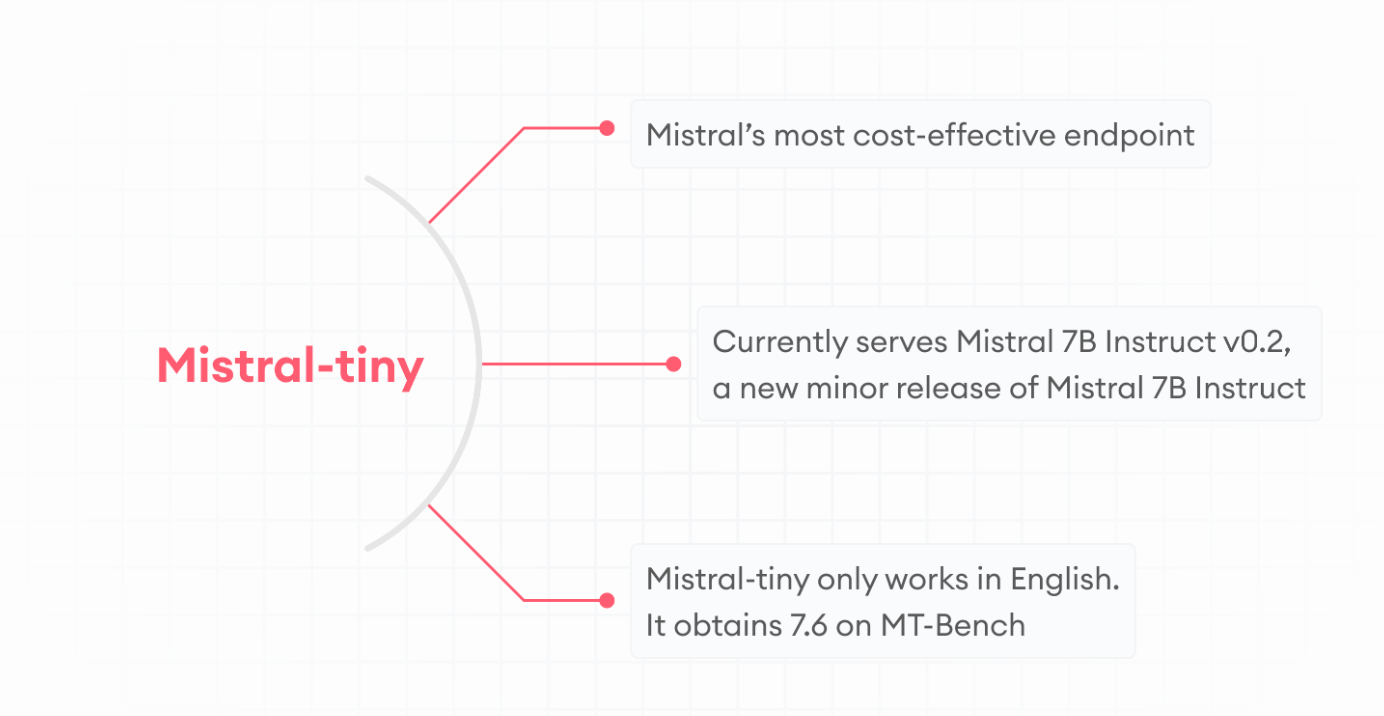

Mistral-tiny: Mistral’s most cost-effective endpoint, currently serving Mistral 7B Instruct v0.2, a new minor release of Mistral 7B Instruct. It works only in English and scores 7.6 on MT-Bench. The instruct model is available for download.

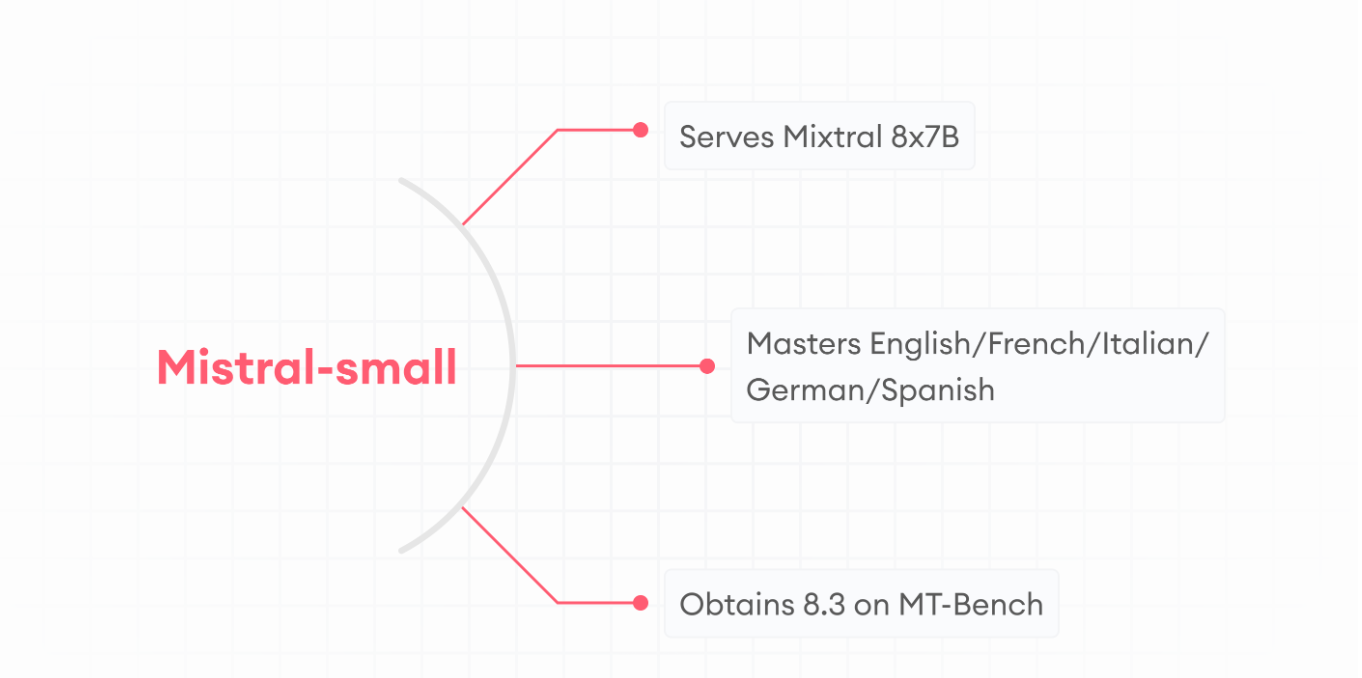

Mistral-small: serves Mixtral 8x7B, which handles English, French, Italian, German, Spanish, and code, and scores 8.3 on MT-Bench. It is well suited to streamlined tasks such as classification, customer support, or text generation, especially at high volume. At the end of February, the Mistral-small endpoint on their API was updated to a model that is significantly better (and faster) than Mixtral 8x7B.

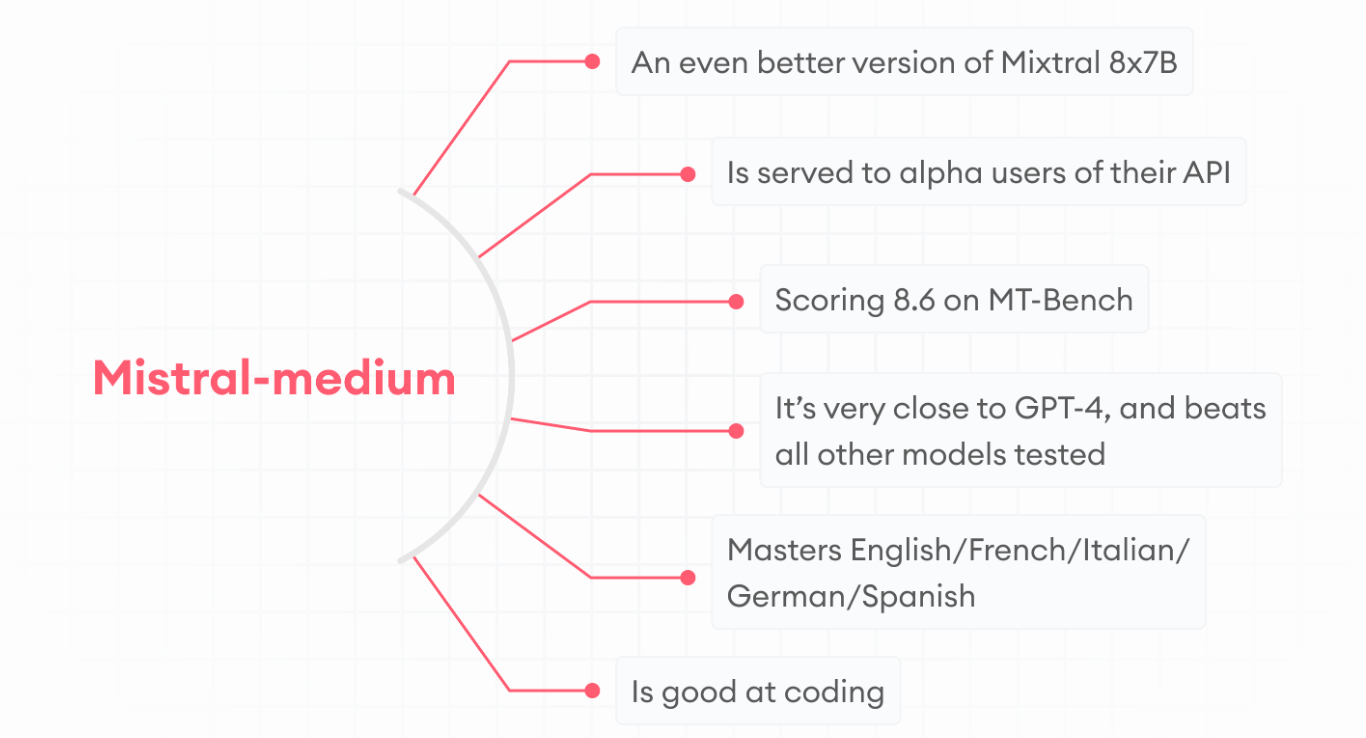

Mistral-medium: represents an upgraded iteration of Mixtral 8x7B, exclusively available to alpha users of their API. Boasting an impressive score of 8.6 on the MT-Bench, it closely rivals GPT-4 and outperforms all other tested models. Proficient in English, French, Italian, German, and Spanish, as well as adept in coding, Mistral-medium is well-suited for tasks demanding moderate reasoning. These include activities like data extraction, summarizing documents, or crafting job and product descriptions.

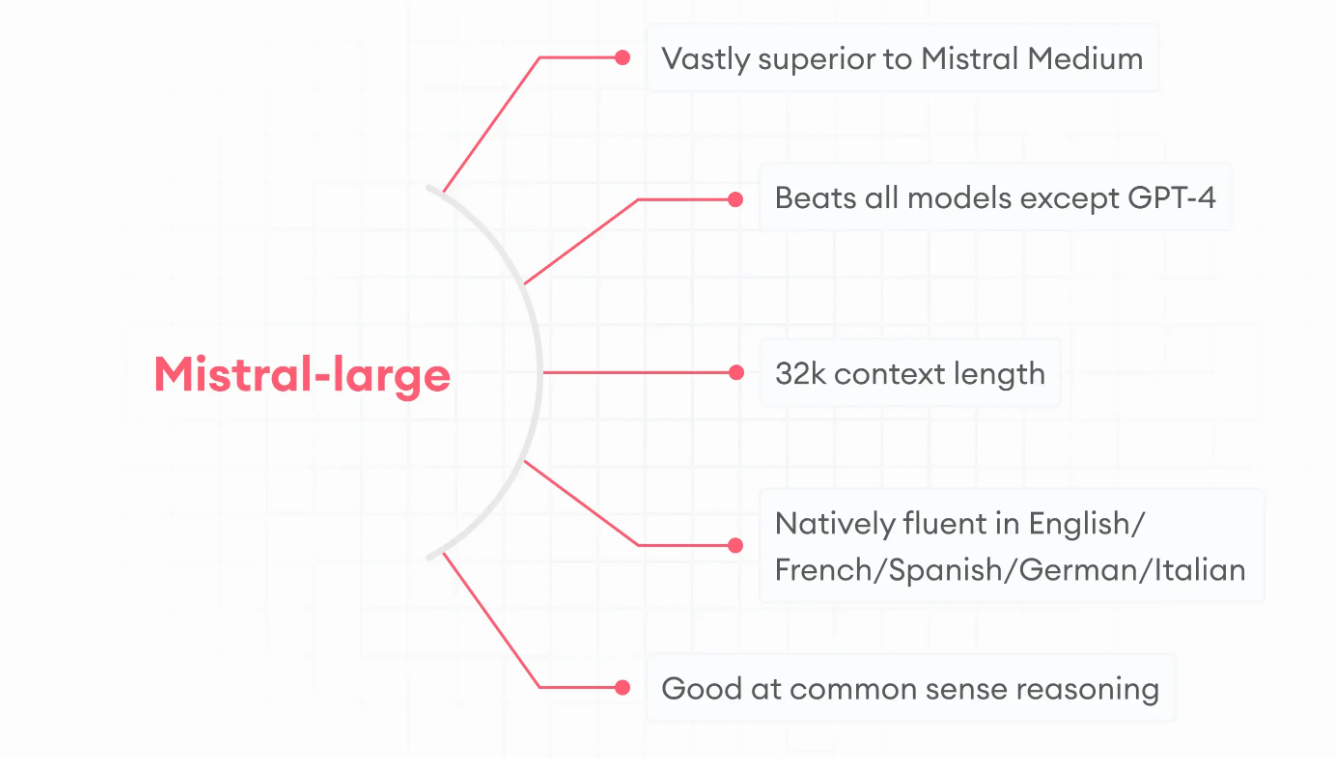

Mistral Large: their most recent release, significantly surpasses Mistral Medium and, according to Mistral AI, ranks as the second-best model generally available through an API. It handles a 32k-token context and is natively fluent in English, French, Spanish, German, and Italian. With a score of 81.2% on MMLU (Massive Multitask Language Understanding), it outperforms models like Claude 2, Gemini Pro, and Llama 2 70B. Mistral Large does particularly well in common sense and reasoning, with 94.2% accuracy on the ARC Challenge (5-shot).

Mistral 7B

Mistral AI took a distinct approach with its initial model, Mistral 7B, opting not to compete directly with larger counterparts like GPT-4. Instead, it built a much smaller model with 7 billion parameters. To underscore accessibility, Mistral AI has made this model available for free download, so developers can integrate it into their own systems. Mistral 7B is a compact language model that costs significantly less to run than models like GPT-4. While GPT-4 has broader capabilities than such smaller models, it is also more expensive and more complex to operate.

Mixtral 8x7B

Here are the key highlights of Mixtral:

- It processes context with up to 32k tokens.

- It supports English, French, Italian, German, and Spanish languages.

- Mixtral demonstrates proficiency in coding tasks.

- With fine-tuning, it can transform into an instruction-following model, achieving an MT-Bench score of 8.3.

The model integrates seamlessly with established optimization tools like Flash Attention 2, bitsandbytes, and PEFT libraries. Its checkpoints are accessible under the mistralai organization on the Hugging Face Hub.

How Mixtral 8x7B works

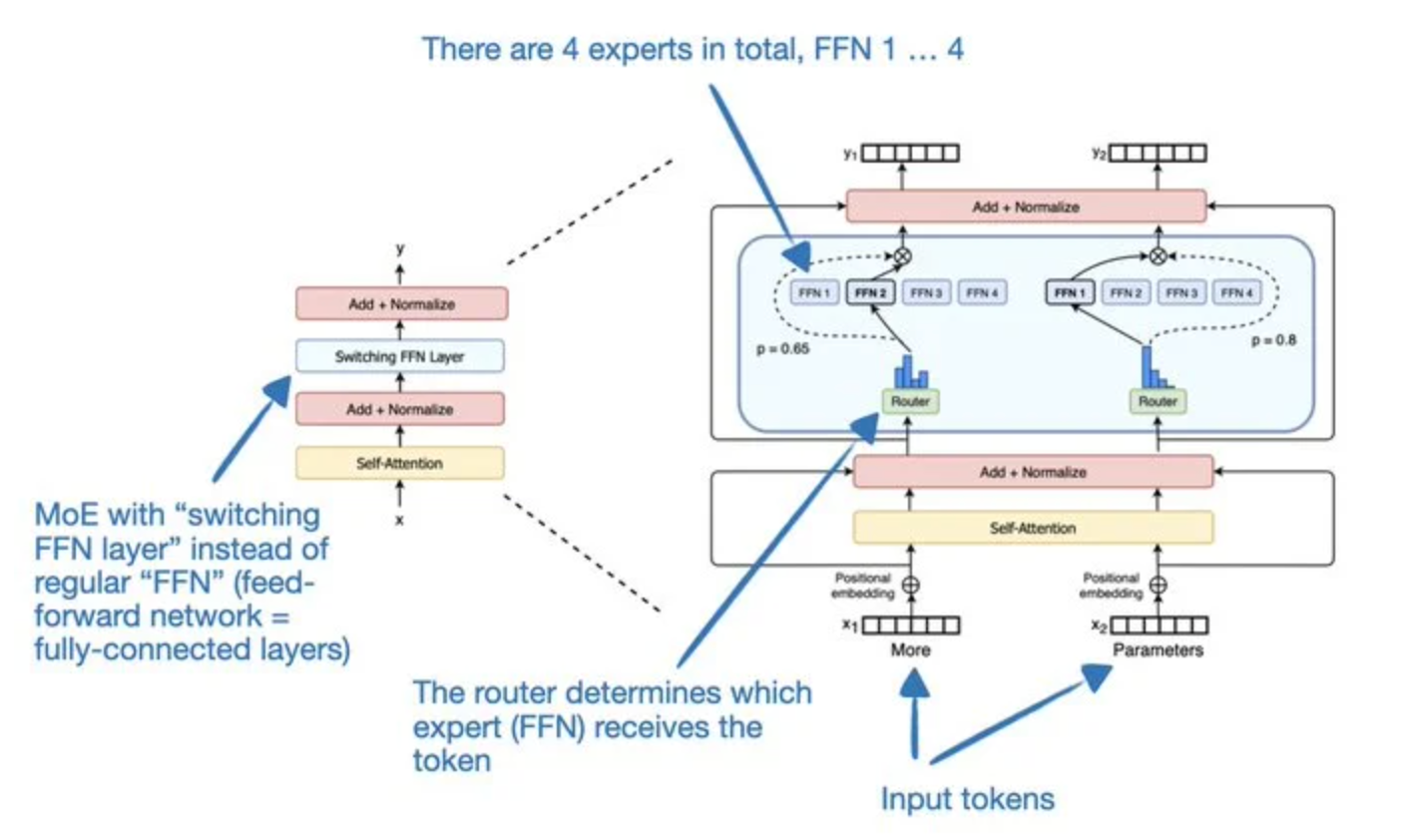

Mixtral employs a sparse mixture-of-experts (MoE) architecture. The diagram above shows a simplified setup in which each token is processed by one expert out of four. Mixtral 8x7B instead uses eight experts, with two assigned to each token: at each layer and for every token, a router network selects two of the eight experts to process the token, and their outputs are then combined additively.
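The routing step can be sketched in a few lines of plain Python. This is a toy illustration under stated assumptions, not Mistral AI's implementation: the experts are stand-in functions rather than feed-forward networks, the router logits are random numbers, and weighting the two selected outputs by a softmax over their logits is an assumption about how the additive combination is done. The names `route_token` and `moe_layer` are hypothetical.

```python
import math
import random

NUM_EXPERTS = 8  # experts per MoE layer
TOP_K = 2        # experts selected for each token


def softmax(values):
    """Numerically stable softmax over a list of floats."""
    peak = max(values)
    exps = [math.exp(v - peak) for v in values]
    total = sum(exps)
    return [e / total for e in exps]


def route_token(router_logits, k=TOP_K):
    """Return [(expert_index, weight), ...] for the k highest-scoring experts."""
    ranked = sorted(range(len(router_logits)),
                    key=lambda i: router_logits[i], reverse=True)
    top = ranked[:k]
    # Assumption: weights are renormalized over the selected experts only.
    weights = softmax([router_logits[i] for i in top])
    return list(zip(top, weights))


def moe_layer(token, router_logits, experts):
    """Combine the selected experts' outputs as a weighted sum."""
    output = [0.0] * len(token)
    for index, weight in route_token(router_logits):
        expert_out = experts[index](token)
        output = [acc + weight * x for acc, x in zip(output, expert_out)]
    return output


# Toy experts: expert i scales the token by (i + 1).
toy_experts = [lambda vec, s=i + 1: [s * x for x in vec]
               for i in range(NUM_EXPERTS)]

random.seed(0)
token = [1.0, -2.0, 0.5]
logits = [random.gauss(0.0, 1.0) for _ in range(NUM_EXPERTS)]  # stand-in for router(token)
chosen = route_token(logits)
mixed = moe_layer(token, logits, toy_experts)
print("selected experts and weights:", chosen)
print("layer output:", mixed)
```

Only the two selected experts are ever called for a given token, which is where the compute savings come from; the other six contribute nothing to that token at that layer.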

So, why opt for MoE? Eight experts, each sized as for a 7B model, would suggest a total of roughly 56B parameters. In practice the figure is lower, because only the feed-forward blocks are replicated as experts, while the self-attention weight matrices are shared across them. The actual total therefore falls within the 40–50B range (Mistral AI reports 46.7B).

The primary advantage lies in the router, which directs tokens so that only a fraction of the model is active during any forward pass. Each token is processed by only two of the eight experts at each layer, so the active parameter count per token is about 13B (Mistral AI reports 12.9B) rather than the full total. The selected experts can also differ from layer to layer, which allows more intricate processing pathways. This selective activation speeds up training and, more importantly, makes inference much faster than in a dense model of the same total size. That efficiency is the main reason for adopting an MoE-based approach in models like Mixtral.
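The parameter arithmetic can be checked with a short calculation. The configuration values below (hidden size, feed-forward width, layer count, grouped-query attention with 8 key/value heads, vocabulary size) are assumptions taken from the publicly released Mixtral 8x7B configuration rather than from this article, and small terms such as biases are ignored.

```python
# Assumed configuration values (not stated in this article).
HIDDEN = 4096        # model width
FFN = 14336          # feed-forward width of one expert
LAYERS = 32
HEADS = 32
KV_HEADS = 8         # grouped-query attention
VOCAB = 32000
EXPERTS = 8
ACTIVE_EXPERTS = 2   # experts used per token

head_dim = HIDDEN // HEADS

# Per-layer building blocks.
attention = 2 * HIDDEN * HIDDEN + 2 * HIDDEN * KV_HEADS * head_dim  # q, o + k, v
expert = 3 * HIDDEN * FFN   # gate, up, and down projections of one expert
router = HIDDEN * EXPERTS   # linear layer producing one logit per expert
norms = 2 * HIDDEN          # two normalization layers

# Everything that is shared by all tokens regardless of routing:
# attention, routers, norms, input embeddings, output head, final norm.
shared = LAYERS * (attention + router + norms) + 2 * VOCAB * HIDDEN + HIDDEN

total_params = shared + LAYERS * EXPERTS * expert
active_params = shared + LAYERS * ACTIVE_EXPERTS * expert

print(f"naive 8 x 7B estimate: {8 * 7}B")
print(f"total parameters:      {total_params / 1e9:.1f}B")
print(f"active per token:      {active_params / 1e9:.1f}B")
```

Under these assumptions the total comes out at about 46.7B and the per-token active count at about 12.9B: the expert feed-forward blocks dominate the total, while the shared attention and embedding weights are counted once.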

Step-by-Step Installation Guide

Installing Mixtral 8x7B is a step-by-step process that involves setting up the necessary dependencies and configuring the environment. Here’s a guide to help you install Mixtral 8x7B:

- Install dependencies: Start by installing the required dependencies, including Python, CUDA, and other libraries specified by Mistral AI.

- Download the model: Download the Mixtral 8x7B model from the Mistral AI website or the Hugging Face Model Hub.

- Configure the environment: Configure your environment to meet the requirements of Mixtral 8x7B. This may involve setting up GPU resources, RAM, and other system configurations.

- Test the installation: Once the environment is configured, test the installation by running a sample script provided by Mistral AI. This will ensure that the model is installed correctly and is ready for use.
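As a rough illustration of the steps above, the sketch below estimates how much memory the weights need and then loads the model through the Hugging Face `transformers` library. It makes several assumptions not stated in this article: the checkpoint name `mistralai/Mixtral-8x7B-Instruct-v0.1`, a total of about 46.7B parameters, 4-bit quantization through `bitsandbytes`, and the packages `torch`, `transformers`, `accelerate`, and `bitsandbytes` being installed. The helper names are hypothetical, and this is not an official Mistral AI script.

```python
def weight_memory_gib(num_params, bits_per_param):
    """Approximate memory for the weights alone, in GiB (no activations or KV cache)."""
    return num_params * bits_per_param / 8 / 2**30


def load_mixtral(model_id="mistralai/Mixtral-8x7B-Instruct-v0.1", four_bit=True):
    """Load tokenizer and model. Heavy imports are kept local to this function."""
    import torch
    from transformers import AutoModelForCausalLM, AutoTokenizer, BitsAndBytesConfig

    quant = None
    if four_bit:
        # 4-bit weights cut memory roughly fourfold compared with float16.
        quant = BitsAndBytesConfig(load_in_4bit=True,
                                   bnb_4bit_compute_dtype=torch.float16)
    tokenizer = AutoTokenizer.from_pretrained(model_id)
    model = AutoModelForCausalLM.from_pretrained(
        model_id,
        quantization_config=quant,
        torch_dtype=torch.float16,
        device_map="auto",  # spread layers across the available GPUs
    )
    return tokenizer, model


def smoke_test(tokenizer, model, prompt="[INST] Say hello in French. [/INST]"):
    """Generate a short completion to confirm the installation works."""
    inputs = tokenizer(prompt, return_tensors="pt").to(model.device)
    output_ids = model.generate(**inputs, max_new_tokens=32)
    return tokenizer.decode(output_ids[0], skip_special_tokens=True)


ASSUMED_PARAMS = 46.7e9  # assumed total parameter count
print(f"float16 weights: ~{weight_memory_gib(ASSUMED_PARAMS, 16):.0f} GiB")
print(f"4-bit weights:   ~{weight_memory_gib(ASSUMED_PARAMS, 4):.0f} GiB")
```

To test an installation, call `tokenizer, model = load_mixtral()` followed by `print(smoke_test(tokenizer, model))`. The memory estimate is worth running first, since it indicates whether the weights fit on your hardware before the download begins.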

Practical Applications and Use Cases

Mixtral 8x7B has a wide range of practical applications and can be used in various industries. Here are some examples of the practical applications and use cases of Mixtral 8x7B:

- Natural language processing: Mixtral 8x7B can be used for tasks such as text classification, sentiment analysis, and text generation.

- Coding assistance: The model’s advanced code generation capabilities make it a valuable tool for developers, providing assistance with coding, debugging, and understanding complex programming concepts.

- Content generation: Mixtral 8x7B can be used to generate content for blogs, articles, and other written materials, as well as create code for various applications.

- Benchmarking: Mixtral 8x7B can be used to benchmark the performance of other models and systems, providing insights into their strengths and weaknesses.

Experience Mixtral 8x7B with novita.ai LLM

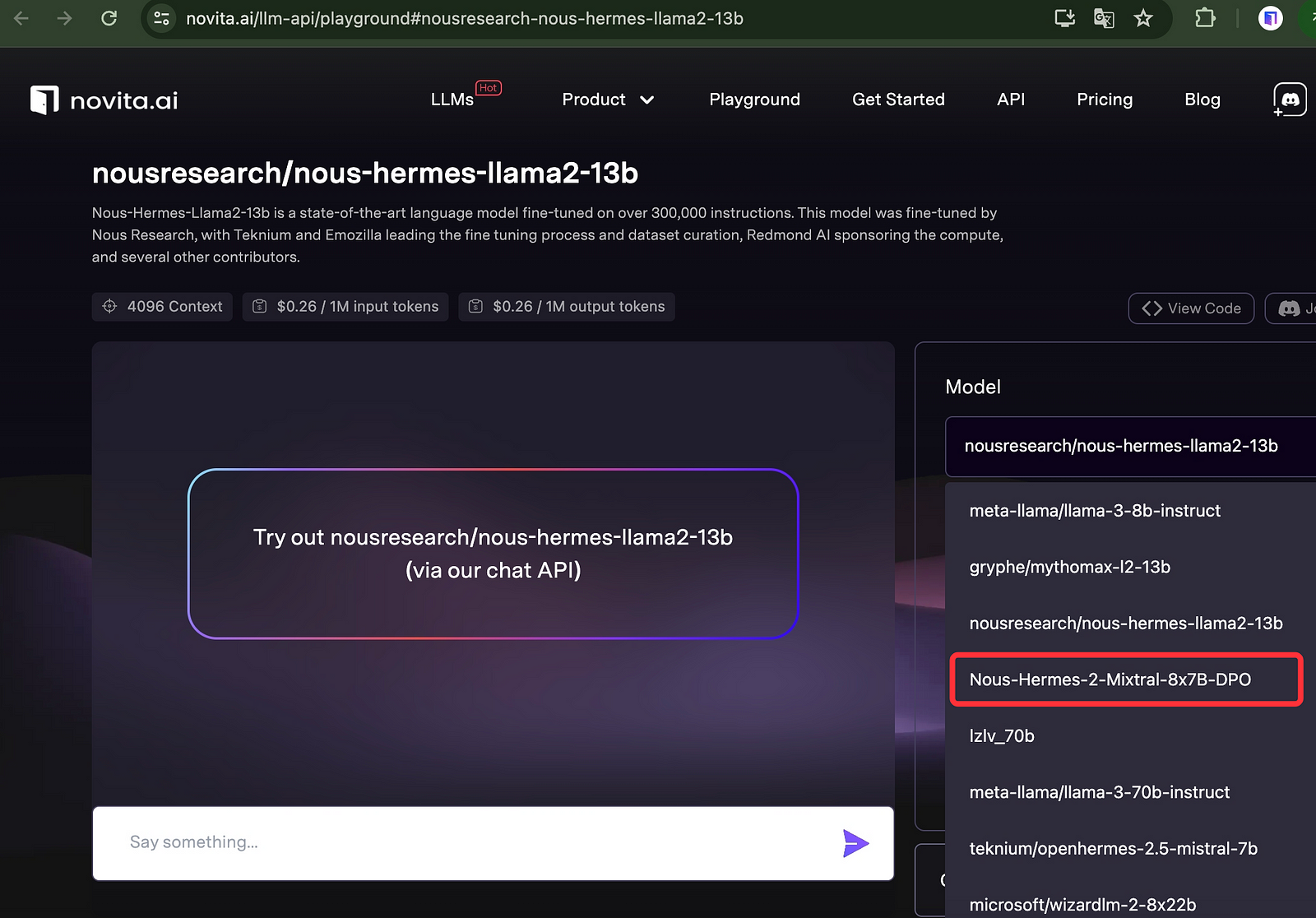

To try Mixtral 8x7B, you can use the novita.ai LLM API, which serves the model.

Or you can try our chatbot, which runs on Mixtral 8x7B.

Comparing Mixtral 8x7B with Other Models

Mixtral 8x7B stands out among other models in the AI landscape. Here’s a comparison of Mixtral 8x7B with other models:

- Mixtral 8x7B vs. Llama 2 70B: Mixtral 8x7B surpasses Llama 2 70B in most benchmarks and offers six times faster inference speed.

- Mixtral 8x7B vs. OpenAI GPT-3.5: Mixtral 8x7B matches or exceeds the performance of OpenAI GPT-3.5 across various benchmarks.

- Mixtral 8x7B vs. Anthropic Claude 2.1: Human evaluations have reportedly favored Mixtral 8x7B outputs over those of Anthropic Claude 2.1.

These comparisons highlight the competitive edge of Mixtral 8x7B and its position as a leading model in the AI landscape. Its performance, efficiency, and versatility make it a top choice for developers and researchers.

Why Mixtral 8x7B Stands Out Among Competitors

Several features set Mixtral 8x7B apart from its competitors:

- Superior performance: Mixtral 8x7B outperforms its competitors in various benchmarks, offering enhanced performance and efficiency.

- Efficient parameter utilization: The sparse mixture of experts (MoE) architecture in Mixtral 8x7B allows for selective engagement of parameters, maximizing performance while minimizing computational costs.

- Open weights: Mixtral 8x7B is licensed under Apache 2.0, making its weights openly available. This fosters responsible AI usage and allows for modification and improvement by the developer community.

These standout features and competitive advantages position Mixtral 8x7B as a market leader in the AI landscape, offering a powerful and efficient solution for various applications.

Optimizing Performance with Mixtral 8x7B

Optimizing performance with Mixtral 8x7B is essential to ensure efficient and effective use of the model. Here are some tips for optimizing performance:

- Maximize resources: Ensure that your system has sufficient GPU resources, RAM, and other hardware specifications to support the requirements of Mixtral 8x7B.

- Fine-tuning: Fine-tune the model for specific tasks and applications to enhance its performance and effectiveness.

- Troubleshooting: Familiarize yourself with the troubleshooting techniques and guidelines provided by Mistral AI to resolve any issues or challenges that may arise during usage.

By following these tips and optimizing the performance of Mixtral 8x7B, you can maximize its capabilities and achieve optimal results in your applications.

Tips for Maximizing Efficiency and Accuracy

To maximize efficiency and accuracy with Mixtral 8x7B, consider the following tips:

- Data preprocessing: Ensure that your data is properly preprocessed and formatted to optimize model performance.

- Batch processing: Utilize batch processing techniques to maximize throughput and minimize latency.

- Resource allocation: Allocate sufficient GPU resources and RAM to handle the workload efficiently.

- Fine-tuning: Fine-tune the model for specific tasks and applications to improve accuracy and tailor it to your needs.
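The batch processing tip can be sketched as follows. This is a minimal example under stated assumptions: `generate_batch` is a hypothetical callable that takes a list of prompts and returns one output per prompt (for instance, a wrapper around your model or API client), and grouping prompts of similar length is one common way to reduce padding, not a Mixtral-specific requirement.

```python
def make_batches(prompts, batch_size):
    """Yield batches of (original_index, prompt), grouped by similar length."""
    order = sorted(range(len(prompts)), key=lambda i: len(prompts[i]))
    for start in range(0, len(order), batch_size):
        yield [(i, prompts[i]) for i in order[start:start + batch_size]]


def run_batched(prompts, generate_batch, batch_size=8):
    """Run generate_batch over all prompts and return outputs in input order."""
    results = [None] * len(prompts)
    for batch in make_batches(prompts, batch_size):
        outputs = generate_batch([prompt for _, prompt in batch])
        for (index, _), output in zip(batch, outputs):
            results[index] = output
    return results


# Stand-in for a real model call: upper-cases each prompt.
def fake_generate_batch(batch):
    return [prompt.upper() for prompt in batch]


prompts = ["summarize this long report please", "hi", "classify: great product", "ok"]
outputs = run_batched(prompts, fake_generate_batch, batch_size=2)
print(outputs)
```

Because the original index travels with each prompt, the outputs come back in the same order as the inputs even though the batches were formed by length.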

Troubleshooting Common Issues

Common issues may arise when using Mixtral 8x7B, but they can be resolved with proper troubleshooting techniques. Here are some common issues and their solutions:

- Out-of-memory errors: Increase the available GPU memory or reduce the batch size to avoid memory-related issues.

- Compatibility issues: Ensure that your system meets the requirements specified by Mistral AI and use compatible versions of dependencies and libraries.

- Slow performance: Optimize the model’s resource allocation, fine-tune for better performance, and utilize batching techniques to improve speed.
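For out-of-memory errors, reducing the batch size can be automated with a simple back-off loop. The sketch below assumes that the failure surfaces as a `RuntimeError` whose message contains "out of memory", which is how PyTorch reports CUDA memory exhaustion; `process_batch` is a hypothetical callable standing in for your own inference function.

```python
def looks_like_oom(error):
    """Assumption: OOM failures mention 'out of memory' in their message."""
    return "out of memory" in str(error).lower()


def run_with_backoff(items, process_batch, batch_size):
    """Process items in batches, halving the batch size after each OOM error.

    Returns (results, final_batch_size).
    """
    results = []
    start = 0
    while start < len(items):
        batch = items[start:start + batch_size]
        try:
            results.extend(process_batch(batch))
            start += len(batch)
        except RuntimeError as error:
            if not looks_like_oom(error) or batch_size == 1:
                raise  # unrelated failure, or nothing left to shrink
            batch_size = max(1, batch_size // 2)
    return results, batch_size


# Stand-in workload that "runs out of memory" for batches larger than 2.
def fake_process(batch):
    if len(batch) > 2:
        raise RuntimeError("CUDA out of memory (simulated)")
    return [item * 10 for item in batch]


results, final_size = run_with_backoff([1, 2, 3, 4, 5], fake_process, batch_size=8)
print(results, "final batch size:", final_size)
```

The loop retries the same slice after shrinking, so no items are skipped, and it re-raises any error that is not memory related instead of hiding it.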

Conclusion

In conclusion, Mistral’s Mixtral 8x7B Model offers a cutting-edge approach to machine learning with its innovative Mixture of Experts (MoE) Architecture. This model provides optimized performance and cost-effective inference, making it a game-changer for businesses across various industries. By setting up Mixtral 8x7B with the step-by-step guide and leveraging its advanced features, users can maximize efficiency and accuracy in their operations. Its benchmarking against traditional models and competitors showcases its superiority in the field. Embrace Mixtral 8x7B to stay ahead in the realm of machine learning and unlock its full potential for your business’s success.

novita.ai is a one-stop platform for limitless creativity that gives you access to 100+ APIs. From image generation and language processing to audio enhancement and video manipulation, with cheap pay-as-you-go pricing, it frees you from GPU maintenance hassles while you build your own products. Try it for free.
