Introducing GPT Neo: the Large Scale Autoregressive Language Model with Mesh-Tensorflow

Discover the power of GPT Neo, the Large Scale Autoregressive Language Model with Mesh-Tensorflow. Learn more on our blog!

Introduction

Language models have revolutionized the field of natural language processing (NLP), enabling machines to understand and generate human-like text. One such language model is GPT Neo, a large-scale autoregressive language model based on the GPT architecture. With its impressive 125 million parameters, GPT Neo is capable of generating high-quality text and performing various NLP tasks, making it a valuable tool for few-shot learning in practice with eleutherai and huggingface. This model can be easily incorporated into a pipeline for text generation, allowing for different sequences to be generated each time it is run.

What is GPT Neo?

GPT Neo is a pre-trained language model that has been trained on a large dataset to understand and generate human-like text. It is part of the GPT family of models and is based on the GPT architecture. GPT Neo has 125 million parameters, which allows it to capture the intricacies of natural language and generate coherent and contextually relevant text. One unique aspect of GPT Neo is its use of local attention in every other layer with a window size of 256 tokens, making it a powerful tool for language processing tasks. With a vocabulary size of the model set at 50257, GPT Neo has a vast range of tokens that it can recognize and generate, making it a highly versatile and accurate language model.

The model is trained using the Pile dataset, a large text corpus that provides diverse and extensive training data. This dataset enables GPT Neo to learn the patterns and structures of the English language, making it capable of generating high-quality text.

The Evolution of Autoregressive Language Models

Autoregressive language models have played a significant role in the evolution of machine learning and natural language processing. These models, such as GPT Neo, are designed to predict the next word in a sequence based on the previous words. This allows them to generate coherent and contextually relevant text.

Over the years, autoregressive language models have evolved in terms of size and performance. With advancements in hardware and training techniques, models like GPT Neo have been able to scale to millions of parameters, enabling them to capture more complex language patterns and generate more accurate text.

The development of autoregressive language models has greatly contributed to the advancements in machine translation, sentiment analysis, text generation, and other NLP tasks. These models have opened up new possibilities for natural language understanding and have paved the way for the development of more advanced language models.

Key Features of GPT Neo

GPT Neo boasts several key features that make it a powerful language model. Its architecture, based on the GPT model, allows it to understand and generate human-like text. With its impressive size, GPT Neo is capable of capturing complex language patterns and generating coherent and contextually relevant text.

Another standout feature of GPT Neo is its ability to scale to large-scale language modeling tasks. This is made possible through its implementation of model using mesh-tensorflow, a framework that enables efficient parallel processing. By leveraging multiple GPUs, GPT Neo can handle massive amounts of data and perform computations in a highly efficient manner.

Additionally, GPT NeoX, a GPU-specific repository, is now available for those looking to utilize the model’s full potential on GPU. The parameters for GPT NeoX can be defined in a YAML configuration file, which is passed to the deepy.py launcher. To make things easier, we have provided some example .yml files in the configs folder, showcasing a diverse array of features and model sizes. While these files are generally complete, they may not be optimal for every use case.

These key features make GPT Neo a versatile and powerful tool for text generation, language translation, sentiment analysis, and other NLP applications.

Architecture and Design Principles

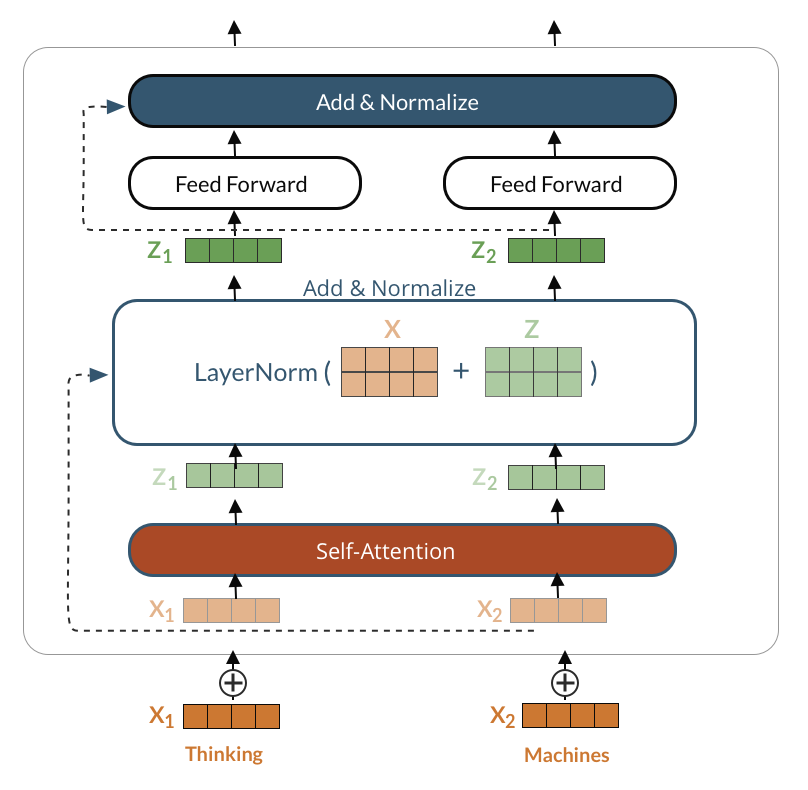

The architecture of GPT Neo is based on the GPT model, which stands for Generative Pretrained Transformer. Transformers are a type of neural network architecture that has revolutionized natural language processing tasks. The GPT architecture consists of multiple layers of self-attention and feed-forward neural networks.

In GPT Neo, the transformer architecture allows the model to capture the dependencies and relationships between words in a given text. This enables it to generate coherent and contextually relevant text.

At the core of the GPT architecture is the concept of tokens. Tokens represent individual units of text, such as words or characters. By processing these tokens, GPT Neo can understand the structure and meaning of the text and generate appropriate responses.

The design principles of GPT Neo prioritize the generation of high-quality and contextually relevant text. The model is trained on a large dataset to learn the patterns and structures of natural language, giving it the ability to generate text that is coherent and meaningful.

The Power of 125 Million Parameters

GPT Neo’s impressive 125 million parameters contribute to its ability to generate high-quality and contextually relevant text. Parameters are the variables that the model learns during the training process. The more parameters a model has, the more complex patterns it can capture and the better it can generate text.

The size of GPT Neo’s model is a significant factor in its performance. With a large number of parameters, it can capture intricate language patterns and generate coherent and contextually relevant text.

Additionally, GPT Neo has a vast vocabulary size, allowing it to understand and generate a wide range of words and phrases. This extensive vocabulary further enhances its ability to generate accurate and diverse text.

Training GPT Neo: Behind the Scenes

Training GPT Neo involves a complex process that includes processing a large dataset and optimizing the model’s parameters. The model is trained on the Pile dataset, which provides diverse and extensive text data for training.

During training, the model processes the dataset in batches, with each batch containing a fixed number of examples. The batch size is an important parameter that affects the training process. A larger batch size can lead to faster training but may require more memory. Conversely, a smaller batch size may slow down the training process but can help prevent overfitting.

Through the training process, GPT Neo learns the patterns and structures of natural language, enabling it to generate coherent and contextually relevant text.

Leveraging the Pile Dataset for Training

GPT Neo is trained using the Pile dataset, a large text corpus that provides diverse and extensive training data. The Pile dataset contains a wide range of text sources, including books, articles, websites, and more. This diverse collection of text allows GPT Neo to learn the patterns and structures of language and generate coherent and contextually relevant text.

During the training process GPT Neo processes the text data in the Pile dataset and optimizes parameters to capture the complexities of language. By exposing the model to a vast amount of text data, GPT Neo becomes proficient in understanding and generating human text.

The Pile dataset plays a crucial role in training GPT Neo providing the necessary data for the model to learn and generalize its knowledge of natural language.

Mesh-TensorFlow: Scaling to Meet GPT Neo’s Needs

Mesh-TensorFlow plays a vital role in scaling GPT Neo to fulfill its requirements efficiently. By harnessing the power of GPUs and utilizing parallel processing, Mesh-TensorFlow optimizes the training and inference processes for large language models like GPT Neo. Its functionality enables seamless integration with GPT Neo, allowing for optimal performance during both training and deployment phases. This systematic approach ensures that GPT Neo can handle the complexities of its 125 million parameters and extensive vocabulary size, utilizing the tensor-expert-data parallelism framework for efficient processing. This makes GPT Neo a powerhouse in natural language processing applications.

Practical Applications of GPT Neo

GPT Neo has a wide range of practical applications, thanks to its ability to generate high-quality and contextually relevant text. One of the key applications of GPT Neo is in content generation, such as writing blog posts, articles, and other forms of written content. its understanding of natural language, GPT Neo can generate coherent engaging text on a given topic.

Additionally, GPT Neo can be used for various natural language processing tasks, including sentiment analysis, text translation, question answering, and more. Its ability to understand and generate text makes it a valuable tool for implementing models in real-world applications that require natural language understanding and generation.

Content Generation: Blogs, Articles, and More

Content generation is one of the primary applications of GPT Neo. With its understanding of natural language and the ability to generate coherent and contextually relevant text, GPT Neo can be used to generate blog posts, articles, and other written content.

For bloggers and content creators, GPT Neo offers a valuable tool for generating high-quality and engaging content on various topics. By providing a few examples or prompts, GPT Neo can generate complete articles or pieces of text that are indistinguishable from those written by humans.

Natural Language Processing Tasks

GPT Neo’s natural language processing capabilities make it suitable for a wide range of tasks. It can be used for sentiment analysis, which involves determining the sentiment or emotion expressed in a given piece of text. This can be valuable for analyzing customer feedback, social media content, and other forms of text data.

GPT Neo can also be used for machine translation, where it translates text from one language to another. By understanding the context and structure of the input text, GPT Neo can generate accurate translations.

Inference time refers to the time it takes for GPT Neo to generate a response or prediction given an input. GPT Neo’s architecture and design principles prioritize efficiency, allowing it to perform inference in a timely manner. This makes it suitable for real-time applications where quick responses are required.

Comparing GPT Neo with Other Language Models

GPT Neo is part of a family of language models that includes other notable models such as GPT-3 and BERT. Each of these models has its own strengths and applications.

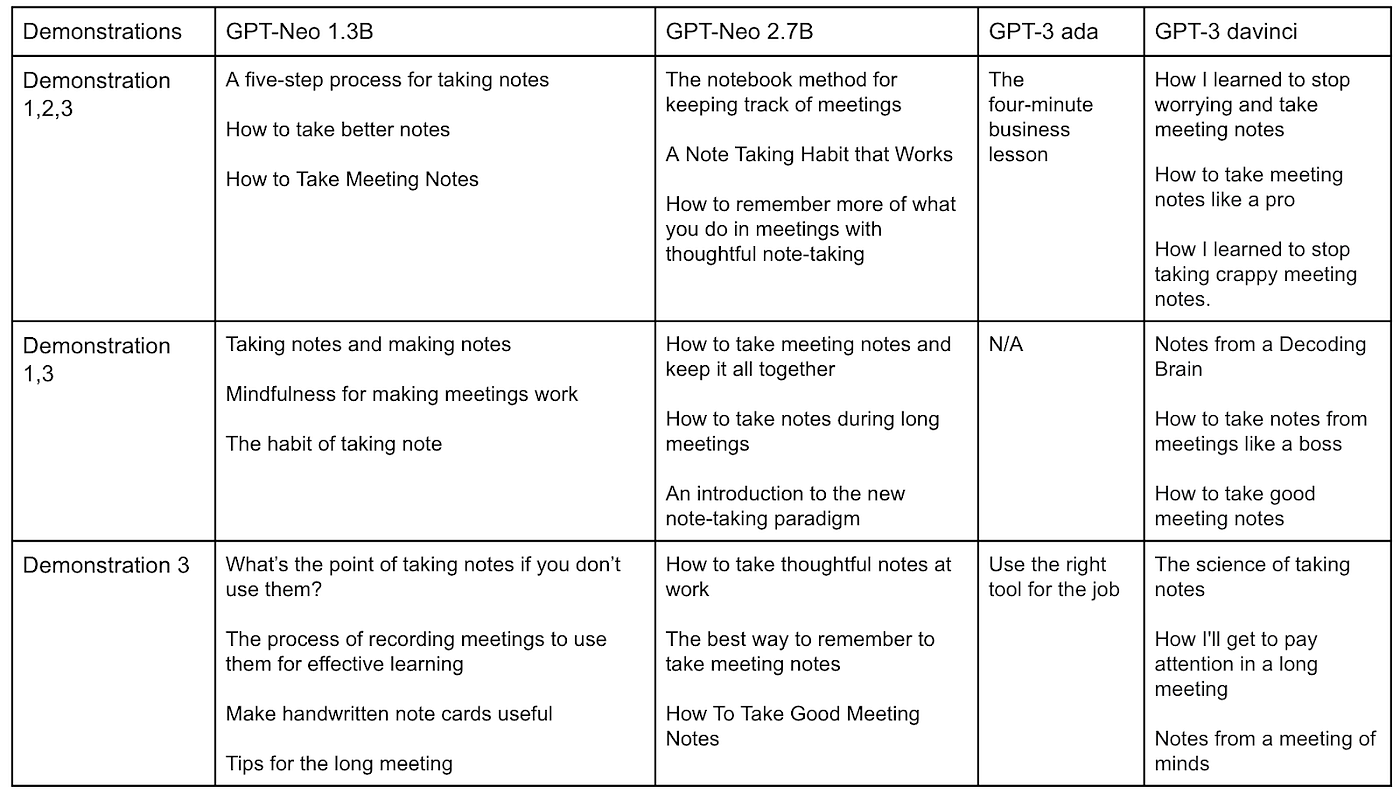

When comparing GPT Neo to GPT-3, one key difference lies in their size and number of parameters. GPT-3 is significantly larger than GPT Neo, with 175 billion parameters compared to GPT Neo’s 125 million parameters. This difference in size affects their ability to capture complex language patterns and generate accurate text.

BERT, on the other hand, is a different type of language model that focuses on bidirectional representations of text. While GPT Neo and BERT serve different purposes, they both contribute to the advancements in natural language understanding and generation.

GPT Neo vs. GPT-3: What’s the Difference?

GPT Neo and GPT-3 both belong to the family of GPT models, but they have key differences in terms of size and performance. GPT-3 is a much larger model with 175 billion parameters, while GPT Neo has 125 million parameters. This difference in size affects their ability to capture complex language patterns and generate accurate text.

Due to its larger size, GPT-3 tends to perform better on zero-shot tasks, where no specific training is provided. GPT Neo, on the other hand, requires a few examples or prompts to achieve good results.

Both GPT Neo and GPT-3 excel in natural language processing tasks, but the differences in their size and performance make them suitable for different applications and use cases.

GPT Neo and Its Place Among Emerging Models

GPT Neo is an emerging language model that has gained attention for its impressive performance and capabilities. As part of the GPT family of models, GPT Neo has found its place among other notable language models in the market.

While models like GPT-3 and BERT have dominated the landscape, GPT Neo offers a powerful alternative with its robust architecture and large-scale capabilities. Its ability to generate coherent and contextually relevant text, combined with its scalability using mesh-tensorflow, sets it apart from other emerging models.

As GPT Neo continues to be developed and refined, it is expected to make significant contributions to the field of natural language processing and find its place alongside established models in the market.

Implementing GPT Neo in Real-World Applications

GPT Neo has immense potential for implementation in real-world applications across various industries. Its natural language understanding and generation capabilities make it suitable for tasks such as chatbots, virtual assistants, and customer support systems.

When deploying GPT Neo in real-world applications, it is important to follow guidelines and best practices to ensure optimal performance and mitigate potential biases. Ethical considerations must also be taken into account when using language models to ensure fair and unbiased outcomes.

The general usage of GPT Neo involves providing a few examples or prompts to guide the model’s predictions. By fine-tuning and adapting the model to specific tasks, developers can harness the power of GPT Neo in their applications.

Guidelines for Deployment

When deploying GPT Neo or any language model in real-world applications, it is essential to follow guidelines and best practices to ensure optimal performance and mitigate potential biases.

Firstly, it is important to consider the specific use case and task for which the model will be deployed. This includes determining the appropriate input format, defining the desired output, and setting criteria for evaluating the model’s performance.

Additionally, ethical considerations must be taken into account to address potential biases and ensure fair and unbiased outcomes. This involves carefully curating the training data and monitoring the model’s predictions to detect and rectify any biases that may arise.

Lastly, regular updates and retraining of the model may be necessary to adapt to changing data and improve its performance over time.

By adhering to these guidelines, developers can ensure the successful deployment and implementation of GPT Neo in real-world applications.

Addressing Limitations and Biases

Like any language model, GPT Neo has its limitations and potential biases. It is important to address these limitations and biases when deploying the model in real-world applications.

One of the limitations of GPT Neo is its reliance on the training data it has been exposed to. If the training data is biased or lacks diversity, the model may exhibit biases in its generated text.

To mitigate biases, it is important to curate the training data carefully and monitor the model’s predictions. By incorporating diverse and inclusive training data and regularly evaluating the model’s output, developers can minimize the impact of biases.

Ethical considerations should also be taken into account when using language models, ensuring fairness, transparency, and accountability in their deployment. By addressing limitations and biases, developers can ensure the responsible and ethical use of GPT Neo in real-world applications.

Privacy and individual information issues are another serious limitation of GPT Neo for it is open-source.

To overcome those limitations mentioned above, you can apply our powerful LLM API to cut the chances of biases and ensure your personal information.

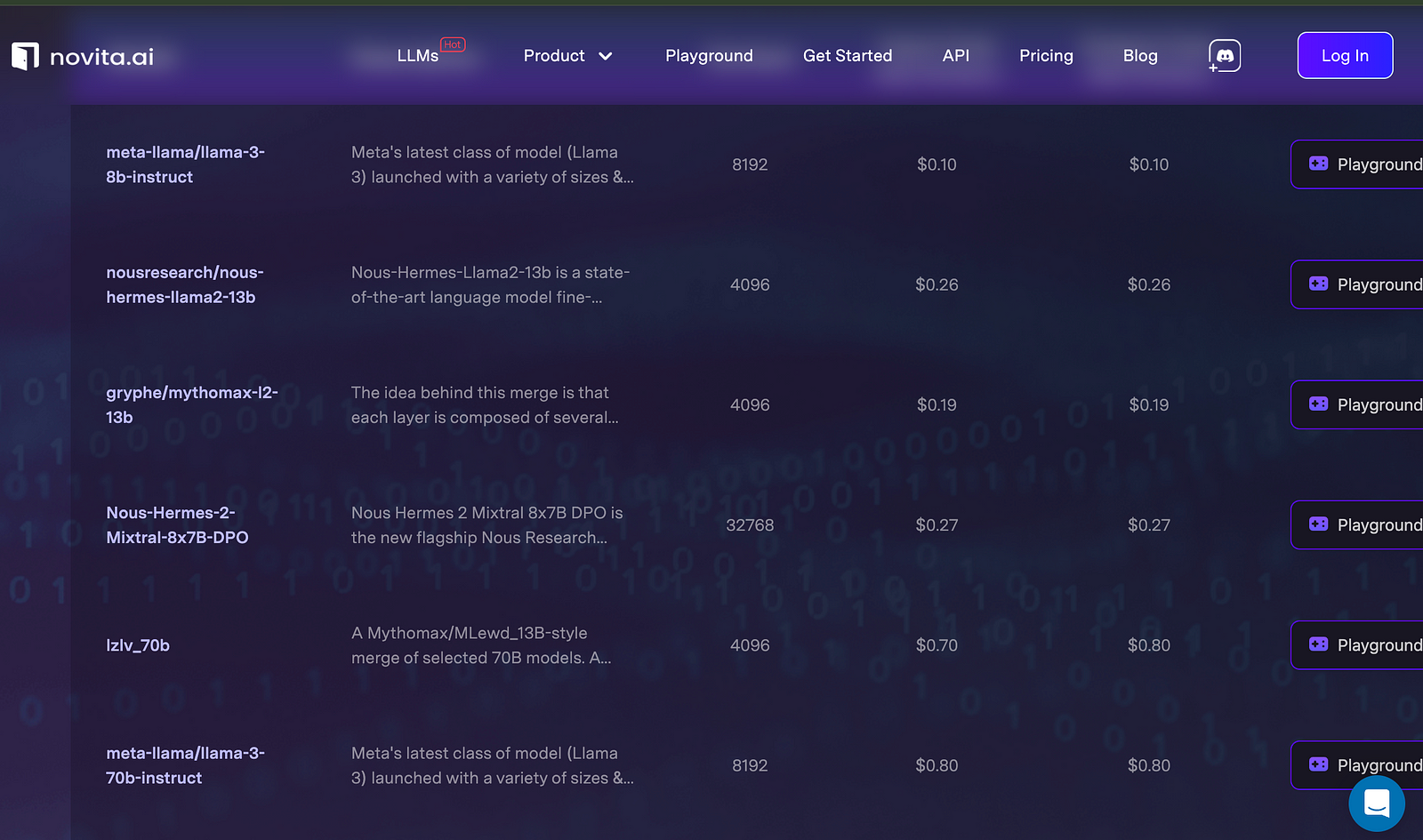

Besides that Novita AI LLM offers you unrestricted conversations through powerful Inference APIs. With Cheapest Pricing and scalable models, Novita AI LLM Inference API empowers your LLM incredible stability and rather low latency in less than 2 seconds.

Moreover, our API featuring the latest and powerful meta llama 3 model released recently:

Future of GPT Neo and Autoregressive Models

The future of GPT Neo and autoregressive language models looks promising. As technology advances and more research is conducted in the field of natural language processing, we can expect further improvements in the performance and capabilities of models like GPT Neo.

One trend that is likely to continue is the scaling of language models to even larger sizes, enabling them to capture more complex language patterns and generate more accurate text. Additionally, we can expect advancements in fine-tuning techniques and the integration of language models into various applications, further expanding their utility and impact.

Conclusion

In conclusion, GPT Neo stands out as a cutting-edge autoregressive language model with impressive capabilities. With a vast parameter count and innovative Mesh-TensorFlow technology, it promises tremendous potential across various applications, from content generation to complex natural language processing tasks. As the future unfolds, GPT Neo’s evolution and impact in the realm of language modeling are anticipated to reshape how we interact with AI-driven technologies. Stay tuned for the latest trends and advancements in this exciting field.

Frequently Asked Questions

How do developers address potential biases in GPT Neo?

Developers address potential biases in GPT Neo by carefully curating the training data to include diverse and inclusive examples. They also monitor the model’s predictions and evaluate its output to detect and rectify any biases that may arise.

What are the challenges in training large-scale models like GPT Neo?

One challenge is the computational resources required, as large-scale models require powerful GPUs and significant memory. Another challenge is optimizing the batch size, as larger batches can lead to faster training but may require more memory. Balancing these factors is crucial for efficient training of large-scale models.

novita.ai, the one-stop platform for limitless creativity that gives you access to 100+ APIs. From image generation and language processing to audio enhancement and video manipulation,cheap pay-as-you-go , it frees you from GPU maintenance hassles while building your own products. Try it for free.

Recommended reading

What is the difference between LLM and GPT

LLM Leaderboard 2024 Predictions Revealed

Novita AI LLM Inference Engine: the largest throughput and cheapest inference available