Mastering the Mistral Chat Template: A Comprehensive Guide

Key Highlights

- Definition of Chat Template: A chat template in Mistral defines structured roles (such as “user” and “assistant”) and formatting rules that guide how conversational data is processed, ensuring coherent and context-aware interactions in AI-driven dialogue generation.

- Mistral Chat Template Use Guide: This guide walks through setting up the environment, constructing a conversation, and applying the chat template to it.

- Automated Pipeline Efficiency: An automated chat pipeline applies the chat template for you, making it quicker to generate responses tailored to a specific conversation.

Introduction

Curious about mastering the Mistral chat template? Dive into our comprehensive step-by-step guide! Before getting into the how-to, we’ll break down how a chat template works so you understand what it is doing. We’ll also introduce an automated chat pipeline to boost efficiency. If you’re intrigued, read on!

What Is the Mistral Chat Template?

In short, the “Mistral chat template” is the chat template used by Mistral models.

Mistral Model Series

The Mistral model series consists of Mistral AI’s open-source generative AI models, released under the Apache 2.0 license, which allows developers and businesses to use and customize them for various applications. The series includes Mistral 7B, whose instruction-tuned variant Mistral-7B-Instruct-v0.2 is used throughout this guide, as well as two mixture-of-experts Mixtral models: Mixtral 8x7B and Mixtral 8x22B.

Introducing Chat Template

LLMs are increasingly used for chat applications. Unlike traditional language models that process text as one continuous sequence, LLMs in a chat setting handle an ongoing dialogue made up of multiple messages. Each message has a role, such as “user” or “assistant,” along with the text of the message itself.

Just as different models use different tokenizers, different LLMs expect different input formats for chat. Chat templates address this. A chat template is stored as part of the model’s tokenizer and specifies how to convert a list of conversational messages into a single, model-specific string that can then be tokenized.
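To make the idea concrete, here is a minimal sketch that treats a chat template as a set of per-role prefixes and suffixes applied to a list of messages. The ChatTemplate class and both formats (TOY_PLAIN and TOY_TAGGED) are hypothetical illustrations, not the template of any real model; real Hugging Face tokenizers store their templates as Jinja strings.

```python
from dataclasses import dataclass


@dataclass
class ChatTemplate:
    """Hypothetical toy template: wraps each message in role-specific text."""

    prefixes: dict  # role -> text placed before the message content
    suffixes: dict  # role -> text placed after the message content
    start: str = ""  # text placed once at the very beginning

    def render(self, messages):
        parts = [self.start]
        for message in messages:
            role = message["role"]
            parts.append(self.prefixes[role] + message["content"] + self.suffixes[role])
        return "".join(parts)


# Two made-up formats for the same conversation.
TOY_PLAIN = ChatTemplate(
    prefixes={"user": "User: ", "assistant": "Assistant: "},
    suffixes={"user": "\n", "assistant": "\n"},
)
TOY_TAGGED = ChatTemplate(
    prefixes={"user": "<|user|>", "assistant": "<|assistant|>"},
    suffixes={"user": "<|end|>", "assistant": "<|end|>"},
    start="<|start|>",
)

messages = [
    {"role": "user", "content": "Hello, how are you?"},
    {"role": "assistant", "content": "I'm doing great."},
]

print(repr(TOY_PLAIN.render(messages)))
print(repr(TOY_TAGGED.render(messages)))
```

The same message list produces two different strings, which is why each model ships with the template that matches its training format.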

How Does Chat Template Work?

Message Structure

Each message passed to a chat template is typically represented as a dictionary (or similar object) with two main attributes:

- Role: Specifies the role of the speaker, such as “user” or “assistant”.

- Content: The actual text or content of the message.

```python
{"role": "user", "content": "Hello, how are you?"}
{"role": "assistant", "content": "I'm doing great. How can I help you today?"}
```

Formatting Rules

Chat templates define how these messages are concatenated or separated to form a coherent input string for the model. This could involve adding whitespace, punctuation, or special tokens to indicate the structure of the conversation.

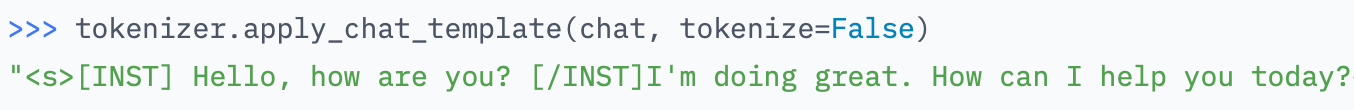

Example:

- Simple template (BlenderBot):

" Hello, how are you? I'm doing great. How can I help you today? I'd like to show off how chat templating works!</s>"

- Complex template (Mistral-7B-Instruct):

"<s>[INST] Hello, how are you? [/INST]I'm doing great. How can I help you today?</s> [INST] I'd like to show off how chat templating works! [/INST]"

In Mistral-7B-Instruct, for instance, the [INST] and [/INST] markers delimit user messages, giving the model structural cues it was trained to recognize. Each assistant reply follows [/INST] and is closed by the </s> end-of-sequence token.

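The Mistral example string above can be reproduced by hand, which shows what the template actually does. The sketch below is a hypothetical helper (format_mistral_prompt is not part of any library) written to match that example only. It is not Mistral’s official template, which is stored as a Jinja string in the model’s tokenizer configuration and may differ in details such as whitespace or system-message handling.

```python
def format_mistral_prompt(messages, bos="<s>", eos="</s>"):
    """Hypothetical helper: build a Mistral-7B-Instruct style prompt string.

    Assumes messages alternate user/assistant, starting with the user,
    and mirrors the example string shown in this guide.
    """
    prompt = bos  # beginning-of-sequence token appears once at the start
    for message in messages:
        if message["role"] == "user":
            # User turns are wrapped in [INST] ... [/INST]
            prompt += f"[INST] {message['content']} [/INST]"
        elif message["role"] == "assistant":
            # Assistant turns end with the end-of-sequence token and a space
            prompt += f"{message['content']}{eos} "
        else:
            raise ValueError(f"unsupported role: {message['role']!r}")
    return prompt


messages = [
    {"role": "user", "content": "Hello, how are you?"},
    {"role": "assistant", "content": "I'm doing great. How can I help you today?"},
    {"role": "user", "content": "I'd like to show off how chat templating works!"},
]
print(format_mistral_prompt(messages))
```

In practice you should let tokenizer.apply_chat_template() do this, since it always uses the template that ships with the model.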
Integration with Tokenizer

Chat templates are stored with the model’s tokenizer, so formatting and tokenization happen together: the conversation is rendered into a single string, which is then converted into the token IDs the model consumes. Matching the format the model saw during training is what allows it to generate appropriate responses from the conversational context.

How Do I Use the Mistral Chat Template?

To use the Mistral-7B-Instruct-v0.2 model with its chat template for conversational generation, follow these steps:

Setup and Configuration

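First, import the required libraries and load the model and tokenizer. The commented-out lines show how you could instead configure an OpenAI-compatible client for the Mistral model API hosted by Novita AI; the rest of this guide runs the model locally with Hugging Face Transformers: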

```python
import torch
from transformers import AutoModelForCausalLM, AutoTokenizer

# Optional alternative: call a hosted Mistral model through Novita AI's
# OpenAI-compatible API instead of running it locally.
# from openai import OpenAI
# client = OpenAI(base_url="https://api.novita.ai/v3/openai", api_key="<YOUR Novita AI API Key>")

# Define your model and tokenizer
model_name = "mistralai/Mistral-7B-Instruct-v0.2"
model = AutoModelForCausalLM.from_pretrained(model_name)
tokenizer = AutoTokenizer.from_pretrained(model_name)

# Set device (CPU or GPU)
device = "cuda" if torch.cuda.is_available() else "cpu"
model.to(device)
```

Constructing the Chat Template

Define your conversation as a list of messages, where each message includes the role (“user” or “assistant”) and the content of the message:

```python
messages = [
    {"role": "user", "content": "What is your favourite condiment?"},
    {"role": "assistant", "content": "Well, I'm quite partial to a good squeeze of fresh lemon juice. It adds just the right amount of zesty flavour to whatever I'm cooking up in the kitchen!"},
    {"role": "user", "content": "Do you have mayonnaise recipes?"},
]
```

Applying the Chat Template
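Before applying the template, it can help to check that the messages are in the order the template expects. Mistral-7B-Instruct chat templates have typically required roles to alternate strictly between “user” and “assistant”, starting with the user, and raise an error otherwise (check the chat_template in your model’s tokenizer files to confirm this for the revision you download). The check_alternation function below is a hypothetical guard, not part of Transformers, that catches the problem early with a clearer message.

```python
def check_alternation(messages):
    """Hypothetical guard: raise ValueError unless roles go user, assistant, user, ..."""
    for index, message in enumerate(messages):
        expected = "user" if index % 2 == 0 else "assistant"
        role = message.get("role")
        if role != expected:
            raise ValueError(f"message {index} has role {role!r}; expected {expected!r}")
        if not isinstance(message.get("content"), str):
            raise ValueError(f"message {index} needs a string 'content' field")


messages = [
    {"role": "user", "content": "What is your favourite condiment?"},
    {"role": "assistant", "content": "Well, I'm quite partial to a good squeeze of fresh lemon juice. It adds just the right amount of zesty flavour to whatever I'm cooking up in the kitchen!"},
    {"role": "user", "content": "Do you have mayonnaise recipes?"},
]
check_alternation(messages)  # passes: roles alternate, starting with "user"

try:
    check_alternation(messages + [{"role": "user", "content": "Any vegan options?"}])
except ValueError as error:
    print(error)  # message 3 has role 'user'; expected 'assistant'
```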

Use the tokenizer’s apply_chat_template() method to format the messages according to Mistral’s chat template and tokenize them in one step:

```python
# return_dict=True returns the input_ids together with an attention_mask
encodeds = tokenizer.apply_chat_template(messages, return_tensors="pt", return_dict=True)
model_inputs = encodeds.to(device)
```

Generating Responses

Generate responses using the Mistral model:

```python
generated_ids = model.generate(**model_inputs, max_new_tokens=1000, do_sample=True)
decoded_responses = tokenizer.batch_decode(generated_ids, skip_special_tokens=True)
print(decoded_responses)
```

Explanation:

- Tokenization: The apply_chat_template() method converts the list of messages (messages) into the format the Mistral model expects, adding markers such as [INST] and [/INST] to delimit user inputs, and then tokenizes the result.

- Model Inference: model.generate() produces a continuation of the formatted input. Adjust max_new_tokens to control the length of the generated response. do_sample=True samples from the model’s probability distribution instead of always choosing the most likely token, which can make responses more varied.

- Decoding: tokenizer.batch_decode() converts the generated token IDs back into readable text, skipping special tokens such as <s> and </s>. Because generate() returns the prompt tokens followed by the new ones, the decoded text contains the whole conversation as well as the model’s reply.
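Because generate() on a decoder-only model such as Mistral-7B returns the prompt tokens followed by the newly generated ones, decoding its output in full yields the conversation as well as the reply. To decode only the reply, drop the first prompt-length positions of each output row; with PyTorch tensors that is a slice such as generated_ids[:, prompt_length:], where prompt_length is the number of input token IDs. The hypothetical extract_reply_ids helper below shows the same idea on plain Python lists, with made-up token IDs, so it runs without downloading a model.

```python
def extract_reply_ids(prompt_ids, output_ids):
    """Hypothetical helper: return only the token IDs generated after the prompt."""
    prompt_length = len(prompt_ids)
    if list(output_ids[:prompt_length]) != list(prompt_ids):
        raise ValueError("output_ids does not begin with prompt_ids")
    return list(output_ids[prompt_length:])


# Made-up token IDs standing in for a tokenized prompt and a model's output.
prompt_ids = [101, 102, 103]
output_ids = [101, 102, 103, 201, 202]
print(extract_reply_ids(prompt_ids, output_ids))  # [201, 202]
```

Passing the resulting IDs to tokenizer.decode(..., skip_special_tokens=True) then gives just the model’s answer.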

Notes:

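- Ensure your environment has sufficient resources (CPU/GPU) for model inference. Even a 7-billion-parameter model such as Mistral-7B needs roughly 29 GB of memory for its weights in 32-bit precision; loading it in half precision (for example, by passing torch_dtype=torch.float16 to from_pretrained() on a GPU) roughly halves that.

- Adjust parameters such as max_new_tokens and do_sample based on your application’s requirements for response length and generation strategy.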
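To build intuition for do_sample, here is a simplified sketch of the difference between greedy decoding and temperature sampling at a single generation step. It uses only the Python standard library and made-up logits; Transformers also applies settings such as top_k and top_p when sampling, which this sketch leaves out.

```python
import math
import random


def softmax(logits, temperature=1.0):
    """Turn raw scores into probabilities; a lower temperature sharpens them."""
    scaled = [value / temperature for value in logits]
    peak = max(scaled)  # subtract the maximum for numerical stability
    exps = [math.exp(value - peak) for value in scaled]
    total = sum(exps)
    return [value / total for value in exps]


def greedy_pick(logits):
    """Greedy decoding, what do_sample=False does with default settings."""
    return max(range(len(logits)), key=lambda index: logits[index])


def sample_pick(logits, temperature=1.0, rng=random):
    """Sampling, as with do_sample=True: draw a token in proportion to its probability."""
    probs = softmax(logits, temperature)
    return rng.choices(range(len(logits)), weights=probs, k=1)[0]


logits = [2.0, 1.5, 0.3]  # made-up scores for a three-token vocabulary
rng = random.Random(0)
print(greedy_pick(logits))  # 0, every time
print([sample_pick(logits, 1.0, rng) for _ in range(10)])  # depends on the random seed
```

Greedy decoding always returns the same token for the same input, while sampling can pick less likely tokens, which is where the extra variety in responses comes from.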

How Do I Use an Automatic Pipeline for Chat?

Instead of applying a chat template such as Mistral’s yourself, you can let the text generation pipeline in Hugging Face Transformers do it for you. The “TextGenerationPipeline”, which now covers the functionality of the deprecated “ConversationalPipeline”, accepts a list of structured chat messages directly and generates a reply.

Key Points

- Pipeline Integration: The “TextGenerationPipeline” supports chat inputs, handling tokenization and chat template application seamlessly.

- Deprecated Functionality: The older “ConversationalPipeline” class has been deprecated in favor of the unified approach with the “TextGenerationPipeline”.

- Example with Mistral Model: Demonstrates using the pipeline with the Mistral-7B-Instruct-v0.2 model. Messages are structured with roles (“system” or “user”) and content, formatted according to Mistral’s chat template.

- Usage Simplification: Initializing the pipeline and passing it a list of structured messages automates tokenization and template application.

- Output: For chat input, the pipeline returns the conversation with the assistant’s newly generated reply appended as the last message.
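Depending on the revision of the tokenizer files you download, Mistral-7B-Instruct’s chat template may not accept a standalone “system” message like the one in the code example below, because some revisions require strictly alternating user/assistant roles. If you hit that error, a common workaround is to merge the system prompt into the first user message. The fold_system_prompt function below is a hypothetical sketch of that workaround, not part of Transformers; joining the two texts with a blank line is our own choice.

```python
def fold_system_prompt(messages, separator="\n\n"):
    """Hypothetical helper: merge a leading system message into the first user turn.

    Returns a new list; the input list and its dictionaries are not modified.
    """
    if not messages or messages[0]["role"] != "system":
        return [dict(message) for message in messages]
    system_text = messages[0]["content"]
    rest = [dict(message) for message in messages[1:]]
    if not rest or rest[0]["role"] != "user":
        raise ValueError("a system message must be followed by a user message")
    rest[0]["content"] = system_text + separator + rest[0]["content"]
    return rest


messages = [
    {"role": "system", "content": "You are a friendly chatbot."},
    {"role": "user", "content": "Explain the concept of artificial intelligence."},
]
print(fold_system_prompt(messages))
```

You would then pass fold_system_prompt(messages) to the pipeline in place of messages.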

Code Example

```python
from transformers import pipeline

# Initialize the text generation pipeline with the Mistral model
model_name = "mistralai/Mistral-7B-Instruct-v0.2"
pipe = pipeline("text-generation", model=model_name)

# Define chat messages with roles and content
messages = [
    {"role": "system", "content": "You are a friendly chatbot."},
    {"role": "user", "content": "Explain the concept of artificial intelligence."},
]

# Generate a response; for chat input, "generated_text" holds the whole
# conversation, with the assistant's reply appended as the last message
response = pipe(messages, max_new_tokens=128)[0]["generated_text"][-1]

# Print the assistant's response
print(response["content"])
```

In this code example:

- Initialization: The pipeline is initialized with the Mistral-7B-Instruct-v0.2 model using pipeline("text-generation", model="mistralai/Mistral-7B-Instruct-v0.2").

- Message Format: Messages are structured with roles (“system” or “user”) and content, and the pipeline formats them with Mistral’s chat template.

- Response Generation: The pipeline tokenizes the messages, applies the chat template automatically, generates a reply, and returns the conversation with that reply appended as the final “assistant” message.

This approach lets Hugging Face Transformers handle tokenization and templating, reducing the code needed to integrate conversational models into chat-based applications.

Real-life Applications of Chat Template

Customer Support Chatbots:

- Scenario: A customer interacts with a chatbot for troubleshooting or assistance.

- Chat Template: The template structures the conversation with roles like “user” (customer) and “assistant” (chatbot), ensuring the chatbot understands user queries and provides appropriate responses.

- Benefits: Improves the efficiency of resolving customer issues by maintaining context across multiple interactions.

Educational Chatbots:

- Scenario: Students engage with chatbots to ask questions, seek explanations, or receive tutoring assistance.

- Chat Template: Conceptual roles such as “student” and “tutor” guide how educational content is presented and discussed; in practice these map onto the model’s “user” and “assistant” roles.

- Benefits: Facilitates personalized learning experiences by adapting content delivery based on student queries and learning objectives.

Healthcare Consultation:

- Scenario: Patients interact with virtual healthcare assistants for medical advice, symptom checking, or appointment scheduling.

- Chat Template: Defines how patient inputs (symptoms, concerns) and healthcare advice/responses are structured.

- Benefits: Keeps patient inputs and assistant responses clearly separated across a consultation, supporting clear communication of medical information and continuity of care.

Job Interview Simulations:

- Scenario: Job candidates participate in virtual interviews conducted by AI-driven interviewers.

- Chat Template: Structures the interview dialogue with roles such as “interviewer” and “candidate” (mapped onto the model’s “assistant” and “user” roles), guiding the flow of questions and responses.

- Benefits: Provides realistic interview practice, feedback on communication skills, and preparation for real-world job interviews.

Conclusion

In conclusion, mastering the Mistral chat template comes down to understanding how it structures conversational data. We’ve explored how chat templates work, particularly for Mistral models such as Mistral-7B-Instruct-v0.2, and how they integrate with the tokenizer and model to produce coherent, context-aware dialogue. We also introduced an automated chat pipeline that streamlines the process, using the unified TextGenerationPipeline in place of the deprecated ConversationalPipeline.

With these insights and tools, developers and businesses can effectively harness Mistral’s power for diverse applications in AI-driven conversational systems.

Novita AI is the all-in-one cloud platform that empowers your AI ambitions. With seamlessly integrated APIs, serverless computing, and GPU acceleration, we provide the cost-effective tools you need to rapidly build and scale your AI-driven business. Eliminate infrastructure headaches and get started for free — Novita AI makes your AI dreams a reality.
