Introduction

Writing code from scratch is time-consuming and error-prone. Developers have long relied on tools like syntax highlighting, code autocompletion, and code analysis to enhance their coding experience.

Advancements in machine learning have led to AI-assisted tools like DeepCode (now part of Snyk), which offer intelligent recommendations and change how developers approach coding. Large language models such as Code Llama are trained on vast datasets, enabling them to generate contextually relevant code from plain English descriptions of the desired software functionality.

In this post, we look at LLMs for code generation. We’ll explore their basics, core principles, essential tools, environment setup, model selection, step-by-step guidance on code generation, advanced techniques, issue troubleshooting, and success measurement.

Understanding the Basics of LLMs for Code Generation

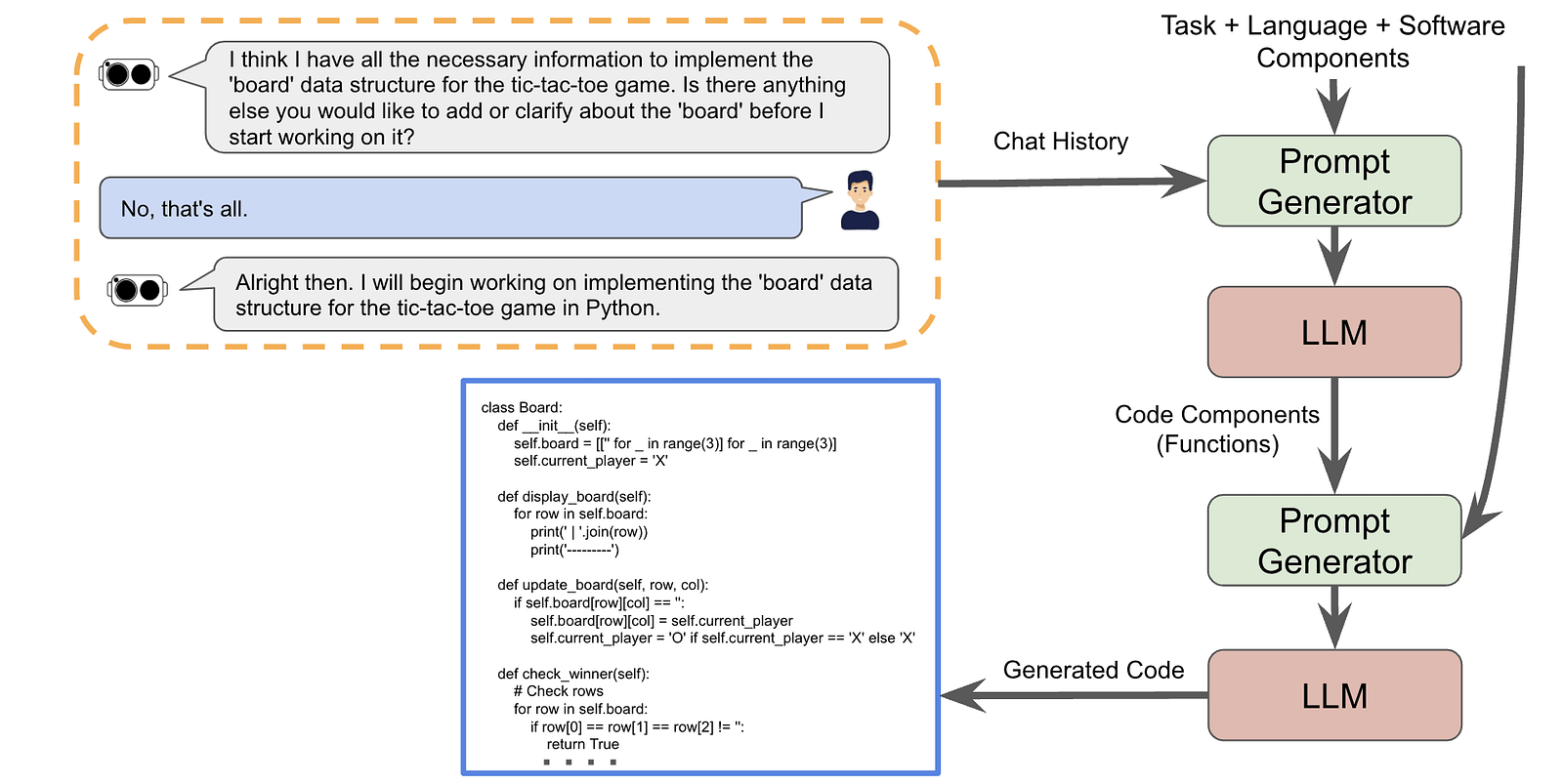

LLMs, or large language models, are AI systems trained to predict and generate text. When their training data includes source code, they can generate code from natural language inputs. Instead of painstakingly writing code line by line, developers can describe the functionality they need in plain English, and the model translates the description into code, which should still be reviewed and tested before use.

These models are trained on vast datasets that include code repositories, technical forums, coding platforms, documentation, and web data relevant to programming. This extensive training allows them to understand the context of code, including comments, function names, and variable names, resulting in more contextually accurate code generation.

LLMs have revolutionized code generation by allowing developers to streamline their coding tasks and reduce the time and effort required to write code from scratch. They have the potential to transform the software development process and make it more accessible to non-technical users.

Core Principles of Code Generation with LLMs

Code generation with LLMs is based on a few core principles that enable developers to describe code functionality in natural language and have it automatically translated into working code.

Firstly, LLMs leverage their extensive training on code repositories, technical forums, and other relevant sources to understand the context of code. This includes comments, function names, and variable names, allowing them to generate code that is more contextually accurate.

Secondly, LLMs use natural language processing techniques to parse and interpret the natural language descriptions provided by developers. This involves breaking down the input into meaningful units, understanding the relationships between different parts of the description, and mapping the natural language input to the appropriate code constructs.

Lastly, LLMs generate code by utilizing their learned knowledge of programming languages and coding best practices. This includes generating code that follows the syntax and structure of the selected programming language, as well as incorporating coding conventions and patterns commonly used in software development.

Preparing Your Environment for LLM-Based Code Generation

Before you can start performing code generation with LLMs, it is important to prepare your environment. This involves setting up the necessary tools and platforms, as well as ensuring that you have access to the relevant code repositories and resources.

Essential Tools and Platforms for LLM Code Generation

When it comes to code generation with LLMs, there are several essential tools and platforms that can enhance your workflow and productivity. These tools and platforms provide seamless integration with LLM models, enabling you to generate code more efficiently and accurately. Some of the essential tools and platforms for LLM code generation include:

- OpenAI Codex: OpenAI Codex is an extremely flexible AI code generator capable of producing code in various programming languages. It excels in activities like code translation, autocompletion, and the development of comprehensive functions or classes. Key features of OpenAI Codex include natural language interface, multilingual proficiency, enhanced code understanding, and a general-purpose programming model.

- GitHub Copilot: Developed by GitHub and OpenAI, GitHub Copilot is an AI-driven code completion tool. It suggests code based on the context of the code being typed and supports multiple programming languages. Key features of GitHub Copilot include AI-powered code assistance, broad language support, and availability in multiple IDEs.

- Visual Studio Code: Visual Studio Code is a popular code editor that offers excellent support for LLM code generation. It provides a wide range of extensions and plugins that can enhance the code generation process with LLMs. Visual Studio Code is highly customizable and supports multiple programming languages, making it a versatile tool for LLM-based code generation.

Setting Up Your First Project with an LLM

Setting up your first project with an LLM involves several steps to ensure a smooth and successful code generation process. Here’s a guide to help you get started:

- Define your project: Clearly define the goals and requirements of your project, including the specific code generation tasks you need to accomplish.

- Select an LLM: Choose the LLM that best suits your coding needs based on factors such as code completion, language support, and integration capabilities.

- Set up your development environment: Install the necessary tools and platforms for LLM code generation, such as IDEs or code editors, plugins, and extensions.

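- Train your LLM: Where the model and platform allow it, further train (fine-tune) your chosen LLM on relevant code repositories to improve the accuracy and relevance of the generated code. Many projects skip this step and rely on the pretrained model with good prompts.

- Fine-tune LLM parameters: Adjust the generation parameters of your LLM, such as temperature and maximum output length, to optimize the output for your specific project requirements.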

- Generate code: Use the LLM to generate code based on your project requirements. Provide clear and detailed instructions to the LLM, and iterate as necessary to refine the code generation process.

Selecting the Right LLM for Your Coding Needs

Selecting the right LLM for your coding needs is crucial to ensure accurate and efficient code generation. When evaluating LLMs for code generation, consider the following factors:

- Code completion: Look for LLM models that offer intelligent code completion, suggesting code based on the context of the code being typed. This can significantly speed up the coding process and improve productivity.

- Programming language support: Consider the programming languages supported by the LLM model. Ensure that the LLM can generate code in the programming language(s) you commonly use.

- Overall fit: Weigh accuracy, efficiency, and language support together, and choose an LLM that aligns with your specific coding requirements and preferences.

Comparison of Popular LLMs for Code Generation

Several popular LLMs for code generation stand out for their features and capabilities, including OpenAI Codex and Code Llama.

These models offer a range of features and support for multiple programming languages, making them valuable tools for code generation. Consider their specific features and capabilities to choose the best model for your coding needs.

Criteria to Consider When Choosing an LLM

When choosing an LLM for code generation, it is important to consider several criteria to ensure that the model aligns with your coding requirements. Here are some key criteria to consider:

- Accuracy: Evaluate the accuracy of the LLM in generating code. Look for models that consistently generate accurate and contextually relevant code.

- Efficiency: Consider the efficiency of the LLM in generating code. Look for models that can generate code quickly and without significant latency.

- Language support: Assess the programming languages supported by the LLM. Ensure that the LLM can generate code in the programming languages you commonly use.

- Integration capabilities: Check whether the LLM can seamlessly integrate with your development environment, such as IDEs or code editors.
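One way to make these criteria concrete is a simple weighted score. The sketch below is only an illustration: the weights, the 1-to-5 ratings, and the names `model_a` and `model_b` are hypothetical placeholders, not measurements of any real model.

```python
# Hypothetical weights for the four criteria above; adjust to your priorities.
CRITERIA_WEIGHTS = {
    "accuracy": 0.4,
    "efficiency": 0.2,
    "language_support": 0.2,
    "integration": 0.2,
}


def weighted_score(ratings: dict, weights: dict = CRITERIA_WEIGHTS) -> float:
    """Return the weighted average of the per-criterion ratings."""
    total_weight = sum(weights.values())
    return sum(weights[name] * ratings[name] for name in weights) / total_weight


def rank_models(candidates: dict) -> list:
    """Return candidate names sorted from highest to lowest weighted score."""
    return sorted(
        candidates,
        key=lambda name: weighted_score(candidates[name]),
        reverse=True,
    )


# Placeholder ratings (1 = poor, 5 = excellent) from your own evaluation.
candidates = {
    "model_a": {"accuracy": 4, "efficiency": 3, "language_support": 5, "integration": 4},
    "model_b": {"accuracy": 5, "efficiency": 2, "language_support": 3, "integration": 3},
}

for name in rank_models(candidates):
    print(name, round(weighted_score(candidates[name]), 2))
```

The ratings themselves should come from trying each model on tasks that resemble your own work; the score only helps you compare them consistently.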

Step-by-Step Guide to Generating Code with LLMs

Generating code with LLMs involves following a step-by-step process to ensure accurate and efficient code generation. Here’s a guide to help you generate code with LLMs:

- Define your coding task and requirements: Clearly define the functionality and requirements of the code you want to generate.

- Provide detailed instructions: Describe the code you want to generate in natural language, providing clear and specific instructions.

- Use code snippets: Incorporate code snippets in your instructions to give the LLM more context and guidance.

- Iterate and refine: Review the generated code and iterate as necessary to refine the output.

Defining Your Coding Task and Requirements

Before generating code with LLMs, it is important to define your coding task and requirements. Clearly identify the functionality and specifications of the code you want to generate. This includes understanding the input and output requirements, specific computations or operations that need to be performed, and any constraints or limitations that apply.

Provide as much contextual information as possible to the LLM to ensure accurate and relevant code generation. This can include additional details about the problem you are trying to solve, examples of similar code or functions, and any specific coding conventions or patterns you want the generated code to follow.
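As a rough sketch of how a task definition can be turned into a prompt, the snippet below collects the pieces described above into one structure and renders them as text. The `CodingTask` class, its field names, and the prompt wording are assumptions made for illustration; no particular LLM API requires this layout.

```python
from dataclasses import dataclass, field


@dataclass
class CodingTask:
    """Hypothetical container for the task details described above."""
    description: str
    language: str = "Python"
    inputs: list = field(default_factory=list)
    outputs: list = field(default_factory=list)
    constraints: list = field(default_factory=list)
    examples: list = field(default_factory=list)


def build_prompt(task: CodingTask) -> str:
    """Render the task as a plain-text prompt, skipping empty sections."""
    sections = [
        f"Write {task.language} code for the following task.",
        f"Task: {task.description}",
    ]
    for title, items in (
        ("Inputs", task.inputs),
        ("Outputs", task.outputs),
        ("Constraints", task.constraints),
        ("Examples", task.examples),
    ):
        if items:
            bullet_list = "\n".join(f"- {item}" for item in items)
            sections.append(f"{title}:\n{bullet_list}")
    return "\n\n".join(sections)


task = CodingTask(
    description="Append a product review and its sentiment to a CSV file",
    inputs=["review text (string)", "sentiment label (string)", "CSV file path"],
    outputs=["no return value; the file gains one row"],
    constraints=["Use only the standard library"],
)
print(build_prompt(task))
```

Keeping the task in a structure like this makes it easy to add a missing constraint and regenerate, rather than rewriting the whole prompt by hand.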

Fine-Tuning LLM Parameters for Optimal Output

Fine-tuning the parameters of an LLM is an important step in optimizing the output for code generation. In this section, fine-tuning means adjusting the generation settings used at inference time, not retraining the model's weights, to achieve the desired level of accuracy and relevance in the generated code.

Some of the parameters that can be adjusted include the temperature, which controls the randomness of the output (lower values make the output more deterministic, higher values make it more varied), and the maximum length, which limits the length of the generated code. By experimenting with different parameter settings, you can optimize the output for your specific coding task and requirements.

Parameter tuning requires careful testing to ensure that the generated code remains accurate and reliable. It should be done iteratively, with frequent evaluation of the output to identify the best settings.
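To see why temperature changes the randomness of the output, it helps to look at the arithmetic. The sketch below applies a temperature-scaled softmax to a few made-up scores (logits) for candidate next tokens. The logit values are invented for illustration, and real inference engines may combine temperature with other sampling settings.

```python
import math


def softmax_with_temperature(logits, temperature):
    """Convert raw scores into probabilities, scaled by the temperature."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [value / temperature for value in logits]
    largest = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(value - largest) for value in scaled]
    total = sum(exps)
    return [value / total for value in exps]


# Made-up scores for three candidate tokens.
logits = [2.0, 1.0, 0.5]

for temperature in (0.2, 1.0, 2.0):
    probabilities = softmax_with_temperature(logits, temperature)
    print(temperature, [round(p, 3) for p in probabilities])
```

At a low temperature almost all of the probability goes to the top-scoring token, which is usually what you want for code. At a higher temperature the probabilities flatten out, so repeated runs produce more varied output.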

Advanced Techniques in LLM-Based Code Generation

LLM-based code generation offers several advanced techniques that can further enhance the code generation process. These techniques leverage the capabilities of LLMs and other tools to improve code intelligence and streamline integration with existing development workflows. Some of the advanced techniques in LLM-based code generation include:

- Integration with existing development workflows: Integrate LLMs into your existing development environments and workflows to seamlessly incorporate code generation capabilities into your coding tasks.

- Code intelligence: Use code intelligence tools and techniques to give the LLM more context and improve the relevance of the code it generates. This can involve leveraging additional code repositories, documentation, or domain-specific knowledge bases.

Integrating LLMs with Existing Development Workflows

Integrating LLMs with existing development workflows is an important step in maximizing the benefits of code generation. By seamlessly incorporating LLMs into your software development processes, you can streamline coding tasks and enhance productivity. Here are some key considerations for integrating LLMs with existing development workflows:

- Identify integration points: Identify the specific stages or tasks in your development workflow where LLM-based code generation can be most beneficial. This can include tasks such as code completion, code refactoring, or generating code snippets for specific functionalities.

- Configure IDEs or code editors: Configure your IDEs or code editors to integrate with LLMs, allowing for seamless code generation and integration within the development environment.

- Train LLM models on relevant code repositories: Ensure that your LLM models are trained on relevant code repositories that align with your existing systems and coding practices. This will help the LLM generate code that is more contextually accurate and relevant.

Customizing LLM Outputs for Specific Programming Languages

LLMs offer the flexibility to customize the generated code to meet the specific requirements of different programming languages. By understanding the language-specific syntax and conventions, you can tailor the LLM outputs to align with the coding standards and practices of specific programming languages.

Customization can involve modifying the generated code to adhere to specific language-specific coding styles, naming conventions, or best practices. This ensures that the generated code seamlessly integrates with existing codebases and follows the established coding standards of your target programming language.
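As a small example of this kind of customization, suppose a model returns Python code with camelCase names and you want the snake_case style that Python code conventionally uses. The sketch below is a simplified, regex-based approach; the helper names are hypothetical, and because it works on raw text it would also rename matches inside strings and comments, so a formatter or linter is the safer choice for real codebases.

```python
import re


def camel_to_snake(name: str) -> str:
    """Convert a camelCase identifier to snake_case."""
    return re.sub(r"(?<=[a-z0-9])([A-Z])", r"_\1", name).lower()


def rename_identifiers(source: str, names: list) -> str:
    """Rename the listed identifiers everywhere they appear as whole words."""
    for name in names:
        pattern = rf"\b{re.escape(name)}\b"
        source = re.sub(pattern, camel_to_snake(name), source)
    return source


generated = "def appendRow(filePath):\n    return filePath\n"
print(rename_identifiers(generated, ["appendRow", "filePath"]))
```

It is often simpler to state the naming convention in the prompt itself and keep a step like this only as a safety net.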

Troubleshooting Common Issues in LLM Code Generation

LLM code generation, like any other coding process, can encounter common issues that require troubleshooting and debugging. Some of the common issues in LLM code generation include:

- Incorrect syntax: Generated code may contain syntax errors that prevent it from running correctly. It is important to review the generated code for syntax errors and correct them using debugging techniques.

- Incorrect output: The generated code may not produce the desired output or may not meet the expected functionality. In such cases, it is important to analyze the code logic, review the input instructions, and make necessary adjustments to the LLM parameters or instructions.

- Performance issues: Code generation with LLMs may encounter performance issues, such as slow processing or excessive memory usage. Analyze the model’s resource requirements and optimize the code generation process to improve performance.
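For the syntax problem in particular, a quick automated check can catch errors before you read the code at all. The sketch below assumes the generated code is Python and uses the standard library's `ast.parse`; the function name `check_python_syntax` is just an illustrative choice.

```python
import ast


def check_python_syntax(source: str):
    """Return None if the source parses, otherwise a short error message."""
    try:
        ast.parse(source)
    except SyntaxError as exc:
        return f"line {exc.lineno}: {exc.msg}"
    return None


good_code = "def add(a, b):\n    return a + b\n"
bad_code = "def add(a, b:\n    return a + b\n"

print(check_python_syntax(good_code))
print(check_python_syntax(bad_code))
```

A passing check only means the code parses. It says nothing about whether the logic is correct, which is what the second issue in the list is about.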

Debugging Generated Code: Tips and Tricks

Debugging generated code is an important step in the code generation process to identify and resolve any issues or errors. Here are some tips and tricks for debugging generated code:

- Review the generated code: Carefully review the generated code to identify any syntax errors, logic issues, or inconsistencies.

- Test the code: Execute the generated code and test it against different inputs or scenarios to ensure that it produces the desired output.

- Use debugging tools: Leverage debugging tools and techniques to step through the generated code, analyze variable values, and identify the source of any issues.

- Iterate and refine: If issues are identified, iterate by adjusting the LLM parameters or input instructions, and review the generated code again.
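The test-and-iterate steps above can be sketched as a small loop. This is a minimal illustration under several assumptions: `generate` stands in for whatever function calls your LLM (here it is a stub), the generated code is Python, and it is executed with `exec`, which you should only do with code you trust or inside a sandbox.

```python
def run_test_cases(source: str, func_name: str, cases: list) -> list:
    """Load the generated function and return a list of failure messages."""
    namespace = {}
    try:
        exec(source, namespace)  # caution: runs arbitrary code
        func = namespace[func_name]
    except Exception as exc:
        return [f"could not load {func_name}: {exc!r}"]

    failures = []
    for args, expected in cases:
        try:
            actual = func(*args)
        except Exception as exc:
            failures.append(f"{func_name}{args} raised {exc!r}")
            continue
        if actual != expected:
            failures.append(
                f"{func_name}{args} returned {actual!r}, expected {expected!r}"
            )
    return failures


def generate_until_passing(generate, prompt, func_name, cases, max_attempts=3):
    """Regenerate with failure feedback until the tests pass or attempts run out."""
    feedback = ""
    for _ in range(max_attempts):
        source = generate(prompt + feedback)
        failures = run_test_cases(source, func_name, cases)
        if not failures:
            return source
        feedback = "\n\nThe previous attempt failed these checks:\n" + "\n".join(failures)
    return None


# Stub generator that returns a buggy answer first, then a fixed one.
canned_answers = iter([
    "def add(a, b):\n    return a - b\n",
    "def add(a, b):\n    return a + b\n",
])
result = generate_until_passing(
    lambda prompt: next(canned_answers),
    "Write a function add(a, b) that returns the sum.",
    "add",
    [((1, 2), 3), ((0, 0), 0)],
)
print(result)
```

Feeding the failure messages back into the prompt gives the model something specific to fix, which tends to work better than simply asking again.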

Enhancing the Accuracy and Efficiency of Generated Code

To enhance the accuracy and efficiency of generated code, there are several strategies and techniques you can employ:

- Refine input instructions: Provide more specific and detailed instructions to the LLM, including any additional context or requirements.

- Review and iterate: Continuously review and iterate on the generated code, making adjustments to the LLM parameters or input instructions as necessary.

- Incorporate code suggestions: Leverage code suggestions provided by the LLM to enhance the generated code. Consider alternative code snippets or approaches suggested by the LLM.

- Optimize LLM parameters: Fine-tune the LLM parameters to optimize the output for accuracy and efficiency. Adjust parameters such as temperature, length constraints, and sampling techniques to achieve the desired results.

Key Metrics for Assessing LLM Performance

When assessing the performance of LLMs for code generation, several key metrics can be used to evaluate their effectiveness and accuracy. Some of the key metrics for assessing LLM performance include:

- Accuracy: Measure the accuracy of the generated code by comparing it with manually written code or known correct code examples. This can be done using automated testing frameworks or manual code review processes.

- Speed: Evaluate the speed at which the LLM generates code. Compare the time taken for code generation with the LLM to the time taken for manual coding.

- Code quality: Assess the quality of the generated code by evaluating factors such as readability, maintainability, and adherence to coding standards. Use code analysis tools and code review processes to assess the quality of the generated code.

- Efficiency: Measure the efficiency of the code generation process by comparing the time and effort required to generate code with LLMs versus traditional manual coding.
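The accuracy metric can be made measurable by running generated code against tests and counting passes. The sketch below shows a plain pass rate and also the pass@k estimator that is widely used in code-generation benchmarks: given n generated samples for a problem, of which c pass the tests, it estimates the chance that at least one of k randomly chosen samples passes. This metric is an addition for illustration and is not described in the list above; the sample numbers are made up.

```python
from math import comb


def pass_rate(results: list) -> float:
    """Fraction of test outcomes (True/False) that passed."""
    return sum(results) / len(results) if results else 0.0


def pass_at_k(n: int, c: int, k: int) -> float:
    """Estimate pass@k as 1 - C(n - c, k) / C(n, k)."""
    if not 0 <= c <= n:
        raise ValueError("c must be between 0 and n")
    if not 1 <= k <= n:
        raise ValueError("k must be between 1 and n")
    return 1.0 - comb(n - c, k) / comb(n, k)


# Made-up example: 10 samples generated for one problem, 5 pass the tests.
print(pass_rate([True, False, True, True]))
print(pass_at_k(n=10, c=5, k=1))
print(pass_at_k(n=10, c=5, k=3))
```

Averaging pass@k over a set of problems gives a single number you can track while you change prompts, parameters, or models.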

Real-World Case Studies: Success Stories and Lessons Learned

Real-world case studies provide valuable insights into the practical applications of LLM code generation and the lessons learned from these implementations. They offer examples of successful use cases and demonstrate the benefits and challenges of using LLMs for code generation.

Here is an example of code generation with the novita.ai LLM API. My input: Generate a Python function that takes a product review and its corresponding sentiment and appends these as a new row to a specified CSV file.
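The snippet below is a hand-written sketch of the kind of function that prompt asks for, not the actual output of the novita.ai API. The function name `append_review`, the column order, the UTF-8 encoding, and the absence of a header row are all assumptions.

```python
import csv


def append_review(csv_path: str, review: str, sentiment: str) -> None:
    """Append one (review, sentiment) row to the CSV file at csv_path.

    The file is created if it does not exist. No header row is written.
    """
    # newline="" lets the csv module control line endings itself.
    with open(csv_path, "a", newline="", encoding="utf-8") as csv_file:
        writer = csv.writer(csv_file)
        writer.writerow([review, sentiment])
```

Calling `append_review("reviews.csv", "Great, works well", "positive")` would add one row to `reviews.csv`. The `csv` module quotes the review automatically when it contains a comma, which is a common source of bugs in hand-rolled string concatenation.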

Conclusion

In conclusion, mastering code generation with LLMs opens up a world of possibilities in software development. By understanding the principles and tools associated with LLMs, you can enhance your coding efficiency and accuracy.

Choosing the right LLM for your needs and tuning its parameters are crucial steps towards generating good code. Apply advanced techniques, troubleshoot common issues, and measure success through key metrics and real-world case studies. Keep up with new developments in LLM technology, since the models and tools in this area change quickly.

Frequently Asked Questions

What Are the Limitations of Using LLMs for Code Generation?

LLMs for code generation have some limitations. They may generate inaccurate or misleading code if the knowledge they were trained on is outdated or if they cannot correctly interpret the request. Retrieval-augmented generation (RAG) can address this issue by retrieving relevant external context, such as current documentation, and adding it to the prompt during code generation.
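To give a feel for how retrieval-augmented generation (RAG) works, here is a deliberately simplified sketch. It ranks a few reference notes by word overlap with the request and places the best matches in the prompt. Real RAG systems typically use embedding-based similarity search rather than word overlap; the function names and the sample notes are hypothetical.

```python
def tokenize(text: str) -> set:
    """Split text into a set of lowercase words."""
    return set(text.lower().split())


def retrieve(query: str, documents: list, top_k: int = 2) -> list:
    """Return the top_k documents sharing the most words with the query."""
    query_words = tokenize(query)
    ranked = sorted(
        documents,
        key=lambda doc: len(query_words & tokenize(doc)),
        reverse=True,
    )
    return ranked[:top_k]


def build_rag_prompt(query: str, documents: list, top_k: int = 2) -> str:
    """Prepend the retrieved notes to the task so the model can use them."""
    notes = "\n".join(f"- {doc}" for doc in retrieve(query, documents, top_k))
    return (
        "Use the following reference notes when writing the code.\n"
        f"{notes}\n\nTask: {query}"
    )


notes = [
    "The csv module writes rows with csv.writer",
    "Use requests.get to fetch a URL",
    "pathlib.Path handles file paths",
]
print(build_rag_prompt("append rows using csv module", notes, top_k=1))
```

Because the notes are supplied at request time, they can be updated whenever a library changes without retraining the model.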

How to Stay Updated on the Latest Developments in LLMs for Code Generation?

To stay updated on the latest developments in LLMs for code generation, developers can actively participate in technical forums and communities dedicated to AI and machine learning. They can also use search engines to find relevant articles, research papers, and blog posts that discuss advancements in LLM technology.
