How to Create Your LLM With LangChain: a Step-by-Step Guide

Build your own language model application with LangChain: simplify development, integrate models and data sources with ease, and put LLMs to work in your software.

Introduction

Large language models (LLMs) like OpenAI’s GPT-3, Google’s BERT, and Meta’s LLaMA are revolutionizing various industries by facilitating the generation of diverse text types, from marketing material and data science code to poetry. While ChatGPT has gained considerable attention for its user-friendly chat interface, there are numerous unexplored opportunities for incorporating LLMs into different software applications.

If you’re intrigued by the transformative potential of generative AI and LLMs, this tutorial is tailored for you. Here, we introduce LangChain — an open-source Python framework designed for constructing applications utilizing LLMs such as GPT.

Explore the realm of building AI applications with LangChain through our “Building Multimodal AI Applications with LangChain & the OpenAI API AI Code Along” session. You’ll delve into transcribing YouTube video content using the Whisper speech-to-text AI and subsequently employing GPT to pose questions about the content.

What is LangChain

LangChain is an open-source framework designed to streamline the development of applications powered by large language models (LLMs). It provides tools, components, and interfaces that simplify building LLM-centric applications: managing interactions with language models, linking components together, and integrating resources such as APIs and databases. For further insights into LangChain’s role in Data Engineering and Data Applications, refer to our dedicated article.

LangChain exposes APIs that developers can embed in their applications to add language processing capabilities without starting from scratch. This speeds up the development of LLM-based applications and makes it accessible to developers at every level of expertise.

Chatbots, virtual assistants, language translation utilities, and sentiment analysis tools exemplify the manifold applications powered by LLMs. Leveraging LangChain, developers fashion bespoke language model-based applications tailored to precise requirements.

As natural language processing matures and sees broader adoption, the range of applications for this technology keeps growing. Here are several features that distinguish LangChain:

- Customizable prompts tailored to specific needs

- Chainable components for advanced usage scenarios

- Integrations with model providers, such as OpenAI's GPT models and the Hugging Face Hub, including models used for data augmentation

- Versatile components that can be mixed and matched to suit specific requirements

- Context manipulation to set and guide the conversation for better precision and user satisfaction

Key Components of LangChain

LangChain distinguishes itself through its emphasis on flexibility and modularity, breaking down the natural language processing pipeline into discrete components. This approach empowers developers to customize workflows to suit their requirements, rendering LangChain adaptable for a multitude of AI applications across diverse sectors and scenarios.

Components and Chains

Within LangChain, components represent specialized modules executing distinct functions within the language processing pipeline. These components can be interconnected to form “chains,” enabling the creation of tailored workflows. For instance, a customer service chatbot chain may incorporate modules for sentiment analysis, intent recognition, and response generation.
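The chatbot example above can be sketched in plain Python. This is not LangChain's API; it only illustrates the idea of a chain as components whose outputs feed the next step. The three functions and their keyword rules are hypothetical stand-ins for real sentiment, intent, and response modules.

```python
from functools import reduce

def make_chain(*steps):
    """Compose steps left to right: each step receives the previous step's output."""
    return lambda value: reduce(lambda acc, step: step(acc), steps, value)

# Hypothetical stand-ins for the three modules in the chatbot example.
def analyze_sentiment(message):
    negative = {"broken", "angry", "refund", "late"}
    words = set(message.lower().split())
    sentiment = "negative" if words & negative else "neutral"
    return {"message": message, "sentiment": sentiment}

def recognize_intent(state):
    wants_refund = "refund" in state["message"].lower()
    return {**state, "intent": "refund_request" if wants_refund else "general_question"}

def generate_response(state):
    opener = "Sorry about that. " if state["sentiment"] == "negative" else ""
    return f"{opener}Routing your {state['intent'].replace('_', ' ')} to the right team."

support_chain = make_chain(analyze_sentiment, recognize_intent, generate_response)
print(support_chain("My order is late and I want a refund"))
```

Because each component only depends on the shape of its input, any one of them could be swapped for a model-backed version without touching the others.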

Prompt Templates

LangChain offers reusable prompt templates that can be dynamically adapted by inserting specific values. For example, a template prompting for a user’s name can be personalized by inserting the user’s actual name. This feature facilitates the generation of prompts based on dynamic resources.
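As a rough illustration of the idea, here is a minimal template class built on the standard library. It is a sketch under the assumption that a template is just a string with named placeholders; LangChain's own PromptTemplate class fills this role in a real application, and the class name and example text below are made up for illustration.

```python
from string import Formatter

class SimplePromptTemplate:
    """Hypothetical stand-in for a prompt template: a string with named slots."""

    def __init__(self, template):
        self.template = template
        # Discover placeholder names such as {name} from the template itself.
        self.input_variables = sorted(
            {field for _, field, _, _ in Formatter().parse(template) if field}
        )

    def format(self, **values):
        missing = [v for v in self.input_variables if v not in values]
        if missing:
            raise KeyError(f"missing values for: {missing}")
        return self.template.format(**values)

greeting = SimplePromptTemplate("Hello {name}, how can I help you with {topic} today?")
print(greeting.format(name="Ada", topic="billing"))
```

The same template object can be reused for every user; only the inserted values change.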

Vector Stores

Vector stores hold embeddings, which are numerical representations of a document's meaning, and retrieve information by comparing them. Because texts with similar meanings map to nearby vectors, a vector store enables efficient search based on semantic similarity.
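To make the mechanics concrete, here is a toy in-memory store. It is a sketch, not a LangChain class: the "embedding" is a plain word count, which captures shared vocabulary rather than true meaning, whereas a real application would obtain embeddings from a trained model. The class and function names are assumptions for illustration.

```python
import math
from collections import Counter

def embed(text):
    """Toy embedding: word counts. Real embeddings come from a trained model."""
    return Counter(text.lower().split())

def cosine(a, b):
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[w] * b[w] for w in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0

class TinyVectorStore:
    def __init__(self):
        self._items = []  # list of (embedding, original text)

    def add(self, text):
        self._items.append((embed(text), text))

    def search(self, query, k=1):
        """Return the k stored texts most similar to the query."""
        q = embed(query)
        ranked = sorted(self._items, key=lambda item: cosine(q, item[0]), reverse=True)
        return [text for _, text in ranked[:k]]

store = TinyVectorStore()
store.add("how to reset your password")
store.add("shipping times for international orders")
print(store.search("reset my password"))
```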

Indexes and Retrievers

Indexes structure your documents, along with their details and metadata, so that a language model can work with them, while retrievers search the index for the information relevant to a query. Supplying those results to the model enhances its responses by providing context and related information.
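The division of labor can be sketched as follows. This is a simplified keyword index in plain Python, not LangChain's implementation; the class, the function, and the sample documents are hypothetical. The index stores documents with metadata, and the retriever ranks them against a query so the best matches can be placed in a prompt.

```python
from collections import defaultdict

class KeywordIndex:
    def __init__(self):
        self.docs = []                    # each entry: {"text": ..., "metadata": ...}
        self.postings = defaultdict(set)  # word -> ids of documents containing it

    def add(self, text, **metadata):
        doc_id = len(self.docs)
        self.docs.append({"text": text, "metadata": metadata})
        for word in set(text.lower().split()):
            self.postings[word].add(doc_id)

def retrieve(index, query, k=2):
    """Rank documents by how many distinct query words they contain."""
    hits = defaultdict(int)
    for word in set(query.lower().split()):
        for doc_id in index.postings.get(word, ()):
            hits[doc_id] += 1
    best = sorted(hits, key=lambda d: (-hits[d], d))[:k]
    return [index.docs[d] for d in best]

index = KeywordIndex()
index.add("refunds are processed within five days", source="policy.md")
index.add("our office is closed on public holidays", source="hours.md")

context = retrieve(index, "how long do refunds take")
prompt = "Answer using this context:\n" + "\n".join(d["text"] for d in context)
print(prompt)
```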

Output Parsers

Output parsers post-process the responses generated by the model: filtering undesired content, enforcing an output format, or adding extra data to the response. In particular, output parsers help extract structured results, such as JSON objects, from the language model’s responses.
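A minimal sketch of the JSON case, using only the standard library: the function below pulls a JSON object out of a chatty reply. The function name and the sample reply are assumptions for illustration, and a production parser would need to handle malformed or multiple objects more carefully.

```python
import json
import re

def parse_json_reply(reply):
    """Parse the text spanning the first '{' to the last '}' in a reply as JSON."""
    match = re.search(r"\{.*\}", reply, flags=re.DOTALL)
    if match is None:
        raise ValueError("no JSON object found in reply")
    return json.loads(match.group(0))

reply = 'Sure! Here is the result:\n{"sentiment": "positive", "score": 0.92}\nAnything else?'
print(parse_json_reply(reply))
```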

Example Selectors

Example selectors in LangChain choose which examples to include in a prompt, drawing from a pool of examples you supply, thereby enhancing the precision and relevance of generated responses. These selectors can be customized to prioritize certain types of examples or filter out unrelated ones, ensuring tailored AI responses based on user input.
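One simple selection strategy, sketched in plain Python: rank the candidate examples by how many words they share with the user's input and keep the top few. The function name, the overlap heuristic, and the sample examples are assumptions for illustration rather than LangChain's own selectors.

```python
def select_examples(examples, user_input, k=2):
    """Pick the k examples whose input shares the most words with user_input."""
    query_words = set(user_input.lower().split())

    def overlap(example):
        return len(query_words & set(example["input"].lower().split()))

    # sorted() is stable, so ties keep their original order.
    return sorted(examples, key=overlap, reverse=True)[:k]

examples = [
    {"input": "translate cat to french", "output": "chat"},
    {"input": "what is 2 plus 2", "output": "4"},
    {"input": "translate dog to french", "output": "chien"},
]

chosen = select_examples(examples, "translate bird to french")
few_shot = "\n".join(f"Q: {e['input']}\nA: {e['output']}" for e in chosen)
print(few_shot)
```

The selected examples are then placed ahead of the real question in the prompt, so the model sees demonstrations that resemble the task at hand.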

Agents

Agents use a language model to decide which actions to take, such as calling a tool or running a chain, and in what order. Each agent is equipped with specific prompts, memory, and chains tailored for a particular use case, and agents can be deployed across various platforms, including web, mobile, and chatbots, catering to a diverse audience.
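The control flow of an agent can be sketched as a loop in which a decision function picks the next action. In the toy version below the decision function is a hard-coded rule standing in for an LLM, and the tools are trivial; all names are hypothetical and nothing here is LangChain's API.

```python
def word_count(text):
    return str(len(text.split()))

def shout(text):
    return text.upper()

TOOLS = {"word_count": word_count, "shout": shout}

def fake_llm_decide(task, observations):
    """Hypothetical policy standing in for an LLM's choice of next action."""
    if not observations:
        tool = "word_count" if task.startswith("count") else "shout"
        return ("tool", tool, task.split(":", 1)[1].strip())
    return ("final", observations[-1], None)

def run_agent(task, decide=fake_llm_decide, max_steps=3):
    """Ask the decision function for actions until it returns a final answer."""
    observations = []
    for _ in range(max_steps):
        kind, value, tool_input = decide(task, observations)
        if kind == "final":
            return value
        observations.append(TOOLS[value](tool_input))
    return observations[-1]

print(run_agent("count: how many words are here"))
```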

How to Set up LangChain with Python

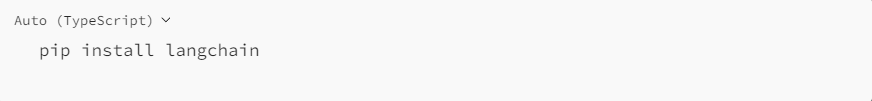

Installing LangChain in Python is straightforward. You can install it with either pip or conda.

Install using pip: `pip install langchain`

Install using conda: `conda install langchain -c conda-forge`

Setting up the basic framework of LangChain lays the foundation for its functionality. However, LangChain truly excels when it’s seamlessly integrated with a wide array of model providers, data stores, and similar resources.

Environment setup

Using LangChain typically involves integrating it with model providers, data stores, APIs, and similar components. As with any integration, you need to give LangChain the API keys those services require. This can be accomplished in two ways:

- Set the key as an environment variable

- Pass the key directly to the relevant class
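Both options can be sketched with the standard library. The helper names below are hypothetical; the variable name OPENAI_API_KEY is the one LangChain's OpenAI wrapper reads from the environment, and the same wrapper accepts the key directly through its `openai_api_key` argument. The key shown is a placeholder, not a real credential.

```python
import os

def configure_key(name, value):
    """Option 1: expose the key as an environment variable for this process."""
    os.environ[name] = value

def resolve_key(name, explicit=None):
    """Prefer a key passed directly (option 2); otherwise fall back to the environment."""
    key = explicit or os.environ.get(name)
    if not key:
        raise RuntimeError(f"{name} is not set")
    return key

configure_key("OPENAI_API_KEY", "sk-placeholder")  # placeholder value
print(resolve_key("OPENAI_API_KEY"))

# Option 2 with a LangChain class would look like this (not executed here):
# llm = OpenAI(openai_api_key="sk-placeholder")
```

In practice, keeping keys in environment variables avoids committing them to source control.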

How to Build A Language Model Application in LangChain

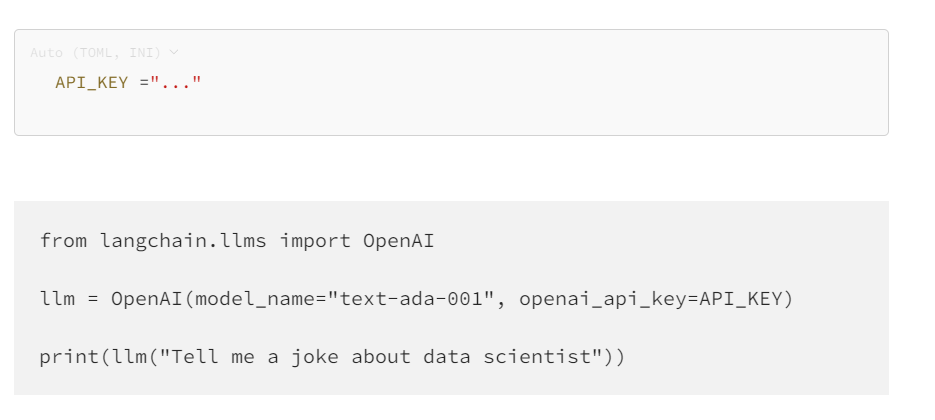

LangChain offers an LLM class tailored for interfacing with different language model providers like OpenAI, Cohere, and Hugging Face. At its core, an LLM’s primary function is text generation. With LangChain, constructing an application that takes a string prompt and yields the corresponding output is remarkably straightforward.
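A minimal sketch of such an application follows. It assumes the classic `langchain.llms` import path and the callable LLM interface from early LangChain releases; newer releases move the OpenAI class into the separate `langchain_openai` package and prefer an `invoke` method. The helper names and the prompt are illustrative assumptions, not necessarily the ones used to produce the output shown in this article.

```python
import os

def make_openai_llm(model_name="text-ada-001"):
    """Build an OpenAI-backed LLM object (classic LangChain API, an assumption)."""
    # Imported lazily so this file loads even where langchain is not installed.
    from langchain.llms import OpenAI
    return OpenAI(model_name=model_name, openai_api_key=os.environ["OPENAI_API_KEY"])

def ask(llm, prompt):
    """Send one string prompt and return the generated text."""
    return llm(prompt)  # classic LangChain LLM objects are callable with a string

# Usage (requires langchain, an OpenAI API key, and network access):
# llm = make_openai_llm()
# print(ask(llm, "Tell me a joke about data scientists"))  # hypothetical prompt
```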

Output:

>>> “What do you get when you tinker with data? A data scientist!”

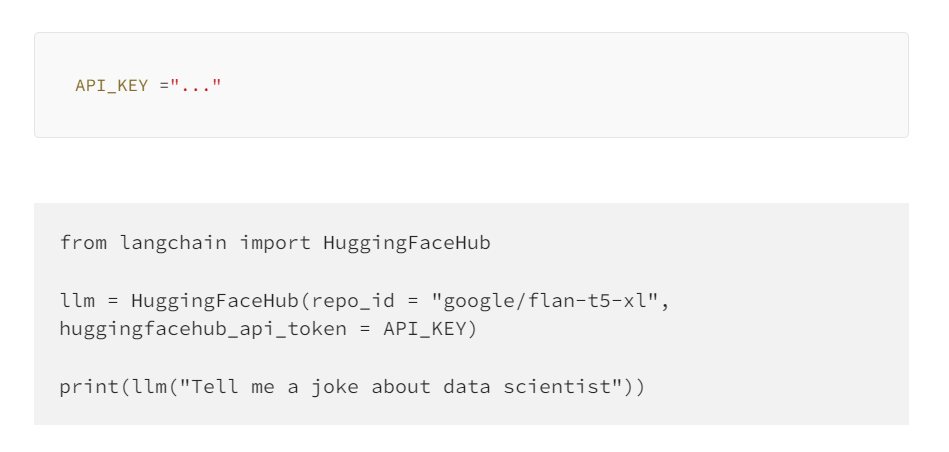

In the example above, we use the text-ada-001 model from OpenAI. Swapping in an open-source model from Hugging Face is a small change: only the LLM object differs.

You will need a Hugging Face Hub access token, which you can get from your HF account.
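A sketch of that swap, again assuming the classic `langchain.llms` import path. The `repo_id` value is just an example model choice, and the helper name is hypothetical; HUGGINGFACEHUB_API_TOKEN is the environment variable the HuggingFaceHub wrapper looks for.

```python
import os

def make_hf_llm(repo_id="google/flan-t5-xl"):
    """Build a Hugging Face Hub-backed LLM object (classic LangChain API, an assumption)."""
    # Imported lazily so this file loads even where langchain is not installed.
    from langchain.llms import HuggingFaceHub
    return HuggingFaceHub(
        repo_id=repo_id,  # example model; any hosted text-generation model id
        huggingfacehub_api_token=os.environ["HUGGINGFACEHUB_API_TOKEN"],
    )

# Usage (requires langchain, a Hub token, and network access):
# llm = make_hf_llm()
# print(llm("Tell me a joke about data scientists"))  # hypothetical prompt
```

The rest of the application can stay unchanged, since both objects accept a string prompt and return text.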

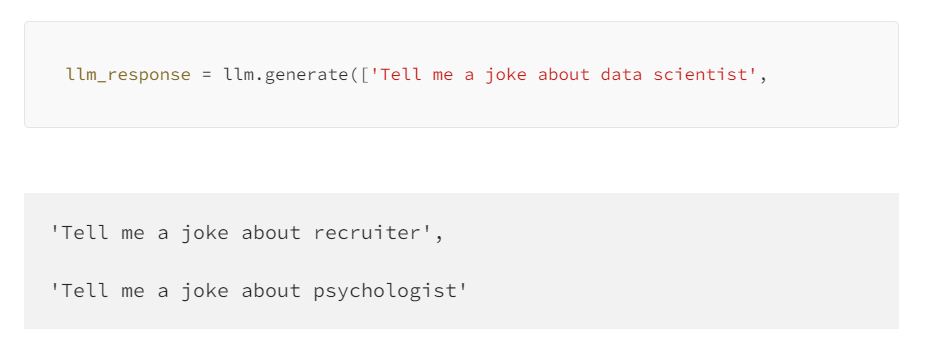

If you have multiple prompts, you can send them as a list in a single call using the generate method.
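In the classic API, `generate` returns a result object whose `generations` attribute holds one list of candidate generations per prompt, each with a `text` field. The helper below, a hypothetical convenience function, flattens that structure; it is demonstrated on a fake result object so it runs without a model.

```python
from types import SimpleNamespace

def texts_from_result(result):
    """Return one list of generated strings per prompt from an LLMResult-like object."""
    return [[gen.text for gen in per_prompt] for per_prompt in result.generations]

# Fake result standing in for what llm.generate([...]) would return.
fake_result = SimpleNamespace(
    generations=[[SimpleNamespace(text="joke A")], [SimpleNamespace(text="joke B")]]
)
print(texts_from_result(fake_result))

# Usage with a real, configured llm (not executed here):
# result = llm.generate(["Tell me a joke about data scientists",
#                        "Tell me a joke about recruiters"])
# print(texts_from_result(result))
```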


The most basic application you can develop with LangChain sends a prompt to a language model of your choosing and receives a response. Even this straightforward setup exposes adjustable parameters, such as the temperature. The temperature parameter regulates the randomness of the output; in LangChain's OpenAI wrapper it defaults to 0.7.
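To see why temperature controls randomness, consider how it is commonly applied during sampling: the model's raw scores (logits) are divided by the temperature before being turned into probabilities. The sketch below illustrates that general technique with made-up logits; it does not show any provider's internal implementation.

```python
import math

def softmax_with_temperature(logits, temperature):
    """Convert logits to probabilities after scaling by 1/temperature."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]

logits = [2.0, 1.0, 0.0]  # made-up scores for three candidate tokens
for t in (0.2, 0.7, 1.5):
    probs = softmax_with_temperature(logits, t)
    print(t, [round(p, 3) for p in probs])
```

Lower temperatures concentrate probability on the top-scoring token, giving more predictable output; higher temperatures flatten the distribution, giving more varied output.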

Integrating LLMs and prompts within multi-step workflows

Chaining in the LangChain context involves integrating LLMs with other elements to construct an application. Examples include:

- Sequentially linking multiple LLMs, where the output of the first LLM serves as input for the second LLM.

- Integrating LLMs with prompt templates.

- Combining LLMs with external data sources, such as for question answering.

- Incorporating LLMs with long-term memory, such as chat history.
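Three of these patterns can be sketched in a few lines of plain Python: binding a prompt template to an LLM, feeding one step's output into the next, and carrying chat history between calls. The model here is a fake function so the example runs offline; every name is hypothetical, and LangChain's own chain and memory classes would take the place of these helpers in a real application.

```python
def fake_llm(prompt):
    """Hypothetical stand-in for a model call: returns the prompt's last line, upper-cased."""
    return prompt.strip().splitlines()[-1].upper()

def llm_step(template, llm):
    """Bind a prompt template to an LLM, giving a callable text -> text step."""
    return lambda text: llm(template.format(input=text))

def run_sequence(steps, text):
    """Sequential chaining: each step's output becomes the next step's input."""
    for step in steps:
        text = step(text)
    return text

class ChatWithMemory:
    """Prepends the running chat history to every new prompt."""

    def __init__(self, llm):
        self.llm = llm
        self.history = []

    def send(self, message):
        transcript = "\n".join(self.history + [f"User: {message}"])
        reply = self.llm(transcript)
        self.history += [f"User: {message}", f"AI: {reply}"]
        return reply

summarize = llm_step("Summarize:\n{input}", fake_llm)
translate = llm_step("Translate to French:\n{input}", fake_llm)
print(run_sequence([summarize, translate], "LangChain links steps"))
```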

Conclusion and Inspiration

Just a short while ago, the impressive capabilities of ChatGPT left us all in awe. However, the landscape has rapidly evolved, presenting us with new developer tools like LangChain that empower us to create similarly remarkable prototypes on our personal laptops within hours.

LangChain, an open-source Python framework, empowers individuals to develop applications powered by LLMs (Large Language Models). This framework provides a versatile interface to a multitude of foundational models, simplifying prompt management, and acting as a central hub for various components such as prompt templates, additional LLMs, external data, and other tools through agents (as of the latest update).

To stay abreast of the latest advancements in Generative AI and LLM, consider attending our Building AI Applications with LangChain and GPT webinar. In this session, you’ll grasp the fundamentals of utilizing LangChain for AI application development, learn about structuring an AI application, and discover techniques for embedding text data for optimal performance. Additionally, you can explore our cheat sheet on the landscape of generative AI tools, which categorizes different tools, their applications, and their impact across various sectors.

