Enhance Your Applications with Llama 3 API Access

Key Highlights

- Llama 3 Model Overview: Meta’s Llama 3 family includes advanced large language models (LLMs) in 8B and 70B sizes, optimized for dialogue tasks and text generation.

- Performance Evaluation: Llama 3 8B is reported to outperform open models such as Mistral 7B and Gemma 7B, and Llama 3 70B to outperform Gemini 1.5 Pro, on key benchmarks, demonstrating improved reasoning and context handling abilities.

- API Utility: APIs are crucial connectors for developers to efficiently integrate advanced LLMs into applications, enabling easy scalability and response customization.

- Notable API Providers: Leading providers such as Lepton, Fireworks, Novita AI and Together AI offer robust and cost-effective AI solutions for various applications.

- User-Friendly Process: Novita AI provides developers with tools and resources for deploying the Llama 3 API, including an experimentation playground and a streamlined key management system.

Introduction

Large language models (LLMs) like Meta’s Llama 3 have revolutionized natural language processing, enabling advanced interactions and deeper software understanding. Llama 3 excels in dialogue tasks and handling complex language challenges. This article delves into Llama 3’s features, integration through APIs, and the role of API providers like Novita AI in deploying these models seamlessly.

What is the Llama 3 model?

Meta developed the Llama 3 family of large language models (LLMs), featuring pretrained and instruction-tuned generative text models in 8B and 70B sizes. These instruction-tuned models are optimized for dialogue tasks and outperform many open-source chat models on key industry benchmarks. Additionally, Meta prioritized optimizing both helpfulness and safety during the development process.

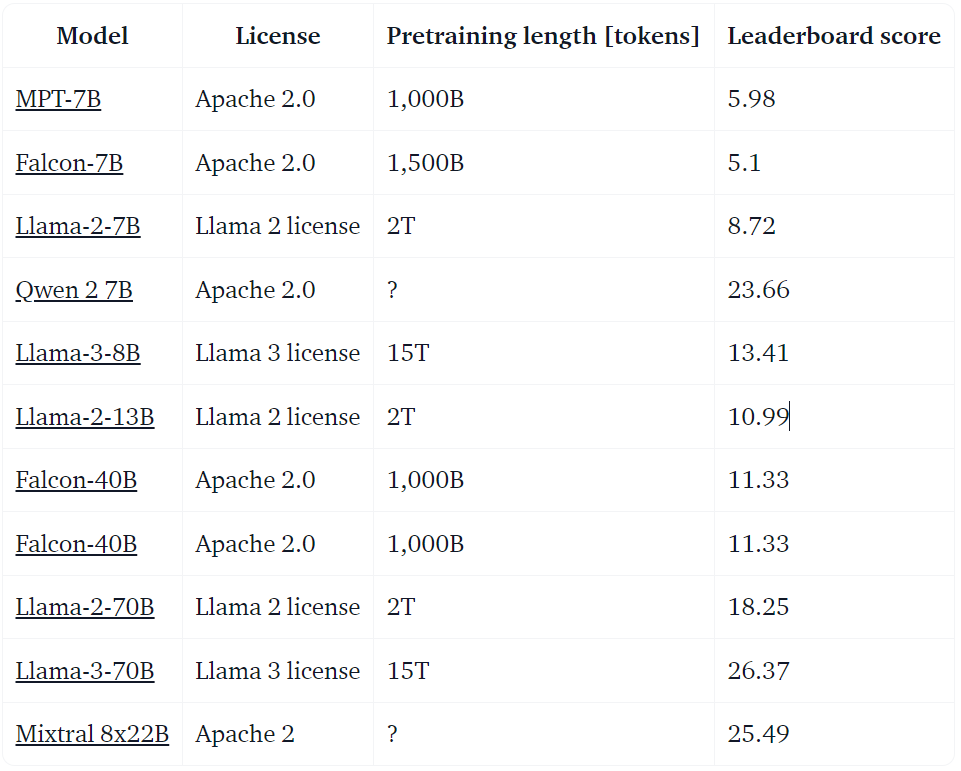

Llama 3 evaluation

Here, you’ll find a list of models and their Open LLM Leaderboard scores. This list isn’t exhaustive, so we encourage you to explore the full leaderboard. Keep in mind that the LLM Leaderboard is particularly useful for evaluating pre-trained models, while other benchmarks focus on conversational models.

How to use Llama 3?

The Hugging Face repository for Meta-Llama-3-8B includes two versions of the model: one designed for use with transformers and another compatible with the original Llama 3 codebase.

Use with transformers

Refer to the snippet below for usage with Transformers:

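import transformers
import torch

model_id = "meta-llama/Meta-Llama-3-8B"

# Arguments follow the Hugging Face model card; adjust dtype and device for your hardware.
pipeline = transformers.pipeline(
    "text-generation", model=model_id, model_kwargs={"torch_dtype": torch.bfloat16}, device_map="auto"
)
pipeline("Hey how are you doing today?")

Use with llama 3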

Please follow the instructions in the repository.

To download the original checkpoints, see the example command below using huggingface-cli:

huggingface-cli download meta-llama/Meta-Llama-3-8B --include "original/*" --local-dir Meta-Llama-3-8B

For Hugging Face support, Meta-Llama developers recommend using Transformers or TGI, but a similar command will also work.

Meta’s Llama 3 has twice the context window of Llama 2

Llama 3 features improved reasoning capabilities and a context window of 8,192 tokens, double Llama 2’s 4,096, which makes it more effective on complex natural language processing tasks in software development. Meta’s Llama 3 was trained on an extensive dataset comprising over 15 trillion tokens.
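
A quick way to reason about the context window is as a token budget shared by the prompt and the generated reply. The sketch below assumes a context window of 8,192 tokens (Llama 3’s published limit) and uses hypothetical helper names; it does not count tokens itself, so the prompt length must come from a tokenizer.

```python
CONTEXT_WINDOW = 8192  # assumed Llama 3 context length, in tokens


def max_new_tokens_allowed(prompt_tokens: int, context_window: int = CONTEXT_WINDOW) -> int:
    """Return how many tokens can still be generated after the prompt."""
    if prompt_tokens < 0:
        raise ValueError("prompt_tokens must be non-negative")
    return max(context_window - prompt_tokens, 0)


def fits_context(prompt_tokens: int, max_new_tokens: int,
                 context_window: int = CONTEXT_WINDOW) -> bool:
    """Check whether prompt plus requested output fits in the window."""
    return prompt_tokens + max_new_tokens <= context_window


print(max_new_tokens_allowed(7000))  # 1192
print(fits_context(7000, 512))       # True
print(fits_context(8000, 512))       # False
```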

Versus Mistral 7B and Gemma 7B

Meta’s Llama 3 8B model is said to outperform other open-source models like Mistral 7B and Gemma 7B on various benchmarks, including MMLU, ARC, and DROP.

Versus Gemini 1.5

Meta’s Llama 3 70B has shown superior performance compared to Gemini 1.5 Pro across several benchmarks, including MMLU, HumanEval, and GSM-8K.

Versus GPT-3.5

Meta’s Llama 3 70B has demonstrated impressive performance against GPT-3.5 on a custom test set specifically designed to evaluate skills in coding, writing, reasoning, and summarization.

What is an API?

APIs, or Application Programming Interfaces, are digital connectors that allow different software applications to communicate and share data. They act as intermediaries, enabling seamless interactions between various programs and systems.

APIs are ubiquitous in our daily lives — whether you’re using rideshare apps, making mobile payments, or controlling smart home devices remotely. When you engage with these applications, they rely on APIs to exchange information with servers, process requests, and present results in a user-friendly format on your device.

Why Do We Need an LLM API?

APIs provide developers with a standardized interface to integrate large language models into their applications. This standardization not only streamlines the development process but also ensures access to the latest model improvements. It enables efficient scaling of tasks and the selection of suitable LLMs for various applications. Additionally, the flexibility of APIs allows for the customization of LLM responses to meet specific requirements, enhancing their adaptability and relevance across different scenarios.
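
To make the idea of response customization concrete, here is a minimal sketch of how a chat request body might be assembled. It assumes an OpenAI-style chat schema (`model`, `messages`, `temperature`, `max_tokens`); the helper name `build_chat_request` and the default values are illustrative, not part of any provider’s SDK.

```python
import json


def build_chat_request(model, user_prompt, system_prompt=None,
                       temperature=0.7, max_tokens=256):
    """Assemble an OpenAI-style chat request body as a plain dict."""
    messages = []
    if system_prompt:
        # The system message steers tone and behavior for the whole chat.
        messages.append({"role": "system", "content": system_prompt})
    messages.append({"role": "user", "content": user_prompt})
    return {
        "model": model,
        "messages": messages,
        "temperature": temperature,  # lower values give more deterministic output
        "max_tokens": max_tokens,    # upper bound on the length of the reply
    }


request = build_chat_request(
    "meta-llama/llama-3-8b-instruct",
    "Explain what an API is.",
    system_prompt="Answer in one sentence.",
    temperature=0.2,
    max_tokens=64,
)
print(json.dumps(request, indent=2))
```

Changing only the system prompt, temperature, or token limit yields a differently behaved assistant without touching the rest of the application.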

Leading LLM API Providers for Developers in 2024

API providers are cost-effective cloud platforms that facilitate the efficient deployment of machine learning models. They offer infrastructure-free access to advanced AI through user-friendly APIs, robust scalability, and competitive pricing, making AI accessible for businesses of all sizes. In this section, we’ll explore some of the leading API providers in the industry.

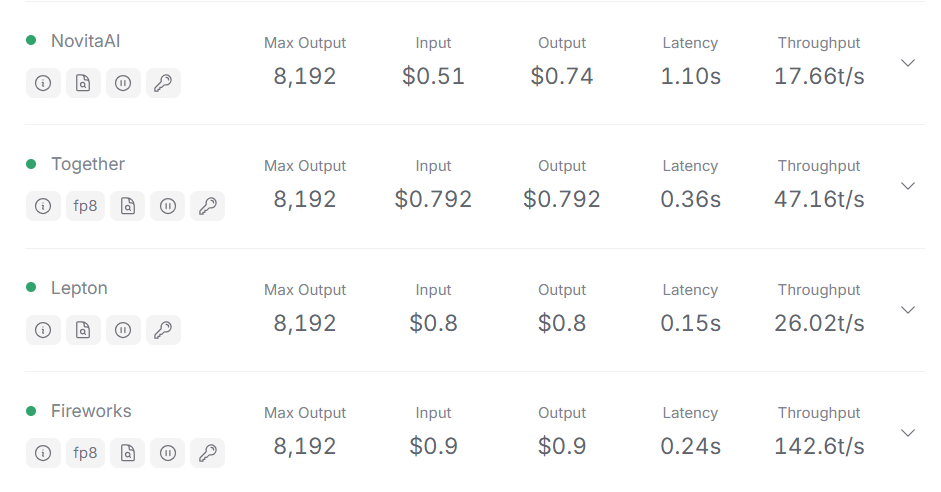

Taking the Llama 3 70B model as an example, here are some API providers, each with distinct performance metrics and cost-effectiveness. We will provide detailed descriptions of each option to assist developers in making informed choices.

Lepton

Lepton is an API provider that supports a maximum output of 8,192 tokens. Its input and output costs are both $0.80 per 1M tokens, with a latency of 0.15 seconds and a throughput of 26.02 tokens per second (t/s).

- Advantages: Lepton boasts very low latency, making it ideal for applications where response time is critically important.

- Disadvantages: However, its throughput is relatively lower, which might not be suitable for applications that require processing large volumes of data.

Fireworks

Fireworks is another API provider that can handle requests with a maximum output of 8,192 tokens. Its input and output costs are both $0.90 per 1M tokens, with a latency of 0.24 seconds and a throughput of 142.6 t/s.

- Advantages: Fireworks provides exceptionally high throughput, making it ideal for users needing to handle large amounts of data without being overly concerned about cost.

- Disadvantages: Its latency (0.24 seconds) is higher than Lepton’s (0.15 seconds), and its costs are the highest among the four API providers, which might not be suitable for users on a tight budget.

Novita AI

Novita AI is a cloud platform supporting AI ambitions with integrated APIs, serverless computing, and GPU instances. It provides affordable tools for success, helping users kickstart projects cost-free and turn their AI dreams into reality efficiently.

Novita AI is an API provider that offers a cost-effective solution for handling large volumes of requests, making it suitable for users on a budget who need to process substantial amounts of data.

- Advantages: Novita AI has lower costs when managing a high number of requests, which is ideal for budget-conscious users who require extensive data handling.

- Disadvantages: Novita AI’s latency of 1.10 seconds is the highest of the four providers, which may pose challenges for applications that require rapid response times.

Together AI

Together AI is another API provider capable of handling requests with a maximum output of 8,192 tokens. Its input and output costs are both $0.792 per 1M tokens, with a latency of 0.36 seconds and a throughput of 47.16 t/s.

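- Advantages: Together AI has lower latency than Novita AI (0.36 seconds versus 1.10 seconds) and higher throughput than Lepton (47.16 t/s versus 26.02 t/s), making it a balanced choice for applications that require reasonably fast request processing.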

- Disadvantages: Its costs are slightly higher than those of Novita AI, which may be a consideration for users with very tight budgets.

When choosing an API provider, consider cost, latency, and throughput. Novita AI is perfect for budget-conscious projects with large data needs. Lepton excels in low-latency applications, while Fireworks handles massive data with higher costs and latency. Overall, Novita AI stands out for affordable, large-scale data handling.
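
The trade-off between latency and throughput is easier to see with a little arithmetic. The sketch below plugs the figures quoted above into a simple model: total response time is latency plus output tokens divided by throughput. It assumes the prices are per 1M tokens and that latency means time to first token; Novita AI is left out because only its latency is given here. The class and function names are illustrative.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Provider:
    name: str
    price_per_million: float  # USD, assumed to be per 1M tokens (input and output)
    latency_s: float          # assumed to be time to first token, in seconds
    throughput_tps: float     # output tokens per second


# Figures quoted in this article for Llama 3 70B.
PROVIDERS = [
    Provider("Lepton", 0.80, 0.15, 26.02),
    Provider("Fireworks", 0.90, 0.24, 142.6),
    Provider("Together AI", 0.792, 0.36, 47.16),
]


def response_time(p: Provider, output_tokens: int) -> float:
    """Estimated seconds until the full reply has been generated."""
    return p.latency_s + output_tokens / p.throughput_tps


def request_cost(p: Provider, input_tokens: int, output_tokens: int) -> float:
    """Estimated USD cost of a single request."""
    return (input_tokens + output_tokens) * p.price_per_million / 1_000_000


for p in PROVIDERS:
    print(f"{p.name}: {response_time(p, 500):.2f} s, "
          f"${request_cost(p, 1000, 500):.6f} per request")

fastest = min(PROVIDERS, key=lambda p: response_time(p, 500))
print("Fastest for a 500-token reply:", fastest.name)
```

Under this simple model, low latency decides the ranking only for very short replies; for a 500-token reply, throughput dominates and Fireworks finishes first.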

Expanding on LLM APIs, the Llama 3 API offers developers access to advanced language processing capabilities via a standardized API. This enables seamless integration of linguistic functions into various applications, enhancing their interactive and analytical features. Learn how to use the Llama 3 API on the Novita AI LLM API platform.

Run Meta’s Llama 3 with Novita AI’s LLM API

Follow these structured steps carefully to build powerful language processing applications using the Llama 3 API on Novita AI. This detailed guide ensures a smooth and efficient process, meeting the expectations of modern developers in search of an advanced AI platform.

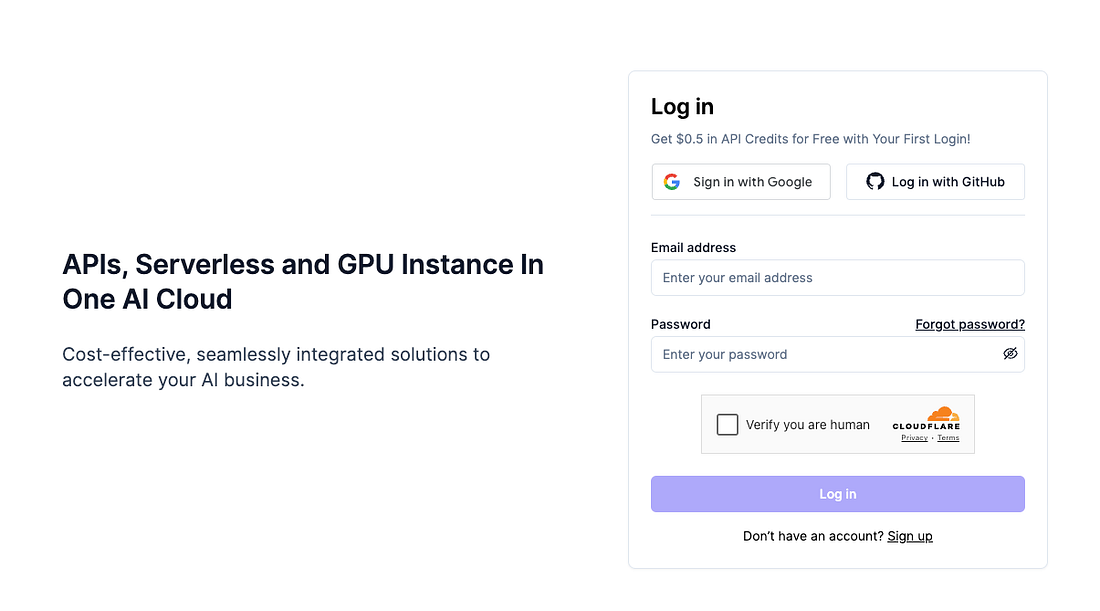

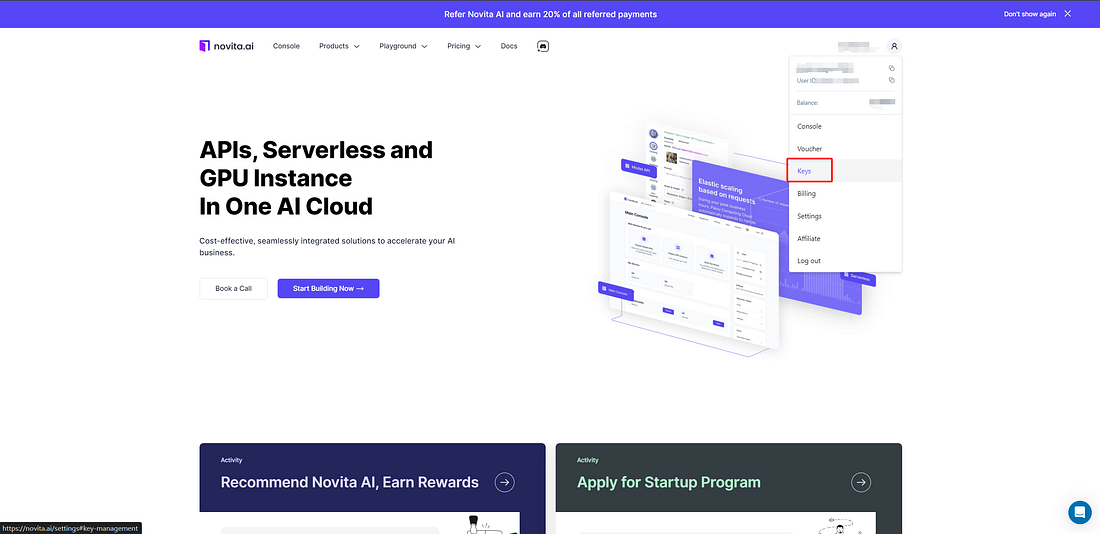

Step 1: Go to Novita AI and log in.

You can log in using either your Google or GitHub account, which will create a new account for you upon your first login.

Alternatively, you can sign up with your email address.

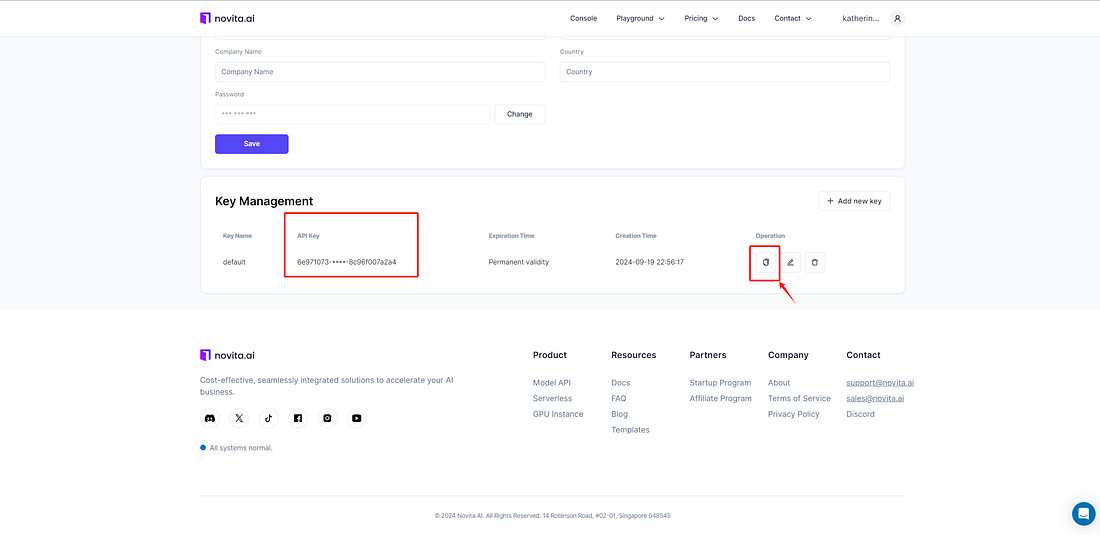

Step 2: Manage API Key.

Novita AI uses Bearer authentication to verify API access, requiring an API Key in the request header, such as “Authorization: Bearer {API Key}.”

To manage your keys, navigate to “Key Management” in the settings.

A default key is automatically created upon your first login, and you can generate additional keys by clicking “+ Add New Key.”
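
As a rough illustration of Bearer authentication, the sketch below builds (but does not send) an HTTP request with the API key in the `Authorization` header, using only the Python standard library. The base URL is the one used in the Python client example later in this article; the `/chat/completions` path is an assumption based on that endpoint being OpenAI-compatible, and `build_request` is a hypothetical helper.

```python
import json
import urllib.request

BASE_URL = "https://api.novita.ai/v3/openai"  # from the client example in this article


def build_request(api_key: str, payload: dict) -> urllib.request.Request:
    """Create a POST request carrying the API key as a Bearer token."""
    return urllib.request.Request(
        url=f"{BASE_URL}/chat/completions",  # assumed OpenAI-compatible path
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


req = build_request(
    "<YOUR Novita AI API Key>",
    {
        "model": "meta-llama/llama-3-8b-instruct",
        "messages": [{"role": "user", "content": "Hi there!"}],
    },
)
print(req.get_method(), req.full_url)
print(req.get_header("Authorization"))
```

Passing the request to `urllib.request.urlopen` would send it; in practice, keep the key in an environment variable rather than in source code.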

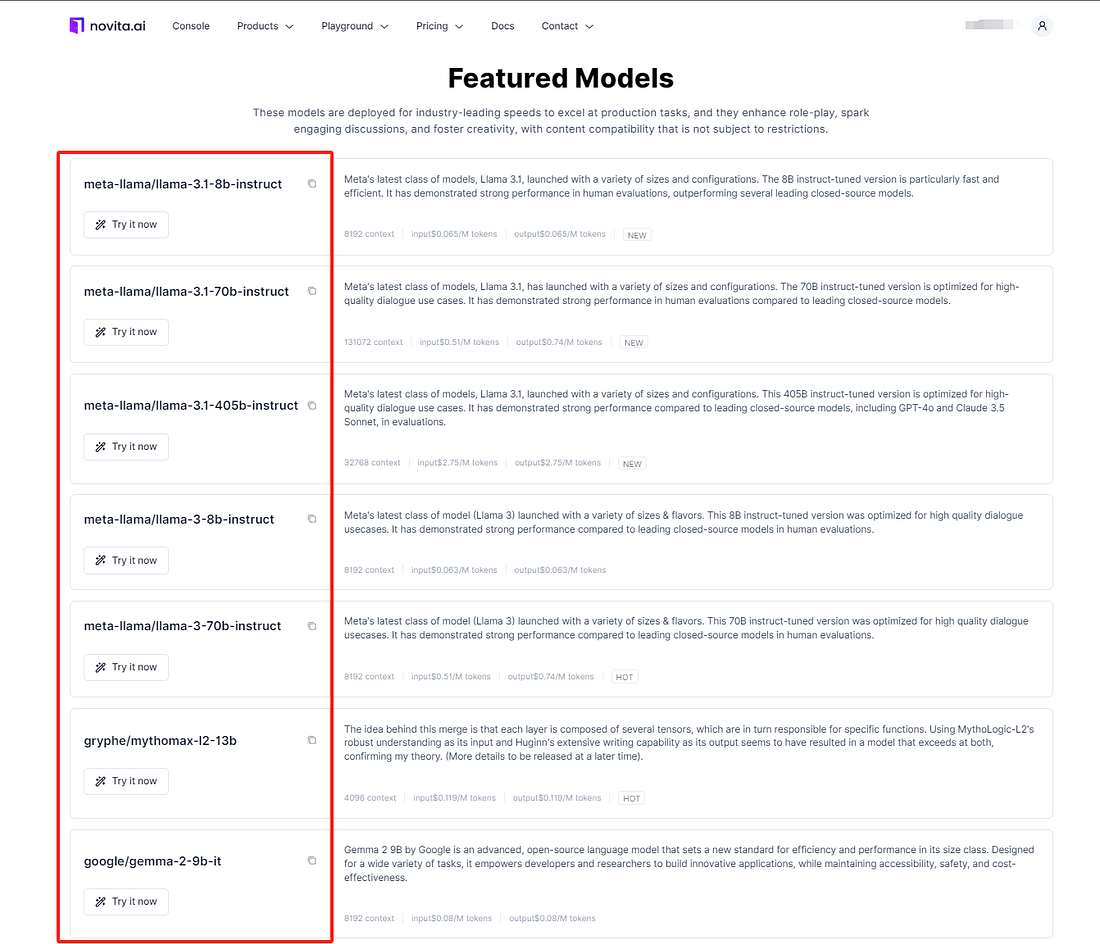

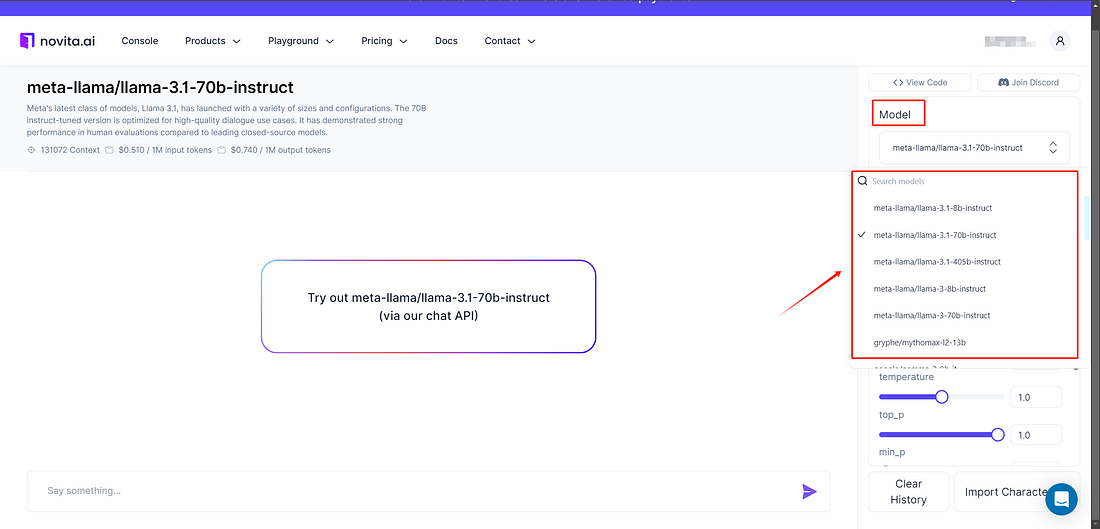

Step 3: Choose Your Model

Novita AI provides a variety of models, including multiple versions of Llama. Select the model that best fits your application’s needs, whether for chat completion, text generation, or other tasks.

Here’s what we offer for Llama 3:

- meta-llama/llama-3.1-8b-instruct

- meta-llama/llama-3.1-70b-instruct

- meta-llama/llama-3.1-405b-instruct

- meta-llama/llama-3-8b-instruct

- meta-llama/llama-3-70b-instruct

To explore the full list of available models, you can visit the Novita AI LLM Models List.
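
If the model choice needs to be made in code, for example from a configuration value, a small helper can pick an ID from the list above. This is only a sketch: `pick_model` and its selection rule (largest model within a size budget, preferring Llama 3.1 on ties) are illustrative assumptions, not a Novita AI feature.

```python
LLAMA_MODELS = [
    "meta-llama/llama-3.1-8b-instruct",
    "meta-llama/llama-3.1-70b-instruct",
    "meta-llama/llama-3.1-405b-instruct",
    "meta-llama/llama-3-8b-instruct",
    "meta-llama/llama-3-70b-instruct",
]


def parse_size_b(model_id: str) -> int:
    """Extract the parameter count in billions from an ID like '...-70b-instruct'."""
    for part in model_id.split("-"):
        if part.endswith("b") and part[:-1].isdigit():
            return int(part[:-1])
    raise ValueError(f"no size found in model ID: {model_id}")


def pick_model(max_size_b: int) -> str:
    """Pick the largest listed model within the size budget."""
    candidates = [m for m in LLAMA_MODELS if parse_size_b(m) <= max_size_b]
    if not candidates:
        raise ValueError(f"no model with at most {max_size_b}B parameters")
    # On equal size, prefer the newer Llama 3.1 release.
    return max(candidates, key=lambda m: (parse_size_b(m), "llama-3.1-" in m))


print(pick_model(10))  # meta-llama/llama-3.1-8b-instruct
print(pick_model(70))  # meta-llama/llama-3.1-70b-instruct
```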

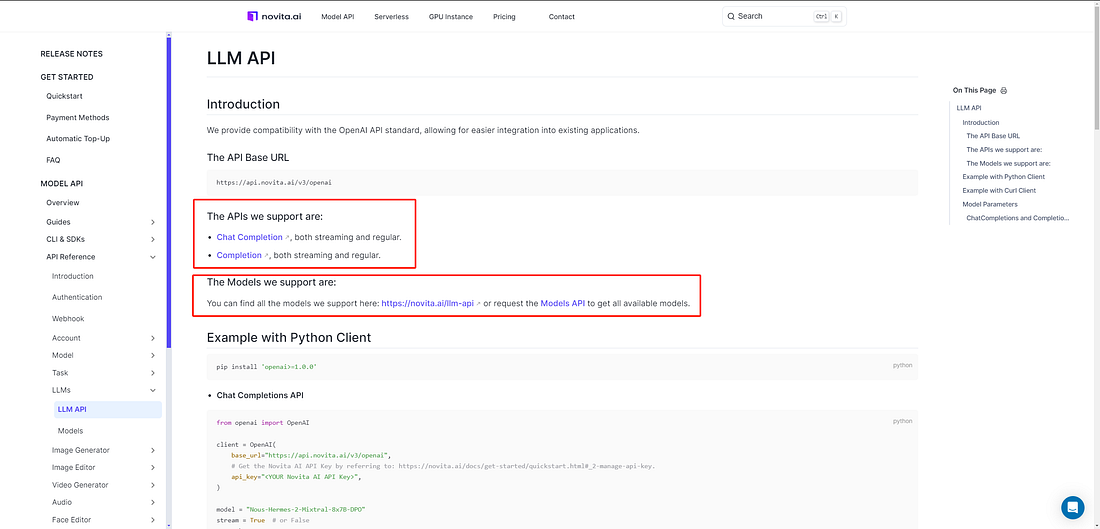

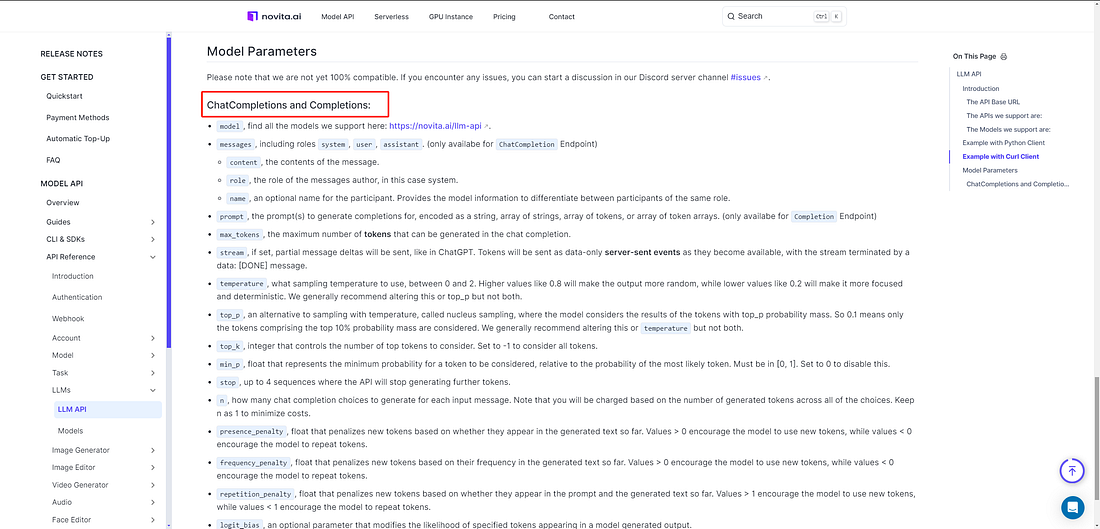

Step 4: Explore the LLM API reference to discover the available APIs and models offered by Novita AI.

Step 5: Choose the model that best fits your needs, then set up your development environment. Configure options such as content, role, name, and prompt to customize your application.

Step 6: Run multiple tests to verify that the API performs consistently and meets your application’s requirements.
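
One possible way to approach the repeated tests in Step 6 is a small harness that calls the API several times and summarizes latency and output stability. The sketch below is provider-agnostic: `send` is any function that takes a prompt and returns the reply text, and `fake_send` is a stand-in used here so the example runs without network access. All names are illustrative.

```python
import statistics
import time
from typing import Callable


def run_consistency_check(send: Callable[[str], str], prompt: str, runs: int = 5) -> dict:
    """Call `send` repeatedly and summarize latency and output stability."""
    if runs < 1:
        raise ValueError("runs must be at least 1")
    latencies = []
    outputs = []
    for _ in range(runs):
        start = time.perf_counter()
        outputs.append(send(prompt))
        latencies.append(time.perf_counter() - start)
    return {
        "runs": runs,
        "mean_latency_s": statistics.mean(latencies),
        "max_latency_s": max(latencies),
        "empty_responses": sum(1 for o in outputs if not o.strip()),
        "distinct_outputs": len(set(outputs)),
    }


def fake_send(prompt: str) -> str:
    """Stand-in for a real API call; replace with your own client code."""
    return f"echo: {prompt}"


report = run_consistency_check(fake_send, "Hi there!", runs=3)
print(report)
```

With a real client, a high `distinct_outputs` count is expected at non-zero temperature, while `empty_responses` and `max_latency_s` are the figures to watch.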

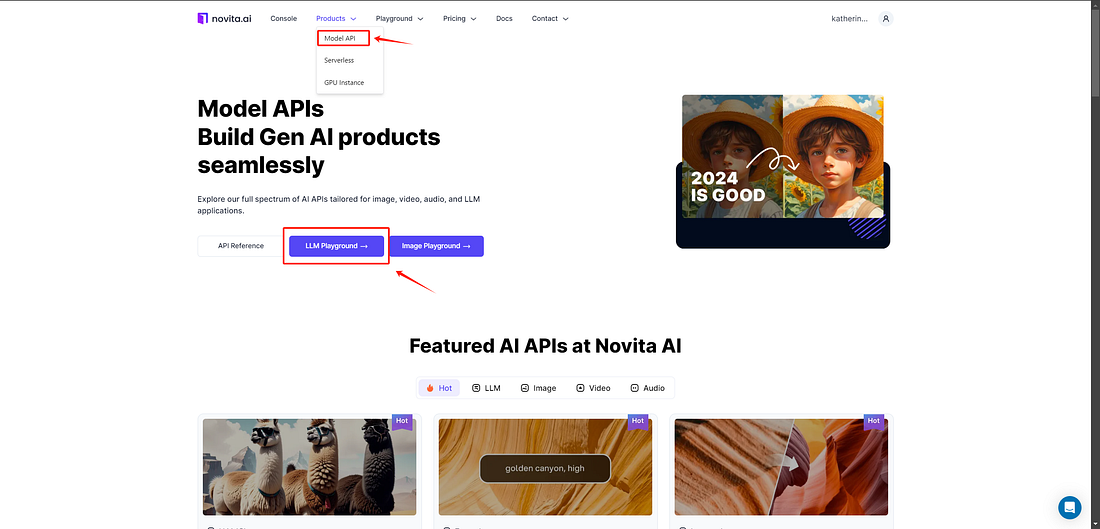

Before deploying the Llama 3 API on Novita AI’s LLM API, you can first try it out in the LLM Playground. We offer developers free usage credits to experiment with the platform. If you have any suggestions, feel free to share them on Discord. Now, let me guide you through the steps to get started:

Step 1: Access the Playground by navigating to the Products tab, selecting Model API, and beginning your exploration of the LLM API.

Step 2: Choose a Model by selecting the Llama model that best suits your evaluation needs.

Step 3: Enter Your Prompt by typing it into the input field to generate a response from the selected model.

Example with Python Client

pip install 'openai>=1.0.0'

Chat Completions API:

from openai import OpenAI

client = OpenAI(
    base_url="https://api.novita.ai/v3/openai",
    # Get the Novita AI API Key by referring to: https://novita.ai/docs/get-started/quickstart.html#_2-manage-api-key.
    api_key="<YOUR Novita AI API Key>",
)

# Any model ID from the list in Step 3 can be used here.
model = "meta-llama/llama-3-8b-instruct"
stream = True  # or False
max_tokens = 512

chat_completion_res = client.chat.completions.create(
    model=model,
    messages=[
        {
            "role": "system",
            "content": "Act like you are a helpful assistant.",
        },
        {
            "role": "user",
            "content": "Hi there!",
        },
    ],
    stream=stream,
    max_tokens=max_tokens,
)

if stream:
    # Streamed replies arrive in chunks; print each piece as it comes in.
    for chunk in chat_completion_res:
        print(chunk.choices[0].delta.content or "", end="")
else:
    print(chat_completion_res.choices[0].message.content)

Completions API:

from openai import OpenAI

client = OpenAI(
    base_url="https://api.novita.ai/v3/openai",
    # Get the Novita AI API Key by referring to: https://novita.ai/docs/get-started/quickstart.html#_2-manage-api-key
    api_key="<YOUR Novita AI API Key>",
)

# Any model ID from the list in Step 3 can be used here.
model = "meta-llama/llama-3-8b-instruct"
stream = True  # or False
max_tokens = 512

completion_res = client.completions.create(
    model=model,
    prompt="A chat between a curious user and an artificial intelligence assistant.\nYou are a cooking assistant.\nBe edgy in your cooking ideas.\nUSER: How do I make pasta?\nASSISTANT: First, boil water. Then, add pasta to the boiling water. Cook for 8-10 minutes or until al dente. Drain and serve!\nUSER: How do I make it better?\nASSISTANT:",
    stream=stream,
    max_tokens=max_tokens,
)

if stream:
    # Streamed replies arrive in chunks; print each piece as it comes in.
    for chunk in completion_res:
        print(chunk.choices[0].text or "", end="")
else:
    print(completion_res.choices[0].text)

Llama 3 API pricing

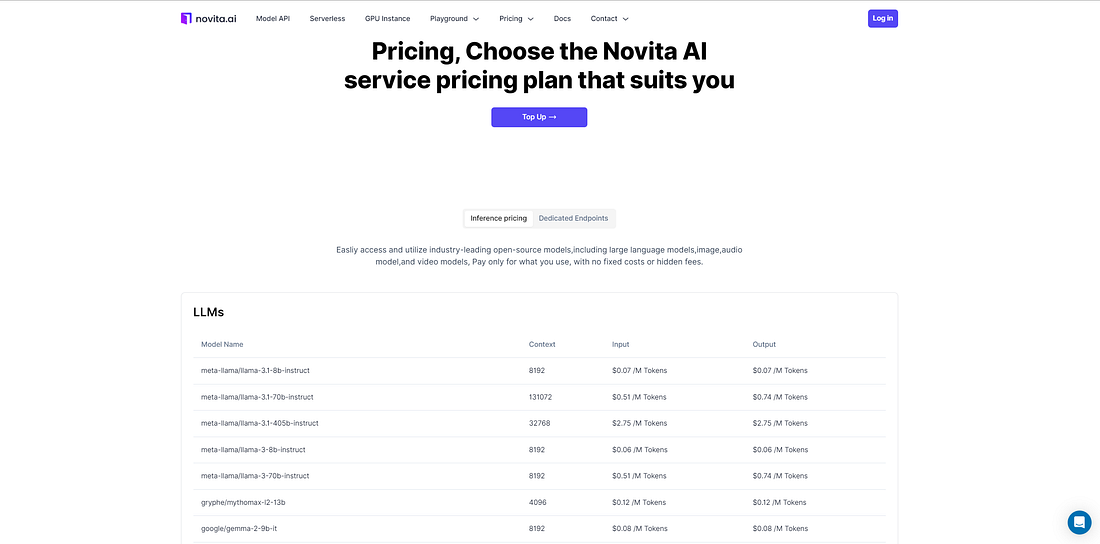

With Novita AI, you can easily access and utilize industry-leading open-source models, including large language models, as well as image, audio, and video models. Pay only for what you use, with no fixed costs or hidden fees. Select the Novita AI pricing plan that best suits your needs.

Conclusion

Meta’s Llama 3 model represents a significant advancement in large language models, enhancing dialogue and reasoning abilities. Integrated via APIs, this model improves user experiences across various applications. Novita AI stands out as a key API provider, offering the Llama 3 API along with essential tools for effective AI development. Platforms like Novita AI are crucial in meeting the growing demand for advanced AI solutions and empowering developers to innovate in this space.

Frequently Asked Questions

How Does Llama 3 Improve Application Performance?

Llama 3 improves on Llama 2 with stronger reasoning, a larger context window, and instruction-tuned variants optimized for dialogue, so applications built on it can handle longer inputs and more complex requests.

Can Llama 3 API Integrate with Any Application?

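The Llama 3 API exposes Llama 3 models through a standard LLM API. On Novita AI it is reached through an OpenAI-compatible endpoint, so any application that can send HTTPS requests with a Bearer API key can call it. Some customization of prompts and parameters may be required for optimal integration.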

How to access Llama 3 for free?

If you prefer a local setup, tools like Ollama facilitate the deployment of Llama 3 models on your local machine, enabling free use for personal projects.
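
For a sense of what the local route looks like, here is a minimal sketch that talks to Ollama’s local REST API using only the standard library. It assumes Ollama is running on its default port (11434) and that the `llama3` model has already been pulled. The environment variable `RUN_OLLAMA_EXAMPLE` is a hypothetical switch added here so the script only contacts the server when asked to.

```python
import json
import os
import urllib.request

OLLAMA_URL = "http://localhost:11434/api/generate"  # Ollama's default local endpoint


def build_ollama_request(prompt: str, model: str = "llama3") -> urllib.request.Request:
    """Create a non-streaming generate request for a local Ollama server."""
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        OLLAMA_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def generate(prompt: str, model: str = "llama3") -> str:
    """Send the request and return the generated text."""
    with urllib.request.urlopen(build_ollama_request(prompt, model)) as resp:
        return json.loads(resp.read())["response"]


# Only contact the server when explicitly requested (hypothetical opt-in switch).
if os.environ.get("RUN_OLLAMA_EXAMPLE") == "1":
    print(generate("Why is the sky blue?"))
```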

What is the difference between Llama 3.1 and 3?

Llama 3.1 outperforms Llama 3 in math and reasoning abilities. For instance, in a Meta tech blog, Llama 3.1 (8B) scores 73.0 on MMLU (0-shot, CoT), surpassing Llama 3 (8B)’s score of 68.4 on MMLU (5-shot). The two results use different prompting setups, so the gap is indicative rather than exact.

Originally published at Novita AI
