Effective AI LLM Test Prompts: Guide For Developers

Enhance your AI LLM test prompts with our developer's guide. Discover effective strategies to improve your testing process.

Key Highlights

- Purpose of Test Prompts: Essential for evaluating the performance, safety, and reliability of large language models (LLMs).

- Crafting Effective Prompts: Focus on clarity, relevance, and specificity to elicit accurate and useful responses from AI models.

- Advanced Techniques: Leverage Natural Language Processing (NLP) and ensure contextual relevance in test prompts.

- LLM API Benefits: Improve testing efficiency by using an LLM API platform such as Novita AI to interact with multiple models through a single interface.

- Practical Examples: Use real-world scenarios to test LLM capabilities, including summarization, calculation, and creative writing tasks.

- Common Challenges: Address issues such as ambiguity and bias, and ensure diverse test scenarios.

Introduction

Large language models (LLMs) are revolutionizing AI with their ability to generate content and tackle complex tasks. As these models evolve, ensuring their accuracy, reliability, and safety becomes crucial. AI LLM test prompts guide models to create specific outputs for evaluation, highlighting strengths and weaknesses in comprehension, logic, and creativity. Effective prompts contribute to developing robust and ethical AI systems. Explore their secrets in our blog!

Understanding AI LLM Test Prompts

In AI and natural language processing, test prompts direct large language models to generate specific outputs. These specialized questions evaluate the capabilities and constraints of AI models. Effective test prompts push the model’s comprehension, logic, and creativity to demonstrate strengths and areas for enhancement.

Definition and Importance of Test Prompts in AI

Test prompts are crucial in evaluating the performance of AI, particularly large language models. These specific instructions help developers assess the model’s understanding and response to different tasks, highlighting strengths in areas like accuracy, fluency, and coherence, and exposing weaknesses such as bias.

By using test prompts, developers can identify areas for improvement and enhance the model’s reliability. Additionally, test prompts play a vital role in ensuring responsible AI use by testing for biases, harmful outputs, and unexpected issues to mitigate ethical risks and uphold human values.

Key Features of Effective Test Prompts

Effective test prompts are clear, relevant, and structured to elicit useful responses from the AI model. They should be:

- Clear and Simple: Make sure your prompts are easy to understand. The AI model should know exactly what you are asking.

- Relevant to the Task: The prompt must fit the purpose and goals of the AI model.

- Made to Get Clear Responses: Set up your prompts so the AI can give you well-organized and logical answers.

Why Evaluate AI Models

Continuous evaluation is vital for safe AI development, particularly with large evolving language models. Regular tests ensure performance standards are met and prevent unexpected biases or behaviors. Evaluating models reveals strengths and weaknesses, such as unique text generation or factual summarization. Thorough testing enhances our understanding of how AI models adapt to new data and different scenarios, fostering trust in AI and maximizing its benefits.

Crafting Compelling Test Prompts for AI LLMs

Creating effective test prompts involves understanding how large language models operate and anticipating potential errors. The goal is to push the model’s capabilities by designing challenging prompts that reveal its strengths and weaknesses. Employing best practices and incorporating real-world examples can enhance the development of AI language models significantly.

Best Practices for Developing Test Prompts

The process of making good prompts is not a one-time task. It takes careful planning, execution, and improvement. Follow these best practices to make sure your prompts give helpful insights:

- Start with a Clear Objective: Know what you want to test and which parts of the LLM to check.

- Use Diverse and Representative Data: Avoid bias. Use a wide range of data points and scenarios.

- Establish a Baseline for Comparison: Test your prompts with different LLMs or various versions of the same LLM to set a standard for performance.
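
As a rough illustration of the baseline idea, the Python sketch below compares per-prompt scores from a baseline run against a candidate run. The prompt names, the 1-5 scores, and the function name `compare_to_baseline` are all hypothetical; real scores would come from your own grading.

```python
from statistics import mean


def compare_to_baseline(baseline_scores, candidate_scores):
    """Return per-prompt deltas (candidate minus baseline) and their mean."""
    # Only compare prompts that were scored in both runs.
    shared = sorted(set(baseline_scores) & set(candidate_scores))
    deltas = {name: candidate_scores[name] - baseline_scores[name] for name in shared}
    average = mean(list(deltas.values())) if deltas else 0.0
    return deltas, average


# Hypothetical 1-5 scores for the same prompts on two models or versions.
baseline = {"summarization": 3, "multiplication": 5, "capital": 5}
candidate = {"summarization": 4, "multiplication": 5, "capital": 4}

deltas, average = compare_to_baseline(baseline, candidate)
for name, delta in deltas.items():
    print(f"{name}: {delta:+d}")
print(f"mean change: {average:+.2f}")
```

A positive delta means the candidate scored higher than the baseline on that prompt. Prompts scored in only one of the two runs are skipped instead of being treated as zero.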

For a detailed prompt guide, you can watch this YouTube video.

Examples of Successful AI LLM Test Prompts

To show how to create good prompts, let’s look at some examples that work well for different LLMs:

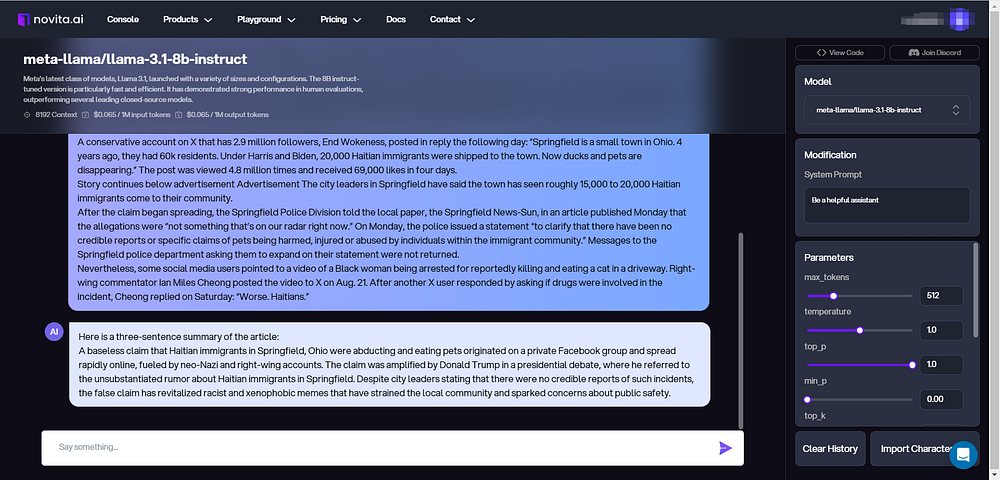

Summarization Task

- Prompt: Summarize the main points of the following article in three sentences. (Provide a news article)

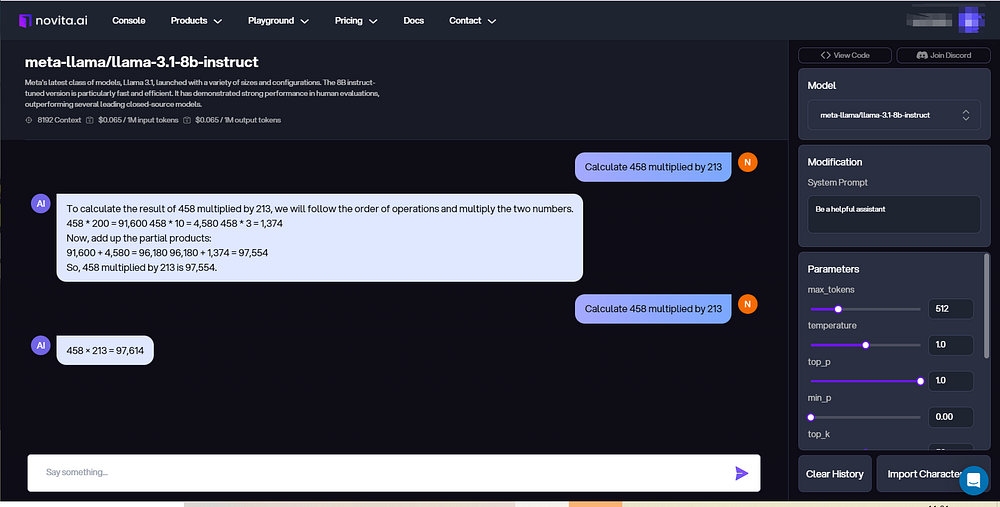

Speed Evaluation

- Prompt: Calculate 458 multiplied by 213.

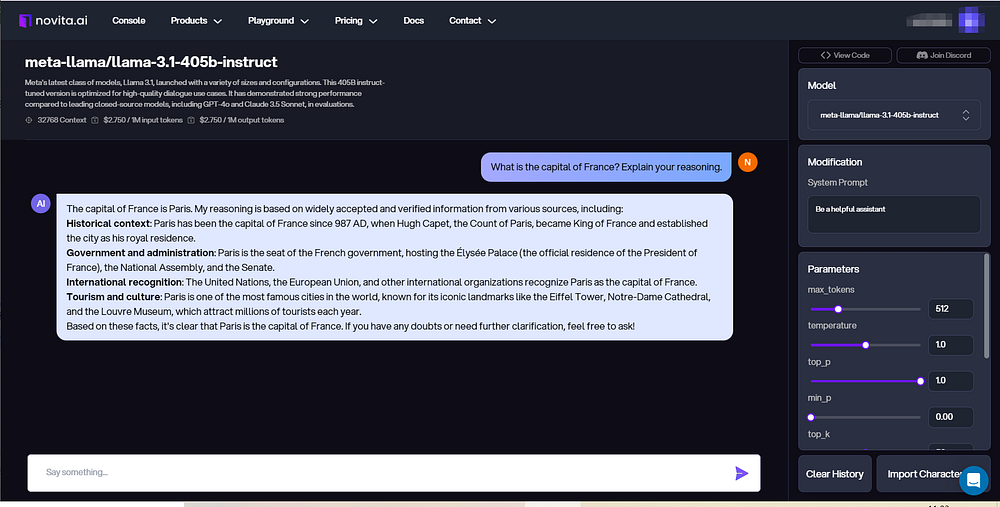

Question Answering Task

- Prompt: What is the capital of France? Explain your reasoning.

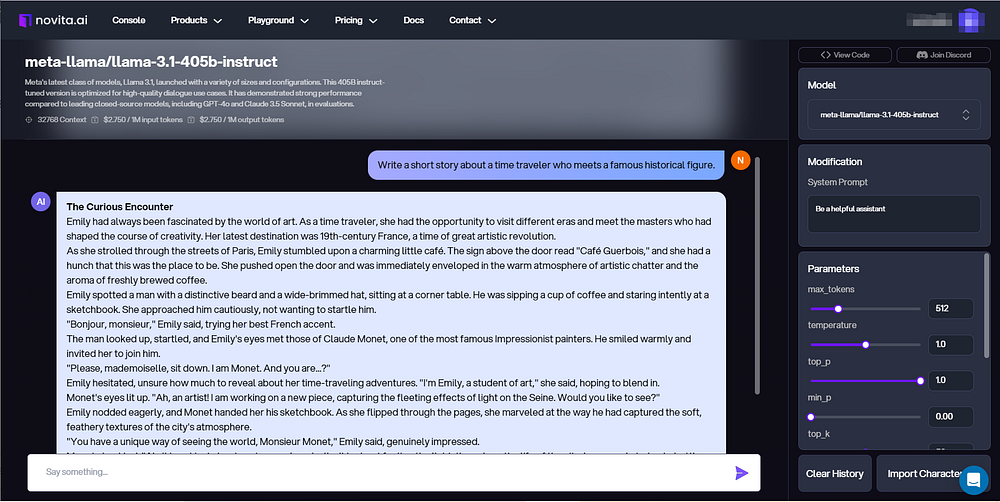

Creative Content Generation

- Prompt: Write a short story about a time traveler who meets a famous historical figure.

In the examples above, we use two models to test prompt performance. Llama 3.1 8B is a lightweight, ultra-fast model that you can run anywhere and is well suited to quick, simple reasoning tasks. Llama 3.1 405B is an advanced model that powers a broad range of applications and excels at complex and creative tasks. If you are interested, try them on the LLM Playground.
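
The four example prompts can also be kept as a small, reusable test suite. The sketch below is one possible layout in Python, not a prescribed format: the `PromptCase` class, the check functions, and the `{article}` placeholder are illustrative names, and the checks are deliberately rough heuristics. Creative writing has no automatic check and is flagged for human review.

```python
import re
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class PromptCase:
    name: str
    prompt: str
    # Optional automatic check; None means the response needs human review.
    check: Optional[Callable[[str], bool]] = None


def has_three_sentences(text: str) -> bool:
    # Rough heuristic: split on ., !, ? and count the non-empty pieces.
    pieces = [p for p in re.split(r"[.!?]+", text) if p.strip()]
    return len(pieces) == 3


def has_correct_product(text: str) -> bool:
    # Compute the expected value rather than hard-coding it,
    # and ignore thousands separators in the response.
    return str(458 * 213) in text.replace(",", "")


CASES = [
    PromptCase(
        "summarization",
        "Summarize the main points of the following article in three sentences.\n\n{article}",
        has_three_sentences,
    ),
    PromptCase("multiplication", "Calculate 458 multiplied by 213.", has_correct_product),
    PromptCase(
        "capital",
        "What is the capital of France? Explain your reasoning.",
        lambda text: "paris" in text.lower(),
    ),
    PromptCase(
        "story",
        "Write a short story about a time traveler who meets a famous historical figure.",
    ),
]


def grade(case: PromptCase, response: str) -> str:
    if case.check is None:
        return "needs review"
    return "pass" if case.check(response) else "fail"
```

The sentence counter miscounts abbreviations and decimals, so treat a "fail" on the summarization case as a prompt to look at the response, not as a verdict.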

Evaluating the Effectiveness of Your Test Prompts

Evaluating test prompts is crucial. It’s not just about creating them but also checking if they effectively gauge the LLM’s performance. Assess the results for quality, biases, and consistency to ensure the prompts align with the LLM’s purpose.

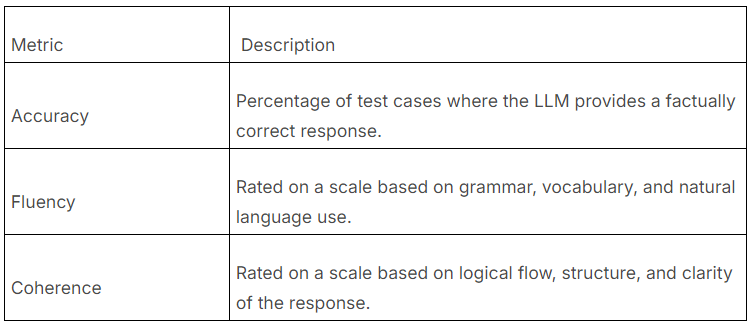

Metrics for Assessing Test Prompt Performance

Assessing test prompt performance requires utilizing appropriate metrics that quantify different aspects of the LLM’s response. Several metrics can be used, each providing unique insights into the model’s capabilities:

- Accuracy: Measures how often the LLM provides a correct or factually accurate response.

- Fluency: Evaluates the grammatical correctness and naturalness of the generated text.

- Coherence: Assesses the logical flow and organization of the LLM’s response.

Here’s an example of how these metrics can be organized:
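
As a minimal sketch in Python, the three metrics could be recorded per prompt in a small scorecard. The 1-5 scale, the `Scorecard` class, and the scores below are illustrative assumptions, not results from the evaluations above.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class Scorecard:
    prompt_name: str
    accuracy: int   # 1 (poor) to 5 (excellent), assigned by a reviewer
    fluency: int
    coherence: int

    def overall(self) -> float:
        # Unweighted average of the three metrics.
        return float(mean([self.accuracy, self.fluency, self.coherence]))


# Made-up scores, purely to show the layout.
cards = [
    Scorecard("summarization", accuracy=4, fluency=5, coherence=4),
    Scorecard("multiplication", accuracy=5, fluency=5, coherence=5),
]

print(f"{'Prompt':<16}{'Accuracy':>9}{'Fluency':>9}{'Coherence':>11}{'Overall':>9}")
for card in cards:
    print(
        f"{card.prompt_name:<16}{card.accuracy:>9}{card.fluency:>9}"
        f"{card.coherence:>11}{card.overall():>9.2f}"
    )
```

An unweighted average is the simplest choice; if accuracy matters more than fluency for your use case, a weighted average may be a better fit.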

Analyzing Test Results to Improve Prompts

Analyzing prompt test results is a systematic process. Study the LLM’s outputs to identify patterns and areas for improvement. Compare its performance across various test cases to highlight strengths and weaknesses. Understanding the LLM’s behavior helps you refine your prompts, which in turn makes them more effective at improving the AI model overall.

The Role of LLM API in Enhancing Test Prompt Efficiency

LLM APIs are useful tools. They simplify the testing process by offering a standard way to work with different large language models. You do not need to set up separate connections for each model. As a result, prompt testing becomes much more efficient. AI service platforms like Novita AI offer useful features through LLM APIs, such as version control, batch processing, and access to pre-trained models.

Benefits of Using LLM API for Test Prompts

Integrating an LLM API into your testing process can make things easier and more efficient. Here are some key benefits:

- Easy Setup: You can use several LLMs with one interface. This means you won’t need to do lots of separate integrations.

- One Place to Manage: You can control prompts, track test cases, and review results all in one area. This helps keep everything organized.

- Growth and Automation: You can easily expand your testing work and automate repeat tasks. This will save you time and effort.

Integrating Novita AI LLM API into Your AI Testing

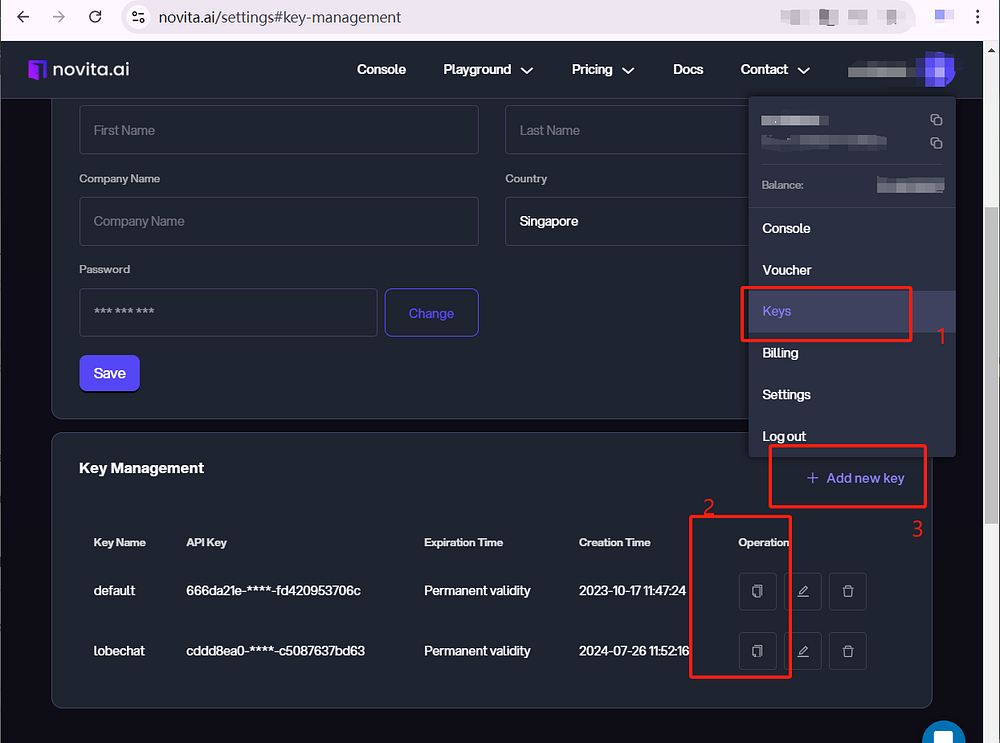

Step 1. Obtain API Key: Sign up with Novita AI and go to the Novita AI Dashboard, where you can click Copy to copy an existing key or Add new key to create one. The key is used to authenticate your requests.

Step 2. Install Required Libraries: Make sure you have the necessary libraries for making requests. For Python, you might use requests or httpx. Install them via pip if needed.

Step 3. Set Up Your Environment: Create a configuration file or environment variables to securely store your API key.
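
One common way to do this in Python is to read the key from an environment variable. The sketch below assumes a variable named `NOVITA_API_KEY`; that name and the `load_api_key` helper are our own choices, not something the platform requires.

```python
import os


def load_api_key(env_var: str = "NOVITA_API_KEY") -> str:
    """Read the API key from the environment instead of hard-coding it."""
    key = os.environ.get(env_var, "").strip()
    if not key:
        raise RuntimeError(f"Set the {env_var} environment variable first.")
    return key
```

Failing early with a clear message is easier to debug than an authentication error that only appears on the first request.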

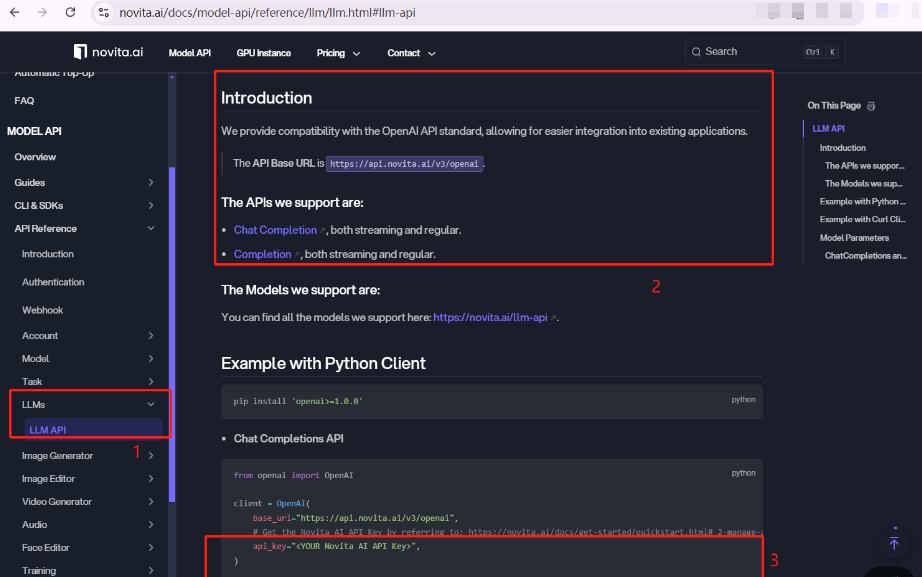

Step 4. Send API Request: Open the Novita AI documentation and find the LLM API reference. Then use your API key to make requests to the Novita LLM API.

Step 5. Adjust Parameters: If needed, adjust parameters such as max_tokens, temperature, or other API settings to fine-tune the responses.
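
Steps 4 and 5 might look roughly like the sketch below. Several details are assumptions to verify against the Novita AI documentation: the endpoint URL, the model identifier, and the OpenAI-style request and response shape. To stay dependency-free it uses the standard library's `urllib.request` in place of `requests` or `httpx`; the function names are hypothetical.

```python
import json
import urllib.request

# Assumed values: confirm both in the LLM API reference before use.
API_URL = "https://api.novita.ai/v3/openai/chat/completions"
DEFAULT_MODEL = "meta-llama/llama-3.1-8b-instruct"


def build_payload(prompt, model=DEFAULT_MODEL, max_tokens=256, temperature=0.2):
    """Build a chat-style request body with the tunable parameters from Step 5."""
    return {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "max_tokens": max_tokens,      # upper bound on response length
        "temperature": temperature,    # lower values give more repeatable output
    }


def send_prompt(prompt, api_key, **params):
    """POST the prompt and return the text of the first choice."""
    body = json.dumps(build_payload(prompt, **params)).encode("utf-8")
    request = urllib.request.Request(
        API_URL,
        data=body,
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )
    with urllib.request.urlopen(request, timeout=30) as response:
        data = json.load(response)
    # Assumes an OpenAI-style response: choices[0].message.content
    return data["choices"][0]["message"]["content"]
```

A low temperature is a reasonable default for testing because it makes repeated runs of the same prompt easier to compare.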

Step 6. Run Test Prompts: Define test prompts for evaluating the LLM. Create a list of scenarios to test. Send prompts to test the Novita API and gather responses.
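
A test run can be as simple as a loop over named prompts. The sketch below takes the function that talks to the model as an argument (called `ask` here, a hypothetical name), so the loop can be tried offline with a stub and later pointed at a real API call. It also records how long each call takes.

```python
import time


def run_prompts(prompts, ask):
    """Send each prompt through `ask` and record the response and latency."""
    results = []
    for name, prompt in prompts.items():
        started = time.perf_counter()
        response = ask(prompt)
        elapsed = time.perf_counter() - started
        results.append(
            {"name": name, "prompt": prompt, "response": response, "seconds": elapsed}
        )
    return results


def stub_ask(prompt):
    # Stand-in for a real API call so the loop can be tried offline.
    return f"stub reply to: {prompt}"


prompts = {
    "multiplication": "Calculate 458 multiplied by 213.",
    "capital": "What is the capital of France? Explain your reasoning.",
}
results = run_prompts(prompts, stub_ask)
for row in results:
    print(f"{row['name']}: {row['seconds']:.4f}s -> {row['response']}")
```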

Step 7. Evaluate Responses: Analyze the responses from the API. Check for relevance, coherence, and accuracy of the answers based on your test prompts.

Step 8. Handle Errors: Implement error handling to manage API failures or unexpected responses.
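
One common pattern for this step is to retry transient failures with exponential backoff. The sketch below is a generic illustration, not Novita-specific guidance: the `call_with_retries` helper, the retry count, and the choice to retry on HTTP 429 and 5xx responses are assumptions you should adapt to the API's documented behavior.

```python
import time
import urllib.error


def call_with_retries(call, retries=3, base_delay=1.0, sleep=time.sleep):
    """Run `call()`, retrying transient network or server errors with backoff."""
    last_error = None
    for attempt in range(retries):
        try:
            return call()
        except urllib.error.HTTPError as err:
            # Client errors other than 429 (rate limit) will not succeed on retry.
            if err.code != 429 and err.code < 500:
                raise
            last_error = err
        except (urllib.error.URLError, TimeoutError) as err:
            last_error = err
        if attempt < retries - 1:
            sleep(base_delay * 2 ** attempt)  # waits 1s, 2s, 4s with the defaults
    raise RuntimeError(f"request failed after {retries} attempts") from last_error
```

The `sleep` parameter exists so that tests can pass a no-op and run instantly. Unexpected response bodies, such as a missing field, are a separate concern and are best checked where the response is parsed.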

You can also try it on our LLM playground. Here is a simple guide.

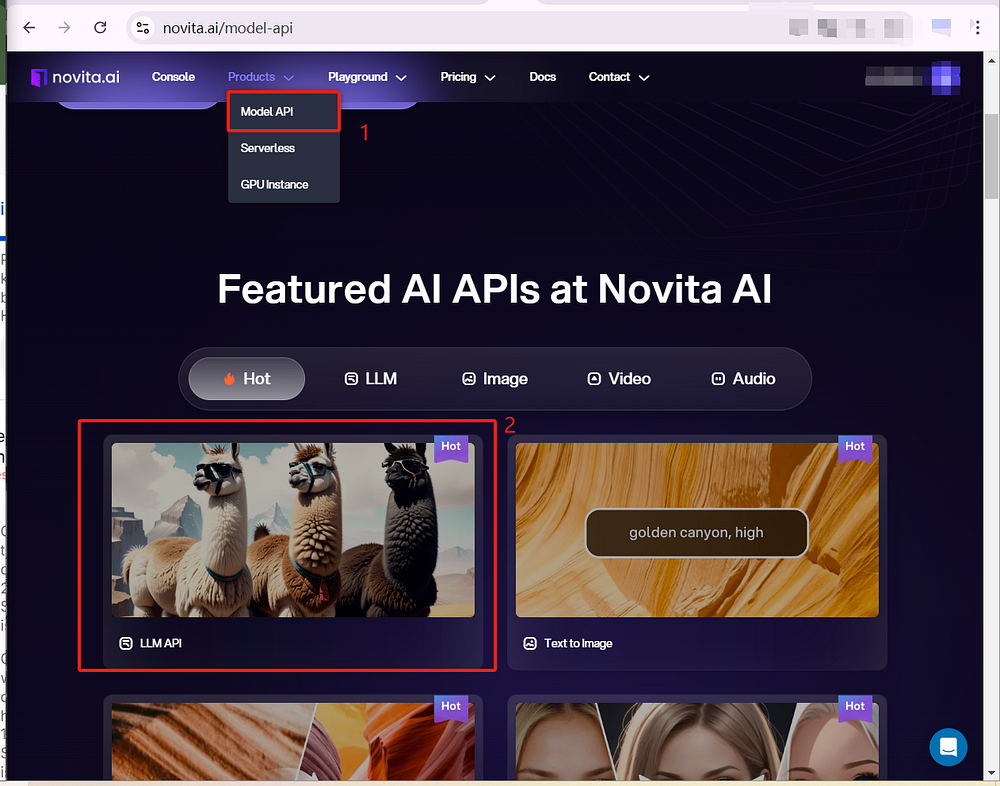

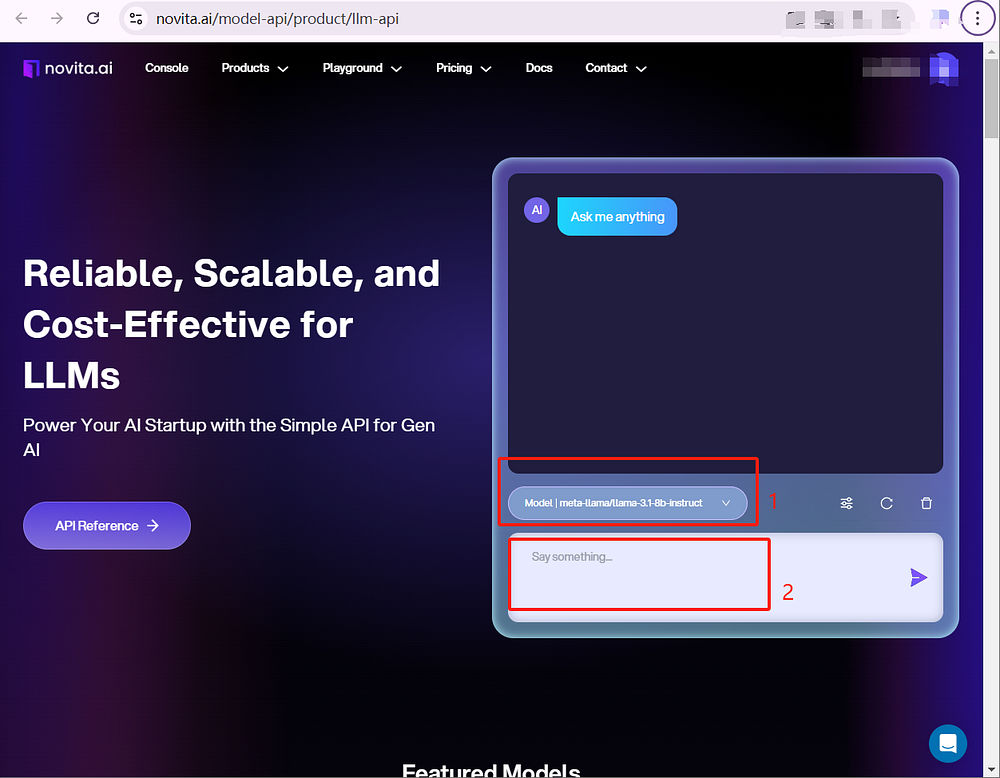

Step 1. Access the Playground: Go to Model API under the Products tab. Choose LLM API to start trying those models.

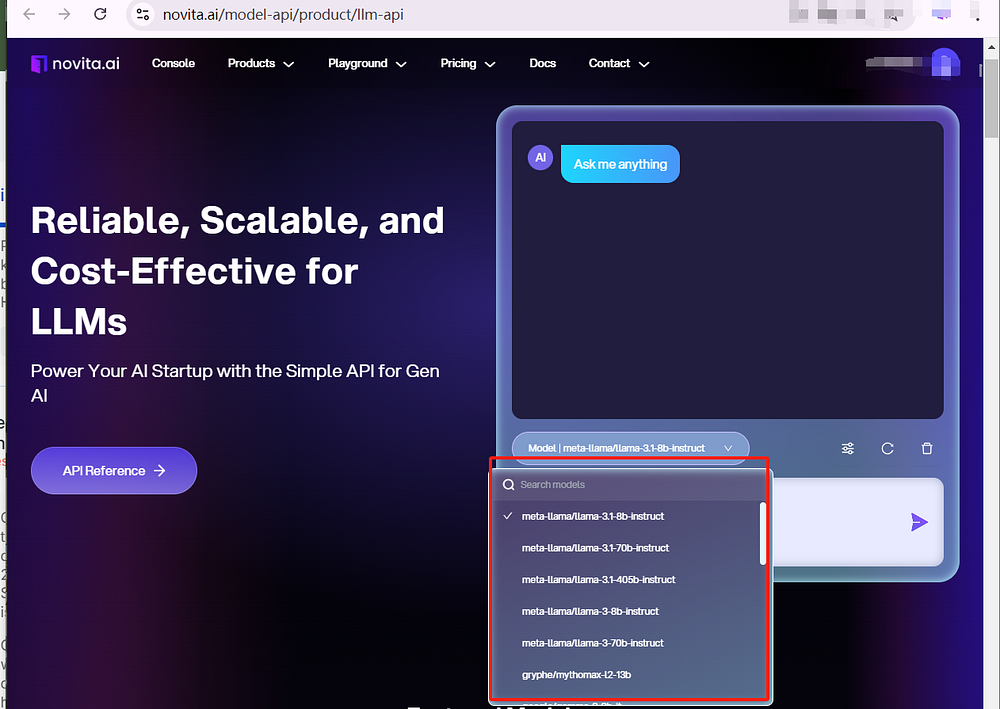

Step 2. Select Different Models: Choose the model you want to test from the available options, such as the Llama 3.1 family.

Step 3. Enter Your Prompt: In the designated input field, type the prompt you want to test. This is where you provide the text or question you want the model to respond to.

Overcoming Common Challenges in AI LLM Test Prompt Creation

Creating effective test prompts for LLMs presents challenges such as ambiguity, bias, and a lack of inclusivity. To address these issues, we must blend technical expertise with ethical considerations in AI development. Confronting these challenges head-on leads to the creation of robust, equitable, and dependable AI models.

Addressing Ambiguity in Test Prompts

Ambiguous test prompts lead to inaccurate results, because the LLM may answer a different question from the one you intended. To reduce ambiguity, use precise language, state the expected output explicitly, and provide examples so the LLM understands the desired format and style.
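
To make the contrast concrete, the Python sketch below builds a prompt from an explicit instruction plus a couple of worked examples and sets it beside a vague one. The sentiment task, the example reviews, and the `build_prompt` helper are invented for illustration.

```python
def build_prompt(instruction, examples, query):
    """Combine an explicit instruction, worked examples, and the new input."""
    lines = [instruction, ""]
    for example_input, example_output in examples:
        lines += [f"Input: {example_input}", f"Output: {example_output}", ""]
    lines += [f"Input: {query}", "Output:"]
    return "\n".join(lines)


# Vague: the model has to guess the task, the format, and the length.
vague = "Tell me about this review."

# Precise: the task, the allowed answers, and the format are all spelled out.
precise = build_prompt(
    "Classify the sentiment of each product review as exactly one word: "
    "positive, negative, or neutral.",
    [
        ("The battery lasts all day.", "positive"),
        ("It broke after a week.", "negative"),
    ],
    "It works, but setup took a while.",
)
print(precise)
```

Ending the prompt with an empty `Output:` line signals where the answer belongs, which tends to make responses easier to check automatically.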

Ensuring Diversity and Inclusivity in Test Scenarios

AI technology must reflect our diverse world for fairness. Testing large language models with diverse datasets is essential to identify and minimize biases. Including various viewpoints and experiences in tests helps create fair, equal, and representative AI models.
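
One simple way to widen coverage is to generate variants of the same prompt that differ only in details such as a name, role, or location, and then compare the responses for unwarranted differences. The Python sketch below shows the idea; the template, the slot values, and the `expand_template` helper are hypothetical examples, not a vetted bias benchmark.

```python
from itertools import product


def expand_template(template, slots):
    """Return one prompt per combination of slot values."""
    keys = list(slots)
    combos = product(*(slots[key] for key in keys))
    return [template.format(**dict(zip(keys, combo))) for combo in combos]


variants = expand_template(
    "Write a short reference letter for {name}, a {role} based in {city}.",
    {
        "name": ["Aisha", "Liam", "Mei"],
        "role": ["nurse", "software engineer"],
        "city": ["Lagos", "Oslo"],
    },
)
print(len(variants), "prompt variants")
print(variants[0])
```

Because the number of variants is the product of the slot sizes, it grows quickly, so a small number of carefully chosen values per slot is usually enough to start.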

Conclusion

In conclusion, good AI LLM test prompts are essential for evaluating and improving how AI models perform. Creating strong test prompts means understanding which features matter, analyzing the results, and drawing on techniques such as natural language processing. Using an LLM API in your testing plan makes prompt testing more efficient. It is also important to address problems like ambiguity and to include different perspectives in your test scenarios. Regularly reviewing your prompts against metrics and refining them will help you create better AI LLM test prompts, which supports the overall evaluation and improvement of AI models.

FAQs

What are the most critical components of an effective AI LLM test prompt?

Crafting an effective prompt for an LLM requires clarity, specificity, coherence, and context to elicit useful answers from the model.

How do I test LLM responses?

Use metrics to evaluate LLM outputs against criteria like response completeness, conciseness, contextual understanding, and text similarity. Test your application by assessing the LLM’s responses to specific inputs.

How can I overcome ambiguity in my AI LLM test prompts?

You should use clear language, set expectations, and provide examples to guide desired output.

What role does contextual relevance play in the success of a test prompt?

Contextual relevance affects how well an AI LLM understands prompts. This directly impacts how accurate and relevant its responses are.

Novita AI is the All-in-one cloud platform that empowers your AI ambitions. Integrated APIs, serverless, GPU Instance — the cost-effective tools you need. Eliminate infrastructure, start free, and make your AI vision a reality.
