Chain-of-Thought Prompting Elicits Reasoning in Large Language Models

This article explores the effectiveness of chain-of-thought prompting in arithmetic, symbolic, and commonsense reasoning tasks, shows how its benefits grow with model scale, and reviews its performance improvements across benchmarks and its potential for length generalization.

Introduction

The NLP field has been transformed by recent advances in language models. Scaling up these models has been shown to bring various benefits, including improved performance and sample efficiency. Nevertheless, scaling alone has not proven sufficient for achieving high performance on demanding tasks such as arithmetic, commonsense reasoning, and symbolic reasoning.

This article looks at how the reasoning capabilities of large language models can be improved through a simple approach built on two ideas. First, generating natural language rationales that spell out the steps leading to a solution is particularly beneficial in arithmetic reasoning tasks. Second, large language models can learn in context from a few examples through prompting. Instead of fine-tuning a separate model for each new task, one provides the model with a few input-output examples illustrating the task, which has shown remarkable success across various simple question-answering tasks.

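What is Chain-of-Thought Prompting?

A chain of thought is a series of intermediate natural language reasoning steps that lead to the final answer. Chain-of-thought prompting has several appealing properties as an approach to enhance reasoning in language models.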

- Firstly, it enables models to break down complex problems into intermediate steps, so that more computation can be allocated to problems that require more reasoning steps.

- Secondly, a chain of thought offers an understandable insight into the model’s behavior, indicating how it may have reached a specific answer and offering opportunities to identify and rectify errors in the reasoning process (although fully understanding a model’s computations supporting an answer remains a challenge).

- Thirdly, chain-of-thought reasoning is applicable to various tasks such as math word problems, commonsense reasoning, and symbolic manipulation, potentially extending to any task solvable by humans through language.

- Finally, chain-of-thought reasoning can be easily incorporated into sufficiently large pre-trained language models by including examples of chain-of-thought sequences in the few-shot prompting exemplars, making it a versatile tool for enhancing model performance.

For a more general introduction to chain-of-thought in LLMs, see our blog post: Unlocking the Potential of Chain-of-Thought Prompting in Large-Scale Language Models

Arithmetic Reasoning

While arithmetic reasoning may seem straightforward for humans, language models often encounter difficulties with it. Remarkably, when applied to a 540-billion-parameter language model, chain-of-thought prompting yields comparable performance to task-specific fine-tuned models across multiple tasks. It even achieves a new state of the art on the challenging GSM8K benchmark.

Experimental Setup

Benchmarks

We evaluate chain-of-thought prompting across various language models on five math word problem benchmarks: GSM8K, SVAMP, ASDiv, AQuA, and MAWPS, each offering distinct challenges in math word problem solving. We provide example problems in Appendix Table 12 for reference.

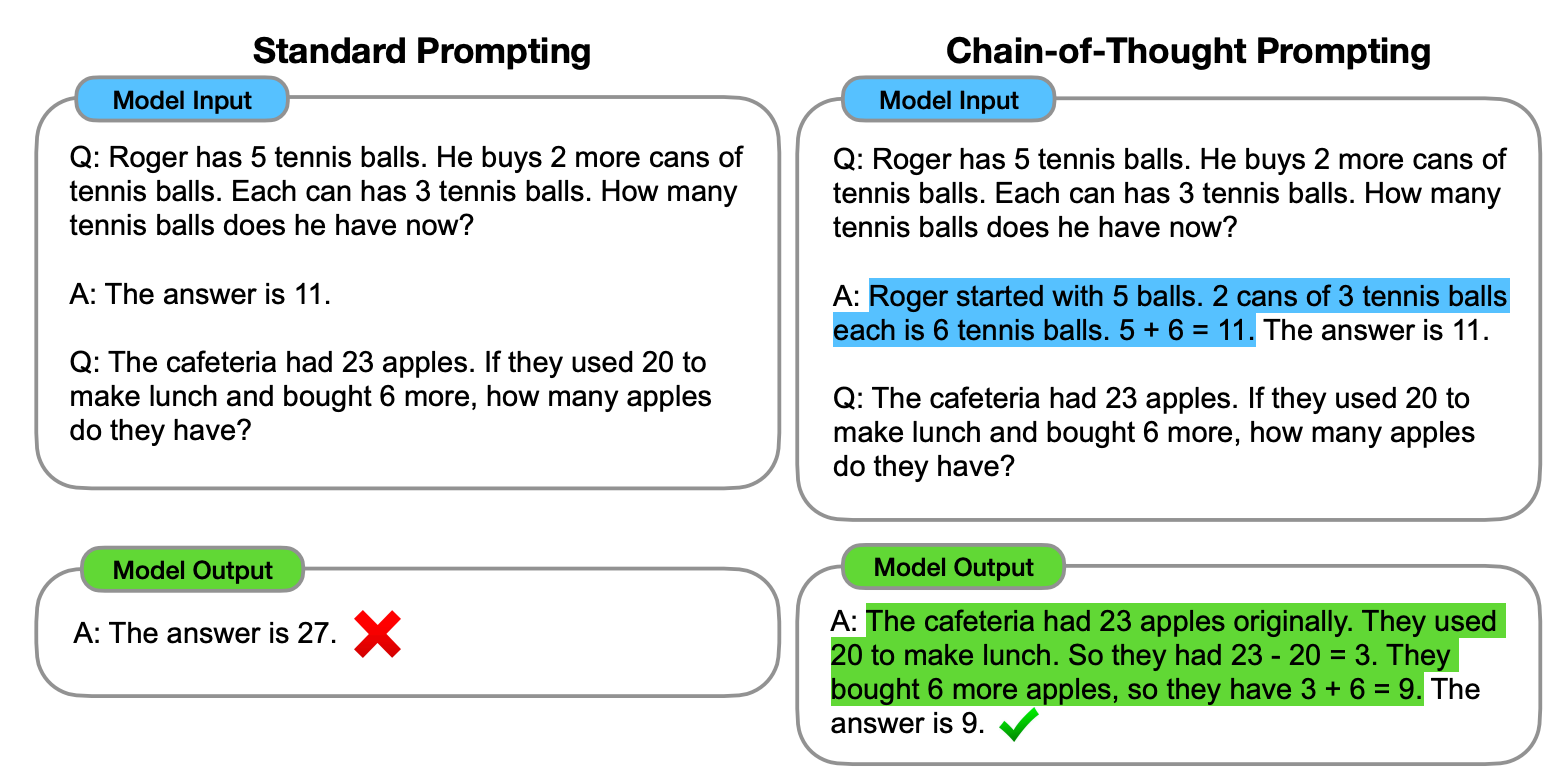

Standard prompting

For our baseline comparison, we employ the widely used standard few-shot prompting technique. This method involves presenting the language model with in-context examples of input-output pairs before making predictions on test-time examples. These exemplars are structured as questions and answers, with the model directly outputting the answer.

Chain-of-thought prompting

In contrast, our proposed approach, chain-of-thought prompting, augments each exemplar in few-shot prompting with a chain of thought that leads to the associated answer. Given that most datasets only provide an evaluation split, we manually create a set of eight few-shot exemplars with chains of thought for prompting. One such chain of thought exemplar is illustrated in Figure 1, and the complete set is available in Appendix Table 20. It’s important to note that these exemplars underwent no prompt engineering; we explore their robustness in Section 3.4 and Appendix A.2.
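The difference between the two prompt formats can be sketched in a few lines of Python. This is a minimal illustration, not the exact prompt construction used in the experiments: the `Exemplar` class, the function names, and the `Q:`/`A:` layout are assumptions made for this sketch, and only one exemplar (the tennis ball problem from Figure 1 of the paper) is included instead of the full set of eight.

```python
from dataclasses import dataclass


@dataclass
class Exemplar:
    question: str
    rationale: str  # the chain of thought, written in natural language
    answer: str


def format_exemplar(ex: Exemplar, use_cot: bool) -> str:
    """Render one exemplar, with or without its chain of thought."""
    if use_cot:
        return f"Q: {ex.question}\nA: {ex.rationale} The answer is {ex.answer}."
    return f"Q: {ex.question}\nA: The answer is {ex.answer}."


def build_prompt(exemplars: list[Exemplar], test_question: str, use_cot: bool) -> str:
    """Concatenate the exemplars and append the unanswered test question."""
    blocks = [format_exemplar(ex, use_cot) for ex in exemplars]
    blocks.append(f"Q: {test_question}\nA:")
    return "\n\n".join(blocks)


# A single illustrative exemplar; the real prompt would contain eight.
EXEMPLARS = [
    Exemplar(
        question=(
            "Roger has 5 tennis balls. He buys 2 more cans of tennis balls. "
            "Each can has 3 tennis balls. How many tennis balls does he have now?"
        ),
        rationale=(
            "Roger started with 5 balls. 2 cans of 3 tennis balls each is "
            "6 tennis balls. 5 + 6 = 11."
        ),
        answer="11",
    )
]

TEST_QUESTION = (
    "The cafeteria had 23 apples. If they used 20 to make lunch and "
    "bought 6 more, how many apples do they have?"
)

standard_prompt = build_prompt(EXEMPLARS, TEST_QUESTION, use_cot=False)
cot_prompt = build_prompt(EXEMPLARS, TEST_QUESTION, use_cot=True)
```

The only difference between `standard_prompt` and `cot_prompt` is the rationale placed before the final answer in each exemplar; the test question is appended in the same way in both cases.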

Our aim is to investigate whether this form of chain-of-thought prompting can effectively stimulate successful reasoning across a diverse range of math word problem scenarios.

Language models

We assess five families of large language models. The first is GPT-3, for which we use the text-ada-001, text-babbage-001, text-curie-001, and text-davinci-002 variants, which presumably correspond to InstructGPT models of 350M, 1.3B, 6.7B, and 175B parameters, respectively. The second is LaMDA, available in versions with 422M, 2B, 8B, 68B, and 137B parameters. The third is PaLM, with 8B, 62B, and 540B parameters. The fourth is UL2 20B, and the fifth is Codex.

We sample from these models using greedy decoding, although subsequent research suggests that chain-of-thought prompting can be refined by aggregating the majority final answer over multiple sampled generations. For LaMDA, we present averaged results across five random seeds, with each seed employing a different randomly shuffled order of exemplars. Since the experiments with LaMDA didn’t exhibit significant variance across different seeds, to optimize computational resources, we report results based on a single exemplar order for all other models.

Results

Chain-of-thought prompting enables large language models to tackle difficult math word problems. Notably, this ability is emergent with scale: chain-of-thought prompting does not help small models and yields gains only once models reach roughly 100B parameters.

Commonsense Reasoning

While chain of thought methodology is particularly effective for addressing math word problems, its language-based approach makes it applicable to a wide array of commonsense reasoning tasks. Commonsense reasoning involves understanding physical and human interactions based on general background knowledge, a skill still challenging for current natural language understanding systems (Talmor et al., 2021).

Benchmarks

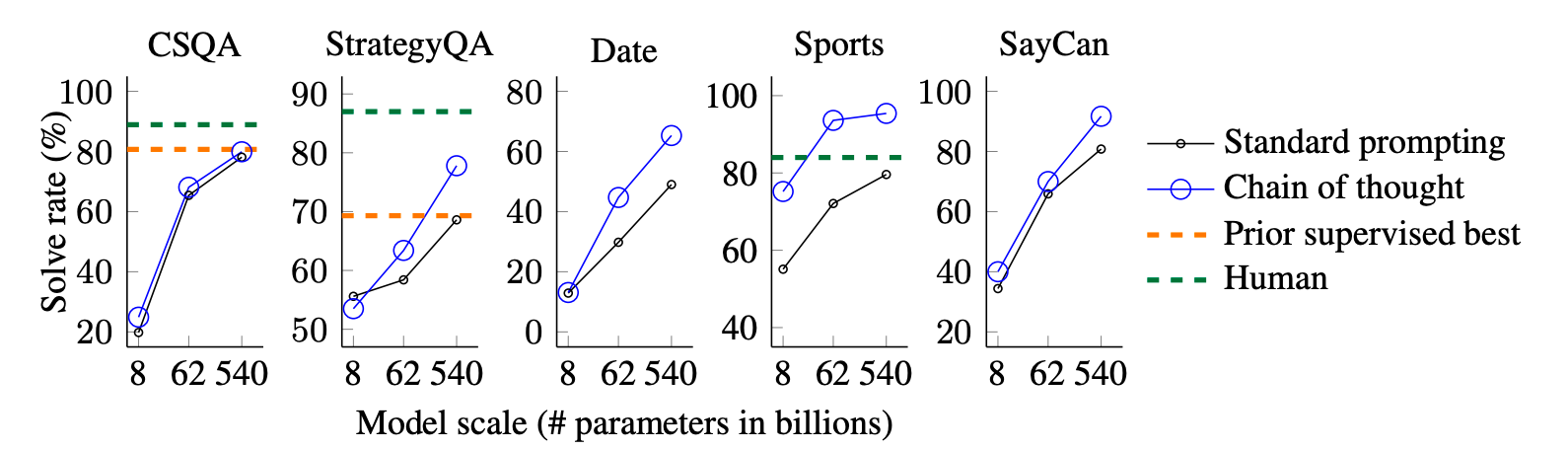

We evaluate this approach across five datasets representing various types of commonsense reasoning. The CommonsenseQA (CSQA) dataset involves answering commonsense questions about the world, often requiring prior knowledge of complex semantics. StrategyQA requires models to infer a multi-step strategy to answer questions. Additionally, we use two specialized evaluation sets from the BIG-bench initiative: Date Understanding, which focuses on inferring dates from context, and Sports Understanding, which involves determining the plausibility of sentences related to sports. Lastly, the SayCan dataset involves mapping natural language instructions to sequences of robot actions from a discrete set. Examples with chain-of-thought annotations for all datasets are illustrated in the figure above.

Prompts.

In terms of experimental setup, we follow a similar approach as in the previous section. For CSQA and StrategyQA, we randomly select examples from the training set and manually craft chains of thought for them to serve as few-shot exemplars. As the two BIG-bench tasks lack training sets, we use the first ten examples from the evaluation set as few-shot exemplars and report results on the remainder of the evaluation set. For SayCan, we utilize six examples from the training set and create chains of thought manually.
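For the two BIG-bench tasks, the split described above keeps the exemplars out of the reported score. A minimal sketch, assuming the evaluation set is an ordered list; `split_exemplars` and the toy data are hypothetical names used only for illustration:

```python
def split_exemplars(eval_examples: list, n_exemplars: int = 10) -> tuple[list, list]:
    """Use the first n examples as few-shot exemplars and evaluate on the rest."""
    if len(eval_examples) <= n_exemplars:
        raise ValueError("evaluation set is too small to hold out exemplars")
    return eval_examples[:n_exemplars], eval_examples[n_exemplars:]


# Toy stand-in for an evaluation set with 25 items.
toy_eval_set = [f"example-{i}" for i in range(25)]
exemplars, remainder = split_exemplars(toy_eval_set)
```

Because the two slices never overlap, no question that appears in the prompt is also counted when computing accuracy.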

Results

The results, highlighted in Figure 7 for PaLM (with full results for LaMDA, GPT-3, and different model scales shown in Table 4), reveal that scaling up model size improves the performance of standard prompting across all tasks. Moreover, chain-of-thought prompting leads to additional performance gains, with the most significant improvements observed for PaLM 540B. With chain-of-thought prompting, PaLM 540B achieves impressive results, surpassing prior state-of-the-art performance on StrategyQA (75.6% vs 69.4%) and even outperforming unassisted sports enthusiasts on sports understanding (95.4% vs 84%). These findings underscore the potential of chain-of-thought prompting to enhance performance across a range of commonsense reasoning tasks, although the gains were minimal on CSQA.

Symbolic Reasoning

In our concluding experimental assessment, we focus on symbolic reasoning, a task that is straightforward for humans but can pose challenges for language models. We demonstrate that chain-of-thought prompting not only empowers language models to tackle symbolic reasoning tasks that are difficult under standard prompting conditions but also aids in length generalization, allowing the models to handle inference-time inputs longer than those encountered in the few-shot exemplars.

Tasks

We employ the following two simple tasks for our analysis:

- Last letter concatenation: The model concatenates the last letters of the words in a given name (e.g., “Amy Brown” → “yn”). This is a more challenging version of first letter concatenation, which language models can already perform without chain of thought. We generate full names by randomly combining names from the top one thousand first and last names sourced from name census data.

- Coin flip: This task requires the model to determine whether a coin remains heads up after people either flip or don’t flip it (e.g., “A coin is heads up. Phoebe flips the coin. Osvaldo does not flip the coin. Is the coin still heads up?” → “no”).
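Both tasks have simple ground-truth rules, which is what makes them convenient to generate and score automatically. The sketch below shows one way to compute the reference answers; the function names and the question template are assumptions chosen to match the examples in the task descriptions, not the original data-generation code.

```python
def last_letter_concat(full_name: str) -> str:
    """Concatenate the last letter of every word in the name."""
    return "".join(word[-1] for word in full_name.split())


def coin_flip_example(actions: list[tuple[str, bool]]) -> tuple[str, str]:
    """Build a coin flip question and its answer.

    `actions` is a list of (person, flipped) pairs. The coin starts heads up,
    so it is still heads up exactly when the number of flips is even.
    """
    parts = ["A coin is heads up."]
    for person, flipped in actions:
        if flipped:
            parts.append(f"{person} flips the coin.")
        else:
            parts.append(f"{person} does not flip the coin.")
    parts.append("Is the coin still heads up?")
    n_flips = sum(1 for _, flipped in actions if flipped)
    answer = "yes" if n_flips % 2 == 0 else "no"
    return " ".join(parts), answer


name_answer = last_letter_concat("Amy Brown")
question, coin_answer = coin_flip_example([("Phoebe", True), ("Osvaldo", False)])
```

Here `name_answer` is "yn" and `coin_answer` is "no", matching the two examples given in the task descriptions.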

Results

The in-domain and out-of-domain (OOD) evaluations for PaLM show a clear pattern (LaMDA results are detailed in Appendix Table 5). With PaLM 540B, chain-of-thought prompting achieves nearly 100% solve rates. Note that standard prompting already solves the coin flip task with PaLM 540B, though not with LaMDA 137B.

These in-domain evaluations involve “toy tasks,” where perfect solution structures are provided by the chains of thought in the few-shot exemplars. Despite this, smaller models still struggle, demonstrating that the ability to manipulate abstract concepts on unseen symbols only emerges at a scale of 100B model parameters.

In the OOD evaluations, standard prompting fails for both tasks. However, with chain-of-thought prompting, language models exhibit upward scaling curves, albeit with lower performance compared to the in-domain setting. This indicates that chain-of-thought prompting facilitates length generalization beyond familiar chains of thought for adequately scaled language models.
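A length generalization check of this kind can be sketched by scoring predictions separately for each input length. The code below is an illustration only: `predict` stands in for a call to a prompted language model, the tiny vocabulary is a placeholder for the census name lists, and the choice of two-word in-domain inputs against four-word OOD inputs is an assumption made for the example.

```python
import random
from collections import defaultdict
from typing import Callable


def last_letters(words: list[str]) -> str:
    return "".join(w[-1] for w in words)


def make_examples(vocab: list[str], n_words: int, n_examples: int,
                  rng: random.Random) -> list[tuple[str, str]]:
    """Sample (input, target) pairs for last letter concatenation."""
    examples = []
    for _ in range(n_examples):
        words = [rng.choice(vocab) for _ in range(n_words)]
        examples.append((" ".join(words), last_letters(words)))
    return examples


def accuracy_by_length(predict: Callable[[str], str],
                       examples: list[tuple[str, str]]) -> dict[int, float]:
    """Group examples by number of words and report accuracy per group."""
    correct: dict[int, int] = defaultdict(int)
    total: dict[int, int] = defaultdict(int)
    for text, target in examples:
        n = len(text.split())
        total[n] += 1
        correct[n] += int(predict(text) == target)
    return {n: correct[n] / total[n] for n in sorted(total)}


rng = random.Random(0)
vocab = ["Amy", "Brown", "Lee", "Garcia"]  # placeholder names
examples = make_examples(vocab, 2, 20, rng) + make_examples(vocab, 4, 20, rng)


# A stand-in "model" that only ever handles the first two words,
# mimicking a system that fails to generalize beyond the exemplar length.
def two_word_only(text: str) -> str:
    return last_letters(text.split()[:2])


scores = accuracy_by_length(two_word_only, examples)
```

The stand-in predictor scores perfectly on two-word inputs and fails on every four-word input, which is the flat OOD behavior that an upward scaling curve is measured against.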

Conclusion

Our exploration of chain-of-thought prompting reveals its efficacy as a straightforward and widely applicable technique for enhancing reasoning capabilities in language models. Across experiments spanning arithmetic, symbolic, and commonsense reasoning, we observe that chain-of-thought reasoning emerges as a property of model scale. This enables sufficiently large language models to effectively tackle reasoning tasks that exhibit flat scaling curves otherwise.

By expanding the repertoire of reasoning tasks that language models can proficiently handle, we aim to stimulate continued exploration and development of language-based approaches to reasoning.
