A Step-by-Step Guide to Training Large Language Models (LLMs) on Your Own Data

Unlock the power of AI with our comprehensive guide to training your own Large Language Model (LLM). Learn the step-by-step process for building AI solutions tailored to your needs, whether you’re a business seeking to improve customer support or a content creator aiming to automate article generation.

Introduction

Large Language Models (LLMs) have significantly transformed the field of Artificial Intelligence (AI). These powerful systems, exemplified by GPT-3, have opened up possibilities across diverse applications, from chatbots that hold substantive conversations with users to content generators that draft articles and narratives. They have become indispensable for tackling complex natural language processing problems and for automating text generation at a near-human level.

In this guide, we demystify the seemingly complex process of training your own LLM by breaking it down into manageable steps. By the end, you’ll have the knowledge and tools to build AI solutions tailored to your specific needs and expectations.

Benefits of Fine-Tuning and Training an LLM on Your Own Data

Fine-tuning an LLM using custom data offers numerous advantages:

- Gain a competitive edge by leveraging your data to streamline resource-intensive processes, gain deeper insights from your customer base, identify and respond swiftly to market shifts, and much more.

- Enhance the application’s functionality by enabling the LLM to process domain-specific data not available elsewhere. For instance, it can provide insights like fourth-quarter sales results or identify the top five customers.

- Improve the accuracy of the LLM’s predictions in your domain by exposing it to large volumes of relevant contextual information.

- Simplify operational analytics by utilizing the powerful analytic capabilities of AI/ML, along with a straightforward natural language interface, for your specialized or unique datasets stored in operational or columnar databases.

- Ensure privacy and security by maintaining internal control of your data, allowing for proper controls, enforcement of security policies, and compliance with relevant regulations.

Now that we’ve covered the benefits of building an LLM with your own data, let’s walk through how to build a model that keeps that valuable data private.

Step-by-Step Guide to Training Your LLM on Your Own Data

Establish Your Goal

At the outset of your journey to train an LLM, clarity in defining your objective is crucial. It’s akin to inputting the destination on your GPS before embarking on a road trip. Are you aiming to create a conversational chatbot, a content generator, or a specialized AI tailored for a specific industry? Having a clear objective will guide your subsequent decisions and shape the development trajectory of your LLM.

Consider the specific use cases in which you want your LLM to excel. Are you focusing on customer support, content creation, or data analysis? Each objective will necessitate different data sources, model architectures, and evaluation criteria.

Furthermore, contemplate the unique challenges and requirements of your chosen domain. For instance, if you’re developing an AI for healthcare, you’ll need to navigate privacy regulations and adhere to stringent ethical standards.

Gather Your Data

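Data is the foundation of any LLM: it is the raw material from which your AI learns to generate human-like text. Gathering the right data calls for a deliberate approach: choose sources that match your objective, confirm you are allowed to use them, and weed out low-quality or duplicate content before it reaches training.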
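As one small illustration of weeding out low-quality content, the sketch below drops very short documents and exact duplicates. The `clean_corpus` helper, the 5-word threshold, and the sample documents are hypothetical choices for illustration; real pipelines often add near-duplicate detection and quality filters on top of this.

```python
import hashlib
import re


def normalize_for_hash(text: str) -> str:
    # Lowercase and collapse whitespace so trivial formatting differences
    # do not hide exact duplicates.
    return re.sub(r"\s+", " ", text.strip().lower())


def clean_corpus(docs, min_words=5):
    """Hypothetical filter: drop very short docs and exact (normalized) duplicates."""
    seen = set()
    kept = []
    for doc in docs:
        if len(doc.split()) < min_words:
            continue  # too short to be useful training text (illustrative threshold)
        digest = hashlib.sha256(normalize_for_hash(doc).encode("utf-8")).hexdigest()
        if digest in seen:
            continue  # duplicate of a document we already kept
        seen.add(digest)
        kept.append(doc)
    return kept


docs = [
    "How do I reset my password? Click 'Forgot password' on the login page.",
    "How do I reset my   password? Click 'Forgot password' on the login page.",
    "Thanks!",
    "Our refund policy allows returns within 30 days of purchase.",
]
print(len(clean_corpus(docs)))
```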

Data Preprocessing — Ready for Training

Once you’ve gathered your data, it’s time to prepare it for training. This stage is like washing and chopping vegetables before cooking a meal: you’re putting your data into a form your LLM can digest.

First, tokenize your text by breaking it into smaller units, typically words or subwords. This step is crucial because LLMs operate on tokens rather than on entire paragraphs or documents.

Next, consider how to manage special characters, punctuation, and capitalization. Different models and applications may have specific requirements in this area, so ensure consistency in your data preprocessing.
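A minimal normalization pass might look like the following. `normalize_text` is a hypothetical helper that applies Unicode NFC normalization, drops control characters, and collapses repeated whitespace. Lowercasing is off by default because many modern LLM tokenizers are case-sensitive, so check what your chosen model expects before enabling it.

```python
import re
import unicodedata


def normalize_text(text: str, lowercase: bool = False) -> str:
    """Hypothetical cleanup pass: Unicode NFC, no control characters, tidy whitespace."""
    # NFC composes e.g. "e" + combining acute accent into the single code point "é",
    # so visually identical strings end up identical at the code-point level.
    text = unicodedata.normalize("NFC", text)
    # Drop control characters (Unicode category "Cc") but keep newlines and tabs.
    text = "".join(ch for ch in text if ch in "\n\t" or unicodedata.category(ch) != "Cc")
    # Collapse runs of spaces/tabs, and cap consecutive blank lines at one.
    text = re.sub(r"[ \t]+", " ", text)
    text = re.sub(r"\n{3,}", "\n\n", text)
    text = text.strip()
    return text.lower() if lowercase else text


print(repr(normalize_text("Cafe\u0301\x00   menu\n\n\n\nPrices")))
```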

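Classic NLP pipelines also apply stemming or lemmatization, techniques that reduce words to their base forms. For LLMs that use subword tokenizers this is usually unnecessary and can even hurt, because it discards information (such as tense and number) that the model could otherwise learn from; consider it only if your model or task specifically calls for it.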

Select Your Framework and Infrastructure

Now that your data is prepared, it’s time to set up your AI workspace. This step is like choosing the right cooking tools and kitchen appliances before you start cooking.

Choosing the right deep learning framework is important. PyTorch and TensorFlow are the most popular options, and Hugging Face Transformers, a library of pretrained models that runs on top of frameworks such as PyTorch and TensorFlow, is a common starting point for working with LLMs. Your decision may hinge on your familiarity with a specific framework, the availability of prebuilt models, or the unique demands of your project.

Model Architecture

With your kitchen arranged, it’s time to devise the recipe for your AI concoction — the model architecture. Much like a recipe outlines the ingredients and cooking instructions for a dish, the model architecture delineates the structure and components of your LLM.

Numerous architectural options exist, but the Transformer architecture, popularized by models such as GPT-3 and BERT, serves as a common starting point. Transformers have demonstrated effectiveness across a broad spectrum of NLP tasks.

Consider the scale of your model. Larger models can capture more intricate patterns but necessitate greater computational resources and data. Conversely, smaller models are more resource-efficient but may face constraints in handling complex tasks.
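To put the resource trade-off into numbers, here is a back-of-the-envelope estimate of training memory. It assumes mixed-precision training with the Adam optimizer, for which a commonly used rule of thumb is about 16 bytes per parameter for weights, gradients, and optimizer states. Activations, which depend on batch size and sequence length, come on top of this and are not modeled.

```python
def training_memory_gb(n_params: float, bytes_per_param: int = 16) -> float:
    """Rough memory (GB) for weights, gradients, and Adam optimizer states.

    Assumed breakdown for mixed-precision Adam (16 bytes/parameter):
    2 (fp16 weights) + 2 (fp16 gradients) + 4 (fp32 master weights)
    + 4 + 4 (fp32 Adam first and second moments). Activations are NOT included.
    """
    return n_params * bytes_per_param / 1e9


for name, n in [("125M", 125e6), ("1.3B", 1.3e9), ("7B", 7e9)]:
    print(f"{name}: ~{training_memory_gb(n):.0f} GB before activations")
```

Under these assumptions a 7B-parameter model needs roughly 112 GB before activations, more than a single 80 GB GPU holds, which is why larger models are sharded across devices.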

Data Encoding and Tokenization

Now that your model architecture is in place, it’s time to prepare your data for training, akin to washing, peeling, and chopping your ingredients before cooking a meal. This step involves getting your data ready to be fed into your LLM.

Begin by tokenizing your data, breaking it into smaller units known as tokens, typically words or subwords. Tokenization is crucial as LLMs operate at the token level. It’s important to ensure that your data matches the tokenization requirements of your chosen model, as different models may have varying tokenization processes.

Consider how to manage special characters, punctuation, and capitalization. Depending on your model and objectives, standardizing these elements may be necessary to maintain consistency.

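Data encoding is another vital aspect. Your LLM cannot process text directly, so each token is mapped to an integer ID from the tokenizer’s vocabulary, and the model’s embedding layer then turns those IDs into dense vectors. Subword tokenization algorithms such as WordPiece (used by BERT) and Byte Pair Encoding (BPE, used by GPT models) determine what that vocabulary looks like; older approaches relied on one-hot encoding or pretrained word embeddings.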
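To make the difference between tokenization and encoding concrete, here is a toy version of Byte Pair Encoding in plain Python: it repeatedly merges the most frequent adjacent pair of symbols, then maps the resulting subword tokens to integer IDs. This is a simplified sketch (no end-of-word markers, no byte-level fallback, ties broken by first occurrence), and the helper names are hypothetical; in practice you would use the tokenizer that ships with your chosen model.

```python
from collections import Counter


def merge_pair(symbols, pair):
    """Replace each adjacent occurrence of `pair` in `symbols` with the joined symbol."""
    out, i = [], 0
    while i < len(symbols):
        if i + 1 < len(symbols) and (symbols[i], symbols[i + 1]) == pair:
            out.append(symbols[i] + symbols[i + 1])
            i += 2
        else:
            out.append(symbols[i])
            i += 1
    return tuple(out)


def learn_bpe(words, num_merges):
    """Learn up to `num_merges` merge rules from a list of words."""
    vocab = Counter(tuple(w) for w in words)  # each word as a tuple of characters
    merges = []
    for _ in range(num_merges):
        # Count adjacent symbol pairs, weighted by word frequency.
        pairs = Counter()
        for symbols, freq in vocab.items():
            for pair in zip(symbols, symbols[1:]):
                pairs[pair] += freq
        if not pairs:
            break  # every word is already a single symbol
        best = max(pairs, key=pairs.get)
        merges.append(best)
        new_vocab = Counter()
        for symbols, freq in vocab.items():
            new_vocab[merge_pair(symbols, best)] += freq
        vocab = new_vocab
    return merges


def encode(word, merges, token_to_id):
    """Apply the learned merges in order, then map subword tokens to integer IDs."""
    symbols = tuple(word)
    for pair in merges:
        symbols = merge_pair(symbols, pair)
    return [token_to_id[s] for s in symbols]


words = ["low", "low", "lower", "lowest"]
merges = learn_bpe(words, num_merges=3)
# Vocabulary = single characters plus every merged symbol, each given an integer ID.
tokens = sorted({ch for w in words for ch in w}) + [a + b for a, b in merges]
token_to_id = {tok: i for i, tok in enumerate(tokens)}
print(merges)
print(encode("lowest", merges, token_to_id))
```

With this four-word corpus the learned merges are `('l', 'o')`, `('lo', 'w')`, and `('low', 'e')`, so "lowest" is split into `lowe`, `s`, `t` before being mapped to IDs. A word containing a character never seen in training would raise a `KeyError` here; production tokenizers avoid this, for example by working at the byte level.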

Model Training

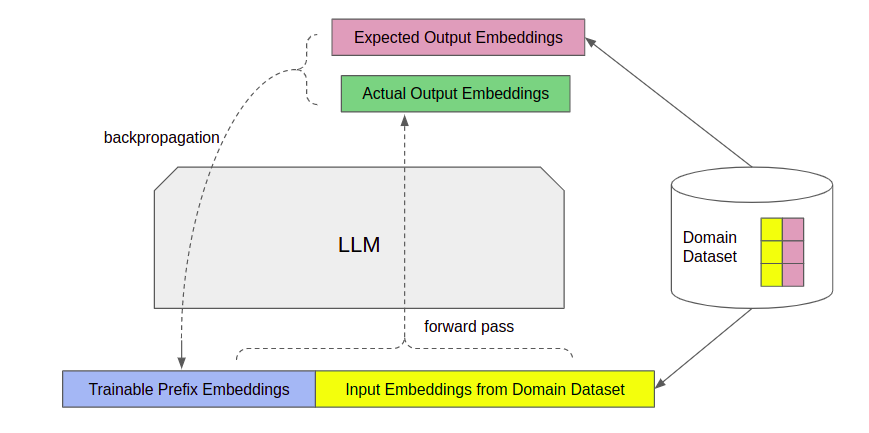

With your data primed and your model architecture established, it’s time to commence cooking your AI creation — model training. This phase mirrors a chef combining ingredients and employing cooking techniques to craft a dish.

Commence by selecting suitable hyperparameters for your training regimen. These parameters encompass the learning rate, batch size, and the number of training epochs. Given their significant impact on model performance, meticulous consideration is essential.
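One widely used learning-rate schedule for Transformers is a short linear warmup followed by cosine decay. The sketch below shows that shape; `lr_at` is a hypothetical helper, and its default values (`max_lr`, `warmup_steps`, `total_steps`, `min_lr`) are illustrative assumptions to tune, not recommendations.

```python
import math


def lr_at(step, max_lr=3e-4, warmup_steps=100, total_steps=1000, min_lr=3e-5):
    """Learning rate at `step`: linear warmup to max_lr, then cosine decay to min_lr.

    All default values are illustrative placeholders.
    """
    if step < warmup_steps:
        # Ramp up linearly so early, noisy gradients do not destabilize training.
        return max_lr * (step + 1) / warmup_steps
    progress = (step - warmup_steps) / max(1, total_steps - warmup_steps)
    progress = min(progress, 1.0)  # hold at min_lr after total_steps
    return min_lr + 0.5 * (max_lr - min_lr) * (1 + math.cos(math.pi * progress))


for step in (0, 50, 100, 550, 1000):
    print(step, f"{lr_at(step):.2e}")
```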

The training process entails systematically presenting your data to the model, letting it make predictions, and adjusting its internal parameters to minimize prediction errors. This is done with gradient-based optimization algorithms such as stochastic gradient descent (SGD) or, more commonly for LLMs, Adam and its variant AdamW.
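The predict, measure, and update cycle can be seen in miniature below: per-example SGD fitting a straight line to noiseless toy data. LLM training follows the same loop at vastly larger scale, with a cross-entropy loss over next-token predictions and typically an optimizer such as AdamW; the toy model, data, and `train_sgd` helper are purely illustrative.

```python
import random


def train_sgd(data, lr=0.05, epochs=200, seed=0):
    """Toy example: fit y ~ w*x + b with per-example SGD on squared error."""
    rng = random.Random(seed)
    w, b = 0.0, 0.0
    data = list(data)  # copy so the caller's list is not reordered
    for _ in range(epochs):
        rng.shuffle(data)  # present examples in a new random order each epoch
        for x, y in data:
            pred = w * x + b  # 1. make a prediction
            err = pred - y    # 2. measure the error
            # 3. step against the gradient of 0.5 * err**2 with respect to w and b
            w -= lr * err * x
            b -= lr * err
    return w, b


# Hypothetical noiseless data from y = 2x + 1 on x in [-1, 1].
data = [(x / 10, 2 * (x / 10) + 1) for x in range(-10, 11)]
w, b = train_sgd(data)
print(round(w, 3), round(b, 3))
```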

Keep tabs on your model’s progression throughout training. Utilize a validation dataset to gauge its performance on tasks aligned with your objective. Adapt hyperparameters as necessary to refine the training process.

Prepare for this phase to consume computational resources and time, particularly for large models with extensive datasets. Training durations may span hours, days, or even weeks, contingent upon your setup.

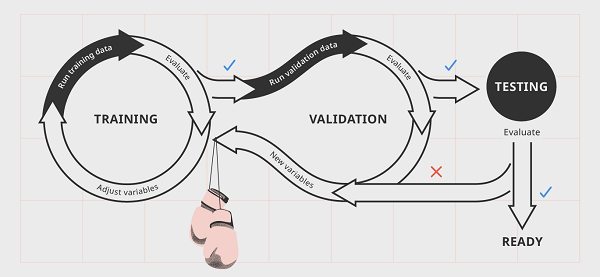

Validation

Just as a chef periodically tastes their dish during cooking to ensure it meets expectations, you must validate and evaluate your AI creation throughout training.

Validation entails regularly assessing your model’s performance on a separate validation dataset. This dataset should be held out from training, with no overlap with your training data, and should reflect your objectives. Validation tells you whether your model is learning effectively or has started to overfit.
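A common way to act on validation results is early stopping: keep the checkpoint with the lowest validation loss and stop once it has not improved for a set number of evaluations. The sketch below assumes you have recorded one validation loss per evaluation; `best_checkpoint_index`, the `patience` value, and the loss numbers are made up for illustration.

```python
def best_checkpoint_index(val_losses, patience=2):
    """Index of the best checkpoint, stopping once validation loss has not
    improved for `patience` consecutive evaluations (illustrative helper)."""
    best_loss = float("inf")
    best_idx = None
    bad_evals = 0
    for i, loss in enumerate(val_losses):
        if loss < best_loss:
            best_loss, best_idx, bad_evals = loss, i, 0
        else:
            bad_evals += 1
            if bad_evals >= patience:
                break  # validation loss has stalled: likely overfitting
    return best_idx


# Hypothetical validation losses recorded after each evaluation.
print(best_checkpoint_index([2.9, 2.4, 2.1, 2.05, 2.08, 2.11, 2.3]))
```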

Fine-Tuning (Optional)

After your model has finished its initial training, you might contemplate fine-tuning it to elevate its performance on particular tasks or domains. This step resembles refining your dish with extra seasoning to customize its flavor.

Fine-tuning entails training your model on a task-specific dataset that complements your original training data. For instance, if you initially trained a broad language model, you could fine-tune it on a dataset of customer support conversations to enhance its performance in that domain. LoRA (Low-Rank Adaptation) is one option for this: it freezes the pretrained weights and trains small low-rank matrices added to selected layers, which greatly reduces the number of trainable parameters. To learn more, see our blog post: Tips for optimizing LLMs with LoRA (Low-Rank Adaptation)
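For intuition about why LoRA is cheap, the sketch below shows the core idea from the LoRA paper: the pretrained weight `W` (shape d x k) stays frozen, and a low-rank update `B @ A` of rank `r` is added, scaled by `alpha / r`. It is written in plain Python for clarity; the helper names are hypothetical, and libraries such as Hugging Face PEFT provide real implementations.

```python
def matvec(M, v):
    """Multiply matrix M (list of rows) by vector v."""
    return [sum(m * x for m, x in zip(row, v)) for row in M]


def lora_forward(W, A, B, x, alpha, r):
    """h = W x + (alpha / r) * B (A x). W is frozen; only A (r x k) and B (d x r) train."""
    base = matvec(W, x)
    update = matvec(B, matvec(A, x))
    scale = alpha / r
    return [h + scale * u for h, u in zip(base, update)]


def lora_trainable_params(d, k, r):
    """Trainable parameters of the update: A has r*k entries, B has d*r."""
    return r * (d + k)


# LoRA initializes B to zero, so the adapted layer initially matches the frozen one.
W = [[1.0, 0.0], [0.0, 1.0]]
A = [[1.0, 1.0]]        # r = 1, k = 2
B_init = [[0.0], [0.0]]  # d = 2, r = 1
print(lora_forward(W, A, B_init, [3.0, 4.0], alpha=2, r=1))
print(lora_trainable_params(4096, 4096, 8), "vs", 4096 * 4096)
```

For a 4096 x 4096 projection with `r = 8`, LoRA trains 65,536 parameters instead of 16,777,216, about 0.4% of the full matrix.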

This process enables you to tailor your AI creation to specific use cases or industries, rendering it more adaptable and efficient.

Testing and Deployment

Now that your AI creation is ready, it’s time to present it to the world. This phase involves evaluating your AI with real-world data and deploying it to fulfill user requirements.

Test your AI using data representative of its actual usage scenarios. Ensure it meets your criteria for accuracy, response time, and resource utilization. Thorough testing is crucial for identifying any issues or idiosyncrasies that require attention.
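A minimal test harness could measure both accuracy and response time against a fixed set of representative prompts. In this sketch, `fake_model` is a hypothetical stand-in for your model’s inference call, exact-match scoring is a deliberately crude accuracy metric, and the 95th percentile uses a simple nearest-rank approximation.

```python
import statistics
import time


def evaluate(model_fn, test_cases):
    """Run each (prompt, expected) pair; report exact-match accuracy and latency."""
    latencies, correct = [], 0
    for prompt, expected in test_cases:
        start = time.perf_counter()
        answer = model_fn(prompt)
        latencies.append(time.perf_counter() - start)
        # Crude exact-match check, ignoring case and surrounding whitespace.
        correct += int(answer.strip().lower() == expected.strip().lower())
    latencies.sort()
    p95 = latencies[min(len(latencies) - 1, int(0.95 * len(latencies)))]
    return {
        "accuracy": correct / len(test_cases),
        "median_latency_s": statistics.median(latencies),
        "p95_latency_s": p95,
    }


def fake_model(prompt):
    # Hypothetical stand-in for your deployed model's generate/inference call.
    return "Paris" if "France" in prompt else "unknown"


report = evaluate(fake_model, [("Capital of France?", "Paris"), ("Capital of Peru?", "Lima")])
print(report)
```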

Deployment entails making your AI accessible to users. Depending on your project, this might involve integration into a website, application, or system. You may opt to deploy on cloud services or utilize containerization platforms to manage your AI’s availability effectively.

Continuous Enhancement

Your AI journey doesn’t conclude with deployment; it’s an ongoing endeavor of refinement and advancement. Similar to how a restaurant chef continuously adjusts their menu based on customer feedback, you should be prepared to refine your AI creation based on user experiences and evolving requirements.

Regularly gather user feedback to comprehend how your AI is performing in real-world settings. Pay attention to user suggestions and critiques to pinpoint areas for enhancement.

Monitor your AI’s performance and usage trends. Analyze data to uncover insights into its strengths and weaknesses. Anticipate any potential issues that may arise over time, such as concept drift or shifts in user behaviors.
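One simple way to watch for drift is to compare the distribution of an input feature, such as prompt length, between a reference period and recent traffic. The sketch below computes the Population Stability Index (PSI), a measure commonly used in credit-risk model monitoring. The bins and sample lengths are illustrative, and a commonly cited rule of thumb treats PSI above about 0.25 as a significant shift.

```python
import math


def psi(reference, current, bins):
    """Population Stability Index between two samples, using half-open bins
    [bins[i], bins[i+1]). Values outside the bin edges are ignored."""
    def proportions(values):
        counts = [0] * (len(bins) - 1)
        for v in values:
            for i in range(len(bins) - 1):
                if bins[i] <= v < bins[i + 1]:
                    counts[i] += 1
                    break
        total = max(1, sum(counts))
        # A small floor avoids log(0) and division by zero for empty bins.
        return [max(c / total, 1e-6) for c in counts]

    ref, cur = proportions(reference), proportions(current)
    return sum((c - r) * math.log(c / r) for r, c in zip(ref, cur))


# Hypothetical prompt lengths (in words) from last month vs. this week.
bins = [0, 10, 20, float("inf")]
last_month = [5, 8, 12, 7, 9, 11, 6, 10]
this_week = [25, 30, 28, 35, 22, 27, 31, 29]
print(round(psi(last_month, this_week, bins), 2))
```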

Evaluating LLMs After Training

Once Large Language Models (LLMs) complete training, evaluating their performance is essential to gauge their success and compare them to benchmarks, alternative algorithms, or previous iterations. Evaluation methods for LLMs encompass both intrinsic approaches, which measure the quality of the model’s language modeling directly, and extrinsic approaches, which measure how well it performs on downstream tasks.

Intrinsic Assessment

Intrinsic analysis assesses performance using objective, quantitative metrics that gauge the linguistic precision of the model and its ability to predict the next word accurately. Key metrics include:

- Language fluency: Evaluates the naturalness of the generated language, ensuring grammatical correctness and syntactic variety to emulate human-like writing.

- Coherence: Measures the model’s consistency in maintaining topic relevance across sentences and paragraphs, ensuring logical connections between successive sentences.

- Perplexity: The exponential of the average negative log-likelihood the model assigns to each token in a held-out sample; intuitively, how "surprised" the model is by the text. A lower perplexity signifies better prediction accuracy and closer alignment with the observed data.

- BLEU score (Bilingual Evaluation Understudy): Measures the similarity between machine-generated text and human-written references by counting overlapping n-grams (contiguous word sequences), with a penalty for overly short outputs. It was designed for machine translation and is also used to gauge the precision of generated responses.
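Perplexity and BLEU are straightforward to compute once you have the right inputs. The sketch below assumes you already have the model’s natural-log probability for each target token (for perplexity) and whitespace-tokenized text with a single reference (for BLEU). It is a teaching version of sentence-level BLEU without smoothing; for reported results, established tools such as sacreBLEU compute corpus-level scores with standardized tokenization.

```python
import math
from collections import Counter


def perplexity(token_log_probs):
    """exp of the average negative log-likelihood per token (natural log)."""
    return math.exp(-sum(token_log_probs) / len(token_log_probs))


def ngrams(tokens, n):
    return Counter(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))


def bleu(candidate, reference, max_n=4):
    """Toy sentence-level BLEU: one reference, whitespace tokens, no smoothing."""
    cand, ref = candidate.split(), reference.split()
    log_precisions = []
    for n in range(1, max_n + 1):
        cand_ngrams, ref_ngrams = ngrams(cand, n), ngrams(ref, n)
        # Clipped counts: a candidate n-gram earns credit at most as many
        # times as it appears in the reference.
        overlap = sum(min(c, ref_ngrams[g]) for g, c in cand_ngrams.items())
        total = sum(cand_ngrams.values())
        if overlap == 0:
            return 0.0  # without smoothing, any zero precision makes BLEU zero
        log_precisions.append(math.log(overlap / total))
    # Brevity penalty discourages candidates shorter than the reference.
    bp = 1.0 if len(cand) > len(ref) else math.exp(1 - len(ref) / len(cand))
    return bp * math.exp(sum(log_precisions) / max_n)


print(perplexity([math.log(0.25)] * 4))
print(bleu("the cat sat on the mat today", "the cat sat on the mat"))
```

A perplexity of 4 means the model is, on average, as uncertain as if it were choosing uniformly among 4 tokens.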

Key Considerations for Training LLMs

Training Large Language Models (LLMs) from scratch poses significant challenges due to high costs and complexity. Here are some key hurdles:

Infrastructure Requirements

LLMs require substantial computational resources and infrastructure to train effectively. Typically, they are trained on vast text corpora, often exceeding 1000 GB, using models with billions of parameters. Training such large models necessitates infrastructure with multiple GPUs. For instance, training GPT-3, a model with 175 billion parameters, on a single NVIDIA V100 GPU would take an estimated 288 years. To mitigate this, LLMs are trained on thousands of accelerators (GPUs or TPUs) in parallel. For example, Google distributed the training of its PaLM model, comprising 540 billion parameters, across 6,144 TPU v4 chips.
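The scale of these numbers can be sanity-checked with a widely used approximation: training a dense Transformer costs about 6 floating-point operations per parameter per training token. The sketch below applies it to GPT-3 (175B parameters, roughly 300B training tokens as reported by OpenAI) under an assumed sustained throughput of 35 TFLOP/s per V100. That throughput figure is an assumption, and real clusters lose efficiency to communication, so treat the output as an order-of-magnitude estimate.

```python
def training_flops(n_params, n_tokens):
    """Approximation: ~6 FLOPs per parameter per training token
    (about 2 for the forward pass and 4 for the backward pass)."""
    return 6 * n_params * n_tokens


def training_days(total_flops, n_devices, flops_per_device):
    """Wall-clock days, assuming perfect scaling at a *sustained* per-device rate."""
    return total_flops / (n_devices * flops_per_device) / 86_400


flops = training_flops(175e9, 300e9)
# 35e12 FLOP/s per V100 is an illustrative sustained-throughput assumption.
one_gpu_years = training_days(flops, n_devices=1, flops_per_device=35e12) / 365
cluster_days = training_days(flops, n_devices=1024, flops_per_device=35e12)
print(f"~{flops:.2e} FLOPs, ~{one_gpu_years:.0f} years on 1 GPU, "
      f"~{cluster_days:.0f} days on 1,024 GPUs")
```

Under these assumptions a single GPU would need roughly 285 years, consistent with the 288-year estimate above, while 1,024 GPUs with perfect scaling would need about 100 days.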

Cost Implications

The acquisition and hosting of the requisite number of GPUs pose financial challenges for many organizations. Even OpenAI, renowned for its GPT series of models, including ChatGPT, relied on Microsoft’s Azure cloud platform for training. In 2019, Microsoft invested $1 billion in OpenAI, with a significant portion allocated to training LLMs on Azure resources.

Model Distribution Strategies

In addition to scale and cost considerations, complexities arise in managing LLM training on computing resources. Key strategies include:

- Initial training on a single GPU to estimate resource requirements.

- Utilization of model parallelism to distribute models across multiple GPUs, optimizing partitioning to enhance memory and I/O bandwidth.

- Adoption of tensor model parallelism for very large models, splitting individual layers across multiple GPUs; this requires precise coding and configuration for efficient execution.

- Iterative training processes involving various parallel computing strategies, with researchers experimenting with different configurations tailored to model needs and available hardware.
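The core trick behind tensor model parallelism can be shown with a single matrix multiply. In the column-parallel scheme popularized by Megatron-LM, a layer’s weight matrix is split by columns across devices, each device multiplies the full input by its own slice, and the partial outputs are concatenated. The plain-Python sketch below simulates the devices as list slices; the helper names are hypothetical, and real systems do this with GPU kernels and collective communication.

```python
def matmul(X, W):
    """Plain matrix multiply: X is (n x d), W is (d x k)."""
    return [[sum(X[i][t] * W[t][j] for t in range(len(W))) for j in range(len(W[0]))]
            for i in range(len(X))]


def split_columns(W, parts):
    """Shard W column-wise into `parts` equal slices, one per simulated device."""
    k = len(W[0])
    assert k % parts == 0, "columns must divide evenly across devices"
    size = k // parts
    return [[row[p * size:(p + 1) * size] for row in W] for p in range(parts)]


def column_parallel_matmul(X, W, parts):
    # Each simulated device computes X @ W_shard using only its slice of the weights.
    partial = [matmul(X, shard) for shard in split_columns(W, parts)]
    # Concatenate the partial outputs row by row (an all-gather in a real system).
    return [[v for out in partial for v in out[i]] for i in range(len(X))]


X = [[1, 2], [3, 4]]
W = [[1, 0, 2, 1], [0, 1, 1, 3]]
print(column_parallel_matmul(X, W, parts=2) == matmul(X, W))
```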

Influence of Model Architecture Choices

The selected architecture of an LLM significantly affects training complexity. Here are some considerations for adapting architecture to available resources:

- Balance depth and width of the model (parameter count) to align with computational resources while ensuring sufficient complexity.

- Prefer architectures with residual connections, which improve gradient flow and make deep networks easier to optimize.

- Assess whether you need a Transformer architecture with self-attention: its compute and memory cost grows quadratically with sequence length, which imposes specific training demands.

- Identify functional requirements such as generative modeling, bi-directional/masked language modeling, multi-task learning, and multi-modal analysis.

- Conduct training experiments using established models like GPT, BERT, and XLNet to gauge their suitability for your use case.

- Choose a tokenization technique — word-based, subword, or character-based — carefully, as it can impact vocabulary size and input length, thus affecting computational requirements.
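Depth, width, and vocabulary size translate into parameter count in a fairly predictable way. The sketch below uses a common approximation for GPT-style decoders, about 12 * d_model**2 parameters per layer plus a vocab_size x d_model embedding table, ignoring biases and layer norms. Plugging in GPT-3’s published configuration (96 layers, d_model = 12,288, a 50,257-token vocabulary) lands close to its reported 175B parameters.

```python
def transformer_params(n_layers, d_model, vocab_size):
    """Approximate parameter count of a GPT-style decoder.

    Per layer: 4*d^2 for attention (Q, K, V, and output projections) plus
    8*d^2 for an MLP with hidden size 4*d, i.e. ~12*d^2. Biases and layer
    norms are ignored. Token embeddings add vocab_size * d_model.
    """
    return 12 * n_layers * d_model ** 2 + vocab_size * d_model


gpt3 = transformer_params(n_layers=96, d_model=12288, vocab_size=50257)
print(f"{gpt3 / 1e9:.1f}B")  # prints 174.6B
```

The embedding term also shows how the tokenization choice feeds into model size: every extra vocabulary entry adds d_model parameters.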

Conclusion

In conclusion, training your own Large Language Model (LLM) is a rewarding endeavor that opens up many possibilities in Artificial Intelligence (AI). This guide has walked through defining objectives, gathering and preprocessing data, selecting frameworks and infrastructure, designing model architectures, and training and fine-tuning your LLM, along with the role of validation, testing, deployment, and continuous enhancement in keeping your AI creation successful and relevant.

As you continue on your AI journey, remember that the process of building and refining an LLM is iterative and ongoing. Regularly gathering user feedback, monitoring performance metrics, and adapting to evolving requirements are essential practices for maintaining the quality and effectiveness of your AI solution. Additionally, prioritizing responsible AI development, including considerations for fairness, ethics, and compliance, is crucial in creating AI systems that positively impact society.

With dedication, innovation, and a commitment to continuous improvement, you have the opportunity to unlock the full potential of AI and create solutions that truly resonate with users and address real-world challenges. So, embrace the journey ahead with confidence and enthusiasm, and let your AI creations pave the way for a brighter future.

novita.ai is a one-stop platform for limitless creativity that gives you access to 100+ APIs, from image generation and language processing to audio enhancement and video manipulation. With cheap pay-as-you-go pricing, it frees you from GPU maintenance hassles while you build your own products. Try it for free.
