A New Release from OpenAI - GPT-4o: Use Cases, How It Works & How to Get Access

OpenAI unveiled its latest large language model, GPT-4o, on Monday, May 13, 2024, as the successor to GPT-4 Turbo. The sections below cover its features, performance, and potential applications.

What is OpenAI’s GPT-4o?

GPT-4o represents OpenAI’s latest large language model. The ‘o’ in its name signifies “omni,” derived from Latin for “every,” indicating its capability to process prompts containing a blend of text, audio, images, and video. In the past, distinct models were employed for various content types within the ChatGPT interface.

For instance, in Voice Mode interactions with ChatGPT, speech would be transcribed to text using Whisper, a text response would be formulated using GPT-4 Turbo, and the response text would then be converted back to speech using TTS.

Likewise, integrating images into ChatGPT interactions required a combination of GPT-4 Turbo and DALL-E 3. Consolidating these functions into a single model for diverse content formats offers the potential for faster processing, improved result quality, a streamlined interface, and the exploration of novel use cases.

What Makes GPT-4o Different From GPT-4 Turbo?

By adopting an all-in-one model strategy, GPT-4o overcomes several limitations of the previous voice interaction features.

1. Tone of voice is now considered, facilitating emotional responses

In the previous OpenAI system, which chained Whisper, GPT-4 Turbo, and TTS in sequence, the reasoning engine, GPT-4 Turbo, only had access to the transcribed words. Tone of voice, background noise, and the distinction between multiple speakers were all lost. Consequently, GPT-4 Turbo was limited in its ability to generate responses with different emotions or speech styles.

However, with a unified model capable of processing both text and audio, this valuable audio information can now be utilized to deliver responses of higher quality, featuring a wider range of speaking styles.
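The information loss described above can be illustrated with a toy sketch. It does not call any real speech or language model: `SpokenInput`, `transcribe`, `reason_over_text`, and `unified_model` are hypothetical stand-ins, chosen only to show that a cascaded pipeline passes nothing but the words to the reasoning stage, while a single model receives the full input.

```python
from dataclasses import dataclass


@dataclass
class SpokenInput:
    """What the user said, including non-verbal information (hypothetical type)."""
    words: str
    tone: str              # e.g. "sarcastic"
    background_noise: str  # e.g. "office"


def transcribe(audio: SpokenInput) -> str:
    """Stand-in for the speech-to-text stage: only the words survive."""
    return audio.words


def reason_over_text(transcript: str) -> str:
    """Stand-in for the text-only language model stage."""
    return f"Reply to: {transcript}"


def cascaded_pipeline(audio: SpokenInput) -> str:
    """Old-style flow: speech -> text -> text reply (speech synthesis omitted)."""
    return reason_over_text(transcribe(audio))


def unified_model(audio: SpokenInput) -> str:
    """Stand-in for a single model that receives the audio directly."""
    return f"Reply to: {audio.words} (heard tone: {audio.tone})"


utterance = SpokenInput("Great, another meeting.", tone="sarcastic",
                        background_noise="office")
print(cascaded_pipeline(utterance))  # the tone never reaches the reasoning stage
print(unified_model(utterance))      # the tone is available to the model
```

In the cascaded version the `tone` field is dropped at the transcription step, so no later stage can react to it, however capable that stage is.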

In a demo video released by OpenAI, GPT-4o shows that it can produce sarcastic output.

2. Lower latency enables real-time conversations

The previous three-model pipeline resulted in a noticeable delay, or “latency,” between speaking to ChatGPT and receiving a response.

OpenAI disclosed that the average latency for Voice Mode is 2.8 seconds with GPT-3.5 and 5.4 seconds with GPT-4. In contrast, the average latency for GPT-4o is 0.32 seconds, making it roughly nine times faster than the GPT-3.5 pipeline and roughly 17 times faster than the GPT-4 pipeline.
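The speedup figures follow directly from the latencies quoted above. The short sketch below reproduces the arithmetic and also shows how the delay adds up over a conversation. The 20-turn conversation length is an assumption made for illustration, not a figure from OpenAI.

```python
# Average latencies in seconds, as quoted in the text.
LATENCY_SECONDS = {
    "Voice Mode with GPT-3.5": 2.8,
    "Voice Mode with GPT-4": 5.4,
    "GPT-4o": 0.32,
}


def speedup(old_latency: float, new_latency: float) -> float:
    """How many times faster the new latency is than the old one."""
    return old_latency / new_latency


def total_wait(latency: float, turns: int) -> float:
    """Total time spent waiting for replies over a conversation."""
    return latency * turns


TURNS = 20  # hypothetical conversation length
new = LATENCY_SECONDS["GPT-4o"]
for name, latency in LATENCY_SECONDS.items():
    print(f"{name}: {speedup(latency, new):.2f}x the GPT-4o latency, "
          f"{total_wait(latency, TURNS):.1f} s of waiting over {TURNS} turns")
```

The ratios come out at about 8.75 and 16.9, which the text rounds to nine and 17.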

This reduced latency approaches the average human response time of 0.21 seconds and is particularly crucial for conversational scenarios, where frequent exchanges occur between humans and AI, and delays between responses accumulate.

This functionality evokes memories of Google’s launch of Instant, its search query auto-complete feature, in 2010. While searching typically doesn’t take much time, saving a few seconds with each use enhances the overall product experience.

One promising use case made more feasible by GPT-4o’s reduced latency is real-time speech translation. OpenAI illustrated a scenario where two colleagues, one English-speaking and the other Spanish-speaking, communicate with GPT-4o facilitating instant translation of their conversation.

https://youtu.be/WzUnEfiIqP4?si=dnnqaNxT4ncX7cfJ

3. Integrated vision enables descriptions of a camera feed

Alongside voice and text, GPT-4o also handles images and video. This means that when it is given access to a computer screen, it can describe what is on screen, answer questions about displayed images, or act as an assistant while you work.

In a video released by OpenAI featuring Sal Khan from Khan Academy, GPT-4o helps Sal’s son with his math homework.

https://youtu.be/_nSmkyDNulk?si=sFvBOgk9hznhqf4f

Expanding beyond screen interaction, if you grant GPT-4o access to a camera, such as on your smartphone, it can provide descriptions of its visual surroundings.

In a comprehensive demonstration by OpenAI, all these capabilities are combined. Two smartphones equipped with GPT-4o engage in a conversation. One GPT has access to the smartphone cameras and describes its visual observations to another GPT without visual capabilities.

The outcome is a three-way conversation involving a human and two AIs. The video also includes a segment where the AIs sing, a capability not achievable with previous models.

https://youtu.be/MirzFk_DSiI?si=Dv7HoVcNliXD3lJg

4. Better tokenization for non-Roman alphabets provides greater speed and value for money

A crucial step in the workflow of large language models (LLMs) involves converting prompt text into tokens, which are units of text that the model can comprehend.

In English, a token typically corresponds to a single word or punctuation mark, although some words may be divided into multiple tokens. On average, about three English words are represented by approximately four tokens.

Reducing the number of tokens needed to represent a piece of text means less computation and faster text generation.

Moreover, since OpenAI charges its API users based on the number of tokens input or output, fewer tokens translate to lower costs for API users.

GPT-4o features an improved tokenizer that requires fewer tokens to represent the same text. The improvement is most noticeable in languages that do not use the Roman alphabet.

For instance, Indian languages, including Hindi, Marathi, Tamil, Telugu, and Gujarati, now need 2.9 to 4.4 times fewer tokens. Arabic needs 2 times fewer tokens, while Chinese, Japanese, Korean, and Vietnamese need 1.4 to 1.7 times fewer.
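To make these ratios concrete, here is a minimal sketch that applies the reduction factors quoted above to a prompt. The 1,000-token prompt size is a hypothetical starting point, and the helper names are invented for this example. It does not run a real tokenizer; it only divides by the stated factors and applies the rule of thumb of four tokens per three English words.

```python
# Reduction factors quoted in the text (old token count / new token count).
REDUCTION_FACTORS = {
    "Indian languages (low end)": 2.9,
    "Indian languages (high end)": 4.4,
    "Arabic": 2.0,
    "Chinese/Japanese/Korean/Vietnamese (low end)": 1.4,
    "Chinese/Japanese/Korean/Vietnamese (high end)": 1.7,
}


def estimate_english_tokens(word_count: int) -> int:
    """Rule of thumb from the text: about 4 tokens per 3 English words."""
    return round(word_count * 4 / 3)


def tokens_after_reduction(old_tokens: int, factor: float) -> int:
    """Token count once the same text needs `factor` times fewer tokens."""
    return round(old_tokens / factor)


OLD_TOKENS = 1_000  # hypothetical prompt size under the previous tokenizer
for label, factor in REDUCTION_FACTORS.items():
    new_tokens = tokens_after_reduction(OLD_TOKENS, factor)
    saved = 1 - new_tokens / OLD_TOKENS
    print(f"{label}: {OLD_TOKENS} -> {new_tokens} tokens ({saved:.0%} fewer)")
```

Because API usage is billed per token, the percentage of tokens saved is also the percentage saved on the bill for that text, before any change in the per-token price.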

5. Rollout to the free plan

OpenAI’s current pricing structure for ChatGPT requires users to pay for access to the top-tier model: GPT-4 Turbo has been exclusively available on the Plus and Enterprise paid plans.

However, this is changing: OpenAI has pledged to offer GPT-4o on the free plan as well. Plus users will receive five times the message quota of free-plan users.

The deployment will occur gradually, starting with red team members (testers tasked with identifying model vulnerabilities) gaining immediate access, followed by broader user access being phased in over time.

6. Launch of the ChatGPT desktop app

Although not specifically tied to GPT-4o, OpenAI also introduced the ChatGPT desktop application. Combined with the latency and multimodality improvements discussed earlier, the app is likely to change how people interact with ChatGPT. For instance, OpenAI demonstrated an augmented coding workflow that uses voice and the ChatGPT desktop app. This example is described in the use-cases section below.

How Does GPT-4o Work?

Numerous content types, one neural network

Details regarding the workings of GPT-4o remain limited. The sole insight provided by OpenAI in its announcement is that GPT-4o is a unified neural network trained on text, vision, and audio inputs.

This novel approach marks a departure from the previous method of employing separate models trained on distinct data types.

However, GPT-4o is not the first model to adopt a multi-modal approach. In 2022, Tencent introduced SkillNet, a model merging LLM transformer features with computer vision techniques to enhance Chinese character recognition.

Similarly, in 2023, a collaborative effort from ETH Zurich, MIT, and Stanford University yielded WhisBERT, a variant within the BERT series of large language models. Although not the first of its kind, GPT-4o is more ambitious and more capable than these earlier efforts.

Is GPT-4o a radical change from GPT-4 Turbo?

The extent of the modifications to GPT-4o’s architecture in comparison to GPT-4 Turbo appears to be subject to interpretation, depending on whether one consults OpenAI’s engineering or marketing teams. In April, a bot named “im-also-a-good-gpt2-chatbot” emerged on LMSYS’s Chatbot Arena, a leaderboard ranking top generative AIs. This enigmatic AI has now been unveiled as GPT-4o.

The “gpt2” in the name is significant. It should not be confused with GPT-2, a predecessor of both GPT-3.5 and GPT-4. Rather, the “2” suffix was widely interpreted as signaling a wholly new architecture within the GPT series of models.

Apparently, individuals within OpenAI’s research or engineering teams perceive the amalgamation of text, vision, and audio content types into a single model as a significant enough change to justify the first version number increment in six years.

Conversely, the marketing team has chosen to adopt a relatively restrained approach to naming, continuing the “GPT-4” convention.

GPT-4o Performance vs Other Models

OpenAI released benchmark figures comparing GPT-4o with several other top-tier models:

1. GPT-4 Turbo

2. GPT-4 (initial release)

3. Claude 3 Opus

4. Gemini Pro 1.5

5. Gemini Ultra 1.0

6. Llama 3 400B

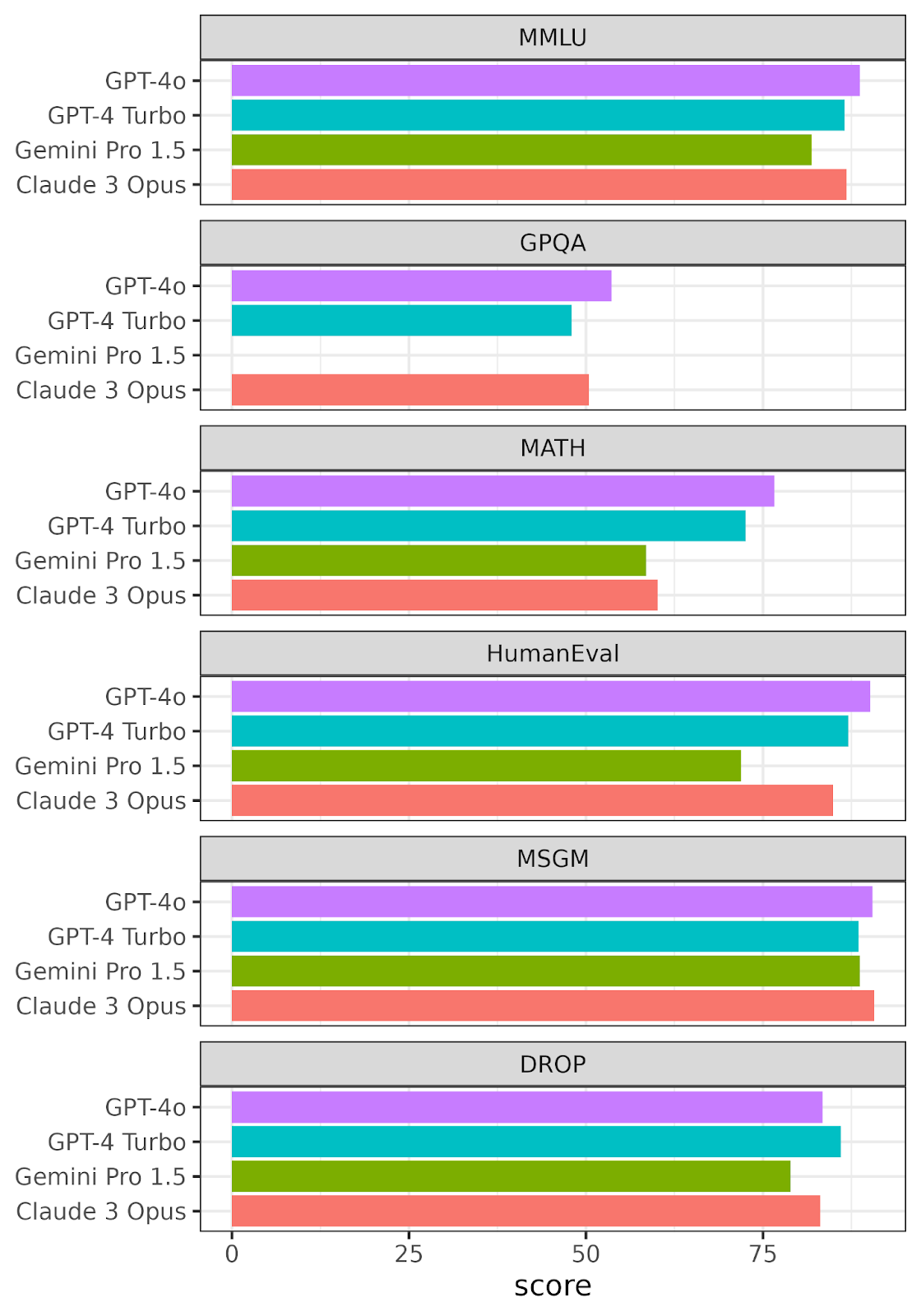

Among these, only three models hold significant relevance for comparison: GPT-4 Turbo, Claude 3 Opus, and Gemini Pro 1.5. These models have been vying for the top spot on the LMSYS Chatbot Arena leaderboard in recent months.

While Llama 3 400B may become a contender in the future, it is still under development. Therefore, the benchmark results presented here focus solely on these three models and GPT-4o.

Six benchmarks were utilized for evaluation:

- Massive Multitask Language Understanding (MMLU): Covers tasks spanning elementary mathematics, US history, computer science, law, and more. Models must possess extensive world knowledge and problem-solving abilities to achieve high accuracy on this test.

- Graduate-Level Google-Proof Q&A (GPQA): Features multiple-choice questions crafted by domain experts in biology, physics, and chemistry. The questions are of high quality and extreme difficulty, with experts holding or pursuing PhDs in the corresponding domains reaching 74% accuracy.

- MATH: Includes middle school and high school mathematics problems.

- HumanEval: Assesses the functional correctness of computer code, used for evaluating code generation.

- Multilingual Grade School Math (MGSM): Consists of grade school mathematics problems translated into ten languages, including underrepresented languages such as Bengali and Swahili.

- Discrete Reasoning Over Paragraphs (DROP): Focuses on questions that demand understanding of complete paragraphs, involving tasks like addition, counting, or sorting values spread across multiple sentences.

GPT-4o outperforms the other models in four benchmarks, though it is surpassed by Claude 3 Opus on the MGSM benchmark and by GPT-4 Turbo on the DROP benchmark. Even with these two exceptions, GPT-4o’s results are strong across the board.

Upon closer examination of the GPT-4o numbers compared to GPT-4 Turbo, the performance increases are relatively modest, with only a few percentage points difference. While this signifies notable progress within a year, it falls short of the dramatic performance leaps observed from GPT-1 to GPT-2 or GPT-2 to GPT-3.

It’s becoming apparent that a 10% improvement in text reasoning per year may become the new standard. The easy gains have been made, so further significant advances in text reasoning are increasingly hard to achieve.

However, these LLM benchmarks do not fully capture AI’s performance on multimodal problems. The concept of multimodal training is still relatively new, and there is a lack of effective methods for measuring a model’s proficiency across text, audio, and vision.

Overall, GPT-4o’s performance is impressive and demonstrates potential for the innovative approach of multimodal training.

GPT-4o Use-Cases

1. GPT-4o for data analysis & coding tasks

Recent GPT models and their derivatives, such as GitHub Copilot, are already equipped to offer code assistance, including code writing, error explanation, and error fixing. The multi-modal capabilities of GPT-4o present intriguing possibilities.

In a promotional video featuring OpenAI CTO Mira Murati, two OpenAI researchers, Mark Chen and Barret Zoph, demonstrated using GPT-4o to interact with Python code.

The code is presented to GPT as text, and the voice interaction feature is utilized to request explanations from GPT regarding the code. Subsequently, after executing the code, GPT-4o’s vision capability is leveraged to provide explanations about the plot.

Overall, the process of showing ChatGPT your screen and asking a question verbally presents a potentially simpler workflow compared to saving a plot as an image file, uploading it to ChatGPT, and then typing out a question.

2. GPT-4o for real-time translation

Prepare to bring GPT-4o along on your vacation. Its low-latency speech capabilities make real-time translation possible (assuming you have roaming data on your cellphone plan!), which makes traveling in countries where you don’t speak the language much more manageable.

3. Roleplay with GPT-4o

ChatGPT has proven to be a valuable resource for roleplaying scenarios, whether you’re simulating a job interview for your dream data career or training your sales team to enhance product sales.

Previously, it primarily supported text-only roleplays, which may not have been optimal for certain use cases. However, with enhanced speech capabilities, spoken roleplay is now a feasible option.

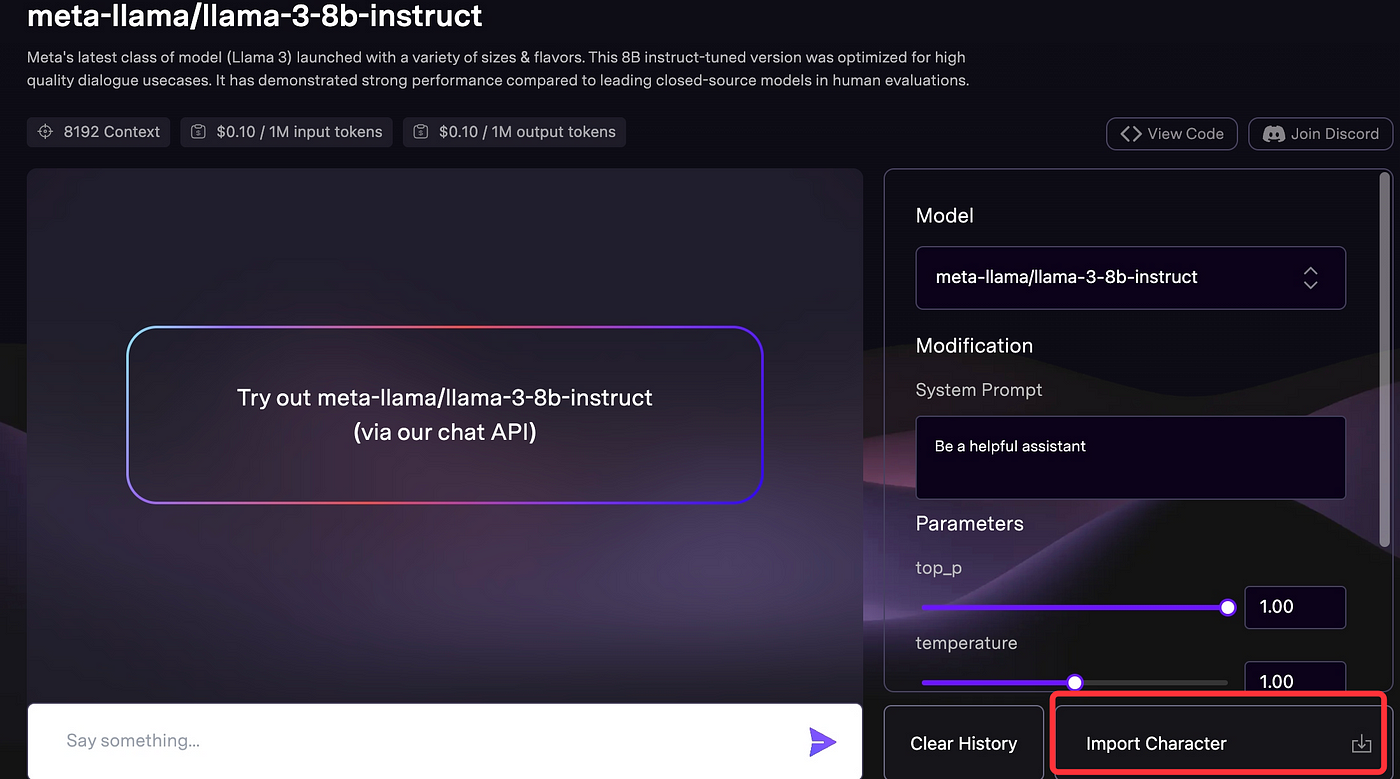

However, if you prefer traditional text-based character roleplay, the novita.ai LLM API is another option.

4. GPT-4o for assisting visually impaired users

GPT-4o’s ability to interpret video input from a camera and describe the scene aloud could be an important feature for people with visual impairments. In effect, it resembles the audio description feature found on televisions, extended to real-life situations.

Getting access to GPT-4o in ChatGPT

The address for ChatGPT has shifted from chat.openai.com to chatgpt.com, indicating a substantial dedication to AI as a product rather than merely an experiment. If you have access to GPT-4o on your account, it will be accessible both in the mobile app and online.

Additionally, a Mac app has begun to be distributed to certain users. However, caution is advised regarding links, as scammers are exploiting this release to distribute malware onto computers. The safest approach is to await an email or notification containing a link directly from OpenAI.

Even if you possess a functional link for the app, access will not be granted until it has been authorized for your OpenAI account. You will encounter an error message stating “You don’t have access” if you attempt to use it prematurely.

Sign in to ChatGPT

Regardless of whether you opt for the paid or free version of ChatGPT, the initial step is to sign in. Visit the website or download the app and link it to your account. If you don’t have an account yet, simply sign up.

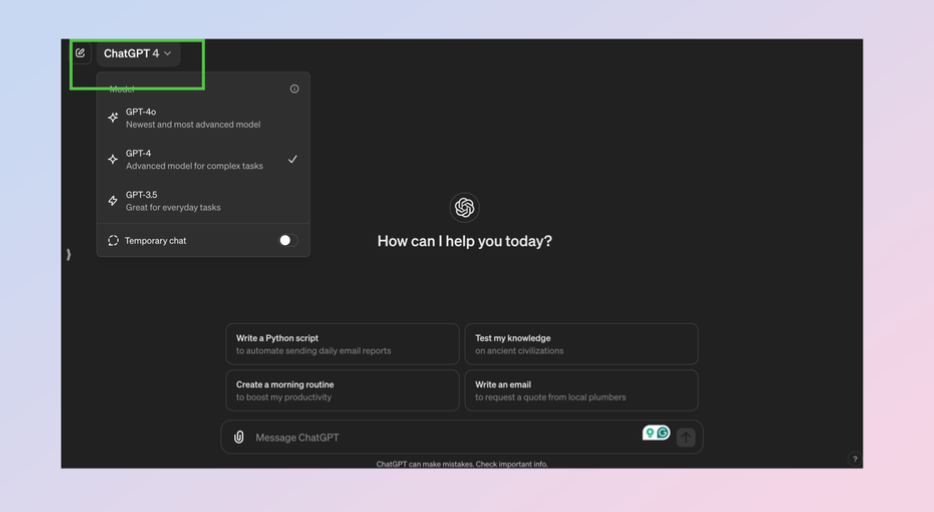

Check your model choices

Near the top of the screen, you’ll find a drop-down menu containing a list of models. On the website, it might already display “GPT-4o” as selected, but it could also show options like “GPT-4” or “GPT-3.5.” If “GPT-4o” doesn’t appear, it means you don’t have access to the model yet.

On mobile devices, if you have access, you’ll see “ChatGPT 4o” displayed in the middle of the navigation bar at the top of the screen.

Start chatting

If you have access, begin chatting with GPT-4o just as you would with GPT-4. However, be aware that rate limits are enforced, and these are significantly lower on the free plan. As a result, you’ll only be able to send a predetermined number of messages per day. If you reach this limit, you can continue the conversation with GPT-4 or GPT-3.5.

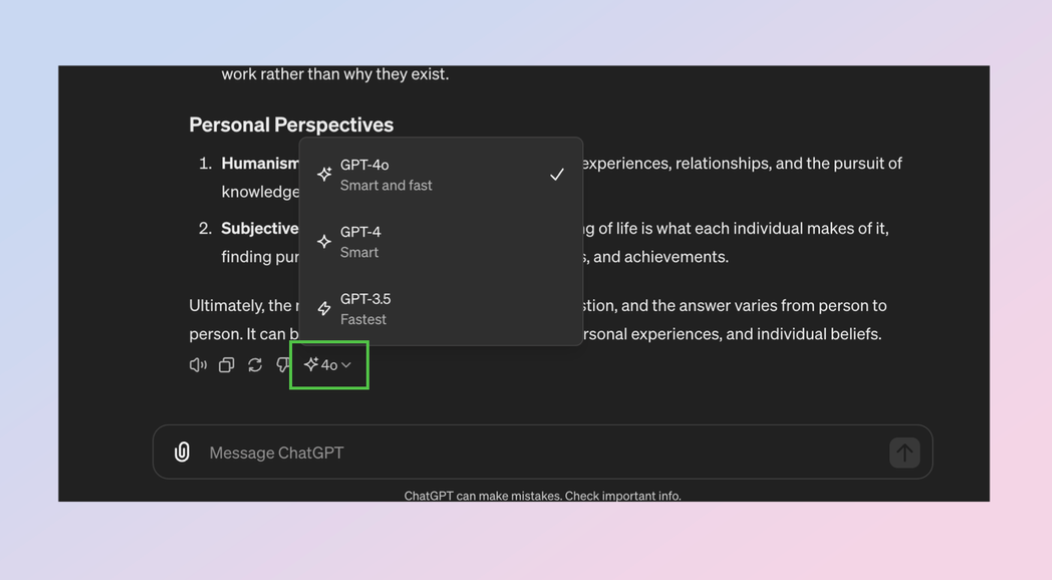

Change the model in a chat

You can also switch the AI model during a chat session. For instance, if you want to conserve your GPT-4o message quota, you could start the chat with GPT-3.5. Then, select the sparkle icon located at the end of the response.

This opens a model menu. If you select GPT-4o, for example because you need to ask a more complex math question, the next response will be generated using GPT-4o.

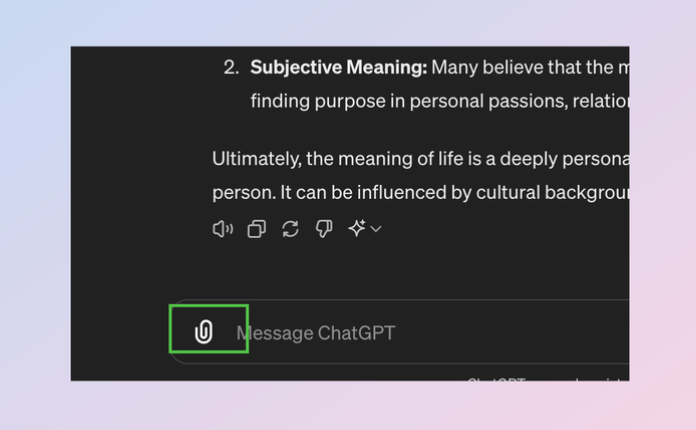

Upload files

If you have access to GPT-4o and are on the free plan, you can now upload files for analysis. These files could include images, videos, or even PDFs. Afterwards, you can pose any questions about the content to GPT-4o.

GPT-4o Limitations & Risks

Regulation for generative AI is still in its nascent stages, with the EU AI Act representing the primary legal framework currently in place. Consequently, companies developing AI must make their own determinations regarding what constitutes safe AI.

OpenAI employs a preparedness framework to assess whether a new model is suitable for release to the public. This framework evaluates four key areas of concern:

- Cybersecurity: Assessing whether the AI could enhance the productivity of cybercriminals or facilitate the creation of exploits.

- CBRN: Examining whether the AI could aid experts in devising chemical, biological, radiological, or nuclear threats.

- Persuasion: Evaluating the potential for the AI to generate persuasive (potentially interactive) content that influences individuals to alter their beliefs.

- Model autonomy: Investigating whether the AI can function as an autonomous agent, executing actions in conjunction with other software.

Each area of concern is categorized as Low, Medium, High, or Critical, and the model’s overall score corresponds to the highest grade among the four categories.
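The scoring rule just described, where the overall score is the highest grade across the four areas, can be sketched in a few lines. The per-category grades in the example are illustrative placeholders, not figures taken from this article; only the "take the maximum" rule and the four grade levels come from the text. The `Risk` enum and `overall_risk` function are names invented for this sketch.

```python
from enum import IntEnum


class Risk(IntEnum):
    """Grade levels, ordered so that a higher value means a greater concern."""
    LOW = 1
    MEDIUM = 2
    HIGH = 3
    CRITICAL = 4


def overall_risk(scores: dict[str, Risk]) -> Risk:
    """The overall score is the highest grade among all categories."""
    return max(scores.values())


# Illustrative placeholder grades for the four areas of concern.
example_scores = {
    "cybersecurity": Risk.LOW,
    "chemical/biological/radiological/nuclear": Risk.LOW,
    "persuasion": Risk.MEDIUM,
    "model autonomy": Risk.LOW,
}

print(overall_risk(example_scores).name)
```

Using the maximum rather than an average means a single high-risk category cannot be offset by low scores elsewhere.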

Under OpenAI’s framework, only models with a post-mitigation score of Medium or below can be deployed, and models rated Critical cannot be developed further. GPT-4o stays within the deployment limit, scoring a Medium concern rating.

Imperfect output

As is common with all generative AIs, the model may not always behave as expected. Computer vision technology is not flawless, which means interpretations of images or videos are not guaranteed to be accurate.

Similarly, speech transcriptions are rarely 100% precise, especially when the speaker has a strong accent or uses technical terminology.

OpenAI released a video showcasing some outtakes where GPT-4o did not function as intended. Notable instances of failure included unsuccessful translation between two non-English languages, inappropriate tone of voice (such as sounding condescending), and speaking in the wrong language.

Accelerated risk of audio deepfakes

The OpenAI announcement acknowledges that “GPT-4o’s audio modalities introduce various new risks.” In particular, GPT-4o could make deepfake scam calls, in which an AI impersonates celebrities, politicians, or people’s acquaintances, both more common and more persuasive. This problem is likely to get worse before it is effectively addressed.

To address this risk, audio output is restricted to a selection of predefined voices.

It is conceivable that technically proficient scammers could utilize GPT-4o to generate text output and then employ their own text-to-speech model. However, it remains uncertain whether this approach would still retain the advantages in terms of latency and tone of voice that GPT-4o provides.

How Much Does GPT-4o Cost?

Despite being faster than GPT-4 Turbo and having better vision capabilities, GPT-4o is about 50% cheaper than its predecessor. According to the OpenAI website, the model costs $5 per million input tokens and $15 per million output tokens.
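As a rough illustration of what these prices mean per request, the sketch below computes the cost of a single call. The request size (10,000 input tokens and 2,000 output tokens) is hypothetical, and the GPT-4 Turbo prices are not quoted in this article: they are derived from the "about 50% cheaper" claim, so treat them as an assumption.

```python
# Prices in US dollars per million tokens.
GPT_4O_PRICES = {"input": 5.00, "output": 15.00}        # quoted in the text
GPT_4_TURBO_PRICES = {"input": 10.00, "output": 30.00}  # assumed: double GPT-4o


def request_cost(input_tokens: int, output_tokens: int,
                 prices: dict[str, float]) -> float:
    """Cost in dollars of one request at the given per-million-token prices."""
    return (input_tokens * prices["input"]
            + output_tokens * prices["output"]) / 1_000_000


# Hypothetical request: a long prompt with a moderately long answer.
INPUT_TOKENS, OUTPUT_TOKENS = 10_000, 2_000

gpt_4o = request_cost(INPUT_TOKENS, OUTPUT_TOKENS, GPT_4O_PRICES)
gpt_4_turbo = request_cost(INPUT_TOKENS, OUTPUT_TOKENS, GPT_4_TURBO_PRICES)
print(f"GPT-4o:      ${gpt_4o:.2f}")
print(f"GPT-4 Turbo: ${gpt_4_turbo:.2f} (assumed prices)")
```

Output tokens cost three times as much as input tokens, so for a fixed budget, long answers are more expensive than long prompts.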

Conclusion

GPT-4o represents a significant advancement in generative AI, integrating text, audio, and visual processing into a single efficient model. This innovation holds the promise of quicker responses, more immersive interactions, and a broader spectrum of applications, ranging from real-time translation to enhanced data analysis and improved accessibility for visually impaired individuals.

novita.ai is a one-stop platform for limitless creativity that gives you access to 100+ APIs, from image generation and language processing to audio enhancement and video manipulation. With low-cost pay-as-you-go pricing, it frees you from GPU maintenance hassles while you build your own products. Try it for free.
