9 Best GPUs for Deep Learning 2024

Introduction

In 2024, choosing the right GPU is key to getting strong results in deep learning, because the GPU largely determines how fast your models train and run. Look for a card with plenty of memory (VRAM), high memory bandwidth, and many parallel cores (CUDA cores on NVIDIA, stream processors on AMD) to handle complex deep learning workloads. As AI keeps evolving, the right choice matters for researchers and professionals who want to push the limits of what AI can do. Let’s look at the nine GPUs leading the pack for deep learning in 2024.

Top 9 GPUs for Deep Learning in 2024

The list below spans consumer cards such as the NVIDIA GeForce RTX 4090 and AMD Radeon RX 7900 XT as well as data-center accelerators such as the NVIDIA A100 and AMD Instinct MI200 Series. Each can handle demanding deep learning and AI workloads; which one suits you best depends on your model sizes, budget, and software stack.

1. NVIDIA GeForce RTX 4090

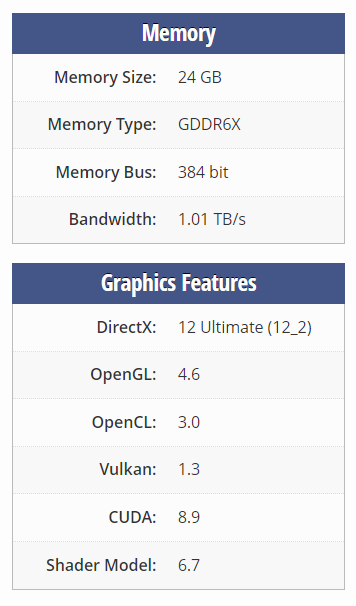

The NVIDIA GeForce RTX 4090 is one of the strongest consumer GPUs for deep learning. Built on the Ada Lovelace architecture, it pairs 16,384 CUDA cores with 24 GB of GDDR6X memory and roughly 1 TB/s of memory bandwidth, which makes it well suited to training fairly large models on a single card. Its fourth-generation Tensor Cores accelerate the matrix math at the heart of neural networks, and it is well supported by deep learning frameworks such as PyTorch and TensorFlow. If you need ample memory and excellent performance in a desktop GPU, the RTX 4090 is a strong choice.

2. AMD Radeon RX 7900 XT

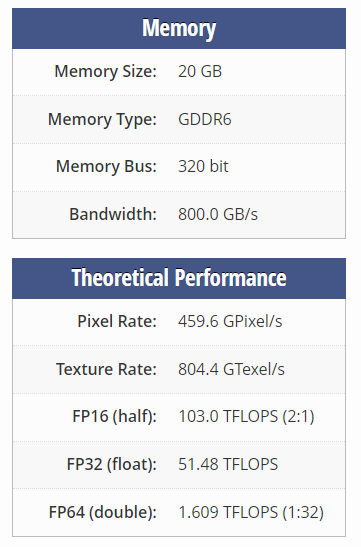

The AMD Radeon RX 7900 XT is a capable option for anyone getting into deep learning on AMD hardware. Based on the RDNA 3 architecture, it offers 20 GB of GDDR6 memory, enough for many mid-sized models and datasets. It relies on AMD’s ROCm software stack rather than NVIDIA’s CUDA, so check that your framework version supports it before buying. For users whose tools run well on ROCm, it delivers substantial compute power and memory for demanding AI tasks.

3. NVIDIA Tesla V100

The NVIDIA Tesla V100 is a data-center GPU designed for deep learning and AI. Although it dates from 2017, it remains a capable option for many 2024 projects. Built on NVIDIA’s Volta architecture, it was the first GPU with Tensor Cores, which accelerate both training and inference. With 5,120 CUDA cores and 16 GB or 32 GB of high-bandwidth HBM2 memory, it handles complex models and large datasets efficiently, making it a solid choice for deep learning workloads.

4. AMD Instinct MI200 Series

The AMD Instinct MI200 Series (MI210, MI250, and MI250X) is AMD’s data-center accelerator line for demanding AI and HPC workloads. Built on the CDNA 2 architecture, these accelerators offer up to 128 GB of HBM2e memory, which makes them well suited to memory-hungry tasks such as training large neural networks and generative AI models. Like other AMD GPUs, they run deep learning frameworks through the ROCm software stack.

5. NVIDIA GeForce RTX 3080

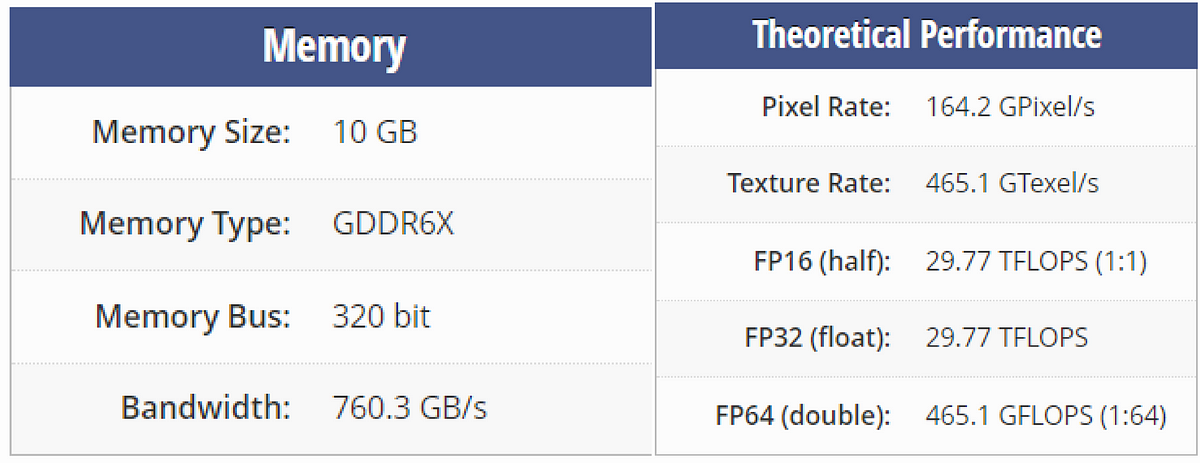

The NVIDIA GeForce RTX 3080 remains a capable GPU for deep learning in 2024. Built on the Ampere architecture, it has 8,704 CUDA cores in its original 10 GB configuration (a 12 GB variant followed later) and high memory bandwidth, which keeps throughput high during intense computations. Its third-generation Tensor Cores speed up training, especially with mixed precision. Its ray tracing cores, however, do not help with deep learning, and its 10 to 12 GB of memory can limit the size of the models and batches you can train.

6. NVIDIA A100

The NVIDIA A100 is a data-center powerhouse for AI and deep learning, built on the Ampere architecture with third-generation Tensor Cores that support formats such as TF32, FP16, and BF16. With 40 GB or 80 GB of high-bandwidth memory, it handles large datasets and complex models and shortens training times. It excels at both training and inference, and Multi-Instance GPU (MIG) lets you partition it into up to seven isolated instances. For serious AI workloads that need top-tier performance, the A100 is a smart investment.

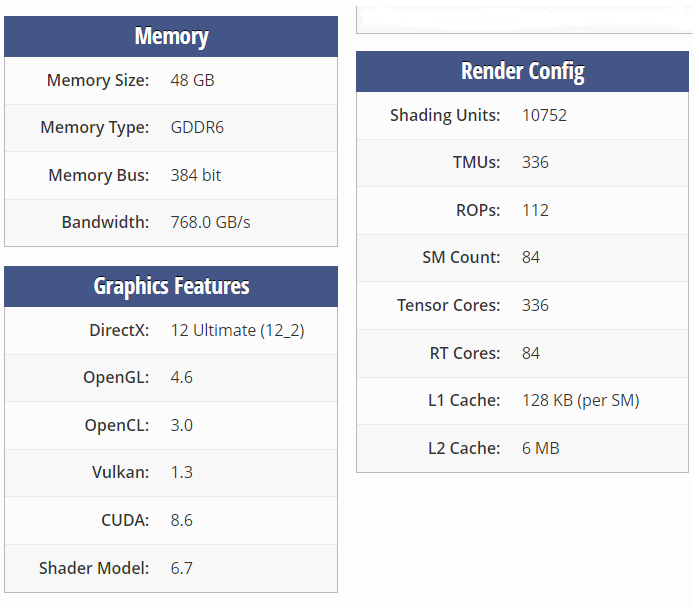

7. NVIDIA RTX A6000

The NVIDIA RTX A6000 is a workstation GPU that excels at deep learning thanks to its 48 GB of GDDR6 memory with ECC and fast data handling. That capacity makes it a good fit for large models and large batch sizes that do not fit on consumer cards. Built on the Ampere architecture, its Tensor Cores accelerate the matrix operations used in training and inference, making it a top choice for demanding AI work.

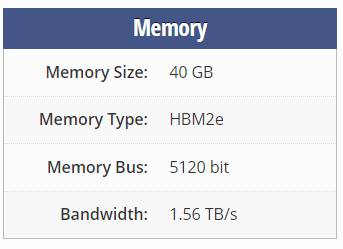

8. AMD Radeon Pro VII

The AMD Radeon Pro VII is a workstation GPU that can serve deep learning well. It offers 16 GB of HBM2 memory with about 1 TB/s of bandwidth, so it moves data quickly when processing large datasets, and it has unusually strong double-precision (FP64) performance for scientific computing. Its fast memory access suits AI training and neural networks, and it is relatively power efficient. Like other AMD cards, it relies on ROCm for deep learning framework support.

9. NVIDIA TITAN RTX

The NVIDIA Titan RTX is a powerful option for deep learning, pairing the Turing architecture with 24 GB of GDDR6 memory. It handles large datasets and complex models well, and its Tensor Cores accelerate the matrix operations behind AI training. Although newer cards have since succeeded it, it still strikes a good balance between speed and memory for complex AI tasks.

GPU Selection Criteria for Deep Learning Tasks

When you’re trying to pick the right GPU for deep learning, there are a few important things to think about.

Understanding Performance Metrics

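When choosing GPUs for deep learning, understanding a few key performance metrics helps you compare cards:

- CUDA cores: NVIDIA’s parallel processing units. More cores let the GPU run more deep learning computations in parallel.

- Memory bandwidth: How fast data moves between the GPU’s memory (VRAM) and its compute units. Higher bandwidth keeps the cores supplied with data.

- Core count: The total number of processing units, whatever the vendor calls them (CUDA cores on NVIDIA, stream processors on AMD). Compare core counts mainly within the same architecture, since cores differ across generations.

- Clock speed: How many cycles per second each core runs, in GHz. Higher clocks mean faster processing, all else being equal.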
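Core count and clock speed combine into a card’s theoretical peak throughput. The minimal Python sketch below assumes each core completes one fused multiply-add (counted as two floating-point operations) per clock cycle. The function name `peak_fp32_tflops` is hypothetical, and this simple model ignores memory bandwidth and architecture-specific features, so real training speed is usually lower.

```python
def peak_fp32_tflops(shader_cores: int, boost_clock_ghz: float) -> float:
    """Theoretical peak FP32 throughput in TFLOPS.

    Assumption: each core retires one fused multiply-add (FMA) per clock,
    and an FMA counts as two floating-point operations.
    """
    flops_per_second = shader_cores * boost_clock_ghz * 1e9 * 2
    return flops_per_second / 1e12


if __name__ == "__main__":
    # Published CUDA core counts and boost clocks.
    print(f"RTX 4090: {peak_fp32_tflops(16_384, 2.52):.1f} TFLOPS")
    print(f"RTX 3080 (10 GB): {peak_fp32_tflops(8_704, 1.71):.1f} TFLOPS")
```

This gives about 82.6 TFLOPS for the RTX 4090 and 29.8 TFLOPS for the RTX 3080. Treat such figures as upper bounds for comparison, not as benchmark results.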

Evaluating Memory Capacity and Bandwidth

For deep learning in 2024, pay close attention to a GPU’s memory capacity and memory bandwidth. Capacity determines how large a model and batch you can fit on the card, while bandwidth determines how quickly data reaches the compute units, which shortens training times. A GPU with ample memory and fast memory access handles complex models and neural networks far more smoothly.
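To check whether a model will fit, a common rule of thumb for training with the Adam optimizer is about 16 bytes per parameter: 4 for the FP32 weights, 4 for the gradients, and 8 for Adam’s two moment estimates. The sketch below applies that rule to some capacities from the list above. The helper names, the 80% `headroom` factor that leaves room for activations, and the selection of GPUs are illustrative assumptions; actual activation memory depends on batch size and sequence length.

```python
# Rule of thumb for Adam in FP32: 4 B weights + 4 B gradients + 8 B moments.
BYTES_PER_PARAM_ADAM = 16

# VRAM capacities in GB for some GPUs from the list above.
VRAM_GB = {
    "RTX 3080 (10 GB)": 10,
    "Radeon Pro VII": 16,
    "RX 7900 XT": 20,
    "RTX 4090": 24,
    "Tesla V100 (32 GB)": 32,
    "RTX A6000": 48,
    "A100 (80 GB)": 80,
}


def training_memory_gb(num_params: float) -> float:
    """Weights + gradients + optimizer state, in GB (1 GB = 1e9 bytes).

    Activations are NOT included; they depend on batch size and sequence length.
    """
    return num_params * BYTES_PER_PARAM_ADAM / 1e9


def gpus_that_fit(num_params: float, headroom: float = 0.8) -> list[str]:
    """Return GPUs whose VRAM, scaled by a hypothetical headroom factor,
    covers the estimated training memory. The remaining fraction of VRAM
    is assumed to stay free for activations."""
    needed = training_memory_gb(num_params)
    return [name for name, cap in VRAM_GB.items() if needed <= cap * headroom]


if __name__ == "__main__":
    params = 1.3e9  # example: a 1.3-billion-parameter model
    print(f"Estimated memory: {training_memory_gb(params):.1f} GB")
    print("Fits on:", gpus_that_fit(params))
```

Under these assumptions, a 1.3-billion-parameter model needs about 20.8 GB before activations, which rules out the 24 GB cards once headroom is reserved. Techniques such as mixed precision or gradient checkpointing change the numbers, so the estimate is only a starting point.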

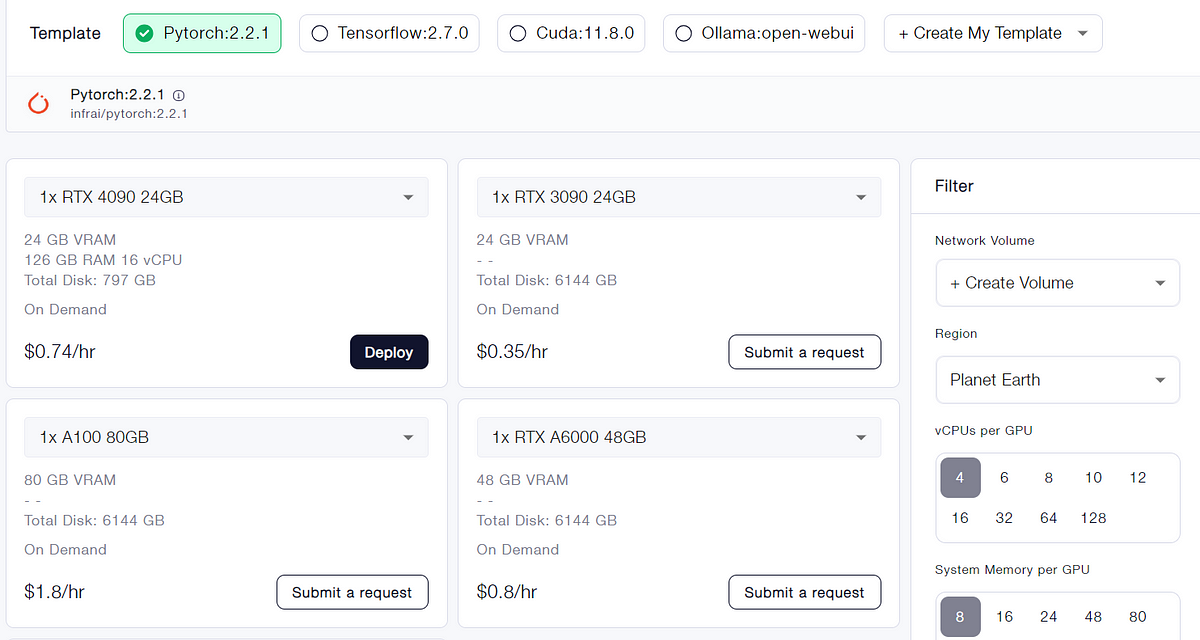

Experience Different GPUs at the Same Time

Struggling to decide which GPU to choose? Each GPU has its own strengths and weaknesses, so why not try several at the same time? On Novita AI GPU Instance you can select any GPU you want, including the RTX 4090, A100, and A6000, and configure instances with just one click.

Novita AI GPU Instance offers developers and learners high-quality, cost-effective GPU resources on a pay-as-you-go basis. Besides the choice of GPUs such as the RTX 4090 or A100, you can directly launch PyTorch and other frameworks, which makes it easy and efficient to run deep learning projects.

The Future of GPUs in Deep Learning

Looking ahead, GPUs for deep learning are set to change significantly, with AI itself playing a growing part in how new generations are designed. Watch for the architectures that succeed Ampere, such as NVIDIA’s Hopper and Blackwell, which add features aimed at faster AI training and better overall performance.

Innovations to Watch in the GPU Market

Stay updated on GPU market advancements, particularly for deep learning and AI. Expect GPUs to enhance memory capacity, data transfer speed (memory bandwidth), and computational power. Improvements in tensor cores, parallel processing, and energy efficiency will facilitate handling complex tasks and large datasets more efficiently.

The Role of AI in Shaping Next-Generation GPUs

AI advancements are reshaping GPU design, pushing GPUs to handle complex tasks such as image recognition and decision-making more effectively. Future GPUs are being designed with AI-specific features that speed up learning and data analysis, and they will prioritize not just raw speed but also energy efficiency and suitability for AI workloads.

Conclusion

In summary, choosing the optimal GPU for deep learning in 2024 is crucial for performance and efficiency. Consider NVIDIA’s GeForce RTX 4090 and AMD’s Instinct MI200 Series, which offer specialized features for deep learning tasks. Evaluate their performance, memory, and bandwidth before making a choice. Stay updated on AI advancements and emerging tech to remain at the forefront of this dynamic field.

Frequently Asked Questions

What Makes a GPU Suitable for Deep Learning?

To do deep learning well, a GPU needs high parallel processing power, plenty of fast memory, and the ability to run many operations at once efficiently. Mature software support, such as CUDA on NVIDIA or ROCm on AMD, matters as well.

Is the RTX 3060 enough for deep learning?

For many projects, yes. With 12 GB of VRAM, the RTX 3060 can handle most small and mid-sized deep learning models, although larger models may run into memory limits. Its performance is well below high-end cards such as the RTX 3090 or A100, but it is a significant improvement over the previous generation of mid-range GPUs.
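For inference, the weights alone take roughly the parameter count times the bytes per parameter, so numeric precision decides what fits in 12 GB. The sketch below assumes 4 bytes per parameter for FP32, 2 for FP16, and 1 for INT8. The 7-billion-parameter example model and the helper names are hypothetical, and activations (plus the KV cache for language models) need additional memory on top of the weights.

```python
BYTES_PER_PARAM = {"fp32": 4, "fp16": 2, "int8": 1}


def weights_gb(num_params: float, precision: str) -> float:
    """Memory for model weights only, in GB (1 GB = 1e9 bytes)."""
    return num_params * BYTES_PER_PARAM[precision] / 1e9


def fits_in_vram(num_params: float, precision: str, vram_gb: float = 12.0) -> bool:
    """True if the weights alone fit in the given VRAM (default: the RTX 3060's 12 GB)."""
    return weights_gb(num_params, precision) <= vram_gb


if __name__ == "__main__":
    params = 7e9  # hypothetical 7-billion-parameter model
    for precision in BYTES_PER_PARAM:
        size = weights_gb(params, precision)
        verdict = "fits" if fits_in_vram(params, precision) else "does not fit"
        print(f"{precision}: {size:.0f} GB of weights, {verdict} in 12 GB")
```

Under these assumptions, such a model would need INT8 quantization to fit on an RTX 3060, while a model of about 3 billion parameters would fit in FP16 with roughly 6 GB of weights.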

Can older GPUs still be effective for deep learning tasks, or is it necessary to invest in newer models?

Yes. Newer GPU models offer better performance and features, but older GPUs can still be quite effective for deep learning, depending on the specific requirements of your tasks, such as model size and how quickly you need results.

Novita AI is the all-in-one cloud platform that empowers your AI ambitions, with integrated APIs, serverless computing, and GPU Instances: the cost-effective tools you need. Eliminate infrastructure, start free, and make your AI vision a reality.
