Using Docker to Run YOLO on GPUs: Boost Your Deep Learning with GPU Rentals

This guide explains how to run the YOLO model on GPUs using Docker to speed up deep learning tasks. By leveraging GPU rentals, you can boost model performance without the need for expensive hardware.

Key Highlights

- Explore YOLO (You Only Look Once) and its importance in finding objects using deep learning.

- Learn how Docker helps in launching deep learning models like YOLO and makes workflows easier.

- Discover how GPUs boost deep learning tasks and enhance object detection performance.

- Run YOLO on GPUs using Docker to accelerate deep learning tasks.

- Think about renting GPUs as a smart way to get strong computing power for your deep learning projects.

- We present Novita AI GPU Instance, a strong cloud service with good NVIDIA GPUs for your deep learning needs.

Introduction

In the fast-changing area of deep learning, object detection is very important. This blog looks at how to get better performance from YOLO (You Only Look Once), a leading object detection system, by running it in Docker on GPUs. You will learn how Docker makes setup easier and how GPUs speed up deep learning tasks.

The Significance of YOLO in Deep Learning for Object Detection

YOLO changed object recognition in AI by simplifying the process. This speeds up and improves image analysis for tasks like autonomous driving and security systems.

Understanding YOLO: A Quick Overview

YOLO, short for You Only Look Once, is a fast and accurate algorithm for real-time object detection. It predicts bounding boxes and class labels in a single pass over the image, unlike earlier detectors that first proposed candidate regions and then classified each one. Trained on labeled images, the YOLO model can recognize various objects and their locations in pictures. It is widely used in applications such as self-driving cars, robots, and security systems.

Challenges in Establishing a Deep Learning Environment

Hardware and resource constraints often hinder setting up a deep learning environment efficiently. The demand for specialized components like NVIDIA GPUs and drivers can be challenging. Software issues, such as configuring dependencies and ensuring compatibility, pose additional obstacles.

Overcoming these hurdles requires meticulous planning to streamline the deployment process. Docker’s ability to manage various platforms and configurations simplifies this complexity, offering a solution to the intricate challenges faced in establishing a robust deep learning environment.

Hardware and Resource Constraints

Hardware and resource constraints can keep a deep learning model from performing at its best. Insufficient GPU power slows down training and inference, which can rule out real-time detection. Limited GPU memory can lead to out-of-memory errors with large models, batch sizes, or images.

Ensuring adequate GPU support and memory resources is crucial for seamless object detection. Provisioning ample resources is imperative for efficient model training and inference, enhancing overall deep learning capabilities.

Software and Configuration Issues

Setting up your software and configurations properly is crucial for seamless deep learning. Compatibility issues may arise with various platforms, so ensure you have the correct NVIDIA drivers and Docker runtime installed.

If a container cannot see the GPU, start by checking which runtimes Docker has registered (‘docker info’ lists them). Configuration errors can hinder performance, so double-check your settings before running your YOLO models. Consult the Docker documentation for guidance on resolving common configuration pitfalls.

Docker: Revolutionizing Deployment in Deep Learning

Docker revolutionizes deep learning by simplifying model deployment. With Docker, we can package our YOLO application and dependencies into isolated containers for consistent runtime across various systems. Its portability enables seamless sharing and movement of applications between machines or cloud platforms, ensuring compatibility and efficiency in collaborative work and model deployment.

Simplifying YOLO Model Deployment with Docker

Deploying YOLO models with Docker is simple. Pack your YOLO application code and dependencies into a Docker image, which acts as a blueprint for a Docker container.

Ultralytics’ Docker repository on Docker Hub provides pre-built YOLO Docker images for easy shipping and use, streamlining the process of launching a YOLO container with all dependencies in place.

Using Docker for YOLO deployment offers advantages like consistent environments, easy sharing, and simplified deployment across multiple platforms.

Key Components for Our Object Detector

For our object detector, crucial elements include the network architecture with optimized weights for accurate predictions.

Deployment mechanisms and input handling procedures ensure seamless integration and efficient processing of data.

These components collectively enhance the model’s performance and facilitate real-time object detection in various scenarios and environments. Integrating these elements effectively is paramount for achieving superior results in deep learning tasks.

Network Architecture and Weights

YOLOv3 is built on the Darknet-53 backbone, a feature extractor with 53 convolutional layers, and adds detection layers that predict boxes at three scales. This architecture plays a crucial role in achieving high accuracy and speed for real-time object detection.

Pre-trained weights are available for download, facilitating the quick deployment of the model for inference tasks. By fine-tuning these weights on a specific dataset, users can optimize the model for their requirements, ensuring efficient object detection. Understanding and manipulating the network architecture and weights are fundamental for tailoring YOLO to specific use cases.
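To make the architecture a little more concrete, the following sketch works out the size of YOLOv3's raw output. It assumes the standard configuration described in the YOLOv3 paper (strides of 32, 16, and 8, three anchor boxes per scale, and the 80 COCO classes); the function name and its defaults are illustrative, and a custom-trained model may use different values.

```python
def yolov3_output_summary(input_size=416, num_classes=80,
                          anchors_per_scale=3, strides=(32, 16, 8)):
    """Summarize the raw prediction tensor sizes for a square input.

    Assumes the standard YOLOv3 layout: one prediction grid per stride,
    and per anchor 4 box coordinates + 1 objectness score + class scores.
    """
    if any(input_size % s for s in strides):
        raise ValueError("input_size must be divisible by every stride")
    grids = [input_size // s for s in strides]
    channels = anchors_per_scale * (5 + num_classes)
    total_boxes = sum(g * g * anchors_per_scale for g in grids)
    return {
        "grid_sizes": grids,
        "channels_per_cell": channels,
        "total_boxes": total_boxes,
    }


if __name__ == "__main__":
    print(yolov3_output_summary())
    # {'grid_sizes': [13, 26, 52], 'channels_per_cell': 255, 'total_boxes': 10647}
```

When fine-tuning on a dataset with a different number of classes, the channels per cell change accordingly, which is why the detection layers are usually re-initialized for the new dataset.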

Deployment and Input Handling

To effectively deploy the YOLO model, understanding input handling is crucial. Docker assists in encapsulating the process, simplifying deployment across various platforms.

GPU support comes from the NVIDIA drivers on the host together with the NVIDIA container runtime, while Docker volumes make input data on the host available inside the container. Utilizing NVIDIA GPUs ensures high-speed processing, optimizing inference for deep learning tasks. The seamless integration of input handling and deployment in Docker enhances the overall object detection workflow.
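As a rough illustration of input handling, the sketch below builds a ‘docker run’ command that mounts a host directory of images into the container. The helper name, the ‘/data’ mount point, and the default image name are assumptions made for this example, not values taken from a specific setup.

```python
import os
import shlex

# Assumption: image name used for illustration; substitute your own.
DEFAULT_IMAGE = "ultralytics/ultralytics:latest"


def run_with_inputs(host_dir, container_dir="/data",
                    image=DEFAULT_IMAGE, read_only=True):
    """Build a `docker run` argument list with a bind-mounted input folder."""
    # Docker bind mounts need an absolute host path.
    host_path = os.path.abspath(host_dir)
    mount = f"{host_path}:{container_dir}"
    if read_only:
        mount += ":ro"  # protect the input data from accidental writes
    return ["docker", "run", "--rm", "-it",
            "--gpus", "all", "--ipc=host",
            "-v", mount, image]


if __name__ == "__main__":
    # Print the command rather than running it, so the sketch is safe to try.
    print(shlex.join(run_with_inputs("./images")))
```

Mounting inputs read-only and writing results to a separate mounted folder keeps the original data untouched.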

Setting up Docker with NVIDIA Support

To optimize your deep learning tasks with YOLO using GPU acceleration, setting up Docker with NVIDIA support is crucial.

This integration ensures that your deep learning environment can leverage the power of NVIDIA GPUs efficiently.

By verifying the NVIDIA runtime compatibility within Docker and installing the necessary NVIDIA Docker runtime, you pave the way for seamless execution of GPU-accelerated processes. This setup is fundamental for high-speed object detection tasks, enhancing both performance and efficiency.

Verify NVIDIA Runtime with Docker

To ensure smooth GPU operation in Docker, verifying the NVIDIA runtime is crucial. Check compatibility with your local machine by running the NVIDIA Docker image, ensuring proper installation of NVIDIA drivers.

Use the ‘nvidia-smi’ command to inspect GPU information and confirm that the driver is working. To confirm Docker’s GPU support, list the registered runtimes with ‘docker info’, then start a container using ‘docker run’ with the ‘--gpus’ flag and the ‘--ipc=host’ option and run ‘nvidia-smi’ inside it. This step confirms that your Docker environment is correctly configured for accelerated deep learning tasks.
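The checks described above could be scripted roughly as follows. This is a sketch, not a definitive procedure: the helper name is hypothetical, the ‘ubuntu’ image is just a small image used as a test workload, and the sample JSON is illustrative rather than captured from a real machine. A registered "nvidia" runtime is only one signal; the container test is the more direct check.

```python
import json
import shlex

# Lists the runtimes Docker knows about, as JSON.
LIST_RUNTIMES = ["docker", "info", "--format", "{{json .Runtimes}}"]

# Starts a throwaway container with GPU access and runs nvidia-smi in it.
GPU_SMOKE_TEST = ["docker", "run", "--rm", "--gpus", "all", "--ipc=host",
                  "ubuntu", "nvidia-smi"]


def has_nvidia_runtime(runtimes_json):
    """Return True if the JSON runtimes mapping has an 'nvidia' entry."""
    runtimes = json.loads(runtimes_json)
    return "nvidia" in runtimes


if __name__ == "__main__":
    # Illustrative sample of what the runtimes JSON might look like.
    sample = ('{"nvidia": {"path": "nvidia-container-runtime"}, '
              '"runc": {"path": "runc"}}')
    print(shlex.join(LIST_RUNTIMES))
    print("nvidia runtime registered:", has_nvidia_runtime(sample))
    print(shlex.join(GPU_SMOKE_TEST))
```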

Installing NVIDIA Docker Runtime

To install the NVIDIA Docker runtime, ensure you have Docker pre-installed on your system. Begin by adding the NVIDIA repository to your distribution using a single command.

Then proceed to install the NVIDIA Container Toolkit package and verify the installation. Finally, restart the Docker service to apply the changes successfully.

This setup allows seamless integration of NVIDIA GPU support within your Docker environment for enhanced deep learning performance. Further details are available in the NVIDIA Container Toolkit and Docker documentation.
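The installation steps above might look like the following sketch once the NVIDIA repository has been added. It assumes a Debian or Ubuntu host with ‘apt-get’ and ‘systemd’; the repository setup itself is distribution-specific and is deliberately left out, so follow the NVIDIA Container Toolkit installation guide for that part. The function name is hypothetical.

```python
import shlex


def install_steps(package_manager="apt-get"):
    """Return the commands to run after the NVIDIA repository is configured.

    Assumption: a Debian/Ubuntu-style host; other distributions use a
    different package manager and possibly a different service manager.
    """
    return [
        # 1. Install the NVIDIA Container Toolkit package.
        ["sudo", package_manager, "install", "-y", "nvidia-container-toolkit"],
        # 2. Register the NVIDIA runtime in Docker's configuration.
        ["sudo", "nvidia-ctk", "runtime", "configure", "--runtime=docker"],
        # 3. Restart Docker so the new configuration takes effect.
        ["sudo", "systemctl", "restart", "docker"],
    ]


if __name__ == "__main__":
    # Print the commands for review instead of executing them.
    for step in install_steps():
        print(shlex.join(step))
```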

How to build an object detector

Setting up your object detector involves installing YOLOv3 and implementing object detection. Start with fetching the latest image from the Ultralytics Docker repository.

Configure the Python code to define the model architecture and weights. Ensure compatibility with your platform.

Utilize NVIDIA support for GPU acceleration. With the required dependencies in place, execute the inference on images or videos. Refer to the documentation for any setup or configuration queries. Congratulations on creating your object detector with YOLO!

Setting Up YOLOv3

To deploy YOLOv3, start by pulling the latest Ultralytics Docker image from Docker Hub.

Ensure NVIDIA drivers are installed, then run the Docker container with GPU support using the ‘--gpus’ flag, together with ‘--ipc=host’ so the container can use the host’s shared memory. Next, set up the YOLOv3 network architecture, clone the necessary repo, and download the necessary weights.

Finally, prepare your Python code for inference using the GPU. This setup optimizes YOLO for efficient object detection tasks. Utilize the power of GPUs for accelerated deep learning solutions.
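A minimal sketch of the pull-and-run sequence follows. The image name ‘ultralytics/ultralytics:latest’ is an assumption for illustration; check Docker Hub for the image and tag that match the YOLO version you want. The function name is hypothetical.

```python
import shlex

# Assumption: image name and tag chosen for illustration only.
IMAGE = "ultralytics/ultralytics:latest"


def setup_commands(image=IMAGE, gpus="all"):
    """Return the pull and run commands for a GPU-enabled container."""
    pull = ["docker", "pull", image]
    run = [
        "docker", "run", "-it",
        "--ipc=host",     # share host IPC so data loaders get enough shared memory
        "--gpus", gpus,   # "all" exposes every GPU; a count such as "1" also works
        image,
    ]
    return [pull, run]


if __name__ == "__main__":
    # Print the commands rather than executing them.
    for cmd in setup_commands():
        print(shlex.join(cmd))
```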

Implementing Object Detection

To implement object detection using YOLO, start by running the YOLOv3 model in Docker with GPU support, using weights pre-trained on the COCO dataset. GPU acceleration increases processing speed enough for real-time detection tasks.

Adjust parameters in the Python code as necessary for your specific requirements. Ensure your Docker container is set up correctly to handle inputs and outputs seamlessly.

By leveraging GPU capabilities within Docker, you can efficiently deploy and optimize your object detection system for various deep learning tasks. Enhance your model’s performance with GPU-accelerated inference.
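Inside the container, inference might look like the sketch below. It assumes the ‘ultralytics’ Python package is installed, which is the case in Ultralytics’ own images, and that a YOLOv3 weights file named ‘yolov3u.pt’ is available; both the weights name and the helper names are assumptions for illustration. The confidence threshold is an example of a parameter you might adjust.

```python
from typing import NamedTuple


class Detection(NamedTuple):
    label: str
    confidence: float
    box: tuple  # (x1, y1, x2, y2) in pixels


def keep_confident(detections, threshold=0.25):
    """Drop detections whose confidence is below the threshold."""
    return [d for d in detections if d.confidence >= threshold]


def run_inference(source, weights="yolov3u.pt", conf=0.25, device=0):
    """Run a YOLO model on an image or video path and collect detections.

    Assumption: the ultralytics package is installed and `weights` names a
    model it can load. The import is local so the helpers above work
    without it.
    """
    from ultralytics import YOLO

    model = YOLO(weights)
    results = model.predict(source=source, conf=conf, device=device)
    detections = []
    for result in results:
        for box in result.boxes:
            detections.append(Detection(
                label=result.names[int(box.cls)],
                confidence=float(box.conf),
                box=tuple(box.xyxy[0].tolist()),
            ))
    return detections


if __name__ == "__main__":
    # Hand-made sample detections, so the demo runs without a GPU or model.
    sample = [Detection("person", 0.91, (10, 20, 110, 220)),
              Detection("dog", 0.12, (5, 5, 50, 60))]
    print(keep_confident(sample, threshold=0.5))
```

Passing ‘device=0’ selects the first GPU; use ‘device="cpu"’ to test the same code on a machine without one.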

GPU Rentals: A Cost-Effective Solution for Deep Learning

Deep learning requires strong hardware, and buying high-end GPUs can cost a lot of money. Renting GPUs has become a smart and cheaper way to get access to top hardware without spending a lot upfront. This option helps researchers, startups, and individuals use resources they might not be able to afford otherwise.

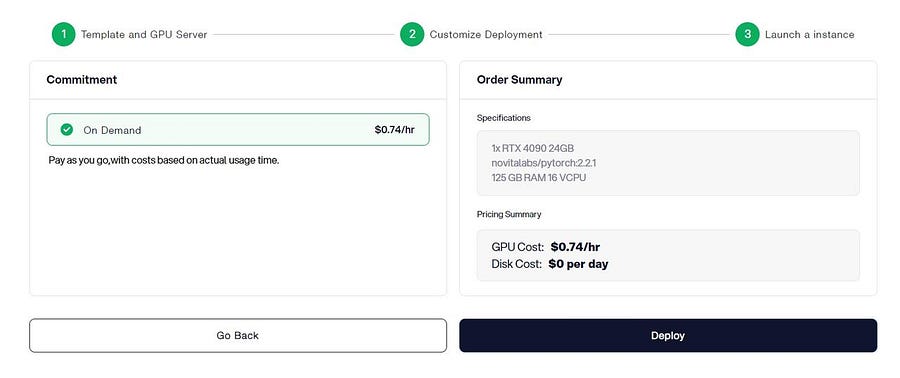

Renting GPU instances gives users flexibility, scalability, and lower costs. They can pick the type of GPU and how long they want to rent it, depending on their project needs. This pay-as-you-go method helps save money while allowing access to the latest technology. This way, deep learning projects can progress quickly without overspending.

Benefits of Renting GPU Instances for Deep Learning

Renting GPU instances for deep learning tasks is gaining popularity as a cost-effective and flexible alternative to purchasing expensive hardware. With rental services, you can access high-performance NVIDIA GPUs without the upfront costs or maintenance worries associated with hardware ownership.

GPU rentals offer affordability and scalability, allowing users to choose from various GPU options and adjust rental durations based on project requirements and budget constraints. This flexibility enables researchers and developers to optimize computing power as needed, leading to cost savings and increased efficiency.
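One simple way to reason about renting versus buying is a break-even estimate. The sketch below is a simplification that ignores electricity, maintenance, and resale value, and the prices in the usage example are hypothetical placeholders rather than real quotes.

```python
def rental_cost(hours, hourly_rate):
    """Total pay-as-you-go cost for a given number of GPU hours."""
    return hours * hourly_rate


def break_even_hours(purchase_price, hourly_rate):
    """Hours of rental after which buying would have been cheaper."""
    if hourly_rate <= 0:
        raise ValueError("hourly_rate must be positive")
    return purchase_price / hourly_rate


if __name__ == "__main__":
    # Hypothetical numbers: a 2000-unit GPU versus a 0.50-per-hour rental.
    print(break_even_hours(2000, 0.5))  # 4000.0
    print(rental_cost(100, 0.5))        # 50.0
```

If a project needs far fewer GPU hours than the break-even figure, renting is likely the cheaper option under these assumptions.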

How to Choose the Right GPU Rental Service

Choosing the right GPU rental service for your deep learning projects is crucial. Look for services offering the latest NVIDIA GPUs like A100 or V100, compatible with TensorFlow and PyTorch. Consider GPU options, performance metrics, pricing, ease of use, and customer support when making your decision.

Novita AI GPU Instance: Unleashing the Power of NVIDIA Series

For anyone looking for a strong and dependable platform for deep learning with GPUs, the Novita AI GPU Instance is a great choice.

It uses advanced NVIDIA GPUs to provide impressive performance and flexibility for tough deep-learning tasks. Users can start their projects quickly because everything is set up for popular deep-learning libraries.

Novita AI offers different GPU instances suited for various needs. This ranges from small experiments to big model training. It has a user-friendly design and clear documentation.

This makes it easy to manage instances, keep an eye on performance, and use resources well. Users can then focus on what is important — building and launching their deep learning models.

Key Features of the Novita AI GPU Instance

The Novita AI GPU Instance is made for deep learning. It has many features that improve performance and efficiency:

- GPU Cloud Access: Novita AI gives you easy access to GPU cloud resources that work well with PyTorch Lightning Trainer, offering flexible and affordable GPU power when you need it.

- Cost Efficiency: You can cut cloud costs by up to 50%, which is especially helpful for startups and research teams working with tight budgets.

- Instant Deployment: You can quickly launch a Pod (a container for AI tasks) and start training models right away, without wasting time on setup.

- Customizable Templates: Novita AI provides templates for popular frameworks like PyTorch, so you can easily choose the best setup for your needs.

- High-Performance Hardware: You’ll get access to powerful GPUs like NVIDIA A100 SXM, RTX 4090, and A6000, all with plenty of VRAM and RAM to train even the largest AI models efficiently.

How to start your journey in Novita AI GPU Instance:

Getting started with Novita AI is quick and straightforward. Their Docker Quickstart Guide provides a step-by-step process for setting up your deep learning environment on a Novita AI GPU instance. Follow these steps and you’ll be ready to go:

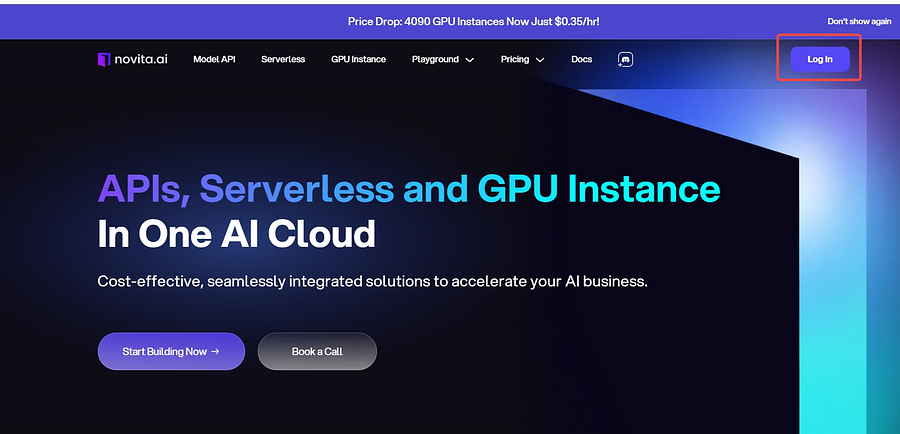

STEP1:

Sign up for an Account: Visit the Novita AI website and sign up for an account. Choose the GPU instance that suits your requirements and budget.

STEP2:

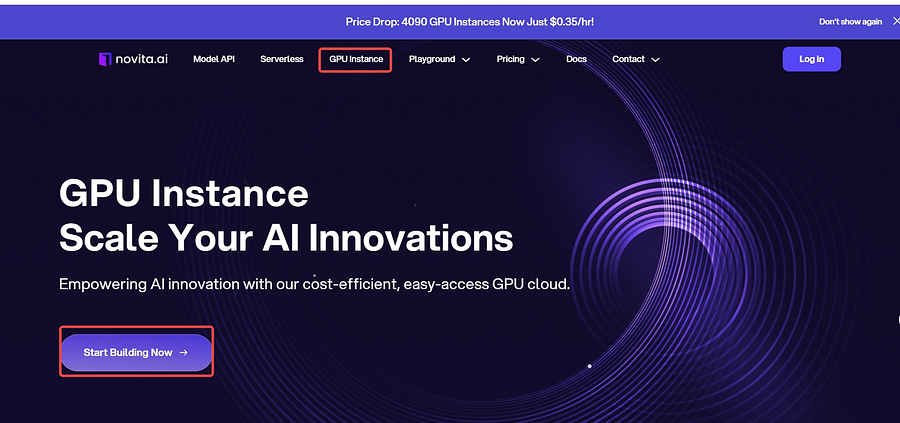

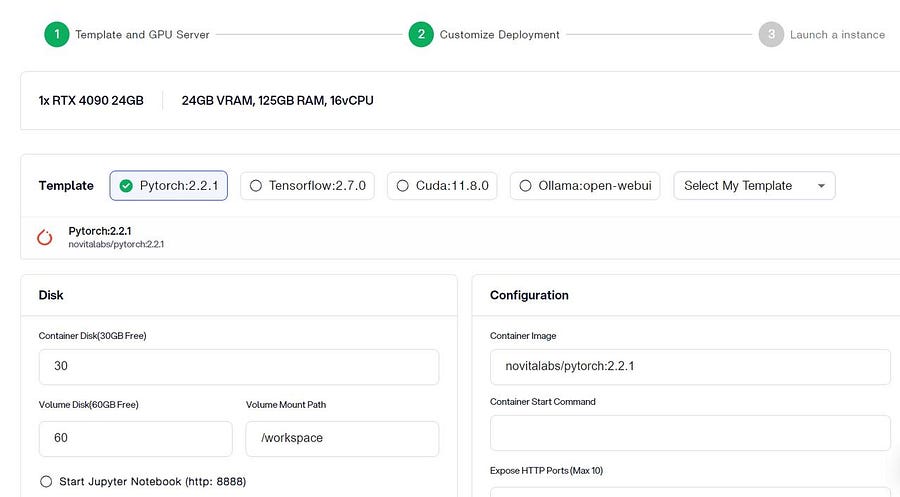

Access Your Instance: If you are a new user, register an account first, then click the GPU Instance button on our webpage.

STEP3:

Launch Your Deep Learning Environment

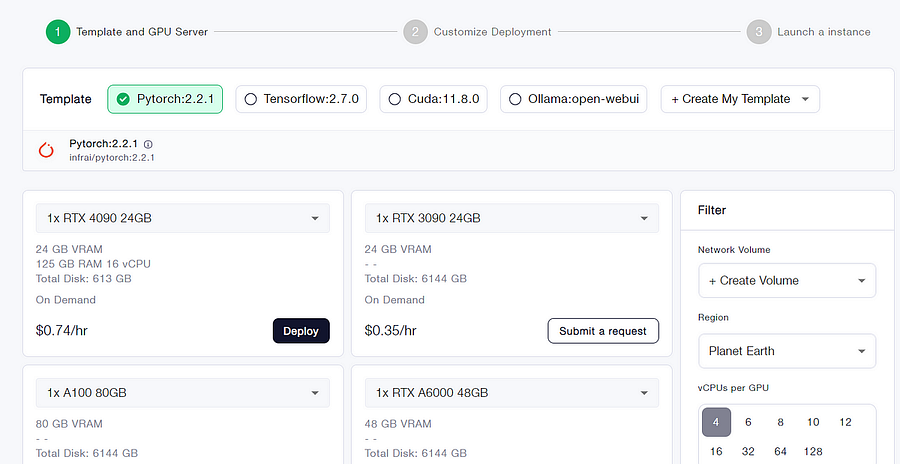

You can choose your own template, including PyTorch, TensorFlow, CUDA, or Ollama, according to your specific needs. You can also create your own template data by clicking the final button.

STEP4:

Whether it’s for research, development, or deployment of AI applications, Novita AI GPU Instance equipped with CUDA 12 delivers a powerful and efficient GPU computing experience in the cloud.

Our service then provides access to high-performance GPUs such as the NVIDIA RTX 4090 and RTX 3090, each with substantial VRAM and RAM, so that even demanding AI models can be trained efficiently. You can pick one based on your needs.

Key Features:

- After creating an instance, users can save it as a new template and use that template to launch new instances.

- The new template can be saved directly to DockerHub.

- Templates enable deployment in seconds.

- Value: Users can now debug private templates directly online, greatly improving efficiency!

Conclusion

Optimizing YOLO with Docker and GPU is a game-changer for deep learning. Using Docker makes it easy to deploy models. GPUs offer fast object detection, which boosts performance and efficiency in your work. Renting GPU instances is a smart and low-cost choice. You can pick the resources that fit your needs best. The Novita AI GPU Instance, with its advanced NVIDIA features, opens up many opportunities for your deep learning projects. Use this new technology to take your projects to the next level.

Frequently Asked Questions

How does Docker simplify the deployment of YOLO models?

Docker makes it easy to deploy the YOLO model by packaging the model and all its dependencies in a Docker image that runs as a container. This keeps the setup identical across environments. It also allows for simple sharing through registries such as Docker Hub, where Ultralytics publishes pre-built images.

Why are GPUs critical for deep learning tasks like object detection?

GPUs are vital for deep learning tasks. This is especially true for NVIDIA GPUs that support CUDA. Their ability to process many tasks at once greatly speeds up calculations. This makes it much faster to train and use object detection models like YOLO.
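A quick way to check whether code will actually run on a CUDA GPU is sketched below. It assumes PyTorch as the framework, which this guide mentions elsewhere, and falls back to the CPU if PyTorch is missing or no GPU is visible. The function name is hypothetical.

```python
def pick_device():
    """Return "cuda:0" if PyTorch can see a CUDA GPU, otherwise "cpu"."""
    try:
        import torch  # assumption: PyTorch is the framework in use
    except ImportError:
        return "cpu"
    return "cuda:0" if torch.cuda.is_available() else "cpu"


if __name__ == "__main__":
    print("Running on:", pick_device())
```

Inside a container started without GPU access, this reports ‘cpu’ even on a GPU machine, which makes it a handy sanity check.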

What are the advantages of renting GPU instances for deep learning?

Renting GPU instances is a smart way to get the powerful computing power of NVIDIA GPUs. You avoid spending a lot of money upfront. This method lets you change resources based on what your project needs. At the same time, you have access to the newest hardware and software.

Can you run YOLO in Docker on a rented GPU instance?

Yes, you can run YOLO using Docker on a rented GPU instance. Make sure that the GPU rental service supports NVIDIA Docker. This will allow the container to use the GPU for faster training and inference.

What are the best practices for setting up YOLO in Docker for optimal performance?

For the best performance, use a small Docker image. Use the NVIDIA Docker runtime to access the GPU. Make sure to optimize data loading and keep an eye on GPU usage. Configuring these things properly helps to improve YOLO’s performance in the Docker setup.
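To keep an eye on GPU usage, one option is to poll ‘nvidia-smi’ in its CSV query mode and parse the result. The sketch below shows the parsing step; the helper name is hypothetical and the sample text is illustrative, not captured from a real GPU. Some fields can be reported as not available on certain hardware, which this simple parser does not handle.

```python
import shlex

# Query utilization and memory as plain CSV, one line per GPU.
QUERY = ["nvidia-smi",
         "--query-gpu=utilization.gpu,memory.used,memory.total",
         "--format=csv,noheader,nounits"]


def parse_gpu_stats(output):
    """Parse the CSV text produced by QUERY into one dict per GPU."""
    stats = []
    for line in output.strip().splitlines():
        util, used, total = (int(field.strip()) for field in line.split(","))
        stats.append({
            "util_pct": util,
            "mem_used_mib": used,
            "mem_total_mib": total,
        })
    return stats


if __name__ == "__main__":
    sample = "87, 10240, 24576\n12, 512, 24576\n"  # illustrative output
    print(shlex.join(QUERY))
    for gpu in parse_gpu_stats(sample):
        print(gpu)
```

Low utilization during training often points to a data loading bottleneck rather than a slow GPU.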

Novita AI is the all-in-one cloud platform that empowers your AI ambitions. With seamlessly integrated APIs, serverless computing, and GPU acceleration, we provide the cost-effective tools you need to rapidly build and scale your AI-driven business. Eliminate infrastructure headaches and get started for free — Novita AI makes your AI dreams a reality.
