The Magic of Layers: Understanding Container Image Efficiency and Consistency

The File System View in Containerized Processes

What does the file system look like to processes running inside a container? One might immediately think of Mount Namespace: the processes within a container should see a completely independent file system. That way, operations on the container's own directories, such as /tmp, would neither affect nor be affected by the host machine or other containers. Is this the case, though?

The Role of Mount Namespace

Mount Namespace differs slightly from the other namespaces: its changes to the container process's view of the file system take effect only once a mount operation is performed. As regular users, however, we want something friendlier: every time a new container is created, the container process should see an isolated file system rather than one inherited from the host. How can this be achieved?

It's not hard to imagine that we could remount the entire root directory “/” before starting the container process. Thanks to Mount Namespace, this mount would be invisible to the host, so the container process can operate freely within it. Linux has a command called chroot that conveniently accomplishes this from a shell. As the name suggests, it "changes the root file system," redirecting the process's root directory to a location you specify.
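The effect of chroot can be sketched in a few lines of Python. This is a minimal illustration rather than how any container runtime is implemented: `run_in_chroot` wraps the real `os.chroot` call (it needs root privileges and a prepared directory), while `host_path` is a hypothetical helper that only models where a path seen by the confined process lives on the host. The directory `/srv/rootfs` is an assumed example location, and symbolic links are ignored.

```python
import os


def host_path(new_root: str, path_in_jail: str) -> str:
    """Return the host-side location of a path as seen after chroot(new_root).

    Simplified model: symbolic links are not resolved.
    """
    # Inside the new root, ".." can never climb above "/", so normalize first.
    inner = os.path.normpath("/" + path_in_jail.lstrip("/"))
    return os.path.normpath(os.path.join(new_root, inner.lstrip("/")))


def run_in_chroot(new_root: str, argv: list[str]) -> int:
    """Fork a child, change its root directory to new_root, and exec argv.

    Requires root privileges; new_root must already contain the program
    and every library it needs.
    """
    pid = os.fork()
    if pid == 0:
        try:
            os.chroot(new_root)  # "/" now means new_root for this process
            os.chdir("/")        # move the working directory inside the new root
            os.execv(argv[0], argv)
        finally:
            os._exit(127)        # reached only if chroot or exec failed
    _, status = os.waitpid(pid, 0)
    return os.waitstatus_to_exitcode(status)
```

With a populated directory, `run_in_chroot("/srv/rootfs", ["/bin/ls", "/"])` run as root would list the contents of `/srv/rootfs`, not the host's root directory.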

Container Images and rootfs

In fact, Mount Namespace was invented as a continuous improvement on chroot, and it was the first Namespace in Linux. To make this root directory seem more "real" to the container, we typically mount a complete operating system file system under it, such as an Ubuntu 16.04 ISO. Consequently, after the container starts, executing "ls /" inside it reveals all the directories and files of Ubuntu 16.04.

This file system, mounted on the container's root directory to provide an isolated execution environment for container processes, is known as a "container image." It also has a more technical name: rootfs (root file system). The /bin/bash executed after entering the container is the executable under the rootfs's /bin directory, entirely distinct from the host's /bin/bash.

Now you should understand that the core principle behind the Docker project comes down to three steps for the user process about to be created: enabling Linux Namespace configuration, setting the specified Cgroups parameters, and changing the process's root directory (Change Root). Thus, a complete container is born.

Shared Kernel and Application Dependencies in Containers

For the final step of switching the root, Docker prefers the pivot_root system call and falls back to chroot if the system doesn't support pivot_root. The two system calls serve a similar purpose, but there are subtle differences between them.

It's also essential to clarify that rootfs includes only the files, configurations, and directories of an operating system, not the kernel. In Linux, these two parts are stored separately, and the kernel image of a specific version is loaded only at boot. Therefore, rootfs contains only the "shell" of the operating system, not its "soul."

So, where is the "soul" of the operating system for containers? In reality, all containers on the same machine share the host operating system's kernel. If your application needs to configure kernel parameters, load additional kernel modules, or interact directly with the kernel, be aware that these operations and dependencies target the host's kernel, which is a "global variable" for all containers on that machine. This is one of the main drawbacks of containers compared to virtual machines: the latter not only have simulated hardware as a sandbox but also run a complete Guest OS inside each sandbox for applications to use.

Nonetheless, thanks to rootfs, containers boast an important and widely publicized feature: consistency.
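One way to observe the split between rootfs and kernel is to compare where each piece of information comes from. The sketch below assumes the rootfs ships the conventional `/etc/os-release` file; `parse_os_release` and `describe_environment` are hypothetical helper names. The distribution name is read from a file inside the rootfs, whereas the kernel release is reported by the running kernel, which for a container is the host's.

```python
import platform


def parse_os_release(text: str) -> dict[str, str]:
    """Parse the KEY=value lines of an os-release file into a dict."""
    info = {}
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, _, value = line.partition("=")
        info[key] = value.strip().strip('"')
    return info


def describe_environment(os_release_path: str = "/etc/os-release") -> dict[str, str]:
    """Report the userland (from the rootfs) and the kernel (from the host)."""
    try:
        with open(os_release_path, encoding="utf-8") as f:
            distro = parse_os_release(f.read()).get("PRETTY_NAME", "unknown")
    except OSError:
        distro = "unknown"
    return {
        "userland": distro,            # comes from a file inside the rootfs
        "kernel": platform.release(),  # answered by the running kernel
    }
```

Running `describe_environment()` in containers built from different images on the same machine should show different "userland" values but the same "kernel" value.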

The Concept of Layers in Container Images

What is the "consistency" of containers? Because cloud and local server environments differ, packaging an application has always been one of the most "painful" steps of using a PaaS. With containers, or more precisely with container images (i.e., rootfs), this problem is elegantly resolved.

Since rootfs packages not just the application but the entire operating system's files and directories, it encapsulates all the dependencies the application needs to run. For most developers, the notion of application dependencies has been limited to the programming language level, such as Golang's Godeps.json. A long-overlooked fact, however, is that the operating system itself is the most complete "dependency library" an application needs.

With the ability of container images to "package the operating system," this fundamental dependency environment finally becomes part of the application sandbox. This gives containers their touted consistency: whether on a local machine, in the cloud, or anywhere else, users only need to unpack the container image to recreate the complete execution environment the application requires.

Incremental Layer Design in Container Images

This consistency at the operating system level bridges the gap between local development and remote execution environments. However, you might have noticed another tricky issue: do we need to recreate rootfs every time we develop a new application or update an existing one? An intuitive solution would be to save a rootfs after every "meaningful" operation during its creation, so that colleagues can pick up whichever rootfs they need.

This solution doesn't scale, though. Once colleagues modify this rootfs, the old and new rootfs have no relationship to each other, which leads to extreme fragmentation. Since these modifications are all based on an old rootfs, can we make them incrementally? The benefit of this approach is that everyone only needs to maintain the incremental content relative to the base rootfs, rather than creating a "fork" with every modification.

The answer is, of course, yes. This is precisely why Docker, when implementing Docker images, didn't follow the standard process of creating a rootfs but made a small innovation: it introduced the concept of layers into image design. Each operation a user performs to build an image generates a layer, which is an incremental rootfs. This idea didn't come out of thin air; it builds on a capability called the Union File System (UnionFS), whose main function is to union mount multiple directories from different locations into a single directory.
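The idea of a union mount can be illustrated without any kernel support. The following sketch is a user-space model, not a real UnionFS: `union_view` is a hypothetical function that walks ordinary directories ordered bottom to top and records, for each relative path, the topmost layer that provides it. The layer names and file contents in `demo` are made up for illustration.

```python
import os
import tempfile


def union_view(layers: list[str]) -> dict[str, str]:
    """Map each visible relative path to the layer directory that provides it.

    `layers` is ordered bottom to top; a file in an upper layer hides a
    file with the same relative path in any lower layer.
    """
    view = {}
    for layer in layers:  # bottom first, top last
        for dirpath, _dirs, files in os.walk(layer):
            for name in files:
                rel = os.path.relpath(os.path.join(dirpath, name), layer)
                view[rel] = layer  # upper layers overwrite lower ones
    return view


def demo() -> dict[str, str]:
    """Build two throwaway layers and return {relative path: content}."""
    with tempfile.TemporaryDirectory() as tmp:
        base = os.path.join(tmp, "base")
        extra = os.path.join(tmp, "extra")
        os.makedirs(os.path.join(base, "etc"))
        os.makedirs(os.path.join(extra, "etc"))
        files = {
            (base, "etc/hostname"): "base-host",
            (base, "etc/issue"): "Ubuntu",
            (extra, "etc/hostname"): "app-host",  # overrides the base copy
            (extra, "app.py"): "print('hi')",
        }
        for (layer, rel), content in files.items():
            with open(os.path.join(layer, rel), "w", encoding="utf-8") as f:
                f.write(content)
        merged = {}
        for rel, layer in union_view([base, extra]).items():
            with open(os.path.join(layer, rel), encoding="utf-8") as f:
                merged[rel] = f.read()
        return merged
```

The merged view contains every file from both directories, with the upper layer's `etc/hostname` taking precedence, which is the behavior each incremental image layer relies on.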

Layering in Containers

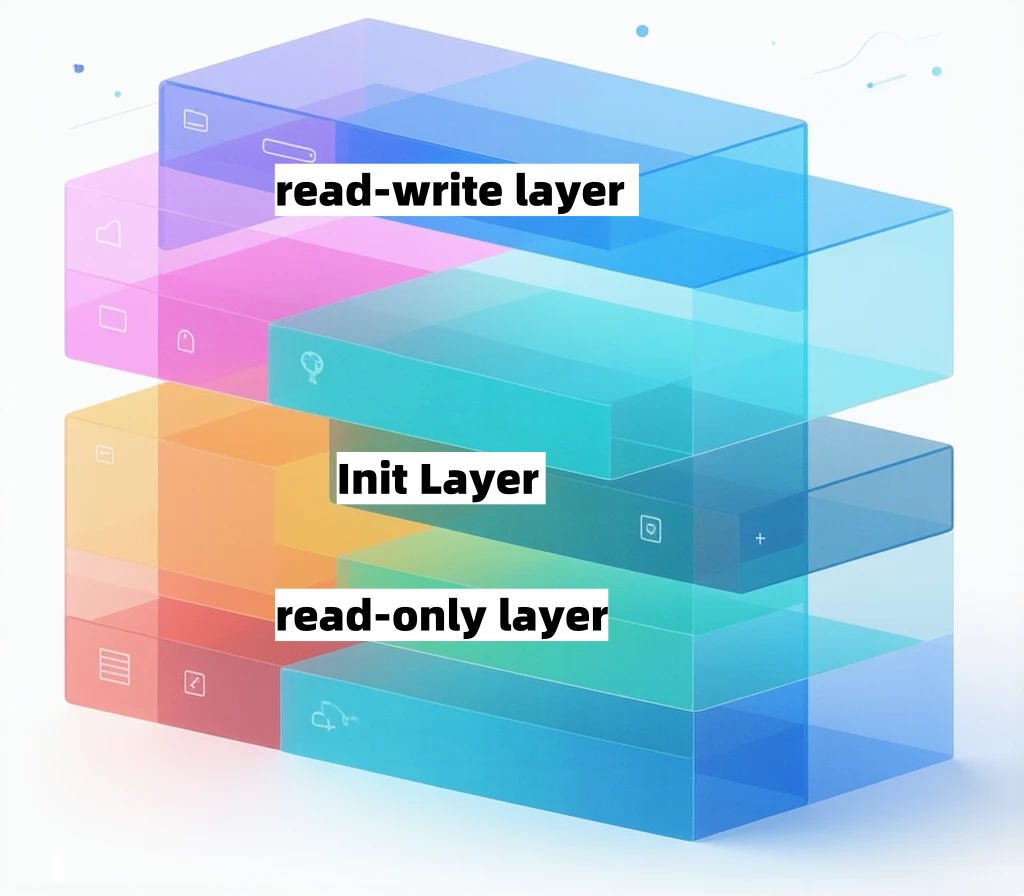

Part 1: Read-Only Layers. These are the bottom five layers of this container's rootfs, corresponding to the five layers of the ubuntu:latest image. They are mounted as read-only (ro+wh, i.e., readonly+whiteout). Each layer incrementally contains part of the Ubuntu operating system.

Part 2: Read-Write Layer. This is the top layer of this container's rootfs (6e3be5d2ecccae7cc), mounted as rw, or read-write. Before any files are written, this directory is empty. Once a write operation is performed within the container, the modifications appear incrementally in this layer. But have you considered what happens if you want to delete a file that lives in a read-only layer? To achieve this deletion, AuFS, the union file system implementation in use here, creates a whiteout file in the read-write layer to "obscure" the file in the read-only layer. For instance, deleting a file named foo from the read-only layer actually creates a file named .wh.foo in the read-write layer. When the layers are union mounted, foo is obscured by .wh.foo and "disappears." This is what the "ro+wh" mount mode signifies: read-only plus whiteout.
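The whiteout mechanism can be modeled with plain dictionaries. The class below is a toy, assuming a flat namespace with no directories and no whiteouts inside the read-only layers; `LayeredFS` and its method names are hypothetical. It shows that a delete never touches the read-only data and only adds a `.wh.` marker to the read-write layer.

```python
WHITEOUT_PREFIX = ".wh."


class LayeredFS:
    """Toy model: read-only layers (bottom to top) plus one read-write layer."""

    def __init__(self, ro_layers: list[dict[str, str]]):
        self.ro_layers = ro_layers
        self.rw: dict[str, str] = {}  # empty until the first write

    def listing(self) -> list[str]:
        """Return the file names visible through the union of all layers."""
        names = set()
        for layer in self.ro_layers:
            names.update(layer)
        hidden = set()
        for name in self.rw:
            if name.startswith(WHITEOUT_PREFIX):
                hidden.add(name[len(WHITEOUT_PREFIX):])
            else:
                names.add(name)
        return sorted(names - hidden)

    def read(self, name: str) -> str:
        if name not in self.listing():
            raise FileNotFoundError(name)
        if name in self.rw:
            return self.rw[name]
        for layer in reversed(self.ro_layers):  # topmost read-only layer wins
            if name in layer:
                return layer[name]
        raise FileNotFoundError(name)

    def write(self, name: str, data: str) -> None:
        self.rw.pop(WHITEOUT_PREFIX + name, None)  # file exists again
        self.rw[name] = data                       # changes land in rw only

    def delete(self, name: str) -> None:
        if name not in self.listing():
            raise FileNotFoundError(name)
        self.rw.pop(name, None)
        if any(name in layer for layer in self.ro_layers):
            self.rw[WHITEOUT_PREFIX + name] = ""   # obscure the read-only copy
```

After `fs.delete("foo")` on a file that exists only in a read-only layer, `fs.rw` holds a single `.wh.foo` entry and `foo` no longer appears in `fs.listing()`, while the read-only layer is unchanged.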

Part 3: Init Layer. This is an internal layer generated by the Docker project, ending with "-init," sandwiched between the read-only and read-write layers. The Init layer is specifically used to store information like /etc/hosts and /etc/resolv.conf. The need for such a layer arises because these files originally belong to the read-only Ubuntu image but often require specific values, such as the hostname, to be written at container startup. Hence, modifications are needed in the read-write layer. However, these modifications typically only apply to the current container and are not intended to be committed with the read-write layer when executing docker commit. Therefore, Docker's approach is to mount these modified files in a separate layer. When users execute docker commit, only the read-write layer is committed, excluding this content.
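The relationship between the three kinds of layers and docker commit, as described above, can be sketched with dictionaries standing in for layers. This is a simplified model of the description in this article, not Docker's actual implementation; the function names and the sample file contents are hypothetical.

```python
def container_view(image_layers, init_layer, rw_layer):
    """Merge layers bottom to top: image layers, then init, then read-write."""
    merged = {}
    for layer in [*image_layers, init_layer, rw_layer]:
        merged.update(layer)  # later (upper) layers take precedence
    return merged


def commit(image_layers, init_layer, rw_layer):
    """Return the layer stack of the new image: old layers plus the rw layer.

    The init layer is deliberately left out, so per-container values such
    as the hostname do not end up in the committed image.
    """
    return [*image_layers, dict(rw_layer)]


# Sample data: placeholder contents, chosen only for illustration.
image = [{"/etc/hosts": "template", "/bin/sh": "shell"}]
init = {"/etc/hosts": "entry for this container only"}
rw = {"/app/main.py": "application code"}

running = container_view(image, init, rw)
new_image = commit(image, init, rw)
new_image_view = container_view(new_image, {}, {})
```

Here `running["/etc/hosts"]` is the container-specific value, while `new_image_view["/etc/hosts"]` is still the template from the original image, and the application file written in the read-write layer is part of the new image.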

Advantages of Layered Design in Container Images

Through the "layered image" design, with Docker images at its core, engineers from different companies and teams are closely connected. Moreover, since operations on container images are incremental, the content pulled or pushed each time is much smaller than a complete operating system, and shared layers mean that the total space required for a set of images is less than the sum of their individual sizes. This makes collaboration based on container images far more agile than collaboration based on virtual machine disk images, which can be several GBs in size.

More importantly, once an image is published, downloading it anywhere in the world yields exactly the same content, fully reproducing the original environment created by the image maker.
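The space saving from shared layers is easy to quantify. The sketch below assumes layers are identified by a SHA-256 digest of their content, which is how identical layers can be recognized and stored once; `layer_digest` and `storage_needed` are hypothetical helpers, and the byte strings stand in for real layer archives.

```python
import hashlib


def layer_digest(content: bytes) -> str:
    """Identify a layer by the SHA-256 digest of its content."""
    return "sha256:" + hashlib.sha256(content).hexdigest()


def storage_needed(images: dict[str, list[bytes]]) -> tuple[int, int]:
    """Return (naive_total, deduplicated_total) in bytes.

    naive_total counts every layer of every image; deduplicated_total
    counts each distinct layer once, as a layer-sharing store would.
    """
    naive = sum(len(layer) for layers in images.values() for layer in layers)
    unique = {}
    for layers in images.values():
        for layer in layers:
            unique[layer_digest(layer)] = len(layer)
    return naive, sum(unique.values())


# Two made-up images that share the same 1000-byte base layer.
base = b"u" * 1000
images = {
    "web": [base, b"nginx" * 10],
    "api": [base, b"flask" * 10],
}
naive_total, shared_total = storage_needed(images)
```

For these two images, `naive_total` is 2100 bytes and `shared_total` is 1100 bytes, because the base layer is stored only once. The same digest check is also what lets a client confirm that a downloaded layer is byte-for-byte identical to the published one.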

The Impact of Container Images on Software Development Workflows

The invention of container images not only bridges every step of the "development - testing - deployment" process but also signals that container images will become the mainstream method of software distribution. This method of distribution is lightweight, highly consistent, and easy to collaborate around, which makes software development and deployment more efficient and reliable. For these reasons, container technology is increasingly becoming an essential tool in software development and operations, and as it continues to evolve there is reason to believe it will play an even more significant role in the future.
