Stable Diffusion Image to Image Mastery: Expert Guide

Master Stable Diffusion image-to-image techniques with our expert guide. Enhance your skills and knowledge in this cutting-edge field.

Introduction

Generative AI is an exciting field in machine learning that focuses on creating new content. Generative models can produce audio, images, text, and video from given inputs, which gives them significant potential for enterprise businesses.

One of the most powerful applications of generative AI is Stable Diffusion, which has a wide range of uses. In this blog post, we will explore the Stable Diffusion image-to-image pipeline, which generates a new image from an existing image and a text prompt. We will also provide a step-by-step guide on how to use the image-to-image functionality of Stable Diffusion. By the end of this blog post, you will have a thorough understanding of Stable Diffusion and its applications for image generation.

What is Stable Diffusion?

Stable Diffusion is a generative AI model that creates detailed images from text descriptions.

The Concepts of Stable Diffusion

Stable Diffusion is a deep learning model that uses a diffusion process to generate high-quality images from text descriptions. It operates in a latent space, a compressed representation of the image. During training, images are gradually corrupted by adding noise and the model learns to reverse that corruption; at generation time, it starts from noise and removes it step by step to produce a new image. It can be used for a wide range of applications, including text-to-image generation, inpainting (regenerating or filling in selected regions of an existing image), outpainting (extending an existing image beyond its original borders), and image-to-image translation guided by a text prompt.
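The noising half of this process can be sketched in a few lines. The example below is a toy illustration, not Stable Diffusion's actual code: it uses a flat list of numbers in place of a real latent tensor, and the linear schedule with its default values (`beta_start`, `beta_end`) is an assumption borrowed from common DDPM-style setups. All function names are hypothetical.

```python
import math
import random


def linear_beta_schedule(num_steps, beta_start=1e-4, beta_end=0.02):
    """Per-step noise variances that grow linearly (assumed DDPM-style defaults)."""
    if num_steps == 1:
        return [beta_start]
    step = (beta_end - beta_start) / (num_steps - 1)
    return [beta_start + i * step for i in range(num_steps)]


def cumulative_alphas(betas):
    """alpha_bar[t] = product of (1 - beta) up to step t: the share of signal kept."""
    alpha_bars, running = [], 1.0
    for beta in betas:
        running *= 1.0 - beta
        alpha_bars.append(running)
    return alpha_bars


def add_noise(latent, t, alpha_bars, rng):
    """Jump to step t: x_t = sqrt(alpha_bar_t) * x_0 + sqrt(1 - alpha_bar_t) * noise."""
    keep = math.sqrt(alpha_bars[t])
    spread = math.sqrt(1.0 - alpha_bars[t])
    return [keep * x + spread * rng.gauss(0.0, 1.0) for x in latent]


# Toy "latent" with four values; a real latent is a multi-channel tensor.
alpha_bars = cumulative_alphas(linear_beta_schedule(1000))
rng = random.Random(0)
for t in (0, 499, 999):
    noisy = add_noise([1.0, -1.0, 0.5, 0.0], t, alpha_bars, rng)
    print(t, round(alpha_bars[t], 5), [round(v, 2) for v in noisy])
```

As `t` grows, `alpha_bars[t]` shrinks toward zero, so less of the original signal survives and the values look more like pure noise. Generation runs this in the opposite direction, with a trained network predicting the noise to remove at each step.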

The Importance of Stable Diffusion

Stable Diffusion has significant implications for machine learning and computer vision applications because it can create high-quality images from text descriptions. Its iterative denoising process produces detailed images, and such images can be used, for example, as synthetic training data for tasks like image segmentation, which can help make computer vision models more accurate and robust. Moreover, compared with traditional computer vision techniques, deep learning models like Stable Diffusion can learn complex patterns and generate realistic images.

What is image-to-image?

Image-to-image (img2img for short) is the process of generating a new AI image from an input image and a text prompt, often to enhance or alter the input's visual appearance. The output image follows the color and composition of the input image, so these are the parts of the input that matter most.
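For readers who prefer code to a web UI, the same idea can be sketched with the open-source Hugging Face `diffusers` library. This is a minimal sketch under several assumptions, not the method used by any particular hosted service: it assumes `torch`, `diffusers`, and `Pillow` are installed, the `model_id` default is only an example checkpoint name, and the 512×512 resize is a simplification. The `strength` parameter is part of the `diffusers` pipeline and controls how far the output may drift from the input image.

```python
def effective_steps(num_inference_steps, strength):
    """Denoising steps that actually run for a given strength.

    Mirrors how the diffusers img2img pipeline shortens the schedule:
    lower strength means fewer steps and an output closer to the input.
    """
    if not 0.0 <= strength <= 1.0:
        raise ValueError("strength must be between 0 and 1")
    return min(int(num_inference_steps * strength), num_inference_steps)


def run_img2img(init_image_path, prompt, negative_prompt="", strength=0.75,
                guidance_scale=7.5, num_inference_steps=40, seed=0,
                model_id="stable-diffusion-v1-5/stable-diffusion-v1-5"):
    """Generate one image from an input image and a prompt (illustrative sketch)."""
    # Heavy dependencies are imported lazily so the helper above works without them.
    import torch
    from diffusers import StableDiffusionImg2ImgPipeline
    from PIL import Image

    device = "cuda" if torch.cuda.is_available() else "cpu"
    dtype = torch.float16 if device == "cuda" else torch.float32
    pipe = StableDiffusionImg2ImgPipeline.from_pretrained(model_id, torch_dtype=dtype)
    pipe = pipe.to(device)

    # The input image supplies the color and composition the output will follow.
    init_image = Image.open(init_image_path).convert("RGB").resize((512, 512))
    generator = torch.Generator(device=device).manual_seed(seed)

    result = pipe(
        prompt=prompt,
        negative_prompt=negative_prompt,
        image=init_image,
        strength=strength,
        guidance_scale=guidance_scale,
        num_inference_steps=num_inference_steps,
        generator=generator,
    )
    return result.images[0]


# Hypothetical usage (file names are placeholders):
# image = run_img2img("input.png", "a watercolor painting of a lighthouse")
# image.save("output.png")
```

A low `strength` keeps the result close to the input image, while a value near 1 lets the prompt dominate. With 40 steps and a strength of 0.5, for instance, only 20 denoising steps are actually run.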

Step-by-step guide to image-to-image in Stable Diffusion

Follow the steps below to get hands-on experience with image-to-image in Stable Diffusion.

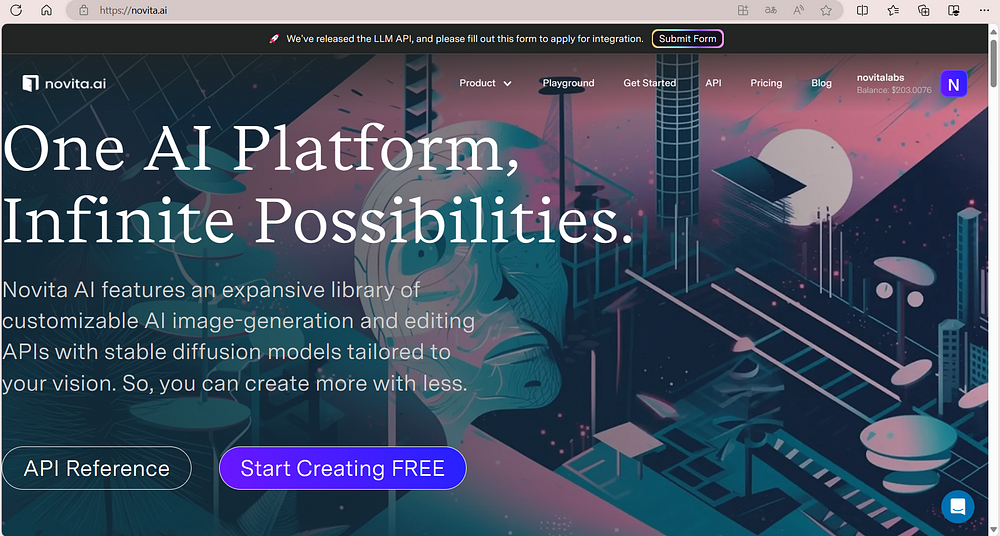

Step1: Go to novita.ai and log in

You will land on this page.

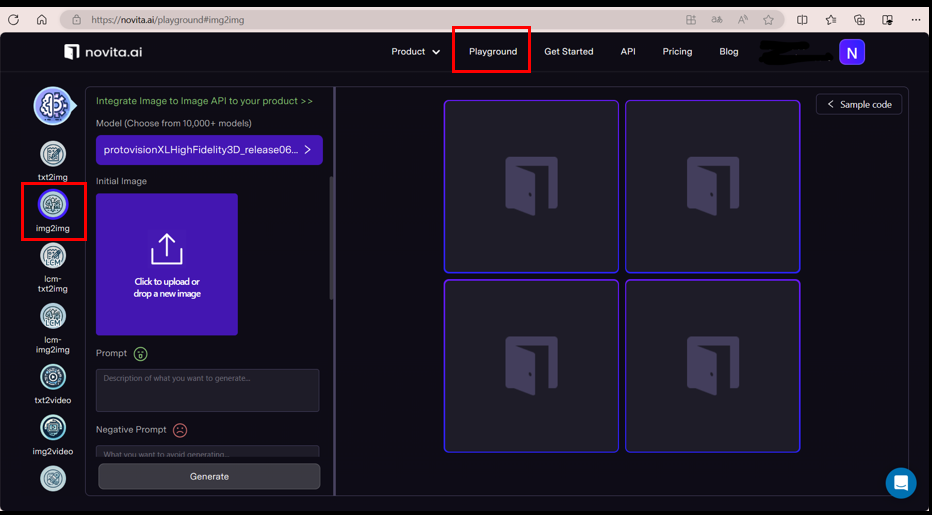

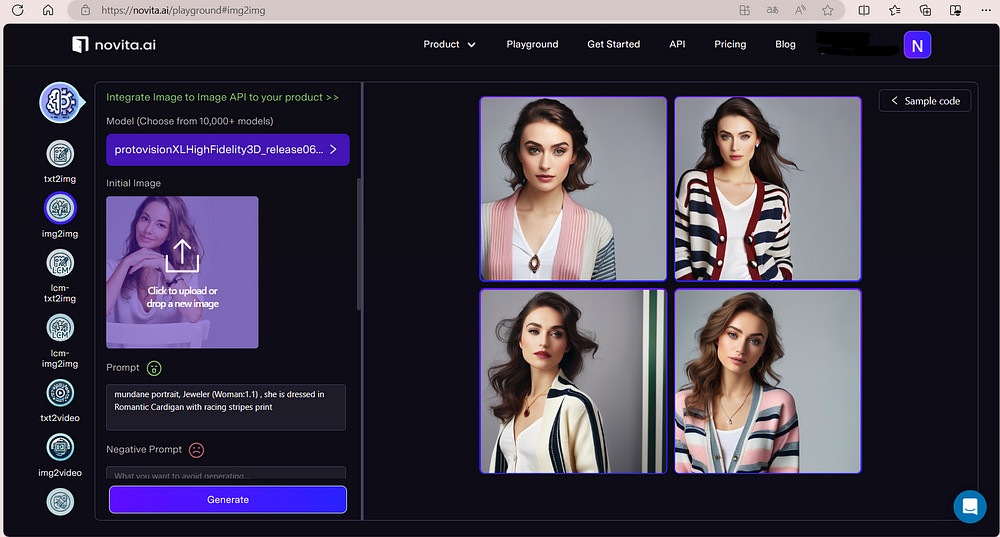

Step2: Find “img2img”

Click the “Playground” button, then select “img2img”.

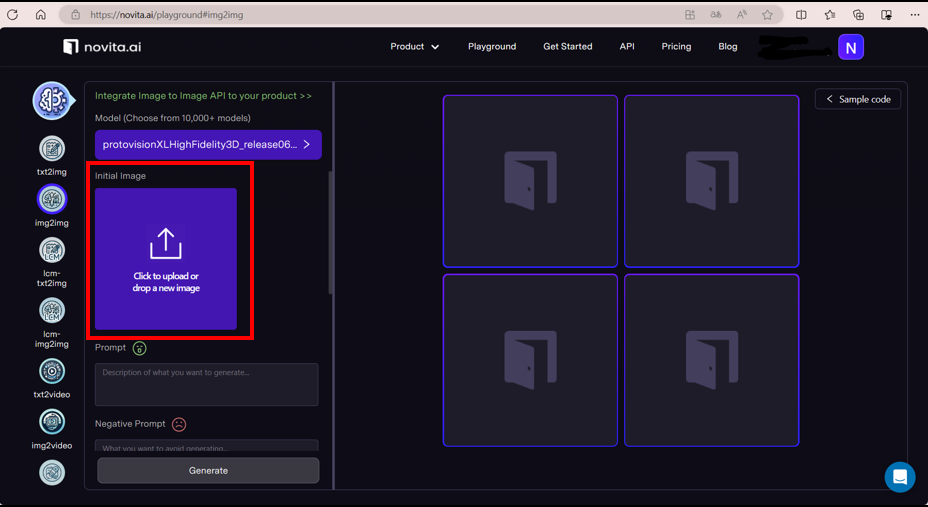

Step3: Upload the initial image

Step4: Set the parameters

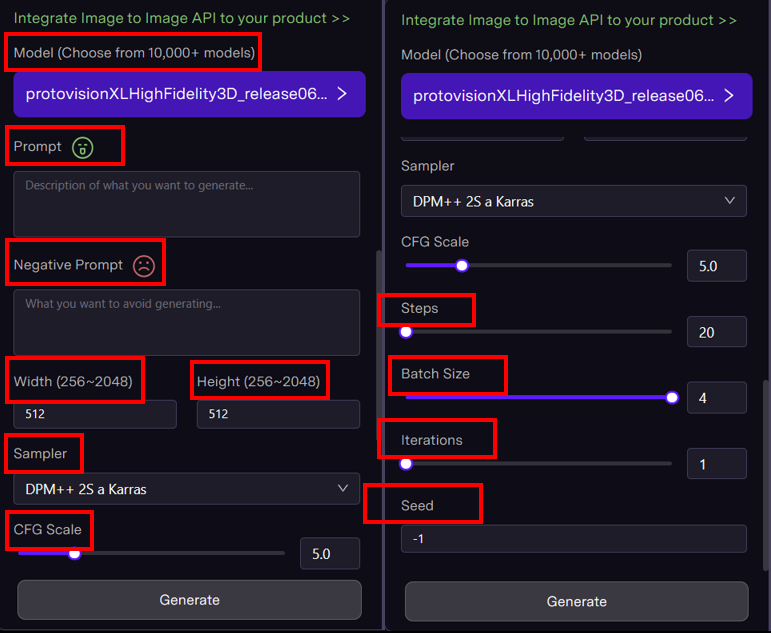

Setting the parameters well is crucial for getting good results from Stable Diffusion. Each parameter can be adjusted to suit your specific requirements.

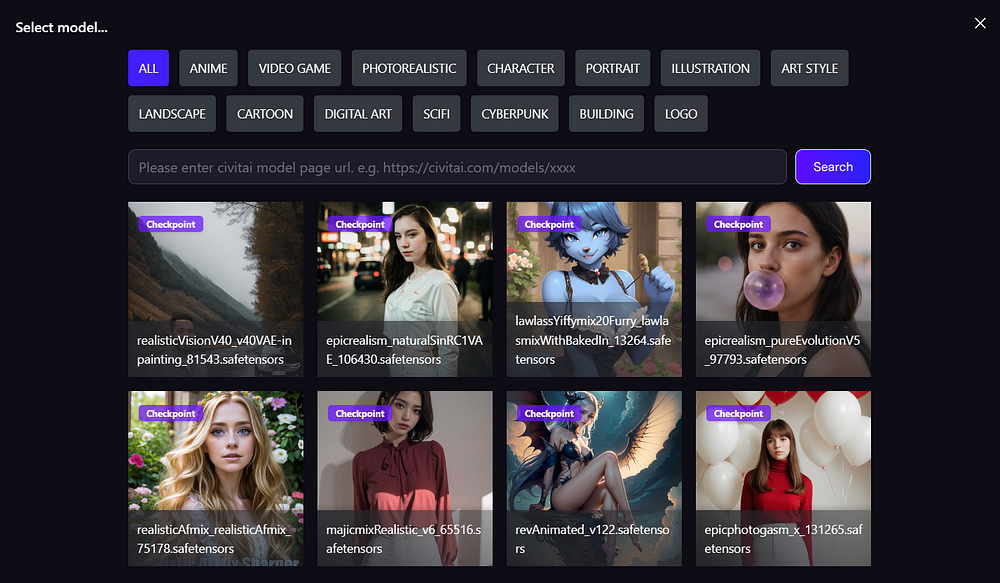

- Model: different models produce different styles, so choose a model according to your needs.

- Prompt: description of what you want to generate.

- Negative Prompt: description of what you want to avoid generating.

- Width & Height: the resolution of the generated picture.

- Sampler: the algorithm that carries out the denoising steps. Different samplers produce different results and can differ in speed.

- CFG Scale: the classifier-free guidance scale controls how much the image generation process follows the text prompt. The higher the value, the more the image sticks to a given text input.

- Steps: the number of denoising steps. More steps generally improve quality, but the gains level off and generation takes longer, so you shouldn’t set steps as high as possible.

- Batch Size

- Iterations

- Seed: a number used to initialize the generation. You don’t need to come up with the number yourself, but reusing the same seed with the same settings lets you reproduce a result.
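The parameters above can be collected into a single settings object and checked before a request is sent. The sketch below is illustrative only: the class name, field names, default values, and validation ranges are assumptions rather than novita.ai's actual API. It also assumes that iterations means the number of batches to run, and that a negative seed means "pick one at random". The multiple-of-8 check reflects the fact that Stable Diffusion's latent space is 8 times smaller than the image in each dimension.

```python
import random
from dataclasses import dataclass


@dataclass
class Img2ImgSettings:
    """Hypothetical container for the parameters listed above."""
    prompt: str
    negative_prompt: str = ""
    model: str = "example-model"  # placeholder name, not a real checkpoint
    width: int = 512
    height: int = 512
    sampler: str = "Euler a"
    cfg_scale: float = 7.0
    steps: int = 20
    batch_size: int = 1
    iterations: int = 1
    seed: int = -1  # assumed convention: negative means "choose randomly"

    def validate(self):
        """Return a list of human-readable problems (empty if all is well)."""
        problems = []
        if not self.prompt.strip():
            problems.append("prompt is empty")
        for name in ("width", "height"):
            value = getattr(self, name)
            if value <= 0 or value % 8 != 0:
                problems.append(f"{name} must be a positive multiple of 8")
        if self.cfg_scale <= 0:
            problems.append("cfg_scale must be positive")
        for name in ("steps", "batch_size", "iterations"):
            if getattr(self, name) < 1:
                problems.append(f"{name} must be at least 1")
        return problems

    def total_images(self):
        """Images produced overall, assuming iterations counts batches."""
        return self.batch_size * self.iterations

    def resolve_seed(self, rng=None):
        """Return the fixed seed, or draw a random 32-bit one if seed is negative."""
        if self.seed >= 0:
            return self.seed
        rng = rng or random.Random()
        return rng.randrange(2 ** 32)


settings = Img2ImgSettings(prompt="a watercolor lighthouse", batch_size=2, iterations=3)
print(settings.validate(), settings.total_images(), settings.resolve_seed())
```

Recording the resolved seed alongside the other settings makes it possible to regenerate the same image later, or to change one parameter at a time and compare the results.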

Step5: Generate and download

Advanced Techniques in Stable Diffusion

Advanced techniques in Stable Diffusion can produce higher-quality images.

Utilizing Negative Prompts for Better Results

A negative prompt lists attributes you do not want, such as “low quality” or “bad proportions”. The model is not retrained on this text; instead, at each denoising step the generation is steered away from whatever the negative prompt describes. This refines the image generation process by pushing the output toward the desired outcome, which often leads to higher-quality results.
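One common way this steering is implemented is classifier-free guidance, the same mechanism behind the CFG Scale parameter. The sketch below shows the arithmetic on plain lists of numbers; the function name is hypothetical, and the lists stand in for the noise predictions a real model would produce for the prompt and for the negative prompt.

```python
def guided_noise(prompt_pred, negative_pred, cfg_scale):
    """Combine two noise predictions with classifier-free guidance.

    result = negative + cfg_scale * (prompt - negative)

    The result starts at the negative-prompt prediction and moves toward,
    and with cfg_scale > 1 past, the prompt prediction, which pushes the
    image away from whatever the negative prompt describes.
    """
    if len(prompt_pred) != len(negative_pred):
        raise ValueError("predictions must have the same length")
    return [n + cfg_scale * (p - n) for p, n in zip(prompt_pred, negative_pred)]


# Toy predictions: in practice these are tensors from the denoising network.
prompt_pred = [1.0, 2.0]
negative_pred = [0.0, 1.0]
for scale in (0.0, 1.0, 7.5):
    print(scale, guided_noise(prompt_pred, negative_pred, scale))
```

With a scale of 1 the result equals the prompt prediction and the negative prompt has no effect; larger values exaggerate the difference between the two, which is why a high CFG Scale makes the image follow the prompt more closely. When no negative prompt is given, implementations typically use the prediction for an empty prompt in its place.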

Frequently Asked Questions

As Stable Diffusion becomes more widely used, some questions come up again and again. A few of them are answered below.

What are some tips for creating effective prompts for Stable Diffusion?

Creating effective prompts for Stable Diffusion involves providing clear and concise descriptions of the desired image. Use specific and descriptive language, for example by naming the subject, the style, and the lighting you want. Experiment with different prompts to explore what the model can do and to unleash your creativity.

What are the advantages of using Stable Diffusion for image editing?

Stable Diffusion can improve the stability and quality of image results, generate high-quality images from low-quality sources, enhance specific features in an image, work for static and dynamic images, and save time and effort. It can be a valuable tool for enhancing images, but it should be used cautiously and in combination with other techniques for optimal results.

Conclusion

In conclusion, understanding Stable Diffusion and its applications is crucial for enhancing image quality and achieving desired results. By following a step-by-step guide like the one provided, users can efficiently utilize image-to-image techniques in Stable Diffusion for various tasks.

novita.ai provides a Stable Diffusion API and hundreds of fast, low-cost AI image generation APIs for 10,000 models. 🎯 Generation in as little as 2s, pay-as-you-go pricing from $0.0015 per standard image, and the option to add your own models while avoiding GPU maintenance. Open-source extensions are free to share.
