Simplifying LLM API Integration for Developers

Key Highlights

- LLM APIs are changing how software processes language, enabling tasks like automatic content creation, sentiment analysis, and code writing.

- Combining LLM APIs with other tools can enhance the user experience. Benefits include personalized answers, improved utility, and fewer errors.

- Integration follows a step-by-step process: set up a development environment, then work through the provider's integration steps.

- Attention to details such as cost management and operational reliability is vital for a successful integration.

- Novita AI, an AI API platform featuring various LLMs, offers an LLM API service. With the platform, developers can integrate LLMs more reliably, scalably, quickly, and cheaply.

Introduction

Built on deep learning algorithms, LLMs excel in understanding human language. By leveraging LLMs, developers can make applications smarter and more user-friendly. These tools enable real-time content generation, sentiment analysis, and multilingual capabilities.

This blog explores how to incorporate LLM APIs into projects effectively. It covers what LLMs are, how they are built, and the benefits of integrating them, then outlines the steps for integrating an LLM API into a project, from selecting the right one to sending your first requests. Finally, it addresses common challenges and how to overcome them.

Understanding LLM API

LLMs use deep learning algorithms trained on text data to perform natural language tasks. Because they have learned the patterns of human language use, they can produce relevant responses. Developers integrate LLM APIs into their applications by following the API documentation, which describes the endpoints, request and response formats, and authentication method. This brings natural language processing features to an application without building a complex AI system from scratch.

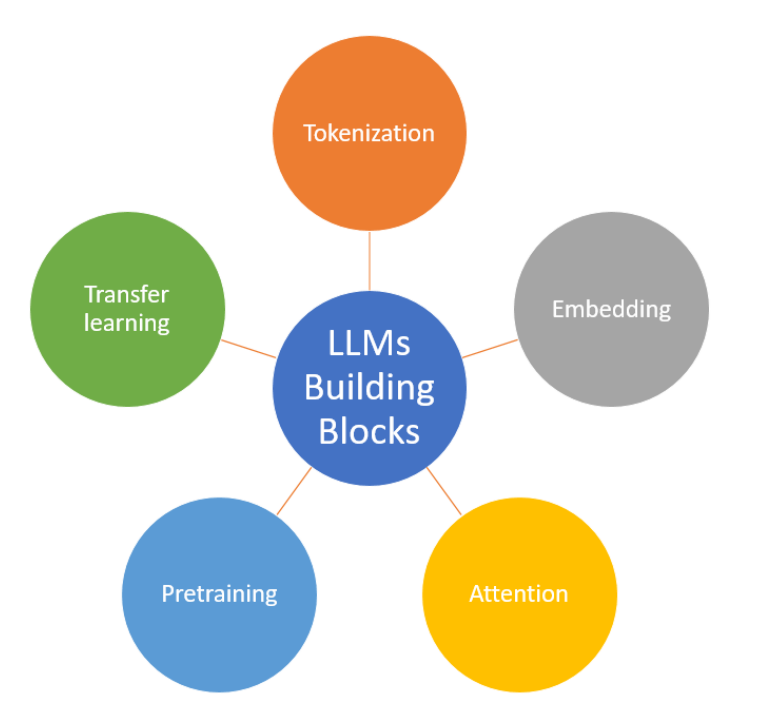

The Basics of LLMs

LLMs focus on natural language. Unlike traditional approaches built on hand-written rules, LLMs learn from examples in data, picking up on how words naturally relate to one another. Trained on vast amounts of text, they identify patterns that let them generate relevant content. LLMs excel at translation, summarization, and producing natural-sounding text, which makes them well suited to chatbots, content creation tools, and sentiment analysis programs.

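LLMs consist of stacked neural network layers. Most modern LLMs use the transformer architecture, which combines embedding, attention, and feedforward layers; recurrent layers appeared in earlier language models but are not part of the standard transformer. The embedding layer converts the input text (split into tokens) into numerical vectors called embeddings, attention layers weigh how relevant each token is to the others, and feedforward layers transform each token's representation before the model produces its output.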
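To make the attention step less abstract, here is a toy, pure-Python version of scaled dot-product attention for a single query. The three 2-dimensional vectors are made-up numbers standing in for token embeddings; real models use learned projections, many heads, and thousands of dimensions, so treat this only as an illustration of the core computation.

```python
import math

def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attention(query, keys, values):
    """Scaled dot-product attention for one query vector.

    query: list[float] of length d
    keys, values: one vector per input token
    Returns (output_vector, attention_weights).
    """
    d = len(query)
    # Similarity of the query to each key, scaled by sqrt(d)
    scores = [sum(q * k for q, k in zip(query, key)) / math.sqrt(d) for key in keys]
    weights = softmax(scores)
    # Output: attention-weighted average of the value vectors
    dim = len(values[0])
    output = [sum(w * v[i] for w, v in zip(weights, values)) for i in range(dim)]
    return output, weights

# Toy "embeddings" for three tokens (illustrative numbers only)
keys = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
values = keys
out, w = attention([1.0, 0.0], keys, values)
print([round(x, 3) for x in w])
```

Tokens whose keys point in the same direction as the query receive larger weights, so they contribute more to the output.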

What is LLM API?

LLM APIs are Application Programming Interfaces that act as channels between software systems and LLMs. Hosted LLM APIs are built to handle a large volume of simultaneous requests, which makes them scalable across many business applications. This keeps operations and user experiences smooth during peak times, allowing businesses to expand services and serve more customers without sacrificing performance or reliability.
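Even when the provider scales on its side, a client sending many requests usually caps how many are in flight at once so it stays within rate limits. A minimal sketch of that pattern with `asyncio.Semaphore` is shown below; `fake_llm_call` is a hypothetical stand-in for a real HTTP request, and the limit of 3 is an arbitrary example value.

```python
import asyncio

async def fake_llm_call(prompt: str) -> str:
    """Hypothetical stand-in for a real LLM API request."""
    await asyncio.sleep(0.01)  # simulate network latency
    return f"response to: {prompt}"

async def run_all(prompts, max_concurrency=4):
    """Send all prompts, keeping at most `max_concurrency` requests in flight."""
    sem = asyncio.Semaphore(max_concurrency)
    in_flight = 0
    peak = 0  # highest number of simultaneous requests observed

    async def worker(prompt):
        nonlocal in_flight, peak
        async with sem:
            in_flight += 1
            peak = max(peak, in_flight)
            try:
                return await fake_llm_call(prompt)
            finally:
                in_flight -= 1

    # gather() returns results in the same order as the input prompts
    results = await asyncio.gather(*(worker(p) for p in prompts))
    return results, peak

results, peak = asyncio.run(run_all([f"q{i}" for i in range(10)], max_concurrency=3))
print(peak, results[:2])
```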

Benefits of LLM API

- Valuable insights: LLMs assist enterprises in uncovering hidden patterns for creating innovative products and marketing campaigns.

- Increased efficiency and productivity: LLMs assist companies in automating mundane tasks like writing emails, analyzing data, and generating reports.

- Enhanced customer interactions: Chatbots and virtual assistants built on LLMs respond instantly, which can significantly improve client retention and growth.

- Lower risks: LLM APIs can help companies mitigate legal, regulatory, and compliance risks by detecting fraud and suspicious activity.

- Lower costs: Automating tasks with LLMs helps businesses save money and reduce staffing needs, making them more competitive and cost-efficient.

Best Practices for LLM API Integration

Enterprises can efficiently integrate LLM APIs into their systems by implementing a modular integration strategy, which helps reduce complexity, lower risk, and simplify maintenance and updates. This method establishes a solid groundwork for enhancing business value with AI-driven language processing.

Error Handling and Debugging

Tracking errors effectively helps in understanding and rectifying issues promptly. Developers can use tools like logs or code analysis to identify and resolve discrepancies efficiently.
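One common way to combine logging with error handling is to retry transient failures (timeouts, rate limits, server errors) with exponential backoff and log every attempt. The sketch below assumes you map such failures to a hypothetical `TransientError` exception; the `sleep` parameter is injectable so the logic can be tested without actually waiting.

```python
import logging
import random
import time

logging.basicConfig(level=logging.INFO)
log = logging.getLogger("llm_client")

class TransientError(Exception):
    """Hypothetical error type for failures worth retrying (timeouts, 429s, 5xx)."""

def call_with_retries(fn, max_attempts=4, base_delay=0.5, sleep=time.sleep):
    """Call fn(); on TransientError, log and retry with exponential backoff plus jitter."""
    for attempt in range(1, max_attempts + 1):
        try:
            return fn()
        except TransientError as exc:
            if attempt == max_attempts:
                log.error("giving up after %d attempts: %s", attempt, exc)
                raise
            # 0.5s, 1s, 2s, ... plus random jitter to avoid synchronized retries
            delay = base_delay * (2 ** (attempt - 1)) + random.uniform(0, base_delay)
            log.warning("attempt %d failed (%s); retrying in %.2fs", attempt, exc, delay)
            sleep(delay)

# Usage: a fake call that fails twice, then succeeds
calls = {"n": 0}

def flaky():
    calls["n"] += 1
    if calls["n"] < 3:
        raise TransientError("simulated timeout")
    return "ok"

result = call_with_retries(flaky, sleep=lambda s: None)  # skip real waiting here
print(result)
```

Errors that retrying cannot fix, such as an invalid API key, should be raised immediately rather than wrapped in `TransientError`.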

Optimizing API Calls

Efficient API calls are vital for a good LLM API integration. Batching related requests boosts efficiency by fetching all the needed data in one go, and caching frequently requested results avoids repeating identical API calls, which improves performance and lowers cost. Overall, efficient execution comes from managing resources carefully and optimizing how data is retrieved.
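A minimal in-memory cache keyed on the full request payload is sketched below. It assumes requests are plain dicts (model, messages, parameters), as in OpenAI-style chat APIs; `fake_call` and `"example-model"` are hypothetical placeholders. Caching is most useful for deterministic requests (for example `temperature` 0), since sampled outputs are expected to vary between calls.

```python
import hashlib
import json

class ResponseCache:
    """In-memory cache keyed on the full request payload."""

    def __init__(self):
        self._store = {}
        self.hits = 0
        self.misses = 0

    @staticmethod
    def _key(payload: dict) -> str:
        # sort_keys makes logically identical payloads serialize identically
        canonical = json.dumps(payload, sort_keys=True)
        return hashlib.sha256(canonical.encode("utf-8")).hexdigest()

    def get_or_call(self, payload: dict, call):
        """Return a cached result, or call the API and cache its result."""
        key = self._key(payload)
        if key in self._store:
            self.hits += 1
            return self._store[key]
        self.misses += 1
        result = call(payload)
        self._store[key] = result
        return result

# Hypothetical API stand-in that echoes the last user message
def fake_call(p):
    return f"answer for {p['messages'][-1]['content']}"

cache = ResponseCache()
payload = {
    "model": "example-model",
    "temperature": 0,
    "messages": [{"role": "user", "content": "What is an LLM?"}],
}
first = cache.get_or_call(payload, fake_call)
second = cache.get_or_call(dict(payload), fake_call)  # same content, served from cache
print(cache.hits, cache.misses)
```

In production you would typically add an expiry time and a size limit, or use a shared store so several application instances can reuse the same cache.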

Integrating with CRM Systems

Integrating LLM APIs into CRM (Customer Relationship Management) systems enhances customer support and data management. LLMs provide quick and accurate information, enabling human agents to focus on complex issues. They also analyze customer data to personalize interactions, identify trends, and offer recommendations.
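In practice, personalization usually means putting relevant CRM fields into the prompt. The sketch below turns a customer record into OpenAI-style chat messages; the field names (`name`, `plan`, `recent_tickets`) and the system instructions are hypothetical and should be adapted to your CRM's schema and support policy.

```python
def build_support_messages(customer: dict, question: str) -> list:
    """Turn a CRM record (hypothetical field names) into chat messages."""
    tickets = ", ".join(customer.get("recent_tickets", [])) or "none"
    profile = (
        f"Customer name: {customer.get('name', 'unknown')}\n"
        f"Plan: {customer.get('plan', 'unknown')}\n"
        f"Recent tickets: {tickets}"
    )
    return [
        {
            "role": "system",
            "content": (
                "You are a support assistant. Use the customer profile to "
                "personalize answers and hand billing disputes to a human agent."
            ),
        },
        {"role": "user", "content": f"{profile}\n\nQuestion: {question}"},
    ]

record = {"name": "Ada", "plan": "Pro", "recent_tickets": ["login issue", "invoice copy"]}
messages = build_support_messages(record, "How do I add a teammate?")
print(messages[1]["content"])
```

Send only the fields the model actually needs; this keeps prompts short and limits how much customer data leaves your systems.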

Integrating with CMS Systems

Integrating LLM APIs with a CMS (content management system) enhances content creation and website management by automating drafting and supporting SEO. LLMs simplify generating high-quality articles, blog posts, and social media updates. They can also adapt writing styles, analyze user behaviour, and offer personalized recommendations.

Preparing for LLM API Integration

Before integrating LLM APIs, confirm that your systems are compatible and that you have the necessary cloud resources. Understand your requirements, your existing codebase (for example, Python code), and your system's capabilities. Obtain API keys for authentication, familiarize yourself with the API documentation, and set up resources correctly before integration.
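A common habit when handling API keys is to read them from an environment variable instead of hard-coding them in source files. The helper below is a minimal sketch; the variable name `NOVITA_API_KEY` is a hypothetical choice, not a name required by Novita AI.

```python
import os

def load_api_key(var_name: str = "NOVITA_API_KEY") -> str:
    """Read an API key from an environment variable (variable name is hypothetical)."""
    key = os.environ.get(var_name, "").strip()
    if not key:
        # Fail early with a clear message instead of sending unauthenticated requests
        raise RuntimeError(
            f"Environment variable {var_name} is not set; export your API key first."
        )
    return key
```

For example, run `export NOVITA_API_KEY=...` in your shell before starting the application, and keep the key out of version control.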

Prerequisites for Integration

Before using LLM APIs in your tools and apps, developers must grasp software development basics. Here are some prerequisites.

- Defining the project’s scope

- Documenting current procedures

- Reviewing service-level agreements

- Reviewing relevant corporate documents

- Mapping systems and their connections

- Understanding data flow

Choosing the Right LLM API for Your Project

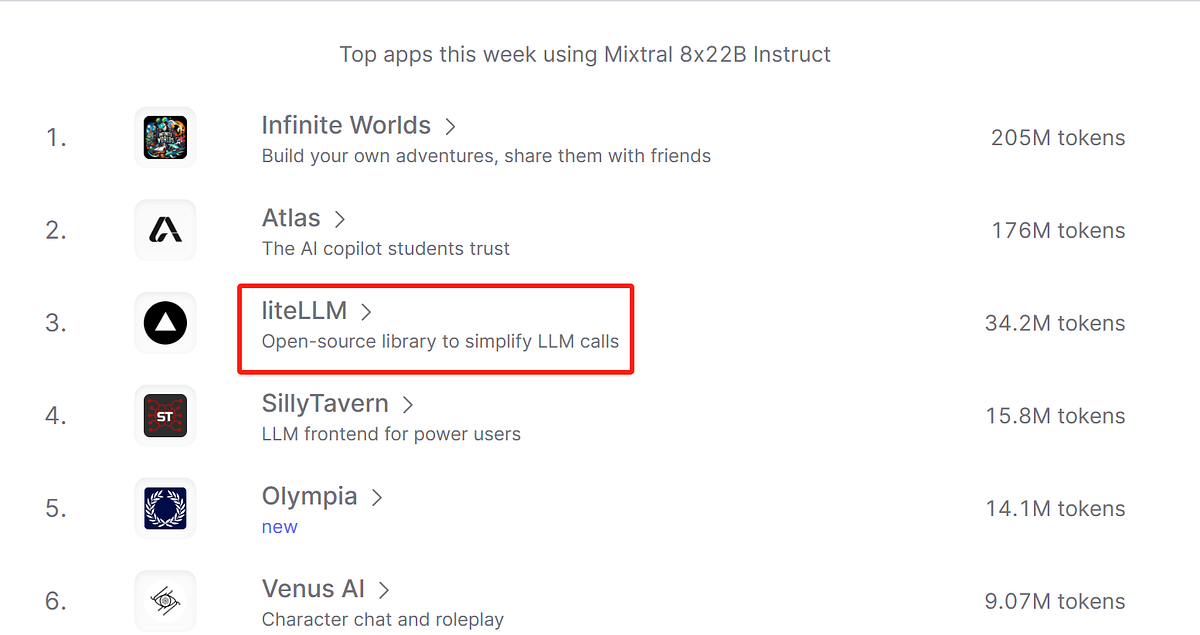

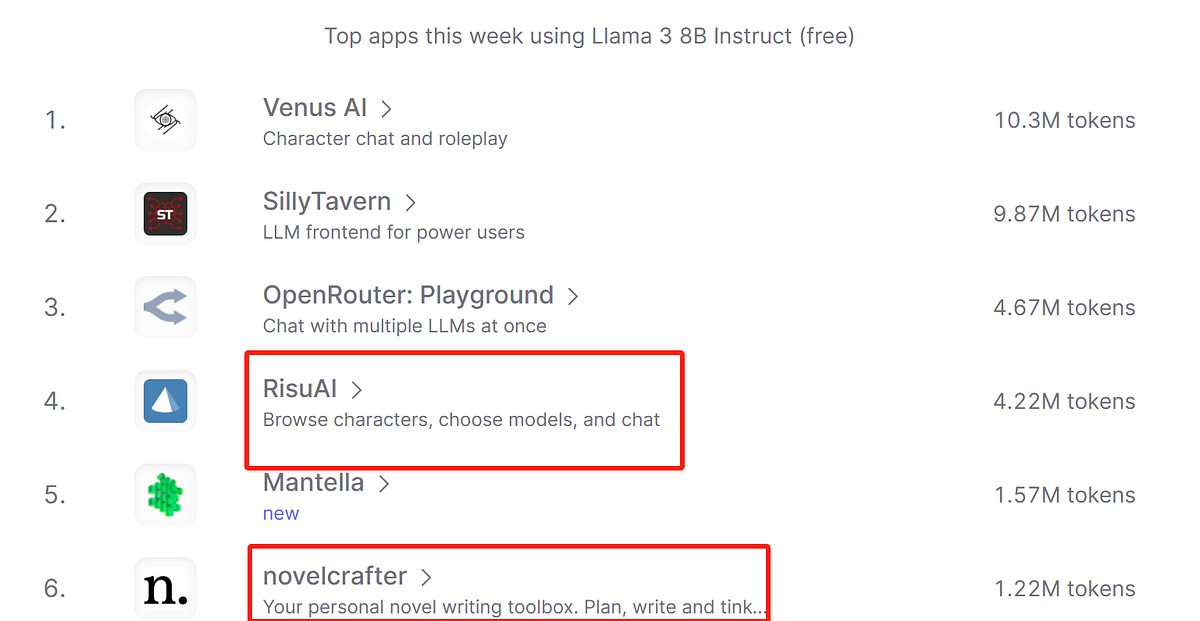

Choosing the right LLM API is vital for the success of language-focused projects. Consider your project's needs, evaluate features, and identify the capabilities you cannot do without. Compare LLM APIs on performance, accuracy, scalability, documentation quality, and support services. Popular options such as OpenAI's GPT models (used in ChatGPT), Llama 3, Mistral 7B Instruct, and Mixtral 8x22B cater to diverse language requirements. Among them, Llama 3, Mistral 7B Instruct, and Mixtral 8x22B are available through Novita AI.

Step-by-Step Guide to Novita AI LLM API Integration

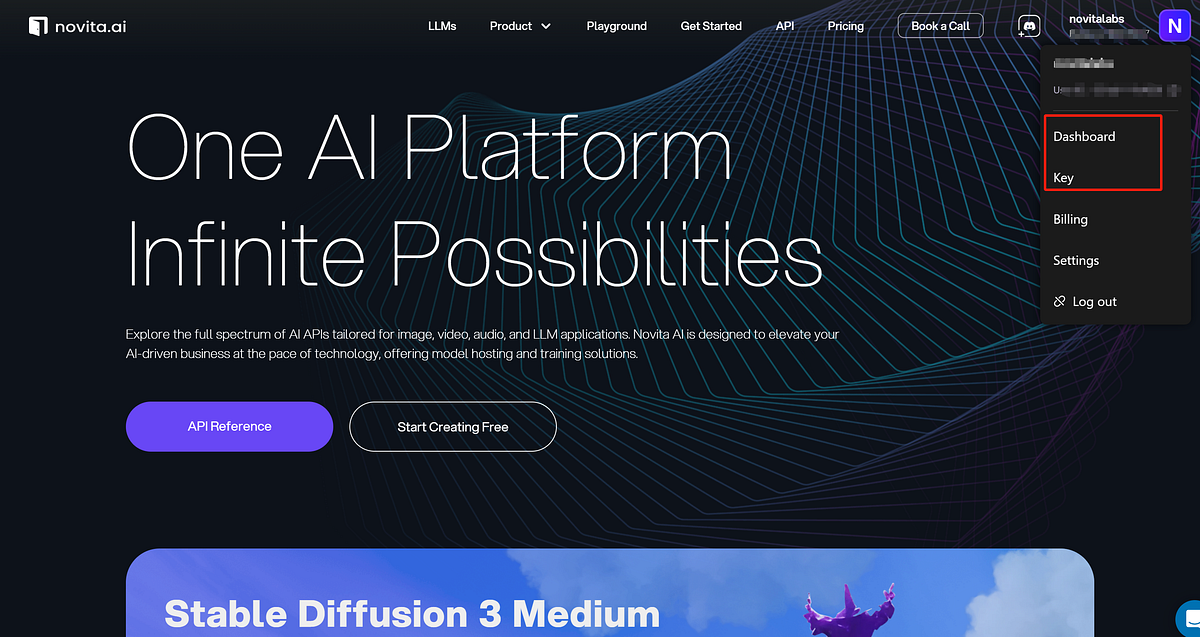

As mentioned before, Novita AI provides APIs for developers to develop and improve AI tools, including LLM APIs. Let’s look at a simple guide.

- Step 1: Visit Novita AI and create an account.

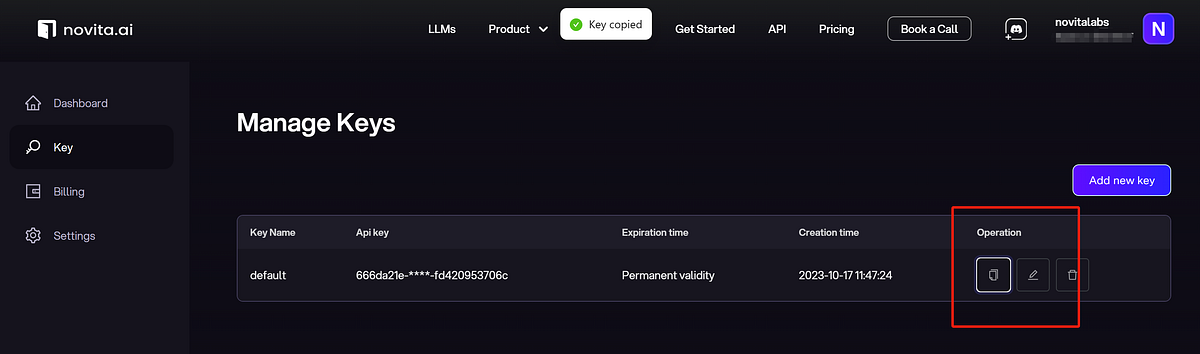

- Step 2: Create an API key from Novita AI under the “Dashboard” tab.

- Step 3: After entering the “Manage keys” page, you can click copy to obtain your key for authentication and authorization.

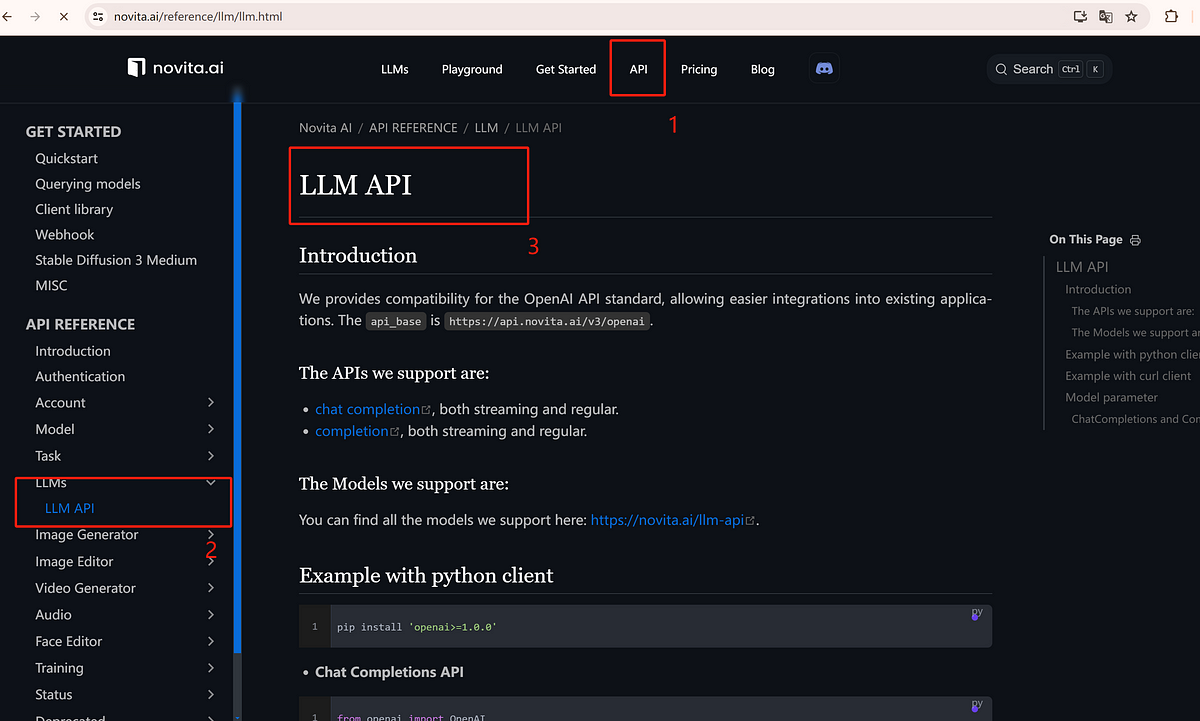

- Step 4: Proceed to the API section and locate the “LLM” within the “LLMs” tab. Study the API documentation to understand how to interact with the system, including sending requests and handling responses.

- Step 5: Set up your development environment by installing required components like Python and recommended frameworks.

- Step 6: Install and import the required libraries in your development environment.

For JavaScript users, you can install the JavaScript client library with npm.

```bash
npm i novita-sdk
```

For Python users, you can install the Python client library with pip.

```bash
pip install novita-client
```

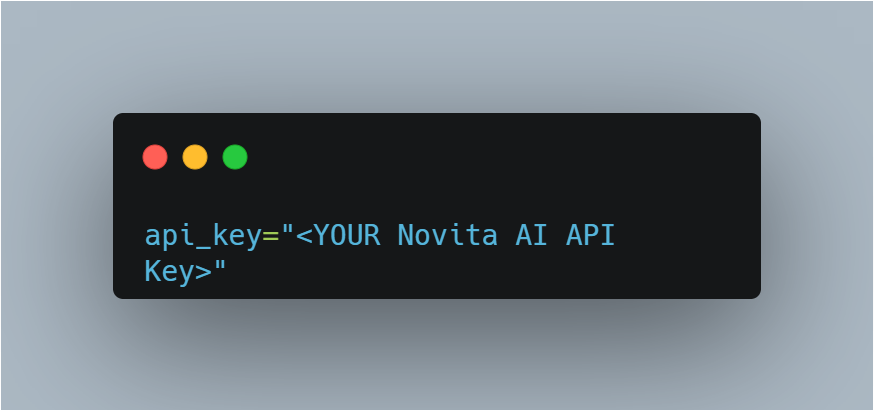

- Step 7: Initialize the client with your API key to start interacting with the Novita AI LLM, for example:

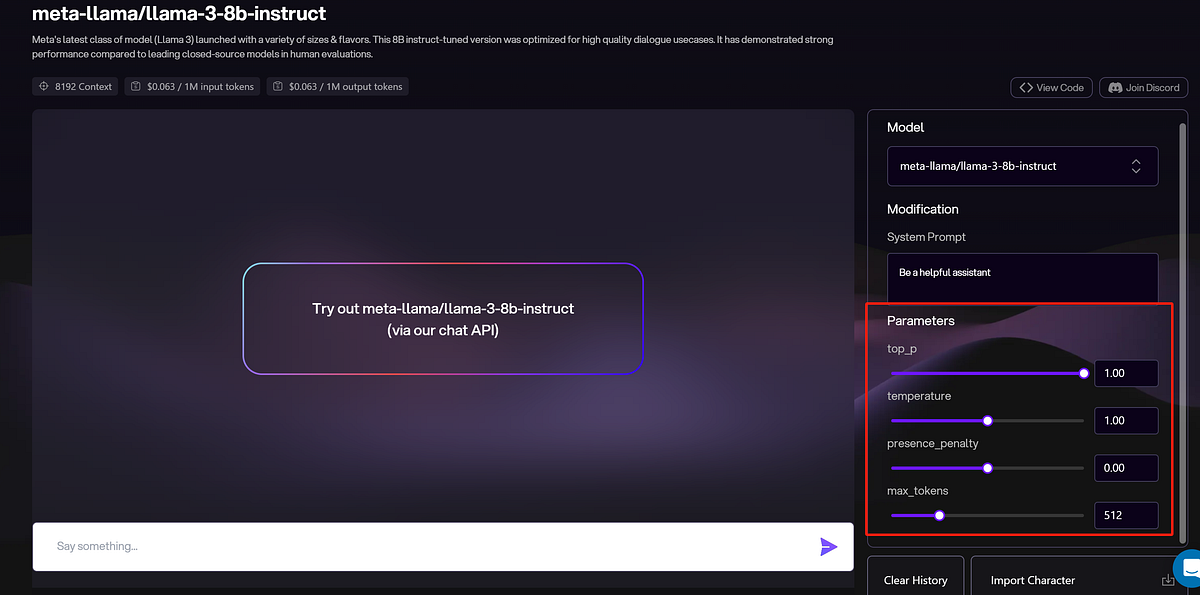

- Step 8: Make requests using the details provided in the API Reference for proper communication. You can further adjust parameters like max_tokens and temperature.

- Step 9: Test the models and extract relevant information from responses for your application’s use.

Sample Chat Completions API
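The following sketch uses only the Python standard library and does not use the `novita-client` SDK. It assumes Novita AI exposes an OpenAI-compatible chat completions endpoint under the base URL shown; please confirm the URL, model IDs, and response format in the API Reference before relying on it. The environment variable name and the model ID are illustrative placeholders.

```python
import json
import os
import urllib.request

# Assumption: OpenAI-compatible base URL; verify it in Novita AI's API Reference.
BASE_URL = "https://api.novita.ai/v3/openai"

def build_request(api_key, model, messages, max_tokens=512, temperature=0.7):
    """Build (but do not send) a POST request to the chat completions endpoint."""
    body = {
        "model": model,
        "messages": messages,
        "max_tokens": max_tokens,    # upper bound on generated tokens
        "temperature": temperature,  # higher values give more varied output
    }
    return urllib.request.Request(
        url=f"{BASE_URL}/chat/completions",
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )

def extract_reply(response: dict) -> str:
    """Pull the assistant's text out of an OpenAI-style response body."""
    return response["choices"][0]["message"]["content"]

def chat(api_key, model, messages, **params):
    """Send the request and return the reply text."""
    req = build_request(api_key, model, messages, **params)
    with urllib.request.urlopen(req, timeout=60) as resp:
        return extract_reply(json.load(resp))

# Only call the live API when a key is configured (hypothetical variable name).
if __name__ == "__main__" and os.environ.get("NOVITA_API_KEY"):
    reply = chat(
        os.environ["NOVITA_API_KEY"],
        "meta-llama/llama-3-8b-instruct",  # example model ID; check the model list
        [{"role": "user", "content": "Explain what an LLM API is in one sentence."}],
        max_tokens=100,
        temperature=0.5,
    )
    print(reply)
```

Separating request building from sending keeps the payload logic testable without network access, and `urlopen` raises `urllib.error.HTTPError` for non-2xx responses, which you can route into your error handling.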

Troubleshooting Common LLM API Integration Issues

Fixing common connection issues with LLM API is crucial for smooth operations. Here’s how to address typical problems.

Identifying and Solving Integration Errors

To ensure smooth LLM API integration, developers should carefully review API documentation to ensure the correct setup and necessary details in their calls. Analyzing error logs for clues and testing various scenarios can help pinpoint and resolve errors effectively, ensuring reliable integrations.
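When reading error logs, the HTTP status code is often the quickest clue. The helper below maps common codes to likely causes using general HTTP conventions; it is a sketch, not Novita AI's documented error table, so check the provider's error reference for exact meanings.

```python
def diagnose_http_error(status: int) -> str:
    """Map an HTTP status code to a likely cause (general HTTP conventions)."""
    if status == 400:
        return "bad request: check the payload (model ID, message format, parameters)"
    if status in (401, 403):
        return "auth problem: check that the API key is set, valid, and sent as a Bearer token"
    if status == 404:
        return "not found: check the endpoint URL and model ID"
    if status == 429:
        return "rate limited or quota exceeded: slow down and retry with backoff"
    if 500 <= status < 600:
        return "server-side error: retry later"
    return "unexpected status: inspect the response body and logs"

def is_retryable(status: int) -> bool:
    """Rate limits and server errors are usually worth retrying; client errors are not."""
    return status == 429 or 500 <= status < 600

for code in (400, 401, 429, 503):
    print(code, is_retryable(code), diagnose_http_error(code))
```

Testing scenarios deliberately (a wrong key, an unknown model ID, an oversized prompt) and checking that each produces the expected diagnosis is a cheap way to confirm the integration fails clearly.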

Performance Tuning for Scalability

Making sure your LLM API integration scales smoothly is crucial. Performance tuning improves efficiency through smarter API calls, caching, and breaking large tasks into smaller ones. Monitoring response times and resource consumption helps identify areas for improvement.

Measuring Success in LLM API Integration

Key Metrics to Monitor

- Response Time: Keep latency low so the application feels responsive.

- Error Rate: Monitor and address errors promptly for optimal performance.

- Cost Analysis: Evaluate the financial impact of using the API to avoid overspending.
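These metrics can be collected with a small in-process recorder, sketched below. It records latency, success or failure, and token counts per request and reports error rate, median latency, and 95th-percentile latency. The class name is hypothetical; many teams export the same numbers to an existing monitoring system instead.

```python
import statistics

class CallMetrics:
    """Accumulates per-request latency, success/failure, and token usage."""

    def __init__(self):
        self.latencies = []  # seconds, one entry per request
        self.errors = 0
        self.prompt_tokens = 0
        self.completion_tokens = 0

    def record(self, latency_s, ok, prompt_tokens=0, completion_tokens=0):
        self.latencies.append(latency_s)
        if not ok:
            self.errors += 1
        self.prompt_tokens += prompt_tokens
        self.completion_tokens += completion_tokens

    def summary(self):
        n = len(self.latencies)
        if n == 0:
            return {"requests": 0}
        # 19 cut points for n=20; the last is the 95th percentile.
        # method="inclusive" keeps the estimate within the observed range.
        if n >= 2:
            p95 = statistics.quantiles(self.latencies, n=20, method="inclusive")[-1]
        else:
            p95 = self.latencies[0]
        return {
            "requests": n,
            "error_rate": self.errors / n,
            "median_latency_s": statistics.median(self.latencies),
            "p95_latency_s": p95,
            "prompt_tokens": self.prompt_tokens,
            "completion_tokens": self.completion_tokens,
        }

metrics = CallMetrics()
metrics.record(0.4, ok=True, prompt_tokens=120, completion_tokens=80)
metrics.record(0.6, ok=True, prompt_tokens=100, completion_tokens=60)
metrics.record(1.0, ok=False)
metrics.record(0.2, ok=True, prompt_tokens=90, completion_tokens=40)
report = metrics.summary()
print(report)
```

The token totals feed directly into the cost analysis, since LLM APIs are usually billed per token.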

Leveraging Analytics for Continuous Improvement

- Use user feedback analysis to identify areas for improvement in LLM API integration.

- Monitor key metrics like response times and errors using performance analytics.

- Analyze usage data to understand user behaviour and preferences for future enhancements.

Conclusion

Mastering LLM API integration helps developers improve project efficiency and accuracy. Start by understanding the basics, selecting the right API, following best practices, and addressing issues as they arise. Applying advanced techniques and monitoring key metrics further optimizes the integration, which streamlines operations, improves user experiences, and supports project scalability. Continuous learning about new technologies is essential for a successful LLM API integration strategy.

Frequently Asked Questions

What Are the Costs Associated with LLM API Integration?

Costs depend on the provider and on usage. Input prices typically range from about $0.10 to over $1 per 1M tokens. Output prices (per 1M tokens) are often in the same range but are sometimes higher.
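Because pricing is per 1M tokens, a usage estimate is simple arithmetic: tokens divided by 1,000,000, multiplied by the price, summed separately for input and output. The traffic figures and the prices of $0.20 (input) and $0.40 (output) per 1M tokens below are illustrative assumptions, not any provider's actual rates. With them, 10,000 requests per day at about 800 input and 200 output tokens each cost 8 × $0.20 + 2 × $0.40 = $2.40 per day.

```python
def estimate_cost(prompt_tokens, completion_tokens, input_price_per_m, output_price_per_m):
    """Estimated cost in dollars, given prices per 1M tokens."""
    input_cost = (prompt_tokens / 1_000_000) * input_price_per_m
    output_cost = (completion_tokens / 1_000_000) * output_price_per_m
    return input_cost + output_cost

# Hypothetical traffic: 10,000 requests/day, ~800 input and ~200 output tokens each.
requests_per_day = 10_000
daily = estimate_cost(
    prompt_tokens=requests_per_day * 800,
    completion_tokens=requests_per_day * 200,
    input_price_per_m=0.20,   # illustrative price, not a real quote
    output_price_per_m=0.40,  # illustrative price, not a real quote
)
print(f"${daily:.2f} per day")
```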

How to Choose Between Different LLM APIs Based on Project Needs?

Consider how well each API fits your use cases, such as chatbots, content creation, sentiment analysis, or language translation. Then evaluate ease of integration, scalability, customization options, technical support, and pricing.

Are There Any Common Challenges that Developers May Face when Integrating LLM APIs?

Yes. A common one is the limited context window: each model accepts only a limited amount of input per request. Many tasks need more information than that, particularly when working with multiple documents, each several pages long.
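A common workaround is to split long documents into overlapping chunks, process each chunk in its own request (for example, summarize it), and then combine the partial results; retrieving only the most relevant chunks is another option. The sketch below splits by words as a rough stand-in for tokens; a real integration should count tokens with the tokenizer that matches the chosen model, and the default sizes here are arbitrary.

```python
def chunk_words(text, max_words=300, overlap=50):
    """Split text into overlapping windows of at most `max_words` words.

    Word count is only a rough proxy for tokens.
    """
    if overlap >= max_words:
        raise ValueError("overlap must be smaller than max_words")
    words = text.split()
    chunks = []
    step = max_words - overlap  # how far each window advances
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + max_words]))
        if start + max_words >= len(words):
            break  # the last window already reached the end of the text
    return chunks

sample = " ".join(f"w{i}" for i in range(10))
parts = chunk_words(sample, max_words=4, overlap=1)
print(parts)
```

The overlap repeats a little text at each boundary so that sentences split between chunks still appear whole in at least one of them.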

How does LLM API integration ensure security?

The API key ensures that only authorized users/applications can access the LLM API. You can also keep the API and library versions updated to fix known security issues.

Novita AI is the all-in-one cloud platform that empowers your AI ambitions. With seamlessly integrated APIs, serverless computing, and GPU acceleration, we provide the cost-effective tools you need to rapidly build and scale your AI-driven business. Eliminate infrastructure headaches and get started for free — Novita AI makes your AI dreams a reality.
