Serverless & Container Engine

Introduction

When talking about Serverless, Kubernetes is a topic that cannot be avoided.

In today’s lesson, we will continue learning how to set up a private Serverless environment. Specifically, we will build the Serverless infrastructure based on the local K8s deployment from the last lesson. Then, we will install additional components to extend the capabilities of the K8s cluster, ultimately enabling the local K8s cluster to support Serverless.

Prerequisites for Building Serverless

Before we start, let’s clarify a question that was raised during the foundational course on Serverless Computing, particularly FaaS. Some students asked, “What is the relationship between microservices, Service Mesh, and Serverless?”

These concepts frequently appear in discussions, but don’t feel pressured. I was also confused by these concepts when I first started learning about Serverless. Let’s first revisit microservices. When using microservices to create BaaS, you may have noticed that many concepts in microservices are quite similar to those in Serverless.

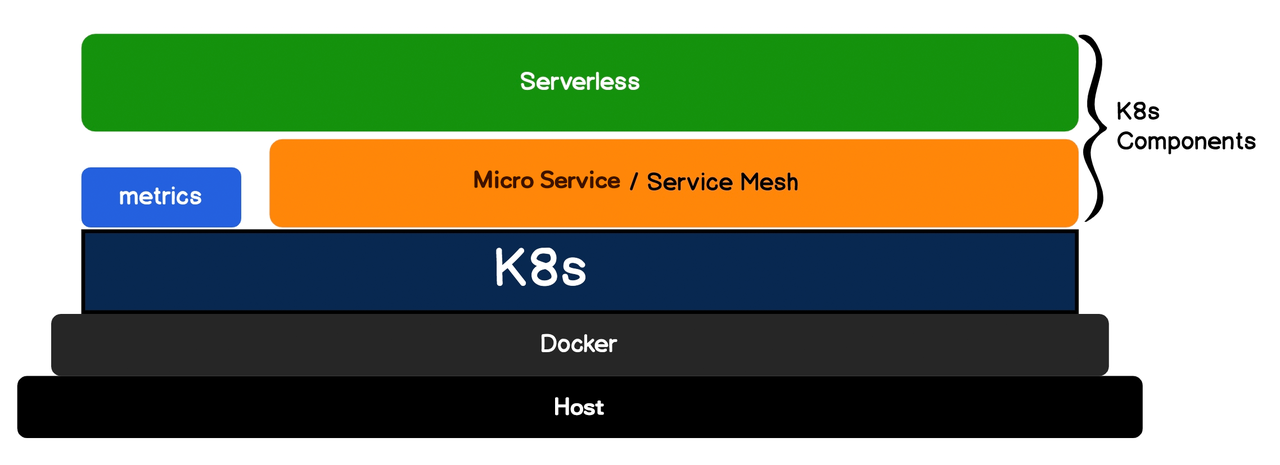

In simple terms, Service Mesh is a microservices network communication solution that operates without the microservices themselves being aware of it. We can also delegate the network communication in a Serverless architecture to a Service Mesh. Through Service Mesh, Serverless components can work closely with the K8s cluster, ultimately supporting our deployed applications in a Serverless manner. Let’s look at the following architecture diagram:

From the diagram, we can clearly see that the underlying infrastructure of Serverless can be built on Service Mesh. However, Service Mesh is only one of the solutions for implementing Serverless network communication. There are also other options like RSocket, gRPC, and Dubbo. I chose Service Mesh because this solution can be based on K8s components and provides visualization tools to help us understand the Serverless operating mechanism, such as how a service starts up from zero instances and how traffic is controlled for gradual releases. If you want to practice, Service Mesh is undoubtedly the first choice.
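To make "controlling traffic for gradual releases" concrete, here is a minimal sketch of a percentage-based traffic split between a stable version and a canary version. It only illustrates the idea. The names `pick_version` and `simulate` are hypothetical, and a real Service Mesh applies such weights inside its proxies rather than in application code.

```python
import random


def pick_version(canary_percent, rng):
    """Return "canary" for roughly canary_percent% of calls, else "stable"."""
    if not 0 <= canary_percent <= 100:
        raise ValueError("canary_percent must be between 0 and 100")
    # rng.random() is in [0, 1), so the scaled value is in [0, 100).
    return "canary" if rng.random() * 100 < canary_percent else "stable"


def simulate(requests, canary_percent, seed=0):
    """Route a number of simulated requests and count where they land."""
    rng = random.Random(seed)  # seeded so repeated runs give the same split
    counts = {"stable": 0, "canary": 0}
    for _ in range(requests):
        counts[pick_version(canary_percent, rng)] += 1
    return counts


if __name__ == "__main__":
    # Send about 10% of traffic to the new version.
    print(simulate(10_000, canary_percent=10))
```

Raising `canary_percent` step by step, while watching error rates, is the essence of a gradual release: the new version only receives all traffic once it has proven itself on a small share.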

Serverless Infrastructure: Service Mesh

Some people refer to Kubernetes, Service Mesh, and Serverless as the “three pillars” of cloud-native application development, and by now you can probably see why. I should point out, though, that the following lessons introduce many new terms. I only give a general overview of each so that you have a broad understanding; if you have time, it is worth delving deeper into them.

Now, let’s get back to the main topic and take a closer look at the principles of Service Mesh.

When we discussed microservices, we only covered the theoretical guidance on decomposition and integration, without touching on the specific implementation. When it comes to implementation, the industry has many microservice frameworks, but most of them are SDKs tied to a specific language, Java in particular.

SDK-based microservice frameworks usually devote much of their code to network communication between microservices, such as retrying failed service requests, load balancing across multiple service instances, and rate limiting when request volume is high. Microservice developers often have to pay attention to this logic, and it has to be reimplemented in the SDK for every language. So, is it possible to extract the network communication logic from the SDK, making our microservices lighter and even eliminating the need to worry about network communication?

The answer is Service Mesh.

If we deploy decomposed applications into K8s cluster Pods, the process is as shown in the diagram below, where the MyApp application will directly call the user microservice and the to-do task microservice within the cluster via HTTP.

But what about the security issues that arise from direct HTTP access? If someone starts a BusyBox container in our cluster, wouldn’t they be able to attack our user microservice and to-do task microservice directly? Also, when we have multiple instances of the user microservice, how do we allocate traffic?

Traditionally, we would use a microservice SDK. It contains much of this logic, along with many strategies that we must choose and enable whenever we call it, so our code becomes tightly coupled to the SDK.
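As a rough illustration of that coupling, the sketch below shows the kind of logic an SDK-style client carries: round-robin load balancing plus retries on failure. All names here (`SdkClient`, `ServiceUnavailable`, `flaky`, `healthy`) are hypothetical, and plain Python callables stand in for the HTTP endpoints of service instances.

```python
import itertools


class ServiceUnavailable(Exception):
    """Raised by an instance that cannot serve the request."""


class SdkClient:
    """Toy SDK client: round-robin load balancing with retries."""

    def __init__(self, instances, max_retries=2):
        self._cycle = itertools.cycle(instances)  # round-robin iterator
        self._max_retries = max_retries

    def call(self, request):
        last_error = None
        # One initial attempt plus up to max_retries retries,
        # each attempt going to the next instance in the rotation.
        for _ in range(self._max_retries + 1):
            instance = next(self._cycle)
            try:
                return instance(request)
            except ServiceUnavailable as exc:
                last_error = exc
        raise last_error


def flaky(request):
    # Stand-in for an instance that is currently down.
    raise ServiceUnavailable("instance is down")


def healthy(request):
    # Stand-in for a working user microservice instance.
    return f"user:{request}"


if __name__ == "__main__":
    client = SdkClient([flaky, healthy])
    print(client.call("42"))  # the retry moves on to the healthy instance
```

Every service written in another language would need its own copy of `SdkClient`, and every caller has to import and configure it, which is exactly the burden described above.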

Service Mesh, however, takes a different approach by extracting the network communication logic from the microservice and managing network traffic in a non-intrusive manner, allowing us to no longer worry about the heavy microservice SDK. Let’s see how Service Mesh solves this problem.

Service Mesh can be divided into a data plane and a control plane. The data plane is responsible for managing network communication, while the control plane controls and monitors the state of network communication. Service Mesh intercepts all network traffic by injecting a Sidecar.

In the data plane, our applications and microservices appear to communicate directly with each other, but in reality all of their traffic passes through the Sidecar, which intercepts it through traffic hijacking. Our applications and microservices are therefore generally unaware of the Sidecar and require no modifications; they simply keep using HTTP requests to transmit data.
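The following toy model may help picture the Sidecar's role. It is a simplified sketch, not how a real mesh is implemented: real sidecars intercept traffic at the network level rather than by wrapping a function, and the `Sidecar` class, the `allowed_callers` set, and the caller names are all assumptions made for illustration.

```python
class Sidecar:
    """Toy sidecar: sits in front of an unmodified application handler."""

    def __init__(self, app_handler, allowed_callers):
        self._app = app_handler
        self._allowed = set(allowed_callers)
        self.request_count = 0  # simple telemetry gathered by the sidecar

    def handle(self, caller, request):
        self.request_count += 1
        # The policy is enforced here, outside the application code.
        if caller not in self._allowed:
            return 403, "forbidden"
        return 200, self._app(request)


def user_service(request):
    # The application knows nothing about callers, policy, or metrics.
    return {"user": request}


if __name__ == "__main__":
    sidecar = Sidecar(user_service, allowed_callers={"myapp"})
    print(sidecar.handle("myapp", "42"))    # allowed caller reaches the app
    print(sidecar.handle("busybox", "42"))  # unknown caller is rejected
    print(sidecar.request_count)
```

Note that `user_service` contains no security or monitoring code. The BusyBox scenario from earlier is handled entirely by the layer in front of it, which is the non-intrusive property the text describes.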

The control plane is more complex and is the core of Service Mesh operations. The component names below come from Istio’s earlier architecture; newer Istio releases consolidate the control plane into a single component, istiod. Pilot is the driver of the entire control plane, responsible for service discovery, traffic management, and scaling. Citadel is the control plane’s guardian fortress, responsible for security certificates and authentication. Mixer is the communication officer, distributing the control plane’s policies and collecting the operational status of each service.

Now you should have a clear understanding of what Service Mesh is, how it extracts the microservice network communication logic from the SDK, and why we say that Service Mesh is the network communication foundation for Serverless.

The Relationship Between Serverless and Container Engines

The core value of Serverless lies in not having to manage servers, allowing a focus on business logic. Container technology provides the powerful infrastructure and flexible scheduling capabilities necessary for Serverless, especially when combined with orchestration tools like Kubernetes.

Containers offer advantages such as lightweight packaging, resource isolation, and rapid startup. Containers package applications and their dependencies, ensuring consistency in the runtime environment, which facilitates the rapid deployment and startup of application instances on a Serverless platform. Additionally, containers provide isolated runtime environments, ensuring that different applications do not interfere with each other, thereby improving the overall stability of the platform. Moreover, container startup is faster than that of virtual machines, meeting the high requirements of Serverless applications for elastic scaling.

As the core of Serverless scheduling, Kubernetes has functions such as automated deployment, elastic scaling, and service discovery. It can automatically deploy containerized applications based on preset rules without manual intervention, adjust the number of application instances according to real-time load, ensuring performance while optimizing resource utilization, and provide service discovery mechanisms to facilitate communication between components within the Serverless platform.
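To show what "adjusting the number of instances according to real-time load" can look like, here is a small sketch of the scaling rule documented for Kubernetes' Horizontal Pod Autoscaler: the desired replica count is the current count multiplied by the ratio of the observed metric to its target, rounded up. The function name, the default bounds, and the example numbers are illustrative assumptions; the real controller also handles details such as missing metrics and stabilization windows, which are omitted here.

```python
import math


def desired_replicas(current_replicas, current_metric, target_metric,
                     min_replicas=1, max_replicas=10, tolerance=0.1):
    """Compute a replica count from the ratio of observed to target load."""
    ratio = current_metric / target_metric
    # Skip scaling when the ratio is close enough to 1.0.
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas
    desired = math.ceil(current_replicas * ratio)
    # Keep the result within the configured bounds.
    return max(min_replicas, min(max_replicas, desired))


if __name__ == "__main__":
    # Two replicas at 200m CPU each with a 100m target: scale out.
    print(desired_replicas(2, current_metric=200, target_metric=100))
    # Four replicas at half the target load: scale in.
    print(desired_replicas(4, current_metric=50, target_metric=100))
```

The `min_replicas` bound matters for Serverless: with a lower bound of 1 there is always at least one running instance, so scaling all the way down to zero needs extra components on top of this rule.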

To build a container-based Serverless platform, first package applications and their dependencies into container images, so that the applications can be quickly deployed and run on the platform. Then, choose an appropriate cloud platform or set up a self-hosted Kubernetes cluster as the infrastructure for the Serverless platform. Next, develop the Serverless platform components: an API gateway that receives and routes external requests, a function runtime that loads and runs function code and manages function lifecycles, and event triggers that listen to various event sources and convert events into function calls. Finally, use a Kubernetes Deployment to manage the deployment and updating of application instances, a Service for service discovery and load balancing, and the Horizontal Pod Autoscaler (HPA) for automatic elastic scaling.
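The three platform components named above can be sketched in a few lines to show how they relate to each other. This is an in-memory toy under stated assumptions, not a production design: the class names `FunctionRuntime`, `ApiGateway`, and `EventTrigger`, the route, and the `hello` function are all hypothetical, and a real platform would run each function in its own container.

```python
from typing import Any, Callable, Dict, Tuple

Handler = Callable[[dict], Any]


class FunctionRuntime:
    """Loads functions by name and runs them on demand."""

    def __init__(self) -> None:
        self._functions: Dict[str, Handler] = {}
        self.invocations: Dict[str, int] = {}

    def register(self, name: str, handler: Handler) -> None:
        self._functions[name] = handler
        self.invocations[name] = 0

    def invoke(self, name: str, event: dict) -> Any:
        self.invocations[name] += 1
        return self._functions[name](event)


class ApiGateway:
    """Receives external requests and routes them to functions."""

    def __init__(self, runtime: FunctionRuntime) -> None:
        self._runtime = runtime
        self._routes: Dict[Tuple[str, str], str] = {}

    def add_route(self, method: str, path: str, function_name: str) -> None:
        self._routes[(method, path)] = function_name

    def handle(self, method: str, path: str, body: dict) -> Tuple[int, Any]:
        function_name = self._routes.get((method, path))
        if function_name is None:
            return 404, "no route"
        return 200, self._runtime.invoke(function_name, body)


class EventTrigger:
    """Converts events from a source into function calls."""

    def __init__(self, runtime: FunctionRuntime, function_name: str) -> None:
        self._runtime = runtime
        self._function_name = function_name

    def on_event(self, source: str, payload: dict) -> Any:
        event = {"source": source, **payload}
        return self._runtime.invoke(self._function_name, event)


def hello(event: dict) -> str:
    return f"hello {event.get('name', 'world')}"


if __name__ == "__main__":
    runtime = FunctionRuntime()
    runtime.register("hello", hello)
    gateway = ApiGateway(runtime)
    gateway.add_route("GET", "/hello", "hello")
    print(gateway.handle("GET", "/hello", {"name": "K8s"}))
    print(EventTrigger(runtime, "hello").on_event("queue", {"name": "event"}))
```

Both entry points end in the same `invoke` call, which is the main point of the design: HTTP requests and events are just two ways of producing a function invocation.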

A container-based Serverless platform offers high flexibility, strong customization, and cost-effectiveness. Developers can freely choose the underlying container technology and Kubernetes platform, customize the functions and features of the Serverless platform according to their needs, and utilize Kubernetes’ resource scheduling capabilities to optimize resource utilization and reduce costs.

The combination of container technology and Kubernetes provides powerful tools and methods for building efficient, flexible, and scalable Serverless platforms, enabling developers to easily build their own Serverless platforms, focus on business logic development, and improve development efficiency.

Novita AI Serverless

At Novita AI, we have developed our own container engine, Nexus. Leveraging Nexus’s powerful distributed computing and resource scheduling capabilities, Novita AI has built a Serverless service geared towards the next generation of generative AI. Users only need to focus on business innovation without worrying about underlying computing resources. Reservations are now open; join the waitlist to be the first to experience Novita AI Serverless.

Novita AI is the All-in-one cloud platform that empowers your AI ambitions. Integrated APIs, serverless, GPU Instance — the cost-effective tools you need. Eliminate infrastructure, start free, and make your AI vision a reality.
