Discover reliable sampling methods for Stable Diffusion. Explore our blog for insights on the best sampling methods for Stable Diffusion.

Stable Diffusion is a widely used model for AI image generation, and the sampling method it runs with directly affects the quality of the images it produces. Many sampling methods are available in AUTOMATIC1111. In this blog, we will discuss Stable Diffusion in detail, why it matters, and the factors affecting it. We will then put sampling methods in context, explore the commonly utilized ones, and compare them by generating images under fixed settings. Finally, we will walk through case studies of sampling methods in practice. Join us as we explore reliable sampling methods for Stable Diffusion.

Understanding Stable Diffusion

Stable Diffusion is a generative artificial intelligence (generative AI) model that produces unique photorealistic images from text and image prompts. It originally launched in 2022. Besides images, you can also use the model to create videos and animations.

Stable Diffusion is an innovative approach to generating AI images from text. Unlike traditional methods that directly operate in the high-dimensional image space, Stable Diffusion takes a different approach. It starts by compressing the image into a lower-dimensional latent space.

The latent space is a compressed representation of the image that captures its essential features and characteristics. By working in this lower-dimensional space, Stable Diffusion can manipulate the latent variables to generate new images that correspond to the given text prompts.
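To make the size difference concrete, here is a small sketch of the arithmetic. It assumes the commonly cited Stable Diffusion v1 shapes, a 512 x 512 RGB image encoded into a 4 x 64 x 64 latent. Those shapes are an assumption for illustration and are not stated elsewhere in this post.

```python
def element_count(shape):
    """Number of values in a tensor of the given shape."""
    count = 1
    for dim in shape:
        count *= dim
    return count

# Assumed shapes for Stable Diffusion v1 (not stated in this post):
# a 512 x 512 RGB image and a 4 x 64 x 64 latent (8x smaller per side).
image_shape = (3, 512, 512)
latent_shape = (4, 64, 64)

image_values = element_count(image_shape)
latent_values = element_count(latent_shape)
compression = image_values / latent_values

print(image_values, latent_values, compression)
```

Under these assumptions the denoising steps operate on far fewer values than they would in pixel space, which is a large part of why working in the latent space is cheaper.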

Definition and Importance of Stable Diffusion

Stable Diffusion is a deep learning model that belongs to the family of diffusion models. Diffusion models are generative models designed to generate new data similar to what they were trained on. In the case of Stable Diffusion, that data is images.

The name comes from diffusion in physics, where particles or substances spread out over time. In a diffusion model, the idea is to simulate a similar spreading or dispersion process. Instead of particles or substances, the model works with latent variables or representations that capture the essential characteristics of the given data, such as images.

In the context of Stable Diffusion, the forward diffusion process is a key component that transforms an initial training image into an uncharacteristic noise image. This process gradually adds noise to the image, so that it loses its original features and eventually becomes indistinguishable from random noise.
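The gradual noising described above can be sketched in a few lines. This is a minimal illustration, assuming a DDPM-style linear schedule with 1000 steps and a toy 4-value "image". The schedule values, the function names, and the toy data are all assumptions for illustration and are not the exact settings Stable Diffusion uses.

```python
import math
import random

def linear_betas(num_steps, beta_start=1e-4, beta_end=0.02):
    """Per-step noise variances, increasing linearly (illustrative values)."""
    step = (beta_end - beta_start) / (num_steps - 1)
    return [beta_start + i * step for i in range(num_steps)]

def cumulative_alphas(betas):
    """alpha_bar[t] = product of (1 - beta) up to step t: the signal fraction left."""
    alpha_bars, running = [], 1.0
    for beta in betas:
        running *= 1.0 - beta
        alpha_bars.append(running)
    return alpha_bars

def add_noise(x0, alpha_bar, rng):
    """Jump straight to a noised version of x0 at the given alpha_bar."""
    signal = math.sqrt(alpha_bar)
    noise = math.sqrt(1.0 - alpha_bar)
    return [signal * v + noise * rng.gauss(0.0, 1.0) for v in x0]

rng = random.Random(0)
image = [1.0, -1.0, 0.5, -0.5]  # a toy 4-value "image"
alpha_bars = cumulative_alphas(linear_betas(1000))

slightly_noisy = add_noise(image, alpha_bars[10], rng)      # mostly signal
almost_pure_noise = add_noise(image, alpha_bars[-1], rng)   # mostly noise
```

Early in the schedule `alpha_bar` is close to 1, so the image is nearly intact. By the last step it is close to 0, so almost nothing of the original image remains.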

Forward diffusion

During the generation process, the model runs this process in reverse. Starting from noise, it applies a series of steps that remove noise and gradually transform the initial representation into a new sample or image.

Reverse diffusion

Factors affecting Stable Diffusion

Several factors can affect the performance and outcomes of Stable Diffusion. These factors include:

Model Architecture: The architecture of the diffusion model used in Stable Diffusion plays a crucial role. Different choices of network architectures, such as the number of layers, the type of activation functions, and the connectivity patterns, can impact the model’s capacity to capture and generate high-quality images.

Latent Space Dimensionality: The dimensionality of the latent space, which represents the compressed representation of the images, can influence the expressive power and diversity of the generated samples. The dimensionality should be carefully chosen to balance the trade-off between the model’s capacity and computational efficiency.

Training Dataset: The quality, size, and diversity of the training dataset used to train the diffusion model are vital factors. A larger and more diverse dataset can help the model capture a broader range of image features and generate more varied and realistic samples.

Training Procedure: The training procedure used to optimize the diffusion model is essential. Factors such as the choice of optimization algorithm, learning rate, batch size, and training schedule can impact the convergence speed, stability, and final performance of the model.

Noise Schedule: The schedule of noise levels applied during the diffusion process is a critical factor. The choice of noise levels and their progression over time should be carefully tuned to balance the exploration of the latent space and the preservation of image details.

Text Prompt Quality: In the context of generating images from text prompts, the quality and specificity of the text prompts provided can affect the generated results. More detailed and specific prompts can guide the model to generate images that better align with the desired characteristics.

Computational Resources: The availability of computational resources, such as processing power and memory, can influence Stable Diffusion’s performance. Larger models or higher-resolution images may require more computational resources to train and generate high-quality samples.

Sampling Methods in Context

The sampler is responsible for carrying out the denoising steps.

To generate an image using Stable Diffusion, the process typically involves starting with a randomly generated image in the latent space.

At each step, a noise predictor estimates the noise in the current image. The predicted noise is then subtracted from the image, which removes or reduces the unwanted noise components and refines the image. This step is repeated multiple times, typically a few dozen diffusion steps (the examples in this post use 20 and 27).
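The loop described above can be sketched as follows. This is a toy illustration, not the real pipeline: `toy_noise_predictor` is a hypothetical stand-in that already knows the clean target, whereas a real noise predictor is a neural network guided by the text prompt. The noise levels (`sigmas`) and the target values are also assumptions chosen for illustration.

```python
import random

def toy_noise_predictor(latent, sigma, target):
    """Stand-in for the trained network: returns the noise it 'sees' in latent.
    A real predictor is a neural network conditioned on the text prompt."""
    return [(v - t) / sigma for v, t in zip(latent, target)]

def denoise(latent, sigmas, target):
    """Repeatedly predict the noise and remove part of it, one noise level at a time."""
    for sigma, sigma_next in zip(sigmas[:-1], sigmas[1:]):
        noise = toy_noise_predictor(latent, sigma, target)
        # Moving from sigma down to sigma_next removes (sigma - sigma_next) * noise.
        latent = [v - (sigma - sigma_next) * n for v, n in zip(latent, noise)]
    return latent

rng = random.Random(0)
target = [0.2, -0.7, 1.1]                          # hypothetical clean latent
sigmas = [10.0 * (1 - i / 20) for i in range(21)]  # 20 steps, from 10.0 down to 0.0
start = [t + sigmas[0] * rng.gauss(0.0, 1.0) for t in target]  # random noisy latent
result = denoise(start, sigmas, target)
```

Because the toy predictor is perfect, `result` lands on `target`. With a real network the prediction is only approximate, which is why the choice of sampler and the number of steps matter.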

Commonly utilized Sampling Methods

There are several different sampling methods available, including Euler, Heun, and DDIM. These methods differ in their specific algorithms and approaches to generating samples.

Euler sampling is the simplest method and is widely used. It treats denoising as solving a differential equation and, at each step, moves the sample a small distance in the direction given by the predicted noise. It needs only one model evaluation per step, so it is computationally efficient, but it can be less accurate when few steps are used.

Heun sampling, also known as the improved Euler method, is an enhancement of the Euler method. It updates the current sample in two stages: an initial estimate using the Euler method, then a refined estimate that averages the slope at the current sample with the slope at the Euler estimate. Heun sampling can provide more accurate results than Euler sampling, but it needs roughly twice as many model evaluations per step.

DDIM (Denoising Diffusion Implicit Models) is another sampling method, and one of the first designed specifically for diffusion models. In its standard form it is deterministic, so the same starting noise yields the same image, and it can produce good results in fewer steps than the original diffusion sampling procedure.

Detailed Evaluation of Sampling Methods

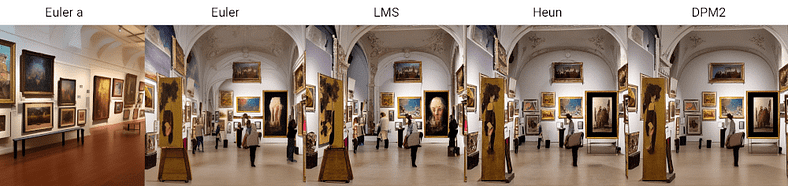

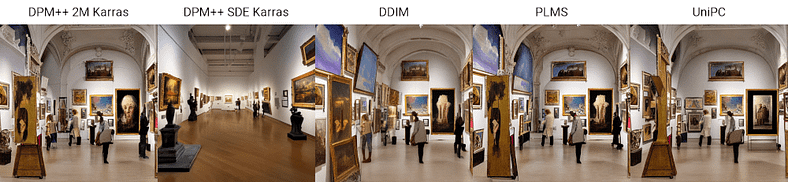

Euler, LMS, Heun, DPM, DDIM, PLMS, UniPC, and samplers marked with an “a” (ancestral samplers, which add fresh noise at each step) are all methods used for solving differential equations or for denoising.

Euler Method: The Euler method is a numerical approximation technique used to solve differential equations. It provides an iterative approach that estimates the solution at discrete time steps based on the derivative of the function at each step.

LMS (Linear Multistep Method): LMS is an extension of the Euler method, specifically designed to improve the accuracy of the approximation. It incorporates multiple previous solution values to enhance the accuracy of the current estimation.

Heun Method: The Heun method, named after Karl Heun, is an improved version of the Euler method. It utilizes a more sophisticated approach to estimate the derivative, leading to better accuracy in approximating the solution to differential equations.
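To see the accuracy difference between the Euler and Heun methods, here is a minimal sketch on a toy differential equation with a known solution. The equation dy/dt = -y, the step count, and the function names are assumptions chosen for illustration. In a real sampler the derivative comes from the noise predictor rather than a formula.

```python
import math

def derivative(t, y):
    """Toy ODE dy/dt = -y, whose exact solution is y(t) = exp(-t)."""
    return -y

def euler_step(t, y, h):
    """One derivative evaluation per step."""
    return y + h * derivative(t, y)

def heun_step(t, y, h):
    """Two derivative evaluations: Euler prediction, then average the two slopes."""
    slope_start = derivative(t, y)
    y_predicted = y + h * slope_start
    slope_end = derivative(t + h, y_predicted)
    return y + h * 0.5 * (slope_start + slope_end)

def integrate(step_fn, num_steps, t_end=1.0, y0=1.0):
    """Advance from t = 0 to t_end in num_steps equal steps."""
    h = t_end / num_steps
    t, y = 0.0, y0
    for _ in range(num_steps):
        y = step_fn(t, y, h)
        t += h
    return y

exact = math.exp(-1.0)
euler_error = abs(integrate(euler_step, 10) - exact)
heun_error = abs(integrate(heun_step, 10) - exact)
print(f"Euler error: {euler_error:.5f}, Heun error: {heun_error:.5f}")
```

With the same number of steps, Heun ends much closer to the exact value, at the cost of two derivative evaluations per step instead of one.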

DPM (Diffusion Probabilistic Model): A diffusion probabilistic model learns how an image is progressively noised and uses that knowledge to denoise it. It uses probabilistic methods to capture the underlying distribution of the noisy data and reconstruct the clean image. In sampler lists, the DPM entries are solvers designed for such models.

DDIM (Denoising Diffusion Implicit Models): DDIM is a faster denoising method derived from the diffusion probabilistic model. It uses an implicit, non-Markovian diffusion process so that noise can be reduced in fewer iterations.
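A single deterministic DDIM update (the eta = 0 case) can be sketched as below. This is an illustration under stated assumptions: `alpha_bar` denotes the fraction of signal remaining at a noise level, and the sample and noise values are made up for the check. In practice the noise comes from the trained predictor, not from a known list.

```python
import math

def ddim_step(x_t, predicted_noise, alpha_bar_t, alpha_bar_prev):
    """Deterministic DDIM update (eta = 0) from noise level t to the previous one."""
    sqrt_ab_t = math.sqrt(alpha_bar_t)
    sqrt_one_minus_t = math.sqrt(1.0 - alpha_bar_t)
    sqrt_ab_prev = math.sqrt(alpha_bar_prev)
    sqrt_one_minus_prev = math.sqrt(1.0 - alpha_bar_prev)
    x_prev = []
    for x, eps in zip(x_t, predicted_noise):
        # Estimate of the clean sample implied by the predicted noise.
        x0_estimate = (x - sqrt_one_minus_t * eps) / sqrt_ab_t
        # Re-noise that estimate to the lower noise level, reusing the same noise.
        x_prev.append(sqrt_ab_prev * x0_estimate + sqrt_one_minus_prev * eps)
    return x_prev

# Toy check with a hypothetical clean sample and known noise.
x0 = [0.5, -1.0, 0.25]
noise = [0.3, -0.2, 1.5]
alpha_bar_t, alpha_bar_prev = 0.5, 1.0  # alpha_bar = 1.0 means "no noise left"
x_t = [math.sqrt(alpha_bar_t) * a + math.sqrt(1 - alpha_bar_t) * n
       for a, n in zip(x0, noise)]
recovered = ddim_step(x_t, noise, alpha_bar_t, alpha_bar_prev)
```

When the predicted noise is exact, one step to `alpha_bar_prev = 1.0` recovers the clean sample. No random noise is added in the update, which is why this form of DDIM is deterministic.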

Determination of sampling efficiency

Sampling efficiency plays a crucial role in the quality of the generated images. For a fair comparison, we generate AI graphics with different samplers while keeping the following conditions fixed.

- Model — Stable Diffusion v1.5

- Prompt — A middle century art gallery with several digital art pieces

- Steps — 27

- Image Size — 512 x 512

- CFG Scale — 7

- Seed — 1573819953
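One way to keep such a comparison controlled is to hold every setting fixed and vary only the sampler. The sketch below records the settings above in a small data structure and derives one run per sampler. `RunSettings` and its field names are hypothetical, and the sampler names are labels taken from this post rather than identifiers from any particular tool.

```python
from dataclasses import dataclass, replace

@dataclass(frozen=True)
class RunSettings:
    """Hypothetical container for one generation run."""
    model: str
    prompt: str
    steps: int
    width: int
    height: int
    cfg_scale: float
    seed: int
    sampler: str

base = RunSettings(
    model="Stable Diffusion v1.5",
    prompt="A middle century art gallery with several digital art pieces",
    steps=27, width=512, height=512, cfg_scale=7, seed=1573819953,
    sampler="Euler",
)

# Sampler labels taken from the list discussed in this post.
samplers = ["Euler", "LMS", "Heun", "DPM", "DDIM", "PLMS", "UniPC"]

# Every run shares the same model, prompt, steps, size, CFG scale, and seed.
runs = [replace(base, sampler=name) for name in samplers]
```

Fixing the seed matters most here: with the same starting noise, differences between the resulting images can be attributed to the sampler rather than to chance.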

Case Study 1: Application of Sample Method X in Stable Diffusion

When applied to a textual description of the Mona Lisa, Stable Diffusion can potentially generate images that embody the essence and characteristics of the famous painting. We try this with different samplers.

- Model: sd_xl_base_1.0.safetensors

- Prompt: A portrait of a woman set against a dark background. positioned in a three-quarter view, facing slightly toward the viewer. upper body and face prominently displayed. brown hair, dark-colored garment with a veil covering her hair

- Negative Prompt: 3d render, smooth,plastic, blurry, grainy, low-resolution,anime, deep-fried, oversaturated

- Width: 1024

- Height: 1024

- Sampler: Heun

- CFG Scale: 7

- Steps: 20

- Batch size: 4

- Iterations: 1

- Seed: 17641893

Case Study 2: Efficiency of Sample Method Y in Stable Diffusion

This case study uses the same settings as Case Study 1: the sd_xl_base_1.0.safetensors model, the same prompt and negative prompt, 1024 x 1024 resolution, the Heun sampler, CFG Scale 7, 20 steps, batch size 4, 1 iteration, and seed 17641893.

Conclusion

In conclusion, the sampling method plays a crucial role in Stable Diffusion. It carries out the denoising steps that turn random noise into an image, so it directly affects both image quality and generation time. Understanding how Stable Diffusion works is key to selecting an appropriate sampler. By comparing samplers such as Euler, Heun, and DDIM under the same model, prompt, steps, CFG scale, and seed, you can make an informed decision about which one is most suitable for your use case.

novita.ai provides a Stable Diffusion API and hundreds of fast, low-cost AI image generation APIs for 10,000 models. Generation takes as little as 2s, pricing is Pay-As-You-Go from $0.0015 per standard image, and you can add your own models and avoid GPU maintenance. Open-source extensions are free to share.
