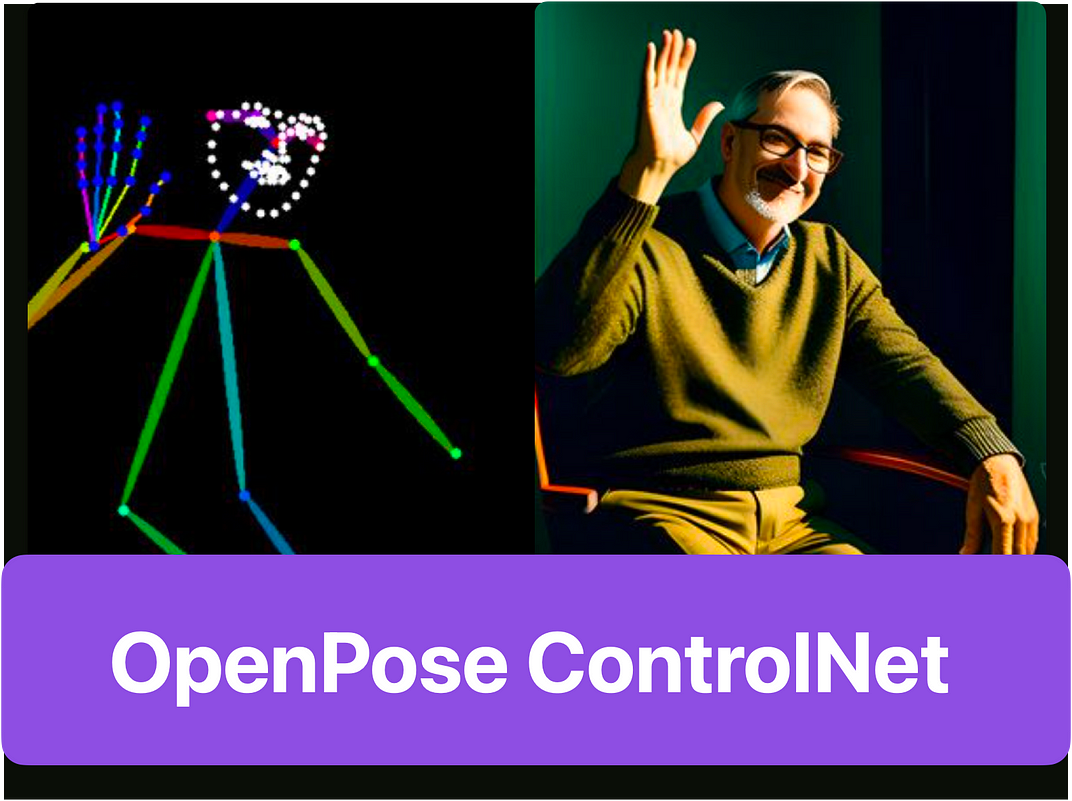

OpenPose ControlNet: A Beginner's Guide

What is OpenPose ControlNet and how does it work?

OpenPose ControlNet may seem intimidating to beginners, but it’s an incredibly powerful AI tool. It lets you take the pose of a person in a reference image and have Stable Diffusion generate a new image of someone in exactly that pose. In this blog post, we will take a closer look at OpenPose ControlNet, from its core concepts to its practical applications. We will also guide you through the installation process and the ControlNet settings. In addition, we will explore how to choose the right model for your needs, examine the role of Tile Resample, and learn how to copy a face with ControlNet using the IP-Adapter Plus Face Model. Lastly, we will discuss ideas for using ControlNet in various fields and see how combining a depth model with ControlNet gives you more control. By the end of this beginner’s guide, you’ll be able to use OpenPose ControlNet like a pro!

Understanding ControlNet in OpenPose

ControlNet with OpenPose gives you precise control over human poses in images generated by Stable Diffusion. An OpenPose preprocessor detects the keypoints of each person in a reference image and draws them as a stick-figure skeleton, called the control map. The OpenPose ControlNet model then conditions Stable Diffusion on this map, so the generated figure follows the same pose. You can also adjust the skeleton by hand with an OpenPose editor extension.
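The control map is built from detected keypoints. OpenPose itself can export them as JSON, where each person has a `pose_keypoints_2d` list of (x, y, confidence) triples. The sketch below reads that format, assuming the 18-keypoint COCO body layout used by the ControlNet OpenPose preprocessor; JSON files from other tools, such as pose editors, may use different keys. The function name and the confidence cut-off are illustrative choices, not part of any library.

```python
import json

# Keypoint order of the 18-point COCO body layout used by OpenPose
# (assumed to match what the ControlNet OpenPose preprocessor draws).
COCO18_NAMES = [
    "nose", "neck",
    "right_shoulder", "right_elbow", "right_wrist",
    "left_shoulder", "left_elbow", "left_wrist",
    "right_hip", "right_knee", "right_ankle",
    "left_hip", "left_knee", "left_ankle",
    "right_eye", "left_eye", "right_ear", "left_ear",
]


def parse_pose_keypoints(pose_json: str, min_confidence: float = 0.1):
    """Return one {name: (x, y)} dict per person, skipping low-confidence points."""
    data = json.loads(pose_json)
    people = []
    for person in data.get("people", []):
        flat = person.get("pose_keypoints_2d", [])
        points = {}
        # The flat list stores consecutive (x, y, confidence) triples.
        for i, name in enumerate(COCO18_NAMES):
            if 3 * i + 2 >= len(flat):
                break  # fewer keypoints than expected: stop early
            x, y, c = flat[3 * i : 3 * i + 3]
            if c >= min_confidence:
                points[name] = (x, y)
        people.append(points)
    return people
```

Keypoints with zero confidence are ones the detector could not find (for example, a hidden arm); they are left out, which is also why some limbs are missing from a control map.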

The Core Concept of ControlNet

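ControlNet is a neural network that attaches to Stable Diffusion and adds an extra input condition alongside the text prompt. The original model’s weights stay frozen; a trainable copy of its encoder processes the control map and feeds the result back into the frozen model. With the OpenPose model, the condition is a pose skeleton. Depending on the preprocessor, the skeleton can also include face and hand keypoints, which is what gives you control over head orientation, eye positions, and facial expressions.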
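The trick that makes this safe to train is that ControlNet connects its trainable copy to the frozen Stable Diffusion blocks through "zero convolutions": convolution layers whose weights and biases start at zero. The toy sketch below shows why training therefore starts from the unmodified model. It is a hypothetical simplification, not ControlNet code: feature maps are short Python lists, a 1x1 convolution is reduced to a per-channel scale and bias, and the "blocks" are made-up linear functions.

```python
# Toy illustration of ControlNet's zero-convolution wiring with plain lists.


def zero_conv(features, weights, bias):
    """A 1x1 'convolution' reduced to per-channel scale plus bias."""
    return [w * f + b for f, w, b in zip(features, weights, bias)]


def locked_block(x):
    """Stands in for a frozen Stable Diffusion block."""
    return [2.0 * v + 1.0 for v in x]


def trainable_copy(x):
    """Stands in for ControlNet's trainable copy (same structure at init)."""
    return [2.0 * v + 1.0 for v in x]


def controlled_block(x, condition, zc_in, zc_out):
    # The control map enters the copy through the first zero convolution...
    copy_in = [a + b for a, b in zip(x, zero_conv(condition, *zc_in))]
    # ...and the copy's output is added back through the second one.
    residual = zero_conv(trainable_copy(copy_in), *zc_out)
    return [a + b for a, b in zip(locked_block(x), residual)]


channels = 3
zeros = ([0.0] * channels, [0.0] * channels)  # zero weights, zero bias
x = [0.5, -1.0, 2.0]
pose_map = [1.0, 0.0, 1.0]

# At initialization the control map has no effect yet:
# the output equals the frozen block's output.
assert controlled_block(x, pose_map, zeros, zeros) == locked_block(x)
```

Only once training moves the zero-convolution weights away from zero does the control map start to change the output, so the pretrained model is never disrupted at the start of training.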

Practical Applications of ControlNet in OpenPose

Practical applications of ControlNet with OpenPose include generating characters in a specific pose, keeping poses consistent across a series of images or animation frames, and fitting image generation into a workflow where the composition is already decided. Because face keypoints can be included, you can also control facial orientation and expression across many different subjects.

Getting Started with Stable Diffusion ControlNet

Before you can use ControlNet, you need to install the ControlNet extension for the AUTOMATIC1111 Stable Diffusion Web UI, whether you run it in Google Colab or locally on a Windows PC or Mac. Keep the extension updated to get new features and fixes. To install the v1.1 ControlNet extension, go to the “Extensions” tab and install it from this URL: https://github.com/Mikubill/sd-webui-controlnet. If you already have the v1 ControlNet extension installed, delete its folder from stable-diffusion-webui/extensions/ before installing v1.1.

Steps to Install ControlNet in Google Colab

In Google Colab, the extension is usually added through the notebook’s own options rather than through the Web UI: select the ControlNet extension (and the ControlNet models you want to download) in the notebook before launching.

Click the Play button to start AUTOMATIC1111.

Procedure for Installing ControlNet on Windows PC or Mac

On a Windows PC or Mac, install the extension from inside the AUTOMATIC1111 Web UI:

1. Navigate to the Extensions page.

2. Select the Install from URL tab.

3. Put the following URL in the URL for extension’s repository field.

https://github.com/Mikubill/sd-webui-controlnet

4. Click the Install button.

5. Restart AUTOMATIC1111.
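If you prefer the command line, cloning the repository into the extensions folder with git is a common alternative to the Install from URL tab. The sketch below assumes `git` is installed and that you pass in the path of your `stable-diffusion-webui` folder; the helper names are hypothetical.

```python
import subprocess
from pathlib import Path

CONTROLNET_REPO = "https://github.com/Mikubill/sd-webui-controlnet"


def clone_command(webui_dir: str) -> list[str]:
    """Build the git command that clones the extension into extensions/."""
    target = Path(webui_dir) / "extensions" / "sd-webui-controlnet"
    return ["git", "clone", CONTROLNET_REPO, str(target)]


def install_controlnet(webui_dir: str) -> None:
    """Run the clone (requires git on PATH). Restart AUTOMATIC1111 afterwards."""
    subprocess.run(clone_command(webui_dir), check=True)
```

For example, `install_controlnet("stable-diffusion-webui")` run from the folder that contains the Web UI. Building the command separately from running it makes it easy to print and check before anything touches the disk.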

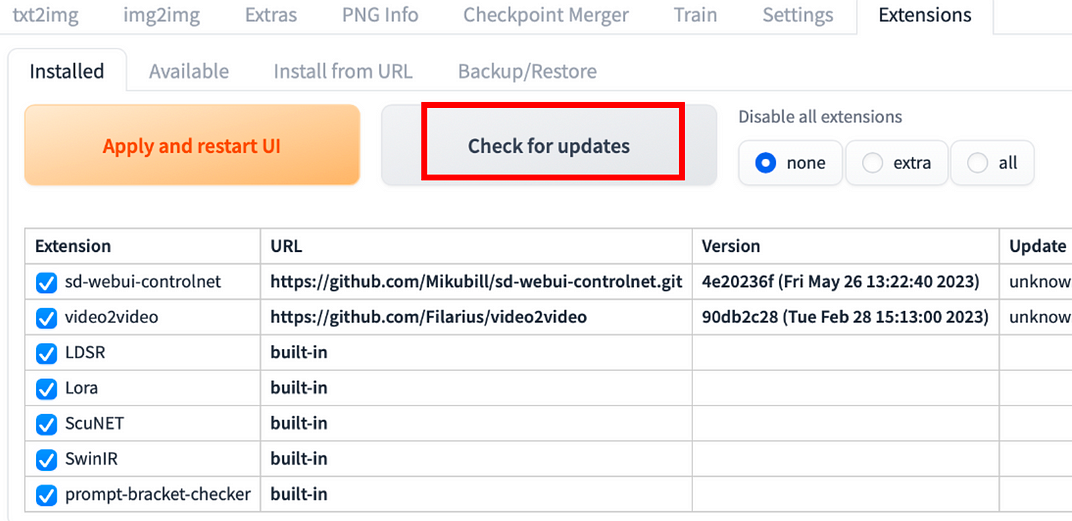

How to Update the ControlNet Extension

Updating the ControlNet extension gives you the latest preprocessors, model support, and bug fixes. To update it:

- Navigate to the Extensions page.

- In the Installed tab, click Check for updates.

- Wait for the confirmation message.

- Restart AUTOMATIC1111 Web-UI.
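Check for updates handles this for you, but you can also update manually by pulling the latest commits into the extension folder. A sketch, assuming the extension folder is a git checkout (as it is after an install from URL) and `git` is on your PATH; the helper names are hypothetical.

```python
import subprocess
from pathlib import Path


def update_command(webui_dir: str) -> list[str]:
    """Build a `git pull` for the extension folder (`-C` runs git inside it)."""
    ext_dir = Path(webui_dir) / "extensions" / "sd-webui-controlnet"
    return ["git", "-C", str(ext_dir), "pull"]


def update_controlnet(webui_dir: str) -> str:
    """Pull the latest version and return git's summary of what changed."""
    result = subprocess.run(
        update_command(webui_dir), check=True, capture_output=True, text=True
    )
    return result.stdout
```

As with the button in the Web UI, restart AUTOMATIC1111 after updating so the new version is loaded.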

Diving into ControlNet Settings

ControlNet settings appear in the txt2img (text-to-image) tab, below the prompt boxes and the generation parameters, and in the img2img tab. They decide which control map is used and how strongly it steers generation. With the OpenPose model, this is how you fix the positions of the head, eyes, and facial details in the generated image.

An Overview of Text-to-Image Settings

In the text-to-image workflow, you still write a text prompt (and optionally a negative prompt) as usual. The ControlNet panel adds a reference image, a preprocessor, and a model. The control map produced from the reference image fixes the pose, including the positions of the head, eyes, and facial details, while the prompt decides everything else.
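The same text-to-image settings can also be sent through the Web UI’s API. The sketch below assumes AUTOMATIC1111 was started with the `--api` flag on the default port 7860, and that the ControlNet extension reads its units from `alwayson_scripts` as described in the extension’s API wiki. Field names have changed between extension versions (newer releases may call `input_image` simply `image`), and the model name must match an entry in your Model dropdown, often with a hash suffix, so check both against your installation.

```python
import base64
import json
import urllib.request


def build_txt2img_payload(prompt, negative_prompt, pose_image_bytes,
                          width=512, height=768, steps=25):
    """Assemble a txt2img request with one OpenPose ControlNet unit."""
    unit = {
        "enabled": True,
        # Reference image as base64 (key name assumed; see lead-in).
        "input_image": base64.b64encode(pose_image_bytes).decode("ascii"),
        "module": "openpose",                   # the preprocessor
        "model": "control_v11p_sd15_openpose",  # must match your Model list
        "weight": 1.0,
    }
    return {
        "prompt": prompt,
        "negative_prompt": negative_prompt,
        "width": width,
        "height": height,
        "steps": steps,
        "alwayson_scripts": {"controlnet": {"args": [unit]}},
    }


def send(payload, url="http://127.0.0.1:7860/sdapi/v1/txt2img"):
    """POST the payload to a running Web UI and return the parsed JSON reply."""
    req = urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
    )
    with urllib.request.urlopen(req) as resp:
        return json.load(resp)
```

Keeping payload construction separate from `send` lets you inspect or save the request before a server is running.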

Exploring ControlNet Settings in Depth

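The ControlNet panel contains the following main settings:

- Enable: turns the ControlNet unit on.
- Low VRAM: reduces memory use on GPUs with little VRAM, at the cost of speed.
- Pixel Perfect: sets the preprocessor resolution automatically from the output image size.
- Allow Preview: shows the preprocessor’s output (the control map) before you generate.
- Control Type, Preprocessor, and Model: choose OpenPose, then the preprocessor variant and the matching ControlNet model.
- Control Weight: how strongly the control map influences the image.
- Starting Control Step and Ending Control Step: the fraction of the sampling steps during which ControlNet is applied.
- Control Mode: Balanced, My prompt is more important, or ControlNet is more important.
- Resize Mode: how the control map is fitted when its aspect ratio differs from the output image (Just Resize, Crop and Resize, or Resize and Fill).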
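To see how these settings fit together, here is a hypothetical container that mirrors the panel and rejects inconsistent combinations, such as an ending step before the starting step. The field names are chosen to resemble the extension’s API keys, but that mapping is an assumption; the class itself is not part of the extension.

```python
from dataclasses import asdict, dataclass

CONTROL_MODES = ("Balanced", "My prompt is more important",
                 "ControlNet is more important")
RESIZE_MODES = ("Just Resize", "Crop and Resize", "Resize and Fill")


@dataclass
class ControlUnitSettings:
    """Hypothetical mirror of one ControlNet unit's main settings."""
    module: str = "openpose"
    model: str = "control_v11p_sd15_openpose"
    weight: float = 1.0
    guidance_start: float = 0.0   # Starting Control Step, fraction of steps
    guidance_end: float = 1.0     # Ending Control Step, fraction of steps
    control_mode: str = "Balanced"
    resize_mode: str = "Crop and Resize"
    pixel_perfect: bool = False
    lowvram: bool = False

    def validate(self) -> None:
        """Raise ValueError for combinations the panel would not make sense with."""
        if not 0.0 <= self.guidance_start < self.guidance_end <= 1.0:
            raise ValueError("need 0 <= starting step < ending step <= 1")
        if self.weight < 0:
            raise ValueError("control weight must be non-negative")
        if self.control_mode not in CONTROL_MODES:
            raise ValueError(f"unknown control mode: {self.control_mode}")
        if self.resize_mode not in RESIZE_MODES:
            raise ValueError(f"unknown resize mode: {self.resize_mode}")

    def to_dict(self) -> dict:
        """Validate, then return the settings as a plain dict."""
        self.validate()
        return asdict(self)
```

For example, `ControlUnitSettings(weight=0.8, guidance_end=0.6).to_dict()` describes a unit that is slightly weaker than default and releases the pose for the last 40% of the steps.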

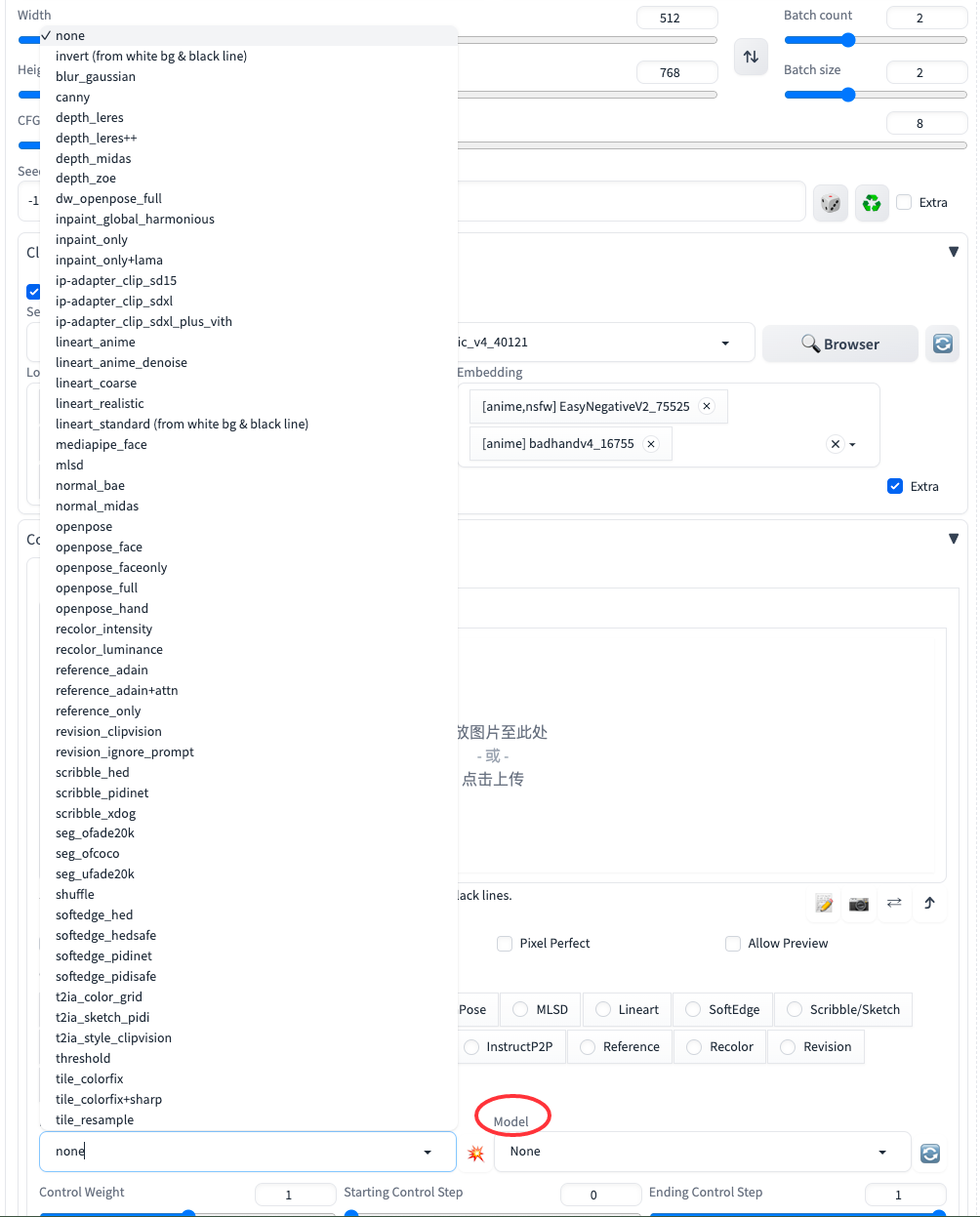

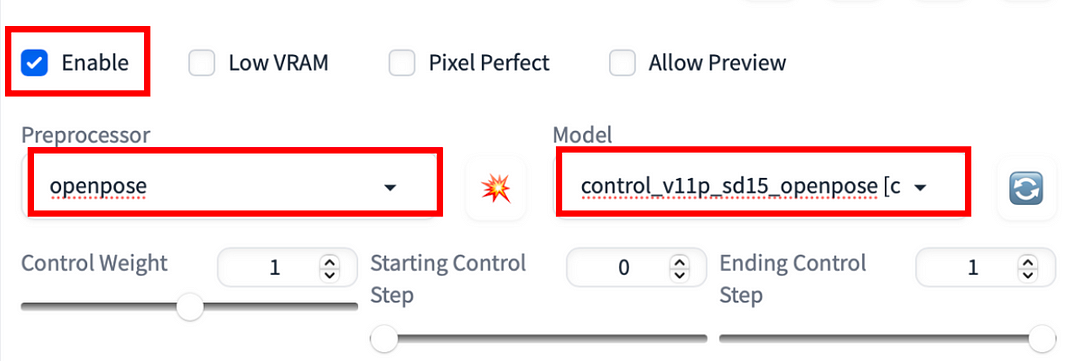

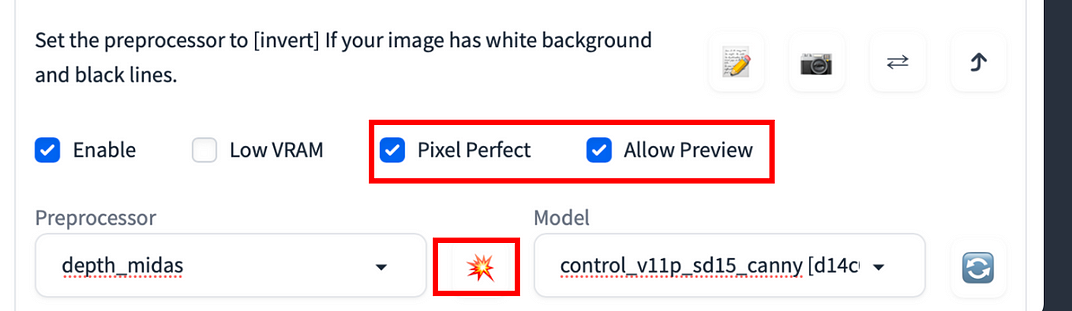

A Look at Preprocessors and Models in OpenPose

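A preprocessor turns the reference image into a control map, and a ControlNet model then uses that map to guide Stable Diffusion. The OpenPose control type offers several preprocessors, each detecting a different set of keypoints: openpose (body only), openpose_face (body and face), openpose_faceonly (face only), openpose_hand (body and hands), and openpose_full (body, hands, and face). All of them are used with the same OpenPose ControlNet model, so choosing a preprocessor is mostly about which details you want to lock in.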
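The choice of preprocessor can be written as a small lookup: pick the one that covers every keypoint group you need while detecting as little extra as possible. This is a hypothetical helper, and the table reflects the v1.1 preprocessor names listed above; newer extension versions add further options not covered here.

```python
# Keypoint groups detected by each OpenPose preprocessor (ControlNet v1.1).
OPENPOSE_PREPROCESSORS = {
    "openpose": {"body"},
    "openpose_face": {"body", "face"},
    "openpose_faceonly": {"face"},
    "openpose_hand": {"body", "hand"},
    "openpose_full": {"body", "hand", "face"},
}


def pick_preprocessor(needed: set[str]) -> str:
    """Return the preprocessor covering `needed` with the fewest extra groups."""
    candidates = [
        (len(groups - needed), name)
        for name, groups in OPENPOSE_PREPROCESSORS.items()
        if needed <= groups  # must detect every group we asked for
    ]
    if not candidates:
        raise ValueError(f"no OpenPose preprocessor covers {sorted(needed)}")
    return min(candidates)[1]
```

For example, asking for `{"body", "face"}` selects `openpose_face` rather than `openpose_full`, leaving the hands free for Stable Diffusion to draw.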

How to Choose the Right Model for Your Needs

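When selecting a model, start from your use case: the control type (OpenPose for poses, Depth for spatial layout, Tile for detail, and so on) determines which ControlNet model you need. The ControlNet model must also match the base model (checkpoint) you generate with. An SD 1.5 ControlNet model such as control_v11p_sd15_openpose works with SD 1.5 checkpoints, while SDXL checkpoints need SDXL ControlNet models. The base model has a large effect on the style of the final image, and the reference image determines the pose the ControlNet model will follow.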
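ControlNet 1.1 model files encode this information in their names: `control_v11p_sd15_openpose` is version 1.1, status `p` (production; `e` marks experimental models), base family `sd15`, control type `openpose`. The sketch below uses that convention to catch a base-model mismatch before generating. The helper is hypothetical, and it only understands typical 1.1-style names; SDXL ControlNet models from other authors follow different naming schemes.

```python
import re

# Typical ControlNet 1.1 names: control_v11<status>_<base family>_<type>,
# optionally followed by a hash in brackets as shown in the Web UI.
NAME_PATTERN = re.compile(r"control_v11([a-z0-9]+)_(sd\d+)_([a-z0-9]+)")


def check_compatibility(controlnet_name: str, base_family: str) -> str:
    """Return the control type, or raise if the base families differ."""
    match = NAME_PATTERN.search(controlnet_name.lower())
    if match is None:
        raise ValueError(f"not a ControlNet 1.1-style name: {controlnet_name}")
    _status, family, control_type = match.groups()
    if family != base_family:
        raise ValueError(
            f"{controlnet_name} is built for {family}, "
            f"but the checkpoint is {base_family}"
        )
    return control_type
```

Calling `check_compatibility("control_v11p_sd15_openpose", "sd15")` returns `"openpose"`, while passing `"sdxl"` as the base family raises an error instead of silently producing poor results.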

Delving into OpenPose and Its Features

To begin using ControlNet, the first step is to select a preprocessor. Enabling the preview feature is helpful because it shows you what the preprocessor extracted, so you can confirm the skeleton was detected correctly before generating. Once preprocessing is complete, ControlNet uses only the preprocessed image (the control map); the original image plays no further part.
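The preview step can also be reproduced outside the Web UI: the ControlNet extension exposes a `/controlnet/detect` endpoint that runs only a preprocessor and returns the control map. The sketch below assumes the Web UI runs with `--api` on the default port and that the request fields are named as in the extension’s API at the time of writing; verify them against your version. The helper names are hypothetical.

```python
import base64
import json
import urllib.request

DETECT_URL = "http://127.0.0.1:7860/controlnet/detect"


def build_detect_payload(image_bytes: bytes, module: str = "openpose_full",
                         resolution: int = 512) -> dict:
    """Request body for running a preprocessor alone (no image generation)."""
    return {
        "controlnet_module": module,
        "controlnet_input_images": [base64.b64encode(image_bytes).decode("ascii")],
        "controlnet_processor_res": resolution,
    }


def detect(image_bytes: bytes, module: str = "openpose_full") -> dict:
    """POST the request; the reply is expected to hold the detected map(s)."""
    req = urllib.request.Request(
        DETECT_URL,
        data=json.dumps(build_detect_payload(image_bytes, module)).encode("utf-8"),
        headers={"Content-Type": "application/json"},
    )
    with urllib.request.urlopen(req) as resp:
        return json.load(resp)
```

Saving the returned map and reusing it as the ControlNet input (with the preprocessor set to none) mirrors what happens inside the Web UI: after this step, only the map matters.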

Understanding the Role of Tile Resample

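The Tile model, used with the tile_resample preprocessor, conditions generation on a down-sampled version of the image. It preserves the overall composition and colors while letting Stable Diffusion regenerate fine detail, which makes it useful for upscaling and for adding detail to an existing image. The preprocessor’s down-sampling rate controls how much of the original detail is discarded: a higher rate gives Stable Diffusion more freedom to invent new detail.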
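The effect of the down-sampling rate can be illustrated with a toy box filter on a tiny grayscale grid. This is a simplified stand-in, not the extension’s actual resampling code, which works on real images: each `rate` x `rate` block is averaged into one value, so any detail inside a block is lost, and that lost detail is what Stable Diffusion fills back in.

```python
def downsample(grid, rate):
    """Average non-overlapping rate x rate blocks of a 2D grayscale grid."""
    if rate < 1 or len(grid) % rate or len(grid[0]) % rate:
        raise ValueError("grid size must be divisible by rate")
    out = []
    for r in range(0, len(grid), rate):
        row = []
        for c in range(0, len(grid[0]), rate):
            block = [grid[r + i][c + j] for i in range(rate) for j in range(rate)]
            row.append(sum(block) / len(block))
        out.append(row)
    return out


def upsample_nearest(grid, rate):
    """Repeat each value rate x rate times to get back to the original size."""
    return [[v for v in row for _ in range(rate)] for row in grid for _ in range(rate)]


# Flat regions survive the round trip; detail inside a block does not.
flat = [[0, 0, 8, 8], [0, 0, 8, 8], [4, 4, 2, 2], [4, 4, 2, 2]]
detailed = [[0, 4], [8, 4]]
print(upsample_nearest(downsample(flat, 2), 2))
print(upsample_nearest(downsample(detailed, 2), 2))
```

The first grid comes back unchanged, while the second collapses to a uniform gray: composition and color are kept, fine structure is left for the model to regenerate.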

The Art of Copying a Face with ControlNet

OpenPose alone reproduces a pose, not a person’s identity: it fixes the position of the head, eyes, and facial features, but not what the face looks like. To copy a specific face, you combine OpenPose with a model designed to transfer facial features, such as the IP-Adapter Plus Face Model described below.


Installation Guide for the IP-Adapter Plus Face Model

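To install the IP-Adapter Plus Face Model, download the model file (ip-adapter-plus-face_sd15) from the h94/IP-Adapter repository on Hugging Face and place it in the ControlNet models folder, stable-diffusion-webui/models/ControlNet (or stable-diffusion-webui/extensions/sd-webui-controlnet/models). Then restart the Web UI, or click the refresh button next to the Model dropdown, so the model appears in the list. This version works with SD 1.5 checkpoints, whether you generate realistic photos or anime-style images.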
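If the model does not show up in the dropdown, the file is usually in the wrong folder or has an unexpected name. The sketch below checks the two folders mentioned above for the model under a few common file extensions. The folder list, extensions, and helper name are assumptions for illustration; a custom model path can also be configured in the Web UI settings, which this check does not read.

```python
from pathlib import Path

# Folders assumed to be scanned for ControlNet models, relative to the Web UI.
MODEL_DIRS = (
    Path("models") / "ControlNet",
    Path("extensions") / "sd-webui-controlnet" / "models",
)
MODEL_STEM = "ip-adapter-plus-face_sd15"
FILE_EXTENSIONS = (".safetensors", ".bin", ".pth")


def find_model(webui_dir: str, stem: str = MODEL_STEM):
    """Return the first matching model file under the Web UI folder, or None."""
    root = Path(webui_dir)
    for folder in MODEL_DIRS:
        for ext in FILE_EXTENSIONS:
            candidate = root / folder / f"{stem}{ext}"
            if candidate.is_file():
                return candidate
    return None
```

`find_model("stable-diffusion-webui")` returns the path it found, or `None` if the file still needs to be moved into place.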

Utilizing the IP-Adapter Plus Face Model Effectively

To use the model, enable a ControlNet unit, set the Control Type to IP-Adapter, upload a clear photo of the face you want to copy, and select an IP-Adapter preprocessor together with the ip-adapter-plus-face_sd15 model. The face image is not used as a pose map; instead, its features steer what the generated face looks like. As with other units, the control weight determines how strongly it influences the final image.
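Through the API, an IP-Adapter face unit looks like any other ControlNet unit, just with a different preprocessor and model. In the sketch below, the preprocessor name `ip-adapter_clip_sd15` and the `input_image` key are assumptions based on the extension’s naming at the time of writing; both have changed between versions, so copy the exact names from your own dropdowns. The helper name is hypothetical.

```python
import base64


def ip_adapter_face_unit(face_image_bytes: bytes, weight: float = 0.7) -> dict:
    """One ControlNet unit that copies facial features from a reference photo."""
    return {
        "enabled": True,
        "input_image": base64.b64encode(face_image_bytes).decode("ascii"),
        "module": "ip-adapter_clip_sd15",      # preprocessor; name varies by version
        "model": "ip-adapter-plus-face_sd15",  # must match your Model list
        "weight": weight,                      # lower = looser resemblance
    }
```

Starting below full weight leaves room for the prompt to change hair, lighting, and style while the facial features carry over.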

Unveiling the Magic of Multiple ControlNets

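You can enable more than one ControlNet unit at the same time (Multi-ControlNet). Each unit has its own reference image, preprocessor, model, and weight, and their effects are combined during generation. For example, one unit can use OpenPose to fix the head and body position while a second unit uses IP-Adapter Plus Face to copy a face. The number of available units can be changed in the ControlNet section of the Web UI settings.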
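In an API request, Multi-ControlNet simply means more than one entry in the units list. The sketch below attaches several units to a payload and refuses to exceed the number of units enabled in Settings. The default of 3 is an assumption (check your Settings page), the unit dicts are abbreviated (each also needs its own base64 `input_image`, omitted here), and the module and model names are placeholders to replace with the exact ones in your dropdowns.

```python
def add_units(payload: dict, units: list, max_units: int = 3) -> dict:
    """Attach several ControlNet units to a txt2img/img2img payload."""
    if len(units) > max_units:
        raise ValueError(
            f"{len(units)} units requested, but only {max_units} are enabled in Settings"
        )
    scripts = payload.setdefault("alwayson_scripts", {})
    scripts["controlnet"] = {"args": list(units)}
    return payload


# One unit fixes the pose, the other copies the face.
pose_unit = {"module": "openpose", "model": "control_v11p_sd15_openpose", "weight": 1.0}
face_unit = {"module": "ip-adapter_clip_sd15", "model": "ip-adapter-plus-face_sd15",
             "weight": 0.7}
payload = add_units({"prompt": "portrait of a woman dancing"}, [pose_unit, face_unit])
```

The order of the list matches the order of the unit tabs in the Web UI, which makes it easy to compare an API request with what you set up by hand.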

Innovative Ideas for Using ControlNet in Various Fields

ControlNet with OpenPose is useful wherever you need people in specific poses: character design and concept art, storyboards and comics where poses must stay consistent, fashion and product mock-ups, and pose-driven animation frames. Combining it with other control types, such as Depth or IP-Adapter, opens up even more possibilities.

How Does the Interaction between Stable Diffusion Depth Model and ControlNet Enhance Performance?

A depth map captures the 3D layout of a scene: which parts are close to the camera and which are far away. OpenPose captures only the skeleton. Using a depth model together with OpenPose ControlNet therefore constrains both the pose and the spatial arrangement of the figure and its surroundings, which helps when the pose alone leaves the body shape or the scene layout ambiguous. The Control Weight and the Starting and Ending Control Steps of each unit let you balance how much each condition shapes the final image.
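The Starting and Ending Control Steps are fractions of the sampling run, so each unit is active only inside its own window. The sketch below computes which units are active at a given step, for example keeping OpenPose on for the whole run while letting a depth unit shape only the early, layout-defining steps. It is a simplified model of the idea; the extension’s exact rounding at window edges may differ, and the function name is hypothetical.

```python
def active_units(units, step, total_steps):
    """Names of units whose [start, end] window covers this sampling step."""
    progress = step / total_steps
    return [name for name, start, end in units if start <= progress <= end]


units = [
    ("openpose", 0.0, 1.0),  # keep the pose for the whole run
    ("depth", 0.0, 0.5),     # shape the layout early, then let details vary
]
schedule = {s: active_units(units, s, 20) for s in (0, 10, 15)}
print(schedule)
```

Early steps decide the overall composition and later steps refine detail, which is why ending a depth unit early keeps the layout while giving the prompt more say over surfaces and textures.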

Conclusion

In conclusion, ControlNet with OpenPose is a powerful tool for precisely controlling human poses in images generated by Stable Diffusion. Whether you’re a beginner or an experienced user, understanding ControlNet and its applications can greatly improve your results. By following the installation and setup instructions, exploring the different settings, and using the available models, you can unleash your creativity and achieve impressive results. From copying faces to exploring new ideas in different fields, the possibilities are endless. Combining OpenPose with a depth model gives you even finer control and opens up new avenues for experimentation. So, dive into the world of ControlNet and see what amazing creations you can achieve. Happy exploring!

novita.ai provides a Stable Diffusion API and hundreds of the fastest and cheapest AI image generation APIs for 10,000 models.🎯 Fastest generation in just 2s, pay-as-you-go pricing from $0.0015 per standard image; you can add your own models and avoid GPU maintenance. Free to share open-source extensions.
