NVIDIA V100 vs 3090 vs 4090: Which GPU Reigns Supreme?

Curious about how the NVIDIA V100, RTX 3090, and RTX 4090 differ? Learn more about these powerful GPUs on our blog.

Key Highlights

- This blog compares NVIDIA's powerful GPUs: V100, 3090, and 4090.

- We will explore their capabilities in gaming, deep learning, and professional rendering.

- Understand the strengths, weaknesses, and ideal use cases of each GPU.

- Analyze the cost implications and value proposition of each graphics card.

- Discover the potential of GPU cloud rentals for flexible and cost-effective computing.

Introduction

In high-performance computing, Graphics Processing Units (GPUs) play a central role. They especially shine in deep learning because they can run many operations in parallel. When picking a GPU, start from your needs. If you want to game, the GeForce RTX series is strong. If you have demanding AI workloads, look into data center GPUs such as the NVIDIA Tesla series. In this blog, we compare the NVIDIA Tesla V100, NVIDIA RTX 3090, and NVIDIA RTX 4090 to determine which reigns supreme in AI performance, 3D rendering, and Cryo-EM performance. Knowing the strengths and weaknesses of each GPU will help you choose the best one for your needs.

Understanding the Contenders: NVIDIA V100, 3090, and 4090

The NVIDIA V100 is a data center GPU. Its strong compute performance makes it well suited to deep learning, scientific simulations, and other demanding computational tasks. The GeForce RTX 3090 and 4090 target different users. The 3090 can handle intense AI work, but it really excels in high-resolution gaming and creative projects. The 4090 improves on the 3090 for both gamers and content creators.

Key Features of NVIDIA V100

The NVIDIA V100, based on Volta architecture, is a strong choice for data centers. It specializes in handling AI and high-performance computing tasks. Here are its main features:

- Great Tensor Performance: The V100 comes with 640 Tensor Cores. This offers amazing performance for deep learning tasks, speeding up training and inference.

- Large CUDA Core Count: With 5120 CUDA cores, it provides great parallel processing power. This is important for solving complex simulations and scientific calculations.

- High Memory Bandwidth: The V100 uses HBM2 memory. It offers up to 900 GB/s of bandwidth, allowing quick data access for memory-heavy workloads.

These features make the V100 a top choice for researchers and businesses that work with large datasets and complicated computational tasks.
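To put the 900 GB/s figure in perspective, here is a minimal Python sketch that estimates the ideal time to read a buffer once at peak bandwidth. The function name `stream_time_ms` and the buffer sizes are illustrative assumptions, and real workloads rarely sustain peak bandwidth, so treat the results as lower bounds.

```python
# Back-of-the-envelope estimate: time to read a buffer once at peak memory bandwidth.
# The 900 GB/s figure comes from the text; the buffer sizes are hypothetical.

V100_BANDWIDTH_GB_S = 900.0  # peak HBM2 bandwidth quoted above


def stream_time_ms(buffer_gb: float, bandwidth_gb_per_s: float) -> float:
    """Return the ideal time in milliseconds to read `buffer_gb` exactly once."""
    if bandwidth_gb_per_s <= 0:
        raise ValueError("bandwidth must be positive")
    return buffer_gb / bandwidth_gb_per_s * 1000.0


for size_gb in (1, 8, 32):  # hypothetical working-set sizes in GB
    t = stream_time_ms(size_gb, V100_BANDWIDTH_GB_S)
    print(f"{size_gb:>2} GB -> {t:.1f} ms at peak bandwidth")
```

Memory-bound workloads that touch their whole working set on every step scale roughly with this number, which is why bandwidth matters as much as core count for them.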

Key Features of NVIDIA 3090

The GeForce RTX 3090 is one of NVIDIA's most capable consumer graphics cards. It uses the Ampere architecture to deliver a great gaming experience and perform well on AI tasks. Here are some of its key features:

- GeForce RTX Technology: This allows for real-time ray tracing and AI-powered DLSS (Deep Learning Super Sampling), giving you very realistic graphics and smoother frame rates.

- High CUDA Core Count: With 10,496 CUDA cores, the 3090 has a lot of processing power. This makes it great for tough gaming, professional rendering, and AI work.

- Fast PCIe Interface: It uses PCIe 4.0, which offers fast data transfer speeds. This is helpful for things like video editing and moving large files.

The 3090 works well for gamers seeking excellent performance, content creators handling graphics-heavy projects, and professionals exploring AI research and development.

Key Features of NVIDIA RTX 4090

The NVIDIA GeForce RTX 4090 succeeds the 3090. It is built on the Ada Lovelace architecture, the successor to Ampere, and brings even better performance for gamers and content creators. Here are some key features:

- Ada Lovelace Architecture: This newer design allows for faster ray tracing and AI work. This means it can create more realistic graphics and performs better in applications that support it.

- More CUDA Cores: The 4090 has 16,384 CUDA cores, many more than older models. This helps demanding games and creative applications run smoothly.

- Large Memory: The 4090 has 24 GB of GDDR6X memory, the same capacity as the 3090. This helps it handle high-resolution textures and complex scenes, which suits professional work and creative tasks.

Performance Battle: Comparing GPU Capabilities

To compare these three GPUs, we need to look closely at their strengths and who they are meant for.

It’s not always fair to compare them directly. Instead, we can look at how they perform in certain areas. Let’s think about speed, energy use, gaming, deep learning, and professional rendering. This way, we can better understand what each GPU is good at and who should use them.

Computational Power and Speed

V100:

In terms of power, the V100 stands out with its 5120 CUDA cores, especially for deep learning training. Its Tensor Cores help speed up important matrix operations for deep learning, making complex models train faster. If you're into deep learning or scientific tasks, the V100 is great with its specialized features.

RTX 3090 & RTX 4090:

The 3090 and 4090 also have large CUDA core counts, but they focus more on graphics for gaming. Their peak single-precision (FP32) throughput, measured in TFLOPS, exceeds that of the V100. For gaming and graphics, the 3090 and 4090 do well thanks to their design and high clock speeds.
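As a rough illustration of the comparison above, the sketch below computes theoretical peak FP32 throughput as CUDA cores × 2 FLOPs per cycle × clock speed. The core counts come from this article. The boost clocks are assumptions taken from NVIDIA's published reference specifications (the SXM2 variant in the V100's case), and the function name is hypothetical. Peak FP32 numbers ignore Tensor Cores, memory bandwidth, and sustained clocks, so they are not a prediction of deep learning speed.

```python
# Theoretical peak FP32 throughput = CUDA cores x 2 FLOPs per cycle (one fused
# multiply-add) x clock speed. Core counts are from the article; boost clocks are
# assumed reference values, not figures stated in the article.

GPUS = {
    "V100":     {"cuda_cores": 5120,  "boost_ghz": 1.53},
    "RTX 3090": {"cuda_cores": 10496, "boost_ghz": 1.695},
    "RTX 4090": {"cuda_cores": 16384, "boost_ghz": 2.52},
}


def peak_fp32_tflops(cuda_cores: int, boost_ghz: float) -> float:
    """Return theoretical peak FP32 throughput in TFLOPS."""
    gflops = cuda_cores * 2 * boost_ghz  # cores x FLOPs/cycle x GHz = GFLOPS
    return gflops / 1000.0


for name, spec in GPUS.items():
    tflops = peak_fp32_tflops(spec["cuda_cores"], spec["boost_ghz"])
    print(f"{name:<9} {tflops:5.1f} TFLOPS (theoretical FP32 peak)")
```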

Efficiency in Energy Consumption

Energy efficiency is very important, especially for data centers that run heavy tasks all the time.

The V100 is often credited with strong performance per watt on sustained data center workloads. It draws about 250 W, compared with roughly 700 W for a dual RTX 3090 setup, although how the two compare in throughput depends heavily on the workload and the numeric precision used. The V100's efficiency comes from its Volta architecture and HBM2 memory, both designed for data center use. In areas like deep learning training, where energy costs add up over time, lower power draw can make a real difference to total cost.
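To see how power draw turns into running cost, here is a minimal sketch that converts wattage into an annual electricity bill. The 250 W and 700 W figures are from the paragraph above. The electricity price of $0.15 per kWh and the assumption of continuous full-load operation are hypothetical, so substitute your own values.

```python
# Convert sustained power draw into electricity cost.
# 250 W and 700 W come from the text; the price per kWh is a hypothetical value.

HOURS_PER_YEAR = 24 * 365
USD_PER_KWH = 0.15  # assumed electricity price, adjust for your region


def energy_cost_usd(power_watts: float, hours: float, usd_per_kwh: float) -> float:
    """Return the electricity cost of running at `power_watts` for `hours`."""
    kwh = power_watts / 1000.0 * hours
    return kwh * usd_per_kwh


for label, watts in (("V100", 250), ("Dual RTX 3090", 700)):
    cost = energy_cost_usd(watts, HOURS_PER_YEAR, USD_PER_KWH)
    print(f"{label:<14} {watts} W -> ${cost:,.2f} per year at full load")
```

This covers only the GPUs themselves. Cooling and the rest of the system add to the bill, and the comparison only matters if both setups finish the same work in the same time.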

Gaming Performance Showdown

When it comes to gaming, the GeForce RTX series is the clear choice among these three.

V100:

The V100 can run games, but it is not designed or optimized for that. It works best for compute-heavy tasks. This makes it a poorer and more expensive choice for gamers.

- Primarily used for data centers and AI computing, not optimized for gaming

- Performs poorly in games, not comparable to the RTX series

NVIDIA RTX 3090:

The 3090 uses GeForce RTX technology. It gives great performance for 4K gaming. It offers real-time ray tracing for lifelike graphics and DLSS for smoother frame rates.

- Excellent 4K gaming performance

- Supports ray tracing and DLSS technology

- Performs well at high settings but may experience bottlenecks under extreme conditions

NVIDIA RTX 4090:

The 4090 takes it even higher with a newer architecture, more CUDA cores, and the same 24 GB of memory. It gives a big boost in performance, especially at high resolutions and demanding settings.

- Significant improvements over the RTX 3090, especially in ray tracing and DLSS 3

- Exceptional performance at 4K and higher resolutions, capable of handling high frame rates and high-quality settings

- Better power efficiency and cooling design

From this comparison, we can see that for gamers, the RTX 4090 is the best choice, followed by the RTX 3090, while the V100 is not suitable for gaming.

- Gaming Performance: RTX 4090 > RTX 3090 > V100

- Use Case: RTX 3090 and RTX 4090 are suitable for high-end gamers, while the V100 is primarily used for professional computing and deep learning tasks, making it unsuitable for gaming.

Cost Analysis: Investment vs. Return

The prices of these GPUs differ widely. This is because they offer different performance levels and are aimed at different users.

When looking at return on investment, it's important to think about how you will use the GPU and its long-term value. For researchers and companies that depend on deep learning, the V100 might be worth the money. However, gamers and content creators need to look at the performance benefits of the 3090 or 4090 while also considering their budget and needs.

Upfront Costs

V100:

- Launch Price: Approximately $8,999 (for the 32 GB version)

- Current Market Price: Often remains high due to its specialized use, typically around $6,000 to $10,000.

NVIDIA RTX 3090:

- Launch Price: Approximately $1,499

- Current Market Price: Varies due to demand and availability, typically around $1,000 to $1,500.

NVIDIA RTX 4090:

- Launch Price: Approximately $1,599

- Current Market Price: Typically around $1,500 to $2,000, depending on availability.

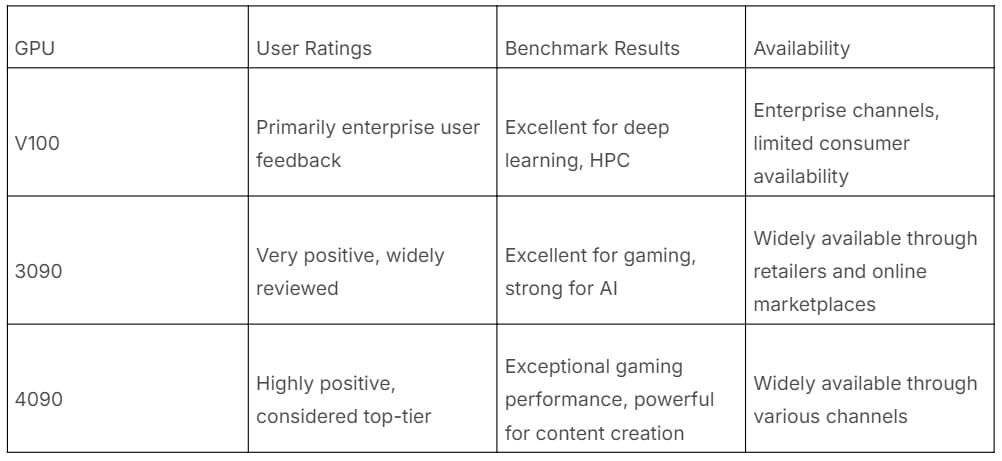
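One crude way to compare the launch prices above is to divide each by the card's CUDA core count. The sketch below does this using only figures quoted in this article. The metric and the function name are illustrative assumptions. Dollars per core ignores architecture, memory type, and Tensor Cores, so it says little about value for a specific workload.

```python
# Launch price per CUDA core, using only prices and core counts quoted in the article.
# This is a rough illustrative metric, not a measure of real-world value.

LAUNCH_SPECS = {
    "V100 (32 GB)": {"price_usd": 8999, "cuda_cores": 5120},
    "RTX 3090":     {"price_usd": 1499, "cuda_cores": 10496},
    "RTX 4090":     {"price_usd": 1599, "cuda_cores": 16384},
}


def usd_per_cuda_core(price_usd: float, cuda_cores: int) -> float:
    """Return the launch price divided by the number of CUDA cores."""
    if cuda_cores <= 0:
        raise ValueError("cuda_cores must be positive")
    return price_usd / cuda_cores


for name, spec in LAUNCH_SPECS.items():
    ratio = usd_per_cuda_core(spec["price_usd"], spec["cuda_cores"])
    print(f"{name:<13} ${ratio:.3f} per CUDA core")
```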

Availability and Market Trends

Market trends and availability can greatly impact purchasing decisions.

User feedback for the 3090 and 4090 is readily available through reviews, forums, and benchmark websites, highlighting their gaming prowess and providing insights into their performance in other applications.

It's essential to stay updated on market trends and factor in user experiences to make informed decisions.

Exploring the Benefits of GPU Cloud Rentals

GPU cloud rentals are getting more popular. They offer a flexible and affordable way to access high-end GPUs without buying them. This lets you adjust your resources based on your project needs, saving you from spending a lot of money upfront.

Who It's For

This option is great for researchers, startups, and anyone looking into deep learning, rendering, or other tasks that need a lot of GPU power. The mix of flexibility and lower costs makes it an attractive choice for many people.

Benefits of Renting GPUs in the Cloud

- Cost-Effectiveness: Utilizing cloud services reduces initial investment costs, as users can select instance types tailored to their workloads, optimizing costs accordingly.

- Scalability: Cloud services allow users to rapidly scale up or down resources based on demand, crucial for applications that need to process large-scale data or handle high concurrency requests.

- Ease of Management: Cloud service providers typically handle hardware maintenance, software updates, and security issues, enabling users to focus solely on model development and application.

Novita AI GPU Instance: Harnessing the Power of NVIDIA Series

As you can see, the NVIDIA GeForce RTX 3090, RTX 4090, and V100 are all strong GPUs to choose from. If you want access to high-performance GPUs without buying the hardware, one option is Novita AI GPU Instance.

Novita AI GPU Instance, a cloud-based solution, stands out as an exemplary service in this domain. This cloud is equipped with high-performance GPUs like NVIDIA A100 SXM and RTX 4090. This is particularly beneficial for PyTorch users who require the additional computational power that GPUs provide without the need to invest in local hardware.

What can you get from renting them in Novita AI GPU Instance?

Get $0.5 in API Credits and $1 in GPU Credits for Free with Your First Login!

- Cost-efficient: reduce cloud costs by up to 50%

- Flexible GPU resources that can be accessed on demand

- Instant deployment

- Customizable templates

- Large-capacity storage

- Support for a variety of the most demanding AI models

- Get 100 GB free

Take Renting RTX 3090 in Novita AI GPU Instance for Example

What benefits will you get by renting in our GPU cloud?

When you are deciding which GPU to buy and weighing both its capabilities and its price, you can choose to rent it in our Novita AI GPU Instance instead. Let's take renting the NVIDIA GeForce RTX 3090 as an example:

- Price:

Buying a GPU means a high upfront cost. Renting a GPU in the cloud can reduce your costs considerably because you are charged on demand. For example, the NVIDIA RTX 3090 24GB costs $0.35/hr and is billed for the time you use it, so you pay nothing when you don’t need it.

- Function

Don’t worry about the function! Users get the performance of a dedicated GPU in the Novita AI GPU Instance.

The same features:

- 24 GB VRAM

- Total Disk: 6144 GB
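To decide between renting and buying, it helps to estimate the break-even point. The following minimal sketch divides a purchase price by the $0.35/hr rental rate quoted above. The purchase prices come from the RTX 3090 price range earlier in this article. The function name and the 8 hours per day usage pattern are hypothetical, and the estimate ignores electricity, resale value, and the rest of the system.

```python
# Break-even between buying an RTX 3090 and renting one at the hourly rate quoted
# in the article. The usage pattern (hours per day) is a hypothetical assumption.

RENTAL_USD_PER_HOUR = 0.35  # RTX 3090 24GB rate quoted above
HOURS_PER_DAY = 8           # assumed daily usage


def break_even_hours(purchase_usd: float, rental_usd_per_hour: float) -> float:
    """Return the rental hours at which total rent equals the purchase price."""
    if rental_usd_per_hour <= 0:
        raise ValueError("rental rate must be positive")
    return purchase_usd / rental_usd_per_hour


for price in (1000, 1499):  # low end of the market range and the launch price
    hours = break_even_hours(price, RENTAL_USD_PER_HOUR)
    days = hours / HOURS_PER_DAY
    print(f"${price}: break-even after {hours:,.0f} rental hours "
          f"(about {days:,.0f} days at {HOURS_PER_DAY} h/day)")
```

If your expected usage falls well below the break-even hours, renting is likely cheaper. Above that point, buying starts to pay off.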

Conclusion

In conclusion, the comparison of NVIDIA V100, 3090, and 4090 shows that there are many powerful GPUs available. Each one has unique features for different needs, whether for gaming, deep learning, or professional work. Performance, energy use, and prices differ among these options. This means it is important to match their abilities with what you really need. Understanding how powerful they are and keeping up with trends can help you make the right choice. You can decide to invest money upfront for value over time or consider cloud rentals for flexibility in your projects. Choose wisely to balance performance, cost, and growth to improve your GPU experience.

Frequently Asked Questions

Which GPU is Best for Deep Learning Tasks?

The NVIDIA V100 is great for deep learning. It has special Tensor Cores built for deep learning frameworks. This, along with a high number of CUDA cores and a lot of memory, provides excellent tensor performance.

Can I Use These GPUs for 4K Gaming?

Yes, the GeForce RTX 3090 and 4090 are great options for 4K gaming. Their GeForce RTX technology, along with a lot of CUDA cores and quick memory, provides a strong gaming experience at 4K resolutions.

Is Upgrading to a 4090 Worth It from a 3090?

Upgrading from a 3090 to a 4090 gives you a big boost in GPU performance. The 4090 comes with more CUDA cores (16,384 versus 10,496) and newer GeForce RTX features such as DLSS 3. These upgrades help make your gaming smoother and more enjoyable, so whether the upgrade is worth it comes down to your budget and how demanding your games or applications are.

Novita AI is the all-in-one cloud platform that empowers your AI ambitions. Integrated APIs, serverless, GPU Instance: the cost-effective tools you need. Eliminate infrastructure, start free, and make your AI vision a reality.
