Mixtral 8x22b Secrets Revealed: A Comprehensive Guide

Discover the hidden features of the Mixtral 8x22b in our comprehensive guide. Uncover tips, tricks, and insights to maximize your experience!

Introduction

Mixtral 8x22B, a large language model from Mistral AI, is a strong option for natural language processing (NLP) work. Because only a subset of its parameters is used for each token, it can respond faster than a dense model of comparable size, which suits applications that need prompt language understanding. Its open release also lets developers customize and extend the model to build new NLP solutions at lower cost.

Understanding Mixtral 8x22B Technology

Mixtral 8x22B is built on a sparse Mixture-of-Experts (SMoE) architecture, which lets a very large model process language while using only part of its network at a time. It performs well on multilingual tasks, mathematics, coding, and reasoning benchmarks. Its open release encourages experimentation and collaboration.

What Makes the Mixtral 8x22B Model Unique?

Mixtral 8x22B was the latest model released by Mistral AI at the time of writing. It uses a sparse mixture of experts (SMoE) architecture with 141 billion total parameters, of which about 39 billion are active for any given token. Many of its advantages come from this architecture: an SMoE network contains several smaller sub-networks (experts) and routes each token to only a few of them, which saves time and computing power.

Key Components and Architecture

Two aspects of the sparse Mixture-of-Experts (SMoE) design stand out in Mixtral 8x22B:

- Sparse Mixture-of-Experts (SMoE):

  - Mixtral 8x22B employs an innovative MoE architecture.

  - Instead of having one monolithic neural network, it assembles a team of experts, each specializing in a specific aspect of language understanding.

  - These experts collaborate to provide accurate predictions, making Mixtral highly adaptable.

- Activation Patterns:

  - Mixtral’s activation patterns are like a spotlight in a dark room.

  - Instead of illuminating the entire neural network, it selectively activates only the relevant parts.

  - This sparse activation reduces computation time and memory usage, resulting in faster inference.
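To make the routing idea concrete, here is a minimal toy sketch of top-k expert routing in plain Python. It is not Mixtral's implementation: the experts are simple element-wise scalers, the dimensions are tiny, and the names (`make_expert`, `route`, `router_weights`) are made up for illustration. It assumes 8 experts with 2 active per token and a softmax taken over the selected experts' scores.

```python
import math
import random

NUM_EXPERTS = 8  # assumption for this sketch: 8 experts per layer
TOP_K = 2        # assumption for this sketch: 2 experts active per token
DIM = 4          # toy hidden size, far smaller than any real model


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def make_expert(seed, dim):
    """Build a toy expert that scales each input element by a fixed weight."""
    rng = random.Random(seed)
    weights = [rng.uniform(-1.0, 1.0) for _ in range(dim)]

    def expert(x):
        return [w * xi for w, xi in zip(weights, x)]

    return expert


def route(token, router_weights, experts, top_k=TOP_K):
    """Send one token through only its top_k highest-scoring experts."""
    # One router score per expert: dot product of a router row and the token.
    logits = [sum(w * x for w, x in zip(row, token)) for row in router_weights]
    chosen = sorted(range(len(experts)), key=lambda i: logits[i], reverse=True)[:top_k]
    gates = softmax([logits[i] for i in chosen])

    output = [0.0] * len(token)
    for gate, idx in zip(gates, chosen):
        expert_out = experts[idx](token)  # the other experts are never evaluated
        output = [o + gate * y for o, y in zip(output, expert_out)]
    return output, chosen


rng = random.Random(0)
experts = [make_expert(seed, DIM) for seed in range(NUM_EXPERTS)]
router_weights = [[rng.uniform(-1.0, 1.0) for _ in range(DIM)] for _ in range(NUM_EXPERTS)]
token = [0.5, -1.0, 0.25, 2.0]

output, chosen = route(token, router_weights, experts)
print("experts used:", chosen)
print("output:", output)
```

Only the two selected experts run for this token, which is where the compute savings of sparse activation come from. All experts still have to be held in memory, because a different token may be routed to any of them.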

Installation and Setup

System Requirements for Mixtral 8x22B

Before installing Mixtral 8x22B, ensure your system meets the following requirements:

- GPU: NVIDIA GeForce RTX 4090 or equivalent with at least 24GB VRAM.

- CPU: Modern multi-core processor, such as AMD Ryzen 7950X3D.

- RAM: Minimum 64GB.

- Operating System: Linux or other compatible systems.

- Storage: At least 274GB of free space.

Step-by-Step Installation Guide

To install Mixtral 8x22B, follow these steps:

- Prepare Your Environment:

  - Update your system and install the necessary drivers for your GPU.

  - Ensure you have Python installed on your system.

- Install Required Libraries:

  - Use a package manager such as `pip` to install libraries such as `ollama`, which is used to run Mixtral models. Note that the `pip` package is the Python client; the `ollama` commands in the next steps also require the Ollama runtime itself to be installed.

- Download the Model:

  - Use the command `ollama pull mixtral:8x22b` to download the Mixtral 8x22B model to your local machine.

- Run the Model:

  - Execute `ollama run mixtral:8x22b` to start the model. You can interact with it using the command line interface.
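Once the model is running, it can also be queried from a script instead of the command line. The sketch below uses only the Python standard library and assumes an Ollama server is listening on its default local address (`http://localhost:11434`) with `mixtral:8x22b` already pulled. The helper names `build_request` and `generate` are made up for this example.

```python
import json
import urllib.request

# Assumption: Ollama is running locally on its default port.
OLLAMA_URL = "http://localhost:11434/api/generate"
MODEL = "mixtral:8x22b"


def build_request(prompt, model=MODEL, url=OLLAMA_URL):
    """Build a POST request for a single, non-streaming completion."""
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def generate(prompt, timeout=600):
    """Send the prompt to the local server and return the generated text."""
    with urllib.request.urlopen(build_request(prompt), timeout=timeout) as resp:
        body = json.loads(resp.read().decode("utf-8"))
    return body["response"]


# Example call (needs a running Ollama server, so it is left commented out):
# print(generate("Explain sparse mixture-of-experts in one sentence."))
```

The long default timeout is deliberate: on hardware that offloads part of the model to system RAM, a single response can take a while.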

Optimizing Performance with Mixtral 8x22b

To get the most out of Mixtral 8x22B, it helps to compare it with earlier models and then tune your setup. Developers can adjust the model and its runtime configuration to fit their needs.

Comparative Analysis with Previous Models

A comparative analysis between Mixtral 8x22B and previous models can provide insights into the advancements and improvements achieved with Mixtral 8x22B. The following table compares the key features and performance metrics of Mixtral 8x22B with Mistral 7B and Mixtral 8x7B:

This comparative analysis highlights the significant improvements achieved with Mixtral 8x22B, making it the most performant open model in the Mistral AI family.

Tuning Tips for Enhanced Efficiency

The following tips can help Mixtral 8x22B run more efficiently:

- Utilize Multi-GPU Setup: If you have several GPUs, distribute the workload across them. Use a framework that supports multi-GPU execution, such as TensorFlow or PyTorch.

- Optimize Batch Size: Experiment with different batch sizes to find the optimal one that maximizes GPU utilization without causing memory overflow.

- Use Mixed Precision Training: Implement mixed precision training to speed up computation and reduce memory usage by using both 16-bit and 32-bit floating-point types.

- Profile Your Code: Use profiling tools to identify bottlenecks in your code. Optimize the slowest parts to improve overall performance.

- Data Pipeline Optimization: Ensure that your data loading and preprocessing do not become a bottleneck. Use efficient data loading techniques and consider using data augmentation on the fly.

- Monitor Resource Usage: Use monitoring tools to track GPU utilization, memory usage, and temperature. Adjust workloads accordingly to ensure optimal performance.
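As one way to act on the monitoring tip, the sketch below polls `nvidia-smi` from Python and parses its CSV output. It assumes an NVIDIA driver with `nvidia-smi` on the `PATH`. The function names and the sample line are made up for illustration, and the parser assumes every field is numeric (some GPUs report `[N/A]` for certain fields, which this sketch does not handle).

```python
import subprocess

# Fields requested from nvidia-smi, in the order they appear in each CSV row.
QUERY_FIELDS = ["utilization.gpu", "memory.used", "memory.total", "temperature.gpu"]


def parse_gpu_stats(csv_text):
    """Parse 'csv,noheader,nounits' output into one dict per GPU."""
    stats = []
    for line in csv_text.strip().splitlines():
        util, used, total, temp = (float(value) for value in line.split(","))
        stats.append(
            {
                "util_pct": util,
                "mem_used_mib": used,
                "mem_total_mib": total,
                "temp_c": temp,
            }
        )
    return stats


def read_gpu_stats():
    """Run nvidia-smi once and return the parsed stats (requires an NVIDIA GPU)."""
    result = subprocess.run(
        [
            "nvidia-smi",
            "--query-gpu=" + ",".join(QUERY_FIELDS),
            "--format=csv,noheader,nounits",
        ],
        capture_output=True,
        text=True,
        check=True,
    )
    return parse_gpu_stats(result.stdout)


# Made-up sample row, used here so the parser can be tried without a GPU.
sample = "87, 20480, 24564, 65\n"
stats = parse_gpu_stats(sample)
print(stats)
```

Calling `read_gpu_stats()` in a loop with a short sleep gives a simple view of whether the GPU is saturated or waiting on data loading.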

Ways of Using Novita AI to Achieve Your Goals with Mixtral

As the requirements above show, the GPU is the most important piece of hardware for running Mixtral 8x22B. Both the GPU model and its VRAM matter: the setup described here calls for a card such as the NVIDIA GeForce RTX 4090 with at least 24GB of VRAM. Choosing the right GPU for your needs is therefore important.

Run Mixtral 8x22b on Novita AI GPU Instance

Novita AI GPU Instance is a cloud-based service equipped with high-performance GPUs such as the NVIDIA A100 SXM and RTX 4090. This is particularly useful for PyTorch users who need the computational power of GPUs without investing in local hardware.

The cloud infrastructure is designed to be flexible and scalable, allowing users to choose from a variety of GPU configurations to match their project needs. It also supports a range of software environments, and users pay only for what they use, which can reduce costs considerably.

Rent NVIDIA GeForce RTX 4090 in Novita AI GPU Instance

If you are weighing both the capability and the price of a GPU before buying one, renting it on Novita AI GPU Instance is an alternative. Take the NVIDIA GeForce RTX 4090 as an example:

- Price:

  Buying a GPU means a high upfront cost. Renting one in the GPU cloud is charged on demand: the NVIDIA GeForce RTX 4090 costs 0.74 dollars per hour, billed for the time you actually use it, so you pay nothing while it sits idle.

- Function:

  A rented instance offers the performance of a dedicated GPU, with the following specifications:

  - 24GB VRAM

  - 134GB RAM, 16 vCPUs

  - Total Disk: 289GB

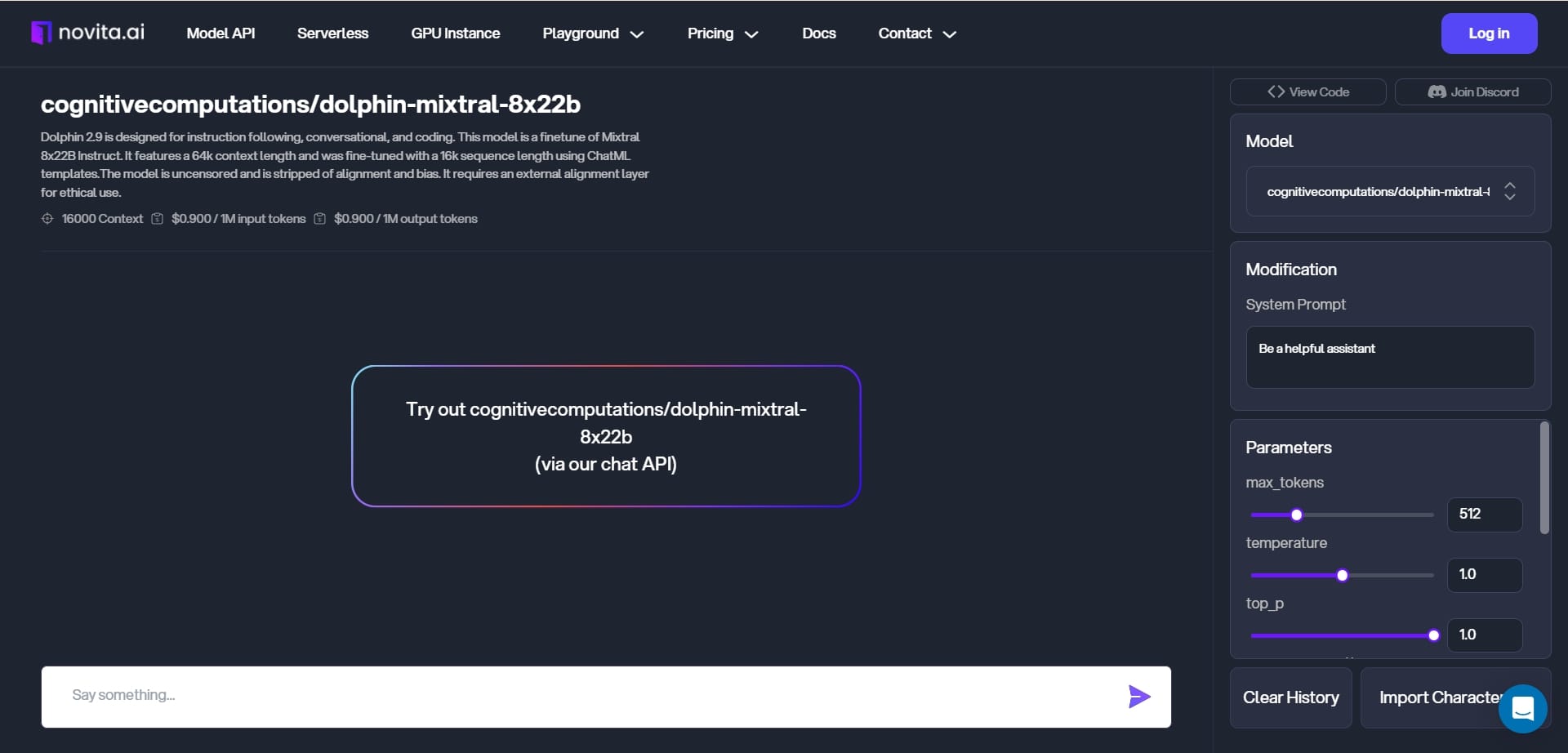
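The pay-per-hour pricing is easy to reason about with a little arithmetic. The sketch below uses the 0.74 dollars per hour rate quoted above; the function names are made up for this example, and any purchase price you pass in is your own assumption rather than a figure from this guide.

```python
HOURLY_RATE_USD = 0.74  # RTX 4090 hourly rate quoted in this guide


def rental_cost(hours, rate=HOURLY_RATE_USD):
    """Cost in dollars of renting the GPU for the given number of hours."""
    return round(hours * rate, 2)


def break_even_hours(purchase_price_usd, rate=HOURLY_RATE_USD):
    """Hours of rental that would add up to a given purchase price."""
    return purchase_price_usd / rate


# Example: a 40-hour experiment at the quoted rate.
print(f"40 hours of rental: ${rental_cost(40):.2f}")
```

`break_even_hours` ignores electricity, depreciation, and the rest of the machine, so treat its result as a rough lower bound on how much use justifies buying.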

Novita AI LLM API Offers API of Mixtral 8x22b

If you don't want to install Mixtral 8x22B yourself, a quick way to use it is through the Novita AI LLM API. Novita AI offers LLM API keys for developers and other users, and its website has more details on using Mixtral 8x22B through the API.

Besides Mixtral 8x22B, the Novita AI LLM API also offers the Llama 3 model.

Conclusion

Understanding how Mixtral 8x22B works makes it easier to set it up efficiently, keep it running at its best, and integrate it into different systems. Knowing its architecture, its components, and how it compares with earlier models helps you get the most out of it in real-world applications across many fields.

Using Mixtral 8x22B effectively means getting the setup right from the start, knowing how to troubleshoot when problems arise, and tuning settings for your workload. What sets Mixtral apart is that you can customize it to fit your project's needs, so it is worth taking the time to explore what it can do.

Frequently Asked Questions

How much RAM do I need for 8x22B?

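Mixtral 8x22B is heavy on memory. Because of its architecture, all of the model's parameters must be loaded into memory during inference, even though only some are used for each token. At 16-bit precision, the 141 billion parameters need roughly 300GB of GPU memory, which in practice means several GPUs. Quantized versions of the model need considerably less.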
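The memory figure follows from simple arithmetic: parameter count times bytes per parameter. The sketch below estimates weight memory only, using the 141 billion parameter count from this guide. It ignores the KV cache, activations, and runtime overhead, so real usage will be higher, and the bytes-per-parameter values for quantized formats are round-number approximations.

```python
TOTAL_PARAMS = 141e9  # total parameter count quoted in this guide

# Approximate storage per parameter; real quantized formats add some overhead.
BYTES_PER_PARAM = {
    "fp16/bf16": 2.0,
    "int8": 1.0,
    "4-bit": 0.5,
}


def weight_memory_gb(num_params, bytes_per_param):
    """Memory for the weights alone, in decimal gigabytes."""
    return num_params * bytes_per_param / 1e9


for precision, size in BYTES_PER_PARAM.items():
    print(f"{precision}: ~{weight_memory_gb(TOTAL_PARAMS, size):.1f} GB")
```

At 16 bits per parameter this comes to about 282 GB for the weights, which is why the answer above rounds up to roughly 300GB once overhead is included.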

How Can Businesses Benefit from Mixtral-8x22B?

The model offers cost efficiency by utilizing a fraction of the total set of parameters per token during inference. This allows businesses to achieve high-quality results while optimizing resource usage and reducing costs.

Future Updates and Roadmap for Mixtral-8x22B

The development team is committed to continuously improving the model’s performance and capabilities based on user feedback. Users can expect regular updates and additions to further enhance the versatility and efficiency of Mixtral-8x22B.

Novita AI is an all-in-one cloud platform for AI development. It offers integrated APIs, serverless deployment, and GPU instances as cost-effective tools, so you can skip managing infrastructure, start for free, and build your AI project.
