Meta's Llama 3.3 70B Instruct: Powering AI Innovation on Novita AI

Explore Llama 3.3 70B Instruct on Novita AI. Discover enhanced performance, cost-effectiveness, and seamless integration for your AI projects.

Meta's latest Llama 3.3 70B Instruct model has arrived, promising enhanced performance at a lower cost. This powerful language model is now available on Novita AI, offering developers a robust tool for building cutting-edge AI applications.

In this article, we'll explore the capabilities of Llama 3.3 70B Instruct, its integration with Novita AI's platform, and how it can accelerate your AI projects.

Table of Contents

- Understanding Llama 3.3 70B Instruct

- Key Features and Improvements

- Integrating Llama 3.3 70B Instruct with Novita AI

- Practical Applications and Use Cases

Understanding Llama 3.3 70B Instruct

Llama 3.3 70B Instruct is the latest addition to Meta's Llama family of large language models. Released on December 6, 2024, this model represents a significant advancement in AI technology, offering performance comparable to the much larger Llama 3.1 405B model on many benchmarks, at a more accessible scale and cost.

| Feature | Details |
|---|---|
| Model Architecture | Built on an optimized transformer architecture with Grouped-Query Attention (GQA) for improved inference scalability. |
| Training Data | Trained on 15 trillion tokens of publicly available data. Knowledge cutoff: December 2023. |
| Multilingual Capabilities | Supports eight languages: English, German, French, Italian, Portuguese, Hindi, Spanish, and Thai. Well suited to global applications. |
| Context Window and Performance | 128K-token context window for processing long inputs, helping the model stay coherent and relevant across long-form content. |

Key Features and Improvements

The Llama 3.3 70B Instruct model brings several enhancements over its predecessors, making it a compelling choice for developers and AI enthusiasts.

Enhanced Performance Across Tasks

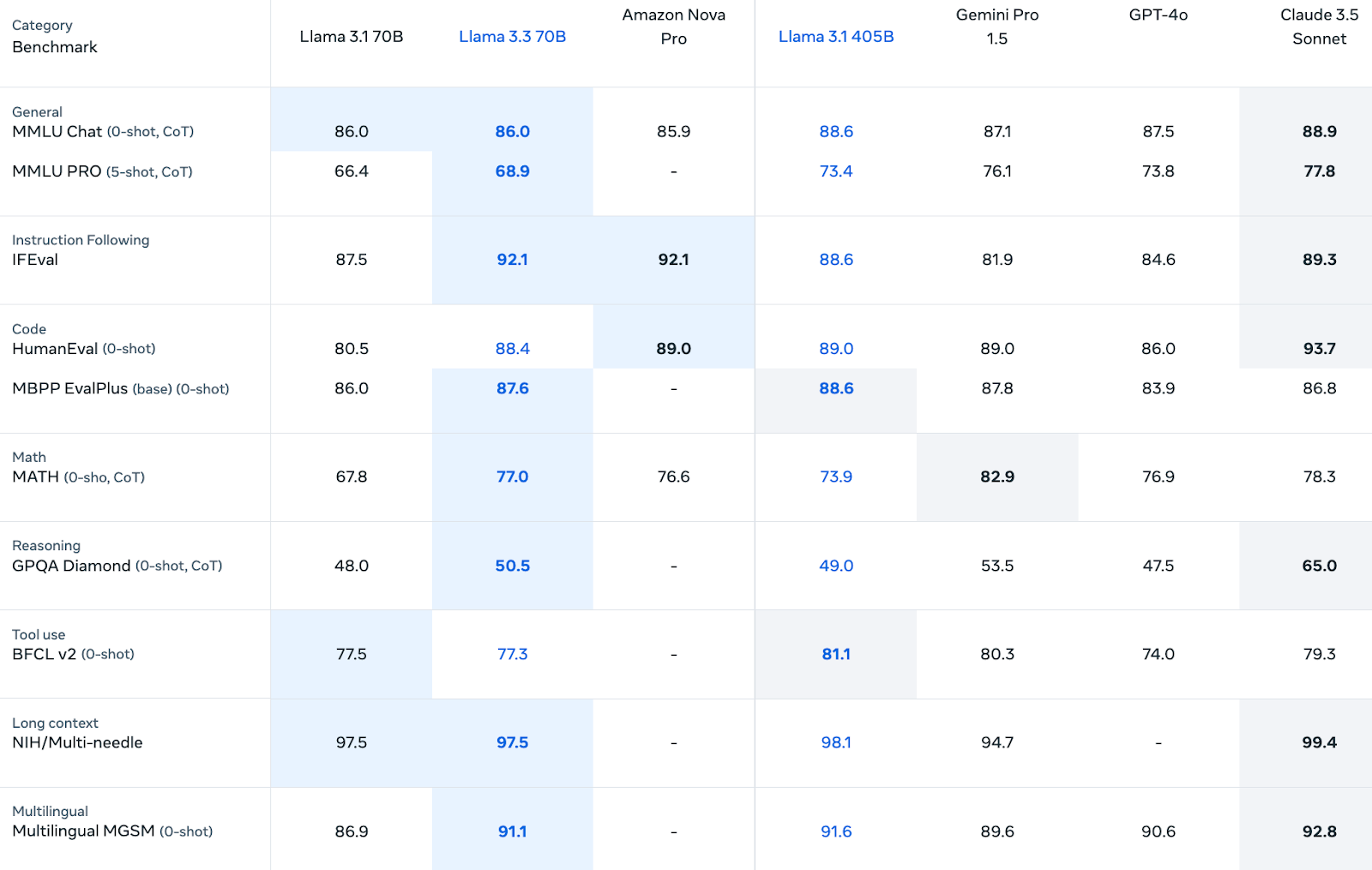

Llama 3.3 70B Instruct performs competitively across a range of benchmarks when compared with other leading models such as Claude 3.5 Sonnet, GPT-4o, and Gemini Pro 1.5:

General Language Understanding: On the MMLU Chat benchmark, Llama 3.3 achieves 86.0%, slightly below Claude 3.5 Sonnet's 88.9% and GPT-4o's 87.5%. On MMLU PRO, it scores 68.9%, notably lower than Claude 3.5 Sonnet's 77.8%.

Specialized Capabilities: The model excels at instruction following with an IFEval score of 92.1%, outperforming both GPT-4o (84.6%) and Gemini Pro 1.5 (81.9%). In coding, it achieves 88.4% on HumanEval, though it falls short of Claude 3.5 Sonnet's 93.7%.

Mathematical and Reasoning Skills: Llama 3.3 scores 77.0% on the MATH benchmark, placing it between Claude 3.5 Sonnet (78.3%) and GPT-4o (76.9%). However, it is relatively weak on the GPQA Diamond reasoning test at 50.5%, well below Claude 3.5 Sonnet's 65.0%.

Long Context and Multilingual Tasks: The model particularly shines at long-context handling, scoring 97.5% on the NIH/Multi-needle benchmark, only slightly behind Claude 3.5 Sonnet's 99.4%. On multilingual tasks, it achieves a strong 91.1% on Multilingual MGSM, demonstrating robust cross-language capabilities.
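To make the comparison easier to scan, here is a small sketch that tabulates the scores quoted in this section and computes Llama 3.3's gap to the best competitor on each benchmark. The numbers are copied from the paragraphs above; the dictionary layout and the `gap_to_best` helper are illustrative only, not part of any published tooling.

```python
from typing import Optional

# Scores (%) exactly as quoted in this article; missing entries were not given here.
SCORES = {
    "MMLU Chat": {"Llama 3.3 70B": 86.0, "Claude 3.5 Sonnet": 88.9, "GPT-4o": 87.5},
    "MMLU PRO": {"Llama 3.3 70B": 68.9, "Claude 3.5 Sonnet": 77.8},
    "IFEval": {"Llama 3.3 70B": 92.1, "GPT-4o": 84.6, "Gemini Pro 1.5": 81.9},
    "HumanEval": {"Llama 3.3 70B": 88.4, "Claude 3.5 Sonnet": 93.7},
    "MATH": {"Llama 3.3 70B": 77.0, "Claude 3.5 Sonnet": 78.3, "GPT-4o": 76.9},
    "GPQA Diamond": {"Llama 3.3 70B": 50.5, "Claude 3.5 Sonnet": 65.0},
    "NIH/Multi-needle": {"Llama 3.3 70B": 97.5, "Claude 3.5 Sonnet": 99.4},
    "Multilingual MGSM": {"Llama 3.3 70B": 91.1},  # no competitor figure quoted
}


def gap_to_best(scores: dict, model: str = "Llama 3.3 70B") -> Optional[float]:
    """Model score minus the best competitor's (positive means the model leads)."""
    others = [value for name, value in scores.items() if name != model]
    if not others:
        return None  # nothing to compare against
    return round(scores[model] - max(others), 1)


for bench, scores in SCORES.items():
    gap = gap_to_best(scores)
    label = "n/a" if gap is None else f"{gap:+.1f} pts"
    print(f"{bench:<18} {label}")
```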

Advanced Capabilities

Some of the standout features of Llama 3.3 70B Instruct include:

- JSON Output Generation: The model can produce structured JSON outputs, facilitating function calling and integration with other systems.

- Step-by-Step Reasoning: It provides detailed, step-by-step explanations for complex problems, enhancing transparency and user understanding.

- Improved Code Feedback: The model offers more accurate and helpful feedback on code, including suggestions for error handling and bug fixes.

- Tool Invocation: Llama 3.3 70B Instruct can identify when to use specific tools or external resources and invoke them appropriately.
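JSON output and tool invocation are usually exercised through the OpenAI-style `tools` parameter. The sketch below assumes (check the LLM API reference before relying on it) that Novita AI's OpenAI-compatible endpoint accepts tool definitions for this model; `get_weather` is a hypothetical tool. It builds the tool schema and dispatches a call from the `name` and JSON `arguments` the model returns, simulated here with literal strings so it runs offline.

```python
import json

# Hypothetical tool the model may decide to call; a real version would query a service.
def get_weather(city: str, unit: str = "celsius") -> dict:
    return {"city": city, "temperature": 21, "unit": unit}


# OpenAI-style tool definition. Assumption: passed as tools=TOOLS to
# client.chat.completions.create(...) on the Novita AI endpoint.
TOOLS = [
    {
        "type": "function",
        "function": {
            "name": "get_weather",
            "description": "Get the current weather for a city.",
            "parameters": {
                "type": "object",
                "properties": {
                    "city": {"type": "string"},
                    "unit": {"type": "string", "enum": ["celsius", "fahrenheit"]},
                },
                "required": ["city"],
            },
        },
    }
]

REGISTRY = {"get_weather": get_weather}


def dispatch_tool_call(name: str, arguments: str) -> str:
    """Run the requested tool; `arguments` is the JSON string produced by the model."""
    if name not in REGISTRY:
        raise ValueError(f"unknown tool: {name}")
    args = json.loads(arguments)  # raises json.JSONDecodeError on malformed output
    if not isinstance(args, dict):
        raise ValueError("tool arguments must be a JSON object")
    # The returned string is sent back to the model in a {"role": "tool", ...} message.
    return json.dumps(REGISTRY[name](**args))


# In a live call, name/arguments come from
# response.choices[0].message.tool_calls[0].function
print(dispatch_tool_call("get_weather", '{"city": "Paris"}'))
```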

Cost-Effectiveness

One of the most significant advantages of Llama 3.3 70B Instruct is its cost-effectiveness. With 70 billion parameters instead of 405 billion, it needs far less memory and compute per generated token, so it costs a fraction as much to run while offering performance comparable to the 405B model on many benchmarks. This makes it an attractive option for developers and businesses looking to leverage advanced AI capabilities without breaking the bank.

Integrating Llama 3.3 70B Instruct with Novita AI

Novita AI has made it easy for developers to access and integrate Llama 3.3 70B Instruct into their projects. By leveraging Novita AI's platform, you can harness the power of this advanced model without the complexities of infrastructure management.

Accessing Llama 3.3 70B Instruct on Novita AI

To get started with Llama 3.3 70B Instruct on Novita AI, follow these steps:

Step 1: Try the Llama 3.3 70B Instruct Demo

Step 2: Go to Novita AI and log in using your Google or GitHub account, or your email address.

Step 3: Manage your API key:

- Navigate to "Key Management" in the settings

- A default key is created upon first login

- Generate additional keys by clicking "+ Add New Key"
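Rather than hard-coding the key in source files, you may prefer to load it from an environment variable. A minimal sketch; the variable name `NOVITA_API_KEY` is our own choice, not something the platform requires.

```python
import os


def load_api_key(var: str = "NOVITA_API_KEY") -> str:
    """Read the API key from an environment variable (name is our assumption)."""
    key = os.environ.get(var, "").strip()
    if not key:
        raise RuntimeError(f"Set the {var} environment variable to your Novita AI API key.")
    return key

# Usage: export NOVITA_API_KEY=... in your shell, then pass
# api_key=load_api_key() when constructing the OpenAI client.
```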

Explore the LLM API reference to discover the available APIs and models.

Step 4: Set up your development environment and configure request options such as content, role, name, and prompt.

Step 5: Run multiple tests to verify API performance and consistency.
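Step 5 can be automated with a small harness that repeats a request and records latency and how many distinct answers come back. This is a sketch: the `benchmark` helper is hypothetical, and it takes the request as a plain callable so it can wrap a real client call or, as here, a stub for offline testing.

```python
import statistics
import time
from typing import Callable


def benchmark(call: Callable[[], str], runs: int = 5) -> dict:
    """Invoke `call` repeatedly and report latency stats and answer consistency."""
    if runs < 1:
        raise ValueError("runs must be at least 1")
    latencies, answers = [], []
    for _ in range(runs):
        start = time.perf_counter()
        answers.append(call())
        latencies.append(time.perf_counter() - start)
    return {
        "runs": runs,
        "median_s": statistics.median(latencies),
        "max_s": max(latencies),
        "distinct_answers": len(set(answers)),
    }

# With the OpenAI client configured for Novita AI, a real call could be:
# call = lambda: client.chat.completions.create(
#     model="meta-llama/llama-3.3-70b-instruct",
#     messages=[{"role": "user", "content": "Hi there!"}],
#     temperature=0,
# ).choices[0].message.content
print(benchmark(lambda: "Hello!", runs=3))  # stub stands in for a network call
```

Lower temperatures tend to reduce `distinct_answers`; at `temperature=1` some variation between runs is expected.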

API Integration

Novita AI exposes an OpenAI-compatible API, so you can call Llama 3.3 70B Instruct with curl or with the official OpenAI client libraries for Python and JavaScript.

For Python users:

```python
from openai import OpenAI

# Novita AI's endpoint is OpenAI-compatible, so the official OpenAI SDK works.
client = OpenAI(
    base_url="https://api.novita.ai/v3/openai",
    api_key="Your API Key",  # replace with a key from Key Management
)

model = "meta-llama/llama-3.3-70b-instruct"
stream = True  # or False
max_tokens = 65536
system_content = """Be a helpful assistant"""
temperature = 1
top_p = 1
min_p = 0
top_k = 50
presence_penalty = 0
frequency_penalty = 0
repetition_penalty = 1
response_format = {"type": "text"}

chat_completion_res = client.chat.completions.create(
    model=model,
    messages=[
        {"role": "system", "content": system_content},
        {"role": "user", "content": "Hi there!"},
    ],
    stream=stream,
    max_tokens=max_tokens,
    temperature=temperature,
    top_p=top_p,
    presence_penalty=presence_penalty,
    frequency_penalty=frequency_penalty,
    response_format=response_format,
    # Sampling options outside the OpenAI schema are sent via extra_body.
    extra_body={
        "top_k": top_k,
        "repetition_penalty": repetition_penalty,
        "min_p": min_p,
    },
)

if stream:
    # Each chunk carries an incremental delta; skip chunks without choices.
    for chunk in chat_completion_res:
        if chunk.choices:
            print(chunk.choices[0].delta.content or "", end="")
else:
    print(chat_completion_res.choices[0].message.content)
```

For JavaScript users:

```javascript
// Uses the official OpenAI Node SDK; run as an ES module (e.g. a .mjs file).
import OpenAI from "openai";

const openai = new OpenAI({
  baseURL: "https://api.novita.ai/v3/openai",
  apiKey: "Your API Key", // replace with a key from Key Management
});

const stream = true; // or false

async function run() {
  const completion = await openai.chat.completions.create({
    messages: [
      { role: "system", content: "Be a helpful assistant" },
      { role: "user", content: "Hi there!" },
    ],
    model: "meta-llama/llama-3.3-70b-instruct",
    stream,
    response_format: { type: "text" },
    max_tokens: 65536,
    temperature: 1,
    top_p: 1,
    presence_penalty: 0,
    frequency_penalty: 0,
    // Non-OpenAI sampling options, passed through in the request body.
    min_p: 0,
    top_k: 50,
    repetition_penalty: 1,
  });

  if (stream) {
    for await (const chunk of completion) {
      const choice = chunk.choices[0];
      if (!choice) continue;
      // Print tokens as they arrive, without a newline after each one.
      process.stdout.write(choice.delta?.content ?? "");
      if (choice.finish_reason) {
        console.log(`\n[finish_reason: ${choice.finish_reason}]`);
      }
    }
  } else {
    console.log(completion.choices[0].message.content);
  }
}

run().catch(console.error);
```

For curl users:

```bash
curl "https://api.novita.ai/v3/openai/chat/completions" \
  -H "Content-Type: application/json" \
  -H "Authorization: Bearer Your API Key" \
  -d @- << 'EOF'
{
  "model": "meta-llama/llama-3.3-70b-instruct",
  "messages": [
    {
      "role": "system",
      "content": "Be a helpful assistant"
    },
    {
      "role": "user",
      "content": "Hi there!"
    }
  ],
  "response_format": { "type": "text" },
  "max_tokens": 65536,
  "temperature": 1,
  "top_p": 1,
  "min_p": 0,
  "top_k": 50,
  "presence_penalty": 0,
  "frequency_penalty": 0,
  "repetition_penalty": 1
}
EOF
```

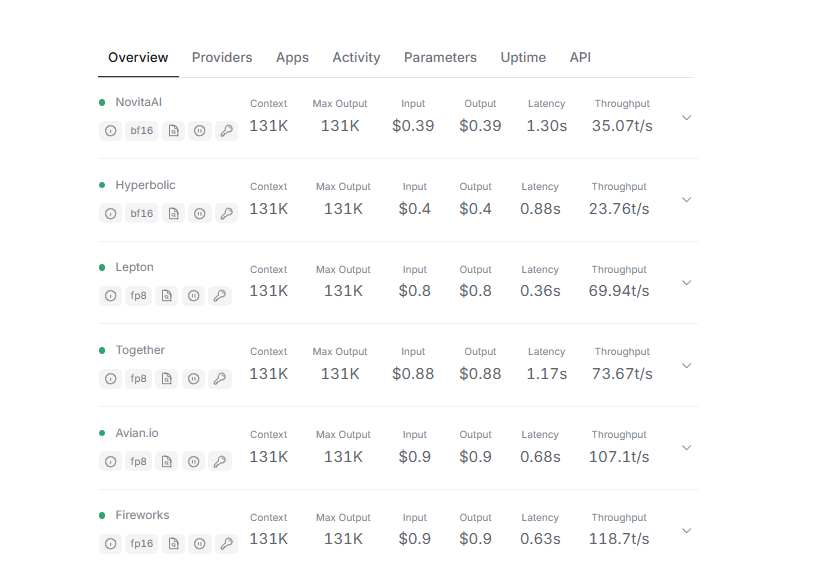

Pricing and Resource Management

Novita AI offers competitive pricing for using Llama 3.3 70B Instruct:

- Input tokens: $0.39 per million tokens

- Output tokens: $0.39 per million tokens

To manage costs effectively, consider implementing token counting and setting appropriate limits in your application.
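As a rough illustration, the sketch below estimates request cost at the rates listed above and caps `max_tokens` so a single request stays within both a dollar budget and the 128K-token context window. The rates are hard-coded from this article and may change. The four-characters-per-token estimate is a common rule of thumb for English, not Llama 3.3's actual tokenizer, and it ignores chat-template overhead; for exact counts, read the `usage` field returned with API responses.

```python
import math

# Rates quoted in this article, in USD per million tokens; check current pricing.
INPUT_RATE_PER_M = 0.39
OUTPUT_RATE_PER_M = 0.39
CONTEXT_WINDOW = 131_072  # 128K tokens


def estimate_tokens(text: str) -> int:
    """Rough estimate (~4 characters per token); NOT the model's real tokenizer."""
    return max(1, math.ceil(len(text) / 4))


def request_cost(input_tokens: int, output_tokens: int) -> float:
    """Cost in USD for one request at the rates above."""
    return (input_tokens * INPUT_RATE_PER_M + output_tokens * OUTPUT_RATE_PER_M) / 1_000_000


def capped_max_tokens(prompt: str, budget_usd: float) -> int:
    """Largest max_tokens that keeps the request under budget and inside the context window."""
    prompt_tokens = estimate_tokens(prompt)
    remaining = budget_usd - request_cost(prompt_tokens, 0)
    if remaining <= 0:
        return 0
    by_budget = int(remaining * 1_000_000 / OUTPUT_RATE_PER_M)
    by_context = CONTEXT_WINDOW - prompt_tokens
    return max(0, min(by_budget, by_context))


print(f"${request_cost(1_000_000, 1_000_000):.2f}")  # prints $0.78
```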

Practical Applications and Use Cases

Llama 3.3 70B Instruct's balance of strong performance and modest hardware requirements (relative to 405B-class models) opens up a wide range of possibilities for developers and researchers. Because it can be used through hosted APIs or, with quantization, run on a single high-end developer workstation, it is an approachable option for those without access to enterprise-level infrastructure. Let's explore some key areas where Llama 3.3 70B Instruct can be particularly useful.

Multilingual Chatbots and Assistants

One of Llama 3.3 70B Instruct's strengths is its ability to handle multiple languages, supporting eight core languages including English, Spanish, French, and Hindi. This makes it ideal for building multilingual chatbots or virtual assistants.

- Customer Support: Create chatbots that answer queries in multiple languages with a single model, which can be self-hosted on one high-memory GPU when quantized.

- Educational Tools: Develop language learning assistants or multilingual tutoring systems.

- Virtual Travel Guides: Build AI-powered guides that can communicate in various languages for international travelers.
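For a multilingual assistant, one simple approach is to pin the reply language in the system prompt and check it against the eight officially supported languages listed earlier. A sketch under that assumption; the prompt wording and the `build_messages` helper are our own, and the resulting list could be passed as `messages` to the chat completion calls in the API Integration section.

```python
# Officially supported languages, as listed in the model overview.
SUPPORTED_LANGUAGES = {
    "English", "German", "French", "Italian",
    "Portuguese", "Hindi", "Spanish", "Thai",
}


def build_messages(user_text: str, language: str) -> list:
    """Build chat messages asking the model to reply in `language` (hypothetical prompt)."""
    if language not in SUPPORTED_LANGUAGES:
        # Other languages may work, but they are outside the officially supported set.
        raise ValueError(f"{language!r} is not an officially supported language")
    system = (
        "You are a helpful customer-support assistant. "
        f"Always reply in {language}, whatever language the user writes in."
    )
    return [
        {"role": "system", "content": system},
        {"role": "user", "content": user_text},
    ]


messages = build_messages("¿Dónde está mi pedido?", "Spanish")
print(messages[0]["content"])
```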

Coding Support and Software Development

With strong performance on coding benchmarks like HumanEval and MBPP EvalPlus, Llama 3.3 70B Instruct serves as a reliable assistant for various programming tasks.

- Code Generation: Automate the creation of boilerplate code or generate entire functions based on descriptions.

- Debugging Assistance: Analyze code snippets to identify and suggest fixes for bugs.

- Unit Test Creation: Automatically generate comprehensive unit tests for existing codebases.

- Documentation Generation: Create detailed code documentation and comments based on existing code.
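In code-generation pipelines, chat replies typically arrive as Markdown with fenced code blocks, so the code has to be pulled out before it can be saved or tested. A minimal sketch, assuming the reply uses standard triple-backtick fences; `extract_code_blocks` is a hypothetical helper name.

```python
import re
from typing import Optional

FENCE = "`" * 3  # built indirectly to avoid writing three literal backticks here

# Matches a fence, an optional language tag, a newline, then the body up to the next fence.
CODE_BLOCK = re.compile(FENCE + r"([\w+-]*)[ \t]*\n(.*?)" + FENCE, re.DOTALL)


def extract_code_blocks(reply: str, language: Optional[str] = None) -> list:
    """Return the bodies of fenced code blocks, optionally filtered by language tag."""
    blocks = []
    for lang, body in CODE_BLOCK.findall(reply):
        if language is None or lang.lower() == language.lower():
            blocks.append(body.rstrip("\n"))
    return blocks


reply = "Here you go:\n" + FENCE + "python\ndef add(a, b):\n    return a + b\n" + FENCE + "\nDone."
print(extract_code_blocks(reply, "python")[0])
```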

Synthetic Data Generation

Llama 3.3 70B Instruct excels in generating high-quality, labeled synthetic datasets, which is particularly valuable for smaller teams needing domain-specific data.

- Training Data Creation: Generate labeled datasets for machine learning models in various domains.

- Augmenting Existing Datasets: Expand limited datasets with synthetically generated examples to improve model performance.

- Scenario Simulation: Create diverse text-based scenarios for testing chatbots or other NLP systems.
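Model-generated datasets need validation before they are used for training. One common pattern, sketched here under our own assumptions, is to ask the model for one JSON object per line (JSONL) using a fixed label set, then keep only lines that parse, carry an allowed label, and are not exact duplicates. The label set and the `text`/`label` field names are hypothetical.

```python
import json

# Hypothetical label set for a sentiment dataset; adjust to your task.
ALLOWED_LABELS = {"positive", "negative", "neutral"}


def parse_synthetic_examples(raw: str) -> tuple:
    """Parse JSONL model output into (valid examples, count of rejected lines)."""
    valid, rejected, seen = [], 0, set()
    for line in raw.splitlines():
        line = line.strip()
        if not line:
            continue
        try:
            item = json.loads(line)
        except json.JSONDecodeError:
            rejected += 1  # not valid JSON
            continue
        text = item.get("text") if isinstance(item, dict) else None
        label = item.get("label") if isinstance(item, dict) else None
        ok = (
            isinstance(text, str) and text.strip()
            and isinstance(label, str) and label in ALLOWED_LABELS
            and text.strip() not in seen  # drop exact duplicates
        )
        if ok:
            seen.add(text.strip())
            valid.append({"text": text.strip(), "label": label})
        else:
            rejected += 1
    return valid, rejected
```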

Multilingual Content Creation and Localization

Leveraging its multilingual capabilities, Llama 3.3 70B Instruct is a powerful tool for content creation and localization efforts.

- Marketing Materials: Produce localized marketing copy for different regions and languages.

- Technical Documentation: Translate and adapt technical documents for international audiences.

- Multilingual Blogging: Generate blog posts or articles in multiple languages simultaneously.

Research and Experimentation

For researchers, Llama 3.3 70B Instruct provides an efficient platform for exploring various aspects of language modeling and AI.

- Alignment Studies: Investigate techniques for improving AI safety and alignment.

- Model Distillation: Explore methods for creating smaller, specialized models from Llama 3.3 70B Instruct.

- Fine-tuning Experiments: Test different fine-tuning approaches for specific tasks or domains.

Knowledge-Based Applications

The strong text-processing capabilities of Llama 3.3 70B Instruct make it suitable for applications that require handling large volumes of text efficiently.

- Automated Summarization: Generate concise summaries of long documents, reports, or articles.

- Question Answering Systems: Build systems that can accurately answer questions based on large knowledge bases.

- Report Generation: Automatically create structured reports from unstructured data sources.
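Even with a 128K-token window, very long documents or collections are often summarized in two passes: split the text into overlapping chunks, summarize each, then summarize the partial summaries. A sketch of that pattern; sizes are measured in characters as a simplification (an assumption, not a token-accurate split), and `summarize` is a hypothetical callable standing in for a chat completion request.

```python
from typing import Callable


def chunk_text(text: str, size: int = 8000, overlap: int = 500) -> list:
    """Split text into windows of `size` characters that overlap by `overlap`."""
    if not 0 <= overlap < size:
        raise ValueError("overlap must be >= 0 and smaller than size")
    chunks, start = [], 0
    while start < len(text):
        chunks.append(text[start:start + size])
        if start + size >= len(text):
            break  # last window reached the end of the text
        start += size - overlap
    return chunks


def map_reduce_summary(text: str, summarize: Callable[[str], str]) -> str:
    """Summarize each chunk, then summarize the concatenated partial summaries."""
    partials = [summarize(chunk) for chunk in chunk_text(text)]
    if not partials:
        return ""
    if len(partials) == 1:
        return partials[0]
    return summarize("\n\n".join(partials))
```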

Flexible Deployment for Small Teams

Because its weights are openly available, Llama 3.3 70B Instruct can also be self-hosted on local servers or, with quantization, on a single high-end workstation, which makes it an excellent choice for startups, solo developers, or small teams.

- Prototype Development: Quickly build and test AI-powered features without relying on cloud infrastructure.

- Lightweight Production Systems: Deploy AI capabilities for small-scale applications or services.

- On-Premise Solutions: Offer AI functionalities while maintaining data privacy and reducing cloud costs.
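Whether a given machine can host the model is mostly a question of memory. A back-of-the-envelope estimate for the weights alone is parameters × bits per weight ÷ 8 bytes; the sketch applies it to roughly 70 billion parameters at common precisions. It deliberately ignores the KV cache, activations, and runtime overhead, which add to the total, especially with long contexts.

```python
PARAMS = 70e9  # approximate parameter count of Llama 3.3 70B


def weight_memory_gb(params: float, bits_per_weight: float) -> float:
    """Memory for the model weights only, in gigabytes (10**9 bytes)."""
    return params * bits_per_weight / 8 / 1e9


for label, bits in [("FP16/BF16", 16), ("INT8", 8), ("4-bit", 4)]:
    print(f"{label:>9}: ~{weight_memory_gb(PARAMS, bits):.0f} GB of weights")
# Prints roughly 140, 70 and 35 GB respectively.
```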

Conclusion

Llama 3.3 70B Instruct represents a significant leap forward in AI language models, offering enhanced performance, multilingual capabilities, and cost-effectiveness. By leveraging this powerful model through Novita AI's platform, developers can create sophisticated AI applications with ease.

If you're a startup looking to harness this technology, check out Novita AI's Startup Program. It's designed to boost your AI-driven innovation and give your business a competitive edge. Plus, you can get up to $10,000 in free credits to kickstart your AI projects.

Frequently Asked Questions

What's new in Llama 3.3?

Llama 3.3 is tuned with supervised fine-tuning (SFT) and reinforcement learning from human feedback (RLHF), supports eight languages, a 128K-token context window, and tool use, and comes with enhanced safety features under Meta's responsible AI framework. Its main advance is efficiency: it approaches Llama 3.1 405B on many benchmarks at a fraction of the size.

How does Llama 3.3 compare to Llama 405B?

It delivers comparable performance on many benchmarks while being far more efficient: with 70 billion parameters instead of 405 billion, it needs much less memory and compute to run.

Which languages are supported?

English, French, German, Hindi, Italian, Portuguese, Spanish, and Thai. Fine-tuning for other languages is possible with additional safeguards.

What are its benchmark strengths?

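It scores well on MMLU Chat (86.0%), HumanEval (88.4%), IFEval (92.1%), and Multilingual MGSM (91.1%), reflecting strengths in general knowledge, coding, instruction following, and multilingual tasks. It is weaker on the harder GPQA Diamond reasoning test (50.5%).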

Can it run on standard hardware?

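With quantization, yes. At 16-bit precision the weights alone need about 140 GB of GPU memory, but 4-bit quantization brings that to roughly 35 GB, within reach of a high-end developer workstation. Hosted APIs such as Novita AI's avoid the hardware requirement entirely.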


Originally published at Novita AI

Novita AI is the all-in-one cloud platform that empowers your AI ambitions. Integrated APIs, serverless, GPU Instance: the cost-effective tools you need. Eliminate infrastructure, start free, and make your AI vision a reality.