Mastering Torch Batch Norm in PyTorch 2.3

Introduction

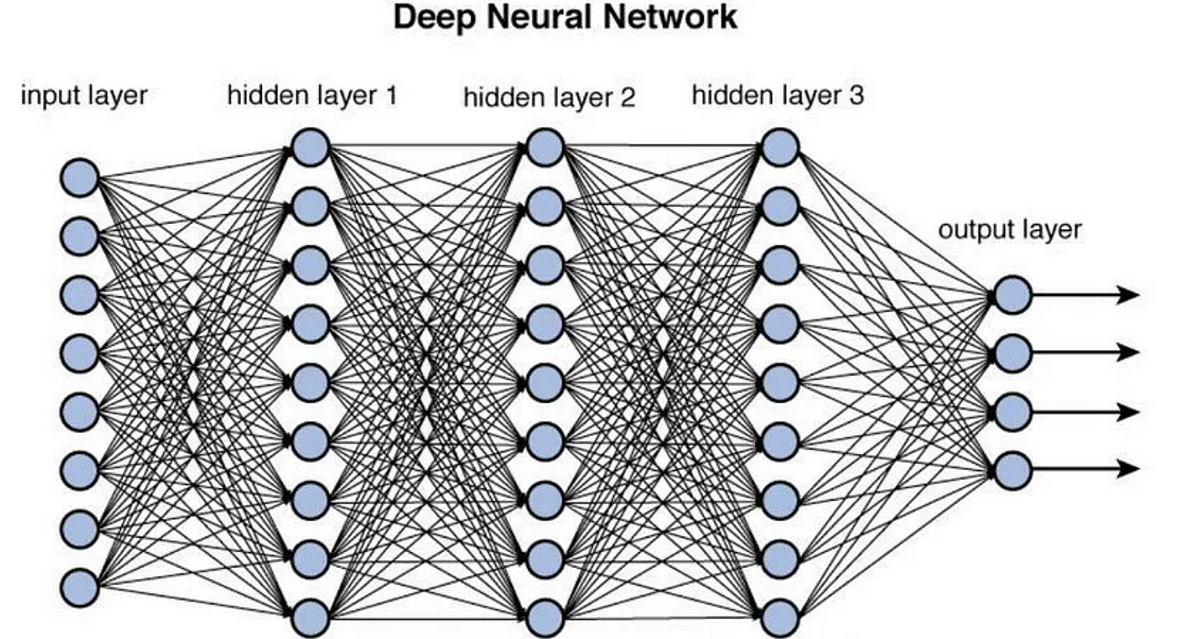

Batch normalization helps neural networks train faster and more reliably. To use it well, you need to understand what batch normalization layers do to the data as it moves through the network and why that matters. In this PyTorch blog, we’ll explore how batch normalization works, see how it affects the network, and offer tips for common issues.

Understanding the Basics of Batch Normalization

Batch normalization makes training more stable and quicker by re-centering and re-scaling the activations within a network. Each layer then receives inputs with a similar mean and spread, which makes them easier to learn from. This also keeps the gradients in check while training, so models improve faster. Understanding these fundamentals is important if you want to fully benefit from batch normalization in machine learning models.

The Concept Behind Batch Normalization

Batch normalization makes the data entering each layer of a neural network more consistent. For every mini-batch, it subtracts the batch mean from each feature and divides by the batch standard deviation. This stops large shifts in activation values from building up inside the network, which makes training steadier and faster. Two learnable parameters, gamma and beta, then scale and shift the normalized values so the network can still represent whatever range works best. In simple terms, batch normalization helps every part of the neural network get inputs that don’t swing wildly in value, making learning smoother and more effective.
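To make the idea concrete, here is a minimal sketch that normalizes a made-up mini-batch by hand and compares the result with nn.BatchNorm1d in training mode. The tensor sizes and values are assumptions chosen only for illustration.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)

# A hypothetical mini-batch: 8 samples, 4 features each.
x = torch.randn(8, 4) * 3.0 + 5.0

bn = nn.BatchNorm1d(4)  # gamma is bn.weight (starts at 1), beta is bn.bias (starts at 0)
bn.train()              # training mode: normalize with the batch's own statistics
out = bn(x)

# Manual computation: per-feature mean and biased variance over the batch.
mean = x.mean(dim=0)
var = x.var(dim=0, unbiased=False)
x_hat = (x - mean) / torch.sqrt(var + bn.eps)
manual = bn.weight * x_hat + bn.bias  # scale by gamma, shift by beta

print(torch.allclose(out, manual, atol=1e-5))
```

The small constant bn.eps is there to avoid dividing by zero when a feature has almost no variance within the batch.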

Why Batch Normalization is Crucial for Neural Network Training

Batch normalization helps neural networks learn faster and more steadily by keeping the data inside the network consistent. This helps with problems like vanishing gradients, which slow down learning. Because each layer gets inputs that are more predictable, training is less likely to stall. Batch normalization also adds a small amount of regularization, so in some models we don’t have to depend as much on dropout layers. It often lets us use higher learning rates as well, which speeds up how quickly a neural network improves.

Implementing Batch Normalization in PyTorch 2.3

To get batch normalization right in PyTorch 2.3, add the normalization layers when you define the model, then make sure the model is in training mode before you train it.

Step-by-Step Guide to Applying Batch Norm in Layers

To add batch normalization to your PyTorch neural network layers, first import the modules you need: import torch.nn as nn along with import torch.nn.functional as F.

Next up, create a class for your neural network model. In the class's __init__ method, add batch normalization layers. For 2D images through convolutional layers, use nn.BatchNorm2d.

For the outputs of fully connected (linear) layers, which have no spatial dimensions, use nn.BatchNorm1d.

To apply normalization during the forward pass, call the batch normalization layer on the output of the layer it follows. For example, x = self.bn1(x), where bn1 is one of the batch normalization layers defined in __init__.

With these pieces in place, each layer’s output is normalized before it moves on to the next layer.
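The steps above can be sketched as a small model that uses both layer types. The class name SmallConvNet, the 1x28x28 input size, and the layer widths are hypothetical choices for illustration, not a recommended architecture.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F


class SmallConvNet(nn.Module):
    """Hypothetical model for 1x28x28 inputs; sizes are illustrative only."""

    def __init__(self, num_classes: int = 10):
        super().__init__()
        self.conv1 = nn.Conv2d(1, 8, kernel_size=3, padding=1)
        self.bn1 = nn.BatchNorm2d(8)       # one mean/variance per channel
        self.fc1 = nn.Linear(8 * 14 * 14, 32)
        self.bn2 = nn.BatchNorm1d(32)      # one mean/variance per feature
        self.fc2 = nn.Linear(32, num_classes)

    def forward(self, x):
        x = self.conv1(x)
        x = self.bn1(x)                    # normalize right after the conv layer
        x = F.max_pool2d(F.relu(x), 2)     # 28x28 -> 14x14
        x = torch.flatten(x, start_dim=1)  # keep the batch dimension
        x = F.relu(self.bn2(self.fc1(x)))
        return self.fc2(x)


model = SmallConvNet()
batch = torch.randn(16, 1, 28, 28)  # made-up batch of 16 images
logits = model(batch)
print(logits.shape)
```

Note that the argument to nn.BatchNorm2d is the number of channels, while the argument to nn.BatchNorm1d is the number of features coming out of the linear layer.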

Code Snippets for Batch Normalization with PyTorch

To add batch normalization in PyTorch, you can use the nn.BatchNorm1d/2d/3d module. Here’s a simple example to show how it works:

    import torch
    import torch.nn as nn


    # A basic neural network with batch normalization added
    class Net(nn.Module):
        def __init__(self):
            super(Net, self).__init__()
            self.fc1 = nn.Linear(10, 5)  # First layer
            self.bn = nn.BatchNorm1d(5)  # BatchNorm for first layer output
            self.relu = nn.ReLU()        # ReLU activation function

        def forward(self, x):
            x = self.fc1(x)   # Linear transform: 10 features -> 5
            x = self.bn(x)    # Normalize the 5 outputs across the batch
            x = self.relu(x)  # Apply the non-linearity
            return x

This piece of code is an easy way to see how batch normalization fits into your neural network setup using PyTorch’s built-in modules like nn.BatchNorm1d.

The Impact of Batch Normalization on Training Dynamics

Batch normalization changes how training behaves. In training mode, each layer computes the mean and variance of the current mini-batch, normalizes with those values, and updates running estimates of both. In evaluation mode, it uses the running estimates instead, so a prediction does not depend on which other samples share the batch. Keeping activations on a similar scale helps with gradients disappearing or getting too big, which are common problems in deep networks. The noise that comes from using batch statistics also acts as a mild regularizer, which can help the model handle new data.
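The difference between the two modes can be observed through the layer’s running_mean buffer. The sketch below uses made-up data with a mean near 10 and relies on the default momentum of 0.1.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)
bn = nn.BatchNorm1d(3)
x = torch.randn(32, 3) * 2.0 + 10.0  # made-up data with mean near 10

before = bn.running_mean.clone()      # buffers start at zero

bn.train()
_ = bn(x)                             # uses batch statistics, updates running estimates
after_train = bn.running_mean.clone()

bn.eval()
_ = bn(x)                             # uses stored running estimates, no update
after_eval = bn.running_mean.clone()

print("before:     ", before)
print("after train:", after_train)
print("after eval: ", after_eval)
```

After one training-mode pass, the running mean moves a tenth of the way from zero toward the batch mean. The evaluation-mode pass leaves it unchanged.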

Accelerating Deep Network Training

Batch normalization helps deep neural networks train faster, and it combines well with GPU acceleration. GPUs run many calculations in parallel, which matters when processing large models. Batch normalization contributes by keeping each layer’s inputs stable and consistent, so training often needs fewer steps, while the GPU makes each step faster. Together they reduce total training time.

Improving Gradient Flow Through Networks

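Batch normalization stabilizes gradients in neural networks, so they are less likely to vanish or explode as they pass back through many layers. This leads to steadier and quicker learning. It also has a mild regularizing effect that can reduce overfitting, and it makes complex models easier to optimize, especially with challenging datasets or problems.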
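One rough way to inspect gradient flow is to compare the gradient norm at the first layer of a deep network with and without batch normalization. The helper names make_mlp and first_layer_grad_norm, the depth, the width, and the choice of sigmoid activations are all assumptions made for this sketch. The numbers it prints depend on the seed and initialization, so treat them as something to explore rather than a benchmark.

```python
import torch
import torch.nn as nn


def make_mlp(depth: int, width: int, use_bn: bool) -> nn.Sequential:
    """Build a stack of Linear (+ optional BatchNorm1d) + Sigmoid blocks."""
    layers = []
    for _ in range(depth):
        layers.append(nn.Linear(width, width))
        if use_bn:
            layers.append(nn.BatchNorm1d(width))
        layers.append(nn.Sigmoid())
    layers.append(nn.Linear(width, 1))
    return nn.Sequential(*layers)


def first_layer_grad_norm(model: nn.Sequential, x: torch.Tensor) -> float:
    """Backpropagate a dummy loss and return the gradient norm at layer 0."""
    model.zero_grad()
    model(x).mean().backward()
    return model[0].weight.grad.norm().item()


torch.manual_seed(0)
x = torch.randn(64, 16)  # made-up batch
plain = first_layer_grad_norm(make_mlp(10, 16, use_bn=False), x)
with_bn = first_layer_grad_norm(make_mlp(10, 16, use_bn=True), x)
print(f"plain: {plain:.3e}  with batch norm: {with_bn:.3e}")
```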

Use GPU Cloud to Accelerate Deep Learning

Novita AI GPU Instance, a cloud-based solution, is one service in this domain. It offers access to NVIDIA RTX 3090 GPUs, which can handle intensive computational workloads.

This is particularly beneficial for PyTorch users who require the additional computational power that GPUs provide without the need to invest in local hardware.

Here’s how Novita AI GPU Instance can be integrated with PyTorch to improve efficiency:

Computational Acceleration: PyTorch’s seamless integration with CUDA-enabled GPUs like the RTX 3090 provided by Novita AI GPU Instance allows for accelerated model training and inference. PyTorch’s .to(device) method ensures that models and tensors are efficiently transferred to the GPU, unlocking the parallel processing capabilities necessary for deep learning.

    device = torch.device("cuda" if torch.cuda.is_available() else "cpu")
    model.to(device)

Elastic Scalability: Novita AI GPU Instance offers the flexibility to scale resources up or down based on the project’s demands. This means that during periods of high computational need, additional GPU resources can be allocated, and once the task is complete, these resources can be deallocated, optimizing costs.

Cost Efficiency: The pay-as-you-go pricing model of cloud services like Novita AI GPU Pods ensures that users only pay for the resources they consume. This is particularly cost-effective for projects with fluctuating computational needs or for those requiring a burst of computational power for a limited time.
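As a sketch of the computational acceleration point, the example below moves a small batch-normalized model and a made-up batch to whichever device is available and runs one training step. The model, optimizer settings, and random data are assumptions for illustration. The same code runs on a CPU if no CUDA device is present.

```python
import torch
import torch.nn as nn

device = torch.device("cuda" if torch.cuda.is_available() else "cpu")

# Hypothetical model; the BatchNorm1d buffers move to the device with it.
model = nn.Sequential(
    nn.Linear(10, 5),
    nn.BatchNorm1d(5),
    nn.ReLU(),
    nn.Linear(5, 1),
).to(device)
optimizer = torch.optim.SGD(model.parameters(), lr=0.1)
loss_fn = nn.MSELoss()

# Made-up batch; in practice this would come from a DataLoader.
inputs = torch.randn(32, 10).to(device)
targets = torch.randn(32, 1).to(device)

model.train()                 # batch norm uses batch statistics while training
optimizer.zero_grad()
loss = loss_fn(model(inputs), targets)
loss.backward()
optimizer.step()
print(f"device={device}, loss={loss.item():.4f}")
```

The inputs must live on the same device as the model, which is why both are moved with .to(device) before the forward pass.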

The Future of Batch Normalization in Deep Learning

Batch normalization is a popular method in deep learning because it helps with training and makes models more accurate. It will likely remain an important part of the toolkit as deep learning advances.

Innovations and Trends in Normalization Techniques

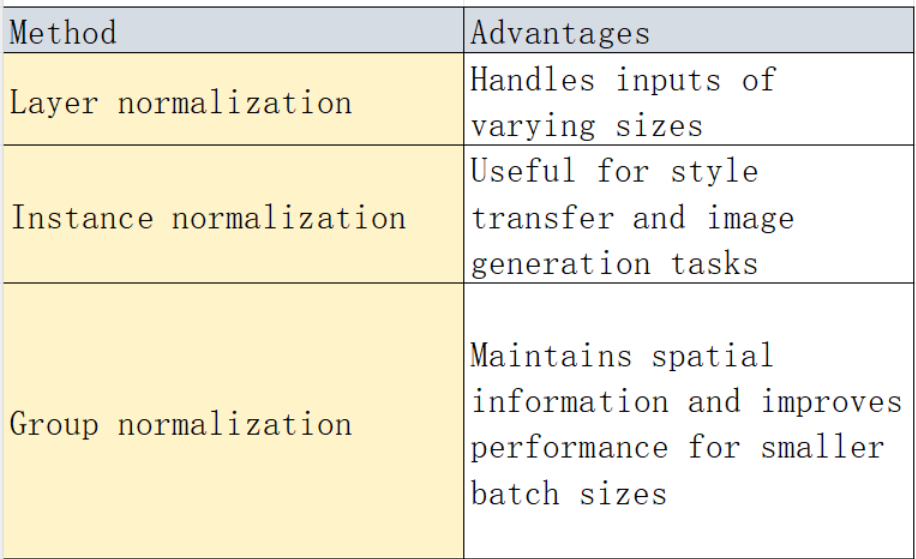

With layer normalization, inputs are adjusted across features, not based on batches. This change helps models handle different input sizes better and makes recurrent neural networks more efficient. Instance normalization adjusts inputs individually across spatial dimensions. It’s great for projects like changing an image’s style or creating new images. Group normalization divides channels into groups for separate adjustments.
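The sketch below applies each of these layers to the same made-up 4D tensor. The tensor sizes and the choice of three groups are assumptions for illustration. All four layers keep the input shape, and what differs is the slice of the tensor over which each one computes its statistics.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)
x = torch.randn(4, 6, 8, 8)  # (batch, channels, height, width), made-up sizes

layers = {
    "batch": nn.BatchNorm2d(6),                         # per channel, across the batch
    "layer": nn.LayerNorm([6, 8, 8]),                   # per sample, across all features
    "instance": nn.InstanceNorm2d(6),                   # per sample and channel
    "group": nn.GroupNorm(num_groups=3, num_channels=6) # per sample and channel group
}

outputs = {name: layer(x) for name, layer in layers.items()}
for name, out in outputs.items():
    print(name, tuple(out.shape))

# Each output is zero-centered over the slice its layer normalizes.
print(outputs["batch"].mean(dim=(0, 2, 3)))   # one value per channel
print(outputs["layer"].mean(dim=(1, 2, 3)))   # one value per sample
```

Because layer, instance, and group normalization compute statistics within a single sample, their output for one sample does not change with the batch size.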

Beyond Batch Norm: Exploring Alternative Methods

Batch normalization is a powerful tool, but there are other normalization methods that can be used depending on the model’s needs. Other methods include layer normalization, instance normalization, and group normalization. Each has its own advantages: layer normalization normalizes across features rather than across the batch, instance normalization normalizes each input individually across its spatial dimensions, and group normalization normalizes channels in separate groups.

By exploring these alternative methods, developers can find the most suitable normalization technique for their specific use case, improving model performance and stability.

Conclusion

Getting good at using batch normalization in PyTorch 2.3 pays off in better neural network training. By starting with the basics and then applying batch normalization in your own layers, you can make your network learn faster and handle data more smoothly. Paying attention to details such as training versus evaluation mode and batch size will help you fix common problems and improve how well your models work. Normalization techniques keep evolving, so it’s worth keeping up with new ideas and trends. By sticking to best practices and being open to trying different approaches, you set yourself up to build more stable and efficient models.

Frequently Asked Questions

What Are the Best Practices for Implementing Batch Norm in PyTorch?

When you’re doing inference, switch your model to evaluation mode with model.eval() so it uses the running averages instead of batch statistics. Picking a good batch size is also key, because very small batches give noisy statistics. Finally, keep an eye on how well your model is performing and how stable training is.
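A minimal sketch of why evaluation mode matters at inference time: with a batch of one sample, nn.BatchNorm1d cannot compute batch statistics in training mode and raises a ValueError, while evaluation mode works because it relies on the running averages. The model and input here are made up for illustration.

```python
import torch
import torch.nn as nn

model = nn.Sequential(nn.Linear(10, 5), nn.BatchNorm1d(5), nn.ReLU())
single = torch.randn(1, 10)  # a batch containing one made-up sample

model.train()
try:
    model(single)            # batch statistics need more than one value per channel
    train_ok = True
except ValueError as err:
    train_ok = False
    print("training mode failed:", err)

model.eval()                 # switch to running averages for inference
with torch.no_grad():        # no gradients needed when only predicting
    prediction = model(single)
print(prediction.shape)
```

Remember to call model.train() again before resuming training, since model.eval() stays in effect until it is changed.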

How Does Batch Normalization Influence Model Performance and Stability?

Batch normalization makes models perform better and train more stably by normalizing the data going into each layer. Its original motivation was to reduce internal covariate shift, meaning the change in the distribution of each layer’s inputs as earlier layers update during training.

Where can I read the implementation of Batch Normalization?

Check this page to find the implementation.

