Mastering LLM API Gateway: Your Ultimate Guide

Introduction

In the world of tech today, there’s a big push for AI-driven tools and services. Big players like OpenAI and Google (with its Gemini models) are leading the charge with their LLM (Large Language Model) APIs, which offer powerful language processing that lets developers build new kinds of applications. But accessing these tools in a way that is both easy and secure can be tricky.

That’s where an API gateway designed specifically for LLMs comes into play. Think of it as a single point of access for all sorts of LLM APIs: it gives you one place to connect from, which makes sending requests, protecting data, and monitoring how everything is running much smoother.

In this guide, we’re going to dive deep into mastering an API gateway built for LLMs. We’ll cover what it is, how to set it up, how to integrate large language models, how to handle requests and responses smoothly, and how to keep tabs on everything through monitoring and analytics.

Understanding the Basics of LLM API Gateway

Defining LLM API Gateway and Its Significance

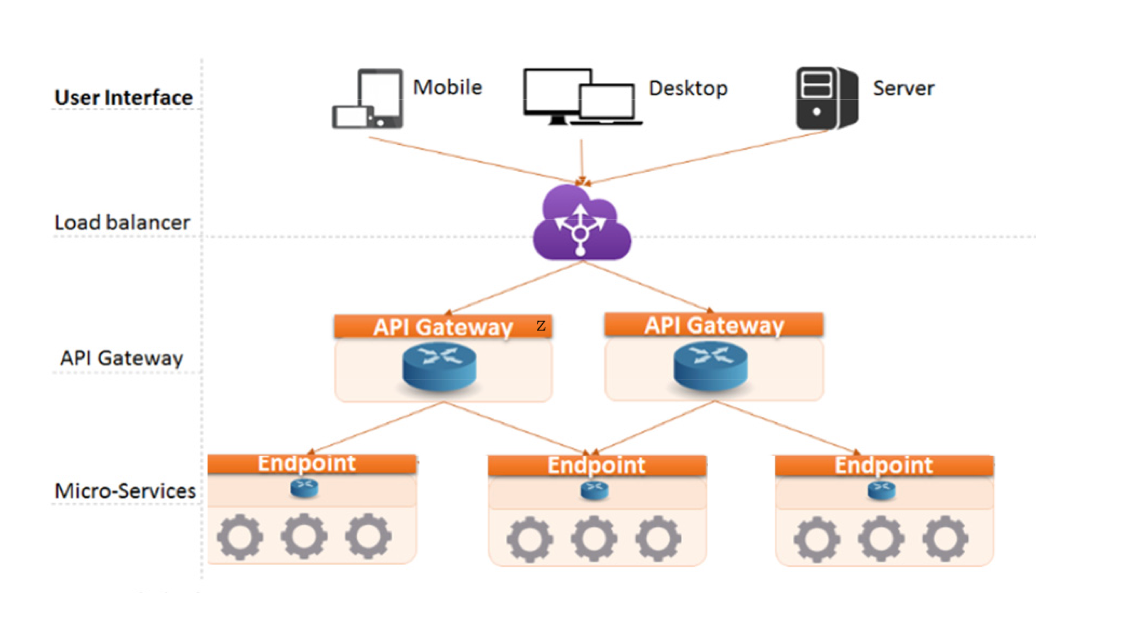

Let’s start with the basics. An LLM API Gateway is a layer that makes LLM APIs easy to access. It acts as a bridge between clients (the applications that call these APIs) and the backend services they need, so that all traffic passes through a single point.

Because all traffic flows through it, the LLM API Gateway acts as a connector between your applications and AI services, which makes using these technologies much smoother. It also plays a central role in security: it checks who is making a request (authentication), decides whether they are allowed to do it (authorization), and keeps an eye on all requests coming in and going out. For companies that want to adopt AI without getting tangled up in complex infrastructure, the gateway lets them focus on building new applications while keeping their data secure.

Key Components of an LLM API Gateway

The LLM API Gateway is made up of a few important parts that all work together to make sure you can get to the LLM APIs easily and safely. Here’s how it breaks down:

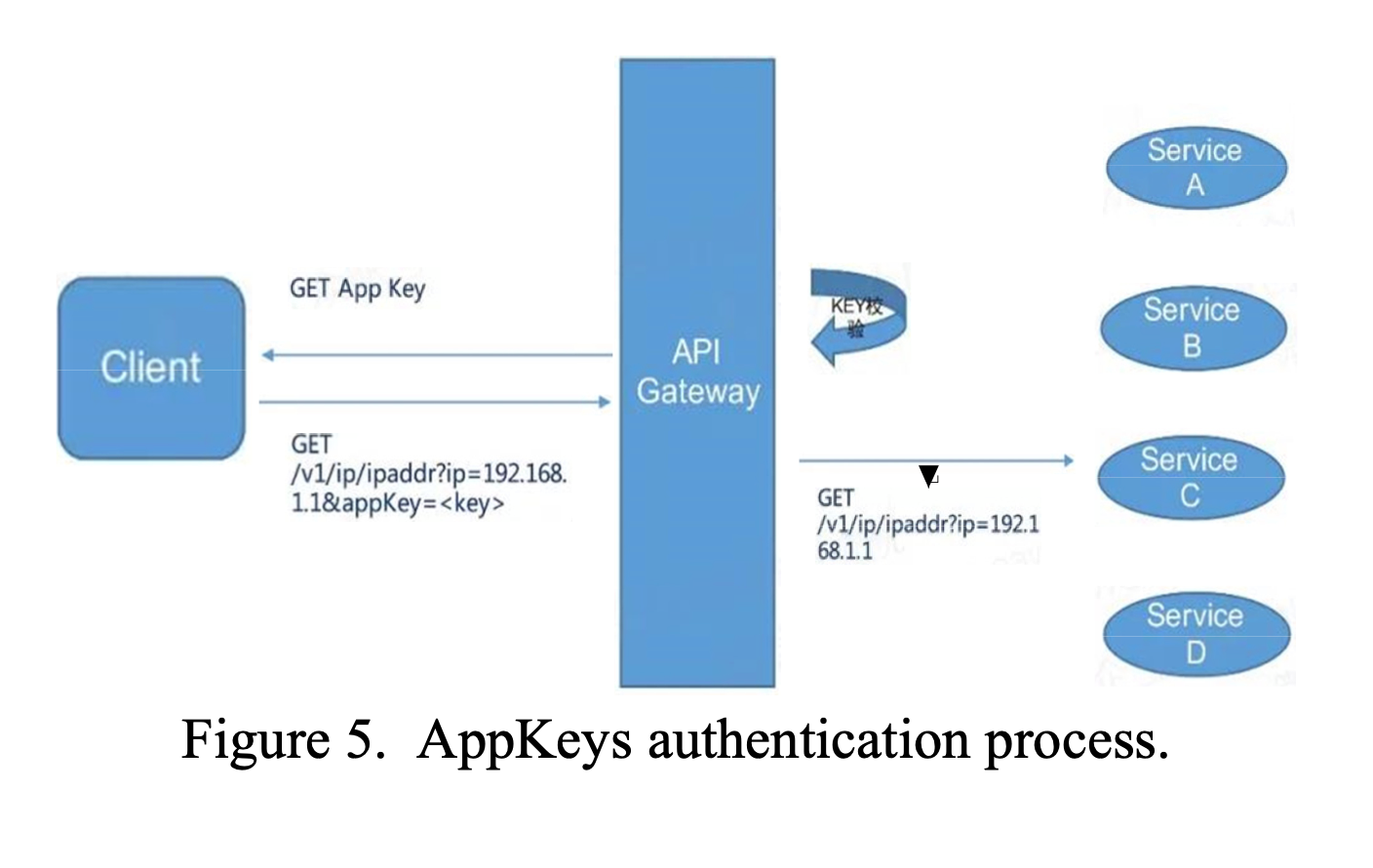

- API Key: Think of an API key like a special passcode. It lets people prove who they are so they can use the gateway. This way, we know only the folks who should be getting in are getting in.

- Front Door: The front door is basically where all requests knock first when they want access. From there, these requests get sent off to where they need to go based on some set rules.

- Unified Endpoint: This part is like having one mailbox for everything you need from the LLM API Gateway. Instead of going to different places for different things, you just go here every time.

Putting these pieces together gives anyone using LLM APIs a smooth experience: only callers with a valid API key get through the front door, and from there they go straight to what they need at the unified endpoint.
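To make the three components concrete, here is a minimal sketch of a gateway's front door in Python. It assumes callers send their API key as a `Bearer` token in the `Authorization` header and name the model they want in the request body; the key store (`API_KEYS`), routing table (`ROUTES`), and backend URLs are all hypothetical placeholders, not part of any particular gateway product.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical key store: API key -> client name. A real gateway would keep
# hashed keys in a database or secrets manager, never in source code.
API_KEYS = {"sk-demo-123": "analytics-team"}

# Hypothetical routing table: model name -> backend base URL. Every client
# calls the same unified endpoint; the gateway chooses the backend.
ROUTES = {
    "llama-3-8b-instruct": "https://backend-a.example.com/v1",
    "mistral-7b-instruct": "https://backend-b.example.com/v1",
}


@dataclass
class GatewayResult:
    status: int                   # HTTP status the gateway would return
    detail: str                   # error message, or the chosen backend URL
    client: Optional[str] = None  # authenticated client name, if any


def front_door(headers: dict, body: dict) -> GatewayResult:
    """Authenticate the caller by API key, then route by requested model."""
    auth = headers.get("Authorization", "")
    if not auth.startswith("Bearer "):
        return GatewayResult(401, "missing bearer token")
    client = API_KEYS.get(auth[len("Bearer "):])
    if client is None:
        return GatewayResult(401, "unknown API key")

    backend = ROUTES.get(body.get("model", ""))
    if backend is None:
        return GatewayResult(404, "unknown model")
    return GatewayResult(200, backend, client)


result = front_door({"Authorization": "Bearer sk-demo-123"},
                    {"model": "mistral-7b-instruct"})
print(result.status, result.detail)  # 200 https://backend-b.example.com/v1
```

Authentication happens before routing on purpose: an unauthenticated caller learns nothing about which models or backends exist.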

Setting Up the LLM API Gateway

Installation and Configuration Steps

To get the LLM API Gateway up and running smoothly on your server or cloud, you’ll need to follow a few important steps. Here’s how it goes:

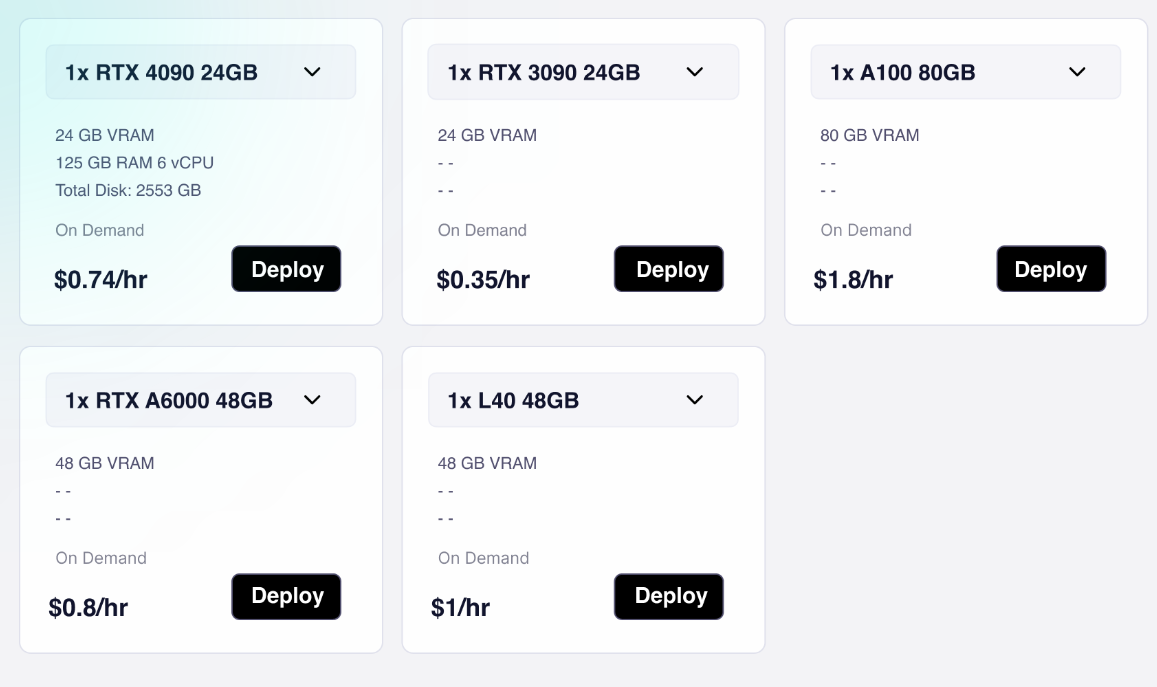

1. First, you need a cloud or server environment to install and host your LLM API Gateway. Novita AI GPU Pods offer cutting-edge GPU technology at a fraction of the cost, with savings of up to 50% on cloud expenses. Join the Novita AI Discord to see the latest updates to our service.

2. Then, install the LLM API Gateway software on your server or in the cloud. Usually, this means downloading a package from your gateway provider and setting it up on your system.

3. Next, run the package and follow the on-screen instructions.

4. With that done, configure routing so that requests reach the right backend APIs:
   - Pick the LLM models you plan to use with this setup.
   - Define the routes (endpoint paths) through which each model can be reached via the gateway.

5. Secure access so that only authorized clients can use the gateway:
   - Create API keys that clients use to prove their identity when sending requests.
   - Put authorization rules in place that decide who can access which parts of your system.

6. Finally, encrypt data in transit so that it stays private:
   - Serve all traffic over HTTPS.
   - Obtain and install SSL/TLS certificates to secure these connections.

Following these configuration and security steps, with authentication and authorization enforced at every endpoint that handles LLM API calls, keeps the gateway running smoothly and protects it against unauthorized access, whether your infrastructure is hosted locally or remotely. More broadly, API gateways play a crucial role in enforcing security policies and ensuring that applications comply with the organizational standards set by security and infosec teams, while also providing observability and control over traffic routing.
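The setup steps can be captured in a configuration that the gateway loads at startup. Since the guide does not name a specific gateway product, the sketch below uses a hypothetical schema expressed as a Python dict (every key name is an assumption), plus a small check that flags common mistakes: no TLS certificate, plain-HTTP upstreams, routes without models or authorization rules, and no API keys.

```python
from urllib.parse import urlparse

# Hypothetical gateway configuration covering the setup steps: TLS listener,
# routes with their models and upstreams, API keys, and authorization rules.
GATEWAY_CONFIG = {
    "listen": {"port": 8443,
               "tls_cert": "/etc/gateway/cert.pem",
               "tls_key": "/etc/gateway/key.pem"},
    "routes": {
        "/v1/chat/completions": {
            "models": ["llama-3-8b-instruct", "llama-3-70b-instruct"],
            "upstream": "https://llm.example.com/v1/chat/completions",
        },
    },
    "api_keys": {"sk-demo-123": {"roles": ["chat"]}},
    "authorization": {"/v1/chat/completions": ["chat"]},  # route -> roles
}


def validate_config(cfg: dict) -> list:
    """Return a list of problems; an empty list means the config looks sane."""
    problems = []
    listen = cfg.get("listen", {})
    if not (listen.get("tls_cert") and listen.get("tls_key")):
        problems.append("listener has no TLS certificate/key configured")
    for path, route in cfg.get("routes", {}).items():
        if urlparse(route.get("upstream", "")).scheme != "https":
            problems.append(f"route {path}: upstream must use HTTPS")
        if not route.get("models"):
            problems.append(f"route {path}: no models listed")
        if path not in cfg.get("authorization", {}):
            problems.append(f"route {path}: no authorization rule")
    if not cfg.get("api_keys"):
        problems.append("no API keys defined")
    return problems


print(validate_config(GATEWAY_CONFIG))  # []
```

Running such a check before deploying catches insecure settings early instead of in production.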

Securing Your Gateway with Best Practices

Making sure your API gateway is safe is really important to keep your company’s data secure and make sure everything runs smoothly. Here are some smart ways to do that:

- Secure access controls: Use API keys or other credential checks so that only approved users or applications can reach the gateway and the LLM APIs behind it.

- Strong authentication protocols: Use robust mechanisms such as OAuth 2.0 or JSON Web Tokens (JWT) to confirm that clients are who they say they are. This helps keep unwanted visitors out.

- Robust authorization policies: Define who can access what within your system based on their role or permissions. Review these rules regularly and adjust them so they always match the level of security you need.

- Encryption and secure communication: Use HTTPS with SSL/TLS certificates to encrypt data in transit. This keeps private information out of the wrong hands.

Following these practices strengthens the security of your LLM API Gateway and of your wider API infrastructure, while keeping it reliable.
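As an illustration of the authentication and authorization practices, here is a minimal sketch of verifying an HS256-signed JWT with Python's standard library and then applying a role-based rule. It assumes tokens are signed with a shared secret and carry hypothetical `role` and `exp` claims; the `MODEL_ACCESS` table is an illustrative assumption. For production use, a maintained library such as PyJWT is a safer choice than hand-rolled token handling.

```python
import base64
import hashlib
import hmac
import json
import time
from typing import Optional


def _b64url_encode(data: bytes) -> str:
    return base64.urlsafe_b64encode(data).rstrip(b"=").decode()


def _b64url_decode(segment: str) -> bytes:
    # JWTs use unpadded base64url; restore the padding before decoding.
    return base64.urlsafe_b64decode(segment + "=" * (-len(segment) % 4))


def sign_hs256(claims: dict, secret: bytes) -> str:
    """Create a compact HS256 JWT (used here only to produce test tokens)."""
    header = _b64url_encode(json.dumps({"alg": "HS256", "typ": "JWT"}).encode())
    payload = _b64url_encode(json.dumps(claims).encode())
    sig = hmac.new(secret, f"{header}.{payload}".encode(), hashlib.sha256).digest()
    return f"{header}.{payload}.{_b64url_encode(sig)}"


def verify_hs256(token: str, secret: bytes) -> Optional[dict]:
    """Return the claims if the signature is valid and the token is unexpired."""
    try:
        header_b64, payload_b64, sig_b64 = token.split(".")
        header = json.loads(_b64url_decode(header_b64))
        signature = _b64url_decode(sig_b64)
        claims = json.loads(_b64url_decode(payload_b64))
    except ValueError:  # wrong segment count, bad base64, or bad JSON
        return None
    if header.get("alg") != "HS256":  # reject "none" and unexpected algorithms
        return None
    signing_input = f"{header_b64}.{payload_b64}".encode()
    expected = hmac.new(secret, signing_input, hashlib.sha256).digest()
    if not hmac.compare_digest(expected, signature):  # constant-time compare
        return None
    # This sketch requires an "exp" claim; tokens without one are rejected.
    if claims.get("exp", 0) < time.time():
        return None
    return claims


# Hypothetical role-based authorization: which roles may call which model.
MODEL_ACCESS = {
    "llama-3-70b-instruct": {"admin", "research"},
    "llama-3-8b-instruct": {"admin", "research", "app"},
}


def is_authorized(claims: dict, model: str) -> bool:
    return claims.get("role") in MODEL_ACCESS.get(model, set())
```

Authentication (`verify_hs256`) answers "who is this?", and authorization (`is_authorized`) answers "may they do this?"; keeping the two separate makes each rule easier to review.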

Integrating LLM Models with Your API Gateway

When you connect LLM models to your API Gateway, you are setting up a smooth channel between your users and those models, with the gateway acting as the middleman that passes requests and responses between them.

Connecting LLM API to the Gateway

To connect the LLM API to the gateway, configure the gateway to act as a middleman between your clients and the LLM API. Once your LLM API gateway is set up, here’s what needs doing:

- Set Up Your API Gateway: Log in to your chosen API gateway provider’s console. Create a new API project or select an existing one where you want to integrate the LLM API.

- Create a New API Endpoint: Define a new API endpoint within your project. This endpoint will serve as the entry point for accessing your LLM API. Specify the endpoint URL path and HTTP methods (e.g., POST for sending requests to LLM).

- Configure Endpoint Integration: Configure the endpoint integration settings to connect with your LLM API backend. Depending on your LLM API setup, choose the appropriate integration type (e.g., HTTP/HTTPS endpoint).

- Security Settings: Set up security measures such as authentication and authorization for your API endpoint to ensure secure access to the LLM API. Consider using API keys, OAuth tokens, or other authentication mechanisms supported by your API gateway.

- Testing and Deployment: Test your API endpoint integration to ensure it correctly forwards requests to the LLM API and handles responses as expected. Deploy your API gateway configuration to make the endpoint publicly accessible according to your application’s requirements.

By linking the gateway and the LLM models this way, all traffic goes through one main entrance (the API gateway). This makes managing traffic easier and keeps access secure, because every request has to pass the gateway’s authentication checks.
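The endpoint integration step boils down to translating a client request into a request for the LLM backend. The sketch below builds (but does not send) that upstream request with Python's `urllib.request`. It assumes an OpenAI-style chat completions body and a hypothetical upstream URL, and it shows one common design choice: the gateway strips unexpected fields and attaches its own upstream key, so clients never see the provider secret.

```python
import json
import urllib.request

# Hypothetical upstream endpoint; replace with your provider's real one.
UPSTREAM_URL = "https://llm.example.com/v1/chat/completions"

# Fields the gateway forwards (assumed OpenAI-style parameter names).
FORWARDED_FIELDS = {"model", "messages", "temperature", "max_tokens"}


def build_upstream_request(client_body: dict, upstream_key: str,
                           request_id: str) -> urllib.request.Request:
    """Translate a validated client request into a POST to the LLM backend."""
    body = {k: v for k, v in client_body.items() if k in FORWARDED_FIELDS}
    return urllib.request.Request(
        UPSTREAM_URL,
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {upstream_key}",  # gateway's own key
            "X-Request-ID": request_id,                 # for tracing
        },
        method="POST",
    )


# Sending it would look like this (not executed here):
# with urllib.request.urlopen(req, timeout=30) as resp:
#     payload = json.load(resp)
```

During testing, you can inspect the built request (method, headers, body) before pointing it at a real backend.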

Customizing Model Integration for Advanced Use Cases

The LLM API Gateway gives you the power to tweak how models work together, making sure they fit perfectly with what you need them for. Here’s a look at some ways to make that happen:

- Define specific use cases: Figure out exactly why you’re using LLM models. It might be analyzing text, generating content, translating languages, or another task that requires language understanding.

- Choose models by name: Pick the right LLM models for your projects and reference them by name in your configuration, so each task uses the most suitable model.

- Manage query parameters: Expose and manage additional options so users can adjust how the LLM models behave, for example the sampling temperature (how creative the output is) or the maximum length of the generated content.
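The three customizations can be combined in one helper: map a use case to a model, then merge user-supplied parameters within safe bounds. In this sketch the model names are taken from the Novita AI list in this article, but which model suits which task, the parameter bounds, and the defaults are all assumptions; the parameter names follow the common OpenAI-style convention.

```python
# Hypothetical task -> model mapping (the pairing itself is an assumption).
MODEL_FOR_TASK = {
    "analyze": "llama-3-8b-instruct",
    "generate": "llama-3-70b-instruct",
    "translate": "mistral-7b-instruct",
}

# Assumed bounds and defaults for the parameters users may tune.
PARAM_LIMITS = {"temperature": (0.0, 2.0), "max_tokens": (1, 4096)}
DEFAULTS = {"temperature": 0.7, "max_tokens": 512}


def build_model_request(task: str, prompt: str, **overrides) -> dict:
    """Pick a model for the task and merge clamped user parameters."""
    if task not in MODEL_FOR_TASK:
        raise ValueError(f"unsupported task: {task}")
    params = dict(DEFAULTS)
    for name, value in overrides.items():
        if name not in PARAM_LIMITS:
            raise ValueError(f"parameter not exposed: {name}")
        low, high = PARAM_LIMITS[name]
        params[name] = min(max(value, low), high)  # clamp into allowed range
    return {
        "model": MODEL_FOR_TASK[task],
        "messages": [{"role": "user", "content": prompt}],
        **params,
    }


print(build_model_request("translate", "Bonjour", temperature=5)["temperature"])  # 2.0
```

Clamping rather than rejecting out-of-range values is a design choice; a stricter gateway could return a 400 error instead.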

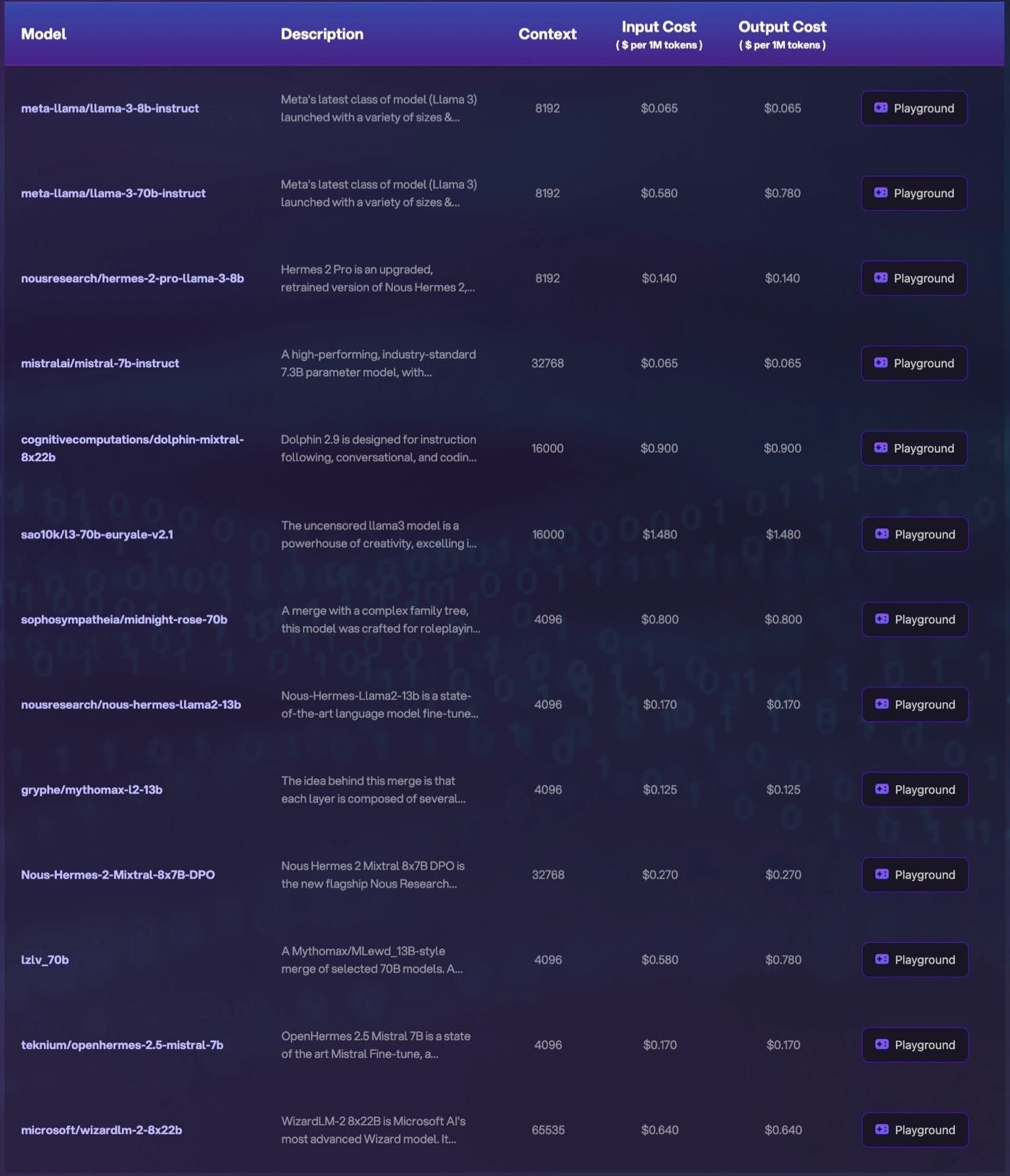

Novita AI provides developers with an LLM API that offers many popular models, e.g. llama-3-8b-instruct, llama-3-70b-instruct, mistral-7b-instruct, and hermes-2-pro-llama-3-8b. You can compare our LLM options for free in the Novita AI Playground.

Managing LLM API Requests and Responses

Handling and sorting out the requests coming into your LLM API Gateway, along with organizing the replies you send back, is super important if you want everything to run smoothly.

Optimizing Request Handling for Efficiency

To make sure your LLM API Gateway runs smoothly and quickly, think about these tips:

- Use smart routing: Configure the gateway with load-balancing strategies that can handle many concurrent requests and spread them evenly across your backend services.

- Use caching: By storing answers that are asked for a lot, you won’t have to do the same work over again. This makes things faster for everyone and eases the load on your backend systems.

- Limit how many requests can be made: Put rules in place to control how often someone can ask for something within a certain time frame. This stops too much pressure on your backend services and makes sure resources are used fairly by everyone.

- Check requests carefully: Make sure only valid or safe requests get through by checking them first. This keeps bad or harmful attempts away from your important backend services.
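Two of these tips, smart routing and request checking, can be sketched in a few lines. Round-robin is used here as the simplest even-spreading strategy (other strategies such as least-connections exist), and the validation rules, allowed models, and size limit are assumptions for illustration. The backend URLs are hypothetical.

```python
import itertools

# Hypothetical pool of identical backends serving one model.
BACKENDS = [
    "https://llm-a.example.com",
    "https://llm-b.example.com",
    "https://llm-c.example.com",
]
_backend_cycle = itertools.cycle(BACKENDS)


def pick_backend() -> str:
    """Round-robin: hand out backends in turn so load is spread evenly.
    Add a lock if your server calls this from multiple threads."""
    return next(_backend_cycle)


ALLOWED_MODELS = {"llama-3-8b-instruct", "mistral-7b-instruct"}
VALID_ROLES = {"system", "user", "assistant"}
MAX_PROMPT_CHARS = 20_000  # assumed limit; tune to your models' context sizes


def validate_request(body: dict) -> list:
    """Return a list of problems; an empty list means the request may pass."""
    errors = []
    if body.get("model") not in ALLOWED_MODELS:
        errors.append("model not allowed")
    messages = body.get("messages")
    if not isinstance(messages, list) or not messages:
        errors.append("messages must be a non-empty list")
        return errors
    for m in messages:
        if (not isinstance(m, dict) or m.get("role") not in VALID_ROLES
                or not isinstance(m.get("content"), str)):
            errors.append("each message needs a valid role and string content")
            return errors
    if sum(len(m["content"]) for m in messages) > MAX_PROMPT_CHARS:
        errors.append("prompt too long")
    return errors
```

Validation runs before `pick_backend()`, so malformed requests never consume backend capacity.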

Structuring Responses for Maximum Utility

When it comes to making sure responses are super useful, it’s all about giving clear and easy-to-understand info back to the apps that need it. Here’s how you can do a great job at organizing these responses:

- Use straightforward, uniform response layouts: Define consistent templates for all of your API responses.

- Include metadata: Adding fields such as timestamps or request IDs gives the clients on the other end extra information that helps with debugging and tracing.

- Tailor responses to what clients need: Selecting specific pieces of data, ordering them, or grouping related fields together can make the information even more useful to users.
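A uniform response layout can be as simple as wrapping every result in the same envelope. The sketch below adds a request ID and a UTC timestamp, supports optional field selection, and gives errors the same outer shape so clients parse one layout. The envelope field names (`request_id`, `timestamp`, `status`, `data`, `error`) are assumptions, not a standard.

```python
import uuid
from datetime import datetime, timezone
from typing import Optional


def _meta(request_id: Optional[str]) -> dict:
    # Shared metadata: reuse the caller's request ID or generate a new one.
    return {
        "request_id": request_id or str(uuid.uuid4()),
        "timestamp": datetime.now(timezone.utc).isoformat(),
    }


def make_envelope(data: dict, request_id: Optional[str] = None,
                  fields: Optional[list] = None) -> dict:
    """Wrap a model result; `fields` lets a client request a subset of data."""
    if fields is not None:
        data = {k: data[k] for k in fields if k in data}
    return {**_meta(request_id), "status": "ok", "data": data}


def make_error(message: str, code: str,
               request_id: Optional[str] = None) -> dict:
    """Errors use the same outer layout as successful responses."""
    return {**_meta(request_id), "status": "error",
            "error": {"code": code, "message": message}}


print(make_envelope({"text": "hi", "model": "m"}, "r1", fields=["text"])["data"])  # {'text': 'hi'}
```

Echoing the request ID that the gateway also logs makes it easy to match a client's complaint to the exact log entry.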

Monitoring and Analytics

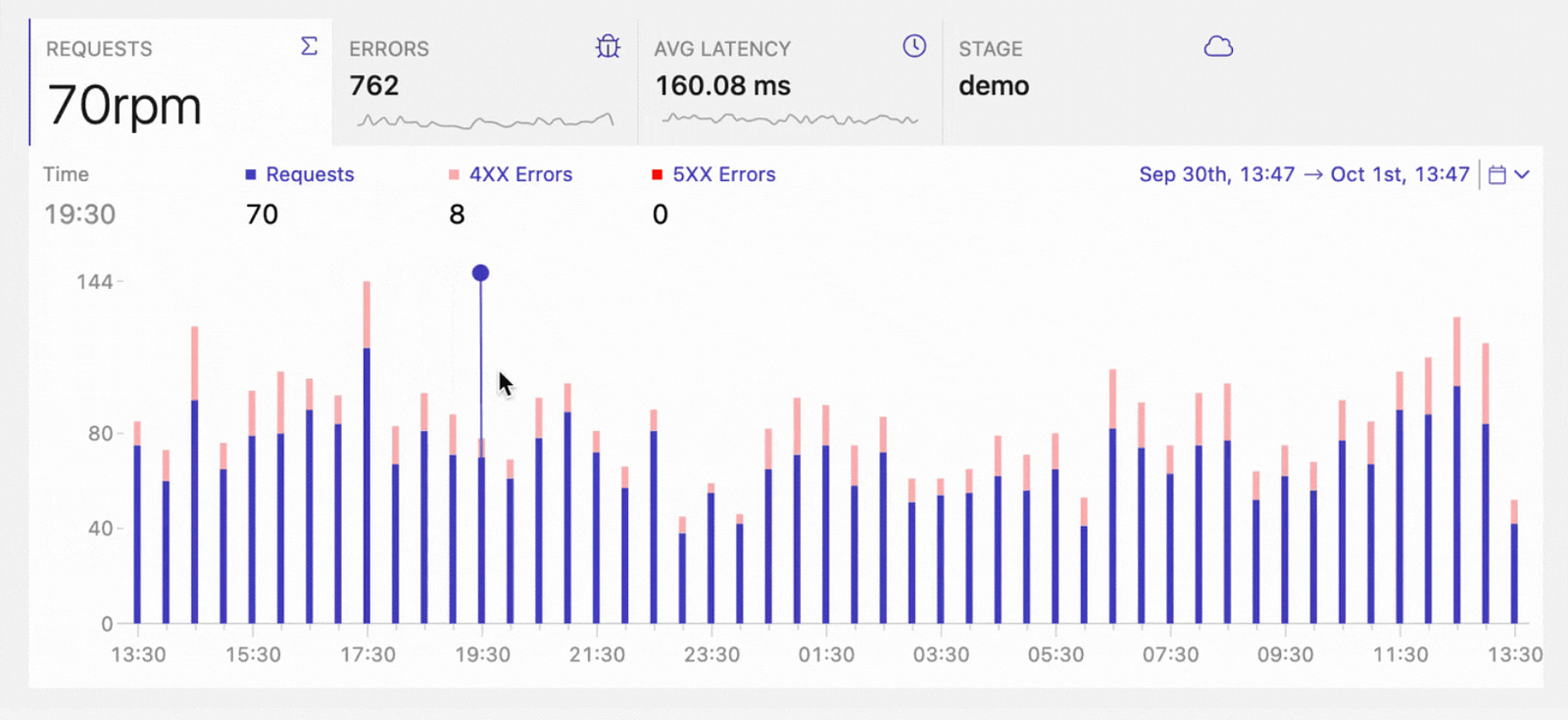

Keeping an eye on things and digging into the data is super important for making sure your LLM API Gateway runs smoothly and reliably. By watching over key metrics and breaking down the info, you’ll understand how people are using it, where it might be getting stuck, and if everything’s working as it should. Here’s why keeping tabs on your gateway matters:

Tracking Gateway Performance and Usage Metrics

API gateways are great for keeping an eye on how well they’re doing and how much the LLM APIs are being used. With these tools, companies can really understand what’s going on with their traffic, spot any trouble spots quickly, and make sure everything is running as smoothly as possible.

By looking into how the gateway performs, businesses can check that it’s working right without any hiccups slowing things down. This means they can fix anything that isn’t working well to keep operations smooth.

Usage metrics such as request counts, response times, and error rates for LLM APIs give organizations a clear picture of demand patterns. This information helps them decide when to add resources or adjust settings to improve service for everyone.

Tracking both performance and usage is key for companies that want their LLM API gateway to work without a hitch while giving users a good experience.
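The metrics mentioned above (request counts, response times, error rates) can be computed from a simple per-request record. This in-memory sketch is an assumption for illustration; production gateways usually export such numbers to a dedicated monitoring system. It counts only 5xx responses as errors and uses the nearest-rank method for the 95th-percentile latency, both of which are choices you may want to change.

```python
from collections import Counter


class GatewayMetrics:
    """In-memory usage metrics for the gateway (illustrative sketch)."""

    def __init__(self) -> None:
        self.latencies_ms = []               # one entry per request
        self.status_counts = Counter()       # HTTP status -> count
        self.requests_per_model = Counter()  # model name -> count

    def record(self, model: str, status: int, latency_ms: float) -> None:
        self.requests_per_model[model] += 1
        self.status_counts[status] += 1
        self.latencies_ms.append(latency_ms)

    def summary(self) -> dict:
        total = len(self.latencies_ms)
        if total == 0:
            return {"requests": 0}
        # This sketch counts only 5xx responses as errors.
        errors = sum(n for code, n in self.status_counts.items() if code >= 500)
        ordered = sorted(self.latencies_ms)
        rank = (95 * total + 99) // 100  # nearest-rank p95: ceil(0.95 * total)
        return {
            "requests": total,
            "error_rate": errors / total,
            "avg_latency_ms": sum(ordered) / total,
            "p95_latency_ms": ordered[rank - 1],
            "by_model": dict(self.requests_per_model),
        }
```

Percentiles such as p95 are often more informative than the average, because a few very slow LLM calls can hide behind a healthy-looking mean.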

Leveraging Analytics for Informed Decision-Making

LLM API gateways often come with analytics features that let organizations explore the data the gateway collects. With this information, they can make informed choices to improve both the performance and the security of their LLM APIs.

By digging into this collected data, companies can spot trends and oddities. This helps them see how people are using the LLM APIs, if their security is tight enough, and how well these APIs work overall.

With these insights in hand, decisions about where to put resources or when it’s time to scale up become clearer. They also help fine-tune security rules and tweak settings so everything runs smoothly.
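Spotting oddities in the collected data can start with a very simple statistical rule. The sketch below flags hours whose request count lies more than a chosen number of standard deviations from the mean (a z-score rule). It is only one possible technique, picked for illustration; the threshold of 3 is an assumption, and more robust methods (for example, median-based ones) may suit bursty traffic better.

```python
from statistics import mean, stdev


def flag_anomalies(hourly_counts: list, threshold: float = 3.0) -> list:
    """Return indexes of hours whose request count is more than `threshold`
    sample standard deviations away from the mean (z-score rule)."""
    if len(hourly_counts) < 2:
        return []
    mu = mean(hourly_counts)
    sigma = stdev(hourly_counts)
    if sigma == 0:
        return []  # perfectly flat traffic: nothing stands out
    return [i for i, c in enumerate(hourly_counts)
            if abs(c - mu) / sigma > threshold]


# A day of steady traffic with one spike in the last hour.
print(flag_anomalies([100] * 23 + [1000]))  # [23]
```

A flagged hour is a prompt for investigation (a traffic spike, a misbehaving client, or abuse), not proof of a problem.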

Advanced Features of LLM API Gateway

LLM API gateways come packed with features that help when you’re working with LLM APIs, such as caching, rate limiting, and quotas.

Utilizing Caching for Performance Improvement

Using a feature like caching in API gateways can really speed up how LLM APIs work. When an organization caches responses from these LLM APIs, it means they’re keeping the answers to often-asked questions ready to go. So, when someone asks that question again, instead of taking time asking the LLM API all over again, they just pull up the answer straight from this cache. This way is quicker and makes everything run smoother.

For those dealing with slow-to-respond or super popular LLM APIs, caching is a game-changer. It lessens the burden on these APIs by not having to process repeat requests and lets users get their info much faster.

There is a catch, though: you need to decide how long to keep data in the cache, because you always want users to get fresh information. And if any of the cached content is private or sensitive, protecting it becomes another important concern.
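Here is a minimal sketch of a response cache that addresses both concerns: entries expire after a time-to-live (TTL), and responses flagged as sensitive are never stored. It keys the cache on a SHA-256 hash of the canonical JSON request, so logically identical requests hit the same entry. The class name and `sensitive` flag are hypothetical; note also that caching only makes sense for deterministic settings (for example, temperature 0), and this in-memory version has no size limit.

```python
import hashlib
import json
import time
from typing import Optional


class ResponseCache:
    """TTL cache for LLM responses keyed by a hash of the request body."""

    def __init__(self, ttl_seconds: float, clock=time.monotonic) -> None:
        self.ttl = ttl_seconds
        self.clock = clock  # injectable so tests can control time
        self._store = {}    # key -> (expires_at, response)

    @staticmethod
    def key(body: dict) -> str:
        # sort_keys makes logically identical requests hash identically.
        canonical = json.dumps(body, sort_keys=True, separators=(",", ":"))
        return hashlib.sha256(canonical.encode()).hexdigest()

    def get(self, body: dict) -> Optional[dict]:
        k = self.key(body)
        entry = self._store.get(k)
        if entry is None:
            return None
        expires_at, response = entry
        if self.clock() >= expires_at:
            del self._store[k]  # stale: evict so fresh data is fetched
            return None
        return response

    def put(self, body: dict, response: dict, sensitive: bool = False) -> None:
        if sensitive:
            return  # never cache responses flagged as private
        self._store[self.key(body)] = (self.clock() + self.ttl, response)
```

On a cache hit the gateway returns the stored response immediately; on a miss it calls the LLM API and stores the result with `put`.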

Implementing Rate Limiting and Quotas

LLM API gateways offer two key features to manage how LLM APIs are used: rate limiting and quotas. With rate limiting, companies can control how many requests are made to the LLM APIs in a set timeframe. This is crucial for stopping misuse, keeping the APIs from getting too busy, and making sure everyone gets their fair share.

Quotas, in turn, let companies cap how much they use the LLM APIs, measured in number of requests or volume of data processed. They help businesses keep an eye on resource usage and costs while sticking to their own policies.

By putting rate limiting and quotas in place, organizations keep their LLM APIs available, reliable, and secure, and protect them from overuse or abuse.
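Rate limiting is often implemented with a token bucket, which allows short bursts up to a fixed capacity and refills at a steady rate; a quota is simply a running total checked against a cap. The sketch below combines the two per client. The class names, the 429 status choice for both cases, and the token-based quota unit are assumptions, and resetting the quota at the end of each billing period is left out for brevity.

```python
import time


class TokenBucket:
    """Rate limiter: bursts up to `capacity`, refilling `rate` tokens/second."""

    def __init__(self, capacity: float, rate: float, clock=time.monotonic) -> None:
        self.capacity = capacity
        self.rate = rate
        self.clock = clock  # injectable so tests can control time
        self.tokens = capacity
        self.updated = clock()

    def allow(self, cost: float = 1.0) -> bool:
        now = self.clock()
        # Refill for the time elapsed since the last call, capped at capacity.
        self.tokens = min(self.capacity,
                          self.tokens + (now - self.updated) * self.rate)
        self.updated = now
        if self.tokens >= cost:
            self.tokens -= cost
            return True
        return False


class Quota:
    """Hard cap on total usage (e.g. LLM tokens per billing period)."""

    def __init__(self, limit: int) -> None:
        self.limit = limit
        self.used = 0

    def try_consume(self, amount: int) -> bool:
        if self.used + amount > self.limit:
            return False
        self.used += amount
        return True


def admit(bucket: TokenBucket, quota: Quota, estimated_tokens: int) -> int:
    """Return the HTTP status the gateway would send: 200 or 429."""
    if not bucket.allow():
        return 429  # too many requests right now
    if not quota.try_consume(estimated_tokens):
        return 429  # quota exhausted for this period
    return 200
```

The bucket protects the backend from short-term spikes, while the quota controls long-term cost; a client can hit either limit independently.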

Troubleshooting Common LLM API Gateway Issues

Troubleshooting plays a key role in keeping an LLM API gateway running smoothly and fixing any problems that might pop up.

Identifying and Resolving Gateway Errors

To tackle gateway errors, start by looking closely at the error messages and logs to figure out the root cause. You might need to check your configuration, make sure all the connections are working, or see whether the problem lies with the LLM APIs themselves.

Once you have found the cause, fix it. This could mean changing some settings, resolving connection issues, or contacting your LLM API provider if you need extra help.

By resolving gateway errors efficiently, organizations keep their API gateways running without hiccups and give users a smooth experience.
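A quick first pass over the logs is to count error statuses and map each to its most likely cause. The hints below follow general HTTP status semantics, but exact meanings vary by provider, so treat them as starting points. The sketch also assumes a hypothetical log format in which each line contains a `status=<code>` field.

```python
# Likely causes for common HTTP statuses seen at a gateway (general HTTP
# semantics; providers may use them differently, so treat these as hints).
TRIAGE = {
    400: "Malformed request: check the body against the expected schema.",
    401: "Authentication failed: check the client's API key or token.",
    403: "Authorization failed: check the client's permissions for this route.",
    404: "Unknown route or model: check the gateway's routing table.",
    429: "Rate limit or quota hit: check limits, or back off and retry later.",
    502: "Bad gateway: the upstream LLM API sent an invalid response or is down.",
    503: "Service unavailable: the upstream is overloaded or in maintenance.",
    504: "Gateway timeout: the upstream was too slow; check timeouts and network.",
}


def triage(log_lines: list) -> dict:
    """Count error statuses (>= 400) in log lines and attach a likely cause.

    Assumes a hypothetical log format such as:
    'req=abc status=502 upstream=llm-a latency_ms=30001'
    """
    counts = {}
    for line in log_lines:
        for field in line.split():
            if field.startswith("status="):
                value = field[len("status="):]
                if value.isdigit() and int(value) >= 400:
                    code = int(value)
                    counts[code] = counts.get(code, 0) + 1
    return {code: (n, TRIAGE.get(code, "Unrecognized error: inspect the log entry."))
            for code, n in sorted(counts.items())}
```

Grouping by status first shows whether the problem is on the client side (4xx) or with the gateway and upstream LLM APIs (5xx), which tells you where to look next.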

Best Practices for Smooth Gateway Operations

To keep an API gateway running smoothly, it’s important for companies to stick to some key guidelines and set up their systems properly.

For starters, they should make a habit of checking and tweaking the gateway setup regularly. On top of that, setting up ways to watch over the system and get alerts about any odd behavior is crucial. With this in place, companies can quickly deal with problems before they bother users.

Keeping the software up to date is another must: updates fix bugs, add features, and patch security vulnerabilities so everything stays protected against threats.

By sticking to these steps (reviewing settings often, monitoring closely, and updating frequently), businesses can keep their LLM API gateway running smoothly for everyone who uses it.

Conclusion

By diving into the LLM API Gateway, you’ve gotten to grips with its advanced features and with practical ways to manage your APIs better. From the basics through setup, model integration, and efficient request handling, you’re now well equipped to run things efficiently. Keep an eye on how things are running by checking the analytics, and fix problems as they come up. Choosing the right LLM model matters a lot, because it determines how well your gateway serves your applications. With the tips in this guide, you’re well on your way to mastering LLM API Gateways.

Frequently Asked Questions

1. How to Choose the Right LLM Model for Your Gateway?

Picking the best LLM model for your gateway comes down to what you need it for. Consider the kind of language processing your project requires, how complex the tasks will be, and the performance level your application needs. With those factors in mind, compare various LLM models on their capabilities, performance, and compatibility with your gateway setup before settling on one. LLM leaderboards, such as those hosted on Hugging Face, are a useful starting point for comparison.

Novita AI is the all-in-one cloud platform that empowers your AI ambitions. With seamlessly integrated APIs, serverless computing, and GPU acceleration, we provide the cost-effective tools you need to rapidly build and scale your AI-driven business. Eliminate infrastructure headaches and get started for free — Novita AI makes your AI dreams a reality.
