Mastering Llama 3 Paper Techniques: A Comprehensive Guide

Key Highlights

- Advanced Large Language Models: Meta AI introduces Llama 3, a suite of advanced language models with capabilities in multilinguality, coding, reasoning, and tool usage.

- Enhanced Capabilities: Llama 3 includes an upgraded tokenizer, Grouped-Query Attention (GQA), expanded datasets, advanced filtering techniques, and a commitment to responsible development.

- Tokenizer Upgrade: Llama 3 increases the vocabulary size to 128,256 tokens, which improves multilingual text encoding efficiency and contributes to the smallest model growing from 7 billion to 8 billion parameters.

- State-of-the-Art Performance: Llama 3’s 8B and 70B parameter models set new benchmarks for LLMs, with significant advancements in pretraining and post-training.

- Developer Empowerment: Meta AI envisions empowering developers with customization options for Llama 3, including new trust and safety tools and integration with platforms like Hugging Face.

- LLM API on Novita AI: Developers can access and utilize Llama 3’s features through a streamlined interface provided by Novita AI’s LLM API.

Introduction

Meta AI’s Llama 3 marks a significant advancement in large language models (LLMs), raising the bar for multilingual abilities, reasoning, and coding. Building on Llama 2, it introduces key features like an enhanced tokenizer and Grouped-Query Attention (GQA). This article highlights Llama 3’s innovations, explores their real-world applications, and offers a guide to integrating Llama 3 with Novita AI’s LLM API.

Exploring the Core Concepts of Llama 3 Paper

The Llama 3 paper introduces advanced large language models by Meta AI, enhancing natural language processing in various languages. It features innovations like an enhanced tokenizer, the GQA mechanism, expanded training datasets, advanced filtering techniques, and a commitment to responsible development. These advancements redefine AI capabilities and set new standards for large language model utilization.

An Introduction to Llama 3’s Advanced Models

Llama 3 is a suite of language models supporting multilinguality, coding, reasoning, and tool usage. The largest model is a dense Transformer with 405 billion parameters and a context window of up to 128K tokens. According to the paper, Llama 3 delivers quality comparable to leading language models such as GPT-4 across a range of tasks. Meta AI is releasing Llama 3 publicly, including pre-trained and post-trained versions of the 405 billion parameter model, along with Llama Guard 3, a model for input and output safety.

Goals for Llama 3

Meta AI’s Llama 3 aims to create top open models to rival proprietary options. By incorporating developer input, Meta AI enhances Llama 3’s usability and advocates for responsible practices in large language models (LLMs). The early release of these text-based models encourages community involvement. Llama 3 will expand with multilingual and multimodal features, longer context support, and improved core capabilities like reasoning and coding.

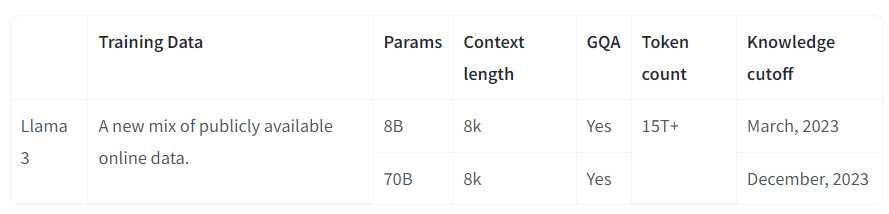

Llama 2 vs. Llama 3: Key Differences Explained

Llama 3 introduces a new tokenizer that increases the vocabulary size from 32K tokens to 128,256. The larger vocabulary encodes text more efficiently, particularly multilingual text. It also enlarges the input and output embedding matrices, which is one reason the parameter count rises from 7 billion in Llama 2 to 8 billion in Llama 3.
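The effect of the larger vocabulary on the parameter count can be checked with quick arithmetic. The sketch below assumes a hidden size of 4,096 and separate (untied) input and output embedding matrices for both models. Those values come from the publicly released model configurations rather than from this article, so treat them as assumptions.

```python
# Rough estimate of how vocabulary size affects embedding parameters.
# ASSUMPTIONS (not stated in this article): hidden size 4096 and
# untied input/output embeddings for both Llama 2 7B and Llama 3 8B.

HIDDEN_SIZE = 4096


def embedding_params(vocab_size: int, hidden_size: int = HIDDEN_SIZE) -> int:
    """Parameters in the input embedding plus the output projection."""
    return 2 * vocab_size * hidden_size


llama2 = embedding_params(32_000)    # "32K" vocabulary
llama3 = embedding_params(128_256)   # new Llama 3 vocabulary
extra = llama3 - llama2

print(f"Llama 2 embeddings: {llama2:,}")
print(f"Llama 3 embeddings: {llama3:,}")
print(f"Extra parameters:   {extra:,}")
```

Under these assumptions the larger vocabulary adds roughly 0.79 billion parameters, a sizable share of the increase from 7 billion to 8 billion.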

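Additionally, the 8 billion parameter version of the model now incorporates Grouped-Query Attention (GQA), in which groups of query heads share a single set of key and value heads. This reduces the memory needed for cached keys and values and helps the model handle longer contexts efficiently.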
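To make GQA more concrete: several query heads share one key/value head, so fewer keys and values have to be cached per token. The following is a minimal sketch of that head mapping and of the resulting cache size, not Meta AI's implementation. The layer count, head counts, head dimension, and 16-bit cache entries are assumptions taken from the public Llama 3 8B configuration, not from this article.

```python
# Sketch of Grouped-Query Attention (GQA) head sharing and its effect on
# KV-cache size. All constants are ASSUMPTIONS based on the public
# Llama 3 8B configuration; they are not stated in this article.

N_LAYERS = 32        # transformer layers
N_Q_HEADS = 32       # query heads per layer
N_KV_HEADS = 8       # key/value heads per layer under GQA
HEAD_DIM = 128       # dimension of each attention head
BYTES_PER_VALUE = 2  # 16-bit cache entries


def kv_head_for_query(q_head: int,
                      n_q_heads: int = N_Q_HEADS,
                      n_kv_heads: int = N_KV_HEADS) -> int:
    """Return the key/value head shared by the group containing q_head."""
    if n_q_heads % n_kv_heads != 0:
        raise ValueError("query heads must divide evenly into KV groups")
    group_size = n_q_heads // n_kv_heads
    return q_head // group_size


def kv_cache_bytes_per_token(n_kv_heads: int) -> int:
    """Bytes of keys plus values cached per token across all layers."""
    return 2 * N_LAYERS * n_kv_heads * HEAD_DIM * BYTES_PER_VALUE


mha = kv_cache_bytes_per_token(N_Q_HEADS)   # one KV head per query head
gqa = kv_cache_bytes_per_token(N_KV_HEADS)  # KV heads shared within groups

print([kv_head_for_query(h) for h in range(N_Q_HEADS)])
print(f"Multi-head cache per token: {mha:,} bytes")
print(f"GQA cache per token:        {gqa:,} bytes ({mha // gqa}x smaller)")
```

With these assumed values, each group of four query heads shares one key/value head, and the per-token cache shrinks by the same factor of four. That saving grows linearly with context length.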

How to Build with Llama 3?

Meta AI’s vision is to empower developers to customize Llama 3 for relevant use cases, facilitating the adoption of best practices and enhancing the open ecosystem. This release includes new trust and safety tools, such as updated components with Llama Guard 2, Cybersec Eval 2, and the introduction of Code Shield — a guardrail for filtering insecure code generated by LLMs.

Llama 3 has been co-developed with torchtune, a new PyTorch-native library designed for easy authoring, fine-tuning, and experimenting with LLMs. torchtune offers memory-efficient training recipes implemented entirely in PyTorch and integrates with platforms like Hugging Face, Weights & Biases, and EleutherAI. It also supports ExecuTorch for efficient inference on mobile and edge devices. For guidance on prompt engineering and using Llama 3 with LangChain, Meta AI provides a comprehensive getting started guide that covers everything from downloading Llama 3 to large-scale deployment in generative AI applications.

Deploying Llama 3 at Scale

Llama 3 is now available on major platforms, including cloud providers and model API providers. For examples of how to utilize all these features, please refer to Llama Recipes, which includes all of Meta AI’s open-source code.

Understanding the Llama Model Family

Llama (Large Language Model Meta AI, previously stylized as LLaMA) is a series of autoregressive large language models (LLMs) developed by Meta AI, with its initial release in February 2023. At the time of writing, the most recent version is Llama 3.2, which was launched in September 2024.

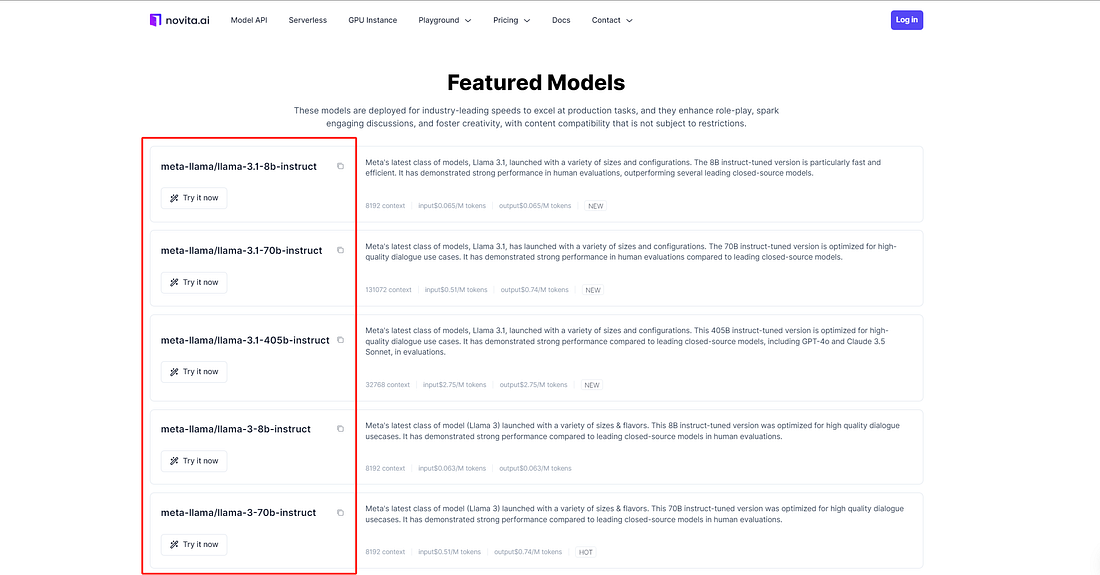

What are the models in the Llama series?

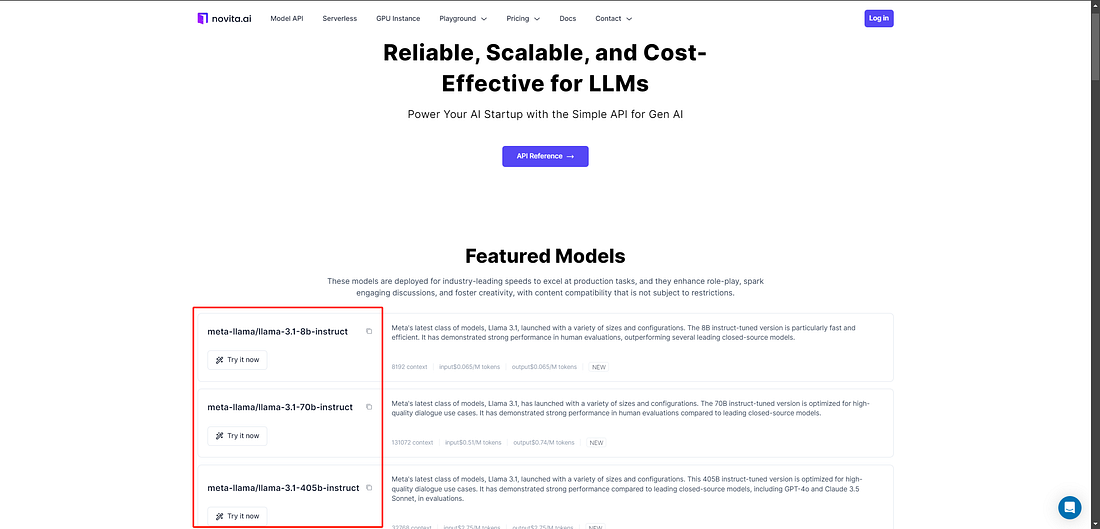

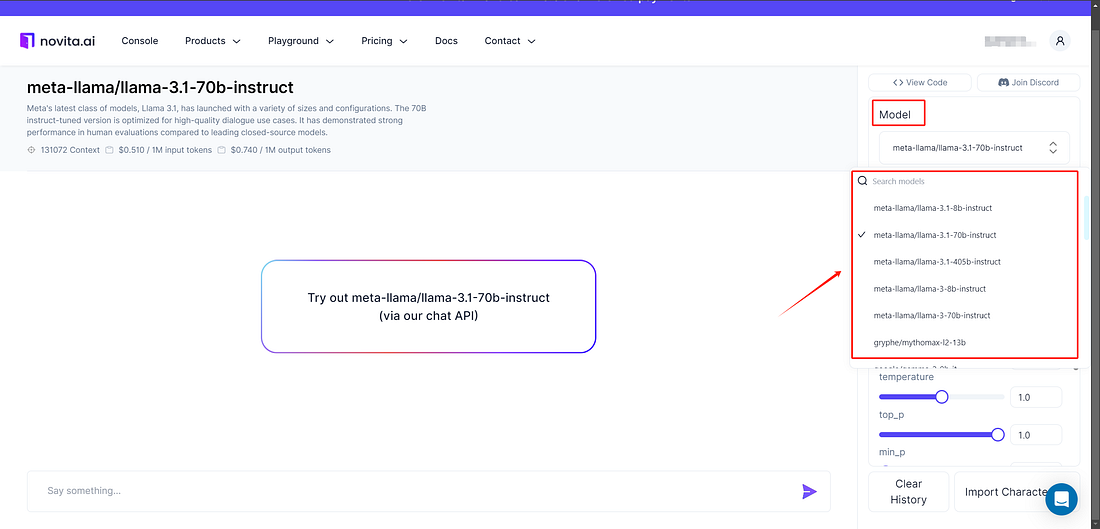

Below are the Llama family models available in Novita AI. If you’re interested in any model, feel free to click and try it out directly.

- meta-llama/llama-3.1-8b-instruct

- meta-llama/llama-3.1-70b-instruct

- meta-llama/llama-3.1-405b-instruct

- meta-llama/llama-3-8b-instruct

- meta-llama/llama-3-70b-instruct

Comparison of models

The training cost column reflects only the cost for the largest model. For instance, ‘21,000’ represents the training cost of Llama 2 70B, measured in petaFLOP-days. One petaFLOP-day is 1 petaFLOP/sec sustained for one day (86,400 seconds), which equals 8.64E19 FLOP.
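The unit conversion above can be reproduced in a few lines. This is a small sketch using only the figures quoted in the paragraph; the function name is illustrative.

```python
# Convert a training cost in petaFLOP-days to total floating-point operations.

SECONDS_PER_DAY = 24 * 60 * 60                    # 86,400 seconds
FLOP_PER_PETAFLOP_DAY = 10**15 * SECONDS_PER_DAY  # 8.64e19 FLOP


def petaflop_days_to_flop(pf_days: int) -> int:
    """Total FLOP for a cost expressed in petaFLOP-days."""
    return pf_days * FLOP_PER_PETAFLOP_DAY


total = petaflop_days_to_flop(21_000)

print(f"{float(FLOP_PER_PETAFLOP_DAY):.2e} FLOP per petaFLOP-day")
print(f"{float(total):.4e} FLOP for 21,000 petaFLOP-days")
```

A cost of 21,000 petaFLOP-days therefore corresponds to about 1.81 × 10^24 FLOP.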

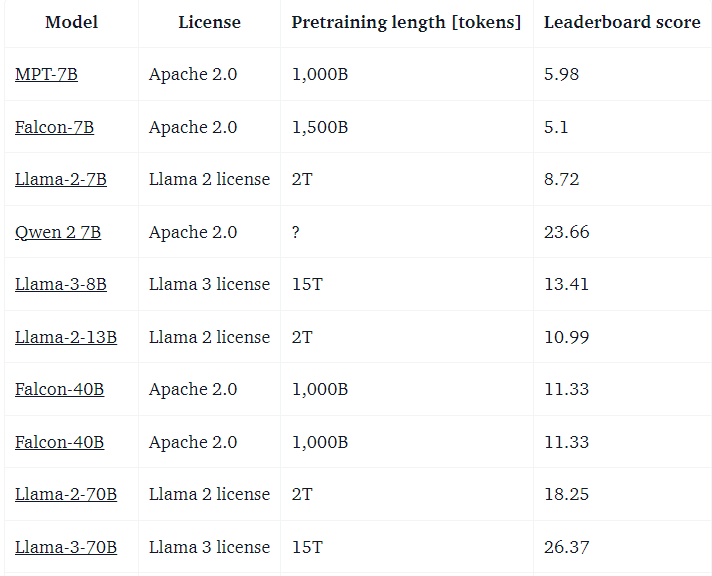

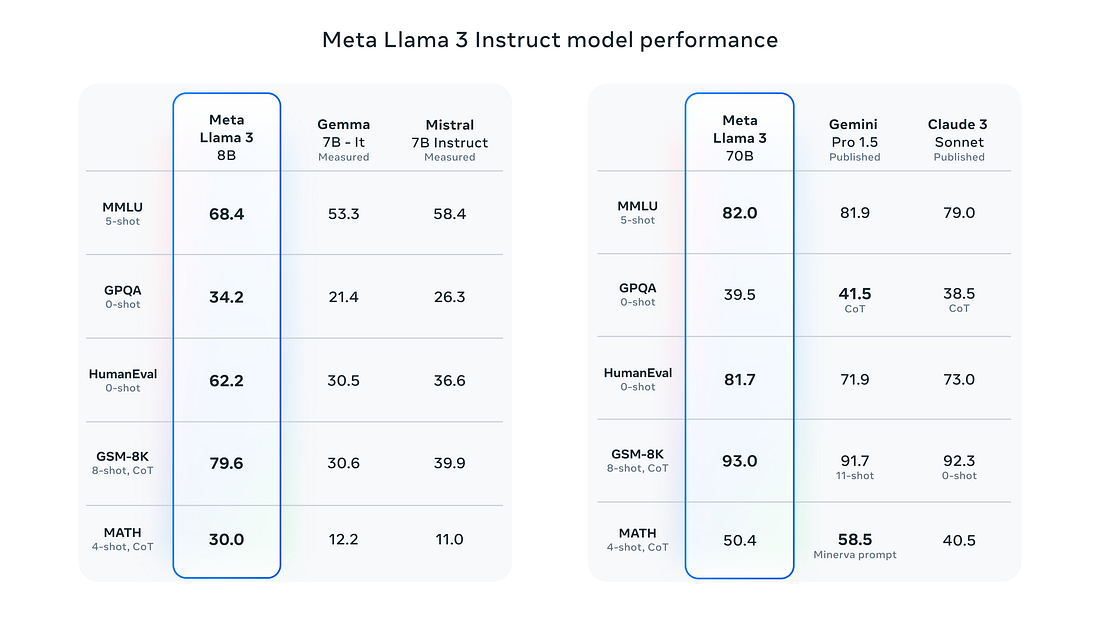

Achieving State-of-the-Art Performance with Llama 3

Meta AI’s new 8B and 70B parameter Llama 3 models represent a significant advancement over Llama 2, setting a new benchmark for LLMs at these scales. With enhancements in pretraining and post-training, the pretrained and instruction-fine-tuned models are now the leading options available today at the 8B and 70B parameter levels. Improvements in post-training procedures have significantly reduced false refusal rates, enhanced alignment, and increased diversity in model responses. Additionally, Llama 3 demonstrates greatly improved capabilities in reasoning, code generation, and instruction following, making it more steerable than ever.

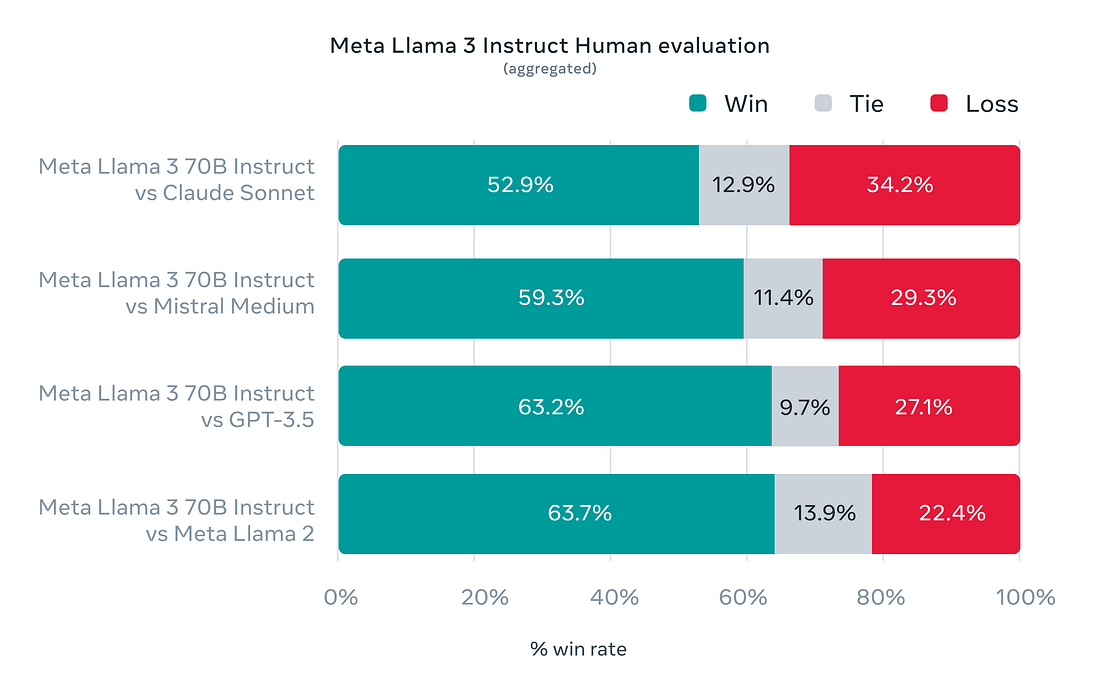

In developing Llama 3, Meta AI focused on model performance on standard benchmarks while also optimizing for real-world applications. To achieve this, a new high-quality human evaluation set was created, comprising 1,800 prompts that address 12 key use cases: seeking advice, brainstorming, classification, closed question answering, coding, creative writing, extraction, embodying a character or persona, open question answering, reasoning, rewriting, and summarization. To avoid unintentional overfitting, even the modeling teams at Meta AI do not have access to this evaluation set. The chart below displays the aggregated results of human evaluations across these categories and prompts, comparing Llama 3 to Claude Sonnet, Mistral Medium, and GPT-3.5.

Preference rankings from human annotators, based on this evaluation set, demonstrate the superior performance of Meta AI’s 70B instruction-following model compared to similar-sized competing models in real-world scenarios.

The Llama 3 paper highlights Meta’s advancements in large language models, but those innovations only matter once they are applied effectively in real-world scenarios. The LLM API on Novita AI offers developers a streamlined interface for accessing the models described in the paper. The next section explains how to integrate Llama 3 into your applications using the LLM API on Novita AI.

How to Use LLM APIs on Novita AI for Model Integration

Integrating LLM APIs on Novita AI is a simple process that enables developers to harness the powerful features of large language models, including the Llama 3 series. Below is a step-by-step guide to help you get started.

Step 1: Create an account and access Novita AI.

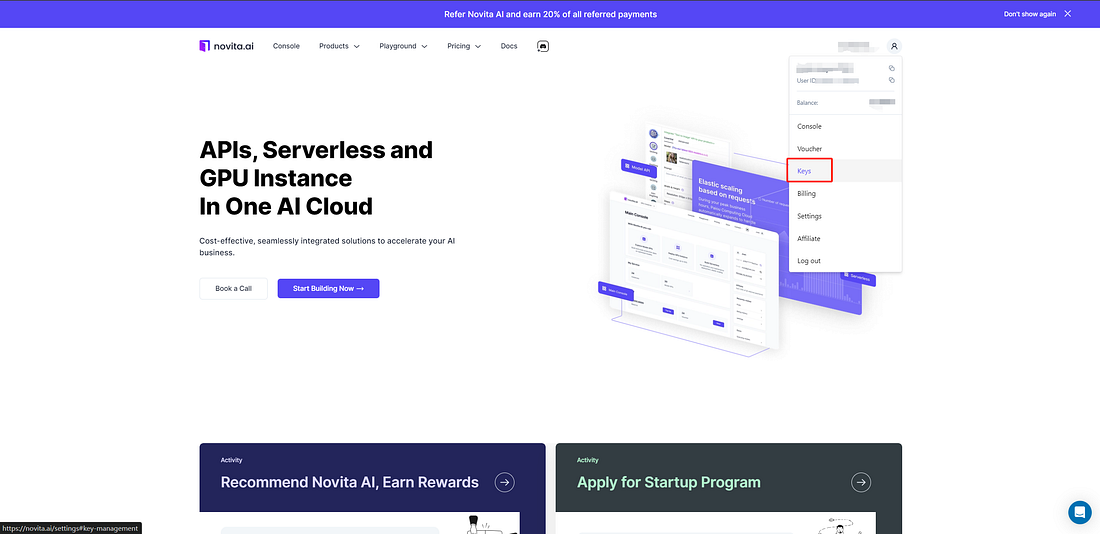

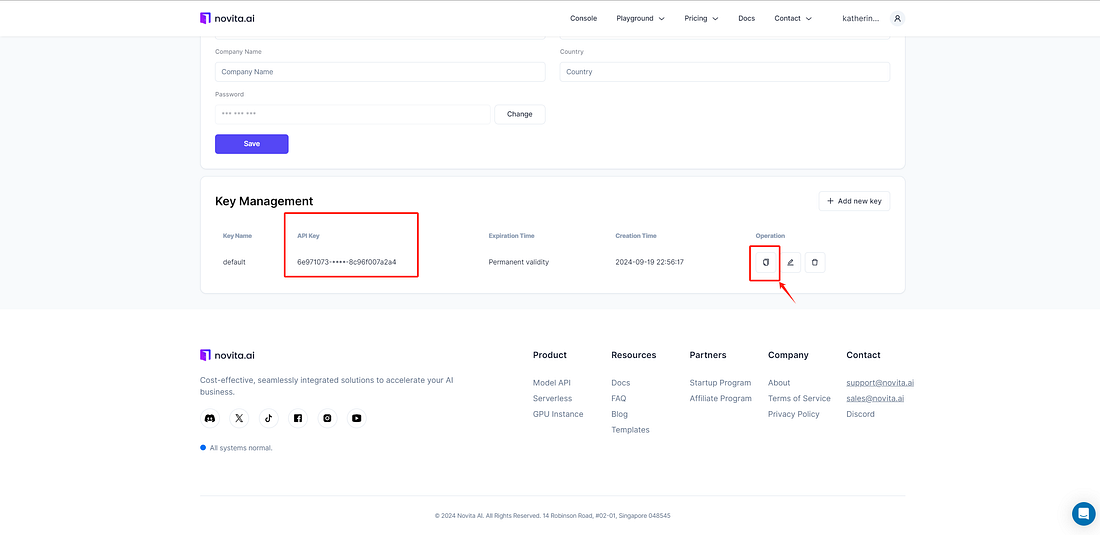

Step 2: Obtain Your API Key

You will need an API key to authenticate your requests. You can manage your API key by following the instructions outlined in the Novita AI documentation. Be sure to keep your API key secure and avoid exposing it in public code repositories.

Step 3: Choose Your Model

Novita AI offers a range of models, including different versions of Llama. Choose the model that best suits your application’s requirements, whether for chat completion, text generation, or other tasks. The full list of available models is in the Novita AI LLM Models List.

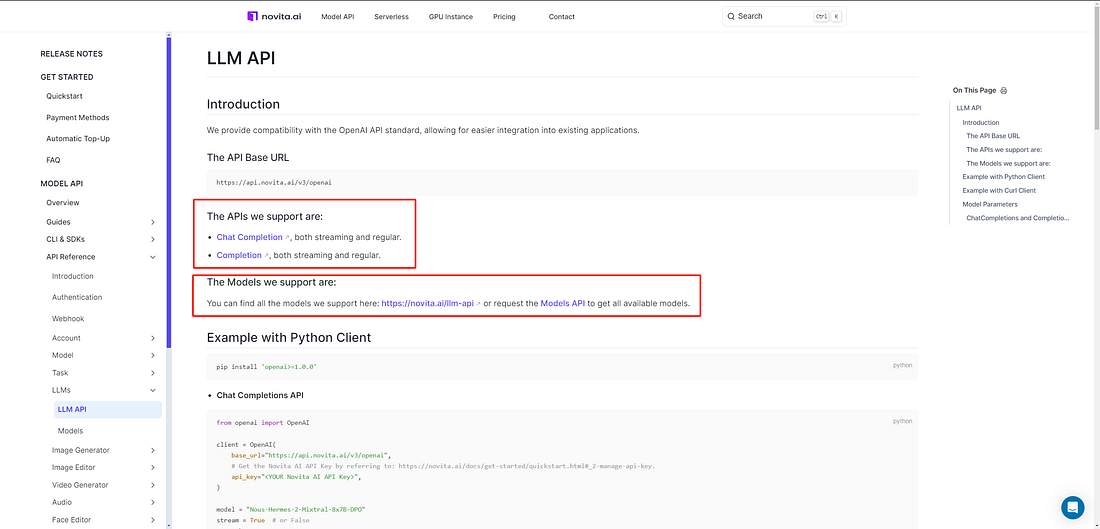

Step 4: Visit the LLM API reference to explore the APIs and models available from Novita AI.

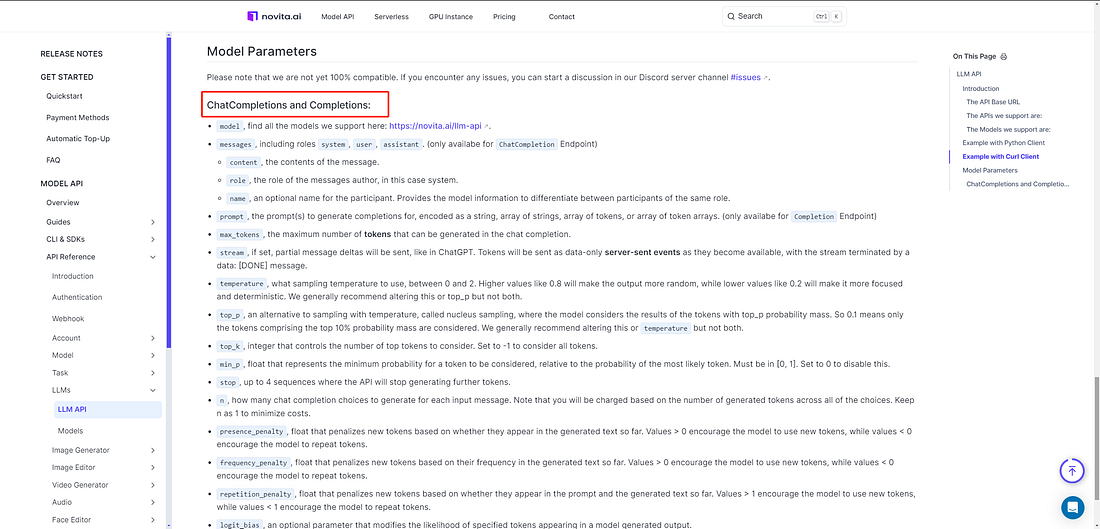

Step 5: Select the model that fits your needs, then set up your development environment and configure options like content, role, name, and prompt.

Step 6: Run multiple tests to ensure the API performs consistently.

By following these steps, you can effectively integrate LLM APIs on Novita AI, enabling you to harness the power of advanced language models like Llama 3 for your applications.
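The steps above can be sketched as a small request builder using only the Python standard library. This is an illustration under stated assumptions, not Novita AI's official client. The endpoint URL and the OpenAI-style request body are assumptions that should be checked against the LLM API reference, and `build_chat_request` and `send_chat_request` are hypothetical helper names.

```python
import json
import urllib.request

# ASSUMPTION: an OpenAI-compatible chat completions endpoint. Verify the
# exact URL and request schema in the Novita AI LLM API reference.
API_URL = "https://api.novita.ai/v3/openai/chat/completions"


def build_chat_request(api_key: str, model: str, prompt: str,
                       system: str = "You are a helpful assistant.") -> urllib.request.Request:
    """Build (but do not send) a chat completion request."""
    payload = {
        "model": model,
        "messages": [
            {"role": "system", "content": system},
            {"role": "user", "content": prompt},
        ],
    }
    return urllib.request.Request(
        API_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


def send_chat_request(request: urllib.request.Request, timeout: float = 30.0) -> dict:
    """Send the request and decode the JSON response body."""
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return json.load(response)
```

To try it, read the key from an environment variable rather than hard-coding it, for example `os.environ["NOVITA_API_KEY"]` (the variable name is a placeholder), pass a model ID such as `meta-llama/llama-3.1-8b-instruct` to `build_chat_request`, and hand the result to `send_chat_request`. Calling it several times with the same prompt is one simple way to carry out the consistency checks in Step 6.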

Try Llama 3 on Novita AI’s LLM Playground

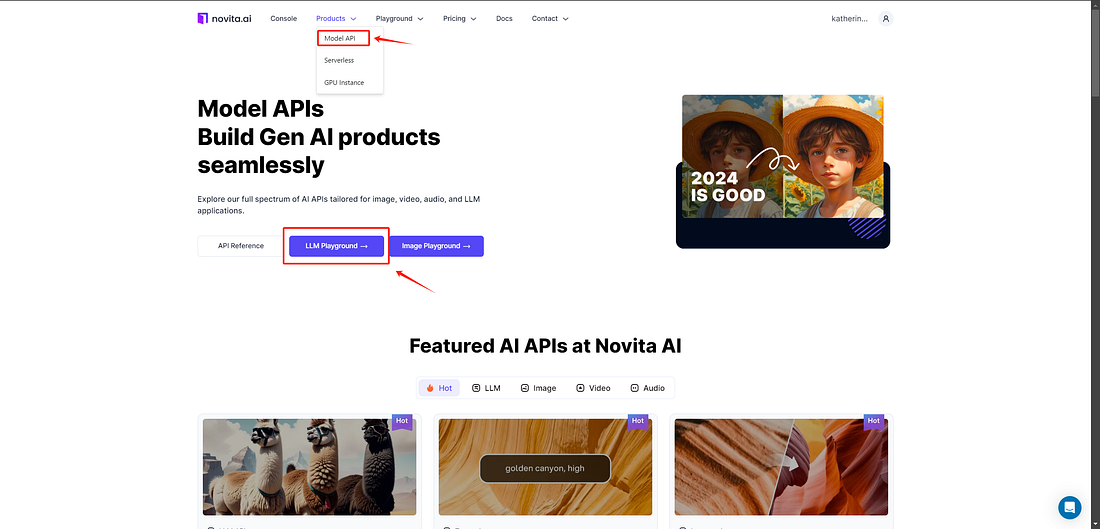

Before deploying the LLM API on Novita AI, you can experiment with it in the LLM Playground. Developers are provided with a free usage quota. Now, I’ll walk you through the steps to get started.

Step 1: Access the Playground: Navigate to the Products tab, select Model API, and start exploring the LLM API.

Step 2: Choose a Model: Select the Llama model that best fits your evaluation needs.

Step 3: Enter Your Prompt: Type your prompt in the input field to generate a response from the model.

Conclusion

Llama 3, a cutting-edge large language model, excels in text generation, coding, and multilingual processing. Through Novita AI’s LLM API, developers can apply it to a wide range of applications. As Meta AI pushes AI research boundaries, Llama 3 leads the open-source AI future, promoting innovation and broader access to advanced models. Whether for experiments or extensive deployment, Llama 3 equips you with the necessary tools for enhancing your AI projects.

Frequently Asked Questions

What Makes Llama 3 Different from Previous Models?

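Compared with Llama 2, Llama 3 uses a new tokenizer with a 128,256-token vocabulary, adds Grouped-Query Attention (GQA) to the 8B model, and is trained on an expanded, more carefully filtered dataset. The released models are text-only.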

What Are the Major Challenges in Implementing Llama 3 Techniques?

Implementing Llama 3 techniques requires substantial compute because of the model’s size. Obtaining diverse, high-quality training data is also crucial for optimal performance and bias reduction.

Can Llama 3 Be Applied to Non-English Languages?

Llama 3 excels in multiple languages, leveraging diverse training data to learn patterns effectively across different languages.

What Is the Difference Between Llama 3.1 and Llama 3?

Both are text-only. Llama 3.1 adds a 405 billion parameter model and extends the context window to 128K tokens, up from 8K in the original Llama 3 release. It also improves multilingual and tool-use support.

Is Llama 3.1 Multimodal?

Llama 3.1 is not multimodal; it only processes text.

Originally published at Novita AI
