Llama 3 vs ChatGPT 4: A Comparison Guide

Introduction

Today, we delve into two giants in the realm of generative AI: Llama 3 and ChatGPT 4. How do these models differ in architecture, performance, and real-world applications? Join us as we explore their capabilities, strengths, and the future they promise.

Overview of Generative AI Models: Llama 3 and ChatGPT 4

What is Llama 3?

Llama 3, developed by Meta, is the next generation of Meta's openly available Llama language models. Meta's aim is to match proprietary models in performance. The initial release includes two sizes, with 8 billion and 70 billion parameters, and each size comes as a pretrained and an instruction-tuned model. This first release is text-only. Meta has said it plans to make Llama 3 multilingual and multimodal in future releases. Compared with Llama 2, the new models show improved reasoning and coding abilities. Llama 3 is part of Meta's commitment to an open AI ecosystem, and it is meant to encourage innovation across applications and developer tools.

What is ChatGPT 4?

ChatGPT 4, built on OpenAI's GPT-4 model, is a significant leap forward in generative AI. This large multimodal model accepts image and text inputs and produces text outputs. It exhibits human-level performance on many professional and academic benchmarks. Compared with its predecessor, GPT-3.5, it is more reliable and more creative, and it can follow much more nuanced instructions. OpenAI fine-tuned it for better factuality, steerability, and adherence to guardrails, which makes it a powerful tool for a wide range of applications.

Llama 3 vs ChatGPT 4: Technical Specifications

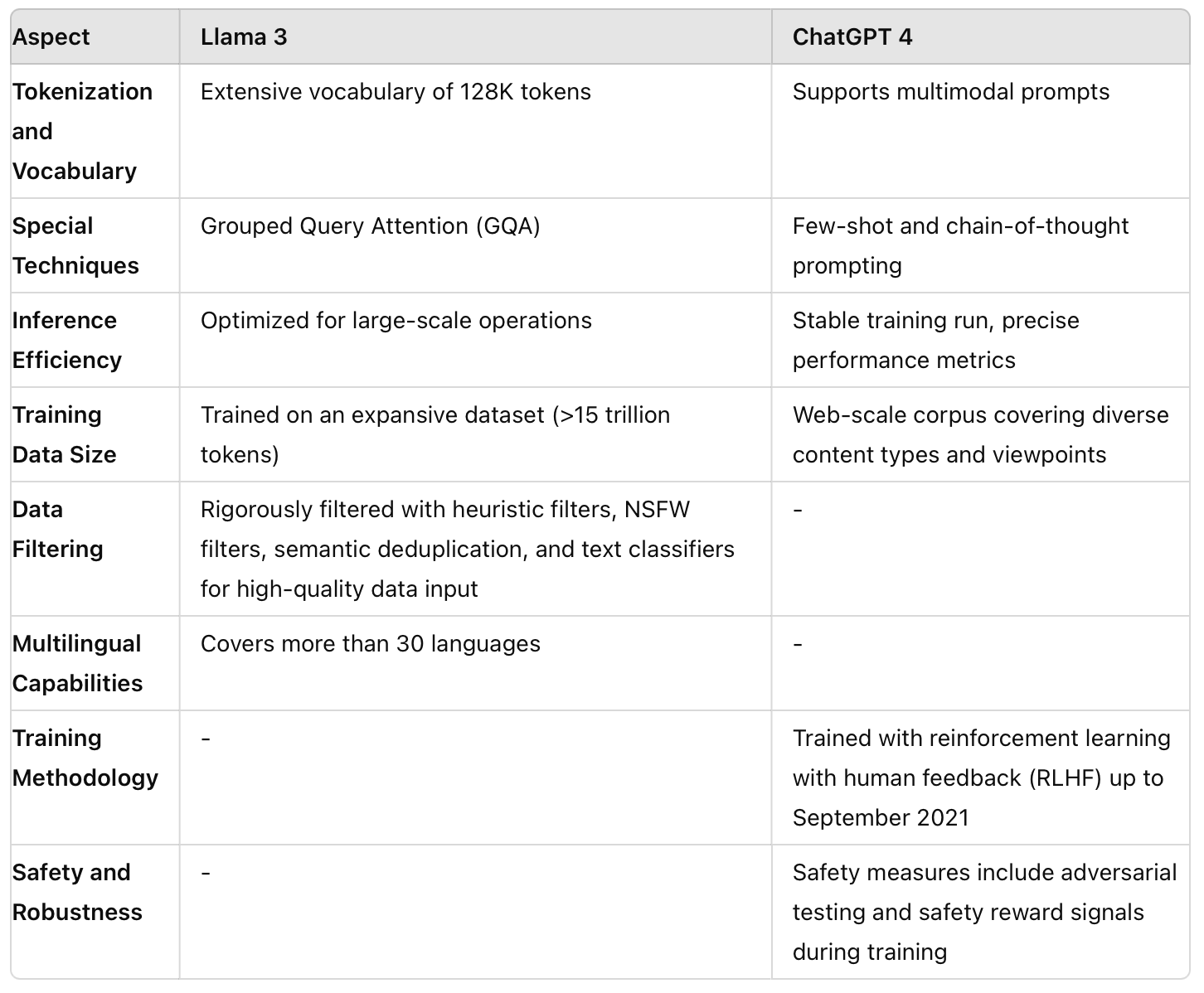

Llama 3 and ChatGPT 4, while both representing cutting-edge advancements in AI, have distinct architectural and training nuances that set them apart.

Model Architecture and Design

Llama 3 uses a standard decoder-only transformer architecture. Its tokenizer has a vocabulary of 128K tokens, which encodes language more efficiently. Both the 8B and 70B models use Grouped Query Attention (GQA) to improve inference efficiency. In GQA, groups of query heads share a single set of key and value heads. This shrinks the key/value cache that must be held in memory during generation, so the model can serve long prompts with less memory and bandwidth. The models were trained on sequences of 8,192 tokens, which sets how much context they can work with at once.
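To make the efficiency argument concrete, the sketch below does two things. First, it shows how grouped-query attention maps query heads onto a smaller set of shared key/value heads. Second, it estimates how much that shrinks the key/value cache for one 8,192-token sequence. The model shape used here (32 layers, 32 query heads, 8 key/value heads, head dimension 128 for the 8B model) is an assumption based on Meta's published model configuration. The code also assumes 16-bit storage, so treat the result as a rough estimate, not a measured figure.

```python
def kv_head_for_query_head(q_head: int, n_q_heads: int, n_kv_heads: int) -> int:
    """Return the index of the key/value head that a query head shares under GQA."""
    if n_q_heads % n_kv_heads != 0:
        raise ValueError("query heads must divide evenly into key/value groups")
    group_size = n_q_heads // n_kv_heads
    return q_head // group_size


def kv_cache_bytes(n_layers: int, n_kv_heads: int, head_dim: int,
                   seq_len: int, bytes_per_value: int = 2) -> int:
    """Bytes needed to cache keys and values for one sequence."""
    # Factor of 2: one cached tensor for keys and one for values, in every layer.
    return 2 * n_layers * n_kv_heads * head_dim * seq_len * bytes_per_value


# Assumed Llama 3 8B shape; verify against the official model configuration.
N_LAYERS, N_Q_HEADS, N_KV_HEADS, HEAD_DIM = 32, 32, 8, 128
SEQ_LEN = 8192  # training sequence length mentioned above

gqa = kv_cache_bytes(N_LAYERS, N_KV_HEADS, HEAD_DIM, SEQ_LEN)
# Standard multi-head attention keeps one key/value head per query head.
mha = kv_cache_bytes(N_LAYERS, N_Q_HEADS, HEAD_DIM, SEQ_LEN)

print([kv_head_for_query_head(h, N_Q_HEADS, N_KV_HEADS) for h in range(8)])
print(f"GQA cache: {gqa / 2**30:.1f} GiB, MHA cache: {mha / 2**30:.1f} GiB")
```

Under these assumptions, every four query heads share one key/value head. The cache is therefore a quarter of the size it would be with standard multi-head attention. That saving is what lets a server fit longer contexts or more concurrent requests into the same memory.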

ChatGPT 4's most visible innovation is multimodality: it accepts prompts that combine images and text, and it responds in text. OpenAI has not published details of GPT-4's architecture, model size, or training compute, so an architecture-level comparison with Llama 3 is necessarily limited. OpenAI has reported two things about training:

- The GPT-4 training run was unusually stable.
- OpenAI could accurately predict aspects of the final model's performance from much smaller training runs.

Test-time techniques developed for text-only language models, such as few-shot and chain-of-thought prompting, also work with GPT-4. This includes prompts that mix images and text.
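Few-shot and chain-of-thought prompting change the request, not the model. As a minimal sketch, the helper below assembles a k-shot prompt from solved examples and can append a chain-of-thought cue. The function name, the Q/A layout, and the cue wording are illustrative assumptions, not part of any OpenAI API.

```python
def build_few_shot_prompt(examples, question, chain_of_thought=False):
    """Assemble a k-shot prompt: k solved examples followed by the new question.

    examples: list of (question, answer) pairs shown to the model as demonstrations.
    chain_of_thought: if True, cue the model to reason step by step before answering.
    """
    parts = [f"Q: {q}\nA: {a}" for q, a in examples]
    cue = "A: Let's think step by step." if chain_of_thought else "A:"
    parts.append(f"Q: {question}\n{cue}")
    return "\n\n".join(parts)


demos = [
    ("What is 12 + 7?", "19"),
    ("What is 9 * 6?", "54"),
]
prompt = build_few_shot_prompt(demos, "What is 15 - 4?", chain_of_thought=True)
print(prompt)
```

With two demonstrations this is a 2-shot prompt. The benchmark settings quoted later, such as 5-shot MMLU, follow the same idea with five solved examples.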

Training Data and Knowledge Base

Llama 3 was pretrained on more than 15 trillion tokens collected from publicly available sources. That dataset is seven times larger than the one used for Llama 2 and contains four times more code. Over 5% of the pretraining data is high-quality non-English text covering more than 30 languages, which lays groundwork for multilingual use. However, Meta does not expect the same level of performance in these languages as in English. To keep quality high, the data passes through a series of filtering pipelines:

- heuristic filters
- NSFW filters
- semantic deduplication
- text classifiers that predict data quality
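Meta has not released its filtering code, so the following is only a toy sketch of how such a pipeline chains its stages. Each stage here is a simplified stand-in:

- A heuristic filter, as described.
- A placeholder blocklist in place of an NSFW classifier.
- A hypothetical lexical-diversity score in place of a trained quality classifier.
- Exact-hash deduplication in place of the semantic deduplication Meta describes.

All thresholds are illustrative.

```python
import hashlib


def passes_heuristics(doc: str, min_words: int = 5) -> bool:
    """Drop very short documents and ones dominated by non-alphabetic characters."""
    words = doc.split()
    if len(words) < min_words:
        return False
    alpha_ratio = sum(c.isalpha() for c in doc) / max(len(doc), 1)
    return alpha_ratio > 0.6


def passes_safety(doc: str, blocklist=frozenset({"nsfw-term"})) -> bool:
    """Hypothetical stand-in for an NSFW classifier: reject blocklisted words."""
    return not any(w.lower() in blocklist for w in doc.split())


def quality_score(doc: str) -> float:
    """Hypothetical quality heuristic; a real pipeline would use a trained classifier."""
    words = doc.split()
    return len(set(words)) / len(words)  # lexical diversity in (0, 1]


def filter_corpus(docs, min_quality: float = 0.5):
    seen = set()
    kept = []
    for doc in docs:
        if not (passes_heuristics(doc) and passes_safety(doc)):
            continue
        if quality_score(doc) < min_quality:
            continue
        # Exact dedup on normalized text; semantic dedup would compare embeddings instead.
        key = hashlib.sha256(" ".join(doc.lower().split()).encode()).hexdigest()
        if key in seen:
            continue
        seen.add(key)
        kept.append(doc)
    return kept


corpus = [
    "Transformers process tokens in parallel using self-attention layers.",
    "transformers process tokens in parallel using   self-attention layers.",  # duplicate
    "buy buy buy buy buy buy",                                # low lexical diversity
    "12345 67890 !!!! ???? ####",                             # mostly non-alphabetic
    "This page contains nsfw-term content and more words.",  # blocklisted
]
print(filter_corpus(corpus))
```

Only the first document survives. The second is a normalized duplicate of the first, and each of the others fails one stage.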

ChatGPT 4 was trained on a web-scale corpus that combines publicly available data, such as internet data, with data licensed from third-party providers. The corpus includes correct and incorrect solutions to math problems, weak and strong reasoning, self-contradictory and consistent statements, and a wide variety of ideologies and ideas. The model was then fine-tuned with reinforcement learning from human feedback (RLHF) to align its outputs with user intent and safety guidelines. The original GPT-4 release generally lacks knowledge of events after September 2021, when most of its training data ends, and it does not learn from experience after training. OpenAI had over 50 experts test the model adversarially to identify and mitigate high-risk behaviors. It also added a safety reward signal during RLHF training to reduce harmful outputs.

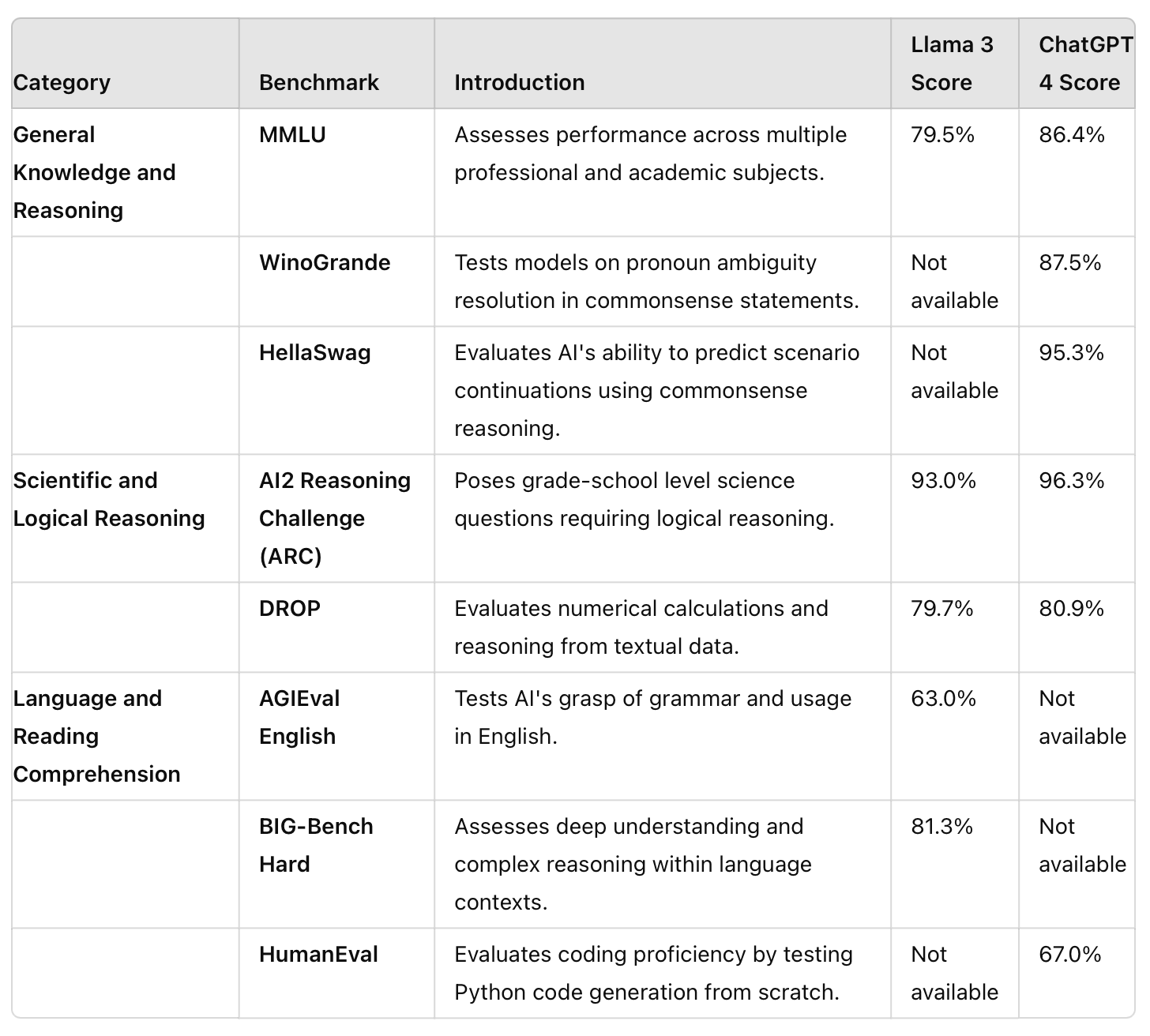

Llama 3 vs ChatGPT 4: Performance Benchmarking

General Knowledge and Reasoning

MMLU (Multiple-choice questions in 57 subjects)

- Llama 3: Llama 3 (70B) scored 79.5% in a 5-shot setting, the higher of the two model sizes.

- ChatGPT 4: Scored 86.4% in a 5-shot setting.

WinoGrande (Commonsense reasoning around pronoun resolution)

- Llama 3: No score is reported in the data compared here.

- ChatGPT 4: Scored 87.5% in a 5-shot setting.

HellaSwag (Commonsense reasoning around everyday events)

- Llama 3: No score is reported in the data compared here.

- ChatGPT 4: Scored 95.3% in a 10-shot setting.

Scientific and Logical Reasoning

AI2 Reasoning Challenge (grade-school level, multiple-choice science questions that require logical reasoning)

- Llama 3: Llama 3 (70B) performed best, scoring 93.0% in a 25-shot setting.

- ChatGPT 4: Scored 96.3% in a 25-shot setting.

DROP (Reading comprehension and arithmetic)

- Llama 3: Llama 3 (70B) achieved the best score, 79.7%, in a 3-shot setting.

- ChatGPT 4: Scored 80.9% in a 3-shot setting.

Exams and Complex Reasoning

AGIEval English (English-language questions from standardized exams such as the SAT and LSAT)

- Llama 3: Llama 3 (70B) achieved the highest score, 63.0%, in a 3- to 5-shot setting.

- ChatGPT 4: No score is reported in the data compared here.

BIG-Bench Hard (tasks that require deep understanding and complex reasoning within language contexts)

- Llama 3: Llama 3 (70B) performed best, scoring 81.3% in a 3-shot, chain-of-thought setting.

- ChatGPT 4: No score is reported in the data compared here.

Coding

HumanEval (Python coding tasks)

- Llama 3: No score is reported in the data compared here.

- ChatGPT 4: Scored 67.0% in a 0-shot setting.
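Three benchmarks in the lists above have a score for both models: MMLU, ARC, and DROP. The short script below tabulates the gaps for those three. The numbers are copied from the lists. Each vendor reported its own results under its own evaluation settings, so treat the differences as indicative rather than a controlled head-to-head comparison.

```python
# Scores in % copied from the benchmark lists above: (Llama 3 70B, GPT-4); None = not listed.
scores = {
    "MMLU": (79.5, 86.4),
    "WinoGrande": (None, 87.5),
    "HellaSwag": (None, 95.3),
    "ARC": (93.0, 96.3),
    "DROP": (79.7, 80.9),
    "AGIEval English": (63.0, None),
    "BIG-Bench Hard": (81.3, None),
    "HumanEval": (None, 67.0),
}


def paired_gaps(table):
    """Return {benchmark: GPT-4 score minus Llama 3 70B score} where both exist."""
    return {
        name: round(gpt4 - llama3, 1)
        for name, (llama3, gpt4) in table.items()
        if llama3 is not None and gpt4 is not None
    }


gaps = paired_gaps(scores)
for name, gap in gaps.items():
    print(f"{name}: GPT-4 ahead by {gap} points")
```

On these figures, GPT-4's lead narrows from about 7 points on MMLU to about 1 point on DROP.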

Llama 3 vs ChatGPT 4: Multimodal Capabilities Comparison

Multimodal capabilities have become an important measure of how well AI models adapt to different kinds of input. Llama 3 and ChatGPT 4 are at different stages here: ChatGPT 4 already accepts images alongside text, while the initial Llama 3 release handles text only.

Llama 3’s Multimodal Vision

Meta intends to make Llama 3 multimodal in future releases, extending it beyond text to images and possibly other kinds of data. The current release, however, consists of text-only models. Meta's roadmap pairs multimodality with multilingual support, but it has not yet published how image understanding will be integrated.

ChatGPT 4’s Multimodal Reality

ChatGPT 4 already accepts image and text inputs, which sets it apart from models that process text alone. In practice, it can analyze a document that combines text with photographs, diagrams, or screenshots and then produce relevant text output. This puts ChatGPT 4 at the forefront of models for real-world tasks that require understanding both visual and textual information.
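As a minimal sketch of what a combined image-and-text prompt looks like at the API level, the helper below builds one Chat Completions message. The message's content mixes a text part with an image part, and the image is supplied as a base64 data URL. The content-part layout follows OpenAI's documented vision message format. The helper name and the stand-in image bytes are hypothetical. Which GPT-4 model names accept images should be checked against OpenAI's current model list.

```python
import base64


def image_and_text_message(question: str, image_bytes: bytes, mime: str = "image/png") -> dict:
    """Build one user message that pairs a text question with an inline image."""
    encoded = base64.b64encode(image_bytes).decode("ascii")
    return {
        "role": "user",
        "content": [
            {"type": "text", "text": question},
            {"type": "image_url", "image_url": {"url": f"data:{mime};base64,{encoded}"}},
        ],
    }


# Hypothetical stand-in bytes (just a PNG signature); in practice, read a real image file.
fake_png = b"\x89PNG\r\n\x1a\n"
message = image_and_text_message("What does this diagram show?", fake_png)
print(message["content"][1]["image_url"]["url"][:30])
```

The resulting dictionary goes into the messages list passed to client.chat.completions.create, together with a vision-capable GPT-4 model name.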

Llama 3 vs ChatGPT 4: Real-World Applications

For Llama 3

- Developer Tools: Llama 3’s focus on coding and reasoning could make it an ideal assistant for developers, helping with code generation, debugging, and providing insights on best coding practices.

- Educational Platforms: With its reasoning and instruction-following capabilities, Llama 3 could be used to create interactive educational platforms that adapt to a student’s learning pace and style.

- Content Creation: Llama 3’s creative writing and summarization skills could be employed in content creation tools for social media, blogs, or news outlets.

- Data Analysis: Businesses that need to extract and summarize insights from large volumes of text could use Llama 3's extraction and summarization skills. Inputs longer than its 8,192-token context must first be split into chunks.
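The initial Llama 3 models work with sequences of up to 8,192 tokens. Summarizing a large body of text therefore usually means splitting it into chunks, summarizing each chunk, and then combining the summaries. The sketch below shows only the splitting step, with overlapping chunks. It approximates tokens by whitespace-separated words, which is an assumption; a real implementation would count tokens with the model's own tokenizer. Because tokens usually outnumber words, the default word budget stays well below 8,192. The budget values are illustrative.

```python
def chunk_words(text: str, max_words: int = 4000, overlap: int = 150):
    """Split text into overlapping chunks of at most max_words words.

    Words are only a rough proxy for tokens, so the default leaves headroom below
    the context limit for instructions and the generated summary.
    """
    if overlap >= max_words:
        raise ValueError("overlap must be smaller than max_words")
    words = text.split()
    step = max_words - overlap
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + max_words]))
        if start + max_words >= len(words):
            break  # this chunk already reaches the end of the text
    return chunks


document = " ".join(f"w{i}" for i in range(25))
parts = chunk_words(document, max_words=10, overlap=2)
print(len(parts), parts[1].split()[0])
```

The overlap repeats a few words at each boundary. That way a sentence cut in half by one chunk still appears whole in the next.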

For GPT-4

- Professional Assessments: GPT-4 shows human-level performance on professional benchmarks such as a simulated bar exam. That suggests it could help create or assess professional qualification exams.

- Advanced Academic Research: GPT-4’s performance on academic benchmarks could be utilized in research environments to assist with literature reviews, hypothesis generation, and academic writing.

- Visual Task Solutions: Image input can be applied wherever visual interpretation is needed. Examples include interpreting satellite imagery and assisting with design and architecture by understanding visual elements. Medical imaging is sometimes suggested as well, but OpenAI has stated that GPT-4 with vision is not fit for performing medical functions.

- Programming and Code Review: OpenAI reports using GPT-4 internally for programming support. This suggests it could also help with code review, suggesting optimizations, and detecting potential bugs in development projects.

Llama 3 vs ChatGPT 4: LLM API Access

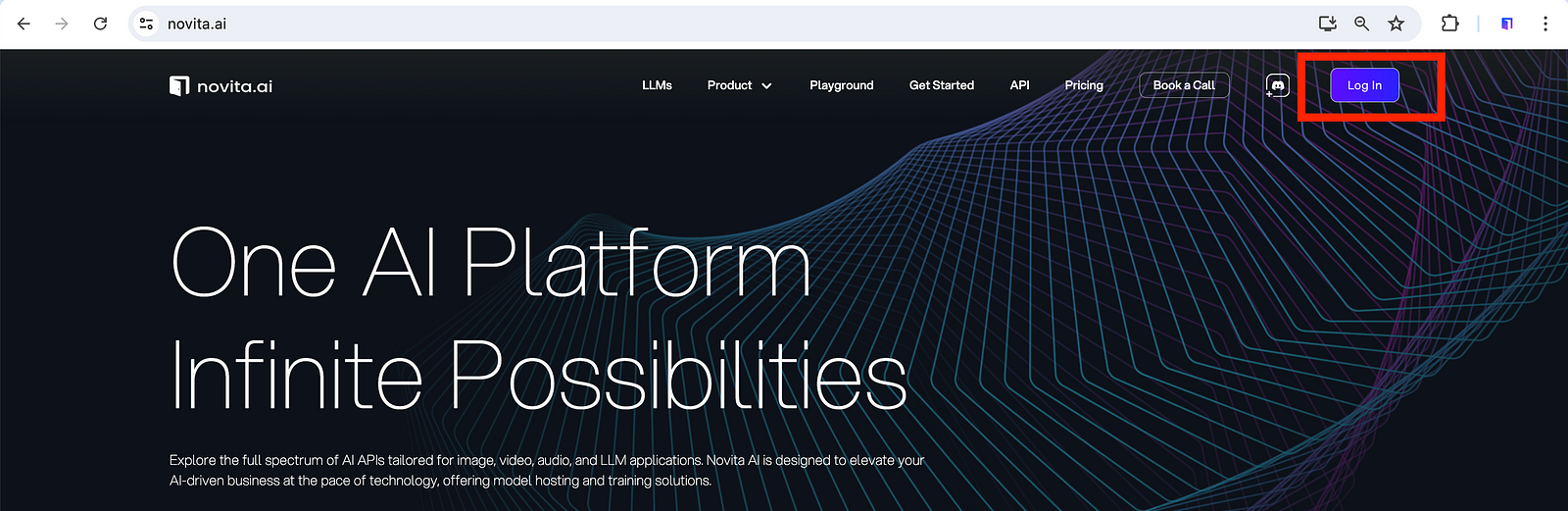

Accessing Llama 3 LLM API

Step 1: Create an Account

Visit Novita AI and click the "Log In" button in the top navigation bar. At present, we offer Google and GitHub login. After logging in, you receive $0.50 in free credits!

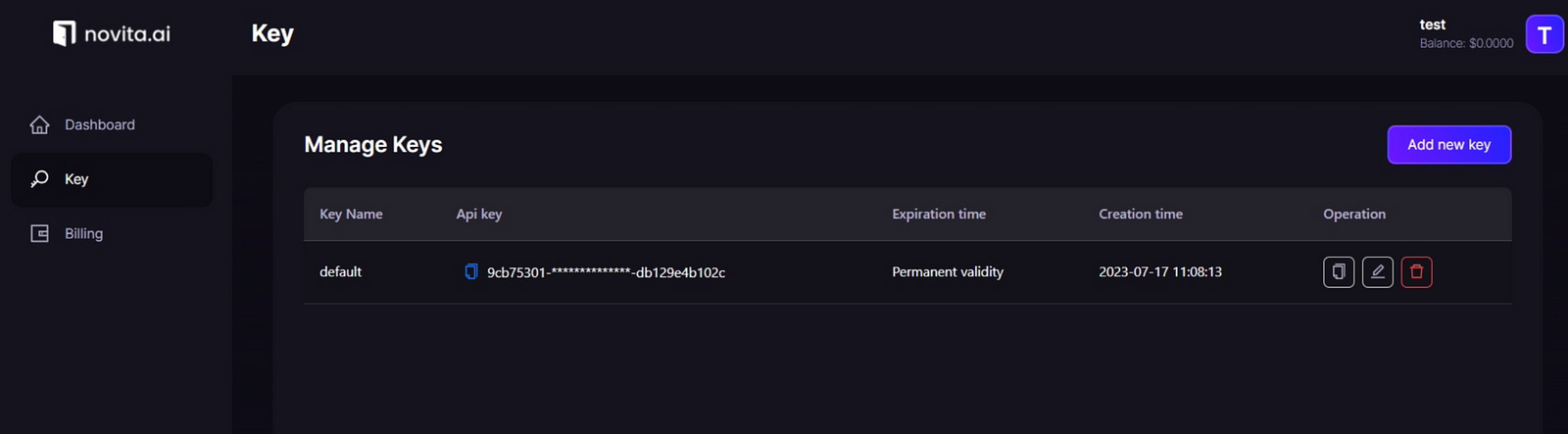

Step 2: Create an API key

API requests are authenticated with a Bearer token in the request header (for example, -H "Authorization: Bearer ***"). We'll provision a new API key for you.

You can create additional keys of your own with the "Add new key" button.
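The Bearer-token scheme is easiest to see without any SDK, by building the HTTP request by hand. This sketch uses only Python's standard library and does not send anything. It rests on two assumptions:

- The endpoint path is inferred from the OpenAI-compatible base URL used in the next step, because the OpenAI client appends /completions to its base URL.
- NOVITA_API_KEY is a hypothetical environment-variable name.

```python
import json
import os
import urllib.request

BASE_URL = "https://api.novita.ai/v3/openai"  # OpenAI-compatible base URL used in Step 3
# Hypothetical variable name; keeping the key in the environment keeps it out of source code.
api_key = os.environ.get("NOVITA_API_KEY", "<YOUR Novita AI API Key>")

payload = {
    "model": "meta-llama/llama-3-8b-instruct",
    "prompt": "A chat between a curious user and an artificial intelligence assistant",
    "max_tokens": 512,
}

request = urllib.request.Request(
    f"{BASE_URL}/completions",  # assumed path of the completions endpoint
    data=json.dumps(payload).encode("utf-8"),
    headers={
        "Authorization": f"Bearer {api_key}",  # the Bearer token header described above
        "Content-Type": "application/json",
    },
    method="POST",
)

print(request.full_url)
# Sending it would be urllib.request.urlopen(request), then parsing the JSON body.
```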

Step 3: Making an API Call

With just a few lines of code, you can make an API call and put Llama 3 and other powerful models to work:

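```python
from openai import OpenAI

client = OpenAI(
    base_url="https://api.novita.ai/v3/openai",
    # Get the Novita AI API Key by referring: https://novita.ai/get-started/Quick_Start.html#_3-create-an-api-key
    api_key="<YOUR Novita AI API Key>",
)

model = "meta-llama/llama-3-8b-instruct"
stream = True  # or False

completion_res = client.completions.create(
    model=model,
    prompt="A chat between a curious user and an artificial intelligence assistant",
    stream=stream,
    max_tokens=512,
)

if stream:
    # Streaming returns an iterator of partial completions; print text as it arrives.
    for chunk in completion_res:
        if chunk.choices:
            print(chunk.choices[0].text or "", end="", flush=True)
    print()
else:
    print(completion_res.choices[0].text)
```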

Accessing ChatGPT 4 LLM API

Step 1: Account Setup

First, create an OpenAI account or sign in. Next, go to the API keys page and click "Create new secret key". You can optionally give the key a name.

Step 2: Quickstart language selection

Choose the tool or language you want to start with: curl, Python, or Node.js. The steps below use Python.

Step 3: Setting up Python

To use the OpenAI Python library, you need Python 3.7.1 or newer. You can optionally set up a virtual environment. Then install the library by running this command in the terminal or command line:

```bash
pip install --upgrade openai
```

Step 4: Set up your API key

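Set up your API key for all projects by storing it in the OPENAI_API_KEY environment variable. On macOS or Linux, for example, you can add export OPENAI_API_KEY='your-api-key-here' to your shell profile. The main advantage is that the Python library detects the key automatically, so you never have to hard-code it.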

Step 5: Making an API request

Once Python is configured and your API key is set up, the final step is to send a request to the OpenAI API using the Python library. Create a file named openai-test.py using the terminal or an IDE.

Inside the file, copy and paste the example below:

```python
from openai import OpenAI

# With no arguments, the client reads the key from the OPENAI_API_KEY environment variable.
client = OpenAI()

completion = client.chat.completions.create(
    model="gpt-4",
    messages=[
        {"role": "system", "content": "You are a poetic assistant, skilled in explaining complex programming concepts with creative flair."},
        {"role": "user", "content": "Compose a poem that explains the concept of recursion in programming."},
    ],
)

# Print only the generated text rather than the whole message object.
print(completion.choices[0].message.content)
```

Llama 3 vs ChatGPT 4: LLM API Pricing Comparison

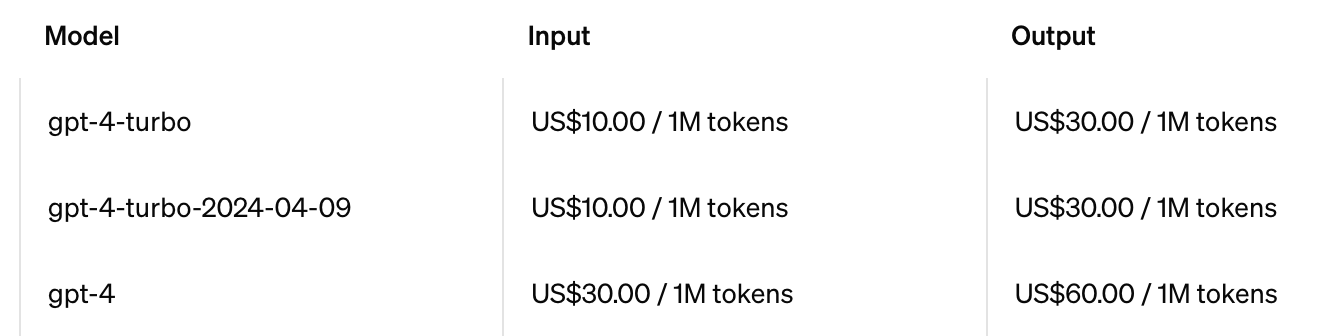

ChatGPT 4 LLM API Pricing

According to OpenAI's official pricing page, ChatGPT 4 (the gpt-4 API model) costs $30.00 per 1 million prompt tokens and $60.00 per 1 million completion tokens.

Llama 3 LLM API Pricing

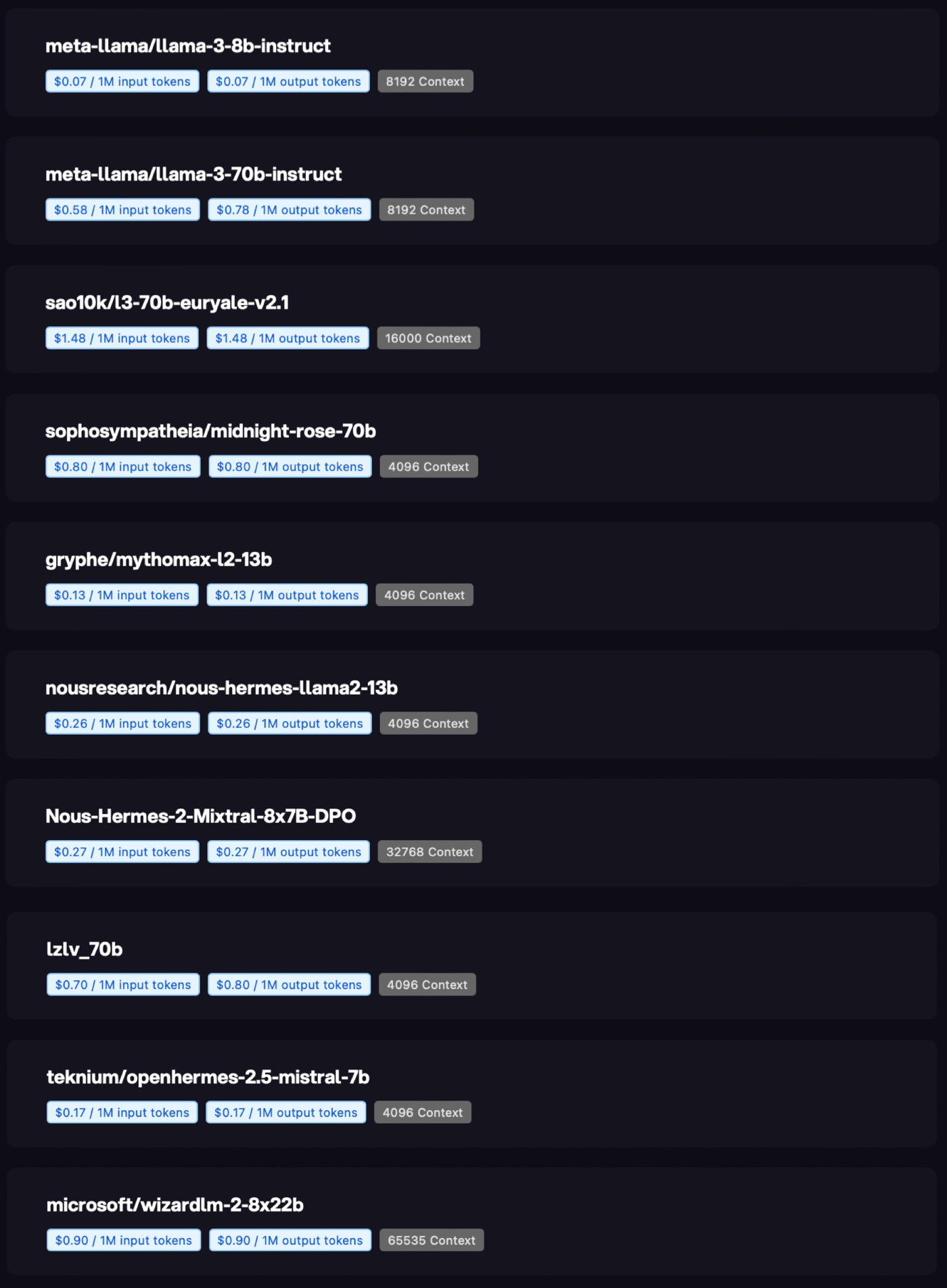

At Novita.ai, our pricing strategy is designed to align with our dedication to accessibility and innovation:

- We offer transparent and cost-effective pricing: For meta-llama/llama-3-8b-instruct, the rate is $0.07 per million tokens. For meta-llama/llama-3-70b-instruct, the rate is $0.78 per million tokens. There are no hidden fees or escalating costs.

- Volume discounts are available: We provide competitive discounts for users with high volumes, making large-scale deployments more affordable.

Explore our pricing policy for details on other available models.
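The sketch below puts the listed rates side by side by estimating the bill for a hypothetical monthly workload: 2 million prompt tokens and 0.5 million completion tokens. It uses the per-million-token prices quoted above. It also assumes that the single Novita rate applies to prompt and completion tokens alike. Prices change, so check the current pricing pages before relying on the figures.

```python
# USD per 1 million tokens, as quoted above: (prompt rate, completion rate).
PRICES = {
    "gpt-4": (30.00, 60.00),
    "meta-llama/llama-3-70b-instruct": (0.78, 0.78),  # assumes one rate for both
    "meta-llama/llama-3-8b-instruct": (0.07, 0.07),   # assumes one rate for both
}


def workload_cost(model: str, prompt_tokens: int, completion_tokens: int) -> float:
    """Dollar cost of a workload given token counts and per-million-token prices."""
    prompt_rate, completion_rate = PRICES[model]
    return (prompt_tokens * prompt_rate + completion_tokens * completion_rate) / 1_000_000


# Hypothetical monthly workload.
PROMPT_TOKENS, COMPLETION_TOKENS = 2_000_000, 500_000
for model in PRICES:
    print(f"{model}: ${workload_cost(model, PROMPT_TOKENS, COMPLETION_TOKENS):.2f}")
```

Under these assumptions, the same workload costs $90.00 on GPT-4 and about $1.95 on Llama 3 70B.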

Llama 3 vs ChatGPT 4: Future Developments

Llama 3: The Path Ahead

Multilingual and Multimodal Capabilities: Meta plans to make Llama 3 both multilingual and multimodal. Stronger support for languages other than English would open many opportunities for global applications. It would make Llama 3 a more useful tool for international businesses, translation services, and cross-cultural content creation.

Longer Context and Enhanced Reasoning: Meta also plans longer context windows, which would let Llama 3 process longer documents and datasets in a single pass. That capability is valuable for in-depth research, comprehensive data analysis, and narrative coherence in long-form content.

Performance Improvements: The continuous improvement in core capabilities such as reasoning and coding will make Llama 3 an even more formidable assistant for complex problem-solving and software development.

GPT-4: Charting New Territories

Advanced Multimodality: GPT-4’s current capabilities with text and image inputs are just the beginning. Future developments will likely enhance its ability to interpret and interact with a wider array of media types, possibly including video and 3D models.

Specialized Training and Fine-tuning: Given its strong results on professional and academic benchmarks, GPT-4 could be further trained or fine-tuned for specific industries. That could transform fields like law, medicine, and academia.

Internationalization and Accessibility: OpenAI tested GPT-4 on translated versions of the MMLU benchmark. In 24 of the 26 languages tested, GPT-4 outperformed GPT-3.5's English-language score. This is a promising sign for making cutting-edge AI more accessible to non-English-speaking populations.

Safety and Alignment: As GPT-4 continues to evolve, the focus on safety and alignment will remain paramount. Future iterations will likely include more robust mechanisms for content moderation, bias reduction, and ensuring ethical AI practices.

Conclusion

Llama 3, developed by Meta, focuses on text-based capabilities with plans to expand into multilingual and multimodal processing in the future. Its strengths lie in areas like developer tools, educational platforms, content creation, and data analysis, leveraging its strong coding, reasoning, and summarization skills.

On the other hand, ChatGPT 4 from OpenAI has already achieved remarkable multimodal capabilities, seamlessly processing both text and image inputs. This puts it at the forefront of handling complex, real-world tasks that require the understanding of visual and linguistic elements. ChatGPT 4’s performance on professional and academic benchmarks makes it a promising tool for applications in fields like professional assessments, advanced academic research, visual task solutions, and programming support.

As these models continue to evolve, we can anticipate further advancements in their core abilities, safety mechanisms, and specialized training to cater to diverse industries and use cases.

Novita AI is the all-in-one cloud platform that empowers your AI ambitions. With seamlessly integrated APIs, serverless computing, and GPU acceleration, we provide the cost-effective tools you need to rapidly build and scale your AI-driven business. Eliminate infrastructure headaches and get started for free — Novita AI makes your AI dreams a reality.