How to Use Llama 3 8B Instruct and Adjust Temperature for Optimal Results?

Key Highlights

- Llama 3 8B Instruct: A language model from Meta, optimized for instruction-following and generating human-like responses with 8 billion parameters.

- Key Upgrades: Enhanced with a 128K token vocabulary, Grouped-Query Attention (GQA), and a large context window of 8,192 tokens for handling complex prompts and longer conversations.

- Use Cases: Ideal for building chatbots, content creation, customer support systems, and educational tools that require high-quality, coherent text generation.

- Model Comparison: The 8B variant balances performance and efficiency, with significantly less training time compared to larger models like Llama 3 70B.

- Customization: Temperature settings (0.2–1.0) let users steer outputs from precise, predictable text at the low end to creative text at the high end, depending on the application.

- Getting Started: Accessible via the Novita AI LLM API for easy integration and API key management.

Introduction

Llama 3 8B Instruct, developed by Meta, is an advanced language model designed to follow instructions and generate human-like responses. With 8 billion parameters, it is well suited to applications like content creation, customer support, and educational tools. Through the Novita AI LLM API, developers can integrate Llama 3 8B Instruct into their systems and take advantage of adjustable temperature settings and an 8,192-token context window.

Explore Llama 3 8B Instruct

What is Llama 3 8B Instruct?

Llama 3 8B Instruct is a version of Meta’s Llama 3 model, designed to excel in following instructions and generating human-like responses. With 8 billion parameters, it is optimized for tasks such as answering questions, summarizing text, and handling more complex language tasks based on specific prompts, making it ideal for developers looking for powerful, versatile language models.

Key Features and Capabilities

Meta-Llama-3-8B-Instruct builds on the foundations laid by the earlier Llama and Llama 2 models, incorporating several key upgrades:

- A 128K token vocabulary for more efficient language encoding

- Grouped-Query Attention (GQA) across all model sizes

- An 8,192 token context window with cross-document masking for improved training and handling of larger contexts.
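To make the Grouped-Query Attention point concrete, here is a rough sketch of how query heads share key/value heads and what that means for cache memory at the full 8,192-token context. The layer count, head counts, head dimension, and 16-bit precision are assumptions taken from the publicly released 8B model configuration, not figures stated in this article, and the helper names are hypothetical.

```python
# Assumed Llama 3 8B attention configuration (from the public model config).
NUM_LAYERS = 32
NUM_QUERY_HEADS = 32
NUM_KV_HEADS = 8
HEAD_DIM = 128
BYTES_PER_VALUE = 2  # assumes 16-bit values in the cache


def kv_head_for_query_head(query_head: int) -> int:
    """Return the index of the key/value head shared by this query head."""
    group_size = NUM_QUERY_HEADS // NUM_KV_HEADS
    return query_head // group_size


def kv_cache_bytes(num_tokens: int, num_kv_heads: int) -> int:
    """Bytes needed to cache keys and values for num_tokens tokens."""
    # Factor of 2 covers one key tensor and one value tensor per layer.
    per_token = 2 * NUM_LAYERS * num_kv_heads * HEAD_DIM * BYTES_PER_VALUE
    return per_token * num_tokens


if __name__ == "__main__":
    context = 8192
    gqa = kv_cache_bytes(context, NUM_KV_HEADS)
    full = kv_cache_bytes(context, NUM_QUERY_HEADS)
    print(f"GQA cache: {gqa / 2**30:.1f} GiB")
    print(f"Cache with one KV head per query head: {full / 2**30:.1f} GiB")
```

Under these assumptions, four query heads share each key/value head, so the cache for a full context is a quarter of what it would be with one key/value head per query head.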

What are the use cases of Llama 3 8B Instruct?

- Chatbots: Used to build chatbots that can understand user instructions and provide natural language responses.

- Content Creation: Assists in generating articles, blog posts, or social media content, enhancing both the efficiency and quality of content creation.

- Customer Support: Integrated into automated response systems or customer service chat tools to quickly address common customer questions and requests.

- Educational Tools: Applied in educational platforms to create interactive learning materials or simulate conversations, helping language learners practice dialogue skills.

These use cases represent some of the most common and practical applications of the Llama 3 8B Instruct model, especially in areas where understanding and generating natural language text is essential.

Comparison with Other Llama 3 Models

| | Llama 3 8B | Llama 3 70B |
|---|---|---|
| Parameters | 8B | 70B |
| Context length (tokens) | 8K | 8K |
| Training tokens | 15T+ | 15T+ |
| Training time (GPU hours) | 1.3M | 6.4M |

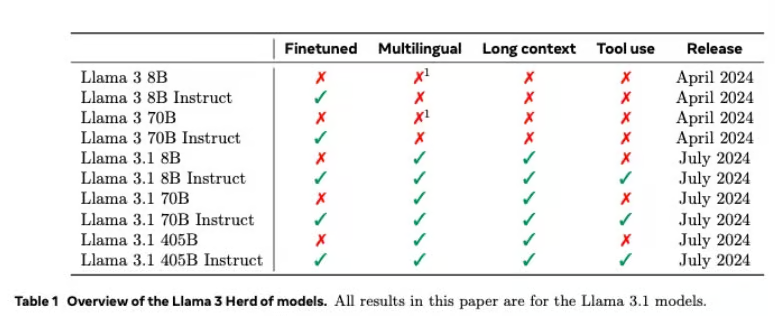

Llama 3 vs 3.1

Below is a straightforward comparison chart of the Llama 3 and Llama 3.1 model families.

How to Use Llama 3 Instruct?

Llama 3 8B Instruct is a powerful tool for a wide range of tasks, from content generation to conversational AI. To get the best results, it’s important to understand how to craft the right prompts and adjust settings like temperature. Here’s how to make the most of it.

Llama 3 8B Instruct Prompt

The prompt is the starting point for Llama 3 8B Instruct’s response. It’s essential to provide a clear, well-defined prompt to guide the model in generating the most relevant and accurate output. A good prompt should be specific, with clear instructions on what you want the model to do. For example:

- Example: “Write a brief summary of the latest trends in AI technology.”

By specifying the task in the prompt, you can guide the model towards generating text that aligns with your needs. The more context and detail you provide in the prompt, the more tailored and precise the response will be.
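As a rough illustration of what a specific prompt looks like in code, the sketch below packs a task and optional context into chat messages and renders them with the special tokens Meta documents for Llama 3 Instruct. The helper names and the system message are hypothetical, and hosted chat APIs typically apply this template for you, so the rendering step is mainly useful for seeing what the model receives.

```python
def build_messages(task, context=""):
    """Combine a clear instruction with optional supporting context."""
    system = "You are a concise technical writer."  # illustrative system message
    user = task if not context else f"{task}\n\nContext:\n{context}"
    return [
        {"role": "system", "content": system},
        {"role": "user", "content": user},
    ]


def render_llama3_prompt(messages):
    """Render messages using the Llama 3 Instruct header and end-of-turn tokens."""
    parts = ["<|begin_of_text|>"]
    for message in messages:
        parts.append(
            f"<|start_header_id|>{message['role']}<|end_header_id|>\n\n"
            f"{message['content']}<|eot_id|>"
        )
    # Leave an open assistant header so the model writes the reply.
    parts.append("<|start_header_id|>assistant<|end_header_id|>\n\n")
    return "".join(parts)


if __name__ == "__main__":
    messages = build_messages(
        "Write a brief summary of the latest trends in AI technology.",
        context="Audience: software developers. Length: about 100 words.",
    )
    print(render_llama3_prompt(messages))
```

Adding audience and length to the context, as in the usage example, is one simple way to give the model the extra detail that makes its answer more tailored.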

Llama 3 8B Instruct Temperature

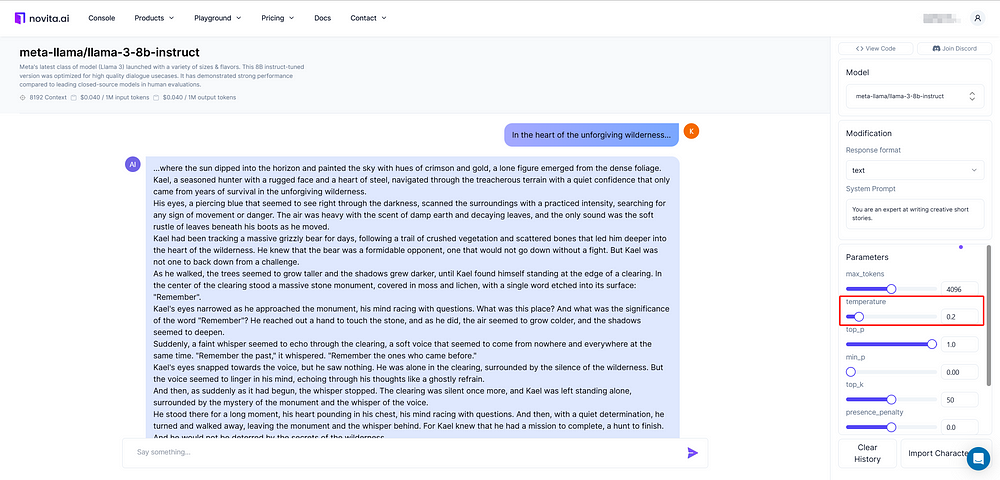

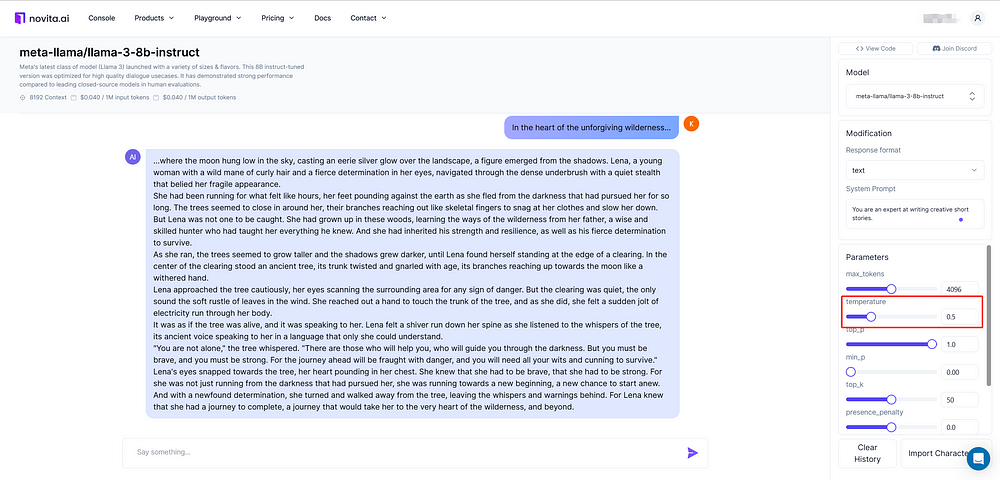

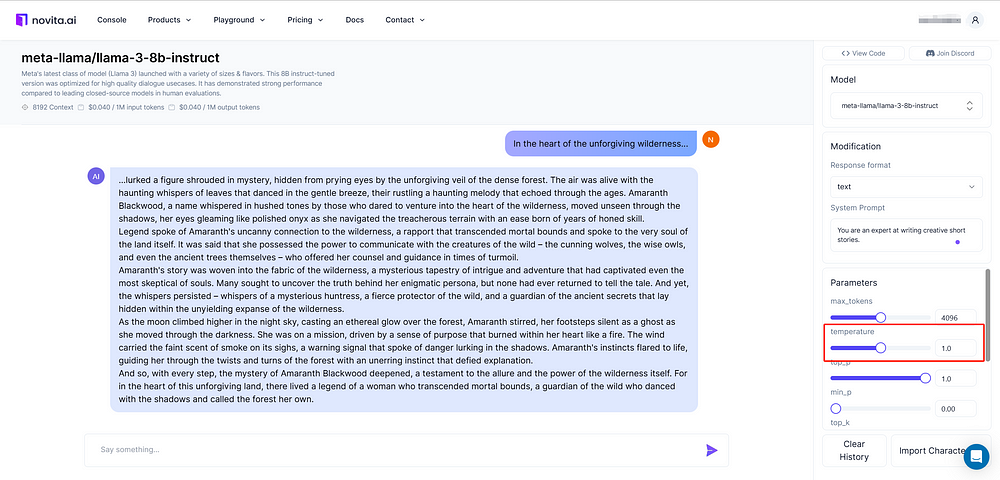

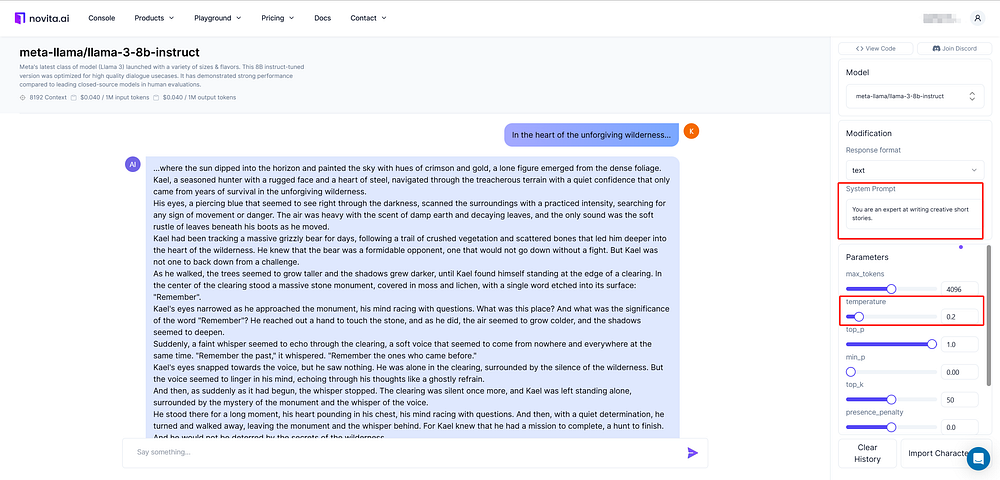

Let’s walk through an example of using Llama 3 8B with different temperature settings. Suppose we want the model to generate a creative short story about survival in the wilderness. Here’s the sample prompt: “In the heart of the unforgiving wilderness…”

- Low Temperature (0.2)

At this low temperature, the story is straightforward, cohesive, and detail-focused, producing a logical narrative. However, it lacks significant imaginative elements and sticks to predictable descriptions without unexpected twists.

- Medium Temperature (0.5)

With a medium temperature, the narrative remains coherent and introduces a bit more flair. The setting becomes more atmospheric, and the characters are described with additional depth, showing a balance between creativity and logical flow. This setting often works well for applications requiring engaging but still grounded storytelling.

- High Temperature (1.0)

At high temperature, the model produces a more imaginative, almost poetic output. Here, unexpected phrases like “haunting melody that echoed through the ages” and vivid character descriptions create an intense, mystical atmosphere. This setting is ideal for creative writing, poetry, or scenarios where bold, unexpected language is desired.
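The shift from predictable to imaginative output follows from how temperature is usually applied: the model's raw scores (logits) are divided by the temperature before being turned into probabilities. The sketch below shows that standard softmax-with-temperature calculation on three made-up logits; the numbers are illustrative and are not taken from Llama 3 itself.

```python
import math


def softmax_with_temperature(logits, temperature):
    """Convert logits to probabilities after dividing by the temperature."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [value / temperature for value in logits]
    # Subtract the maximum before exponentiating for numerical stability.
    peak = max(scaled)
    exps = [math.exp(value - peak) for value in scaled]
    total = sum(exps)
    return [value / total for value in exps]


if __name__ == "__main__":
    toy_logits = [2.0, 1.0, 0.5]  # hypothetical scores for three candidate tokens
    for temperature in (0.2, 0.5, 1.0):
        probs = softmax_with_temperature(toy_logits, temperature)
        print(temperature, [round(p, 3) for p in probs])
```

At 0.2 almost all of the probability lands on the top-scoring token, which is why low-temperature output reads as predictable. At 1.0 the lower-scoring tokens keep a meaningful share, so less expected words get sampled more often.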

Choosing the Right Temperature for Your Needs

The ideal temperature setting depends on your specific use case:

- Creative Content: For highly creative tasks, like storytelling, poetry, or brainstorming, a higher temperature (around 0.7–1.0) encourages the model to use more imaginative language and explore unexpected ideas.

- Technical or Precise Writing: When clarity and precision are key, such as in technical documentation or instructional content, a lower temperature (0.1–0.3) helps the model stay focused and avoids unnecessary elaboration.
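One lightweight way to apply these ranges in an application is a small preset table. The sketch below is only a suggestion: the preset names and the helper are hypothetical, and the values are single points picked from the ranges and walkthrough settings described in this article.

```python
# Hypothetical presets drawn from the ranges discussed in this article.
TEMPERATURE_PRESETS = {
    "precise": 0.2,   # inside the 0.1-0.3 range for technical writing
    "balanced": 0.5,  # the medium setting from the walkthrough
    "creative": 0.9,  # inside the 0.7-1.0 range for creative content
}


def pick_temperature(use_case):
    """Return the preset temperature for a named use case."""
    try:
        return TEMPERATURE_PRESETS[use_case]
    except KeyError:
        options = ", ".join(sorted(TEMPERATURE_PRESETS))
        raise ValueError(f"unknown use case {use_case!r}; choose one of: {options}") from None


if __name__ == "__main__":
    print(pick_temperature("precise"))
    print(pick_temperature("creative"))
```

Treat the presets as starting points and adjust them after comparing real outputs for your own prompts.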

How to Get Started with Llama 3.1 8B Instruct?

Having explored the features, capabilities, and use cases of the Llama 3 8B Instruct model, it’s time to see how you can get started with it. Whether you want to integrate Llama 3.1 8B Instruct into your applications or simply test it yourself, Novita AI provides a straightforward way to access and customize the model. Here’s a step-by-step guide to help you get started.

Try Llama 3.1 8B Instruct on Novita AI LLM API

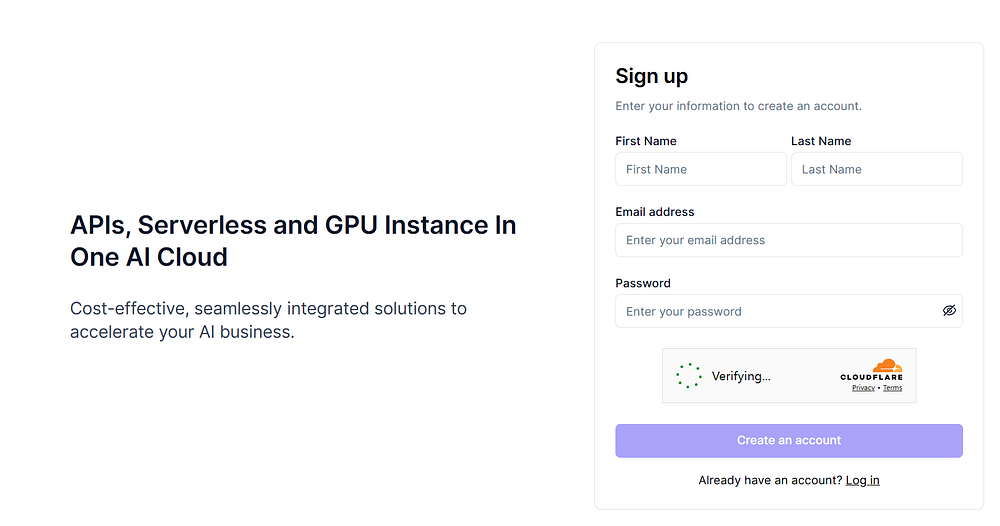

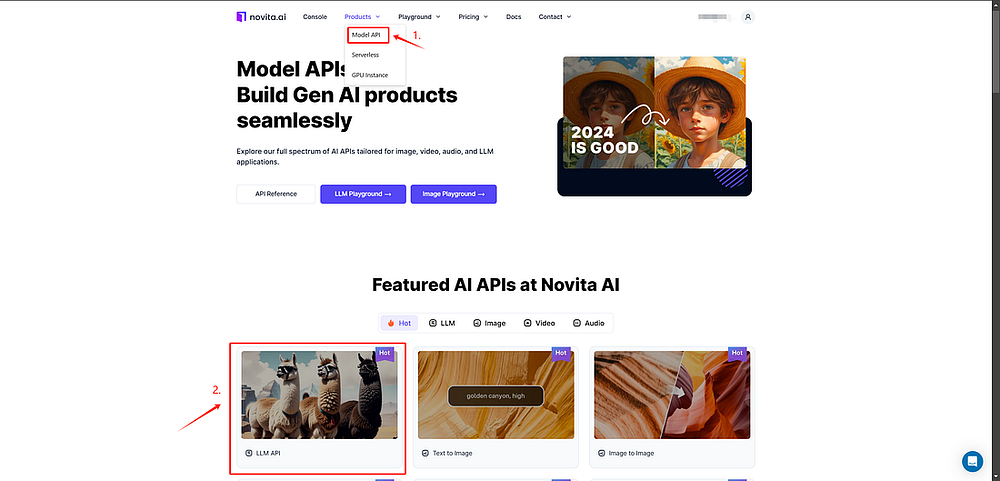

Step 1: Visit Novita AI and sign in.

You can log in with your Google or GitHub account. A new account will be created when you log in for the first time.

Alternatively, you can register using your email address.

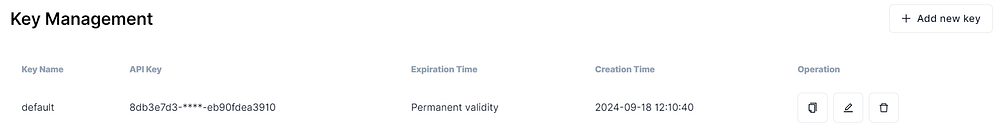

Step 2: API Key Management

Novita AI uses Bearer authentication to validate API access, requiring an API Key in the request header, such as “Authorization: Bearer {API Key}”.

To manage your API keys, go to “Key Management” in the settings.

A default API key is automatically generated upon your first login. To create more keys, simply click “+ Add New Key.”
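As a minimal sketch of the Bearer scheme described above, the helper below builds the request headers from an API key. Reading the key from an environment variable named `NOVITA_API_KEY` is an assumption made for this example, not a platform requirement, and the function name is hypothetical.

```python
import os
from typing import Dict, Optional


def auth_headers(api_key: Optional[str] = None) -> Dict[str, str]:
    """Build headers carrying the API key as a Bearer token."""
    # NOVITA_API_KEY is a hypothetical variable name chosen for this sketch.
    key = api_key or os.environ.get("NOVITA_API_KEY")
    if not key:
        raise RuntimeError("No API key provided; pass one in or set NOVITA_API_KEY.")
    return {
        "Authorization": f"Bearer {key}",
        "Content-Type": "application/json",
    }


if __name__ == "__main__":
    print(auth_headers("your-api-key-here"))
```

Keeping the key in an environment variable rather than in source code makes it easier to rotate keys from the Key Management page without touching the application.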

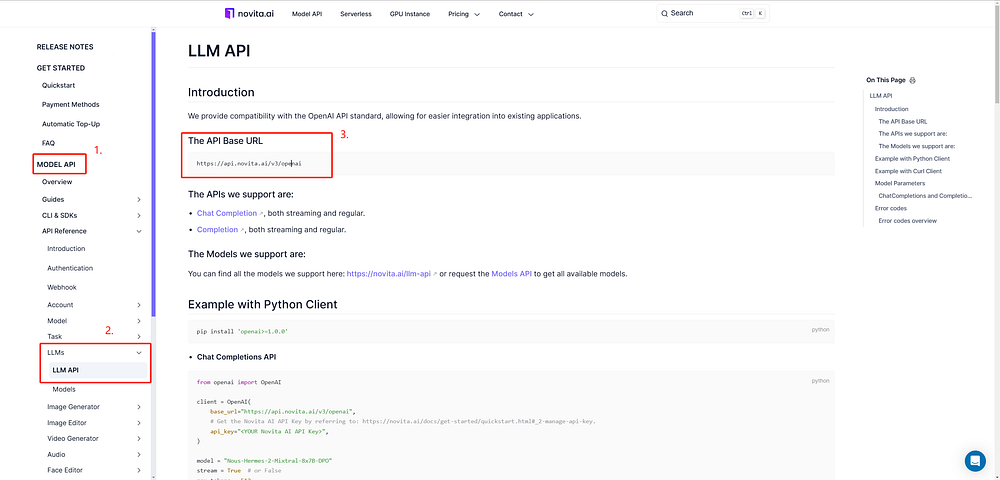

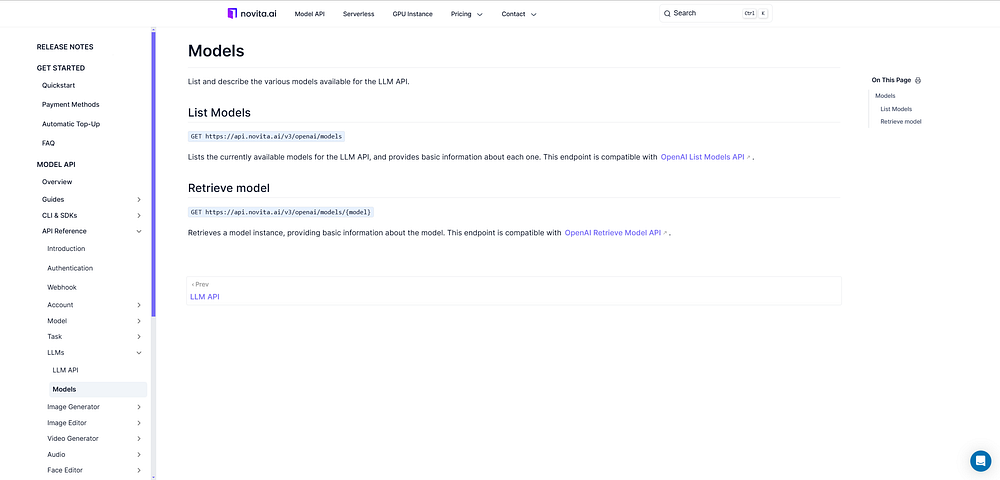

Step 3: LLM API Documentation

To access the LLM API documentation, click on “Docs” in the navigation bar, select “Model API,” and find the LLM API section to view the API Base URL.

Step 4: Select a Model

Novita AI offers a range of Model APIs, including Llama, Mistral, MythoMax, and more. To view the full list of available models, you can access the Novita AI LLM Models List.

In this case, choose the Llama 3 8B Instruct model.

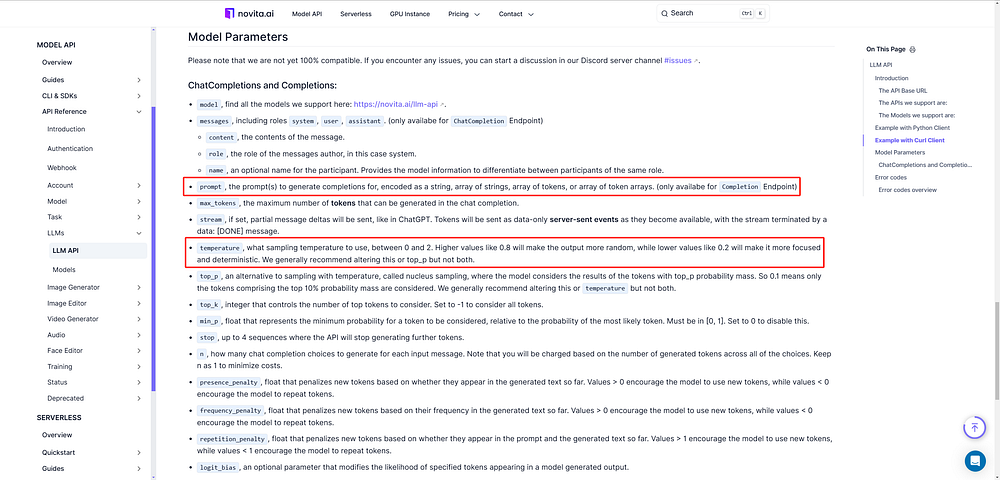

Step 5: View the Supported Parameters for Our Models

Novita AI models offer a variety of parameters, each with specific requirements and limitations. You can review the details for each parameter. Additionally, the prompt and temperature parameters can be customized to better suit your needs.

By following the steps above, you’ll be able to easily use the Llama 3 8B Instruct model on the Novita AI LLM API.
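Put together, the steps above might look like the following sketch, which uses only the Python standard library. The base URL, the `/chat/completions` path, the model identifier, and the response shape are all assumptions based on an OpenAI-compatible chat API; confirm each of them against the LLM API documentation and the models list before relying on this.

```python
import json
import urllib.request

# Assumed values; check the Novita AI docs for the real base URL and model ID.
BASE_URL = "https://api.novita.ai/v3/openai"
MODEL_ID = "meta-llama/llama-3-8b-instruct"


def build_chat_request(api_key, prompt, temperature=0.5, max_tokens=512):
    """Prepare a POST request carrying the prompt and temperature."""
    payload = {
        "model": MODEL_ID,
        "messages": [{"role": "user", "content": prompt}],
        "temperature": temperature,
        "max_tokens": max_tokens,
    }
    return urllib.request.Request(
        url=f"{BASE_URL}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


def send(request):
    """Send the request and return the generated text (assumed response shape)."""
    with urllib.request.urlopen(request, timeout=60) as response:
        body = json.load(response)
    return body["choices"][0]["message"]["content"]


if __name__ == "__main__":
    request = build_chat_request(
        "your-api-key-here",
        "Write a brief summary of the latest trends in AI technology.",
        temperature=0.2,
    )
    # Uncomment once a real key and the documented URL are in place:
    # print(send(request))
    print(request.full_url)
```

Building the request separately from sending it makes it easy to inspect the payload, and to rerun the same prompt at several temperatures by changing a single argument.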

Try Llama 3.1 8B Instruct on Novita AI LLM Demo

Step 1: Access the Novita AI LLM Demo

You can quickly test the Llama 3 8B Instruct model in the Novita AI LLM Demo.

Step 2: Customize prompt and temperature for tailored output.

After selecting the Llama 3 8B Instruct model, you can adjust the prompt and temperature parameters to get outputs that better align with your specific instructions.

Start exploring the Llama models on Novita AI today!

Conclusion

Llama 3 8B Instruct, available through Novita AI’s LLM API, offers a flexible solution for developers looking to enhance their AI-driven applications. With customizable parameters and support for complex tasks, this model enables a wide range of use cases, from chatbots to content generation. By leveraging the Novita AI platform, users can quickly access and integrate this powerful model into their workflows, optimizing both performance and efficiency.

Frequently Asked Questions

How accurate is Llama 3 8B?

Llama 3 8B stands out for its accuracy and cost efficiency. The advantage is clearest when you compare its total cost of ownership (TCO) with that of previous-generation models, such as Llama 2 70B, at a given accuracy budget.

What are the parameters for Llama 3 generation?

Meta Llama 3 comes in two parameter sizes, 8B and 70B, each with an 8K (8,192-token) context length. Both support a broad range of use cases, with improvements in reasoning, code generation, and instruction following.

How fast is Llama 3 8B?

Llama 3 8B is faster than average, with an output speed of 119.9 tokens per second. Its latency is also lower than average: it takes 0.32 s to return the first token (time to first token, TTFT).
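For a rough sense of what these figures mean in practice, total response time can be approximated as the time to first token plus the output length divided by the output speed. The sketch below assumes the speed stays constant for the whole response and reuses the two numbers quoted above, which will vary by provider and load; the function name is hypothetical.

```python
TTFT_SECONDS = 0.32        # time to first token quoted above
TOKENS_PER_SECOND = 119.9  # output speed quoted above


def estimated_response_seconds(output_tokens):
    """Approximate total time: first-token latency plus steady-state generation."""
    return TTFT_SECONDS + output_tokens / TOKENS_PER_SECOND


if __name__ == "__main__":
    for tokens in (100, 500, 1000):
        print(tokens, round(estimated_response_seconds(tokens), 2))
```

By this estimate, a 500-token reply would take roughly 4.5 seconds end to end.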

Is Llama 3 better than GPT-4?

If you prioritize accuracy and efficiency in coding tasks, Llama 3 might be the better choice.

Originally published at Novita AI
