How Do GPUs Boost DL, ML and AI?

Discover how GPUs accelerate deep learning, machine learning, and artificial intelligence, and explore GPUs by renting them in a GPU cloud.

Key Highlights

- Deep learning is a subset of machine learning, which in turn is a core branch of artificial intelligence.

- Traditional CPUs are less suited to deep learning because they have relatively few cores (at most a few hundred even on high-end server chips, versus thousands on a GPU), each optimized for fast sequential processing.

- GPUs, by contrast, run thousands of simple operations in parallel, which suits the matrix and vector math at the core of deep learning.

- You can rent GPUs on demand through Novita AI GPU Instance.

Introduction

Deep learning is a subset of machine learning, which in turn is a core branch of artificial intelligence. It uses multi-layer neural networks to mimic, loosely, the complex decision-making capabilities of the human brain.

Traditional CPUs are less suited to deep learning because they have relatively few cores (at most a few hundred, even on high-end server chips), each optimized for sequential processing. GPUs, by contrast, can run massive numbers of computations simultaneously. This makes them well suited to the matrix and vector operations that dominate deep learning. You can try this yourself with Novita AI GPU Instance.

Overview of DL, ML and AI

Artificial intelligence is a broad concept that encompasses many technologies and methods for achieving intelligent behavior. Machine learning is an important branch of AI that enables computers to learn and improve from data through algorithms. Deep learning is an advanced form of machine learning that uses deep neural network models to handle complex nonlinear problems.

Together they form a nested hierarchy, from broad to specific: AI contains ML, and ML contains DL.

Artificial Intelligence (AI):

It is a broad field aimed at enabling computer systems to perform tasks that typically require human intelligence, such as visual perception, speech recognition, natural language processing, decision-making, and more.

Machine Learning (ML):

It is a subset of artificial intelligence that enables computer systems to learn and improve their performance from data without explicit programming. Machine learning algorithms automatically refine their predictive or decision-making capabilities by identifying patterns in the data.
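To make "learning from data" concrete, here is a minimal sketch in plain Python with no ML library assumed. Gradient descent fits a line y ≈ w*x + b to a few points instead of having the rule programmed in. The function name `fit_line`, the toy data and the learning rate are illustrative choices, not part of any framework.

```python
def fit_line(xs, ys, lr=0.05, epochs=2000):
    """Learn w and b for y ~ w*x + b by gradient descent on mean squared error."""
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(epochs):
        # Prediction errors for the current parameters.
        errors = [(w * x + b) - y for x, y in zip(xs, ys)]
        # Gradients of the mean squared error with respect to w and b.
        grad_w = 2.0 / n * sum(e * x for e, x in zip(errors, xs))
        grad_b = 2.0 / n * sum(errors)
        # Step against the gradient to reduce the error.
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b

xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [1.0, 3.0, 5.0, 7.0, 9.0]  # toy data generated by y = 2x + 1
w, b = fit_line(xs, ys)
print(f"w={w:.3f}, b={b:.3f}")  # prints w=2.000, b=1.000
```

Each training step repeats the same arithmetic over the whole dataset. At real scale, this repetition is the work that GPUs parallelize.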

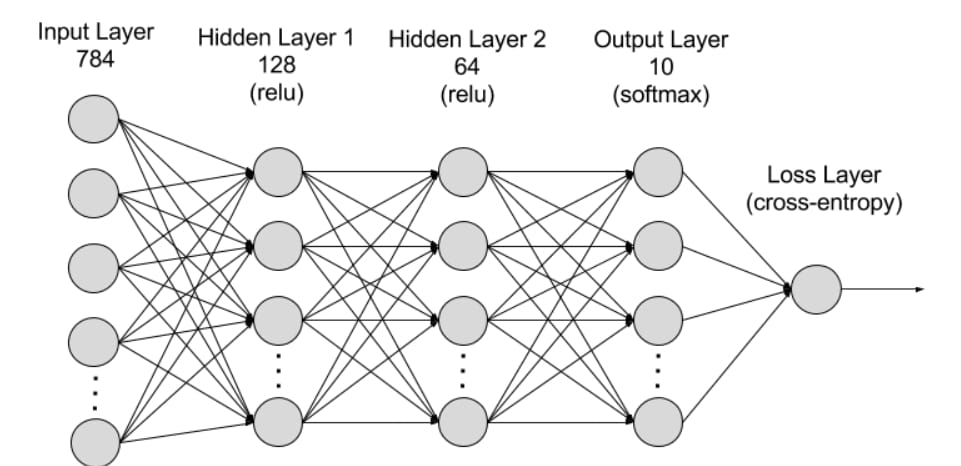

Deep Learning (DL):

It is a subset of machine learning that utilizes Deep Neural Networks (DNNs), neural networks with multiple hidden layers, to mimic the learning process of the human brain. Deep learning excels at processing complex data representations such as images, sounds, and texts, and has achieved remarkable successes in various domains including computer vision, natural language processing, and reinforcement learning.
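A deep network's forward pass is mostly repeated multiply-adds. The pure-Python sketch below uses hypothetical layer sizes and random weights. It runs an input through two hidden layers with ReLU activations and counts the multiply-adds involved.

```python
import random

def dense(inputs, weights, biases, activation=True):
    """One fully connected layer: out[j] = sum_i inputs[i] * weights[i][j] + biases[j]."""
    outputs = []
    for j in range(len(biases)):
        total = biases[j]
        for i, x in enumerate(inputs):
            total += x * weights[i][j]
        # ReLU on hidden layers, raw values on the output layer.
        outputs.append(max(0.0, total) if activation else total)
    return outputs

def make_layer(n_in, n_out, rng):
    """Random weights in [-0.5, 0.5] and zero biases (illustrative initialization)."""
    weights = [[rng.uniform(-0.5, 0.5) for _ in range(n_out)] for _ in range(n_in)]
    biases = [0.0] * n_out
    return weights, biases

rng = random.Random(0)
sizes = [4, 8, 8, 3]  # hypothetical: input, two hidden layers, output
layers = [make_layer(a, b, rng) for a, b in zip(sizes, sizes[1:])]

x = [0.5, -1.0, 2.0, 0.1]
for k, (W, bias) in enumerate(layers):
    is_last = k == len(layers) - 1
    x = dense(x, W, bias, activation=not is_last)

mults = sum(a * b for a, b in zip(sizes, sizes[1:]))
print(len(x), mults)  # prints 3 120 (4*8 + 8*8 + 8*3 multiply-adds)
```

Real models have millions or billions of these weights, which is why the work is handed to GPUs.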

How Are GPUs Used in Deep Learning and AI?

GPUs significantly accelerate the training and inference processes of deep learning models, enabling faster development and deployment of AI applications.

Why GPUs and Not CPUs?

AI applications, especially those based on deep learning, require the processing of large datasets and the execution of complex mathematical operations. The parallel processing capabilities of GPUs make them ideal for these tasks.

The CPU is the core processing unit of a computer, adept at performing complex logical operations and control tasks.

However, CPUs are comparatively inefficient at large-scale parallel workloads such as the matrix multiplications and convolutions in deep learning. A CPU is designed mainly to execute instructions sequentially, not to process a large number of simple tasks in parallel.
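Matrix multiplication parallelizes well because each entry of the output is an independent dot product of one row and one column. The sketch below is plain Python and illustrative only. It computes the same product once sequentially and once by handing every output entry to a thread pool. In CPython the thread version is not faster, because of the global interpreter lock. The point is that no entry depends on another, which is what lets a GPU compute thousands of them at once.

```python
from concurrent.futures import ThreadPoolExecutor

def dot(row, col):
    """Dot product of one row of A with one column of B."""
    return sum(a * b for a, b in zip(row, col))

def matmul_sequential(A, B):
    """Compute A @ B one output entry at a time, in order."""
    cols = list(zip(*B))  # columns of B
    return [[dot(row, col) for col in cols] for row in A]

def matmul_independent(A, B, workers=4):
    """Compute A @ B by submitting every output entry as a separate, independent task."""
    cols = list(zip(*B))
    tasks = [(i, j) for i in range(len(A)) for j in range(len(cols))]
    with ThreadPoolExecutor(max_workers=workers) as pool:
        # pool.map preserves task order, so results can be reshaped row by row.
        values = list(pool.map(lambda t: dot(A[t[0]], cols[t[1]]), tasks))
    n_cols = len(cols)
    return [values[i * n_cols:(i + 1) * n_cols] for i in range(len(A))]

A = [[1, 2], [3, 4]]
B = [[5, 6], [7, 8]]
print(matmul_sequential(A, B))   # prints [[19, 22], [43, 50]]
print(matmul_independent(A, B))  # same result
```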

GPU Features That Enhance DL, ML and AI Workflows

GPUs (Graphics Processing Units) have several features that significantly enhance efficiency and performance:

- Parallel Processing Capability: GPUs can handle a large number of computational tasks simultaneously, making them well-suited for the matrix and vector operations common in neural network training.

- High Memory Bandwidth: GPUs typically have higher memory bandwidth than CPUs, allowing for rapid data reading and writing, which is crucial for handling large datasets and complex models.

- Floating Point Performance: Modern GPUs support efficient floating-point operations, particularly FP16 (half-precision floating-point), which can speed up training and reduce memory usage.

- Dedicated Hardware Acceleration: Many GPUs include specialized hardware units (such as Tensor Cores) that accelerate computations specific to deep learning, further enhancing performance.

- Scalability: Multiple GPUs can work in parallel, employing data parallelism or model parallelism to further increase training speed.

- Support for Deep Learning Frameworks: Many deep learning frameworks (like TensorFlow and PyTorch) are optimized for GPUs, providing a rich set of libraries and tools that simplify GPU usage and management.

- Efficient Batch Processing: GPUs can process a whole batch of samples at once, which keeps the hardware fully utilized and shortens training time.
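The FP16 point above is easy to quantify: FP32 stores each value in 4 bytes and FP16 stores it in 2. Here is a minimal sketch that assumes a hypothetical 7-billion-parameter model and counts only the weights. Gradients, optimizer state and activations add more memory during training.

```python
BYTES_PER_VALUE = {"fp32": 4, "fp16": 2}

def weight_memory_gb(num_params, dtype):
    """Memory needed just to store the weights, in GB (1 GB = 1e9 bytes)."""
    return num_params * BYTES_PER_VALUE[dtype] / 1e9

params = 7_000_000_000  # hypothetical 7-billion-parameter model
print(weight_memory_gb(params, "fp32"))  # prints 28.0
print(weight_memory_gb(params, "fp16"))  # prints 14.0
```

Halving the bytes per value halves the weight memory, which is why FP16 lets larger models or batches fit on the same GPU.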

Real-World GPU-Accelerated Applications

GPU-Accelerated Applications in AI

- Image Recognition: In image recognition, deep learning models like Convolutional Neural Networks (CNNs) are used to identify and classify objects within images. GPUs accelerate the training of these models by efficiently handling the vast number of computations required for processing large image datasets.

- Speech Recognition: Speech recognition systems use deep learning models to process and analyze speech signals, converting them into text or instructions. Similar to image recognition, models such as Recurrent Neural Networks (RNNs) benefit from GPU acceleration, allowing for real-time speech-to-text conversion.

- Natural Language Processing (NLP): Applications such as machine translation, sentiment analysis, and text generation rely on models like Transformers and Bidirectional Encoder Representations from Transformers (BERT). These models, due to their complex architecture, demand high computational power, which GPUs provide, thereby reducing training time from weeks to days.

- Autonomous Vehicles: By analyzing and processing a large amount of image and sensor data, deep learning algorithms can help vehicles achieve autonomous navigation and intelligent decision-making. GPUs are crucial in processing the massive amount of data generated by the vehicle's sensors in real-time, ensuring quick and accurate decision-making.
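To see why image models need so much compute, count the multiply-accumulate operations (MACs) in a single convolution layer. Every output pixel of every output channel needs c_in * k * k of them. The layer shape below is a hypothetical example, not taken from a specific model.

```python
def conv2d_macs(h_out, w_out, c_in, c_out, k):
    """MACs for one 2D convolution layer on a single image with a square k x k kernel."""
    return h_out * w_out * c_out * c_in * k * k

# Hypothetical first layer: 224x224 RGB input, 64 filters of size 3x3,
# with padding chosen so the output stays 224x224.
macs = conv2d_macs(224, 224, 3, 64, 3)
print(macs)  # prints 86704128
```

A batch of 256 images gives roughly 22 billion MACs for this one layer in a single forward pass. All of them are independent of each other, so they can run in parallel on a GPU.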

GPU-Accelerated Applications in ML

- Pattern Recognition: GPUs aid in the complex pattern recognition tasks involved in identifying fraudulent transactions, which often require the analysis of subtle and complex patterns in large datasets.

- Climate Modelling: GPUs are used in climate modelling, helping scientists run complex simulations more rapidly. This speed is crucial for studying climate change scenarios and making timely predictions.

- Molecular Dynamics: In drug discovery and molecular biology, GPUs facilitate the simulation of molecular dynamics, allowing for faster and more accurate modelling of molecular interactions.

- Real-time Data Processing: GPUs are essential in processing vast amounts of data from sensors in real time, a critical requirement for autonomous driving systems.

- Computer Vision and Decision Making: Tasks like image recognition, object detection, and decision-making in autonomous vehicles heavily rely on deep learning models, which are efficiently trained and run on GPUs.

- Language Models Training: Training large language models, like those used in translation services and voice assistants, requires significant computational power, readily provided by GPUs.

Future Directions

As the usage of GPUs in artificial intelligence (AI) and machine learning (ML) workloads increases, we can expect to see the following exciting trends:

- Higher Computational Efficiency:

GPUs can process large amounts of data in parallel. With advancements in technology, future GPUs will have stronger computational capabilities and higher energy efficiency, enabling them to handle more complex models and larger datasets.

- Wider Adoption of Edge Computing:

As edge devices (such as IoT devices) increasingly adopt GPU acceleration, AI and ML models can be processed where data is generated, reducing latency and improving real-time response capabilities.

- Automation and Self-Optimization:

Future systems may leverage GPUs for automated model optimization and hyperparameter tuning, reducing the need for human intervention and enhancing model performance.

- Mixed Precision Training:

With enhanced support for FP16 (half-precision floating point) and other low-precision computations, model training will become more efficient, saving memory and computational resources while maintaining accuracy.

- Further Development of Cloud Computing:

As cloud service providers continue to offer GPU-accelerated services, businesses will have easier access to powerful computing capabilities, reducing infrastructure investments.
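Random search, one simple form of automated hyperparameter tuning, illustrates the "Automation and Self-Optimization" trend. In this sketch, `toy_validation_loss` is a hypothetical stand-in for training a model and measuring its validation loss. It is built to be lowest at a learning rate of 1e-3. Every trial is independent, so a real system could run the trials on separate GPUs at the same time.

```python
import math
import random

def toy_validation_loss(lr):
    """Hypothetical stand-in for 'train, then measure validation loss'.
    Lowest at lr = 1e-3 by construction."""
    return (math.log10(lr) + 3) ** 2

def random_search(objective, n_trials=50, seed=0):
    """Try randomly sampled learning rates and keep the best one."""
    rng = random.Random(seed)
    best_lr, best_loss = None, float("inf")
    for _ in range(n_trials):
        # Sample the learning rate log-uniformly between 1e-5 and 1e-1.
        lr = 10 ** rng.uniform(-5, -1)
        # Each trial is independent, so trials could be dispatched to different GPUs.
        loss = objective(lr)
        if loss < best_loss:
            best_lr, best_loss = lr, loss
    return best_lr, best_loss

best_lr, best_loss = random_search(toy_validation_loss)
print(f"best lr ~ {best_lr:.2e}, loss {best_loss:.4f}")
```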

Rent GPU in GPU Cloud

As the last trend suggests, GPU-accelerated cloud services give businesses easier access to powerful computing while reducing infrastructure investment.

Renting GPUs in a GPU cloud offers:

- Cost-Effectiveness: Utilizing cloud services reduces initial investment costs, as users can select instance types tailored to their workloads, optimizing costs accordingly.

- Scalability: Cloud services allow users to rapidly scale up or down resources based on demand, crucial for applications that need to process large-scale data or handle high-concurrency requests.

- Ease of Management: Cloud service providers typically handle hardware maintenance, software updates, and security issues, enabling users to focus solely on model development and application.

Novita AI GPU Instance: Harnessing the Power of NVIDIA Series

NVIDIA GPUs are a popular choice for these workloads. If you want high-performance GPUs without buying the hardware yourself, try Novita AI GPU Instance!

Novita AI GPU Instance, a cloud-based solution, stands out as an exemplary service in this domain. It is equipped with high-performance GPUs such as the NVIDIA A100 SXM and RTX 4090. This is particularly beneficial for PyTorch users who need the extra computational power of GPUs without investing in local hardware.

Novita AI GPU Instance has key features like:

- GPU Cloud Access: Novita AI provides a GPU cloud that users can leverage while using the PyTorch Lightning Trainer. This cloud service offers cost-efficient, flexible GPU resources that can be accessed on-demand.

- Cost-Efficiency: Users can expect significant cost savings, with the potential to reduce cloud costs by up to 50%. This is particularly beneficial for startups and research institutions with budget constraints.

- Instant Deployment: Users can quickly deploy a Pod, which is a containerized environment tailored for AI workloads. This streamlined deployment process ensures developers can start training their models without any significant setup time.

- Customizable Templates: Novita AI GPU Instance comes with customizable templates for popular frameworks like PyTorch, allowing users to choose the right configuration for their specific needs.

- High-Performance Hardware: The service provides access to high-performance GPUs such as the NVIDIA A100 SXM, RTX 4090, and A6000, each with substantial VRAM and RAM, ensuring that even the most demanding AI models can be trained efficiently.

How to Start Your Journey

STEP1: Register and Open GPU Instance

If you are a new user, register an account first. Then click the GPU Instance button on our webpage.

STEP2: Template and GPU Server

You can choose your own template, such as PyTorch, TensorFlow, CUDA or Ollama, according to your specific needs. You can also create your own template by clicking the final button. Next, choose a GPU server. The service provides access to high-performance GPUs such as the NVIDIA RTX 4090 and RTX 3090, each with substantial VRAM and RAM, so that even the most demanding AI models can be trained efficiently. Pick the one that fits your needs.

STEP3: Customize Deployment

In this step, you can customize the deployment settings to your own needs. The Container Disk includes 30GB free and the Volume Disk includes 60GB free. Usage beyond these free limits incurs additional charges.
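To check whether a configuration will incur extra charges, compute how far each disk exceeds its free allowance. This sketch uses only the 30GB and 60GB limits stated above. The per-GB rate is not given here, so the function, a hypothetical helper named `billable_gb`, returns billable GB rather than a price.

```python
FREE_CONTAINER_GB = 30  # free Container Disk allowance
FREE_VOLUME_GB = 60     # free Volume Disk allowance

def billable_gb(container_gb, volume_gb):
    """GB beyond each free allowance (hypothetical helper; pricing not included)."""
    over_container = max(0, container_gb - FREE_CONTAINER_GB)
    over_volume = max(0, volume_gb - FREE_VOLUME_GB)
    return over_container, over_volume

print(billable_gb(50, 60))   # prints (20, 0)
print(billable_gb(30, 100))  # prints (0, 40)
```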

STEP4: Launch an instance

Whether it’s for research, development, or deployment of AI applications, Novita AI GPU Instance equipped with CUDA 12 delivers a powerful and efficient GPU computing experience in the cloud.
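Before starting a long job on a running instance, confirm that the GPU is visible. The sketch below assumes a PyTorch template. It uses `torch.cuda.is_available()`, `torch.cuda.device_count()`, `torch.cuda.get_device_name()` and `torch.version.cuda`, and it falls back cleanly if PyTorch is not installed.

```python
def gpu_report():
    """Summarize what PyTorch can see on this machine; works even if PyTorch is absent."""
    try:
        import torch
    except ImportError:
        return {"torch_installed": False, "cuda_available": False}
    report = {
        "torch_installed": True,
        "cuda_available": torch.cuda.is_available(),
        # CUDA version PyTorch was built against (None for CPU-only builds).
        "cuda_version": torch.version.cuda,
    }
    if report["cuda_available"]:
        report["device_count"] = torch.cuda.device_count()
        report["device_name"] = torch.cuda.get_device_name(0)
    return report

print(gpu_report())
```

`torch.version.cuda` reports the CUDA version PyTorch was built against. This can differ from the version shown by `nvidia-smi`, which reflects the installed driver.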

Conclusion

The integration of GPUs into AI and ML workflows has been a game-changer, enabling faster and more efficient development and deployment of AI applications. As the field continues to evolve, we can expect to see even more exciting advancements in GPU technology and its applications in AI and ML. For those looking to harness the power of GPUs, cloud-based GPU instances like Novita AI GPU Instance offer a compelling solution that combines cost-efficiency, scalability, and high-performance hardware.

Frequently Asked Questions

Which GPU is better for beginners in deep learning, A6000 or A100?

If you're just starting out with deep learning, the NVIDIA A6000 is a smart choice. It's more budget-friendly while still delivering strong performance, although the A100 remains faster for large-scale training.

Which is the best GPU for AI Training?

The NVIDIA A100 is widely regarded as one of the best GPUs for AI training. NVIDIA states that it delivers up to 20x higher performance than the previous generation on demanding AI workloads.

Novita AI is the all-in-one cloud platform that empowers your AI ambitions: integrated APIs, serverless, and GPU Instance, the cost-effective tools you need. Eliminate infrastructure, start free, and make your AI vision a reality.

Recommended Reading: