GPU Container Core Binding Strategy Based on Affinity

Introduction to Optimizing CPU and GPU Performance

In high-performance computing and large-scale parallel task processing, GPUs have become indispensable accelerators. To fully utilize GPU computing capabilities, it is crucial to optimize CPU and GPU relationships by reasonably allocating and binding CPU cores to GPUs. This article will delve into the concepts of sockets and NUMA (Non-Uniform Memory Access) and discuss how to implement CPU and GPU core binding based on these hardware architectures to ensure optimal system performance.

Socket Concept

What is a Socket?

A socket typically refers to the physical CPU installation slot on the motherboard. Each socket corresponds to a physical CPU, usually containing multiple cores and one or more cache levels (e.g., L1, L2, L3 caches). In multi-socket systems (e.g., dual or quad servers), each socket has a physical CPU connected via a high-speed interconnect (e.g., Intel's QPI or AMD's Infinity Fabric).

Characteristics of Multi-Socket Systems

In multi-socket systems, each socket's CPU can access its local memory and also access memory from other sockets. This memory access pattern introduces the concept of NUMA, aiming to optimize memory access efficiency.

NUMA (Non-Uniform Memory Access) Architecture

What is NUMA?

NUMA stands for Non-Uniform Memory Access, meaning non-uniform memory access. Unlike traditional uniform memory access (UMA), in NUMA architecture, system memory is divided into multiple regions, each associated with a specific CPU (socket). Accessing local memory (within the same socket) is faster than accessing remote memory (from another socket), resulting in higher latency.

NUMA Nodes and Memory Access Delay

In NUMA systems, each socket and its directly connected memory form a NUMA node. Memory access within the same NUMA node is faster, while cross-node memory access incurs higher latency due to additional bus transmission. Optimizing memory and CPU affinity, ensuring tasks run within the same NUMA node, is a critical performance optimization step.

CPU and GPU Physical Relationship

GPU Hardware Architecture

GPUs typically communicate with CPUs via PCIe (Peripheral Component Interconnect Express) buses. In multi-socket systems, GPUs are usually connected to only one socket (and its corresponding NUMA node) and not across sockets. This means, in actual operation, the GPU has higher bandwidth and lower latency with the connected socket's CPU cores and memory.

CPU and GPU Affinity

CPU and GPU communication primarily relies on data transfer. Data is transferred from CPU to GPU and back to CPU, involving memory access operations that significantly impact performance. If the GPU-bound CPU core is located within the same NUMA node as the GPU, data transfer latency is significantly reduced. Therefore, binding CPU cores to GPUs is a key performance optimization step.

GPU and CPU Core Binding Strategy Based on Affinity

In Docker containerized deployment, implementing GPU and CPU affinity binding is crucial for improving containerized task performance. Docker's CPU and GPU resource control features allow for precise control over the CPU cores and GPUs used by containers.

Container CPU and GPU Resource Allocation

Docker containers allow for precise control over allocated CPU and GPU resources. By specifying the CPU cores and GPU devices used by a container, affinity binding can be implemented to optimize performance.

Docker CPU Settings

In Docker, CPU resource allocation can be controlled using the following parameters:

--cpuset-cpus: Specifies the physical CPU cores that can be used by the container. For example,--cpuset-cpus="0-3"restricts the container to using CPU cores 0 through 3.--cpu-shares: Controls the CPU usage weight of the container but does not restrict specific core usage.--cpus: Limits the total number of CPU cores (in virtual cores) that can be used by the container.

Docker GPU Settings

GPU device binding can be achieved using the following Docker parameters:

--gpus: Specifies the GPU devices that can be accessed by the container. For example,--gpus '"device=0"'assigns GPU 0 to the container.

Implementing GPU and CPU Core Binding in Docker

To implement GPU and CPU core binding in Docker containers, combine CPU and GPU settings. Here's an example of how to bind specific CPU cores and a GPU when starting a Docker container:

docker run --cpuset-cpus="0-3" --gpus '"device=0"' --memory="8g" my_gpu_containerIn this command:

--cpuset-cpus="0-3"binds the container to CPU cores 0 through 3, which should be within the same NUMA node as GPU 0.--gpus '"device=0"'assigns GPU 0 to the container.--memory="8g"limits the container's memory usage to 8 GB, ensuring memory allocation is also aligned with CPU/GPU affinity.

Achieving Optimal Binding

To ensure optimal binding in containers, first determine the physical topology of the machine. Use nvidia-smi topo -m to view the machine's topology:

nvidia-smi topo -mFrom the output, you can determine the machine's NUMA configuration and GPU assignments. For example, if the machine has two NUMA nodes, NUMA 0 with 4 GPUs, and NUMA 1 with 4 GPUs, you can identify the GPU and CPU affinity for each NUMA node.

Pseudo-Code for Affinity Calculation

Here's a simplified pseudo-code for calculating affinity and determining the GPU and CPU IDs:

type Affinity struct {

}

// Calculate whether the affinity is satisfied and return the corresponding GPU and CPU IDs

func calAffinity(affinity *Affinity, cpuUse []int, gpuUse []int, gpuReq int, cpuReq int) (bool, []int, []int) {

return true, []int{}, []int{}

}This method can be used to determine whether a node satisfies the optimal binding requirements. When multiple nodes exist, this method can be used to evaluate and select the most suitable node.Novita AI has developed a container engine tailored for next-generation AI computing, dynamically adjusting algorithms to real-time monitor hardware usage and perform end-to-end optimization. Users can enjoy the strongest computing performance without worrying about NUMA technical details.

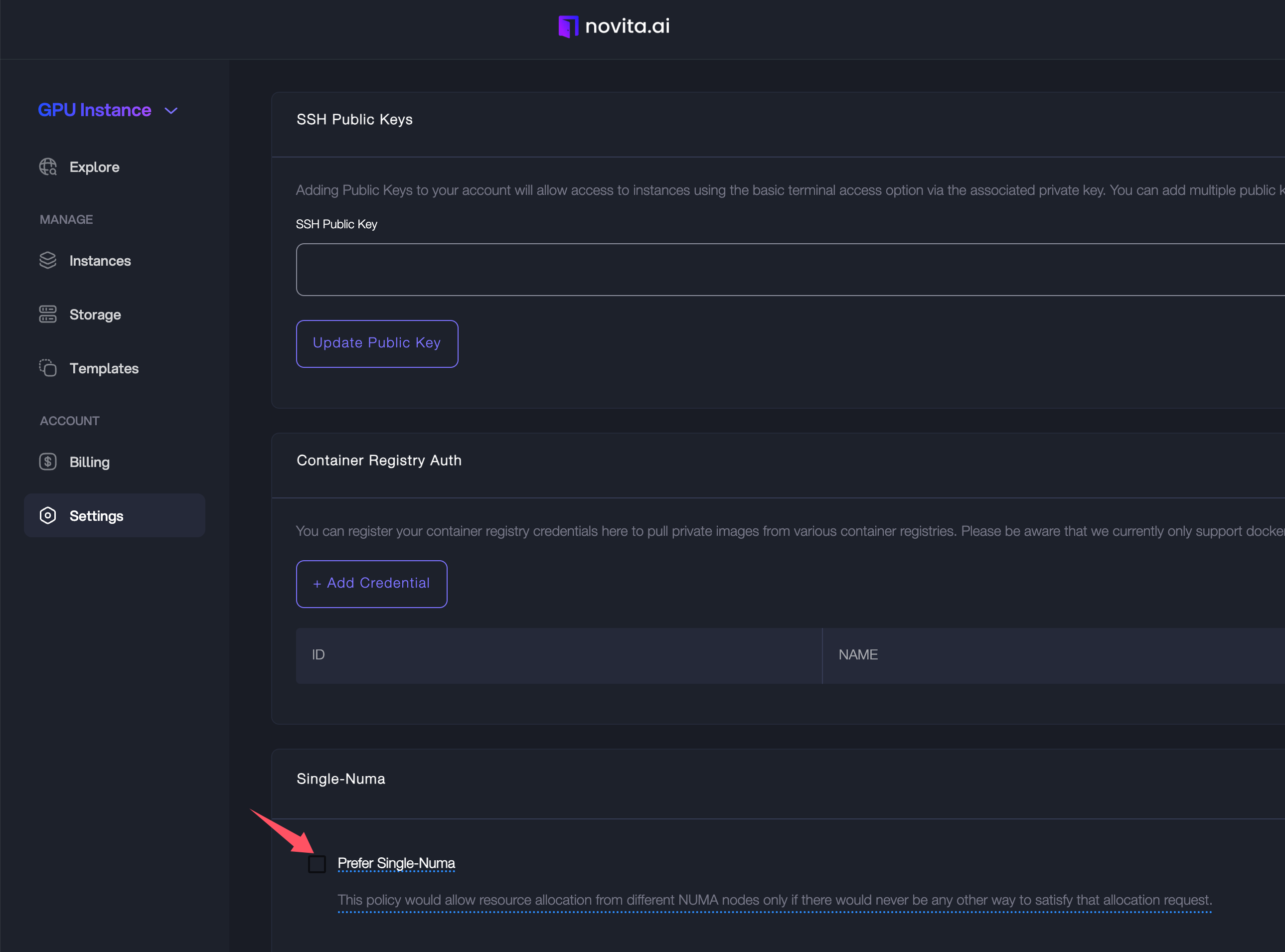

Users can also perform more advanced NUMA settings in the control panel. If you have more related requirements, please feel free to contact us in Discord!

Visit Novita AI for more details!