CUDA 12.1: Powerful Engine Driving GPU Performance

Key Highlights

- Better performance and efficiency for AI and machine learning apps

- Enhanced compute capabilities for both GPU and CPU

- Improvements in CUDA graphs for smarter management and optimization

- Addition of new libraries along with updates to existing ones from NVIDIA Corporation

- Support for Ampere architecture GPUs as well as the newer Hopper-based H series GPUs

- Works well with the most recent driver versions

Introduction

CUDA 12.1 brings some cool updates for folks who develop software and do research. It makes NVIDIA GPUs work better than before by building on what was already great in previous releases. This time around, NVIDIA has made it easier to handle complex calculations and added new features for CUDA graphs that are pretty neat if you're into AI or machine learning tasks. And because it works across different types of hardware and operating systems, CUDA continues to be super useful for a wide range of jobs.

Overview of CUDA 12.1 Update

The latest update, CUDA 12.1, is a fresh version of the CUDA toolkit and comes with the newest NVIDIA driver. This update gives developers all they need - tools, libraries, and APIs - to create, fine-tune, and launch applications that run faster thanks to GPU acceleration on different platforms.

With this toolkit, you get the CUDA compiler (nvcc); the runtime libraries your apps need to run smoothly; debugging and optimization tools to help iron out any kinks; and CUDA C++, a set of extensions to standard C++ for writing code that runs on the GPU. And it's not just about C++: there's also support if you're into programming in C, Python, or Fortran.
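To see which toolkit release a machine will actually compile with, you can ask nvcc for its version. Here's a minimal Python sketch, assuming nvcc is on your PATH. The helper names (`parse_nvcc_release`, `installed_toolkit_release`) are made up for this example, and the regex assumes the usual `release 12.1` wording in the `nvcc --version` output.

```python
import re
import shutil
import subprocess
from typing import Optional, Tuple


def parse_nvcc_release(version_text: str) -> Optional[Tuple[int, int]]:
    """Extract (major, minor) from `nvcc --version` output, or None if absent."""
    match = re.search(r"release (\d+)\.(\d+)", version_text)
    if match is None:
        return None
    return int(match.group(1)), int(match.group(2))


def installed_toolkit_release() -> Optional[Tuple[int, int]]:
    """Return the release of the nvcc found on PATH, or None if there is none."""
    nvcc = shutil.which("nvcc")
    if nvcc is None:
        return None
    result = subprocess.run(
        [nvcc, "--version"], capture_output=True, text=True, check=False
    )
    return parse_nvcc_release(result.stdout)


if __name__ == "__main__":
    release = installed_toolkit_release()
    if release is None:
        print("nvcc not found on PATH")
    else:
        print("CUDA toolkit release: %d.%d" % release)
```

Keeping the parsing separate from the subprocess call means the version check can be exercised on a machine without a GPU or toolkit installed.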

Key Highlights and Improvements

The CUDA 12.1 update is packed with cool stuff that makes programs run faster and better, especially if they're using GPUs to do their work. Here's a quick rundown of what's new:

- Faster AI Stuff: If you're into AI or machine learning, this update is sweet because it makes things like PyTorch and TensorFlow run quicker. This means your computer can learn stuff faster.

- Better Use of Your Computer’s Brain: With this update, both the GPU (the part that usually handles graphics) and CPU (your computer's main brain) get better at working together on tough tasks. So now, everything runs smoother.

- Smarter Ways to Organize Work for GPUs: There are these things called CUDA graphs which help organize how jobs are done on the GPU. The latest updates make them even smarter so your GPU works more efficiently.

- New Tools in the Toolbox: They've added some new libraries - think of libraries as toolboxes full of ready-to-use tools - that help with specific tasks like math operations or transforming data really fast.

- Polishing Up Existing Tools: Not only did we get new tools but also improvements to existing ones in our toolbox; fixing bugs, making them perform better or adding neat features so they’re even more useful than before.
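CUDA graphs from the list above are easiest to try through a framework. The sketch below uses PyTorch's `torch.cuda.CUDAGraph` wrapper to capture a tiny computation once and replay it with new input. It assumes PyTorch with CUDA support and a working GPU, and returns `None` otherwise. The function name `replay_with_cuda_graph` is hypothetical, and the captured workload (`x * 2 + 1`) is only a stand-in for a real model step.

```python
def replay_with_cuda_graph(values):
    """Capture `x * 2 + 1` in a CUDA graph, then replay it on `values`.

    Returns the result as a list, or None when PyTorch or a GPU is missing.
    """
    try:
        import torch
    except ImportError:
        return None
    if not torch.cuda.is_available():
        return None

    # Graph replays reuse the same memory, so inputs live in a static buffer.
    static_in = torch.zeros(len(values), device="cuda")

    # Warm up on a side stream before capture, as PyTorch's CUDA graph
    # documentation recommends.
    side = torch.cuda.Stream()
    side.wait_stream(torch.cuda.current_stream())
    with torch.cuda.stream(side):
        for _ in range(3):
            _ = static_in * 2.0 + 1.0
    torch.cuda.current_stream().wait_stream(side)

    # Capture: the kernels are recorded into the graph, not run eagerly.
    graph = torch.cuda.CUDAGraph()
    with torch.cuda.graph(graph):
        static_out = static_in * 2.0 + 1.0

    # Replay: refill the static input, then launch the whole graph at once.
    static_in.copy_(torch.tensor(values, dtype=torch.float32))
    graph.replay()
    return static_out.cpu().tolist()


if __name__ == "__main__":
    print(replay_with_cuda_graph([1.0, 2.0, 3.0]))
```

The payoff shows up when the same sequence of small kernels runs many times: one replay call replaces many individual launches.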

Comparison with Previous Versions

CUDA 12.1 is the latest update, building on what was introduced in earlier versions of the CUDA toolkit. Let's see how it stacks up against its predecessors:

- With every new release, there's a boost in performance for applications powered by GPUs. Developers can look forward to better speed and methods that make full use of new hardware features.

- On top of this, each version brings fresh functionalities that expand what GPU-powered apps can do. These additions help developers handle more complex projects with greater efficiency and effectiveness.

- Regarding working with the newest NVIDIA graphics cards, updates like CUDA 12.1 ensure everything runs smoothly together. This means developers get to harness all the capabilities of recent GPUs to speed up their work.

- Also important are fixes and tweaks made with each update which tackle problems users have found and make sure everything works reliably for developing GPU-accelerated programs.

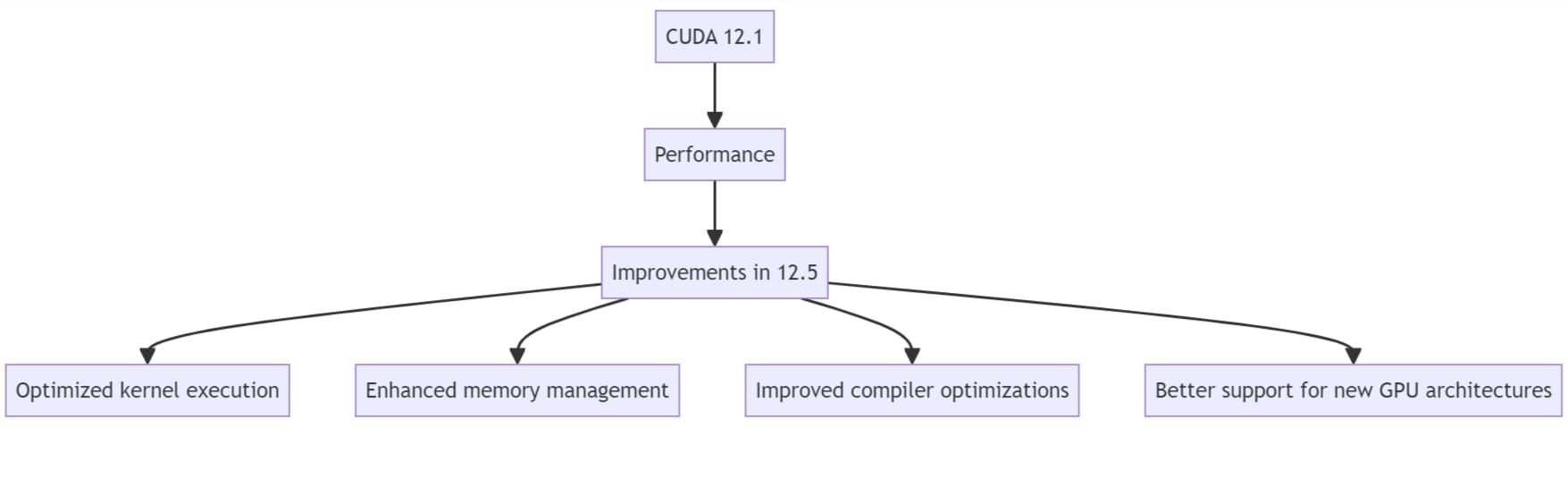

Comparison with the latest CUDA 12.5

CUDA 12.5, the latest CUDA Toolkit version at the time of writing, was released by NVIDIA in 2024. It provides more powerful GPU acceleration capabilities and is particularly suited to developing applications in the fields of artificial intelligence and machine learning.

CUDA 12.5 has the following major improvements in performance compared to CUDA 12.1:

- Optimized kernel execution and improved GPU computing efficiency.

- The memory management mechanism is enhanced and memory access overhead is reduced.

- Compiler optimization measures have been enhanced and code generation is more efficient.

- The support for the new generation GPU architecture is more complete and can better utilize the hardware performance.

Developer Tools and Libraries

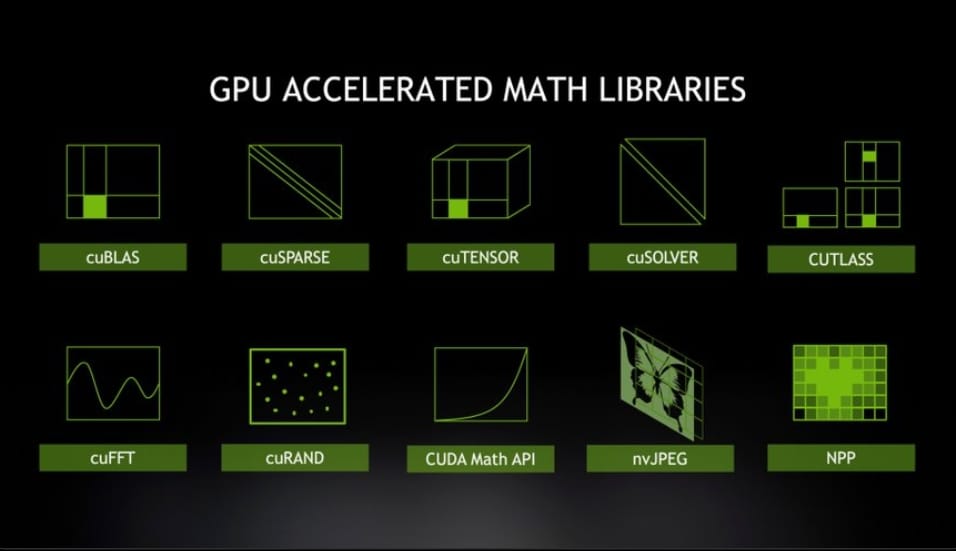

The toolkit gives developers everything they need to create, tune, and launch applications that run faster with GPU support. With the CUDA 12.1 update, the bundled libraries get improvements that boost what you can do with CUDA. Let's take a closer look at these tools for developers:

- Core math libraries: CUDA 12.1 ships cuFFT for fast Fourier transforms and cuBLAS for linear algebra. Neither library is new in this release (both have been part of the toolkit for years), but they remain the go-to specialized solutions for routine problems, letting devs tackle complex calculations more effectively.

- Enhancements on Existing Libraries: This version also polishes the existing CUDA library collection by fixing bugs, stepping up performance, and adding features, especially in cuBLAS and cuFFT.

Library Highlights

The CUDA 12.1 toolkit bundles a set of GPU-accelerated libraries that give developers more ways to make their apps work better and faster. Two of the most widely used are cuFFT and cuBLAS. Both are long-standing parts of the toolkit rather than additions in 12.1, and both are updated in this release:

- cuFFT: This library provides fast Fourier transform (FFT) routines for GPU-accelerated applications. With cuFFT, folks working on frequency analysis and signal processing can get things done quicker thanks to NVIDIA GPUs.

- cuBLAS: This library tackles linear algebra operations on GPUs. It covers everything from vector operations to matrix multiplication and batched matrix inversion. Using cuBLAS means tapping into efficient ways of doing heavy-duty math with NVIDIA's hardware.

These libraries let developers run high-performance computing algorithms on NVIDIA GPUs without writing the kernels themselves.
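Most people reach cuBLAS and cuFFT through a framework rather than calling them directly. As a rough illustration, the PyTorch sketch below runs a matrix multiply and an FFT on the GPU when one is available. On CUDA builds, PyTorch hands these operations to cuBLAS and cuFFT respectively. The function name `gpu_math_demo` is hypothetical, and the code falls back to the CPU (or returns `None` without PyTorch) so it can run anywhere.

```python
def gpu_math_demo():
    """Run one matmul and one FFT, on the GPU when possible.

    Returns (device_name, product, spectrum), or None if PyTorch is missing.
    """
    try:
        import torch
    except ImportError:
        return None

    device = "cuda" if torch.cuda.is_available() else "cpu"

    # Dense matrix multiply: backed by cuBLAS when the tensors live on a GPU.
    a = torch.eye(3, device=device) * 2.0
    b = torch.arange(9.0, device=device).reshape(3, 3)
    product = a @ b

    # FFT of a unit impulse: backed by cuFFT when the tensor lives on a GPU.
    impulse = torch.zeros(4, device=device)
    impulse[0] = 1.0
    spectrum = torch.fft.fft(impulse)

    # Copy results back to host memory once, at the end.
    return device, product.cpu(), spectrum.cpu()


if __name__ == "__main__":
    result = gpu_math_demo()
    if result is not None:
        print("ran on:", result[0])
```

Multiplying by a scaled identity and transforming a unit impulse keeps the expected results easy to check by hand: the product is `2 * b` and the spectrum is all ones.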

The relationship between CUDA 12.1, GPUs, and LLMs

GPUs and CUDA:

- CUDA is NVIDIA's parallel computing platform and programming model.

- CUDA enables NVIDIA GPUs to be used for general computing tasks, not just graphics rendering.

- Many GPU-accelerated applications, including machine learning and deep learning, rely on CUDA technology.

CUDA and LLMs:

- CUDA provides highly optimized low-level computing support for LLMs.

- Many LLM frameworks, such as PyTorch and TensorFlow, leverage CUDA to implement GPU acceleration.

- Using the latest version of CUDA can provide better performance and efficiency for LLMs.

Running CUDA on Novita AI GPU Instance

To run CUDA 12.1, you'll need an NVIDIA GPU that the toolkit supports, a recent enough NVIDIA driver, and the toolkit itself.

Novita AI GPU Instance is a cloud-based service built for exactly this. It is equipped with high-performance GPUs like the NVIDIA A100 SXM and RTX 4090, and it supports the latest CUDA version, so users can take advantage of the advanced features.

How to start your journey in Novita AI GPU Instance:

STEP1: If you are a new subscriber, register an account first. Then click the GPU Instance button on our webpage.

STEP2: Template and GPU Server

You can choose a template, including PyTorch, TensorFlow, CUDA, or Ollama, according to your specific needs. You can also create your own template data by clicking the last button at the bottom.

Then, our service provides access to high-performance GPUs such as the NVIDIA RTX 4090 and RTX 3090, each with substantial VRAM and RAM, ensuring that even the most demanding AI models can be trained efficiently. You can pick one based on your needs.

STEP3: Customize Deployment

In this section, you can customize the settings according to your own needs. The Container Disk includes 30GB free and the Volume Disk includes 60GB free; if you exceed the free limit, additional charges apply.

STEP4: Launch an instance

Whether it’s for research, development, or deployment of AI applications, Novita AI GPU Instance equipped with CUDA 12 delivers a powerful and efficient GPU computing experience in the cloud.

Novita AI GPU Instance has key features like:

1. GPU Cloud Access: Novita AI provides a GPU cloud that users can leverage while using the PyTorch Lightning Trainer. This cloud service offers cost-efficient, flexible GPU resources that can be accessed on-demand.

2. Cost-Efficiency: Users can expect significant cost savings, with the potential to reduce cloud costs by up to 50%. This is particularly beneficial for startups and research institutions with budget constraints.

3. Instant Deployment: Users can quickly deploy a Pod, which is a containerized environment tailored for AI workloads. This deployment process is streamlined, ensuring that developers can start training their models without any significant setup time.

4. Customizable Templates: Novita AI GPU Pods come with customizable templates for popular frameworks like PyTorch, allowing users to choose the right configuration for their specific needs.

5. High-Performance Hardware: The service provides access to high-performance GPUs such as the NVIDIA A100 SXM, RTX 4090, and A6000, each with substantial VRAM and RAM, ensuring that even the most demanding AI models can be trained efficiently.

Migrating to CUDA 12.1

To make the switch to CUDA 12.1 smooth, start by reading the release notes for changes that affect your code. Make sure your NVIDIA driver is up to date and meets the minimum version this toolkit requires; on Linux, a driver update may also mean updating kernel modules. If the driver and toolkit versions don't match, update the driver or reinstall the CUDA toolkit. If you build with Visual Studio, check that your edition works with CUDA 12.1. Also, bring the libraries your project depends on up to date so everything runs smoothly together.
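The driver check is easy to script. Here's a minimal Python sketch that reads the installed driver version from `nvidia-smi` and compares it with a minimum. The helper names are made up for this example. The `MIN_LINUX_DRIVER` value of 530.30.02 is our recollection of the Linux minimum listed in NVIDIA's release notes for CUDA 12.1, so treat it as an assumption and confirm it against the official table for your platform.

```python
import shutil
import subprocess
from typing import Optional, Tuple

# ASSUMPTION: recalled Linux minimum for CUDA 12.1; verify in the release notes.
MIN_LINUX_DRIVER = "530.30.02"


def parse_driver_version(text: str) -> Tuple[int, ...]:
    """Turn a driver string such as '530.30.02' into (530, 30, 2)."""
    return tuple(int(part) for part in text.strip().split("."))


def driver_meets_minimum(installed: str, minimum: str) -> bool:
    """Compare two dotted driver versions numerically, field by field."""
    return parse_driver_version(installed) >= parse_driver_version(minimum)


def query_driver_version() -> Optional[str]:
    """Ask nvidia-smi for the driver version; None if it cannot be read."""
    nvidia_smi = shutil.which("nvidia-smi")
    if nvidia_smi is None:
        return None
    result = subprocess.run(
        [nvidia_smi, "--query-gpu=driver_version", "--format=csv,noheader"],
        capture_output=True, text=True, check=False,
    )
    if result.returncode != 0:
        return None
    lines = result.stdout.strip().splitlines()
    # One line per GPU; they all share the same driver, so take the first.
    return lines[0].strip() if lines else None


if __name__ == "__main__":
    installed = query_driver_version()
    if installed is None:
        print("Could not read a driver version from nvidia-smi")
    elif driver_meets_minimum(installed, MIN_LINUX_DRIVER):
        print("Driver %s looks new enough" % installed)
    else:
        print("Driver %s is older than %s" % (installed, MIN_LINUX_DRIVER))
```

Comparing the fields as integers matters here: a plain string comparison would rank "530.30.02" and "530.4.01" in the wrong order.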

Steps for a Smooth Transition

To make sure you switch to CUDA 12.1 without any hitches, developers should do the following:

STEP1: Before jumping into the new version, it's wise to save a copy of your current CUDA projects. This way, you won't lose anything or run into trouble with your code.

STEP2: Head over to NVIDIA's site and grab the latest CUDA 12.1 toolkit that matches your system. The website will have all the steps you need to get it set up on your computer.

STEP3: With CUDA 12.1 ready on your machine, go ahead and compile your code again using this new toolkit. It'll make sure everything works smoothly together and lets you use all the cool updates.

STEP4: After getting everything compiled, put it through its paces by testing thoroughly. You want to catch any issues early so they can be fixed before they cause real problems.

By sticking closely to these guidelines, developers can move to CUDA 12.1 seamlessly while tapping into improved features for their GPU-powered apps.

Tips for Maximizing Performance and Compatibility

To get the most out of CUDA 12.1 and make sure everything works smoothly, developers should keep a few things in mind:

- Make your memory work smarter:

It's really important to handle memory well when you're working with GPU-accelerated apps. Try to cut down on how often you move data back and forth between host (CPU) memory and the GPU, use shared memory wisely, and think about ways to manage memory better like pooling or organizing your data differently.

- Do more at once:

CUDA lets you run different tasks at the same time on a GPU which can help make everything faster overall. Look for chances where tasks don't depend on each other so they can be done together, boosting how much work your GPU can do at one time.

- Keep things up-to-date:

By making sure that both your NVIDIA GPU drivers and software are current, you'll not only ensure that they all play nicely with CUDA 12.1 but also benefit from any improvements or fixes that have been made since the last update.

- Use what's already there for you:

There’s a bunch of ready-made libraries in CUDA like cuBLAS, cuFFT, and cuDNN designed specifically for speeding up certain types of calculations especially those used in math-heavy operations or deep learning projects. These tools are fine-tuned to improve performance significantly without needing to reinvent the wheel.
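As a rough sketch of the memory tip in practice, the PyTorch snippet below stages each batch in pinned (page-locked) host memory, copies it to the GPU asynchronously, keeps the running total on the device, and moves a single number back at the end. The function name `sum_of_squares_on_gpu` is hypothetical and the workload is deliberately trivial. Without a GPU it runs the same logic on the CPU, and without PyTorch it returns `None`.

```python
def sum_of_squares_on_gpu(batches):
    """Sum the squares of all values, keeping intermediates on the device.

    Returns a float, or None if PyTorch is not installed.
    """
    try:
        import torch
    except ImportError:
        return None

    use_gpu = torch.cuda.is_available()
    device = torch.device("cuda" if use_gpu else "cpu")

    # The accumulator lives on the device for the whole loop.
    total = torch.zeros((), device=device)

    for batch in batches:
        host = torch.tensor(batch, dtype=torch.float32)
        if use_gpu:
            # Pinned memory lets the host-to-device copy run asynchronously.
            host = host.pin_memory()
        on_device = host.to(device, non_blocking=True)
        # No per-batch copy back to the host: only `total` is updated.
        total += (on_device * on_device).sum()

    # One device-to-host transfer for the final result.
    return total.item()


if __name__ == "__main__":
    print(sum_of_squares_on_gpu([[1.0, 2.0], [3.0]]))
```

The pattern to take away is the shape of the loop: many transfers in, one transfer out, and nothing bouncing back to the host in between.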

In addition, for folks who love diving into the newest GPU technology trends, CUDA 12.1 lays out an exciting playground full of possibilities for pushing boundaries in all sorts of computer tasks.

Conclusion

The CUDA 12.1 update is packed with cool upgrades, making things better for AI and machine learning work. It's like giving your computer a super boost, especially if you're into heavy-duty computing or creating smart tech stuff. With this new version, everything runs more smoothly thanks to improvements in how well it works with different hardware and software. Plus, they've squashed some bugs and worked around certain issues that were bugging users before.

Frequently Asked Questions

How does CUDA 12.1 affect existing projects?

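Existing projects should keep working, but plan to recompile and retest them against the new toolkit. For AI, machine learning, and HPC applications, the optimizations in CUDA 12.1 mean better performance and smoother operations all around.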

Do I need to install CUDA before PyTorch?

No, you don’t need to download a full CUDA toolkit and would only need to install a compatible NVIDIA driver, since PyTorch binaries ship with their own CUDA dependencies.

Is CUDA compatible with PyTorch?

Yes, the current PyTorch code base supports all CUDA 12 toolkit versions if you build from source.
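To see which CUDA version your installed PyTorch build was compiled against, and whether it can actually reach a GPU, a quick check like the one below helps. It uses `torch.version.cuda` and `torch.cuda.is_available()`, which are part of PyTorch's public API. The function name `describe_torch_cuda` is made up for this example.

```python
from typing import Optional


def describe_torch_cuda() -> Optional[dict]:
    """Report the CUDA build of the installed PyTorch, or None if not installed."""
    try:
        import torch
    except ImportError:
        return None
    return {
        "torch_version": torch.__version__,
        # CUDA version the binary was built with; None for CPU-only builds.
        "cuda_build": torch.version.cuda,
        # False when no compatible NVIDIA driver or GPU is present.
        "gpu_usable": torch.cuda.is_available(),
    }


if __name__ == "__main__":
    print(describe_torch_cuda())
```

If `cuda_build` shows a version but `gpu_usable` is `False`, the usual suspect is a missing or outdated NVIDIA driver rather than a missing toolkit.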

Novita AI is the one-stop platform for limitless creativity, giving you access to 100+ APIs. From image generation and language processing to audio enhancement and video manipulation, with cheap pay-as-you-go pricing, it frees you from GPU maintenance hassles while you build your own products. Try it for free.