Can Docker Containers Share a GPU? Expert Perspective

Key Highlights

- Efficient GPU Utilization with Docker: Docker containers can use GPUs for AI and ML tasks while keeping environments stable and consistent across platforms.

- GPU Acceleration in AI and ML: GPUs play a vital role in accelerating AI and ML tasks, particularly deep learning, by processing multiple calculations simultaneously.

- Optimized Resource Sharing: The sharing of GPUs among Docker containers is facilitated by tools like the NVIDIA Container Toolkit, leading to optimized resource utilization.

- Cost-Effective Cloud GPU Rentals: Renting GPUs in the cloud is a scalable and cost-effective alternative for AI and ML development, with platforms such as Saturn Cloud making this option accessible.

- High-Performance Computing with Novita AI GPU Instance: Novita AI GPU instances provide advanced computing capabilities for AI and ML tasks and, combined with Docker, support smooth operation and efficient resource use.

Introduction

In AI and ML, GPUs play a key role in speeding up complex tasks, and a question that often comes up when managing these workloads efficiently is: can Docker containers share a GPU? Docker has become a useful tool for running GPU-dependent tasks because it packages an application together with everything it needs.

As a result, Docker provides stable, repeatable environments across different settings, which makes it well suited to training and deploying AI and ML models. This blog looks at how Docker and GPUs work together, to help you use these tools well in your AI and ML projects.

What are Docker containers?

A Docker container is a lightweight, self-contained package that holds everything an application needs to run: the code, runtime, tools, libraries, and settings. Because it is self-sufficient, the application runs the same way in different environments, on any host with a compatible Docker engine. Unlike a virtual machine, a container shares the host's operating system kernel instead of bundling its own.

How Docker Enables GPU Sharing for AI and ML Workloads

With a recent version of Docker (19.03 or later, which introduced the --gpus flag) and NVIDIA GPU support, the answer to “Can Docker Containers Share a GPU?” is clear: containers can use, and share, GPUs for AI and ML tasks. When the host has the NVIDIA driver installed and the NVIDIA Container Toolkit configured, containers started with the --gpus flag can access GPU resources directly. This makes it easier to launch GPU-accelerated frameworks such as TensorFlow or PyTorch, whose parallel computation drives many AI and ML use cases. Combining Docker with NVIDIA GPUs opens up many options for high-performance AI and ML workloads.
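A minimal way to confirm that a container can see the GPU is to run nvidia-smi inside a CUDA base image with `--gpus all`. The Python sketch below only builds and prints that `docker run` command, and executes it only when `dry_run=False`. The image tag `nvidia/cuda:12.4.1-base-ubuntu22.04` is an assumption for illustration; substitute any CUDA image tag you have verified exists.

```python
import shlex
import subprocess

# Assumed image tag for illustration; replace with a CUDA image tag you have verified.
CUDA_IMAGE = "nvidia/cuda:12.4.1-base-ubuntu22.04"


def gpu_smoke_test_cmd(image: str = CUDA_IMAGE, gpus: str = "all") -> list[str]:
    """Build a `docker run` command that runs nvidia-smi with the given GPUs visible."""
    return ["docker", "run", "--rm", "--gpus", gpus, image, "nvidia-smi"]


def run(cmd: list[str], dry_run: bool = True) -> None:
    """Print the command; execute it only when dry_run is False."""
    print(shlex.join(cmd))
    if not dry_run:
        # Requires Docker 19.03+ and the NVIDIA Container Toolkit on the host.
        subprocess.run(cmd, check=True)


if __name__ == "__main__":
    run(gpu_smoke_test_cmd())
```

If the toolkit is set up correctly, running it with `dry_run=False` should print the same GPU table that nvidia-smi shows on the host.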

The importance of GPUs in accelerating AI and ML

GPUs are very important for speeding up AI and ML tasks. They can perform many calculations at once, whereas CPUs are optimized for executing a smaller number of tasks quickly, one after another. A GPU can run thousands of threads at the same time, which makes it well suited to operations like matrix multiplication, a core building block of deep learning.
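To make the parallelism concrete: in matrix multiplication, every output entry is an independent dot product. The small Python sketch below is illustrative only (deep learning frameworks hand this work to optimized GPU kernels, not Python loops). It counts multiply-adds, which for an n×m matrix times an m×p matrix come to n·m·p, spread across n·p entries that could all be computed at once.

```python
def matmul(a: list[list[float]], b: list[list[float]]) -> tuple[list[list[float]], int]:
    """Naive matrix multiply that also counts multiply-add operations.

    Each entry c[i][j] depends only on row i of `a` and column j of `b`,
    so all entries are independent; a GPU can compute them in parallel,
    while this plain-Python version computes them one after another.
    """
    n, m, p = len(a), len(b), len(b[0])
    if any(len(row) != m for row in a):
        raise ValueError("inner dimensions must match")
    c = [[0.0] * p for _ in range(n)]
    ops = 0
    for i in range(n):
        for j in range(p):
            total = 0.0
            for k in range(m):
                total += a[i][k] * b[k][j]
                ops += 1
            c[i][j] = total
    return c, ops


if __name__ == "__main__":
    c, ops = matmul([[1, 2], [3, 4]], [[5, 6], [7, 8]])
    print(c, ops)
```

For the 2×2 example it prints `[[19.0, 22.0], [43.0, 50.0]] 8`: four independent entries and eight multiply-adds in total.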

Because GPUs process so much work in parallel, training machine learning models becomes much faster: a model that takes days to train on CPUs can often be trained in hours, or even minutes, on GPUs. This speeds up the whole AI and ML development process.

GPU power also lets researchers and developers handle larger datasets and build more complex models, which can lead to more capable and reliable AI and ML solutions.

Why use Docker containers for AI and ML?

Docker containers are very useful for AI and ML tasks. Can Docker Containers Share a GPU? Yes, and beyond that, they create isolated, repeatable environments. This matters because it keeps development, testing, and deployment consistent.

Docker also has strong GPU support. Tools like the NVIDIA Container Toolkit make it easy for containerized AI and ML applications to use GPU power, and frameworks such as TensorFlow work well with Docker, which simplifies deploying and managing AI and ML workflows.

Can Docker Containers Share a GPU? How to Enable GPU Sharing in Docker

Yes, Docker containers can share a GPU, and knowing how to enable GPU sharing in Docker is essential for optimizing resource utilization. The question usually comes up when high-performance workloads such as AI and machine learning (ML) need to be distributed across several containers. With the NVIDIA Container Toolkit installed on the host, you pass the --gpus flag to docker run; if several containers are started with access to the same GPU (for example, --gpus all or --gpus device=0), they all use that GPU at the same time. To keep performance predictable and prevent conflicts, it is still important to set resource limits and use monitoring tools, so that the containers share the GPU safely and efficiently.
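As a sketch of what this looks like, the Python snippet below builds `docker run` commands that start two containers with access to the same GPU (index 0) via `--gpus device=0`. The image name `my-training-image:latest` and the container names are hypothetical placeholders. A single device can be passed as `device=0`; Docker's documentation notes that selecting several devices needs extra quoting in a shell (`'"device=0,1"'`).

```python
import shlex

# Hypothetical image name, for illustration only.
IMAGE = "my-training-image:latest"


def shared_gpu_cmds(names: list[str], device: str = "0", image: str = IMAGE) -> list[list[str]]:
    """Build one detached `docker run` command per container, all pinned to the same GPU."""
    return [
        ["docker", "run", "-d", "--name", name, "--gpus", f"device={device}", image]
        for name in names
    ]


if __name__ == "__main__":
    # Hypothetical container names.
    for cmd in shared_gpu_cmds(["trainer-a", "trainer-b"]):
        print(shlex.join(cmd))
```

Both containers then see, and compete for, the same GPU, so this is best paired with resource limits and monitoring.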

Important factors to consider

When you plan to share GPUs among Docker containers, make sure your setup works with NVIDIA GPUs and a recent Docker version. First, check that your NVIDIA driver is installed and supported, and that the GPU devices are accessible on the host system. Next, read the NVIDIA Container Toolkit documentation and learn the relevant docker run options for a sound setup. Also consider your specific needs, such as the machine learning tasks you plan to run, to find the best configuration. Finally, community resources such as Stack Overflow can help with any problems you run into.
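A quick pre-flight check helps with the first of these steps. This Python sketch only tests whether `docker`, `nvidia-smi` (installed with the NVIDIA driver), and `nvidia-ctk` (the NVIDIA Container Toolkit CLI) are on the PATH. The tool list is an assumption about a typical setup, and finding a tool does not prove that the driver or toolkit is configured correctly.

```python
import shutil

# Command-line tools a GPU-enabled Docker host is expected to have (assumed list).
# nvidia-ctk ships with the NVIDIA Container Toolkit.
REQUIRED_TOOLS = ("docker", "nvidia-smi", "nvidia-ctk")


def check_prerequisites(tools: tuple[str, ...] = REQUIRED_TOOLS) -> dict[str, bool]:
    """Report which tools are found on PATH (presence only, not version or driver health)."""
    return {tool: shutil.which(tool) is not None for tool in tools}


if __name__ == "__main__":
    for tool, found in check_prerequisites().items():
        print(f"{tool:12} {'found' if found else 'MISSING'}")
```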

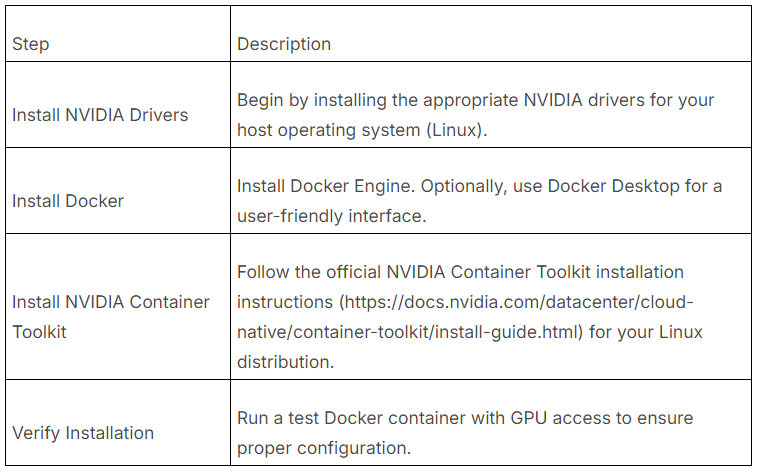

Install Docker with GPU Support

Configuring Docker for GPU support is essential to unlock the power of NVIDIA GPUs for your AI/ML applications. The NVIDIA Container Toolkit simplifies this process, ensuring seamless integration between Docker and your NVIDIA GPU.

Here’s a simplified breakdown of the configuration process:

If you are interested, you can watch the detailed installation video.
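One way to confirm the configuration step worked: NVIDIA's install guide has you run `sudo nvidia-ctk runtime configure --runtime=docker` and then restart Docker, which registers an `nvidia` runtime in `/etc/docker/daemon.json`. The Python sketch below checks that file for the entry. It assumes the default Linux path and that the configure command was used; it is a sanity check, not a full validation.

```python
import json
from pathlib import Path

# Default location of the Docker daemon configuration on Linux.
DAEMON_JSON = Path("/etc/docker/daemon.json")


def has_nvidia_runtime(config_text: str) -> bool:
    """Return True if the daemon config registers a runtime named "nvidia"."""
    try:
        config = json.loads(config_text)
    except json.JSONDecodeError:
        return False
    if not isinstance(config, dict):
        return False
    runtimes = config.get("runtimes", {})
    return isinstance(runtimes, dict) and "nvidia" in runtimes


def check_daemon_config(path: Path = DAEMON_JSON) -> bool:
    """Read daemon.json (if present) and look for the nvidia runtime entry."""
    if not path.is_file():
        return False
    return has_nvidia_runtime(path.read_text())


if __name__ == "__main__":
    print("nvidia runtime configured:", check_daemon_config())
```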

How can two containers share the usage of a GPU safely?

Can Docker containers share a GPU? Yes. But how can two containers share a GPU safely? It is achievable, but it takes careful configuration and management. The usual approach is the NVIDIA Container Toolkit (the successor to NVIDIA Docker), whose “--gpus” flag controls which GPUs each container can see: give two containers the same GPU to share it, or different GPUs to isolate them. Keep in mind that --gpus does not cap how much GPU memory or compute a container uses, so limits usually have to be set inside each workload (for example, in the ML framework's memory settings) or with NVIDIA features such as Multi-Instance GPU (MIG) on supported GPUs. Combined with monitoring tools, these limits help prevent conflicts and ensure that each container gets its fair share of GPU resources without causing instability or performance issues.
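For the monitoring side, `nvidia-smi` can report per-GPU utilization and memory as machine-readable CSV. The Python sketch below runs that query and flags GPUs whose memory use crosses a threshold, a simple way to spot one container crowding out another. The 90% threshold and the helper names are assumptions for illustration; note that these figures are totals per GPU, not per container.

```python
import subprocess
from dataclasses import dataclass

# nvidia-smi query: one CSV line per GPU, no header, memory in MiB, utilization in %.
QUERY = [
    "nvidia-smi",
    "--query-gpu=index,utilization.gpu,memory.used,memory.total",
    "--format=csv,noheader,nounits",
]


@dataclass
class GpuStats:
    index: int
    util_pct: int
    mem_used_mib: int
    mem_total_mib: int

    @property
    def mem_fraction(self) -> float:
        return self.mem_used_mib / self.mem_total_mib


def parse_gpu_stats(csv_text: str) -> list[GpuStats]:
    """Parse nvidia-smi CSV output (assumes numeric fields; some GPUs report [N/A])."""
    stats = []
    for line in csv_text.strip().splitlines():
        index, util, used, total = (field.strip() for field in line.split(","))
        stats.append(GpuStats(int(index), int(util), int(used), int(total)))
    return stats


def busy_gpus(stats: list[GpuStats], mem_threshold: float = 0.9) -> list[int]:
    """Indices of GPUs whose memory use is at or above the threshold fraction."""
    return [s.index for s in stats if s.mem_fraction >= mem_threshold]


def query_gpus() -> list[GpuStats]:
    """Run nvidia-smi on the host and parse its output."""
    result = subprocess.run(QUERY, capture_output=True, text=True, check=True)
    return parse_gpu_stats(result.stdout)


if __name__ == "__main__":
    try:
        stats = query_gpus()
    except (FileNotFoundError, subprocess.CalledProcessError):
        print("nvidia-smi is not available on this host")
    else:
        for s in stats:
            print(f"GPU {s.index}: {s.util_pct}% util, {s.mem_used_mib}/{s.mem_total_mib} MiB")
        print("near memory limit:", busy_gpus(stats))
```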

Advantages and Disadvantages of Sharing GPUs in Docker Containers

Advantages:

- Optimized Resource Utilization: Sharing GPUs among containers keeps expensive hardware busy, which can reduce the overall cost of running GPU-intensive tasks like AI/ML.

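- Isolation: Docker isolates each container's processes, filesystem, and dependencies, so workloads with conflicting library versions can run side by side on the same GPU. This does not isolate GPU memory or compute, however; containers on the same GPU still compete for them.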

Disadvantages:

- Performance Drops: If several containers require heavy GPU usage simultaneously, it can lead to performance degradation.

- Complex Setup: Sharing a GPU across containers requires careful configuration and monitoring to avoid resource contention.

Renting GPUs and configuring Docker containers in GPU cloud

Renting GPUs in the cloud is now a common choice for individuals and companies that want to use GPUs without a large up-front investment. GPU cloud providers offer different types of GPU instances, so users can pick the one that best fits their needs.

Can Docker containers share a GPU? Certainly, but if security is a concern, renting GPUs and running your Docker containers in the GPU cloud can be a great solution. If you prefer not to use local resources or rent GPU instances, you can also try Novita AI Serverless AI, which makes deployment even simpler.

Setting up Docker containers on these rented GPU instances is usually easy. Most GPU cloud providers have ready-made Docker images or tools. These help connect your Docker containers to the rented GPU resources. This way, you can quickly set up and run your AI and ML tasks in the cloud. You can enjoy the benefits of cloud computing, like flexibility and saving money.

Benefits you can get by renting in GPU cloud

Renting GPUs in the cloud has many benefits, starting with cost savings. You only pay for the GPU resources you actually use, which can make cloud renting cheaper than buying and maintaining expensive hardware, especially when your GPU usage is intermittent.
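Whether renting is actually cheaper depends on how many hours you use the GPU. A simple break-even estimate divides the hardware purchase price by the hourly saving from not renting. The Python sketch below uses a hypothetical purchase price, rental rate, and running cost for owned hardware (power and similar); all numbers are placeholders, and the model ignores depreciation, resale value, and maintenance.

```python
def break_even_hours(purchase_cost: float, rental_rate: float,
                     own_hourly_cost: float = 0.0) -> float:
    """Hours of use after which buying becomes cheaper than renting.

    purchase_cost:   one-off hardware price (hypothetical)
    rental_rate:     cloud price per GPU-hour (hypothetical)
    own_hourly_cost: running cost per hour of owned hardware, e.g. power (hypothetical)
    """
    hourly_saving = rental_rate - own_hourly_cost
    if hourly_saving <= 0:
        # Owning never pays off if running it costs as much as renting.
        return float("inf")
    return purchase_cost / hourly_saving


if __name__ == "__main__":
    # Placeholder numbers for illustration only.
    hours = break_even_hours(purchase_cost=10_000, rental_rate=2.0, own_hourly_cost=0.5)
    print(f"Break-even after about {hours:,.0f} GPU-hours")
```

Below the break-even point, paying per hour is the cheaper option, which is the situation the pay-as-you-go argument above describes.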

Another big plus is scalability. You can easily increase or decrease the amount of GPU power you need. Whether you are training one model or doing big experiments, you can change the resources. This helps you get the best performance while saving money.

Also, GPU cloud platforms make deployment easier. They usually have ready-to-use setups. This makes it simpler to set up and manage your AI and machine learning (ML) tasks.

Optimize AI Performance with Novita AI GPUs

Novita AI GPU instances are designed to speed up AI and ML operations. Paired with a properly configured Docker setup, they become a powerful asset for achieving strong results in your AI projects. On Novita AI GPU instances you can set up your own Docker container settings, and containers generally run on dedicated rather than shared GPUs, for security and optimal performance.

These GPUs come with high-speed memory and specialized cores for AI tasks, which greatly improve training and inference speeds. Because they fit easily into a Docker environment, you can use their full power while keeping the portability and reproducibility that containerization provides.

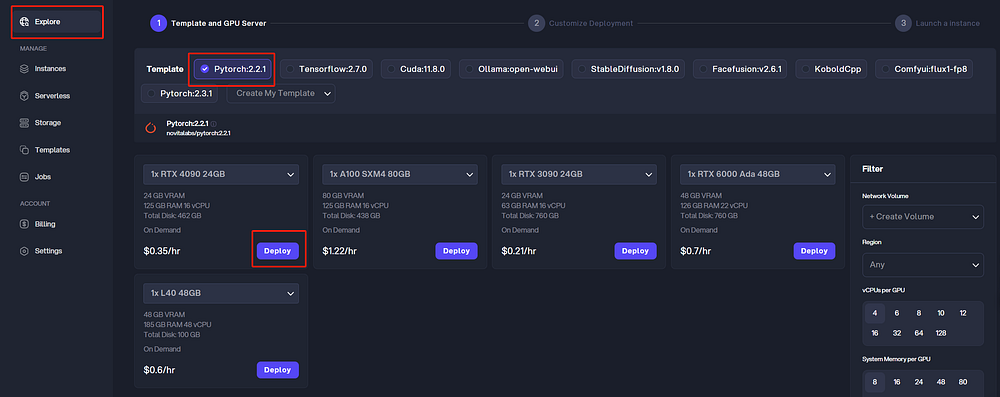

How to Begin Your Journey with Novita AI

- STEP 1: Create a new instance based on your own resource requirements

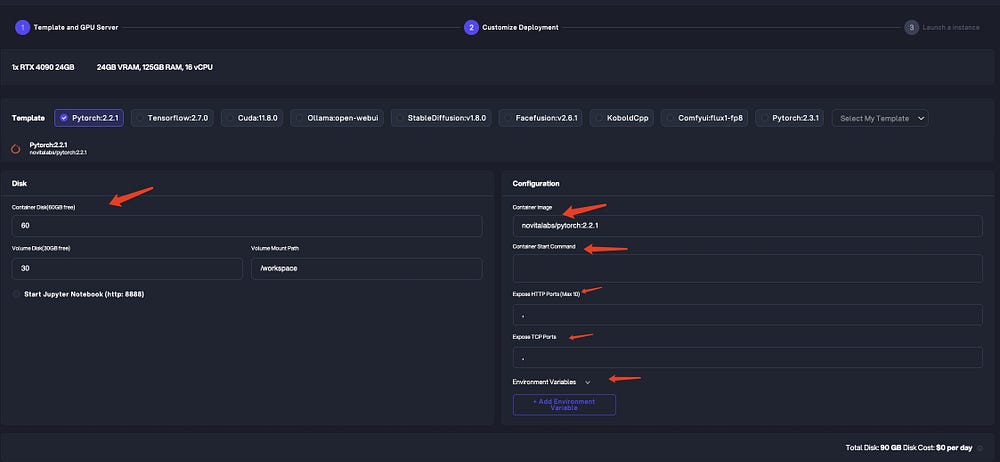

- STEP 2: Configure the relevant parameters

Key Features of Novita AI GPU Instances

Novita AI GPU instances are designed to provide strong performance for AI workloads. They use recent NVIDIA GPUs, giving you access to advanced computing capabilities.

Here are some important features of Novita AI GPU instances that make them perfect for AI and ML:

- Cost-Effectiveness: Save up to 50% on cloud costs, great for startups and research institutions optimizing their budget.

- Scalability: Easily scale GPU resources for large-scale AI projects.

- Instant Deployment: Quickly deploy optimized Pods for AI tasks, boosting productivity.

- Customizable Templates: Access pre-configured templates for PyTorch and TensorFlow.

- High-Performance Hardware: Leverage top-tier NVIDIA GPUs like the A100 SXM and RTX 4090 for smooth AI model training.

Conclusion

Docker containers are changing the way we handle AI and ML tasks. Can Docker Containers Share a GPU? Absolutely: with the right setup, they make it easy to share the GPUs that speed up these tasks, and they bring flexibility and scalability to managing GPU resources. When sharing GPUs, security and resource management are key considerations, and performance needs tuning so that one workload does not starve another. Tools like Novita AI GPUs and the right Docker setup help here, and renting GPUs in the cloud is a convenient way to improve AI performance. Make the most of Docker containers and GPU sharing to boost your AI and ML projects.

Frequently Asked Questions

Can I share my docker container?

Yes, but the usual approach is to share the Docker image, for example by pushing it to a registry such as Docker Hub, rather than the running container itself.

Do docker containers share RAM?

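Yes. Docker containers use the host system's RAM through the shared operating system kernel, so memory is used efficiently without full hardware virtualization. By default a container can use as much memory as the host allows; you can cap it with the --memory option of docker run.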

Can docker containers share libraries?

Yes. Docker containers can share libraries and data through Docker volumes and bind mounts, which lets several containers work with the same files.

Why is Docker-in-Docker not recommended?

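Docker-in-Docker is generally discouraged because the outer container must run in privileged mode, which is a security risk, and nested Docker daemons can cause storage and caching problems. A common alternative is to bind-mount the host's Docker socket (/var/run/docker.sock) into the container, but this gives the container full control of the host's Docker daemon, so use it only with trusted workloads.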

Can multiple Docker containers use the same GPU simultaneously?

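Yes. Several containers can be given access to the same GPU, for example by starting each one with --gpus device=0. Because they share the GPU's memory and compute, performance can drop when several are busy at once, which is why efficient resource management matters.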

Originally published at Novita AI

Novita AI is your comprehensive cloud platform designed to fuel your AI aspirations. With integrated APIs, serverless computing, and GPU instances, we provide the cost-effective tools essential for your success. Simplify your infrastructure needs and start with zero cost — transform your AI vision into reality with ease and efficiency.
