Building Strong LLM Infrastructure for Efficiency

Optimize LLM infrastructure for maximum efficiency. Learn how to streamline processes and improve performance on our blog.

Key Highlights

- Trained on vast datasets, LLMs use deep learning to understand content and perform tasks such as writing code, translating text, and powering chatbots.

- LLMs require extensive training and fine-tuning processes before delivering reliable and useful results.

- The infrastructure underpinning these powerful LLMs is crucial to their performance, scalability, and accessibility, requiring careful optimization and engineering.

- Efficient LLM infrastructure involves considerations around hardware, software, data management, model training, and deployment, all of which must be carefully balanced for optimal results.

Introduction

The rise of large language models (LLMs) has reshaped artificial intelligence and driven major progress in natural language processing (NLP). To work well, LLMs need infrastructure that can handle their heavy compute and data demands. This post looks at the key elements of LLM infrastructure, explores the latest advancements, and offers insights on how organizations can position themselves to benefit from AI powered by large language models.

Understanding LLM Infrastructure

What is LLM Infrastructure?

LLM infrastructure encompasses the hardware, software, network, and API resources required to train, deploy, and maintain large language models. This includes high-performance computing clusters, specialized storage solutions, software frameworks, and networking components. The goal is to create an environment that can handle the immense computational load and data throughput that LLMs demand.

Key Components of LLM Infrastructure

1. High-Performance Computing and Network

- GPUs power LLM infrastructure for parallel processing, vital for training and deploying LLMs.

- High-bandwidth networking keeps data moving quickly between compute nodes and storage during training and serving.

2. Software System Architecture

- Distributed training and inference frameworks

- Data management and preprocessing tools

- Model optimization and deployment tools

3. Training and Deployment Processes

- Large-scale data collection and preprocessing

- Efficient model training techniques

- Model compression and deployment optimization

Why is Robust LLM Infrastructure Important?

Scalability

A well-designed LLM infrastructure efficiently handles growing workloads, data volumes, and user demands without sacrificing performance. It must scale to accommodate increased requests and larger models as demands and data volume expand.

High Performance

Efficient infrastructure ensures low latency and fast responses, enhancing user experience, especially in real-time applications and queries.

Continuous Learning

Good infrastructure makes it practical to keep improving the model with user feedback and new data, so that it stays accurate in a changing environment.

Flexibility

A modular and extensible LLM infrastructure allows for easy integration of new models, services, and functionalities. This helps organizations adapt quickly to changing requirements, new use cases, and evolving language model technologies.

Reliability

Robust infrastructure provides redundancy and fault tolerance, reducing the risk of system failures and downtime.

Cost-Efficiency

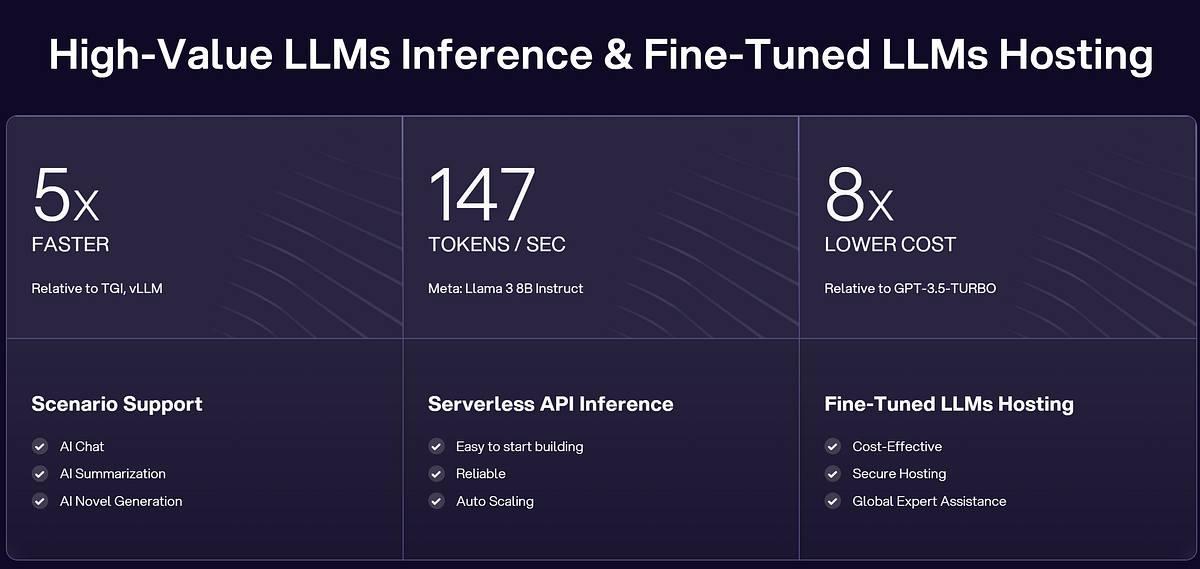

Efficient infrastructure optimizes resource utilization, reducing operational costs while maintaining high performance. Novita AI is one option here, offering a cost-efficient LLM API service for developers.

Top Examples of LLM Infrastructure

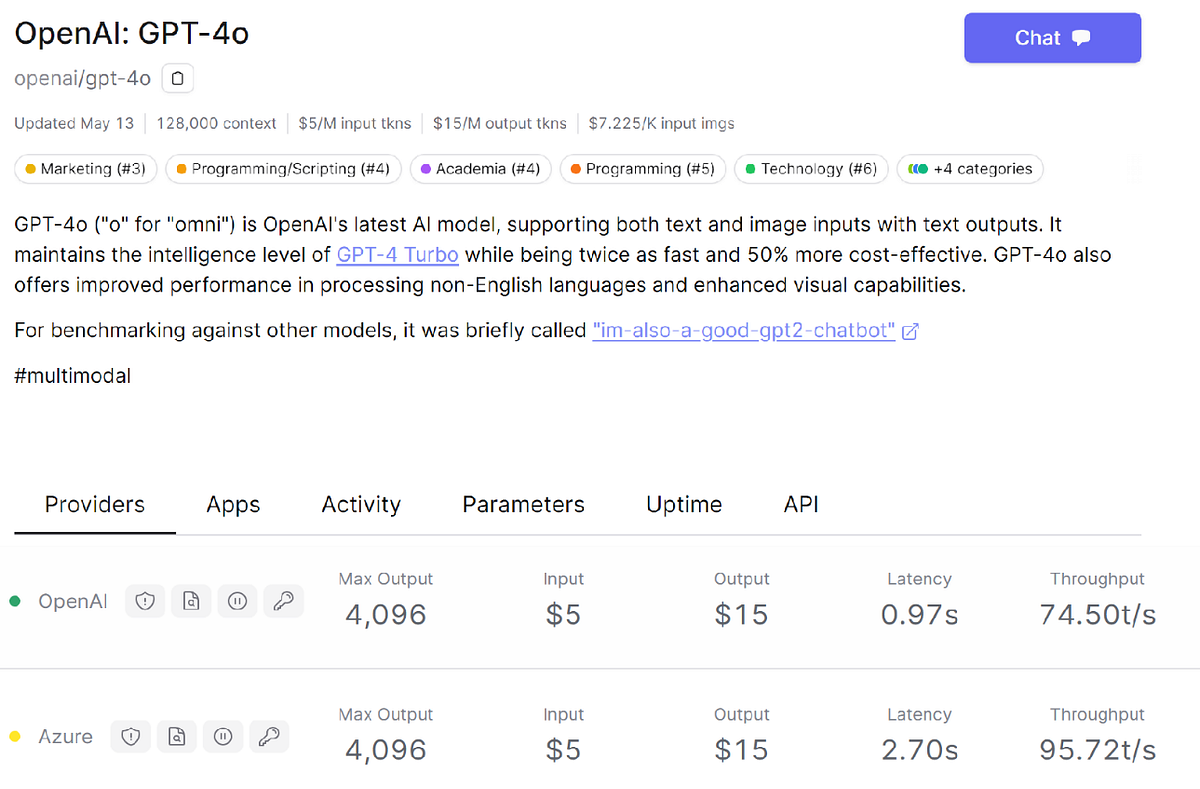

OpenAI

OpenAI’s GPT models are among the leading large language models. They run on thousands of GPUs spread across multiple data centers and use techniques such as model parallelism and mixed-precision training to improve performance and resource efficiency.

Features

- An API that integrates LLMs into applications

- High availability and scalability

- Strong compute capability, though at a high cost

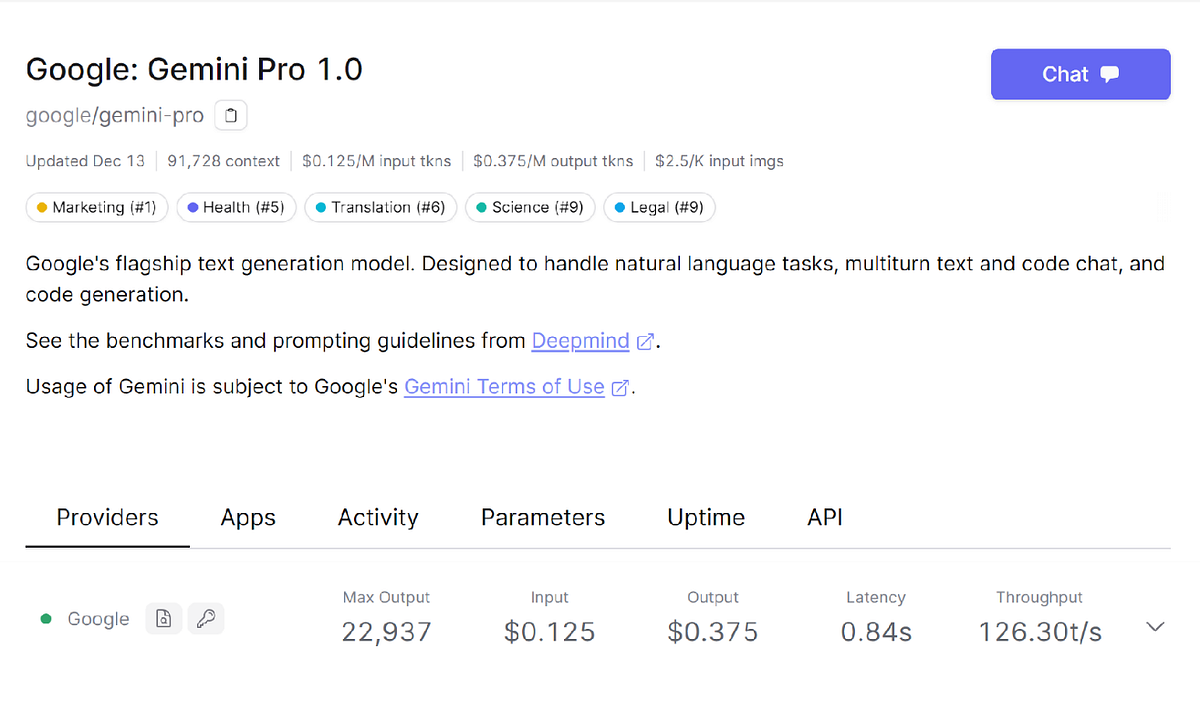

Google AI

Google’s Gemini is a significant LLM, backed by infrastructure for large-scale training and high-throughput storage for massive datasets. Google’s AI services offer scalability and flexibility for deploying its models in diverse applications.

Features

- Comprehensive machine learning services, including model training, deployment, and monitoring

- Support for frameworks such as TensorFlow and PyTorch

- A selection of models available for use

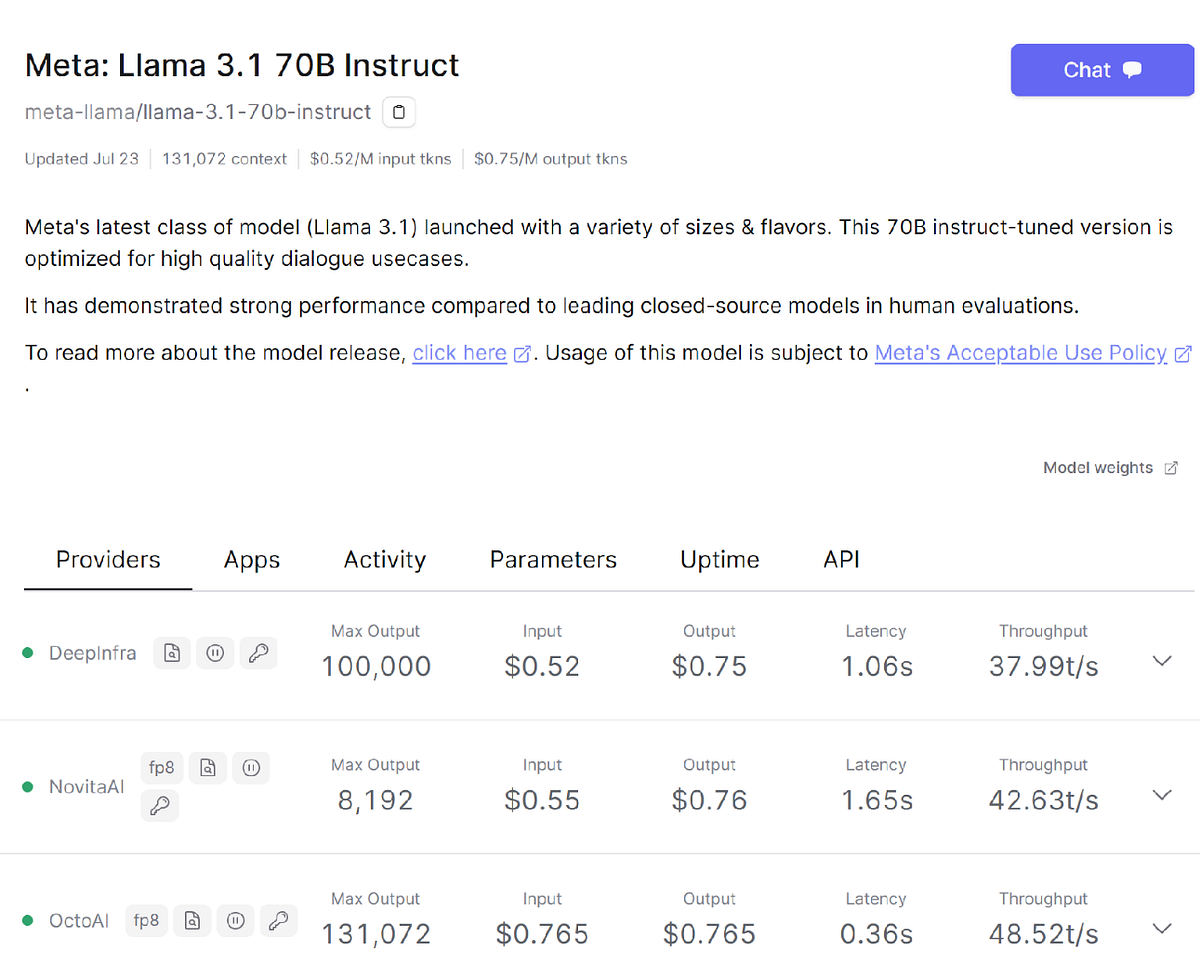

Meta

Meta provides a comprehensive LLM infrastructure that enables the effective development, training, and deployment of LLMs with large parameter counts.

Features

- An open-source library that supports various pre-trained models

- Extensive documentation and community support

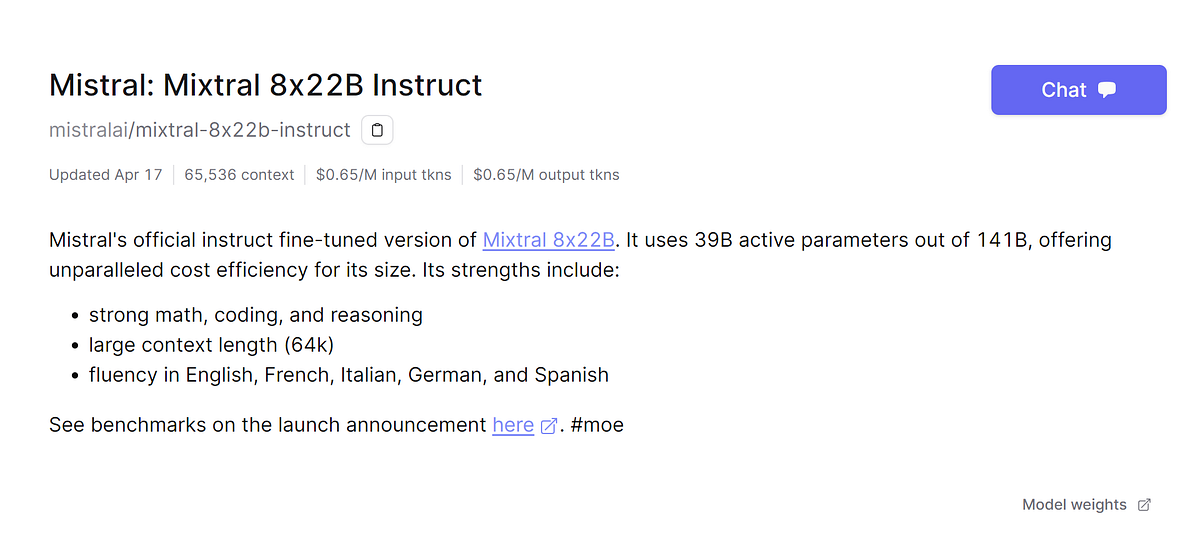

Mistral

Mistral AI focuses on developing advanced AI models, especially in NLP. Their models are used in chatbots, content generation, text analysis, and more.

Features

- Supports integration of various LLMs

- Customizable functions that stay flexible across applications

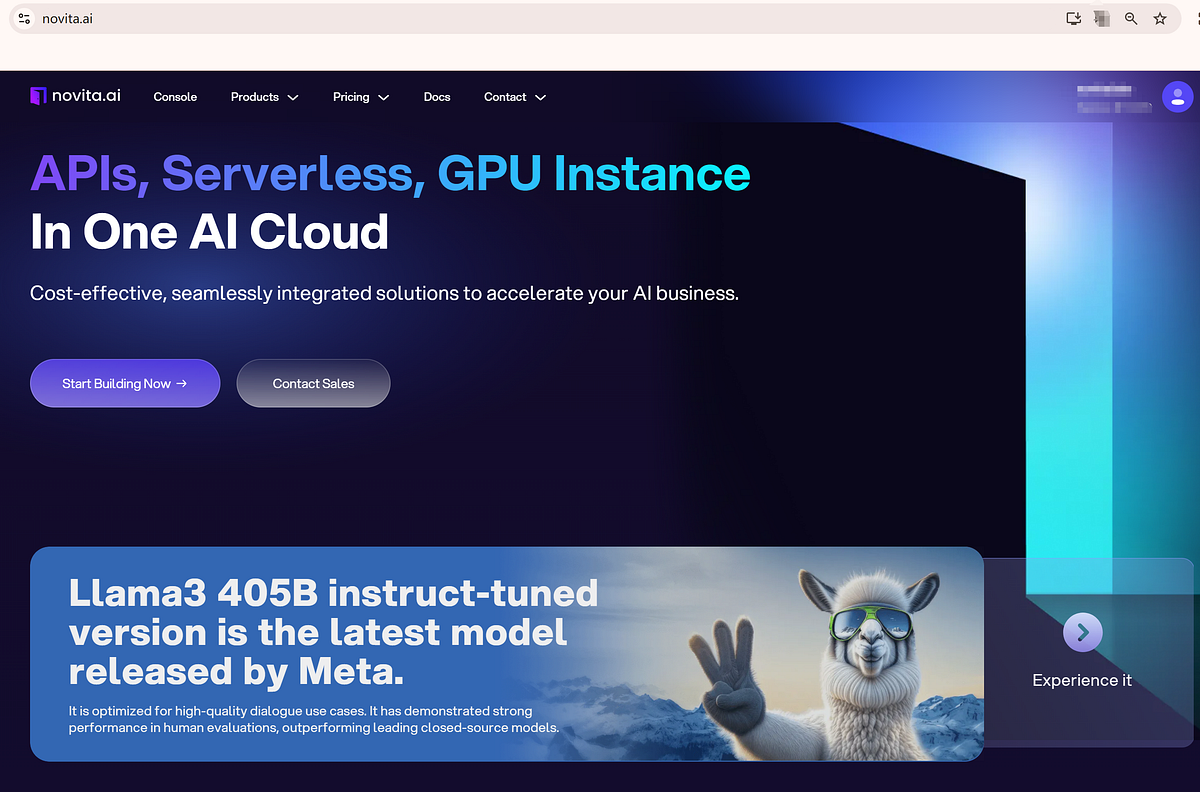

Novita AI

Novita AI provides LLM API services that let developers choose among different models and services to meet the specific requirements of their application. Novita AI also works to make the latest models available.

Features

- Easily integrated API

- A variety of LLMs to choose from

- Custom and fine-tuned model services

- Cost-effective with affordable pricing for businesses

- Auto Scaling

Process for Building LLM Infrastructure

1. Define Objectives

Identify the specific use cases for the LLM, such as customer support, content generation, or data analysis.

2. Optimize Resource Utilization

Efficient resource use is key for cost-effective LLM infrastructure. Optimize GPU, storage, and network usage to boost performance and cut costs. Techniques like mixed precision training and dynamic allocation can help achieve this.
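To make the effect of numeric precision concrete, here is a minimal sketch that estimates how much memory the model weights alone occupy at different precisions. The 8-billion-parameter figure is a hypothetical example, and the estimate deliberately ignores gradients, optimizer state, and activations, which add substantially more during training.

```python
# Bytes needed to store one parameter at each numeric precision.
BYTES_PER_PARAM = {"fp32": 4, "fp16": 2, "bf16": 2, "int8": 1}


def weight_memory_gib(num_params: int, precision: str) -> float:
    """Return the memory needed for the model weights alone, in GiB."""
    return num_params * BYTES_PER_PARAM[precision] / 1024**3


if __name__ == "__main__":
    params = 8_000_000_000  # hypothetical 8B-parameter model
    for precision in ("fp32", "fp16", "int8"):
        print(f"{precision}: {weight_memory_gib(params, precision):.1f} GiB")
```

Halving the bytes per parameter halves the weight footprint, which is one reason lower-precision formats can reduce the number of GPUs needed to hold a model.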

3. Model Selection and Optimization

Choose a model architecture that fits your needs (for example, a model from the Llama 3.1 family). Fine-tune the model to improve performance on specific tasks.

4. Infrastructure Design

Use an orchestration platform (like Kubernetes) to manage resources and load balancing. Consider using cloud service providers for elastic scaling.
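As a rough illustration of what load balancing means for model serving, the sketch below routes each request to the replica with the fewest requests in flight. The `Replica` and `LeastLoadedBalancer` names are hypothetical, and this is not how Kubernetes itself is implemented; in practice the orchestration layer or a dedicated proxy handles this for you.

```python
from dataclasses import dataclass


@dataclass
class Replica:
    name: str
    in_flight: int = 0  # requests currently being served


class LeastLoadedBalancer:
    """Route each request to the replica with the fewest in-flight requests."""

    def __init__(self, replicas: list[Replica]) -> None:
        if not replicas:
            raise ValueError("at least one replica is required")
        self.replicas = replicas

    def acquire(self) -> Replica:
        # min() returns the first replica on ties, so the choice is deterministic.
        replica = min(self.replicas, key=lambda r: r.in_flight)
        replica.in_flight += 1
        return replica

    def release(self, replica: Replica) -> None:
        # Call this when the request finishes so the replica can take more work.
        replica.in_flight = max(0, replica.in_flight - 1)
```

Least-loaded routing tends to suit LLM serving better than plain round-robin because generation time varies a lot from one request to the next.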

5. Performance Monitoring

Implement monitoring tools to track model performance and response times. Regularly evaluate the quality of model outputs and make necessary adjustments.
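A minimal sketch of response-time tracking, using only the Python standard library, might look like the following. The `LatencyMonitor` class and its window size are assumptions for illustration; a production setup would more likely export these numbers to a dedicated metrics system.

```python
import statistics
from collections import deque


class LatencyMonitor:
    """Keep a sliding window of recent response times and summarize them."""

    def __init__(self, window: int = 1000) -> None:
        # Older samples fall off automatically once the window is full.
        self.samples = deque(maxlen=window)

    def record(self, seconds: float) -> None:
        self.samples.append(seconds)

    def summary(self) -> dict:
        if len(self.samples) < 2:
            raise ValueError("need at least two samples")
        # quantiles(n=100) returns the 99 cut points between percentiles.
        cuts = statistics.quantiles(self.samples, n=100, method="inclusive")
        return {"p50": cuts[49], "p95": cuts[94], "max": max(self.samples)}
```

Tracking the 95th percentile alongside the median helps surface slow outliers that an average would hide.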

6. Maintain Security

Protect APIs and data transmission using encryption and authentication mechanisms. Conduct security audits to prevent potential attacks and data breaches.
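On the authentication side, one common pattern is to issue random API keys, store only a hash of each key, and compare in constant time. The sketch below assumes that pattern and uses hypothetical function names; it covers key checking only, not transport encryption, which is normally handled by TLS.

```python
import hashlib
import hmac
import secrets


def new_api_key() -> str:
    """Generate a random, URL-safe API key to hand to a client."""
    return secrets.token_urlsafe(32)


def hash_key(api_key: str) -> str:
    """Return a SHA-256 digest so the server never stores the raw key."""
    return hashlib.sha256(api_key.encode("utf-8")).hexdigest()


def is_authorized(presented_key: str, stored_digest: str) -> bool:
    """Compare digests in constant time to avoid leaking timing information."""
    return hmac.compare_digest(hash_key(presented_key), stored_digest)
```

In use, the server would call `hash_key` once when the key is issued, persist the digest, and call `is_authorized` on every incoming request.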

Efficient Choice: Integrate LLM API with Novita AI

Carrying out all of the steps above is demanding. An alternative is to choose an LLM service platform that already has a strong infrastructure. Novita AI is a good choice for API integration and easy access. Here is a step-by-step guide to try it out.

Step-by-step Guide with Novita AI LLM API

- Step 1: Visit the Novita AI website and create an account.

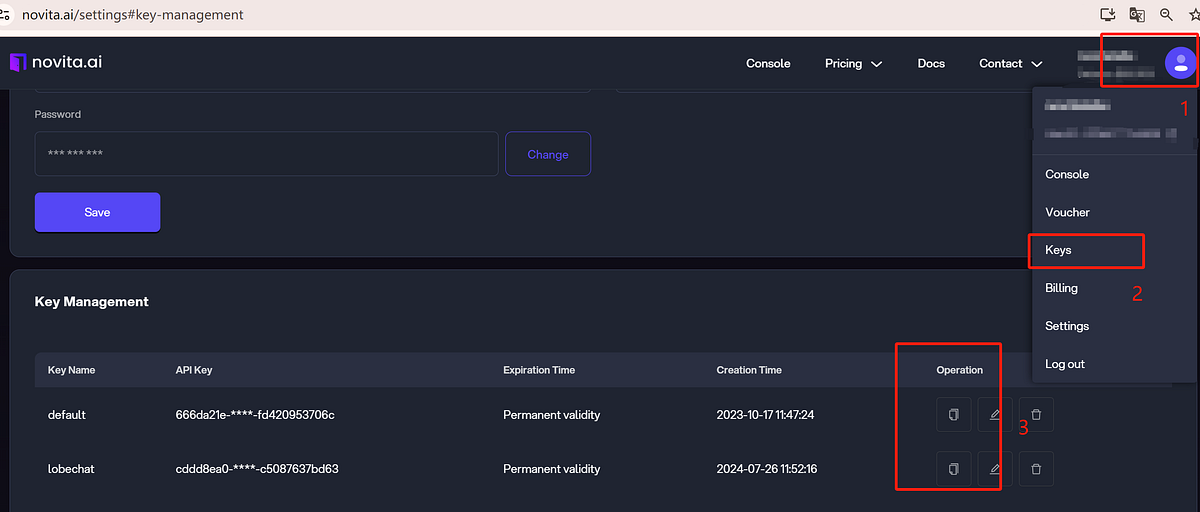

- Step 2: Navigate to “LLM API Key” and obtain your API key, as shown in the following image.

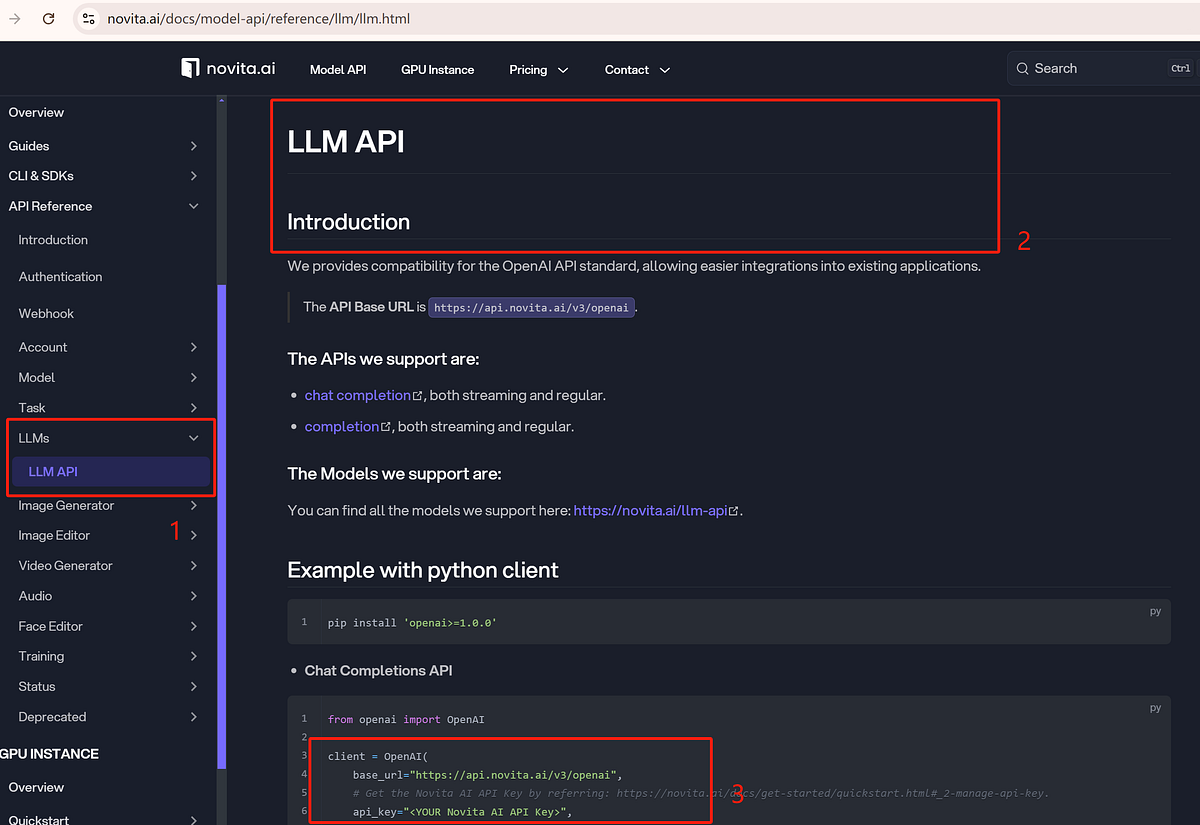

- Step 3: Navigate to the API Reference and find the LLM API under “LLMs”. Use your API key to make an API request, adjusting the parameters according to your needs.

- Step 4: Integrate the API into your existing project backend so that responses come back quickly. Before launching your project, check that everything works as expected. Here is a code example.

Example with curl client
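In Python, a request of this kind might be assembled roughly as follows. This is a sketch under stated assumptions: the endpoint URL, model name, and payload fields are placeholders in the style of a typical chat-completion API, not values taken from Novita AI's documentation, so substitute the real ones from the API Reference.

```python
import json
import urllib.request

# Placeholder values: replace with the endpoint and model from the API Reference.
API_URL = "https://api.example.com/v1/chat/completions"  # hypothetical endpoint
MODEL = "example-model"  # hypothetical model name


def build_request(api_key: str, prompt: str, max_tokens: int = 256) -> urllib.request.Request:
    """Assemble (but do not send) a JSON POST request with bearer-token auth."""
    payload = {
        "model": MODEL,
        "messages": [{"role": "user", "content": prompt}],
        "max_tokens": max_tokens,
    }
    return urllib.request.Request(
        API_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


def send(request: urllib.request.Request, timeout: float = 30.0) -> dict:
    """Send the request and decode the JSON response body."""
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return json.load(response)
```

Keeping request construction separate from sending makes it easy to inspect or unit-test the payload before any network call is made. Read the API key from an environment variable or secret store rather than hard-coding it.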

Future Trends in LLM Infrastructure

Federated Learning

Federated learning is an emerging paradigm that allows LLMs to be trained across multiple decentralized devices or servers while preserving data privacy. This approach can reduce the need for central data storage and enhance data security.
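The core aggregation step can be sketched with federated averaging, a widely used approach in which a server combines client updates weighted by how much data each client holds. The example below is a toy version with plain Python lists standing in for model parameters; the function name is hypothetical, and real systems add secure aggregation, client sampling, and many training rounds.

```python
def federated_average(
    client_weights: list[list[float]], client_sizes: list[int]
) -> list[float]:
    """Combine client parameter vectors, weighting each by its local dataset size."""
    if not client_weights or len(client_weights) != len(client_sizes):
        raise ValueError("need one dataset size per client")
    total = sum(client_sizes)
    dim = len(client_weights[0])
    averaged = [0.0] * dim
    for weights, size in zip(client_weights, client_sizes):
        for i, value in enumerate(weights):
            # A client with more local data contributes proportionally more.
            averaged[i] += value * size / total
    return averaged
```

Only the parameter vectors leave each client here; the raw training data stays where it was collected, which is the privacy property the approach is built around.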

Integrated ML Pipelines

LLM infrastructure will likely become more tightly integrated with the rest of the machine learning (ML) pipeline, including data processing, feature engineering, and model deployment. This could lead to the development of end-to-end ML platforms that seamlessly handle the entire lifecycle of LLM-powered applications.

Automated Model Management

As the number of LLMs and their use cases continues to grow, there will be a need for automated tools and frameworks to manage the lifecycle of LLMs, including versioning, monitoring, and deployment.

Conclusion

Building robust LLM infrastructure is a complex challenge involving hardware, software, networking, and management. Following best practices and adopting emerging technologies can help developers create efficient, scalable LLM systems. As AI advances, the need for strong LLM infrastructure grows, so developers and organizations should treat it as a priority. Applying these insights in your development practices will help your LLM infrastructure meet the demands of modern AI applications in this rapidly evolving field.

FAQs

How does LLM training work?

LLM training involves feeding extensive text data into the model for unsupervised learning. The neural network adjusts its parameters to learn patterns, grammar, and context, reducing the difference between its predictions and the actual text.

Is an LLM the same as a chatbot?

No. LLMs are the underlying technology, while chatbots are one application that uses these models to converse with users. Many chatbots rely on LLMs for natural conversations, but not all chatbots need LLMs.

How does LLM inference work?

LLM inference involves taking a trained model and using it to generate predictions or responses based on new input data. The model processes the input through its neural network layers, applying learned patterns and weights to produce coherent and contextually relevant outputs.

How are LLMs pre-trained?

LLMs are pre-trained on a large corpus of text data through unsupervised learning. During this phase, the model learns to predict the next word and, in doing so, picks up context and semantic relationships without explicit labels.
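The idea that the text supplies its own labels can be shown with a deliberately tiny stand-in: a word-count model that predicts the most frequent follower of each word. This is only an illustration of the next-word objective. Real LLMs use neural networks over tokens rather than count tables, and the function names here are hypothetical.

```python
from collections import Counter, defaultdict
from typing import Optional


def train_bigram(text: str) -> dict:
    """Count, for every word, which words follow it in the raw text."""
    words = text.lower().split()
    counts = defaultdict(Counter)
    # Each (current word, next word) pair is a training example whose label
    # comes from the text itself, so no manual annotation is needed.
    for current, following in zip(words, words[1:]):
        counts[current][following] += 1
    return counts


def predict_next(counts: dict, word: str) -> Optional[str]:
    """Return the most frequent follower of `word`, or None if it was never seen."""
    followers = counts.get(word.lower())
    return followers.most_common(1)[0][0] if followers else None
```

Calling `predict_next(train_bigram(corpus), "the")` returns whichever word most often followed "the" in `corpus`, learned without anyone labeling a single example.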

Novita AI is an all-in-one cloud platform that empowers your AI ambitions. Integrated APIs, serverless, GPU instances: the cost-effective tools you need. Eliminate infrastructure, start free, and make your AI vision a reality.
