Boost Your GPU Utilization with These Tips

Key Highlights

- GPU utilization refers to the percentage of a graphics card’s processing power being used at a particular time. It is important for optimizing performance and resource allocation in GPU-intensive tasks.

- Monitoring GPU utilization can help identify bottlenecks, refine performance, save costs in cloud environments, and enhance workflows.

- Practical tips to enhance GPU utilization include optimizing code for better GPU usage and utilizing tools and techniques for monitoring GPU performance.

- Advanced strategies for maximizing GPU resources include leveraging multi-GPU setups and using GPUs effectively in cloud environments. Novita AI GPU Instance offers a pay-as-you-go GPU cloud service, so you pay for what you use instead of worrying about keeping your own hardware busy.

Introduction

GPUs are essential for speeding up highly parallel work such as graphics rendering and large numerical computations, which is why they are popular in fields such as machine learning. Monitoring GPU usage is crucial for efficiency, cost savings, and project performance. This post explains why tracking GPU usage matters, how it affects applications and workflows, which issues commonly hold GPUs back, and which tips and advanced strategies help you use GPUs effectively in data science or machine learning projects.

What is GPU Utilization?

Understanding how much of your GPU is in use, that is, its utilization, matters if you want your system to run as smoothly and quickly as possible.

Defining GPU Utilization in Modern Computing

GPU utilization describes how busy a graphics card is with actual processing. Tools such as nvidia-smi report it as the percentage of time, over a recent sampling window, during which the GPU was executing at least one kernel.

In practice, monitoring covers more than one number: compute utilization, memory usage, and how intensive the running workload is. High utilization indicates that the graphics card is actively performing tasks rather than idling.
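As a rough illustration of tracking these numbers, the sketch below reads compute utilization and memory usage from nvidia-smi and turns them into percentages. The function names are hypothetical, the sample string stands in for output from an imagined two-GPU machine, and the parser assumes every field is numeric (nvidia-smi can report values such as N/A on some hardware, which this sketch does not handle).

```python
import subprocess

QUERY_FIELDS = "utilization.gpu,memory.used,memory.total"


def parse_gpu_stats(csv_text):
    """Parse output of `nvidia-smi --query-gpu=... --format=csv,noheader,nounits`."""
    stats = []
    for line in csv_text.strip().splitlines():
        util, mem_used, mem_total = (float(field.strip()) for field in line.split(","))
        stats.append({
            "gpu_util_pct": util,                          # % of time a kernel was running
            "mem_used_mib": mem_used,
            "mem_total_mib": mem_total,
            "mem_util_pct": 100.0 * mem_used / mem_total,  # share of memory in use
        })
    return stats


def read_gpu_stats():
    """Run nvidia-smi and return one dict per GPU (needs an NVIDIA driver installed)."""
    result = subprocess.run(
        ["nvidia-smi", f"--query-gpu={QUERY_FIELDS}", "--format=csv,noheader,nounits"],
        capture_output=True, text=True, check=True,
    )
    return parse_gpu_stats(result.stdout)


# Captured-style sample so the parsing logic can be tried without a GPU.
sample = "87, 10240, 16384\n12, 512, 16384\n"
for index, gpu in enumerate(parse_gpu_stats(sample)):
    print(f"GPU {index}: {gpu['gpu_util_pct']:.0f}% busy, "
          f"{gpu['mem_util_pct']:.1f}% of memory used")
```

On a machine with an NVIDIA GPU, calling read_gpu_stats() in a loop with a short sleep between calls gives a simple utilization log.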

Efficient GPU usage is crucial for demanding applications like video games, image rendering, and deep learning. Optimizing GPU performance ensures smooth and fast operations.

The Impact of GPU Performance on Applications and Workflows

Using GPU resources wisely makes a real difference in how fast and smoothly applications and workflows run. When GPUs are kept busy with useful work, machine learning and deep learning tasks finish much sooner. Results arrive faster, which speeds up decisions and makes better use of the compute you are paying for. For businesses that rely on GPUs for AI workloads, this gain in speed and efficiency can noticeably improve how well their applications and workflows perform.

Common Challenges Affecting GPU Efficiency

Several problems can limit a GPU’s effectiveness and speed. A common one is a CPU bottleneck, where the CPU cannot supply work fast enough and GPU utilization suffers.

Identifying Bottlenecks in GPU Processing

Finding out where GPU processing slows down is crucial for optimizing performance:

- CPU bottleneck: if the CPU cannot prepare or move data fast enough, the GPU sits idle. Improve CPU-side efficiency or data movement to prevent this.

- Memory bottlenecks: slow or scattered memory access makes the GPU wait. Optimize memory access patterns to reduce wait times.

- Inefficient parallelization: work that is poorly parallelized leaves parts of the GPU underutilized.

- Low compute intensity: workloads that do little computation per unit of data leave GPU capacity unused.

- Synchronization and blocking operations: these can halt the GPU, so reducing or restructuring them improves utilization.
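One way to check for the first item, a CPU or input-pipeline bottleneck, is to measure how much wall-clock time a training loop spends waiting for the next batch compared with processing it. The sketch below is a minimal, framework-free version: input_wait_fraction, slow_loader, and fast_step are hypothetical names, and the sleeps stand in for real loading and compute. With a real GPU framework, kernels are typically launched asynchronously, so the step would need to synchronize with the device for these timings to be meaningful.

```python
import time


def input_wait_fraction(batches, train_step):
    """Return the fraction of wall-clock time spent waiting for input data.

    `batches` is any iterable of batches and `train_step` is a callable that
    processes one batch. Both are stand-ins for a real loader and training step.
    """
    wait_time = 0.0
    compute_time = 0.0
    iterator = iter(batches)
    while True:
        start = time.perf_counter()
        try:
            batch = next(iterator)   # time during which the GPU would sit idle
        except StopIteration:
            break
        loaded = time.perf_counter()
        train_step(batch)            # time spent doing useful work
        done = time.perf_counter()
        wait_time += loaded - start
        compute_time += done - loaded
    total = wait_time + compute_time
    return wait_time / total if total > 0 else 0.0


def slow_loader(num_batches, delay_s):
    """Simulated data loader that takes delay_s seconds per batch."""
    for index in range(num_batches):
        time.sleep(delay_s)
        yield index


def fast_step(batch):
    """Simulated training step that is much faster than the loader."""
    time.sleep(0.005)


fraction = input_wait_fraction(slow_loader(5, 0.05), fast_step)
print(f"{fraction:.0%} of the loop was spent waiting for data")
```

A high fraction suggests the loader, not the GPU, is the limiting factor, which points toward faster preprocessing, prefetching, or more loader workers.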

The Role of Memory Allocation in GPU Performance

Efficient GPU memory allocation is crucial for good performance. Allocating memory carefully can reduce power consumption, speed up processing, and lower the risk of errors such as running out of memory. Smart resource management, like creating resource pools, helps GPUs operate smoothly and cost-effectively. In cloud setups, monitoring GPU usage is also vital for saving costs and for scaling high-demand applications without disruption.

How to Enhance GPU Utilization?

Getting the most out of your GPU comes down to two things: adjusting how your code feeds work to the GPU and monitoring how the GPU responds. Here are some practical tips:

- Optimize your code: small changes in how a workload is set up, such as the batch size and how much work runs in parallel, can make a real difference to GPU usage.

- Monitor the GPU: tools like the NVIDIA System Management Interface (nvidia-smi) report utilization, memory usage, and other key details such as temperature and power draw.

- Experiment with batch sizes: when training models, try different batch sizes to find the sweet spot between keeping the GPU full of work and not running out of memory.
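The batch-size tip can be automated with a simple doubling search. The sketch below is only an illustration: find_max_batch_size and fake_step are hypothetical names, and the memory model (50 MiB per sample on a card with 8000 MiB free) is invented for the demo. It assumes the step raises MemoryError when a batch does not fit; real frameworks raise their own out-of-memory exceptions, which you would catch instead.

```python
def find_max_batch_size(try_batch, start=1, limit=4096):
    """Double the batch size until `try_batch` fails or `limit` is exceeded.

    `try_batch(batch_size)` should run one representative step and raise
    MemoryError if the batch does not fit. Returns the largest size that
    worked, or None if even `start` failed.
    """
    best = None
    size = start
    while size <= limit:
        try:
            try_batch(size)
        except MemoryError:
            break          # this size does not fit, keep the previous one
        best = size
        size *= 2
    return best


def fake_step(batch_size):
    """Simulated step: 50 MiB per sample on a card with 8000 MiB available."""
    if batch_size * 50 > 8000:
        raise MemoryError(f"batch of {batch_size} does not fit")


print(find_max_batch_size(fake_step))  # prints 128
```

The largest batch that fits is not always the fastest or the best for model quality, so it is worth measuring throughput and accuracy at a few sizes below that ceiling as well.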

Advanced Strategies for Maximizing GPU Resources

To get the best performance in deep learning and machine learning, it’s crucial to use GPU resources wisely. There are some smart ways you can do this.

Leveraging Multi-GPU Setups for Increased Performance

Using multiple GPUs is an effective way to speed up deep learning and machine learning projects. With more than one GPU, you can split the work so that different parts run at the same time on different devices. This raises the total processing power available and the rate at which data moves through the pipeline, so projects finish sooner.


To make this easier, frameworks such as TensorFlow and PyTorch include built-in support for working with several GPUs at once. For instance, TensorFlow provides MirroredStrategy, which spreads computation across the GPUs of one machine. PyTorch offers DistributedDataParallel, which lets you train models across many GPUs or even across several networked machines.
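Both tools are commonly used for synchronous data parallelism: each GPU processes a slice of the batch, and the resulting gradients are averaged before the model is updated. The sketch below illustrates only that idea in plain Python, with a one-parameter model and invented data. It is not how either framework is implemented, and the function names are hypothetical.

```python
def grad_mse(w, xs, ys):
    """Gradient of the mean squared error for the model y_hat = w * x."""
    return sum(2.0 * (w * x - y) * x for x, y in zip(xs, ys)) / len(xs)


def shard(items, num_replicas):
    """Split `items` into `num_replicas` equal, contiguous shards."""
    if len(items) % num_replicas != 0:
        raise ValueError("batch size must be divisible by the number of replicas")
    size = len(items) // num_replicas
    return [items[i * size:(i + 1) * size] for i in range(num_replicas)]


def data_parallel_grad(w, xs, ys, num_replicas):
    """Each replica computes a gradient on its own shard, then results are averaged."""
    local_grads = [
        grad_mse(w, x_shard, y_shard)
        for x_shard, y_shard in zip(shard(xs, num_replicas), shard(ys, num_replicas))
    ]
    return sum(local_grads) / num_replicas


xs = [1.0, 2.0, 3.0, 4.0]
ys = [2.0, 4.0, 6.0, 8.0]
print(grad_mse(0.5, xs, ys))               # single device: -22.5
print(data_parallel_grad(0.5, xs, ys, 2))  # two replicas:  -22.5
```

With equal-sized shards, the averaged gradient matches the single-device gradient, which is why splitting a batch across GPUs can speed up training without changing the update being computed.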

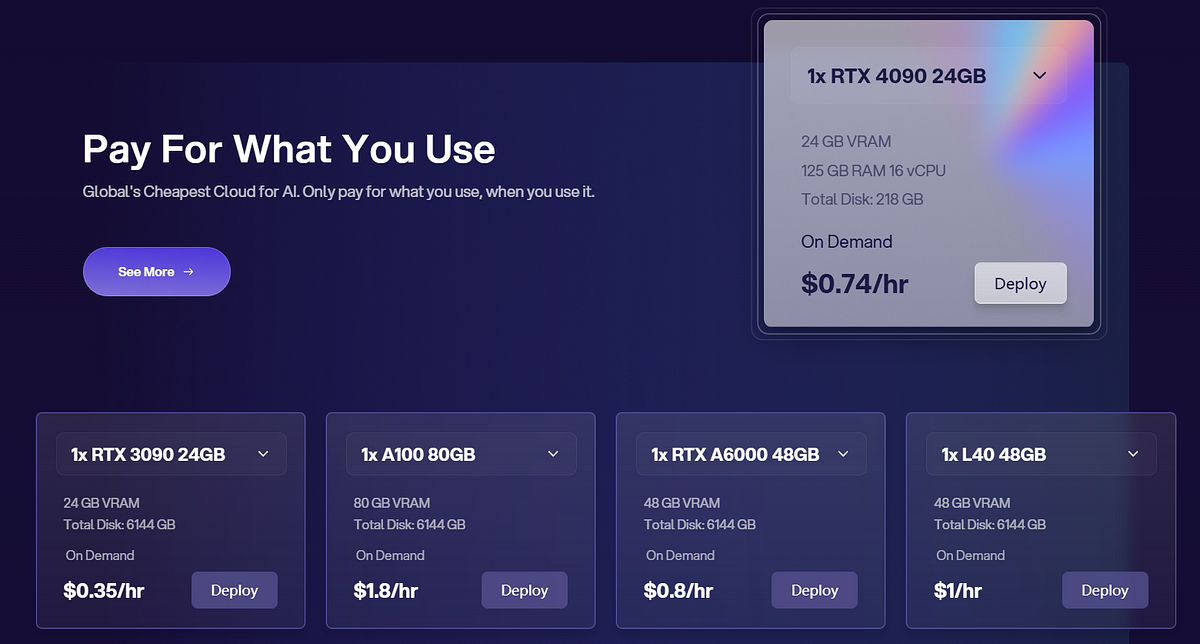

Effective Use of GPU in Cloud Environments

Running GPU workloads in the cloud can streamline workflows and improve computational efficiency for resource-intensive applications. Here are the key features and benefits of using Novita AI GPU Instance to manage GPU utilization:

- Scalability at Your Fingertips: One of the paramount advantages of GPU Cloud services is their inherent scalability. Unlike traditional on-premises setups, you can effortlessly scale up or down your GPU resources to match your project’s evolving needs. This means you can accommodate sudden spikes in demand or scale back during quieter periods, ensuring optimal resource allocation and preventing unnecessary expenses.

- Cost Efficiency: Migrating GPU workloads to the cloud often results in substantial cost savings. You pay only for what you use, eliminating the capital expenditure associated with acquiring and maintaining physical GPU infrastructure. Additionally, the ability to dynamically allocate and deallocate resources based on real-time demands further contributes to a more cost-effective model.

- Flexibility and Adaptability: Cloud platforms like Novita AI GPU Instance offer a range of GPU instance types, each tailored to specific workloads, from basic machine learning tasks to high-performance computing. This flexibility allows you to choose the right GPU configuration for your project, ensuring maximum efficiency without being locked into a one-size-fits-all solution.

- Real-Time Monitoring and Optimization: Effective GPU utilization management in the cloud is empowered by robust monitoring tools. These tools provide real-time visibility into GPU usage patterns, helping identify bottlenecks and areas for optimization. With this insight, you can fine-tune your configurations, adjust resource allocation dynamically, and prevent overprovisioning, thereby enhancing overall system efficiency.
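The link between utilization and cost in a pay-as-you-go model can be made concrete with a little arithmetic: the hourly price divided by average utilization gives the cost of each hour in which the GPU is actually busy. The function name and the 2.00 per hour price below are hypothetical and chosen only for illustration.

```python
def cost_per_busy_hour(hourly_price, avg_utilization_pct):
    """Price paid for each hour in which the GPU is actually doing work."""
    if not 0 < avg_utilization_pct <= 100:
        raise ValueError("average utilization must be in the range (0, 100]")
    return hourly_price * 100.0 / avg_utilization_pct


# Hypothetical instance billed at 2.00 per hour.
for utilization in (40, 80):
    cost = cost_per_busy_hour(2.00, utilization)
    print(f"{utilization}% utilization -> {cost:.2f} per busy GPU hour")
```

In this example, doubling average utilization from 40% to 80% halves the cost of each productive GPU hour, from 5.00 to 2.50.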

Conclusion

To get the most out of your GPU, apply the tips above: find and fix bottlenecks, tune your code, and monitor the results. Tools like the NVIDIA System Management Interface (nvidia-smi) show how hard your GPU is working and how much memory it is using. Using more than one GPU or moving workloads to the cloud can expand what you are able to do. For AI and deep learning workloads in particular, smooth GPU operation matters, so check both memory usage and utilization regularly. With this advice in mind, you will be able to put the full power of your GPUs to work.

Frequently Asked Questions

What is a good GPU utilization?

For gaming, normal GPU utilization ranges from 60% to 90%. More intensive applications can reach 100%. Usage below 40% may indicate that the GPU is not being fully leveraged.

Is 100% GPU usage good?

For demanding games, 100% GPU usage is good: it means the game is using the full capacity of the card. Less demanding games cannot use all of the GPU’s resources, which shows up as low GPU usage. However, sustained 100% usage when the system should be idle can lead to higher temperatures, more fan noise, and even a noticeable drop in performance.

How do I reduce my GPU utilization?

One effective way to reduce GPU usage is by lowering the graphical settings in games and other graphics-intensive applications. These settings include options such as resolution, texture quality, shadow quality, anti-aliasing, and other visual effects.

Why is my GPU usage lower than my CPU usage?

When the CPU shows higher utilization than the GPU, the system is usually experiencing a CPU bottleneck. A bottleneck is a component that limits the potential of other hardware because of differences in their maximum capabilities.

