Unveiling vLLM List Models: A Comprehensive Guide

Discover vLLM list models' features, innovation, and the AI-driven future on our blog. Explore the latest trends in list models today.

Key Highlights

- vLLM list models, such as Gemma 2 9B and Llama 3.1 405B, have billions of parameters, allowing them to capture vast amounts of information and intricate relationships in natural language.

- Developers can adapt these pre-trained model weights through transfer learning (fine-tuning) to reach strong task performance with relatively little task-specific data and training.

- Their success has inspired developers to explore even larger and more powerful models, driving a rapid evolution of language AI capabilities.

- As algorithms and training techniques advance, vLLM list models are expected to become even more sophisticated and capable.

Introduction

In the dynamic landscape of AI and machine learning, vLLM list models, the large language models that the vLLM inference engine can serve, represent a cutting-edge approach. Because they generate each token conditioned on the preceding tokens in a sequence, these models produce coherent, contextually relevant output, which makes them particularly adept at tasks that require an understanding of textual context and continuity. This blog explains what vLLM list models are, how they work, and their potential impact on the AI field. By keeping up with these developments and harnessing vLLM list models, developers can unlock new opportunities for innovation and growth in an increasingly AI-driven world.

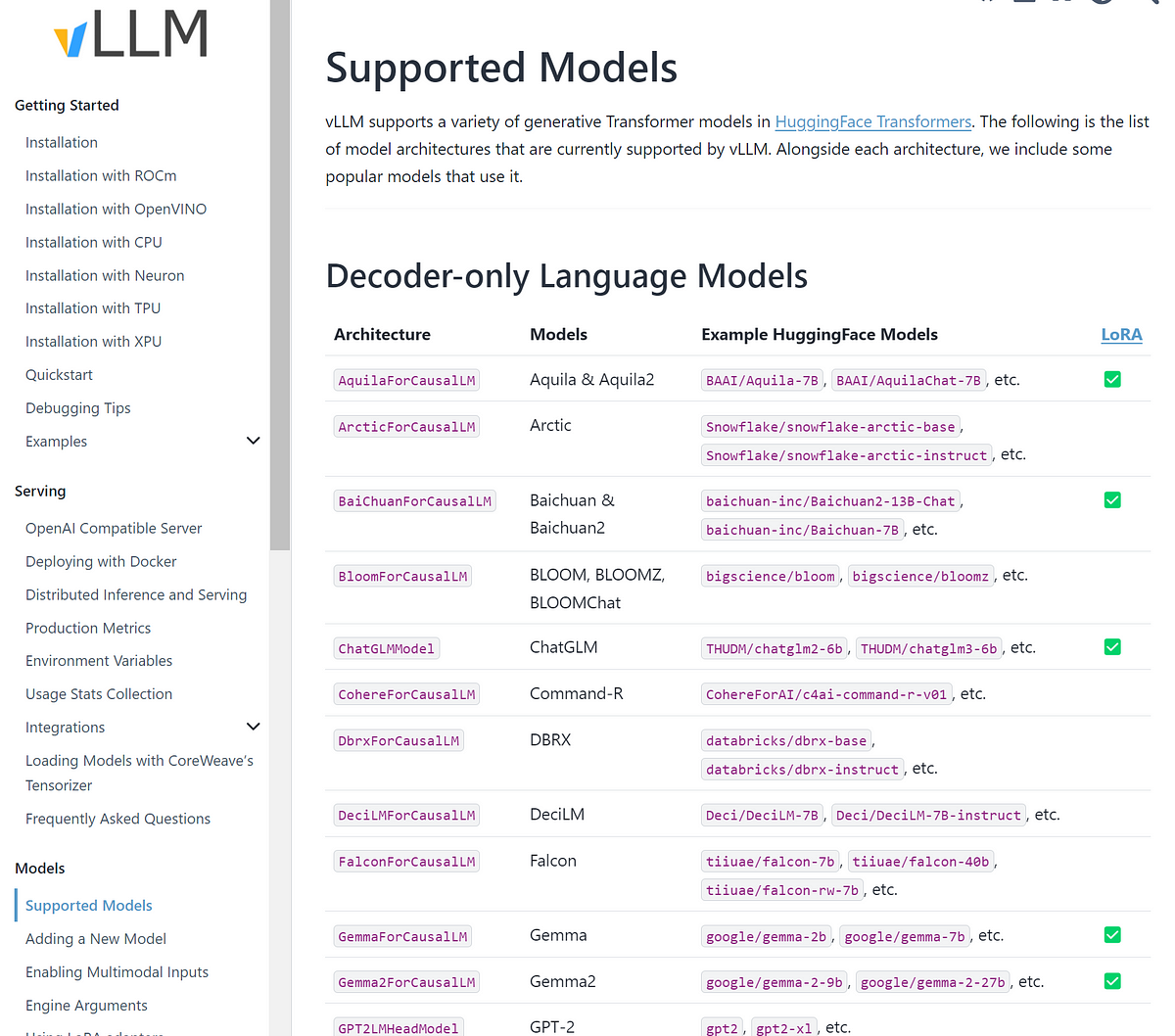

Understanding vLLM List Models

vLLM provides LLM inference and serving with state-of-the-art throughput, built on PagedAttention, continuous batching of incoming requests, quantization (GPTQ, AWQ, FP8), and optimized CUDA kernels. vLLM list models are the advanced AI models that vLLM can serve: models that understand and generate human-like text after being trained on vast amounts of data to learn the nuances of language and context in natural language processing (NLP) tasks.
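To make the PagedAttention idea concrete, here is a toy Python sketch under simplifying assumptions; it is not vLLM's implementation. The KV cache is divided into fixed-size physical blocks, and each sequence keeps a block table that maps its logical blocks to whichever physical blocks were free when it needed them. The class name, its methods, and the block size are hypothetical choices for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class PagedKVCache:
    """Toy allocator showing the paging idea behind PagedAttention (not vLLM's code)."""
    num_blocks: int
    block_size: int = 16  # tokens per block; an assumed, illustrative value
    free_blocks: list = field(default_factory=list, init=False)
    block_tables: dict = field(default_factory=dict, init=False)  # seq -> physical blocks
    seq_lens: dict = field(default_factory=dict, init=False)      # seq -> tokens stored

    def __post_init__(self) -> None:
        # Every physical block starts out free.
        self.free_blocks = list(range(self.num_blocks))

    def append_token(self, seq_id: str) -> None:
        """Reserve room for one more token, allocating a block only when needed."""
        length = self.seq_lens.get(seq_id, 0)
        table = self.block_tables.setdefault(seq_id, [])
        if length % self.block_size == 0:  # no block yet, or the last one is full
            if not self.free_blocks:
                raise MemoryError("KV cache is full")
            table.append(self.free_blocks.pop(0))
        self.seq_lens[seq_id] = length + 1

    def free(self, seq_id: str) -> None:
        """Return a finished sequence's blocks to the free pool."""
        self.free_blocks.extend(self.block_tables.pop(seq_id, []))
        self.seq_lens.pop(seq_id, None)

    def physical_slot(self, seq_id: str, token_index: int) -> tuple:
        """Translate a logical token position into (physical block, offset)."""
        block = self.block_tables[seq_id][token_index // self.block_size]
        return block, token_index % self.block_size
```

For example, with `PagedKVCache(num_blocks=8, block_size=4)`, appending six tokens to sequence "a" and three to sequence "b" gives block tables `{"a": [0, 1], "b": [2]}`, and token 5 of "a" lives at offset 1 of physical block 1. Because blocks are allocated on demand and need not be contiguous, each sequence wastes at most the unused part of its last block, and freed blocks go straight back to the pool for other requests.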

What Are vLLM and vLLM List Models?

vLLM is a high-performance open-source library for LLM inference and serving. It lets you download popular models, run them locally with custom configurations, and serve them through an OpenAI-compatible API server, so you can experiment with various models and build LLM-based applications without relying on external services.

The term “vLLM list models” typically refers to the models on vLLM's list of supported models: large, openly available pre-trained models, often with billions of parameters, used mainly for natural language processing tasks. The list spans many model families and sizes, each suited to different applications.

How Does vLLM Work?

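vLLM supports streaming output, so clients receive tokens as soon as they are generated, which lowers perceived latency. It raises throughput with continuous batching and an asynchronous engine: new requests join the running batch at each decoding step instead of waiting for the current batch to finish. vLLM is compatible with popular pre-trained models, and because PagedAttention keeps KV cache memory waste close to zero, more requests fit on the GPU at once. This is one of the main reasons vLLM achieves higher throughput than many other inference engines.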
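A toy simulation shows why continuous batching raises throughput. This is a simplified model, not vLLM's scheduler, and it assumes that each request needs a known number of decoding steps, that at most `max_batch` requests run per step, and that a finished request's slot is refilled from the waiting queue at the very next step. The static-batching function, by contrast, waits for the whole batch to finish before admitting new requests. Both function names are hypothetical.

```python
from collections import deque


def continuous_batching_steps(lengths: list[int], max_batch: int) -> int:
    """Count decoding steps when free batch slots are refilled every step (toy model)."""
    waiting = deque(lengths)
    running: list[int] = []  # remaining tokens for each running request
    steps = 0
    while waiting or running:
        # Admit waiting requests into any free batch slots.
        while waiting and len(running) < max_batch:
            running.append(waiting.popleft())
        # One decoding step produces one token for every running request.
        running = [r - 1 for r in running]
        running = [r for r in running if r > 0]  # finished requests leave immediately
        steps += 1
    return steps


def static_batching_steps(lengths: list[int], max_batch: int) -> int:
    """Count decoding steps when each batch runs until its longest request ends."""
    steps = 0
    for start in range(0, len(lengths), max_batch):
        steps += max(lengths[start:start + max_batch])
    return steps


lengths = [10, 2, 2, 2]  # tokens each request must generate
print(static_batching_steps(lengths, max_batch=2))      # 12
print(continuous_batching_steps(lengths, max_batch=2))  # 10
```

With these lengths, the static schedule needs 12 steps while the continuous one needs 10, because the short requests no longer sit idle behind the long one; the gap grows as request lengths become more uneven.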

Key Features of vLLM List Models

- Scale and Complexity: Trained on massive datasets, often terabytes of text from diverse sources, these models gain a nuanced understanding of language.

- Sequence Handling: vLLM list models excel at managing sequences, from generating paragraphs to translating languages. Their strength lies in capturing complex dependencies through the transformer architecture.

- Versatility Across Domains: vLLM list models extend beyond text generation to tasks like sentiment analysis, question answering, and summarization. Their adaptability makes them valuable in fields from healthcare to finance.

- Memory Efficiency: When served with vLLM, these models benefit from PagedAttention, which stores the attention key-value (KV) cache in small fixed-size blocks instead of one contiguous region per request. This avoids reserving memory that is never used and lets more requests share the GPU.
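Why memory efficiency matters is easiest to see from the size of the KV cache. In a standard transformer, each token stores one key and one value vector per layer and per KV head, so bytes per token = 2 × layers × KV heads × head dimension × bytes per value. The sketch below plugs in the configuration of Llama 3 8B (32 layers, 8 KV heads, head dimension 128) at 16-bit precision as an example; other models need their own values, and the helper names are hypothetical.

```python
def kv_cache_bytes_per_token(num_layers: int, num_kv_heads: int, head_dim: int,
                             bytes_per_value: int = 2) -> int:
    """Bytes of KV cache for one token: a key and a value per layer and KV head."""
    return 2 * num_layers * num_kv_heads * head_dim * bytes_per_value


def kv_cache_gib(num_tokens: int, **config) -> float:
    """Total KV cache in GiB for num_tokens tokens across all running requests."""
    return num_tokens * kv_cache_bytes_per_token(**config) / 2**30


# Llama 3 8B configuration at 16-bit (FP16/BF16) precision.
llama3_8b = dict(num_layers=32, num_kv_heads=8, head_dim=128)
print(kv_cache_bytes_per_token(**llama3_8b))  # 131072 bytes, i.e. 128 KiB per token
print(kv_cache_gib(8192, **llama3_8b))        # 1.0 GiB for one 8k-token request
```

A single 8k-token conversation therefore ties up about 1 GiB of GPU memory for this model, which is why reserving cache space for every request's maximum length up front, instead of allocating it block by block, can waste so much memory.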

Top vLLM List Models

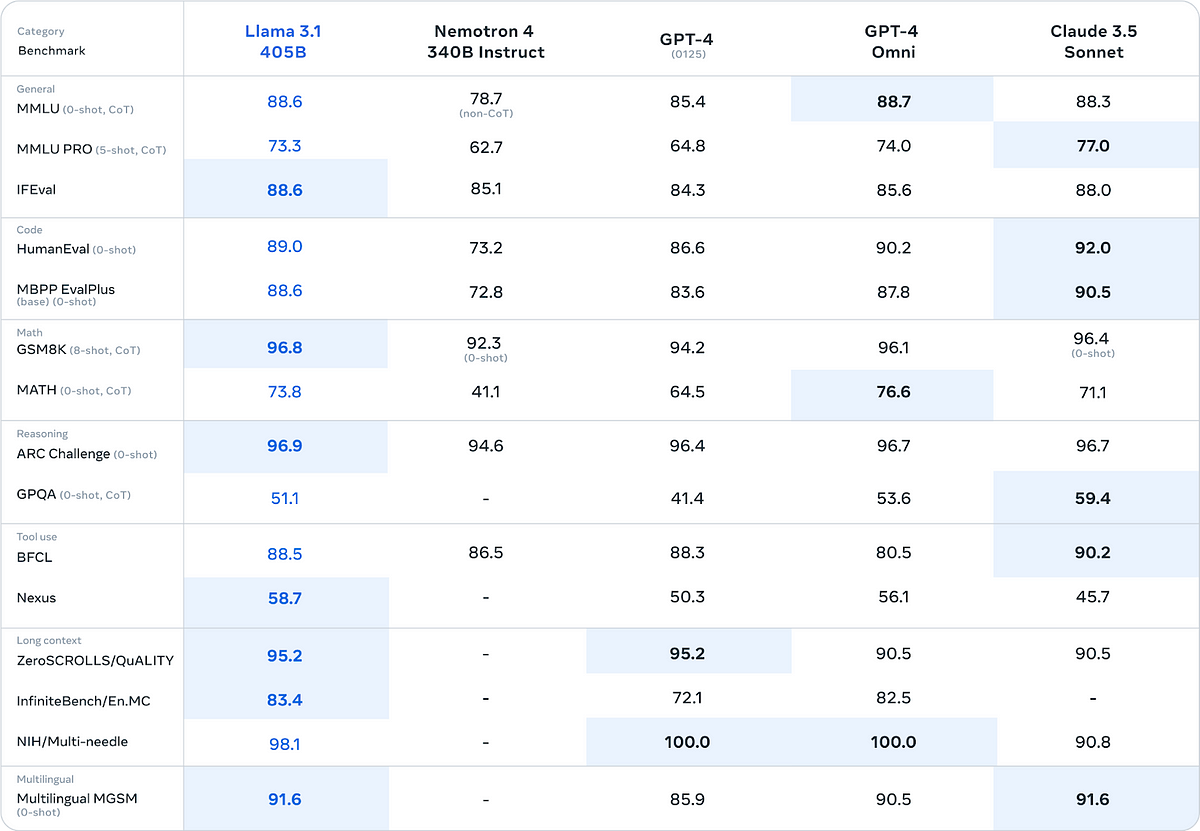

1. Llama 3.1 405B

Llama 3.1 405B is Meta's largest openly available model. Meta reports that it is competitive with leading models such as GPT-4o and Claude 3.5 Sonnet in general knowledge, steerability, math, tool use, and multilingual translation, and that it outperforms them on some benchmarks. Its instruction-tuned version is optimized for high-quality dialogue.

Features

- 128k context length

- 405 billion parameters

- Multilingual processing

- Reasoning abilities

- Coding assistant

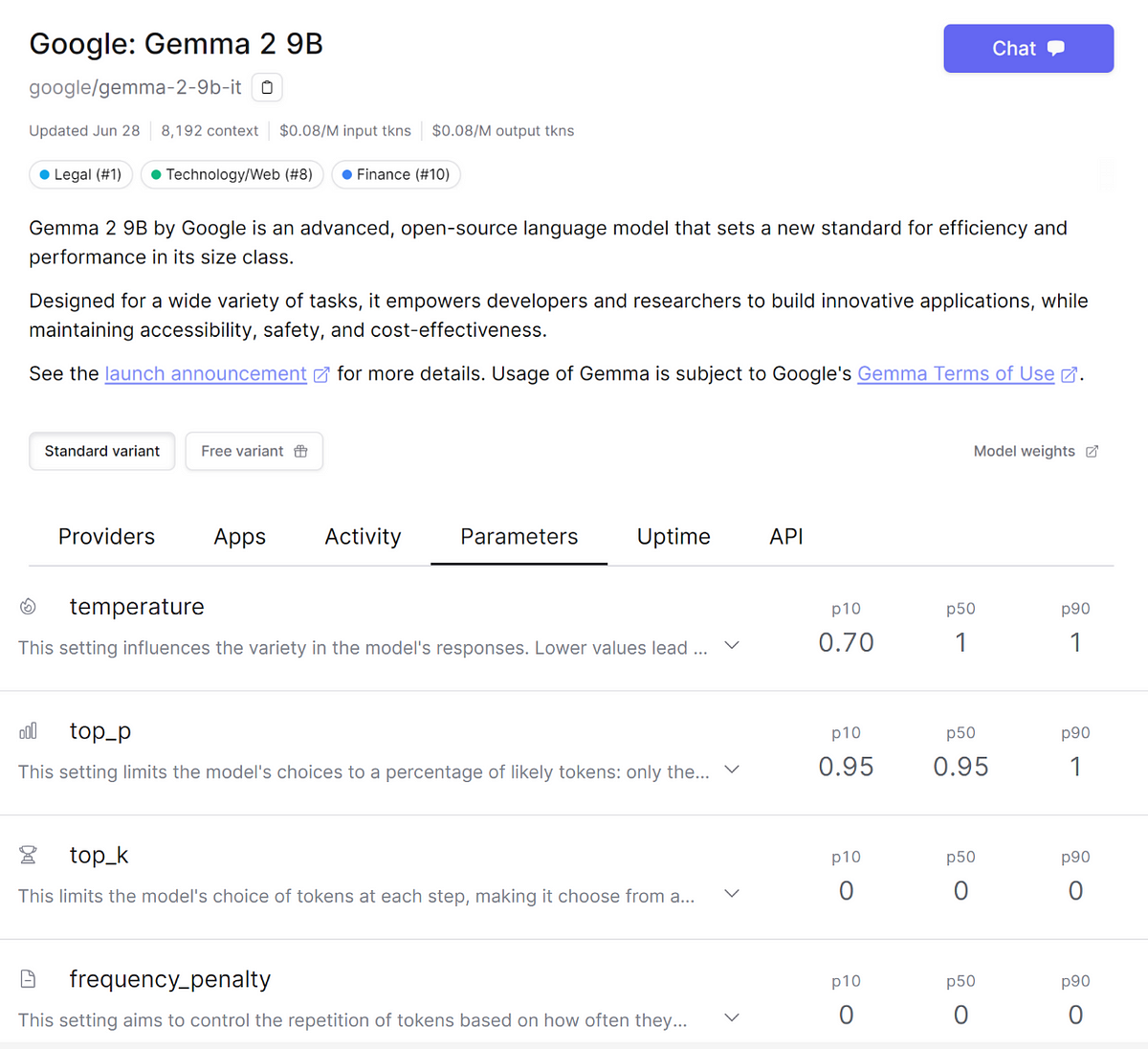

2. Gemma 2 9B

Gemma 2 9B by Google is an advanced, open-source language model that sets a new efficiency and performance standard in its size class. It empowers developers and researchers to build innovative applications while ensuring accessibility, safety, and cost-effectiveness.

Features

- Sliding window attention

- 9 billion parameters

- Competitive performance against models 2–3 times larger

- Open-source availability
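Sliding window attention lets each token attend only to the most recent tokens rather than the whole sequence, which bounds attention cost and KV cache size on long inputs; Gemma 2 interleaves such local layers with global-attention layers. The sketch below builds a causal sliding-window mask in plain Python. The function name is hypothetical, and the window of 3 is a toy value, far smaller than a real model's window.

```python
def sliding_window_mask(seq_len: int, window: int) -> list[list[bool]]:
    """mask[i][j] is True when query position i may attend to key position j.

    Causal (j <= i) and limited to the last `window` positions (i - j < window).
    """
    return [[0 <= i - j < window for j in range(seq_len)] for i in range(seq_len)]


for row in sliding_window_mask(seq_len=5, window=3):
    print("".join("x" if allowed else "." for allowed in row))
# x....
# xx...
# xxx..
# .xxx.
# ..xxx
```

Each row attends to at most three positions no matter how long the sequence grows, whereas a full causal mask would fill the entire lower triangle.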

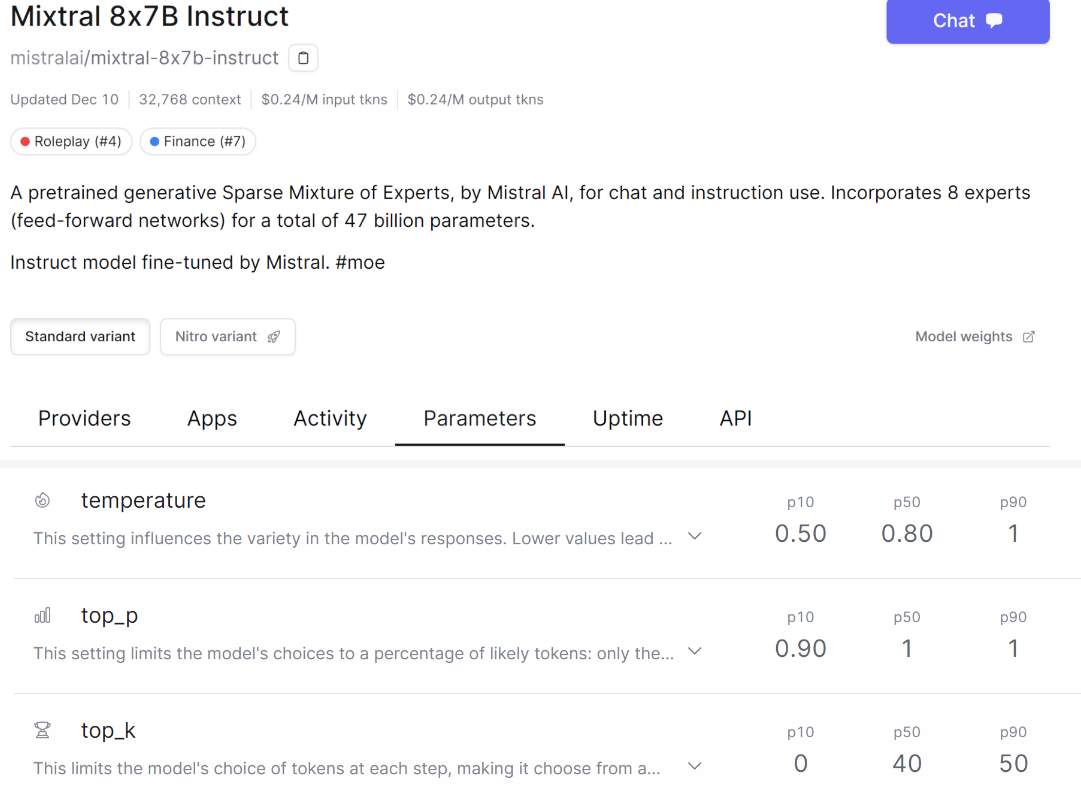

3. Mixtral 8x7B Instruct

Mixtral 8x7B Instruct, from Mistral AI, is a sparse mixture-of-experts language model fine-tuned to follow instructions, complete requests, and generate creative text formats. It shows how the Mixtral 8x7B base model can be efficiently fine-tuned to deliver strong performance.

Features

- 32k context length

- Excels at coding: 40.2% on HumanEval

- Multilingual support

- Apache 2.0 license, which permits commercial use

The three models above are available through Novita AI, an AI API platform dedicated to providing cost-effective and user-friendly LLM API services. You can check our website for detailed information.

4. Falcon 40B

Falcon-40B is a 40-billion-parameter causal decoder-only model built by the Technology Innovation Institute (TII) and trained on 1,000 billion tokens of RefinedWeb enhanced with curated corpora.

Features

- Supports various annotation tasks

- 60-layer architecture

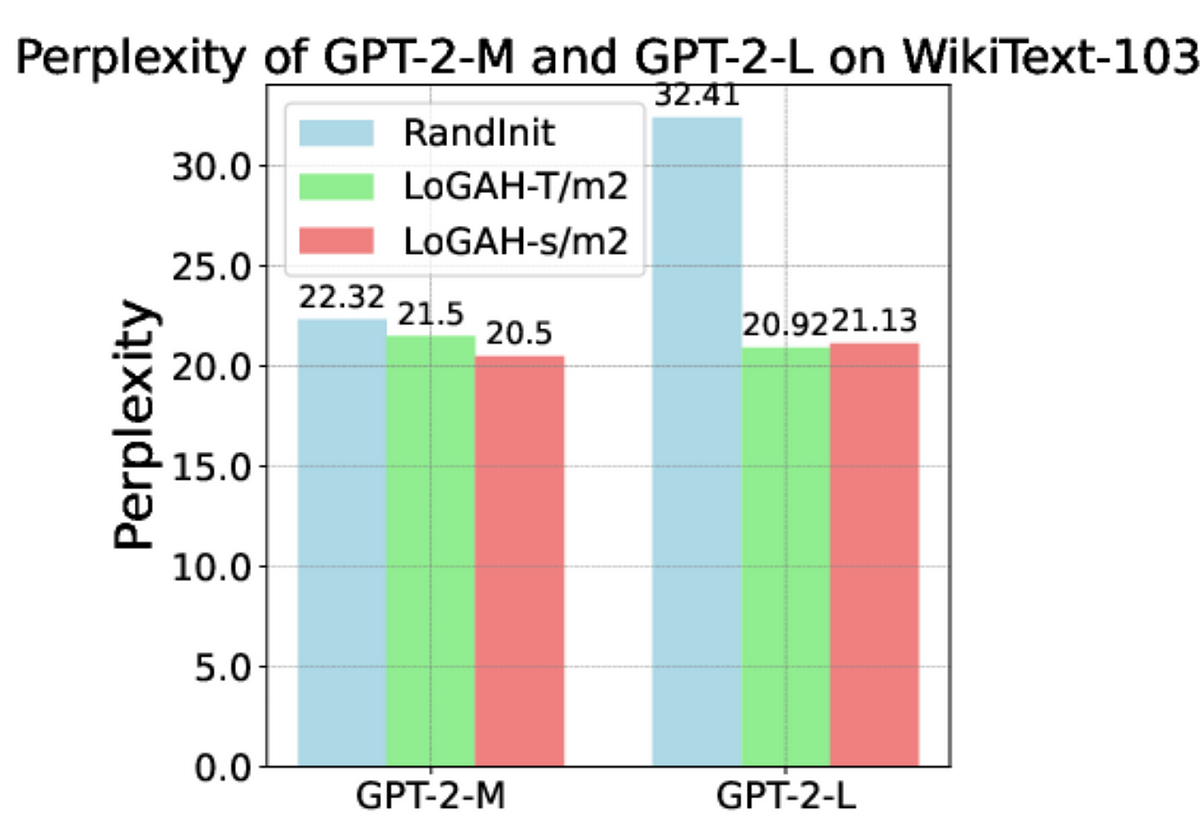

5. GPT-2

GPT-2, developed by OpenAI, is a transformer-based language model with 1.5 billion parameters, trained on a dataset of 8 million web pages.

Features

- Unsupervised pre-training on raw web text

- Self-attention mechanism (transformer decoder)
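The attention mechanism at the heart of GPT-2 and the other models above is scaled dot-product attention: each query is compared with every key, the scores are divided by √d and normalized with softmax, and the output is the weighted average of the value vectors. Below is a minimal pure-Python sketch for a single head without masking, for illustration only; real models use batched tensor code, a causal mask, and many heads. The function names are hypothetical.

```python
import math


def softmax(scores: list[float]) -> list[float]:
    """Numerically stable softmax: subtract the max before exponentiating."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention(queries, keys, values):
    """Single-head scaled dot-product attention on plain Python lists."""
    d = len(keys[0])
    outputs = []
    for q in queries:
        # Similarity of this query to every key, scaled by sqrt(d).
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in keys]
        weights = softmax(scores)
        # Output is the attention-weighted average of the value vectors.
        outputs.append([sum(w * v[c] for w, v in zip(weights, values))
                        for c in range(len(values[0]))])
    return outputs


print(attention(queries=[[1.0, 0.0]],
                keys=[[1.0, 0.0], [0.0, 1.0]],
                values=[[10.0, 0.0], [0.0, 10.0]]))
```

The query matches the first key more closely, so the output leans toward the first value vector while still mixing in some of the second.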

Deployment Process and Challenges

How to Deploy vLLM List Models

1. Environment Setup

- Ensure that Python and a supported GPU with its drivers are available. Installing vLLM with pip also pulls in its dependencies, such as torch and transformers.

- Install vLLM with pip:

pip install vllm

2. Load the Model

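Use vLLM's `LLM` class to load the desired model by its Hugging Face model ID or local path. For example:

from vllm import LLM, SamplingParams

# Load the model by Hugging Face model ID or local path
llm = LLM(model="model_name_or_path")

3. Configure the Model

Configure model parameters as needed, such as the maximum number of sequences processed together in a batch (`max_num_seqs`), the maximum context length (`max_model_len`), and the fraction of GPU memory vLLM may use (`gpu_memory_utilization`). Pass these as keyword arguments to `LLM(...)`; per-request generation settings such as `temperature` and `max_tokens` go into `SamplingParams`.

4. Inference

Use the loaded model for inference:

outputs = llm.generate([input_text], SamplingParams(temperature=0.8, max_tokens=256))
print(outputs[0].outputs[0].text)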

5. Deployment

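If you need to deploy the model as a service, you can wrap it in a web framework such as FastAPI to create the interface, or use vLLM's built-in OpenAI-compatible server (`vllm serve model_name_or_path`).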
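As a dependency-free illustration of this step, the sketch below is a minimal ASGI application, the interface uvicorn uses to call Python web apps. It exposes a hypothetical `POST /generate` endpoint that accepts `{"prompt": ...}` and returns `{"text": ...}`. The `generate_text` function is a placeholder that only echoes the prompt; swap in a call to your loaded vLLM model (for example `llm.generate`). In practice a framework such as FastAPI would handle routing and validation for you. Saved as `app.py`, it is what `uvicorn app:app` looks for.

```python
import json


def generate_text(prompt: str) -> str:
    """Hypothetical placeholder: replace with a call to your loaded vLLM model."""
    return f"echo: {prompt}"


async def read_body(receive) -> bytes:
    """Collect the full HTTP request body from ASGI 'http.request' messages."""
    body = b""
    while True:
        message = await receive()
        body += message.get("body", b"")
        if not message.get("more_body", False):
            return body


async def send_json(send, status: int, payload: dict) -> None:
    """Send a JSON response with the given status code."""
    data = json.dumps(payload).encode("utf-8")
    await send({"type": "http.response.start", "status": status,
                "headers": [(b"content-type", b"application/json")]})
    await send({"type": "http.response.body", "body": data})


async def app(scope, receive, send):
    """Minimal ASGI app: POST /generate with {"prompt": ...} returns {"text": ...}."""
    if scope["type"] != "http":
        return  # ignore lifespan and websocket events in this sketch
    if scope["method"] != "POST" or scope["path"] != "/generate":
        await send_json(send, 404, {"error": "not found"})
        return
    try:
        prompt = json.loads(await read_body(receive))["prompt"]
    except (ValueError, KeyError, TypeError):
        await send_json(send, 400, {"error": "expected a JSON body with a 'prompt' field"})
        return
    await send_json(send, 200, {"text": generate_text(prompt)})
```

Once uvicorn is running on its default port, a request such as `curl -X POST localhost:8000/generate -d '{"prompt": "Hello"}'` should return `{"text": "echo: Hello"}` until the placeholder is replaced.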

6. Run the Service

uvicorn app:app --reload

7. Testing

Test your API using Postman or curl.

Deployment Challenges

- Computational Resource Requirements: Large models need high computational power, often requiring powerful GPUs.

- Memory Limitations: Model parameters can exceed the memory of standard hardware, which complicates deployment.

- Latency and Response Time: High inference latency can hurt the real-time user experience.

- Cost Issues: High-performance cloud computing resources can be very expensive, especially for large-scale deployments.

- Model Optimization: Pruning, quantization, and similar techniques reduce computational load and memory usage, but applying them takes extra engineering effort and can cost some accuracy.
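A quick estimate shows why memory limits bite and why quantization helps: model weights alone need roughly parameter count × bytes per parameter. The sketch below applies that rule to the 405-billion-parameter Llama 3.1 model at a few precisions. It ignores the KV cache, activations, and runtime overhead, so real requirements are higher; the table and function name are illustrative.

```python
# Approximate storage cost per parameter for common precisions.
BYTES_PER_PARAM = {"fp32": 4, "fp16/bf16": 2, "fp8/int8": 1, "int4": 0.5}


def weight_memory_gb(num_params: float, precision: str) -> float:
    """Approximate weight memory in GB (10**9 bytes), excluding KV cache and overhead."""
    return num_params * BYTES_PER_PARAM[precision] / 1e9


for precision in BYTES_PER_PARAM:
    print(f"{precision:>9}: {weight_memory_gb(405e9, precision):,.1f} GB")
```

At 16-bit precision the weights alone come to about 810 GB, more than the 640 GB offered by eight 80 GB GPUs, while 8-bit weights (about 405 GB) would fit in that budget before accounting for the KV cache.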

How to Use Novita AI LLM API

Besides deploying models yourself, you can use the LLM API service from Novita AI. Accessing premium vLLM list models through an API means you integrate an endpoint instead of managing GPUs, which gives you fast, scalable AI capabilities for generating high-quality, diverse content.

Efficient Approach to vLLM List Models — API Integration

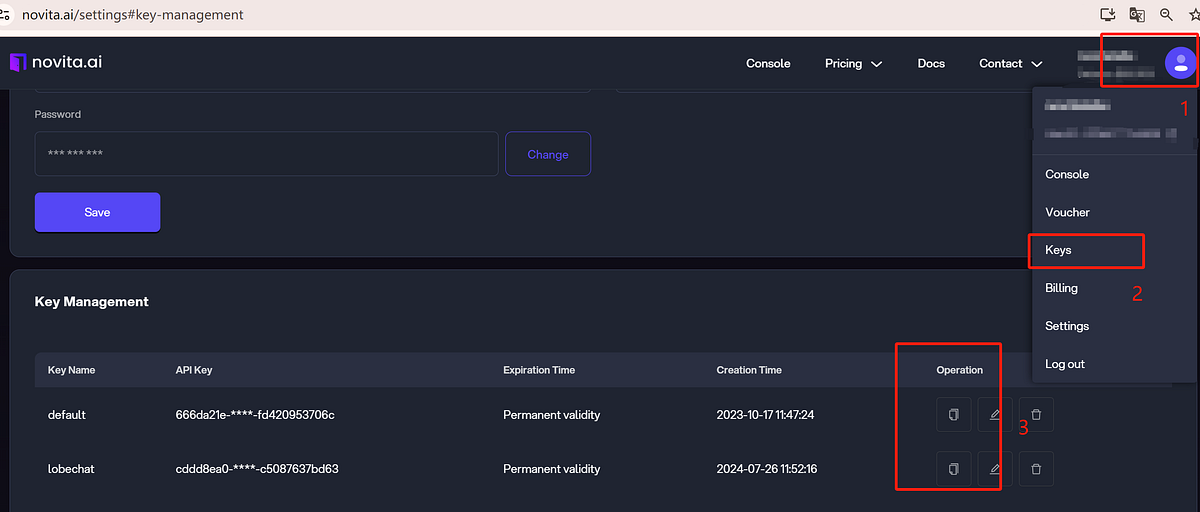

- Step 1: Visit the website and create/log in to your account.

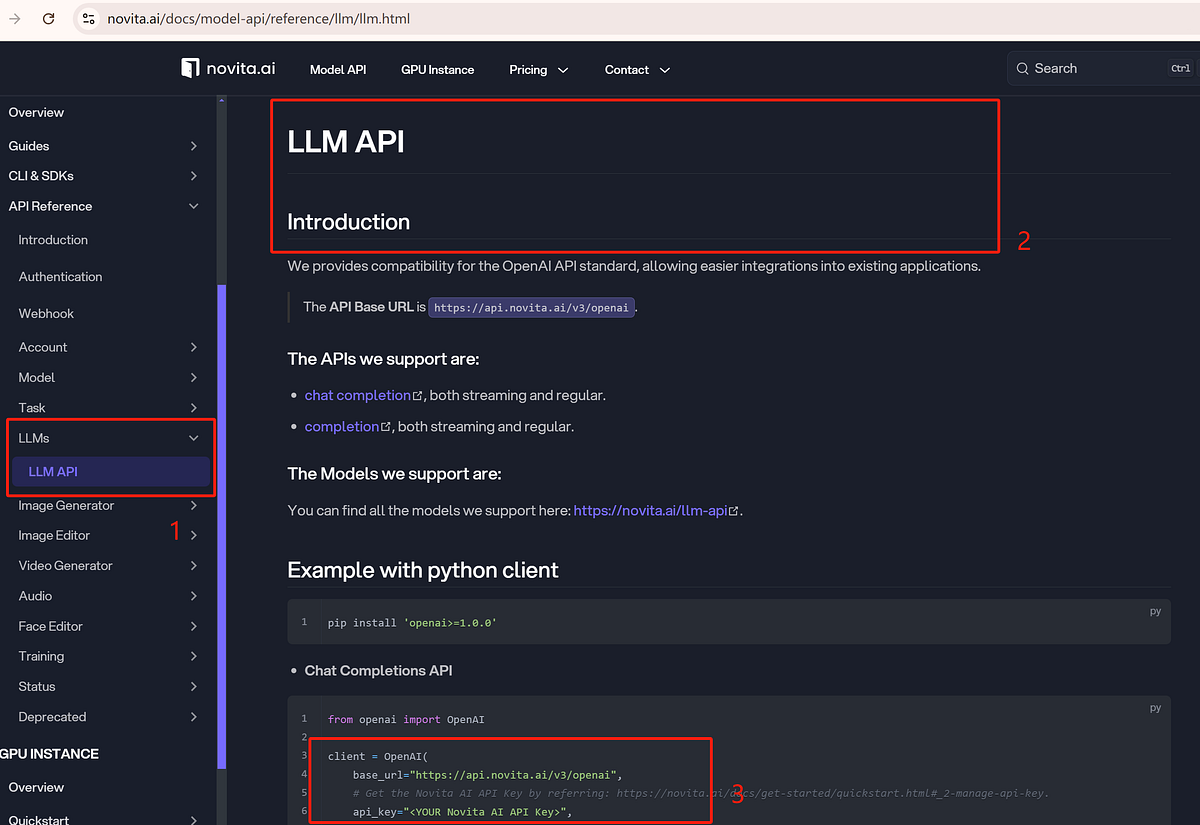

- Step 2: Navigate to “LLM API Key” and obtain your API key, as shown in the following image.

- Step 3: Go to the API Reference and find the LLM API under “LLMs”. Use your API key to authenticate your API requests.

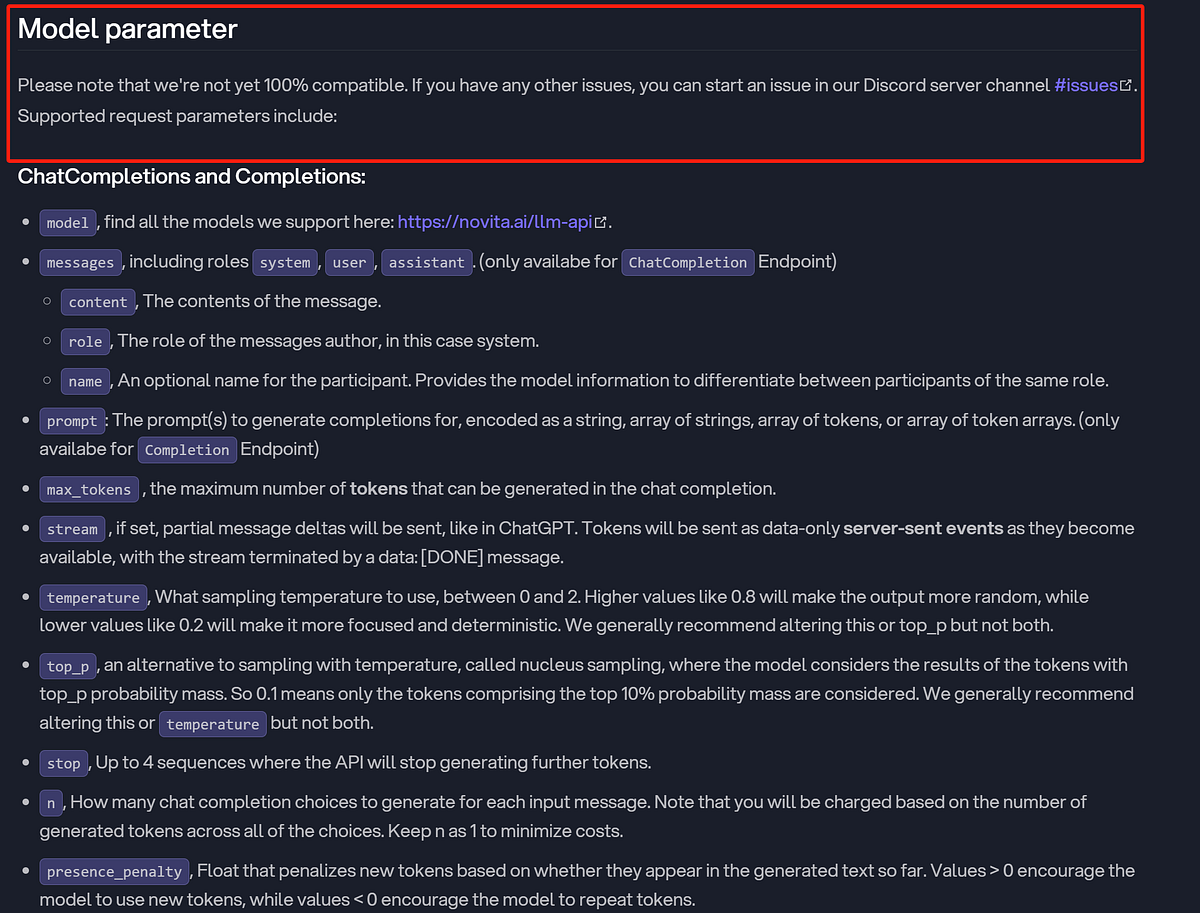

- Step 4: You can adjust the parameters according to your needs.

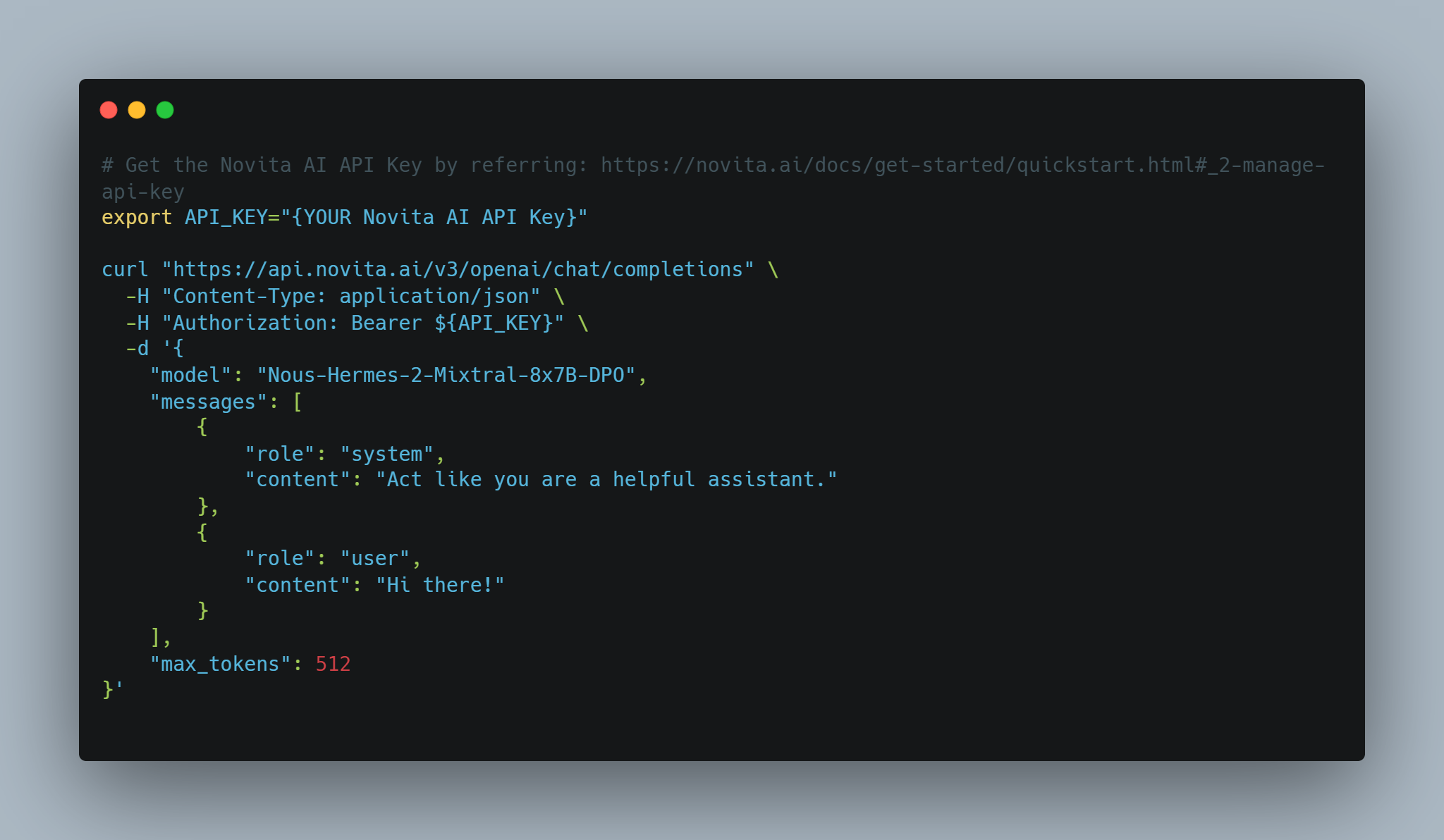

- Step 5: Integrate it into your existing project backend and wait for the response. Here is a code example for reference.

Example with curl client
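A minimal Python sketch of such a request, using only the standard library, is shown below. It assumes Novita AI exposes an OpenAI-compatible chat completions endpoint; the base URL, the model ID, and the `NOVITA_API_KEY` environment variable name are assumptions, so confirm them in the API Reference before use.

```python
import json
import os
import urllib.request

# Assumed values: verify both against Novita AI's API Reference.
BASE_URL = "https://api.novita.ai/v3/openai"
MODEL = "meta-llama/llama-3.1-8b-instruct"


def build_chat_request(prompt: str, api_key: str, max_tokens: int = 512,
                       temperature: float = 0.7) -> urllib.request.Request:
    """Build an OpenAI-style chat completions POST request (not sent yet)."""
    payload = {
        "model": MODEL,
        "messages": [{"role": "user", "content": prompt}],
        "max_tokens": max_tokens,
        "temperature": temperature,
    }
    return urllib.request.Request(
        f"{BASE_URL}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Authorization": f"Bearer {api_key}",
                 "Content-Type": "application/json"},
        method="POST",
    )


def chat(prompt: str) -> str:
    """Send the request and return the assistant's reply text."""
    request = build_chat_request(prompt, api_key=os.environ["NOVITA_API_KEY"])
    with urllib.request.urlopen(request, timeout=60) as response:
        body = json.load(response)
    return body["choices"][0]["message"]["content"]
```

With a valid key in the environment, `chat("Summarize PagedAttention in one sentence.")` would return the model's reply. The same request shape also works against a self-hosted vLLM server, whose OpenAI-compatible API listens at `http://localhost:8000/v1` by default.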

The Impact of vLLM List Models on the Future

Machine Learning Capabilities

The rise of vLLM list models is reshaping machine learning, enabling new applications by processing vast amounts of data. These models now handle tasks once considered beyond AI’s reach. With further advances in hardware, algorithms, and training techniques, vLLM list models are likely to grow more capable and to shape many industries and fields of study.

Ethical Considerations

With great capability comes great responsibility. The emergence and application of vLLM list models raise significant ethical concerns: issues such as data privacy, bias, and possible misuse of the technology must be addressed as these models are increasingly adopted in society.

Conclusion

vLLM list models represent a significant advancement in machine learning. Their size, sequence-processing power, and versatility make them powerful tools for a wide range of applications. As we continue to explore and develop these models, their impact on technology and society is likely to grow. In summary, vLLM list models are both a technological achievement and a glimpse into the future of machine learning. By understanding and using them, we can unlock new potential and address pressing challenges in technology.

FAQs

Does vLLM support quantized models?

Yes, vLLM supports quantized models, including GPTQ, AWQ, and FP8 formats. Quantization reduces the memory footprint and computational cost of models, improving inference efficiency.
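The core idea of quantization can be shown with the simplest scheme, symmetric per-tensor int8 (absmax) quantization: scale the weights so the largest magnitude maps to 127, round to integers, and multiply by the scale to recover approximate values. This is an illustration only; the methods vLLM supports, such as GPTQ, AWQ, and FP8, are more sophisticated. The function names are hypothetical.

```python
def quantize_int8(weights: list[float]) -> tuple[list[int], float]:
    """Symmetric absmax quantization: map floats to integers in [-127, 127]."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    return [round(w / scale) for w in weights], scale


def dequantize(q: list[int], scale: float) -> list[float]:
    """Recover approximate float weights from the integers and the scale."""
    return [v * scale for v in q]


weights = [0.12, -0.5, 0.33, 0.01]
q, scale = quantize_int8(weights)
print(q)  # [30, -127, 84, 3]
approx = dequantize(q, scale)
print(max(abs(a - w) for a, w in zip(approx, weights)))  # rounding error, at most scale / 2
```

Storing one byte per weight instead of two (FP16) or four (FP32) is where the memory savings come from; the price is the small rounding error printed on the last line.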

Does vLLM require GPU?

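For GPU inference, vLLM requires an NVIDIA GPU with compute capability 7.0 or higher (e.g., V100, T4, RTX 20xx, A100, L4, H100). vLLM also provides backends for other hardware, including CPUs, though NVIDIA GPUs remain its primary target.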
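Compute capability is reported as a (major, minor) pair, so the requirement above reduces to a tuple comparison. The helper below is a hypothetical convenience function, not part of vLLM. The commented lines show how you might query the first GPU with PyTorch's `torch.cuda.get_device_capability()`, assuming PyTorch and a CUDA GPU are available.

```python
MIN_COMPUTE_CAPABILITY = (7, 0)  # requirement stated in the FAQ answer above

# Examples: V100 = 7.0, T4 and RTX 20xx = 7.5, A100 = 8.0, L4 = 8.9, H100 = 9.0.


def meets_vllm_gpu_requirement(capability: tuple[int, int]) -> bool:
    """Return True if a GPU's (major, minor) compute capability is 7.0 or higher."""
    return tuple(capability) >= MIN_COMPUTE_CAPABILITY


# With PyTorch and a CUDA GPU available:
# import torch
# print(meets_vllm_gpu_requirement(torch.cuda.get_device_capability(0)))

print(meets_vllm_gpu_requirement((7, 5)))  # True, e.g. a T4
print(meets_vllm_gpu_requirement((6, 1)))  # False, e.g. a GTX 1080
```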

What is the best binary classifier model?

The best binary classifier model varies based on the use case, dataset, and requirements. Popular models include Logistic Regression, Support Vector Machines (SVM), and Random Forest.

Can vLLM do batching?

Yes. vLLM batches concurrent requests automatically using continuous batching, and its offline `LLM.generate` method accepts a list of prompts and processes them together.

Does vLLM support Mixtral?

Yes. vLLM supports Mixtral models, including Mixtral-8x7B and Mixtral-8x7B-Instruct. The usable context length depends on the `max_model_len` setting and the available GPU memory.

Novita AI is an all-in-one cloud platform that empowers your AI ambitions. Integrated APIs, serverless, and GPU Instance: the cost-effective tools you need. Eliminate infrastructure, start free, and make your AI vision a reality.
