Top 10 LLM Models on Hugging Face

Discover the top 10 HuggingFace LLM models on our blog. Explore the latest in natural language processing technology.

Introduction

Hugging Face has emerged as a goldmine for enthusiasts and developers in natural language processing, providing an extensive array of pre-trained language models ready for seamless integration into a variety of applications. As a premier destination for Large Language Models (LLMs), Hugging Face is pivotal in the field. This article delves into the top 10 LLMs hosted on Hugging Face, each playing a significant role in the ongoing development of language comprehension and generation.

Let’s get started!

Mistral-7B-v0.1

Mistral-7B-v0.1 is a pre-trained generative text model with 7 billion parameters. According to its model card, it outperforms Llama 2 13B on all benchmarks its authors tested. The model is available through many LLM APIs, including novita.ai’s Chat-completion.

Additionally, the Mistral-7B-v0.1 employs a Byte-fallback BPE tokenizer.
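To illustrate what "byte-fallback" means, here is a minimal sketch. It assumes a toy character-level vocabulary and skips the BPE merge step entirely, so it is not Mistral's actual tokenizer; the function name and vocabulary are made up for illustration. The point it shows is that a character missing from the vocabulary is split into its UTF-8 bytes instead of being mapped to an unknown token.

```python
def encode_with_byte_fallback(text, vocab):
    """Map each character to itself if known, else to one token per UTF-8 byte."""
    tokens = []
    for ch in text:
        if ch in vocab:
            tokens.append(ch)
        else:
            # Fallback: emit byte tokens written as <0xNN>, so nothing is lost
            tokens.extend(f"<0x{b:02X}>" for b in ch.encode("utf-8"))
    return tokens


# Hypothetical toy vocabulary: lowercase ASCII letters and the space character
toy_vocab = set("abcdefghijklmnopqrstuvwxyz ")
print(encode_with_byte_fallback("hi é", toy_vocab))
```

Because every string can be written as bytes, a tokenizer built this way never has to drop or replace input it has not seen before.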

Use Cases and Applications

Text Generation: The Mistral-7B-v0.1 excels in high-quality text generation, making it ideal for applications such as content creation, creative writing, and automated storytelling.

Natural Language Understanding: Built on a transformer architecture that uses grouped-query attention and sliding-window attention, the model can be adapted to tasks that require natural language understanding, such as sentiment analysis and text classification.

Research and Development: The model provides a solid foundation for researchers and developers to utilize for further exploration and refinement in diverse natural language processing initiatives.
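For readers who want to try the model, the sketch below shows one common way to load a causal language model from the Hugging Face Hub with the transformers library. It assumes transformers and PyTorch are installed and that the machine has enough memory for a 7B model; the helper name generate_text and the default values are illustrative choices, not part of any official example.

```python
MODEL_ID = "mistralai/Mistral-7B-v0.1"  # repository name on the Hugging Face Hub


def generate_text(prompt, model_id=MODEL_ID, max_new_tokens=50):
    """Load a causal LM from the Hub and return the prompt plus its continuation."""
    # Imported lazily so the heavy dependencies are only needed when this is called
    from transformers import AutoModelForCausalLM, AutoTokenizer

    tokenizer = AutoTokenizer.from_pretrained(model_id)
    model = AutoModelForCausalLM.from_pretrained(model_id, torch_dtype="auto")

    inputs = tokenizer(prompt, return_tensors="pt")
    output_ids = model.generate(**inputs, max_new_tokens=max_new_tokens)
    return tokenizer.decode(output_ids[0], skip_special_tokens=True)


# Example call (downloads several gigabytes of weights on first use):
# print(generate_text("The three primary colors are"))
```

The same pattern should work for the other text models in this list by swapping in their repository names, although chat-tuned models usually expect a specific prompt format.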

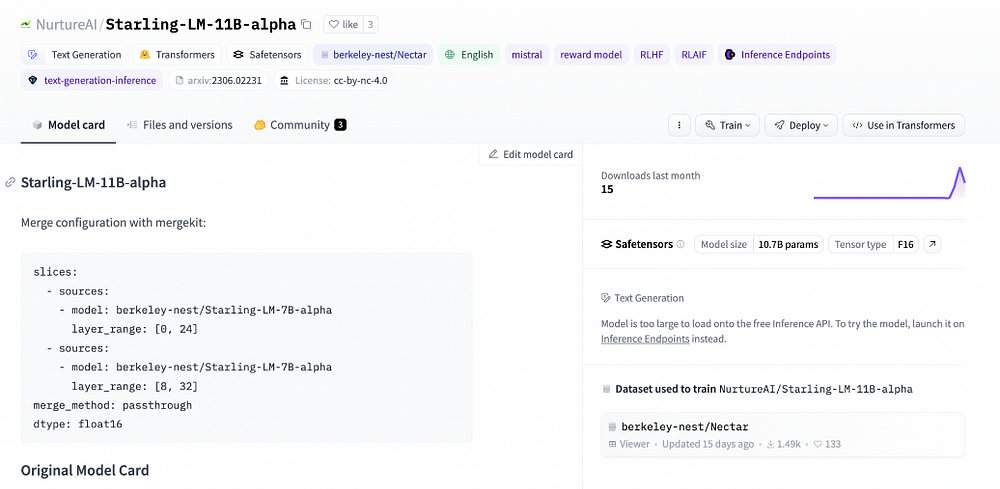

Starling-LM-11B-alpha

This large language model (LLM), published by NurtureAI, has 11 billion parameters. It is based on the OpenChat 3.5 model and has been refined through Reinforcement Learning from AI Feedback (RLAIF). In RLAIF, the preference rankings that guide training are produced by an AI model rather than by human annotators.
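Ranking data of this kind is commonly turned into pairwise preferences before it is used to train a reward model. The sketch below shows that conversion under simple assumptions: responses arrive ordered from best to worst, and every earlier response is preferred over every later one. It is an illustration of the general idea, not Starling's actual pipeline, and the function name is hypothetical.

```python
from itertools import combinations


def ranking_to_pairs(prompt, ranked_responses):
    """Turn a best-to-worst ranking into (prompt, chosen, rejected) triples."""
    # combinations() keeps input order, so the first element of each pair ranks higher
    return [
        (prompt, better, worse)
        for better, worse in combinations(ranked_responses, 2)
    ]


pairs = ranking_to_pairs("Explain gravity.", ["answer A", "answer B", "answer C"])
for prompt, chosen, rejected in pairs:
    print(f"prefer {chosen!r} over {rejected!r}")
```

A ranking of n responses yields n(n-1)/2 pairs, which is why a single ranked prompt can provide several training signals.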

Use Cases and Applications

Starling-LM-11B-alpha, an innovative large language model, has the potential to transform our interactions with technology. Its open-source status, robust performance, and broad functionality make it an invaluable resource for researchers, developers, and creatives.

Natural Language Processing (NLP): This model excels in creating lifelike dialogues for chatbots and virtual assistants, composing various creative text forms, translating between languages, and summarizing extensive texts.

Research in machine learning: It plays a significant role in advancing the development of novel NLP algorithms and methodologies.

Education and training: The model is adept at delivering customized educational experiences and creating engaging interactive content.

Creative industries: It is capable of producing scripts, poetry, song lyrics, and other forms of creative writing.

Yi-34B-Llama

With 34 billion parameters, Yi-34B-Llama has more capacity than the smaller models in the Yi family. It is a text-only model that handles natural language and code; it does not process images. Like other LLMs, it can be prompted zero-shot, attempting tasks it was not explicitly fine-tuned on. The model itself is stateless: to carry on a conversation, the application must pass earlier turns back in as part of the prompt.

Use Cases and Applications

Machine translation: The model offers precise and fluent translation across multiple languages.

Question answering: Yi-34B-Llama is capable of providing detailed answers to a broad spectrum of questions, whether they are straightforward, complex, or unusual.

Dialogue: This model is adept at conducting meaningful and engaging discussions on a diverse array of topics.

Code generation: Yi-34B-Llama can produce code in various programming languages, aiding developers in their projects.

Image captioning: Yi-34B-Llama itself accepts text input only, so describing images requires pairing it with a separate vision-language model.

DeepSeek LLM 67B Base

DeepSeek LLM 67B Base, a large language model with 67 billion parameters, is noted for its capabilities in reasoning, coding, and mathematics, where its developers report that it surpasses Llama2 70B Base. The headline scores often quoted alongside it, a HumanEval Pass@1 of 73.78, GSM8K 0-shot of 84.1, and Math 0-shot of 32.6, are reported for the chat-tuned DeepSeek LLM 67B Chat variant, which is also reported to outperform GPT-3.5 in Chinese. The code repository is released under the MIT license, while use of the model weights is governed by a separate DeepSeek model license that permits commercial use.
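For context on the HumanEval number: Pass@k is the probability that at least one of k sampled solutions passes a problem's unit tests. A widely used estimator, given n samples of which c pass, is 1 - C(n-c, k) / C(n, k). The sketch below implements that formula for a single problem; the sample counts in the example are invented, and a benchmark score is the average of this value over all problems.

```python
from math import comb


def pass_at_k(n, c, k):
    """Estimate pass@k for one problem from n samples, c of which passed."""
    if n - c < k:
        # Fewer than k failing samples: any draw of k must include a passing one
        return 1.0
    return 1.0 - comb(n - c, k) / comb(n, k)


# Hypothetical counts: 10 samples generated, 3 passed the tests
print(pass_at_k(n=10, c=3, k=1))
print(pass_at_k(n=10, c=3, k=5))
```

With k = 1 the estimator reduces to c / n, the plain fraction of samples that pass.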

Use Cases and Applications

Programming: Deploy DeepSeek LLM 67B Base for coding tasks such as generating code, completing snippets, and resolving bugs.

Research: Apply DeepSeek LLM 67B Base to advance studies in various fields of natural language processing.

Content Creation: Utilize the model to produce diverse creative text outputs, including poems, scripts, musical compositions, and more.

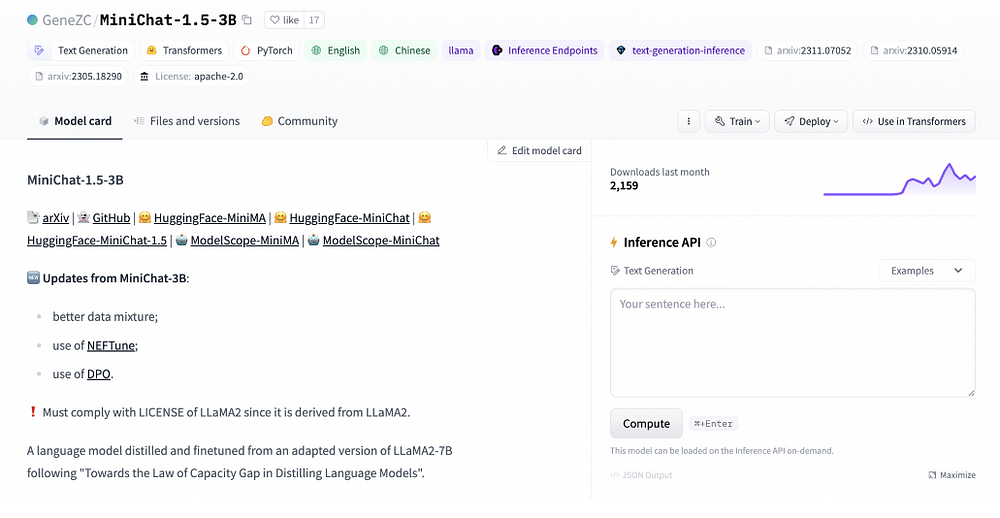

MiniChat-1.5-3B

MiniChat-1.5-3B, a language model distilled from LLaMA2-7B, is aimed at conversational AI applications. Despite its compact size, it performs competitively with larger models, surpassing other 3B models in GPT-4 evaluations and competing with several 7B chat models. Distillation gives it a reduced footprint and quicker inference times. Dialogue quality is improved using NEFTune and DPO. MiniChat-1.5-3B is a text-only model trained on an extensive dataset of text and code; it does not accept images or audio.
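As background on DPO (Direct Preference Optimization): it trains on pairs of a preferred and a rejected response, pushing the model to raise the preferred one's likelihood relative to a frozen reference model. The sketch below computes the DPO loss for a single pair from sequence log-probabilities. The numbers and the beta value are assumptions chosen for illustration, and this is not MiniChat's training code.

```python
import math


def dpo_loss(policy_chosen, policy_rejected, ref_chosen, ref_rejected, beta=0.1):
    """DPO loss for one preference pair, given sequence log-probabilities."""
    # How much more the policy favors the chosen response than the reference does
    margin = (policy_chosen - ref_chosen) - (policy_rejected - ref_rejected)
    # -log(sigmoid(beta * margin)), written as log(1 + exp(-beta * margin))
    return math.log1p(math.exp(-beta * margin))


# Policy identical to the reference: loss equals log(2)
print(dpo_loss(-5.0, -5.0, -5.0, -5.0))
# Policy already prefers the chosen response: loss is lower
print(dpo_loss(-4.0, -6.0, -5.0, -5.0))
```

The loss falls as the policy assigns relatively more probability to the chosen response, which is the behavior preference tuning is meant to encourage.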

Use Cases and Applications

Chatbots and Virtual Assistants: Create dynamic and knowledgeable chatbots for uses in customer support, educational environments, and entertainment settings.

Storytelling and Creative Writing: Craft engaging narratives, screenplays, poetry, and other creative textual forms.

Question Answering and Information Retrieval: Provide precise and timely answers to user inquiries, delivering pertinent information through a conversational approach.

Marcoroni-7B-v3

Marcoroni-7B-v3 is a 7-billion parameter multilingual generative model known for its broad range of capabilities, including text generation, language translation, creative content production, and answering complex questions informatively. Designed for efficiency and flexibility, it handles both text and code, serving as a versatile resource for various applications. Its parameter count allows it to model intricate language structures and produce fluent, sophisticated output. It can be prompted zero-shot, tackling tasks without task-specific fine-tuning, which makes it suitable for quick prototyping and experiments. Additionally, Marcoroni-7B-v3 is open source and available under a permissive license, promoting extensive use and exploration by a global community.

Use Cases and Applications

Question Answering: Marcoroni-7B-v3 thoroughly addresses inquiries, adeptly handling open-ended, complex, or unique questions.

Summarization: Utilize Marcoroni-7B-v3 to condense extensive texts into clear, brief summaries.

Paraphrasing: Marcoroni-7B-v3 skillfully rewords text, ensuring the original meaning remains intact.

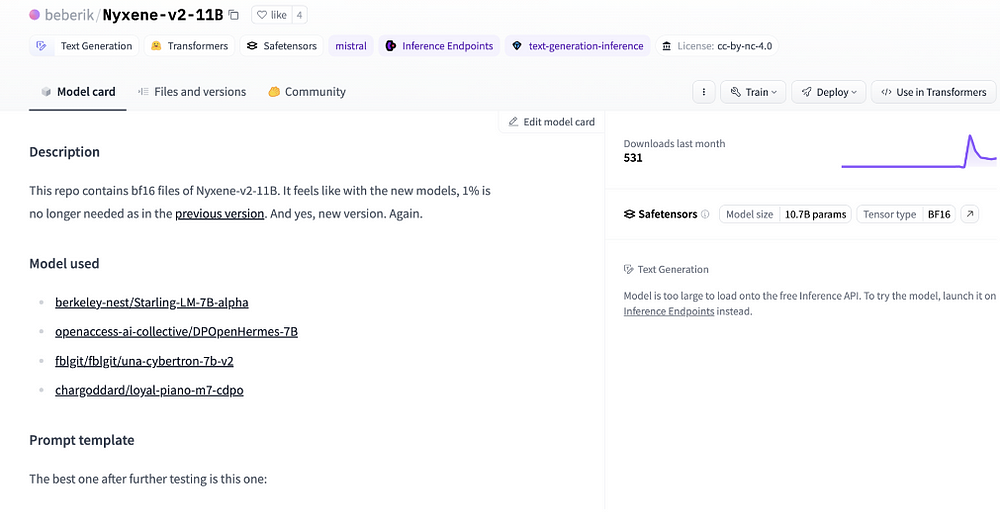

Nyxene-v2-11B

Hosted on Hugging Face, Nyxene-v2-11B is a large language model (LLM) with 11 billion parameters. This parameter count enables it to manage complex and varied tasks, and it is described as achieving higher accuracy and fluency in processing information and generating text than its smaller counterparts. Its weights are stored in the BF16 format, which uses 2 bytes per parameter, so it needs half the memory of FP32 and allows quicker inference. It also eliminates the need for the extra 1% of tokens that its predecessor required, maintaining high performance with less complexity.
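To put the BF16 point in numbers: BF16 stores each parameter in 16 bits (2 bytes), while FP32 uses 4 bytes. The back-of-the-envelope sketch below estimates the memory needed for the weights alone, taking the nominal 11 billion parameter count from the text. It ignores activations, the KV cache, and framework overhead, so real usage will be higher.

```python
BYTES_PER_PARAM = {"fp32": 4, "bf16": 2, "fp16": 2}


def weight_memory_gb(num_params, dtype="bf16"):
    """Approximate memory for the weights alone, in gigabytes (10**9 bytes)."""
    return num_params * BYTES_PER_PARAM[dtype] / 1e9


nominal_params = 11e9  # 11 billion parameters, as stated for this model
for dtype in ("fp32", "bf16"):
    print(dtype, weight_memory_gb(nominal_params, dtype), "GB")
```

The same arithmetic applies to any model in this list: multiply the parameter count by the bytes per parameter of the storage format.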

Use Cases and Applications

Code Completion: Utilize Nyxene-v2-11B to enhance code completion, helping developers craft code more swiftly and accurately.

Translation: Employ Nyxene-v2-11B for precise and smooth language translations, leveraging its advanced capabilities.

Data Summarization: Nyxene-v2-11B is adept at distilling vast quantities of text into clear, succinct summaries, streamlining information processing and saving valuable time.

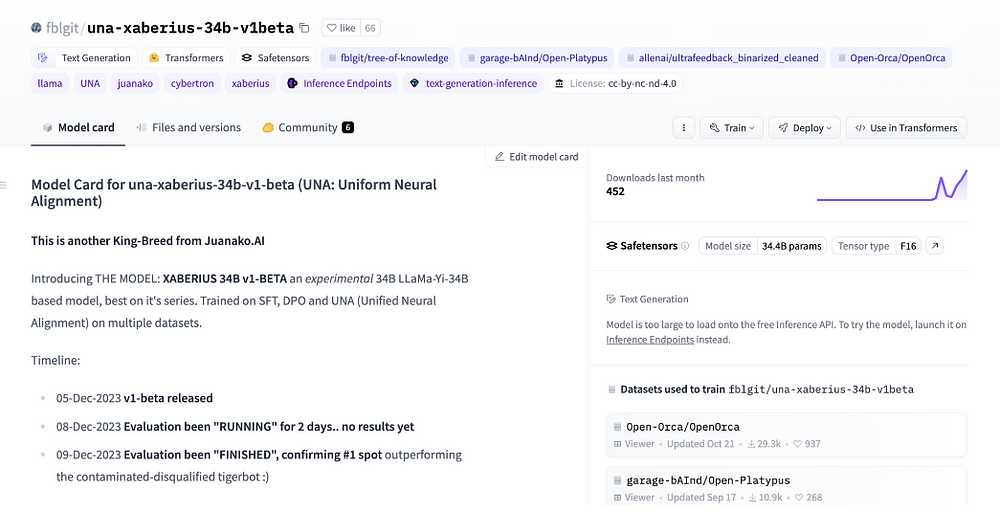

Una Xaberius 34B v1Beta

Una Xaberius 34B v1Beta, an experimental large language model (LLM) built on the LLaMa-Yi-34B architecture, was developed by FBL and launched in December 2023. With its 34 billion parameters, it ranks among the larger LLMs, delivering strong performance and adaptability.

Trained with techniques such as SFT, DPO, and UNA (Unified Neural Alignment), this model reached the top of the Hugging Face Open LLM Leaderboard around the time of its release, achieving notable scores across various benchmarks.

Una Xaberius 34B v1Beta is highly proficient in interpreting and responding to a wide range of prompts, especially those formatted in ChatML and Alpaca System. Its capabilities include answering questions, creating various types of creative text, and performing specific tasks such as writing poetry, generating code, and composing emails. As the field of large language models continues to grow, Una Xaberius 34B v1Beta stands out as a formidable player, advancing the frontiers of language comprehension and creation.
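ChatML and Alpaca are two common conventions for laying out a prompt. The sketch below builds both in their widely used forms; the helper names are hypothetical, and the exact template a given checkpoint expects (including how the system text is placed in the Alpaca variant) should be confirmed on its model card.

```python
def chatml_prompt(system, user):
    """Format a single-turn prompt in ChatML, ending where the assistant replies."""
    return (
        f"<|im_start|>system\n{system}<|im_end|>\n"
        f"<|im_start|>user\n{user}<|im_end|>\n"
        "<|im_start|>assistant\n"
    )


def alpaca_prompt(system, instruction):
    """Format a prompt in the Alpaca style, with the system text as a preamble."""
    return f"{system}\n\n### Instruction:\n{instruction}\n\n### Response:\n"


print(chatml_prompt("You are a helpful assistant.", "Write a haiku about rain."))
print(alpaca_prompt("You are a helpful assistant.", "Write a haiku about rain."))
```

Both formats end at the point where the model is expected to begin its answer, so the generated text continues directly from the prompt.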

Use Cases and Applications

Code Generation and Analysis: Leveraging its deep understanding of programming, Una Xaberius can help developers by generating code snippets and analyzing existing code structures.

Education and Training: Una Xaberius is capable of crafting customized educational programs and interactive training content, enhancing learning experiences.

Research and Development: As a sophisticated language model, Una Xaberius is well-suited for conducting research across fields like natural language processing, artificial intelligence, and related disciplines.

ShiningValiant

Valiant Labs presents ShiningValiant, a substantial large language model (LLM) developed on the Llama 2 framework and finely tuned on diverse datasets to promote insight, creativity, passion, and friendliness.

Boasting 70 billion parameters, ShiningValiant stands among the most extensive LLMs, producing text that is both rich and nuanced, thereby outperforming smaller models in depth and detail.

Its weights are distributed in safetensors, a file format for storing model tensors that, unlike pickle-based checkpoints, does not execute arbitrary code when loaded. Safetensors is a safe serialization format, not a content filter, so any filtering of harmful or offensive output must be handled separately. ShiningValiant is not limited to just text generation; it can also be tailored for specific applications such as answering questions, generating code, and creative writing.
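For reference, safetensors is a storage format for model weights: a file starts with an 8-byte little-endian header length, followed by a JSON header describing each tensor, followed by the raw tensor bytes. The sketch below writes and reads that layout for one tiny tensor using only the standard library. It is a simplified illustration with hypothetical helper names, not the official safetensors library, which should be used for real checkpoints.

```python
import json
import struct


def build_safetensors_bytes(name, shape, raw):
    """Serialize one float32 tensor in the safetensors layout."""
    header = {name: {"dtype": "F32", "shape": shape, "data_offsets": [0, len(raw)]}}
    header_bytes = json.dumps(header).encode("utf-8")
    # 8-byte little-endian header length, then the JSON header, then tensor bytes
    return struct.pack("<Q", len(header_bytes)) + header_bytes + raw


def read_safetensors_header(blob):
    """Parse only the JSON header; no code from the file is ever executed."""
    (header_len,) = struct.unpack("<Q", blob[:8])
    return json.loads(blob[8 : 8 + header_len])


raw = struct.pack("<2f", 1.0, 2.0)  # two float32 values, 8 bytes in total
blob = build_safetensors_bytes("weight", [2], raw)
print(read_safetensors_header(blob))
```

Because loading involves nothing more than reading a length, parsing JSON, and slicing bytes, the format avoids the code-execution risk of pickle-based checkpoints.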

Additionally, as a Llama 2 based model it works with both natural language and code, though it does not process or generate images, making ShiningValiant a versatile text tool for a wide range of uses.

Use Cases and Applications

Creative Content Creation: Utilize innovative language models to produce a variety of content, such as poems, scripts, code, musical compositions, emails, and letters.

Customer Support: Improve customer service by effectively addressing inquiries, providing personalized product suggestions, and swiftly resolving problems.

Research Assistance: Employ language models to help generate hypotheses, analyze data, and support the composition of research papers.

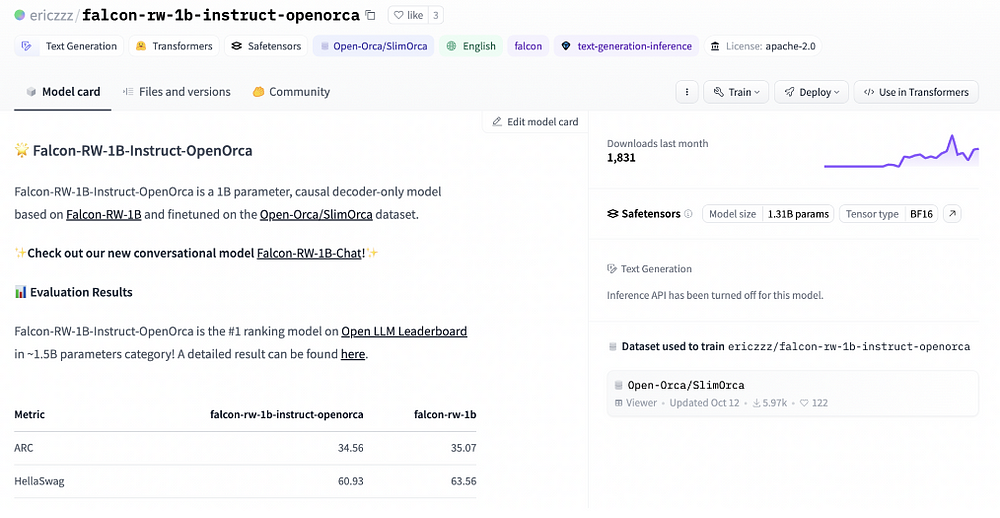

Falcon-RW-1B-Instruct-OpenOrca

Falcon-RW-1B-Instruct-OpenOrca is a powerful large language model (LLM) featuring 1 billion parameters. It is built upon the Falcon-RW-1B model and enhanced through training on the Open-Orca/SlimOrca dataset, which significantly improves its capabilities in following instructions, reasoning, and handling factual language tasks.

This model uses a causal decoder-only architecture, in which each token is generated from the tokens that precede it, and which supports text generation, language translation, and detailed responses to queries. At the time of writing, Falcon-RW-1B-Instruct-OpenOrca ranked highest on the Open LLM Leaderboard among models in the ~1.5B parameter range.
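"Causal" here means that each position may attend only to itself and to earlier positions, never to later ones. The minimal sketch below builds the corresponding attention mask as a lower-triangular matrix; it illustrates the concept only and is not code from the Falcon implementation.

```python
def causal_mask(seq_len):
    """Row i marks which positions token i may attend to (1 = visible, 0 = hidden)."""
    return [[1 if j <= i else 0 for j in range(seq_len)] for i in range(seq_len)]


for row in causal_mask(4):
    print(row)
```

This masking is what lets a decoder-only model generate text one token at a time, since predicting the next token never depends on tokens that have not been produced yet.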

Use Cases and Applications

Creative Text Generation: Produces a wide array of creative texts, such as poems, code, scripts, musical compositions, emails, and letters.

Instruction Following: Skillfully executes tasks by adhering accurately to given instructions.

Factual Language Tasks: Shows robust proficiency in activities that demand factual accuracy and logical reasoning.

Conclusion

Hugging Face’s collection of large language models presents vast opportunities for developers, researchers, and enthusiasts alike. These models are pivotal in pushing the boundaries of natural language understanding and generation, thanks to their diverse architectures and capabilities. As technology progresses, the potential uses and impacts of these models on various industries are limitless. The exploration and innovation within the field of Large Language Models are ongoing, heralding exciting advancements ahead.

If you’re keen to dive into the world of language models and artificial intelligence, you might consider participating in Analytics Vidhya’s GenAI Pinnacle program. This program offers practical experience, helping you to fully leverage the transformative power of these technologies. Begin your adventure with genAI and explore the vast possibilities offered by large language models today!

novita.ai is a one-stop platform for limitless creativity that gives you access to 100+ APIs. From image generation and language processing to audio enhancement and video manipulation, with cheap pay-as-you-go pricing, it frees you from GPU maintenance hassles while you build your own products. Try it for free.
