The Ultimate Guide to Stable Diffusion Textual Inversion


Welcome to the ultimate guide on stable diffusion textual inversion! In this comprehensive blog, we will delve into stable diffusion models and explore how textual inversion can personalize them. The technique teaches a text-to-image model a new concept by learning an embedding for a new text token, which can then be used in prompts like any other word.

Understanding Stable Diffusion Textual Inversion

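Despite its name, textual inversion is not a technique for rewriting or generating text. It "inverts" a set of images into the text embedding space: it finds an embedding for a new token that makes the model reproduce the concept shown in those images.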

Textual Inversion is a powerful training technique that enables the personalization of image generation models using only a small set of example images. By leveraging this technique, the model learns and updates its text embeddings to align with the provided example images.

To initiate this process, a special word or prompt is used, and the text embeddings associated with it are modified to match the desired image characteristics. This allows for a more targeted and personalized image generation based on the provided examples.

Stable diffusion textual inversion applies this technique to Stable Diffusion, a text-to-image model built on diffusion models, which have shown remarkable results in image generation. Because only the new embedding is learned, the rest of the model stays unchanged and the new concept can be used alongside everything the model already knows.

The Basics of Textual Inversion

Textual inversion works in the embedding space of the model’s text encoder. The embedding space is a vector space in which each token is mapped to an embedding vector. (The embeddings folder that appears later in this guide is simply where trained embedding files are stored on disk.) By learning a new vector in this space, we can introduce a concept that no existing token describes well.

In stable diffusion textual inversion, a new concept is introduced by initializing a new embedding vector for a new token. During training, the weights of the text encoder and the image generator stay frozen; only the new embedding vector is optimized, so that prompts containing the token produce images that match the example images.
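
To make the idea concrete, here is a minimal toy sketch in plain Python. It is not how Stable Diffusion is actually trained: the real loss comes from the diffusion model's denoising objective on the example images, while this sketch swaps in a simple squared distance to a made-up `target` vector. The token names, vectors, and learning rate are all assumptions for illustration. What it does show faithfully is the key property of textual inversion: the existing embeddings stay frozen and only the new token's vector is updated.

```python
# Toy embedding table. In a real model this lives inside the text encoder.
embeddings = {
    "photo": [0.2, -0.1, 0.4, 0.0],
    "of": [0.0, 0.3, -0.2, 0.1],
}
# Keep a copy so we can check that existing tokens are never modified.
frozen_copy = {token: vector[:] for token, vector in embeddings.items()}

# Add a new placeholder token, initialized from an existing token's vector.
new_token = "<my-concept>"
embeddings[new_token] = embeddings["photo"][:]

# Hypothetical stand-in for "the vector the example images call for".
target = [0.9, -0.5, 0.1, 0.7]


def loss(vector):
    """Squared distance to the target (a stand-in for the real training loss)."""
    return sum((v - t) ** 2 for v, t in zip(vector, target))


learning_rate = 0.1
for step in range(200):
    vector = embeddings[new_token]
    # Gradient of the squared distance with respect to the new vector.
    gradient = [2 * (v - t) for v, t in zip(vector, target)]
    # Only the new token's vector is updated; everything else stays frozen.
    embeddings[new_token] = [v - learning_rate * g for v, g in zip(vector, gradient)]

final_loss = loss(embeddings[new_token])
```

After the loop, `final_loss` is close to zero while `embeddings["photo"]` and `embeddings["of"]` are unchanged, which mirrors why a trained embedding can be added to a model without disturbing its existing vocabulary.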

Role and Importance of Textual Inversion in Stable Diffusion

Textual inversion gives stable diffusion models a lightweight way to learn new concepts: the diffusion model does the image generation, and the learned embedding tells it what to generate.

Stable diffusion models generate image samples through a diffusion process: starting from noise, the model gradually refines the image over a series of denoising steps, guided by the text prompt. Textual inversion embeddings extend what the prompt can express by adding tokens for specific subjects or styles.

Integrating textual inversion with stable diffusion models therefore allows more precise control over the image output. Placing the learned token in a prompt steers generation toward the concept it represents, and the token can be combined with ordinary words in the same prompt.

Training implementations differ in what they support: some offer gradient accumulation and larger batch sizes, while the web UI training tab described later in this guide does not. In either case, the result is an embedding that can be reused across prompts.

Getting Started with Stable Diffusion Models

Before diving into the specifics of stable diffusion textual inversion, it’s important to familiarize yourself with stable diffusion models. These models have gained popularity for their ability to generate high-quality image samples through a diffusion process.

By setting up stable diffusion v1-5 models, you can take advantage of the latest advancements in stable diffusion image generation. This involves installing the models, configuring the settings, and understanding the nuances of stable diffusion model training and inference.

Installing the Stable Diffusion v1-5 Models

To start working with stable diffusion v1-5 models, you’ll need to install them on your preferred platform. One popular option is to use Google Colab, a cloud-based platform for running Python code. Another option is to download the models from GitHub and run them locally on your machine.

Once the models are installed, you can explore various settings and configurations to customize the stable diffusion model according to your needs. These settings include learning rate, batch size, and specific concepts you want the model to focus on. By fine-tuning these parameters, you can optimize the stable diffusion model for your specific project requirements.

Configuring Settings for Your New Concept

When working with stable diffusion models, it’s essential to configure the settings to align with your new concept or specific prompts. This involves defining a new token and embedding vector that represents your desired concept within the stable diffusion model.

By tweaking the learning rate and batch size, you can control how quickly the embedding is adjusted during training. These settings play a crucial role in ensuring that training converges on the desired concept and that the generated images match it. A learning rate that is too high can destabilize training, while one that is too low makes training slow.

Creating the Dataset for Stable Diffusion Textual Inversion

Before training a stable diffusion model, you need to create a robust dataset. This dataset will serve as the foundation for training the model and generating image samples with textual inversion.

Steps to Create a Robust Dataset

Creating a training dataset for stable diffusion textual inversion involves several key steps:

- Gather sample images that represent the concepts or themes you want the model to learn.

- Define specific concepts or prompts that will guide the model during training.

- Generate new embeddings or modify existing text tokens to capture the desired concepts.

- Compile the training data, combining the sample images and the corresponding text prompts.

- Optimize the dataset by fine-tuning the embeddings and refining the text tokens for each concept.

- Incorporate specific prompts and negative prompts into the dataset to provide a diverse range of training examples for the stable diffusion model. These prompts serve as guidance for the model, enabling it to learn and generate image samples that align with the desired concepts.
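
The "compile the training data" step above can be sketched as pairing each image with one or more prompts. The sketch below assumes the prompts come from a template that uses the `[name]` and `[filewords]` placeholders described later in this guide; the helper names `filewords` and `build_pairs`, the example filenames, and the way words are extracted from a filename are all hypothetical choices for illustration, not the web UI's actual code.

```python
from pathlib import PurePath


def filewords(filename):
    """Turn a filename such as 'sitting_on_grass.png' into 'sitting on grass'.

    Assumed convention: underscores and hyphens in the file name separate words.
    """
    stem = PurePath(filename).stem
    return stem.replace("_", " ").replace("-", " ").strip()


def build_pairs(image_files, templates, name):
    """Return (image_file, prompt) pairs, one per image and template line."""
    pairs = []
    for image_file in image_files:
        for template in templates:
            prompt = template.replace("[name]", name)
            prompt = prompt.replace("[filewords]", filewords(image_file))
            pairs.append((image_file, prompt))
    return pairs


templates = ["a photo of [name]", "a photo of [name], [filewords]"]
images = ["sitting_on_grass.png", "close-up.jpg"]
pairs = build_pairs(images, templates, "my-concept")

for image_file, prompt in pairs:
    print(image_file, "->", prompt)
```

Descriptive file names matter under this convention: the words in each name end up in the training prompt, so they should describe what varies between images rather than the concept itself.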

Working with Model Files

Now that you have a solid understanding of stable diffusion models and have created a dataset, let’s explore how to work with model files to train and utilize stable diffusion models effectively.

How to Download the Required Model Files

To download the required model files, you can refer to the official resources, such as GitHub repositories, where the stable diffusion models are hosted. Once you have downloaded the model files, store them in a designated directory or folder on your machine. This directory serves as the centralized location for accessing and managing the model inference files, checkpoint files, and other relevant resources.

Setting Up a New Token for Your Project

Setting up a new token is crucial for customizing stable diffusion models to represent specific concepts in your project. By establishing a new token, you can seamlessly integrate unique embedding vectors that capture the essence of your desired concepts.

To set up a new token, create an embeddings folder to hold the embedding file for your new token. The embedding starts from an initial vector and is refined during training; once trained, the file in this folder is what lets the model recognize the token in prompts.

Here is an example of the Usada Pekora embedding that Tslmy trained on the WD1.2 model, on 53 pictures (119 augmented) for 19500 steps, with the vectors-per-token setting at 8. The sample image was generated with the following prompt and settings:

portrait of usada pekora

Steps: 20, Sampler: Euler a, CFG scale: 7, Seed: 4077357776, Size: 512x512, Model hash: 45dee52b
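
The settings line above is a comma-separated list of `key: value` pairs, which makes it easy to read back programmatically when you want to reproduce an image. The following is a minimal sketch assuming exactly that layout; `parse_generation_params` is a hypothetical helper, and the naive split would break if a value itself contained a comma followed by a space.

```python
def parse_generation_params(line):
    """Split a 'Key: value, Key: value' settings line into a dictionary."""
    params = {}
    for item in line.split(", "):
        key, _, value = item.partition(": ")
        params[key.strip()] = value.strip()
    return params


line = (
    "Steps: 20, Sampler: Euler a, CFG scale: 7, Seed: 4077357776, "
    "Size: 512x512, Model hash: 45dee52b"
)
params = parse_generation_params(line)

# Values are kept as strings; convert the ones you need.
width, height = (int(n) for n in params["Size"].split("x"))
steps = int(params["Steps"])
```

Keeping the seed, sampler, and model hash together with the prompt is what makes a comparison between embeddings meaningful, since only the embedding changes between runs.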

The Training Process

With the dataset ready and the model files set up, it’s time to dive into the training process of stable diffusion models.

Textual inversion tab

Experimental support for training embeddings in user interface.

- create a new empty embedding, select directory with images, train the embedding on it

- the feature is very raw, use at own risk

- I was able to reproduce results I got with other repos in training anime artists as styles, after a few tens of thousands of steps

- works with half precision floats, but needs experimentation to see if results will be just as good

- if you have enough memory, safer to run with --no-half --precision full

- Section for UI to run preprocessing for images automatically.

- you can interrupt and resume training without any loss of data (except for AdamW optimization parameters, but it seems none of existing repos save those anyway so the general opinion is they are not important)

- no support for batch sizes or gradient accumulation

- it should not be possible to run this with --lowvram and --medvram flags.

portrait of usada pekora, mignon

Steps: 20, Sampler: Euler a, CFG scale: 7, Seed: 4077357776, Size: 512x512, Model hash: 45dee52b

Essential Elements for Training Set Up

The training setup of stable diffusion models involves several essential elements:

- Configuring the embeddings folder, which contains the training dataset and embedding vectors specific to your desired concepts.

- Precisely initializing the training data, ensuring that it captures the unique characteristics of each concept you want the model to learn.

- Utilizing specific prompts and negative prompts to guide the training process and optimize the model for different concepts.

- Understanding the unique embedding vector characteristics of the stable diffusion model and leveraging them for training image generation models with diverse prompts.

- Customizing the training image generation process to account for different concepts, ensuring that the stable diffusion model generates images that align with the desired prompts.

Training an embedding

- Embedding: select the embedding you want to train from this dropdown.

- Learning rate: how fast should the training go. Setting this parameter too high may break the embedding. If you see Loss: nan in the training info textbox, that means you failed and the embedding is dead. With the default value, this should not happen. It's possible to specify multiple learning rates in this setting using the following syntax: 0.005:100, 1e-3:1000, 1e-5. This will train with lr of 0.005 for first 100 steps, then 1e-3 until 1000 steps, then 1e-5 until the end.

- Dataset directory: directory with images for training. They all must be square.

- Log directory: sample images and copies of partially trained embeddings will be written to this directory.

- Prompt template file: text file with prompts, one per line, for training the model on. See files in directory textual_inversion_templates for what you can do with those. Use style.txt when training styles, and subject.txt when training object embeddings. Following tags can be used in the file:

  - [name]: the name of embedding

  - [filewords]: words from the file name of the image from the dataset.

- Max steps: training will stop after this many steps have been completed. A step is when one picture (or one batch of pictures, but batches are currently not supported) is shown to the model and is used to improve embedding. If you interrupt training and resume it at a later date, the number of steps is preserved.

- Save images with embedding in PNG chunks: every time an image is generated it is combined with the most recently logged embedding and saved to image_embeddings in a format that can be both shared as an image, and placed into your embeddings folder and loaded.

- Preview prompt: if not empty, this prompt will be used to generate preview pictures. If empty, the prompt from training will be used.
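
The multi-rate syntax from the learning rate setting above (0.005:100, 1e-3:1000, 1e-5) can be read as a list of "rate until step" entries with an open-ended final rate. Below is a minimal sketch of a parser for that syntax. The function names `parse_schedule` and `rate_at` are hypothetical and this is not the web UI's own code; the sketch also assumes that steps are counted from 1 and that each boundary step still uses the earlier rate.

```python
def parse_schedule(spec):
    """Parse 'rate:until_step, rate:until_step, rate' into (rate, end_step) pairs.

    end_step is None for an open-ended entry that lasts until training stops.
    """
    schedule = []
    for part in spec.split(","):
        part = part.strip()
        if ":" in part:
            rate, end_step = part.split(":")
            schedule.append((float(rate), int(end_step)))
        else:
            schedule.append((float(part), None))
    return schedule


def rate_at(schedule, step):
    """Return the learning rate in effect at a given 1-based step."""
    for rate, end_step in schedule:
        if end_step is None or step <= end_step:
            return rate
    # Assumption: past the last bounded entry, keep using the final rate.
    return schedule[-1][0]


schedule = parse_schedule("0.005:100, 1e-3:1000, 1e-5")
for step in (1, 100, 101, 1000, 1001):
    print(step, rate_at(schedule, step))
```

Starting with a higher rate and lowering it later is a common way to move quickly at first and then settle, which fits the warning above that a rate kept too high can end in Loss: nan.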

portrait of usada pekora

Steps: 20, Sampler: Euler a, CFG scale: 7, Seed: 4077357776, Size: 512x512, Model hash: 7460a6fa

Running the Training Function

To initiate the training process, you need to run the training function, incorporating the dataset, model files, and training configuration. The training data, consisting of the dataset you created earlier, guides the optimization of the embedding so that prompts containing your new token produce images of the concept.

By specifying the number of steps, you can control the duration of the training process. The step count does not change the learning rate itself, but it determines how many times the model sees the training images and, if you use a learning rate schedule, which rate is in effect at each point. Too few steps leave the embedding undertrained, while too many can overfit it to the example images, so it is worth checking the sample images written during training.
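
To get a feel for what a step count means, it helps to convert it into passes over the dataset. The sketch below uses the figures from the Usada Pekora example earlier in this guide (19500 steps, 119 augmented images) and assumes one image per step, since the training tab described above does not support batches. The helper names are hypothetical.

```python
import math


def passes_over_dataset(max_steps, num_images, batch_size=1):
    """How many times each image is seen, on average, during training."""
    return max_steps * batch_size / num_images


def steps_for_passes(passes, num_images, batch_size=1):
    """Number of steps needed to show every image `passes` times."""
    return math.ceil(passes * num_images / batch_size)


# Figures from the Usada Pekora example: each image is seen roughly 164 times.
passes = passes_over_dataset(19500, 119)
print(f"{passes:.1f} passes over the dataset")

# Going the other way: 100 passes over 53 images.
print(steps_for_passes(100, 53), "steps")
```

Thinking in passes makes step counts comparable across datasets of different sizes: the same number of steps is far more training per image on 20 pictures than on 200.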

Inference Code and Your Newly Trained Embedding

Now that the training process is complete, you can move on to running inference code and using the newly trained embedding to generate images.

How to Run Inference Code

Running inference code means using the stable diffusion model to generate image samples from prompts that include your new token. Depending on your specific project setup, you may need to consider the hardware requirements, such as an NVIDIA GPU, for efficient inference.

With the default settings, you can quickly run the inference code and inspect the generated outputs. Combining the new token with descriptive words in the prompt lets you place the learned subject or style in new contexts.

Utilizing Your Newly Trained Embedding

The newly trained embedding opens up a range of uses. You can reference it in prompts by its token name to generate images of the learned concept, combine it with other embeddings, and use style embeddings to apply a learned look to new subjects.

At inference time, the text encoder maps the new token to the learned embedding vector, so the token conditions image generation like any other word in the prompt. An embedding trained on a particular look acts as a form of style transfer: adding it to a prompt renders otherwise unrelated subjects in that style.

Integration with Stable Diffusion Web UI

To facilitate a seamless user experience, stable diffusion models can be integrated with web-based user interfaces (UI). These web UIs provide convenient access to the stable diffusion models, allowing users to train embeddings and generate images with ease.

Launching the Web UI with the Pre-trained Embedding

Once the trained embedding file is in the web UI’s embeddings folder, launching the web UI makes it available: using the embedding’s name in a prompt applies it. The web UI offers intuitive controls and options for generating images with textual inversion embeddings.

The textual inversion tab within the web UI is where embeddings are created and trained, with prompt template files supplying the training prompts. The trained embeddings are then used from the regular image generation tabs.

Understanding Hypernetworks and Their Role

Alongside textual inversion, hypernetworks are a related but distinct way of customizing a stable diffusion model.

Diving Deeper into Hypernetworks

Hypernetworks, as a general concept in machine learning, are networks that generate or modify the weights of another network. In the stable diffusion context, the term refers to small auxiliary networks that are trained while the base model stays frozen and that alter how the image generator attends to the prompt. Unlike textual inversion, they do not produce a new token embedding.

The two approaches can complement each other: an embedding adds a concept to the prompt vocabulary, while a hypernetwork shifts the model’s output as a whole.

Practical Usage of Hypernetworks

Practically, hypernetworks are trained in much the same way as embeddings, from a directory of example images, and are selected at generation time. This lets researchers and practitioners fine-tune the model’s behavior for their use cases without retraining the base model.

Hypernetworks are commonly used for style: once loaded, they push generated images toward the look they were trained on, whatever the subject of the prompt. Used alongside textual inversion embeddings, they expand the possibilities for personalizing stable diffusion.

What’s Next in the Field of Textual Inversion?

As stable diffusion textual inversion continues to evolve, the future holds exciting prospects for AI, generative models, and textual inversion research. Here are some potential future trends to watch out for:

- AI-driven generative models will become increasingly sophisticated, enabling even more realistic image generation.

- Research efforts will focus on refining stable diffusion models, optimizing learning rates, and exploring new prompts and negative prompt strategies.

- The integration of stable diffusion models with web-based user interfaces, such as Hugging Face’s web UI, will revolutionize the accessibility and usability of stable diffusion textual inversion.

- Further advancements in embedding techniques and model architectures will improve how faithfully learned embeddings capture a concept, enabling more accurate and prompt-relevant image generation.

Conclusion

In conclusion, stable diffusion textual inversion is a powerful technique for teaching an image generation model new concepts from a small set of example images. By understanding the basics of textual inversion and its role in stable diffusion, you can create robust datasets and train embeddings that produce accurate images of your concept. Additionally, integrating stable diffusion with a web UI and exploring the potential of hypernetworks further extends what you can personalize. As the field continues to advance, it is exciting to see what innovations and applications lie ahead. Whether you are a researcher, developer, or simply curious about this technology, stable diffusion textual inversion opens up new possibilities for creative and practical use of image generation.

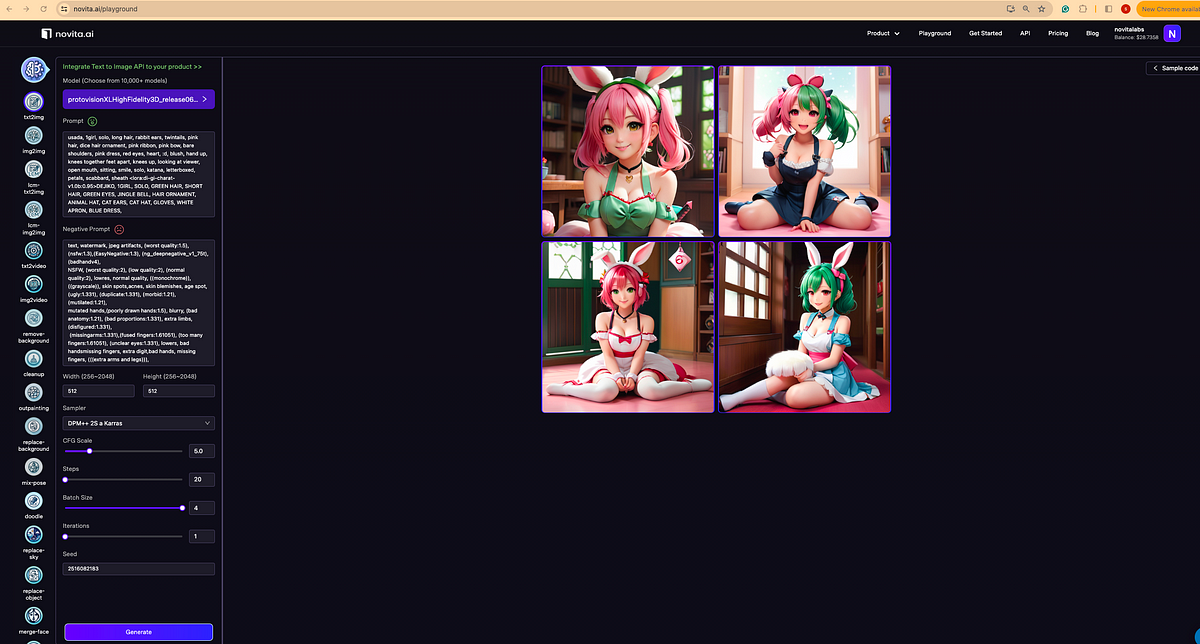
