Mastering the Technique: Train Lora with Automatic1111

Learn how to train a LoRA and use it with Automatic1111. This guide covers the setup, the training steps, and the most common errors.

Key Highlights

- LoRA Overview: LoRA (Low-Rank Adaptation) is a lightweight fine-tuning technique for Stable Diffusion that adapts a base model to a new subject or style using captioned training images.

- Features: LoRA files are small, training uses a configurable batch size, and low-rank parameter decomposition keeps fine-tuning efficient. Trained files can be selected from the web UI alongside model checkpoints.

- Comparison: Compared with full fine-tuning, LoRA is more parameter-efficient, trains faster, and adapts more easily, which suits environments with limited resources.

- Automatic1111: A web-based UI for Stable Diffusion, facilitating easy interaction and image generation.

- Training with Novita AI: Provides a platform for training custom LoRA models with a step-by-step process.

- Troubleshooting: Addressing common training errors like bf16 mixed precision and leveraging advanced techniques for optimal results.

Introduction

In the evolving field of AI-driven image generation, LoRA (Low-Rank Adaptation) stands out for its efficiency. It adapts Stable Diffusion models to new subjects and styles with minimal computational resources. This blog covers the benefits of LoRA, compares it with full fine-tuning, and provides a guide to setting up and training a LoRA for use with Automatic1111. With the help of Novita AI, we’ll also address common errors and share advanced training techniques. With the right preparation, you’ll be equipped to master LoRA training and elevate your AI image generation capabilities!

Understanding LoRA Automatic1111

What is LoRA

LoRA (Low-Rank Adaptation) is not a standalone model but a lightweight fine-tuning technique applied to a Stable Diffusion base model. It is trained on a set of images paired with text captions so that the base model learns a new subject or style. The model output name and training settings are defined in the training configuration, alongside the choice of base model. Once trained, the LoRA file is loaded in the Stable Diffusion web UI, where it can be applied when generating a single image or a batch. Tools for training and using LoRA are available on GitHub and can be run on Google Colab.

Features of LoRA

- Model Resources Support: The AUTOMATIC1111 web UI has a dedicated Lora tab for selecting trained LoRA files, alongside the usual model checkpoint selection. Trained files go in the web UI’s Lora folder; training images and caption files are kept in a separate dataset folder.

- Small Model Size: A LoRA file is compact, often 10 to 100 times smaller than a full checkpoint model, which makes it easy to store and share.

- Configurable Batch Size: The batch size can be adjusted during training to trade off GPU memory use against training speed.

- Parameter Decomposition: LoRA keeps the original Stable Diffusion weights frozen and represents the update to each targeted weight matrix as the product of two low-rank matrices, maintaining high performance with far fewer trainable parameters.

- Selective Fine-Tuning: Only these low-rank matrices are trained for a specific task or style, capturing the essential adjustments.

- Efficiency: LoRA accelerates fine-tuning and reduces resource usage by updating fewer parameters, especially beneficial for large models.

- Quality Preservation: LoRA maintains image quality and fidelity with fewer parameter updates through the efficient use of low-rank matrices.
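The parameter decomposition described in the list above can be illustrated with a toy example. This is a minimal sketch in plain Python, not how any training tool implements it: the matrix sizes, the `alpha` scaling value, and the helper names `matmul` and `add_scaled` are all assumptions chosen for readability.

```python
import random


def matmul(a, b):
    """Multiply two matrices stored as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def add_scaled(w, delta, scale):
    """Return w + scale * delta, element by element."""
    return [[wi + scale * di for wi, di in zip(wr, dr)] for wr, dr in zip(w, delta)]


random.seed(0)
d_out, d_in, rank, alpha = 6, 8, 2, 2.0  # toy sizes, not real Stable Diffusion dimensions

# Frozen base weight W (d_out x d_in): never updated during LoRA training.
W = [[random.gauss(0, 1) for _ in range(d_in)] for _ in range(d_out)]

# Trainable low-rank factors. B starts at zero, so before any training
# the adapted layer behaves exactly like the base layer.
A = [[random.gauss(0, 0.01) for _ in range(d_in)] for _ in range(rank)]  # rank x d_in
B = [[0.0] * rank for _ in range(d_out)]                                   # d_out x rank

# Effective weight: W + (alpha / rank) * (B @ A)
W_eff = add_scaled(W, matmul(B, A), alpha / rank)

print("unchanged before training:", W_eff == W)
```

Only `A` and `B` would receive gradient updates during training; `W` stays as shipped in the base checkpoint, which is why the resulting LoRA file only needs to store the two small matrices.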

Comparing LoRA with other models

Efficiency of Parameters

- LoRA: By introducing low-rank matrices, only a small number of parameters are updated, reducing computation and storage costs.

- Other models: Full fine-tuning requires updating every parameter of the model, resulting in high computation and storage costs.
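To make the difference concrete, the following sketch counts trainable values for a single weight matrix under both approaches. The 768 x 768 layer size and the ranks are illustrative assumptions, and the function names are hypothetical.

```python
def full_finetune_params(d_out: int, d_in: int) -> int:
    """Values updated when the whole d_out x d_in weight matrix is trained."""
    return d_out * d_in


def lora_params(d_out: int, d_in: int, rank: int) -> int:
    """Values updated when only the two low-rank factors are trained."""
    return rank * (d_out + d_in)


d_out = d_in = 768  # illustrative layer size, assumed for this example
full = full_finetune_params(d_out, d_in)

for rank in (4, 8, 32):
    lora = lora_params(d_out, d_in, rank)
    print(f"rank {rank:>2}: {lora:>6} trainable values vs {full} ({lora / full:.1%})")
```

At rank 4 this layer needs 6,144 trainable values instead of 589,824, roughly 1% of the original. Higher ranks capture more detail at the cost of a larger file.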

Training Speed

- LoRA: Because only a small number of parameters are trained, often on a small dataset, training is fast.

- Other models: Full parameter fine-tuning takes longer to train.

Adaptability

- LoRA: Capable of quickly adapting to multiple tasks, especially suitable for scenarios requiring rapid iterations.

- Other models: Full fine-tuning usually means retraining and storing a complete model for each specific task.

Performance

- LoRA: Performs well in some tasks, approaching the effects of full parameter fine-tuning.

- Other models: Full parameter fine-tuning usually has performance advantages but at a high cost.

Application Scenarios

- LoRA: Suitable for environments with limited resources or applications requiring frequent model updates.

- Other models: In resource-abundant situations, full parameter fine-tuning may be preferred for optimal performance.

What is Automatic1111 in Stable Diffusion LoRA

Automatic1111 refers to a popular web-based user interface for Stable Diffusion, a generative model for creating images from text prompts. This UI provides an accessible way to interact with Stable Diffusion, allowing users to generate and refine images with various settings and options.

To see how it works, you can watch this YouTube video about Stable Diffusion Automatic1111.
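In the web UI, a trained LoRA is activated by adding a tag of the form `<lora:filename:weight>` to the prompt. The sketch below builds such a prompt and wraps it in a request body. It assumes the web UI was started with the `--api` flag so that the body could be posted to the `/sdapi/v1/txt2img` endpoint; the helper names and `my_subject_lora` are hypothetical placeholders for your own file.

```python
import json


def lora_tag(name: str, weight: float = 1.0) -> str:
    """Build the prompt tag that activates a LoRA file by name."""
    return f"<lora:{name}:{weight:g}>"


def build_txt2img_payload(prompt: str, lora_name: str, lora_weight: float = 0.8,
                          negative_prompt: str = "", steps: int = 25) -> dict:
    """Assemble a request body for text-to-image generation with one LoRA applied."""
    return {
        "prompt": f"{prompt} {lora_tag(lora_name, lora_weight)}",
        "negative_prompt": negative_prompt,
        "steps": steps,
        "width": 512,
        "height": 512,
        "cfg_scale": 7,
    }


# "my_subject_lora" is a placeholder for the file name without its extension.
payload = build_txt2img_payload(
    "portrait photo of a woman",
    "my_subject_lora",
    negative_prompt="blurry, low quality",
)
print(json.dumps(payload, indent=2))
```

The same tag can simply be typed into the prompt box by hand. Lowering the weight below 1 reduces the LoRA's influence, which is a quick way to check whether a model is overpowering the prompt.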

Preparing to Train Lora with Automatic1111

Before you start LoRA training, set up a suitable workspace. Install AUTOMATIC1111, collect and caption your training images, and decide which subject or style the LoRA should capture. Make sure you have the required hardware and software in place. If you do not have a local GPU, Google Colab is a common alternative, and the tools themselves are distributed through GitHub.

Technical Requirements to Train Lora with Automatic1111

- High-Quality Dataset: For optimal results, ensure you have a high-quality dataset for training the model.

- Web Browser Access: Access the Stable Diffusion web UI through a web browser.

- GPU Support: Efficient LoRA training requires GPU support to handle computations effectively.

- Style Configuration Files: Prepare the training configuration (base model, model output name, and training settings) before starting the training process.

- LoRA File and Images Folder: Keep the training images and their caption files together in one images folder, and place the trained LoRA file in the web UI’s Lora folder so it can be loaded.

- Machine Learning Basics: A basic understanding of fine-tuning and of how Google Colab runs notebooks on hosted GPUs makes the training process easier to follow.

- GitHub: AUTOMATIC1111 and most training tools are distributed through GitHub, so basic familiarity with it helps for installation, updates, and finding community information.
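Before training, it helps to confirm that the images folder is complete. The sketch below checks that every image has a caption file with the same base name. It assumes captions are stored as `.txt` files next to the images, which is a common convention but may differ in your tool; the function name and the demo file names are hypothetical.

```python
import tempfile
from pathlib import Path

IMAGE_EXTENSIONS = {".png", ".jpg", ".jpeg", ".webp"}


def find_missing_captions(image_dir: str, caption_ext: str = ".txt") -> list[str]:
    """Return the names of images that have no same-named caption file beside them."""
    missing = []
    for path in sorted(Path(image_dir).iterdir()):
        if path.suffix.lower() in IMAGE_EXTENSIONS:
            if not path.with_suffix(caption_ext).exists():
                missing.append(path.name)
    return missing


# Demo on a throwaway folder: one captioned image, one without a caption.
with tempfile.TemporaryDirectory() as demo_dir:
    Path(demo_dir, "subject_01.png").write_bytes(b"")
    Path(demo_dir, "subject_01.txt").write_text("a photo of the subject, smiling")
    Path(demo_dir, "subject_02.jpg").write_bytes(b"")
    demo_missing = find_missing_captions(demo_dir)
    print("images without captions:", demo_missing)
```

Pointing `find_missing_captions` at your real dataset folder before a run is cheaper than discovering a missing caption partway through training.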

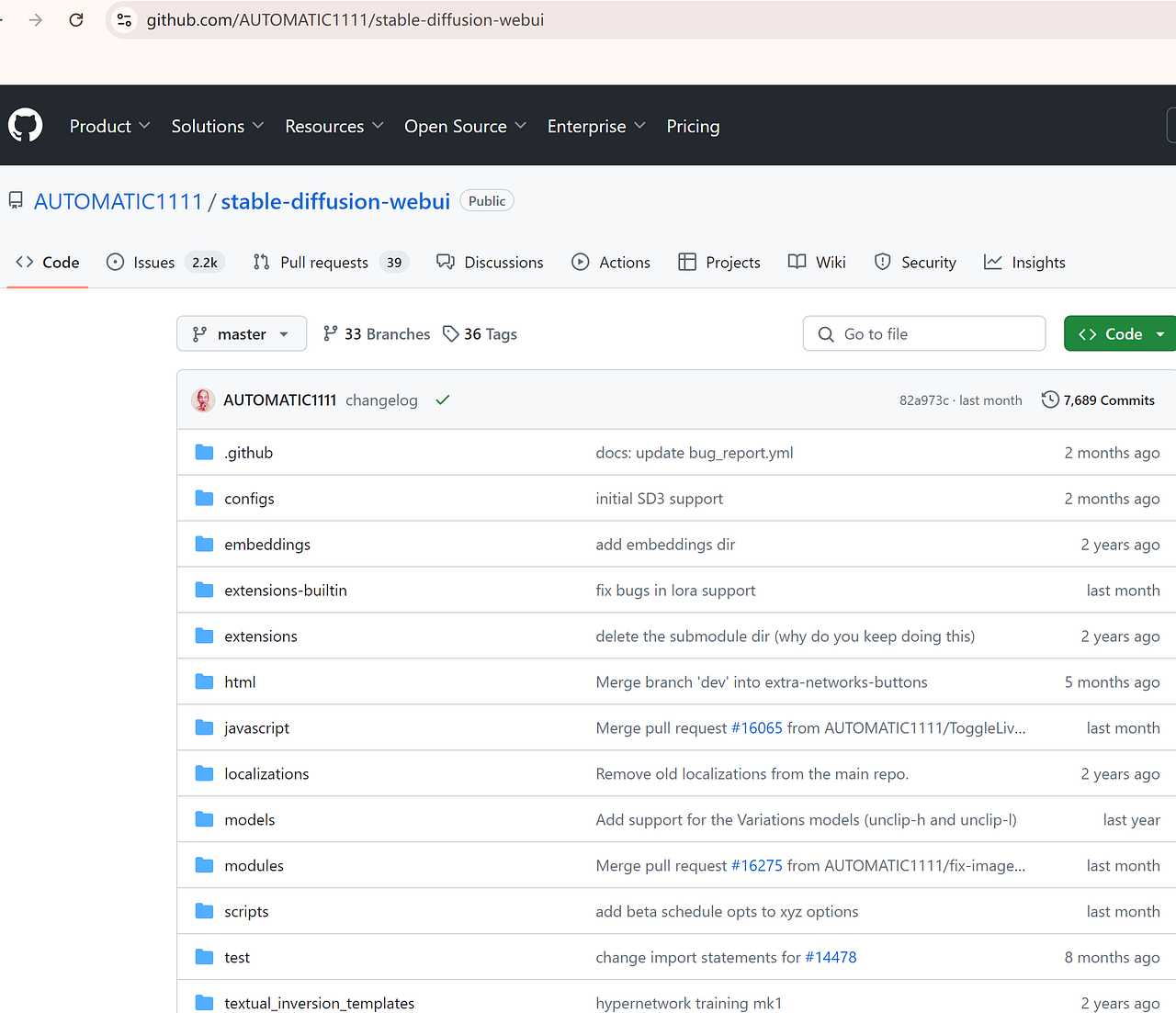

Installation of AUTOMATIC1111

A working AUTOMATIC1111 installation is the starting point for using and testing a LoRA. To install it, clone the stable-diffusion-webui repository from GitHub and run its launch script (webui-user.bat on Windows, webui.sh on Linux and macOS); it can also be run on Google Colab. Once the web UI opens in your browser, the Generate button becomes available and trained LoRA files can be loaded. For the dataset, prefer clean, high-quality images (PNG works well) of a single subject or style, such as a single-subject anime dataset, and remove low-quality images before training. Note that the web UI is mainly used to load and test a LoRA; training itself is typically done with a separate tool such as Kohya GUI or a hosted service. A beginner LoRA tutorial is a good starting point for your first training run.

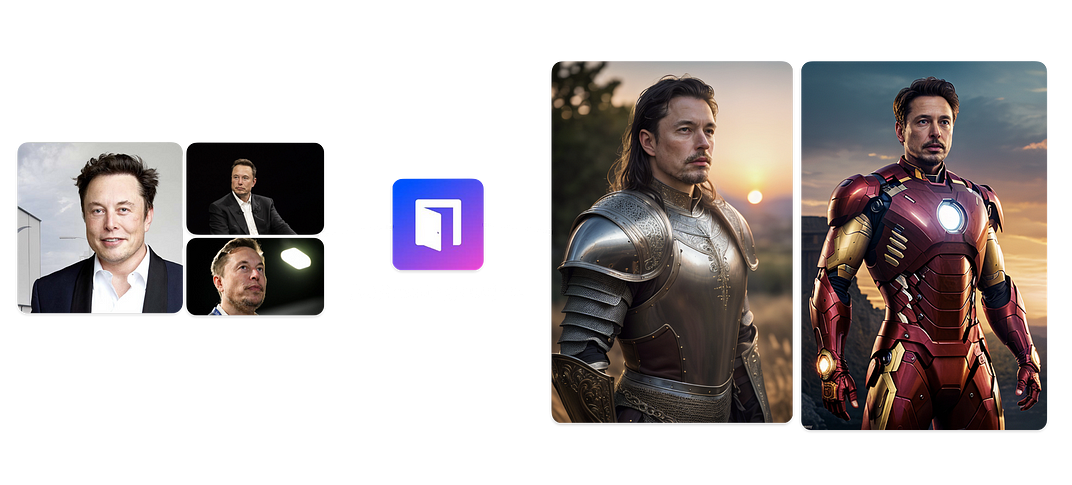

Efficient Approach: Do LoRA Training with Novita AI

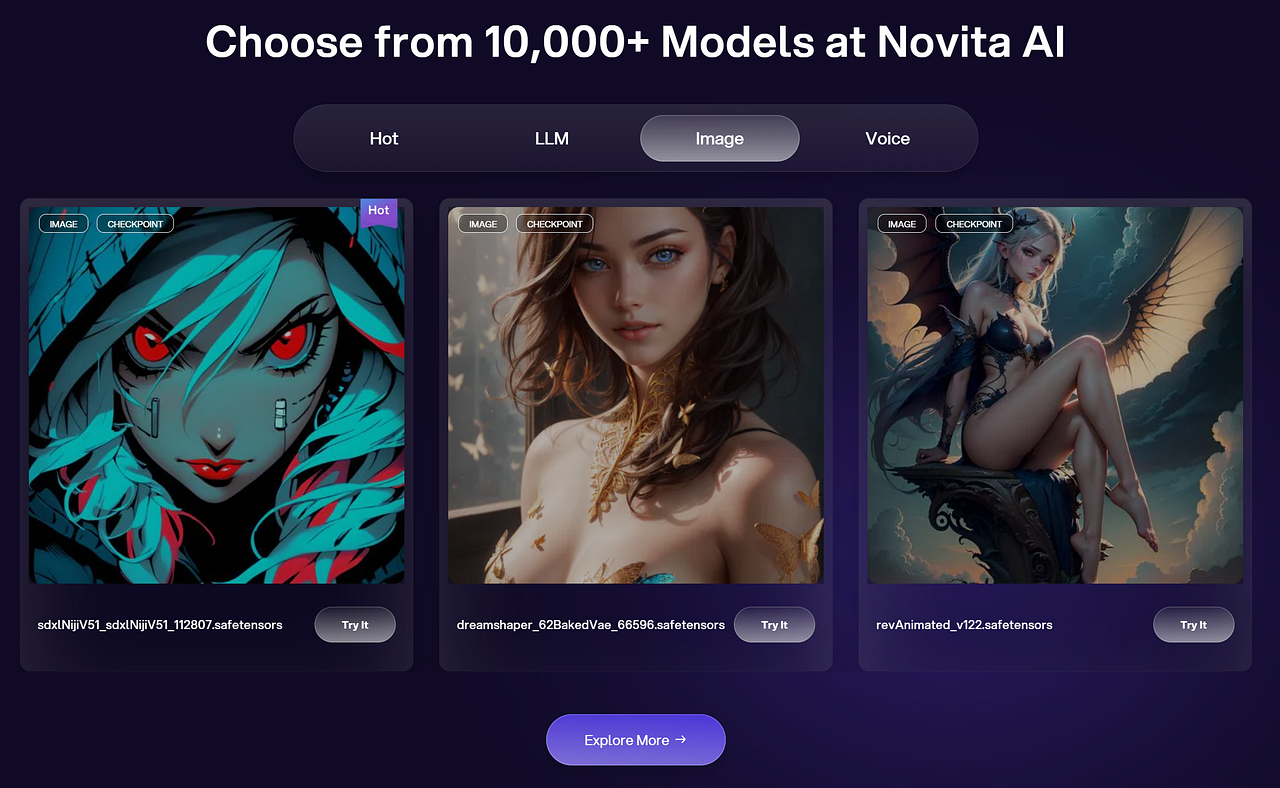

Novita AI provides an easy-to-use platform for developers to train custom LoRA stable diffusion models at scale. Harness the power of LoRA and other stable diffusion models on your unique data.

Why Choose Novita AI?

- Provides a Stable Diffusion API and hundreds of fast, low-cost AI image generation APIs

- More than 10,000 models.

- Fastest generation in just 2s

- Pay-As-You-Go

- Reliable and cost-effective to use

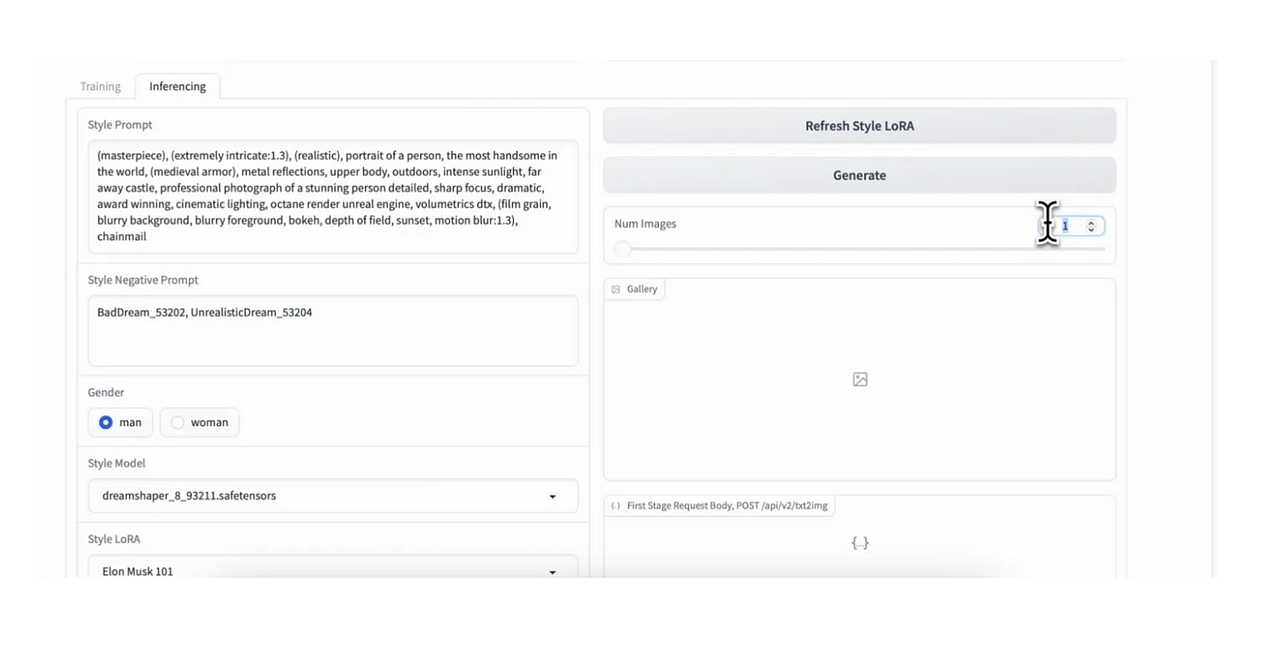

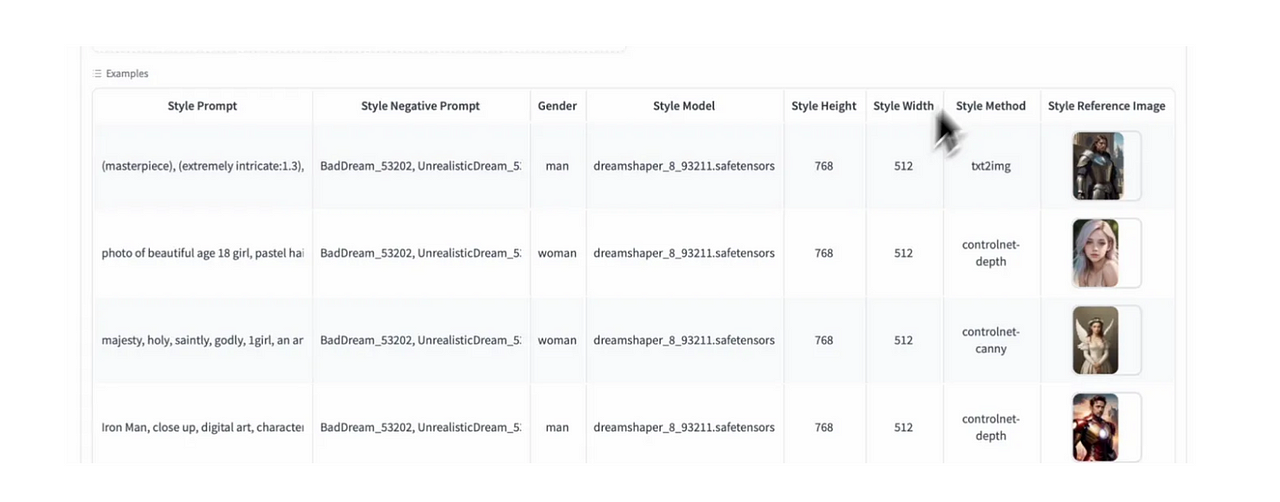

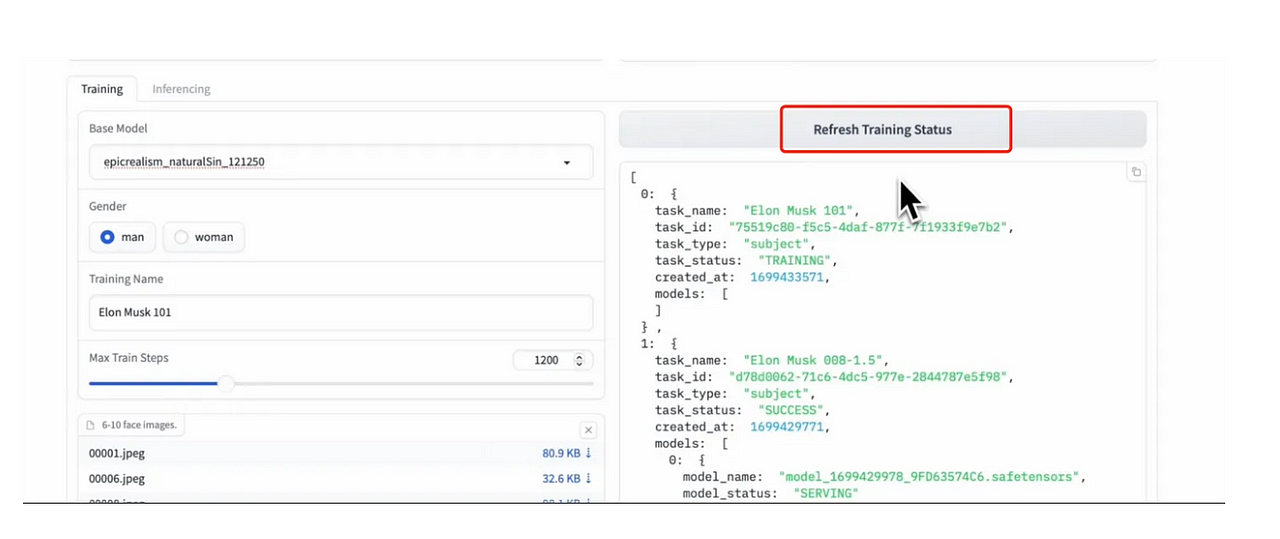

Step-by-step LoRA Training In Stable Diffusion with Novita AI

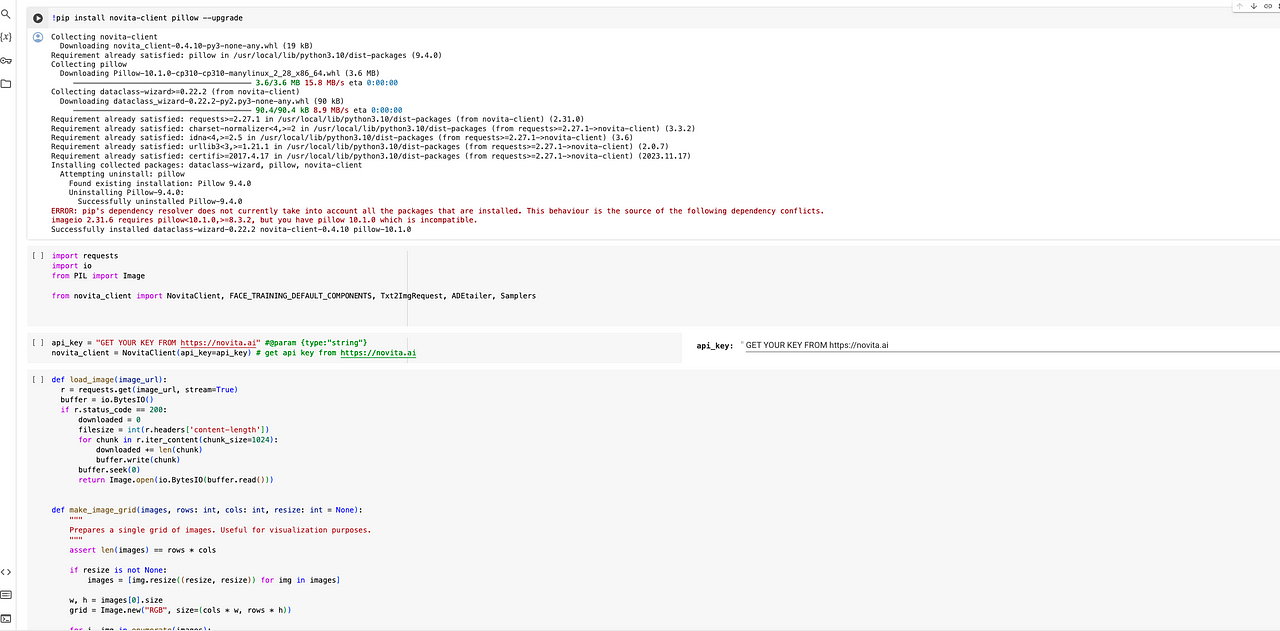

- Step 1: Open the train_subject_in_novita_ai.ipynb notebook, for example in Colab. Have your AUTOMATIC1111 web UI installation ready so you can test the trained model afterwards.

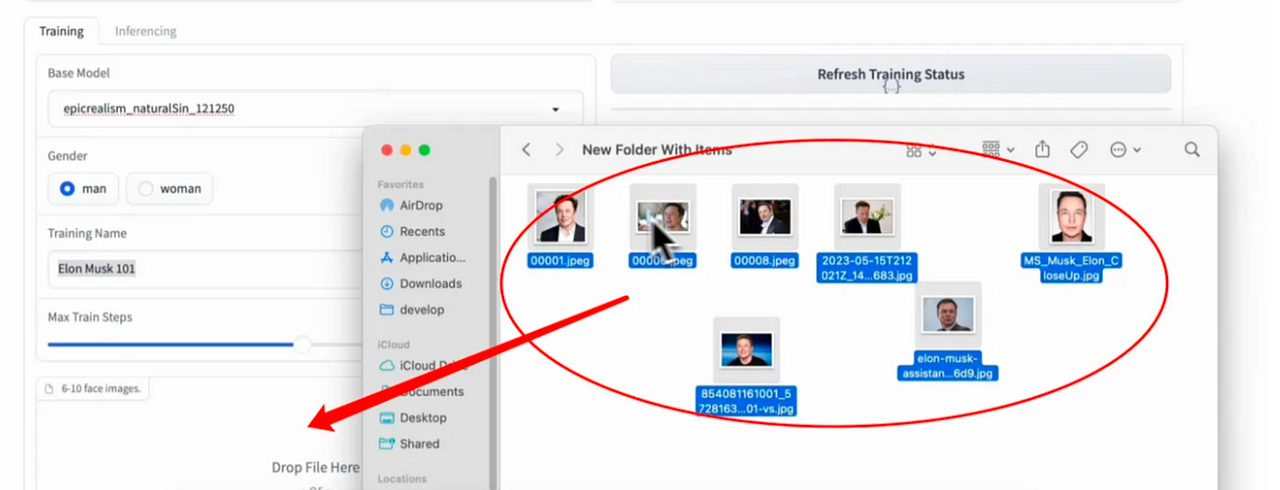

- Step 2: Upload a number of images for model training.

- Step 3: Set the training parameters and start the training. Configure the training images and any style-specific settings to improve results. Negative prompts are entered later, at generation time, to avoid unwanted results.

- Step 4: Select and caption images. Captioning is crucial for quality results in LoRA training. It involves choosing high-quality images and creating a caption file that describes each one.

- Step 5: LoRA training process: Click Refresh Training status to follow the progress of the training task.

- Step 6: Get the training results and generate images with the trained model. Keep experimenting with the settings for the best results.
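When setting training parameters in Step 3, it is useful to estimate how many optimization steps a run will take. The sketch below uses a common rule of thumb (images times repeats, divided by batch size, times epochs). The class and field names are hypothetical and do not correspond to fields in the Novita AI notebook.

```python
import math
from dataclasses import dataclass


@dataclass
class TrainingPlan:
    """Hypothetical container for the numbers that determine training length."""
    num_images: int
    repeats: int      # how many times each image is seen per epoch
    epochs: int
    batch_size: int

    def steps_per_epoch(self) -> int:
        # A partial final batch still counts as one step, hence the ceiling.
        return math.ceil(self.num_images * self.repeats / self.batch_size)

    def total_steps(self) -> int:
        return self.steps_per_epoch() * self.epochs


plan = TrainingPlan(num_images=12, repeats=10, epochs=10, batch_size=2)
print("steps per epoch:", plan.steps_per_epoch())
print("total steps:", plan.total_steps())
```

With 12 images, 10 repeats, 10 epochs, and a batch size of 2, this gives 60 steps per epoch and 600 steps in total. If results look overtrained, reducing epochs or repeats is usually the first adjustment to try.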

Troubleshooting Common LoRA Training Errors

One of the most common LoRA training errors involves bf16 mixed precision. Other problems usually trace back to regularization images, the learning rate, or mistakes in the configuration file. Beyond troubleshooting, training with Stable Diffusion XL and optimizing trained LoRA models are useful advanced techniques.

Dealing with the bf16 Mixed Precision Error

The bf16 mixed precision error usually means that the training configuration requests bfloat16 while the GPU or the installed PyTorch build does not support it. Start by inspecting the configuration file: switching mixed precision (and the save precision, if it is set) from bf16 to fp16 is typically the most direct fix. If training then runs out of memory or becomes unstable, lower the batch size, and save checkpoints regularly so an interrupted run can be resumed. If errors persist after changing the precision, check the dataset for corrupt or unreadable images.
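A quick way to decide which precision setting to use is to ask PyTorch whether the GPU supports bf16. The sketch below is an illustration only: the function names are hypothetical, the returned strings `"bf16"`, `"fp16"`, and `"no"` are assumed to match the values your training tool accepts, and the check falls back to `"no"` when PyTorch is not installed.

```python
def choose_mixed_precision(cuda_available: bool, bf16_supported: bool) -> str:
    """Pick a mixed precision mode from two hardware capability flags."""
    if not cuda_available:
        return "no"  # no GPU: train in full precision
    return "bf16" if bf16_supported else "fp16"


def detect_mixed_precision() -> str:
    """Query PyTorch for GPU capabilities, if PyTorch is installed."""
    try:
        import torch
    except ImportError:
        return "no"
    cuda = torch.cuda.is_available()
    bf16 = cuda and torch.cuda.is_bf16_supported()
    return choose_mixed_precision(cuda, bf16)


print("suggested mixed precision:", detect_mixed_precision())
```

If the script suggests `fp16` while your configuration says `bf16`, that mismatch is the likely cause of the error.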

Advanced LoRA Training Techniques

Training against Stable Diffusion XL as the base model can improve LoRA results. Saving checkpoints during training lets you monitor progress, compare intermediate results in the Stable Diffusion web UI, and resume an interrupted run. A trained LoRA can also be combined with textual inversion embeddings at generation time, and high-quality training images remain essential throughout.

Utilizing Stable Diffusion XL with LoRA

Results with Stable Diffusion XL depend heavily on the settings in the LoRA tab, so configure them carefully. Choose the images folder deliberately, since it determines what the model learns, and set a clear model output name so the trained file is easy to identify. Regularization images are also worth considering. Keep in mind that a LoRA trained on Stable Diffusion XL must be used with a Stable Diffusion XL checkpoint. Following these practices gives the best chance of good results.

Making the Most of Kohya GUI

Kohya GUI provides a graphical front end for LoRA training, which makes the process more approachable. It runs in a web browser and includes tools for captioning images and for configuring the base checkpoint and training parameters. Familiarity with the Stable Diffusion web UI helps you get the most out of it, since trained LoRA files are typically tested there.

Conclusion

Mastering LoRA training involves understanding its unique features and benefits, setting up the right environment, and applying best practices. By leveraging tools provided by Automatic1111 and Novita AI, and addressing common challenges, you can efficiently train and optimize LoRA models. Continuous learning and adaptation will keep you at the forefront of AI image generation, unlocking new possibilities and improving model performance.

FAQs

How to tell if LoRA is overtrained?

If the generated images look very similar to the training image with the identical prompt, it may indicate overtraining.

How many images to train LoRA?

For new users, it is recommended to use 8–15 images for training to better understand the effects of LoRA.

What’s next after mastering LoRA training?

Refine your trained LoRA models by exploring the DreamBooth settings. Check the contents of the LoRA folder after training to confirm the output files are present. You can also improve model quality by writing a more careful caption file for each individual image.

How can I optimize the use of trained LoRA models?

Try the trained LoRA with different checkpoint models and compare the results. Keep the files in the LoRA folder organized so each model is easy to find, and adjust GPU settings to speed up generation after training.

Novita AI is the All-in-one cloud platform that empowers your AI ambitions. Integrated APIs, serverless, GPU Instance — the cost-effective tools you need. Eliminate infrastructure, start free, and make your AI vision a reality.
