Master Streaming with LangChain: Tips for Interacting with LLMs

Key Highlights

- Streaming in LangChain allows real-time, continuous communication with large language models (LLMs)

- Streaming improves user experience by reducing the perceived latency of text output

- Chat completion and chat history are important concepts in streaming with LLMs and chatbots

- Streaming with LangChain involves understanding chains, intermediate steps, and language models

- Streaming challenges can be overcome by addressing issues like latency, batching, and queue management

- Streamlined responses enhance user experience by providing faster and more interactive outputs

- Case studies demonstrate successful implementation of streaming with LangChain in various use cases

Introduction

Streaming enables real-time communication with large language models (LLMs) through frameworks like LangChain, reducing the latency users perceive in text output. It relies on event-driven APIs that deliver output continuously, making interactions more responsive and efficient. By implementing streaming, LangChain applications can enhance the user's experience with faster responses and a more dynamic conversation.

In this blog, we will delve into the concept of streaming in LangChain, explain the associated NLP terms, and discuss how to overcome common challenges. We will also show how streaming improves user experience and describe case studies that illustrate its effectiveness.

Understanding Streaming in LangChain and LLMs

To grasp streaming in LangChain, we must first understand the NLP terms related to it. Streaming means sending data continuously, as soon as it becomes available. With LangChain and LLMs, streaming keeps output flowing from the server to the client while the model is still generating.

LangChain is a framework that uses LLMs (Large Language Models) for tasks like chatbot interactions and text generation. LLMs are powerful models that produce human-like text from prompts, for applications such as chatbots, content creation, and language comprehension.

A chatbot mimics human conversations by employing NLP to comprehend user queries and offer suitable responses. These chatbots, often used in customer support and virtual assistants, can be developed using LLMs.

In LangChain, the Runnable interface is the programmatic interface that lets developers interact with models and chains by sending prompts and receiving responses.

Understanding these NLP terms helps us delve further into streaming in LangChain. Streaming enables real-time responses from the models: output flows continuously from server to client, so the client does not have to send repeated requests, and the perceived latency of text output drops.

What Streaming Means for LLMs

When streaming with LangChain, the response is sent in the form of tokens. Tokens are small units of text, such as words or pieces of words. After the client sends a prompt, the model generates its response one token at a time, and each token can be sent to the client as soon as it is produced.
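To make token-by-token delivery concrete, here is a minimal sketch in plain Python. It does not call LangChain or a real model: `fake_llm_stream` is a hypothetical stand-in that splits a canned reply on spaces, whereas real tokenizers usually produce sub-word pieces.

```python
from typing import Iterator


def fake_llm_stream(prompt: str) -> Iterator[str]:
    """Hypothetical stand-in for a model: yields a canned reply one token at a time."""
    reply = f"You asked about {prompt}. Here is a short answer."
    for token in reply.split(" "):
        yield token + " "


def render(stream: Iterator[str]) -> str:
    """Print each token as soon as it arrives and return the assembled text."""
    pieces = []
    for token in stream:
        print(token, end="", flush=True)  # the user sees partial output immediately
        pieces.append(token)
    print()
    return "".join(pieces).strip()


answer = render(fake_llm_stream("streaming"))
```

The client never waits for the full reply: it consumes the generator and displays each piece as it is yielded.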

Streaming with LangChain also involves asynchronous programming techniques. Asynchronous programming allows tasks to run concurrently, so a server can keep several streams open and serve multiple requests at the same time. This improves the overall efficiency and responsiveness of the streaming process.
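The following sketch illustrates the idea with Python's standard `asyncio` module. The names `fake_llm_astream` and `handle_request` are hypothetical, and the short sleep only simulates waiting on a model; no LangChain call is made.

```python
import asyncio
from typing import AsyncIterator, List, Tuple


async def fake_llm_astream(prompt: str, delay: float = 0.01) -> AsyncIterator[str]:
    """Hypothetical async model: yields one token after each simulated wait."""
    for token in f"Reply to {prompt}".split(" "):
        await asyncio.sleep(delay)  # while one stream waits, others can run
        yield token


async def handle_request(name: str, prompt: str, log: List[Tuple[str, str]]) -> str:
    """Consume one stream, recording which request each token belongs to."""
    tokens = []
    async for token in fake_llm_astream(prompt):
        log.append((name, token))
        tokens.append(token)
    return " ".join(tokens)


async def main():
    log: List[Tuple[str, str]] = []
    # Both requests are served concurrently on a single thread.
    results = await asyncio.gather(
        handle_request("A", "first", log),
        handle_request("B", "second", log),
    )
    return results, log


results, log = asyncio.run(main())
print(log)
```

The log shows tokens from the two requests interleaved, which is the behaviour that lets one server process keep many conversations responsive.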

By leveraging streaming, LangChain applications can provide a more interactive and dynamic user experience. Users receive real-time responses to their queries, making the conversation feel more natural and engaging.

LLMs and Chatbots

LLMs (Large Language Models) play a crucial role in streaming interactions with chatbots. LLMs are powerful language models that can generate human-like text based on given prompts. They can be used to create chatbots that simulate human-like conversations.

A chatbot is an application that uses natural language processing (NLP) techniques to understand user queries and provide appropriate responses. Chatbots built using LLMs are capable of generating contextually relevant and coherent responses, enhancing the user experience.

Streaming with LLMs and chatbots involves continuously sending prompts or queries to the models and receiving real-time responses. A chat model generates each response based on the context of the conversation so far. This makes the interaction more dynamic and allows for a more engaging user experience.

By leveraging LLMs and chatbots in the streaming process, LangChain applications can provide users with fast and accurate responses, creating a more immersive and interactive conversation.

Understanding Streaming in LangChain

LangChain uses chains to organize the streaming process with LLMs. A chain in LangChain is a sequence of intermediate steps that transform the input data and guide the language model towards generating the desired output.

The intermediate steps in the chain act as a bridge between the user’s input and the language model. They preprocess the input data, perform any necessary transformations, and provide the context for the language model to generate meaningful responses.

By using chains, LangChain supports a smooth and efficient streaming experience. The intermediate steps prepare the input data for the language model, which helps it produce faster and more accurate responses.

The language model is the core component of a chain, responsible for generating the final output based on the provided prompts. It leverages advanced NLP techniques and extensive training data to produce high-quality text output.

Understanding chains and the role of the language model in LangChain is essential for effectively implementing streaming and maximizing its benefits.

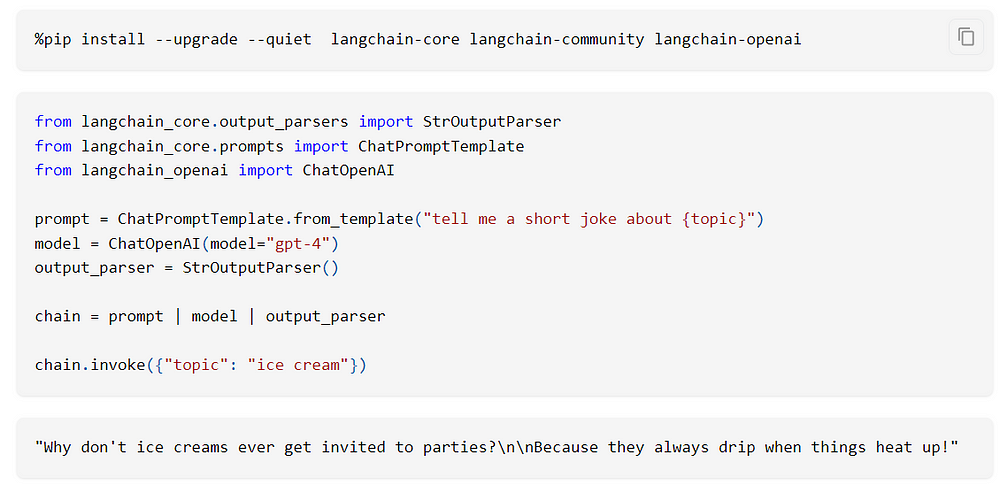

A simple example of a chain is one that tells a joke about a given topic: a prompt step formats the topic into a request, and the model step generates the joke.
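The original code for this example is not available, so the following is only a sketch of how such a joke chain behaves, written in plain Python rather than with LangChain itself. Every function here (`format_prompt`, `fake_model`, `uppercase_parser`, `joke_chain`) is a hypothetical stand-in, and the joke text is canned.

```python
from typing import Iterator


def format_prompt(inputs: dict) -> str:
    """Intermediate step: turn the user's input into a prompt string."""
    return f"Tell me a joke about {inputs['topic']}"


def fake_model(prompt: str) -> Iterator[str]:
    """Hypothetical model step: streams a canned joke token by token."""
    topic = prompt.rsplit(" ", 1)[-1]
    for token in ["Why", " did", " the ", topic, " cross", " the", " road?"]:
        yield token


def uppercase_parser(tokens: Iterator[str]) -> Iterator[str]:
    """Output step: transforms each chunk as it passes through, without buffering."""
    for token in tokens:
        yield token.upper()


def joke_chain(inputs: dict) -> Iterator[str]:
    """The chain: prompt step, then model step, then output step."""
    return uppercase_parser(fake_model(format_prompt(inputs)))


for chunk in joke_chain({"topic": "parrot"}):
    print(chunk, end="", flush=True)
print()

joke = "".join(joke_chain({"topic": "parrot"}))
```

Because every step after the prompt is a generator, chunks flow through the whole chain as they are produced. In LangChain itself, steps like these are typically composed with the `|` operator of the LangChain Expression Language.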

How to Stream an Interaction with Your LLMs

Streaming an interaction with your LLMs in LangChain involves a few simple steps. First, set up the necessary dependencies and libraries for your project. This includes installing LangChain and any additional packages required for your specific use case.

Next, start streaming through LangChain's Runnable interface. This interface allows you to send prompts or queries to your LLMs and receive real-time responses. You can use the streaming capabilities of LangChain to create dynamic and interactive chat experiences with your LLMs.

By following these steps, you can successfully stream interactions with your LLMs and enhance the user experience in your applications.

A common case is streaming the reply of the chat model behind a chatbot, so that each chunk of the reply is displayed as soon as it arrives.
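As a rough illustration, the sketch below streams a reply from a hypothetical chat model and assembles the chunks into a final assistant message. `Message` and `fake_chat_stream` are invented for this example and are not LangChain classes.

```python
from dataclasses import dataclass
from typing import Iterator, List


@dataclass
class Message:
    role: str  # "system", "user" or "assistant"
    content: str


def fake_chat_stream(messages: List[Message]) -> Iterator[str]:
    """Hypothetical chat model: streams a reply to the last user message."""
    last_user = [m for m in messages if m.role == "user"][-1]
    for word in f"You said: {last_user.content}".split(" "):
        yield word + " "


messages = [
    Message("system", "You are a helpful assistant."),
    Message("user", "hello there"),
]

chunks = []
for chunk in fake_chat_stream(messages):
    print(chunk, end="|", flush=True)  # the "|" makes chunk boundaries visible
    chunks.append(chunk)
print()

# Once the stream ends, the chunks form the complete assistant message.
reply = Message("assistant", "".join(chunks).strip())
```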

Stream a Conversation in Chat Completion

Streaming an interaction with the chat-completion service provided by Novita.ai involves sending prompts or queries to the LLM and receiving the responses in real time. This allows for a more interactive and dynamic chat-like experience.

To stream an interaction in chat-completion, follow these steps:

- Set up the necessary dependencies and libraries, including LangChain and any required packages.

- Create a chat history to provide context for the conversation.

- Use the Runnable interface in LangChain to send prompts or queries to the LLMs and receive real-time responses.

- Continuously update the chat history with new user inputs and LLM responses to maintain the conversational flow.

- Stream the interaction to provide a seamless and interactive chat experience.

- Collect the final result or output of the chat-completion process to present to the user.

By streaming the interaction in chat-completion, you can create a more engaging and realistic conversation with your LLMs.
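The steps above can be sketched as a small chat loop. This is an illustration under stated assumptions, not the Novita.ai or LangChain API: `fake_chat_stream` is a hypothetical model, and the history is a plain list of role/content dictionaries.

```python
from typing import Dict, Iterator, List

History = List[Dict[str, str]]


def fake_chat_stream(history: History) -> Iterator[str]:
    """Hypothetical model: the reply depends on the whole history, not just the last message."""
    user_turns = [m["content"] for m in history if m["role"] == "user"]
    reply = f"Message {len(user_turns)} received: {user_turns[-1]}"
    for word in reply.split(" "):
        yield word + " "


def chat_turn(history: History, user_input: str) -> str:
    """Run one turn: record the input, stream the reply, record the reply."""
    history.append({"role": "user", "content": user_input})
    chunks = []
    for chunk in fake_chat_stream(history):
        print(chunk, end="", flush=True)  # stream to the user as chunks arrive
        chunks.append(chunk)
    print()
    reply = "".join(chunks).strip()  # the final result of this turn
    history.append({"role": "assistant", "content": reply})
    return reply


history: History = []
first = chat_turn(history, "hi")
second = chat_turn(history, "tell me more")
```

Appending both the user input and the assembled reply after every turn is what keeps the context available for the next request.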

Streaming Challenges and How to Overcome Them

While streaming with LangChain and LLMs provides numerous benefits, it also brings some challenges. Understanding and addressing them is crucial for a smooth and efficient streaming experience.

One of the main challenges in streaming is latency, which refers to the delay in receiving responses from the LLMs. This delay can negatively impact user experience, especially in real-time conversational applications. To overcome latency challenges, it is important to optimize the streaming process by minimizing unnecessary computations and leveraging efficient data transfer mechanisms.
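One way to reason about latency is to measure the time to the first token separately from the time to the full response. The sketch below does this for a hypothetical `slow_model` whose delay is simulated with `time.sleep`; the numbers it prints depend on the machine and are not benchmarks.

```python
import time
from typing import Iterator, Tuple


def slow_model(n_tokens: int = 5, delay: float = 0.02) -> Iterator[str]:
    """Hypothetical model that needs `delay` seconds to produce each token."""
    for i in range(n_tokens):
        time.sleep(delay)
        yield f"tok{i} "


def measure(stream: Iterator[str]) -> Tuple[float, float, str]:
    """Return (time to first token, total time, full text) for a stream."""
    start = time.perf_counter()
    first = None
    parts = []
    for token in stream:
        if first is None:
            first = time.perf_counter() - start
        parts.append(token)
    total = time.perf_counter() - start
    return first, total, "".join(parts)


ttft, total, text = measure(slow_model())
print(f"first token after {ttft:.3f}s, full response after {total:.3f}s")
```

With streaming, the user starts reading after the first interval instead of waiting for the total, even though the total generation time is unchanged.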

Another challenge in streaming is managing large volumes of data. Batching, which involves grouping multiple requests or queries together, can help optimize the streaming process by reducing the number of individual requests. This improves efficiency and reduces resource consumption.
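A minimal sketch of batching, assuming a hypothetical `fake_model_batch` function that can answer several prompts in one request:

```python
from typing import Iterable, Iterator, List


def batched(items: Iterable[str], size: int) -> Iterator[List[str]]:
    """Group items into lists of at most `size` elements."""
    batch: List[str] = []
    for item in items:
        batch.append(item)
        if len(batch) == size:
            yield batch
            batch = []
    if batch:  # emit the final, possibly smaller, batch
        yield batch


def fake_model_batch(prompts: List[str]) -> List[str]:
    """Hypothetical model call that answers a whole batch in one request."""
    return [f"answer to {p}" for p in prompts]


prompts = [f"q{i}" for i in range(5)]
calls = 0
answers: List[str] = []
for batch in batched(prompts, size=2):
    calls += 1
    answers.extend(fake_model_batch(batch))

print(f"{len(prompts)} prompts answered in {calls} requests")
```

Five prompts are served with three requests instead of five. LangChain runnables also expose a `batch` method for sending several inputs at once.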

Queue management is another important consideration in streaming. As requests and responses are continuously flowing, it is crucial to ensure that the queue is properly managed to maintain a steady flow of data. This involves implementing efficient queueing mechanisms and optimizing the handling of incoming and outgoing data.
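As one possible approach, a bounded queue between the part of the system that produces tokens and the part that delivers them keeps memory use under control: when the queue is full, the producer waits. The sketch uses `asyncio.Queue` from the standard library; the `DONE` sentinel and the function names are choices made for this example.

```python
import asyncio
from typing import List

DONE = None  # sentinel that marks the end of the stream


async def producer(queue: asyncio.Queue, tokens: List[str]) -> None:
    for token in tokens:
        await queue.put(token)  # waits while the queue is full (backpressure)
    await queue.put(DONE)


async def consumer(queue: asyncio.Queue) -> List[str]:
    received = []
    while True:
        token = await queue.get()
        if token is DONE:
            break
        received.append(token)
    return received


async def main() -> str:
    queue: asyncio.Queue = asyncio.Queue(maxsize=2)  # at most 2 tokens buffered
    tokens = ["Streaming ", "keeps ", "the ", "queue ", "short."]
    _, received = await asyncio.gather(producer(queue, tokens), consumer(queue))
    return "".join(received)


text = asyncio.run(main())
print(text)
```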

By addressing these challenges and implementing appropriate strategies, such as optimizing latency, batching requests, and managing the queue effectively, the streaming process can be streamlined and the overall user experience enhanced.

Enhancing User Experience with Streamlined Responses

One of the key advantages of streaming with LangChain and LLMs is the ability to enhance user experience by providing faster and more interactive responses. Streamlined responses contribute to a more engaging and dynamic conversation with the models, making the application feel more responsive and natural.

Streamlined responses enable users to receive real-time feedback and make the conversation feel more interactive. This can greatly enhance the user experience and improve user satisfaction.

Additionally, streaming delivers the response as a continuous flow, so users can start reading while the rest is still being generated instead of waiting for the complete response. This makes the conversation more engaging and the experience more immersive.

To further enhance user experience, an application can define defaults: fallback responses that are used when the model is unable to generate a meaningful response. These defaults help maintain the flow of the conversation and ensure a smooth user experience.
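A fallback response can be sketched as a thin wrapper around a stream. Everything here is hypothetical: `with_default`, `DEFAULT_REPLY`, and the two toy models are invented for illustration, and the wrapper only handles `RuntimeError`.

```python
from typing import Callable, Iterator

DEFAULT_REPLY = "Sorry, I could not generate a response. Please try again."


def with_default(stream_fn: Callable[[str], Iterator[str]], prompt: str) -> Iterator[str]:
    """Yield the model's tokens, or a default reply if nothing was produced."""
    produced = False
    try:
        for token in stream_fn(prompt):
            produced = True
            yield token
    except RuntimeError:
        pass  # a failure after some tokens were sent leaves the partial output as is
    if not produced:
        yield DEFAULT_REPLY


def working_model(prompt: str) -> Iterator[str]:
    """Hypothetical model that streams an echo of the prompt."""
    yield "Echo: "
    yield prompt


def failing_model(prompt: str) -> Iterator[str]:
    """Hypothetical model that is unavailable."""
    raise RuntimeError("model unavailable")


ok = "".join(with_default(working_model, "hi"))
fallback = "".join(with_default(failing_model, "hi"))
print(ok)
print(fallback)
```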

By leveraging the capabilities of streaming, LangChain applications can provide streamlined and interactive responses, enhancing the overall user experience and making the application feel more dynamic and responsive.

Case Studies: Success Stories with Streaming in LangChain

Case studies show LangChain's successful use in streaming and demonstrate its benefits in various scenarios. The stories emphasize LangChain's value and flexibility.

Here are a few examples of LangChain in action:

1. Customer Support Chatbot

- A chatbot for an e-commerce site was built with LangChain.

- The chatbot used streaming to help customers quickly.

- This let the chatbot give fast, correct answers, making customers happier.

2. Content Generation

- A content app for a media company was built with LangChain.

- Users got real-time suggestions through streaming.

- The app used LangChain to produce good, relevant content.

3. Virtual Assistant

- A healthcare organization used LangChain to build a virtual assistant.

- The assistant answered questions on medicine and health instantly.

- Thanks to LangChain, the assistant was accurate and fast, improving user experience.

These cases show how well LangChain works in different areas, leading to better engagement and satisfaction for users.

Conclusion

In essence, understanding how streaming works with LangChain and LLMs can significantly enhance user experience. By streamlining responses and overcoming challenges such as latency, batching, and queue management, you pave the way for success stories like the case studies above. Knowing the prerequisites for LangChain streaming, debugging issues, and streaming data from multiple sources are crucial steps in this process. Effective streaming techniques are key to keeping pace as technology and user interaction evolve.

Frequently Asked Questions

What Are the Prerequisites for Using LangChain Streaming?

To use LangChain streaming, you need the following prerequisites:

- Familiarity with LangChain and its features

- Access to the LangChain streaming beta API

- Understanding of the LangChain Expression Language (LCEL)

- Knowledge of streaming concepts and techniques

Can I Stream Data from Multiple Sources Using LangChain?

Yes, you can stream data from multiple sources using LangChain. LangChain provides default implementations of all the necessary methods to enable streaming from multiple sources. This allows for a seamless and efficient data streaming process.

How Do I Debug Issues in My LangChain Streaming Setup?

If you encounter any issues with your LangChain streaming setup, you can debug them by:

- Checking the callback and handler functions for any errors or issues

- Reviewing the streaming code and ensuring that the necessary configurations and dependencies are in place

- Using debugging tools and techniques to identify and resolve any issues in the streaming setup
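One debugging technique that fits the list above is to wrap the stream so that every chunk is logged and handler errors are reported without interrupting delivery. The sketch below uses only Python's standard `logging` module; `traced` and `buggy_handler` are hypothetical names, not LangChain callbacks.

```python
import logging
from typing import Callable, Iterable, Iterator, List

logging.basicConfig(level=logging.DEBUG, format="%(levelname)s %(message)s")
log = logging.getLogger("stream-debug")


def traced(stream: Iterable[str], on_chunk: Callable[[str], None]) -> Iterator[str]:
    """Pass chunks through unchanged while logging them and any handler errors."""
    for index, chunk in enumerate(stream):
        try:
            on_chunk(chunk)
        except Exception:
            # Log the full traceback but keep the stream alive.
            log.exception("handler failed on chunk %d: %r", index, chunk)
        log.debug("chunk %d: %r", index, chunk)
        yield chunk
    log.debug("stream finished")


seen: List[str] = []


def buggy_handler(chunk: str) -> None:
    """Example handler with a deliberate bug on one specific chunk."""
    if chunk == "b":
        raise ValueError("cannot handle 'b'")
    seen.append(chunk)


output = list(traced(["a", "b", "c"], buggy_handler))
```

The log pinpoints which chunk triggered the handler error, while the consumer still receives the complete stream.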
