Leveraging PyTorch CUDA 12.2 by Renting GPU in GPU Cloud

Rent GPU in GPU Cloud to leverage PyTorch CUDA 12.2. Optimize your performance with advanced GPU capabilities. Explore more on our blog.

Key Highlights

- PyTorch CUDA 12.2 compatibility issues are addressed with solutions like using nightly builds or specific PyTorch versions.

- The importance of matching CUDA versions between PyTorch and the system is emphasized.

- Users highlight the need to know the installed PyTorch and CUDA versions.

- Recommendations include using 'conda' for environment management and installing PyTorch with appropriate CUDA versions.

- Renting GPUs in a GPU cloud is presented as a practical way to run PyTorch with CUDA 12.2.

Introduction

PyTorch is a strong deep learning tool that uses GPUs to speed up its work. CUDA, made by NVIDIA, helps PyTorch make the most of GPU power. If you are using the newest CUDA versions like 12.2 with PyTorch, having access to strong GPUs is important for the best performance. This is why renting GPUs from a cloud environment is very helpful.

In this blog, we explain what PyTorch CUDA 12.2 involves, how PyTorch CUDA 12.2 relates to GPU technology, and how renting GPUs in a GPU cloud can help you use PyTorch CUDA 12.2 more effectively.

Understanding PyTorch CUDA 12.2

PyTorch CUDA 12.2 is a big step forward in deep learning tools. It works well with the newest CUDA toolkit and NVIDIA drivers. This means PyTorch can take full advantage of the features and speed of NVIDIA's modern GPU design. The CUDA toolkit has the software libraries and tools you need to build and run CUDA applications, including deep learning models in PyTorch.

Key Features of PyTorch CUDA 12.2

- Enhanced Performance: Improved computational efficiency for deep learning tasks, leveraging the latest CUDA optimizations.

- Support for New Hardware: Compatibility with the latest NVIDIA GPUs, enabling users to take advantage of new architectures.

- Automatic Mixed Precision (AMP): Enhanced support for mixed precision training, allowing for faster training times and reduced memory usage.

- Streamlined Memory Management: Improved memory allocation and management features to optimize resource utilization.

- Expanded Tensor Operations: New and optimized tensor operations that enhance performance on GPU.

- Better Debugging Tools: Improved debugging capabilities for easier troubleshooting of CUDA-related issues.

- Support for Multi-GPU Training: Improved capabilities for training models across multiple GPUs, facilitating larger model training and faster processing.

- Updated Documentation and Tutorials: Comprehensive resources to help users effectively utilize the latest features and optimizations.
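As an illustration of the mixed precision item in the list above, here is a minimal sketch of one training step using `torch.autocast` with a gradient scaler. The tiny `nn.Linear` model, the random tensors, and the `train_step` helper are placeholders chosen for this example, not part of any particular workflow. The sketch assumes a working PyTorch install and falls back to full precision when no GPU is present.

```python
import torch
from torch import nn

# Use the GPU when one is visible; otherwise run the same code on CPU.
device = "cuda" if torch.cuda.is_available() else "cpu"
use_amp = device == "cuda"
# float16 is the usual autocast dtype on CUDA; bfloat16 is the CPU-friendly choice.
amp_dtype = torch.float16 if use_amp else torch.bfloat16

model = nn.Linear(16, 4).to(device)  # placeholder model
optimizer = torch.optim.SGD(model.parameters(), lr=0.01)
# The scaler guards against float16 gradient underflow; it is a no-op when disabled.
# Newer PyTorch releases may emit a deprecation warning for this constructor.
scaler = torch.cuda.amp.GradScaler(enabled=use_amp)


def train_step(inputs, targets):
    """Run one optimizer step, using mixed precision when a GPU is available."""
    optimizer.zero_grad(set_to_none=True)
    with torch.autocast(device_type=device, dtype=amp_dtype, enabled=use_amp):
        loss = nn.functional.mse_loss(model(inputs), targets)
    scaler.scale(loss).backward()  # scale the loss before backpropagation
    scaler.step(optimizer)         # unscale gradients, then step the optimizer
    scaler.update()                # adjust the scale factor for the next step
    return loss.item()


if __name__ == "__main__":
    x = torch.randn(8, 16, device=device)
    y = torch.randn(8, 4, device=device)
    print(f"device={device} loss={train_step(x, y):.4f}")
```

Whether mixed precision actually speeds up a given model depends on the GPU and the workload, so it is worth measuring both modes before committing to one.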

The Relationship Between PyTorch CUDA 12.2 and GPU Technology

PyTorch CUDA 12.2 is closely connected to GPU technology. As GPU technology improves and CUDA develops, we can see better and faster deep learning computations. PyTorch, as a deep learning tool, gains a lot from these improvements. It leads to quicker training, better performance, and helps with more complex tasks that need a lot of data.

Here are some key aspects of that relationship:

- Performance Optimization:

CUDA is NVIDIA's parallel computing platform and programming model for its GPUs. Using CUDA 12.2 can enhance PyTorch's computational performance on GPUs, especially in deep learning tasks.

- Cloud Service Support:

Many cloud service providers (such as Novita AI GPU Instance, AWS, Google Cloud, Azure, etc.) offer GPU instances that support CUDA, meaning you can run PyTorch on these cloud platforms and leverage the advantages of CUDA 12.2.

- Environment Configuration:

When using PyTorch in the cloud, it is crucial to ensure that the environment has a version of PyTorch compatible with CUDA 12.2. Users need to select the appropriate PyTorch version based on the GPU type and CUDA version provided by the cloud service.

- Resource Flexibility:

Renting GPU cloud resources allows dynamic adjustment of resources as needed, making it suitable for users who require high-performance computing but do not want to invest in physical hardware. Combined with CUDA 12.2, users can effectively utilize cloud computing resources for large-scale training and inference.

- Scalability:

Cloud services typically allow users to quickly scale computing resources, which is very useful for handling large datasets and complex models, while CUDA's efficient computing capabilities can further accelerate training speed.
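For the environment configuration point above, one conservative rule is to pick the newest PyTorch build whose CUDA version does not exceed the CUDA version the NVIDIA driver reports. The sketch below applies that rule. The list of builds (11.8, 12.1, 12.4) is taken from the FAQ in this article and should be treated as an assumption that may be out of date; `pick_build` and `wheel_index_url` are hypothetical helper names.

```python
# CUDA builds named in this article's FAQ. This list is an assumption;
# check the PyTorch install page for the current set.
AVAILABLE_BUILDS = [(11, 8), (12, 1), (12, 4)]


def parse_version(text):
    """Turn a string such as '12.2' into the tuple (12, 2)."""
    major, minor = text.strip().split(".")[:2]
    return int(major), int(minor)


def pick_build(driver_cuda):
    """Return the newest build that is not newer than the driver's CUDA version."""
    limit = parse_version(driver_cuda)
    candidates = [build for build in AVAILABLE_BUILDS if build <= limit]
    if not candidates:
        raise ValueError(f"no listed build is supported by CUDA {driver_cuda}")
    return max(candidates)


def wheel_index_url(build):
    """Build the pip index URL for a CUDA build, e.g. (12, 1) -> .../whl/cu121."""
    return f"https://download.pytorch.org/whl/cu{build[0]}{build[1]}"


if __name__ == "__main__":
    build = pick_build("12.2")  # CUDA version reported by the driver
    print("pip install torch --index-url", wheel_index_url(build))
```

With a driver that reports CUDA 12.2, this rule selects the 12.1 build. That matches the highlight at the top of this article about choosing a specific PyTorch version rather than expecting a build labelled exactly 12.2.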

Renting a GPU in a GPU Cloud

By renting a GPU in a GPU cloud, PyTorch applications can leverage high-performance GPUs without the need for physical hardware. This setup allows for efficient distribution of computational tasks, optimizing resource utilization for machine learning workloads.

Factors to consider

- CUDA support

- GPU availability

- Pricing structures

- Scalability options

- Customer support quality

- Data center locations

- Security measures

Benefits of Renting GPU in GPU Cloud for PyTorch CUDA 12.2

Using PyTorch CUDA 12.2 often needs fast GPUs. Buying these GPUs can be very expensive. Renting GPUs in a cloud setting is a smart choice. It gives researchers and developers many advantages.

Renting GPUs in the cloud is a cheaper and flexible way to get the power needed for tough deep learning work. It removes the need to spend money on hardware upfront. You can also adjust resources easily to fit your project needs.

- Cost-Effectiveness of GPU Cloud Services

One major benefit of using GPU cloud services is how cost-effective they are. Buying high-performance GPUs can be very pricey. This is especially true for single researchers or small teams. With GPU cloud platforms, users don't have to handle big upfront costs. Instead, they only pay for the computing power they use.

Cloud services also come with different pricing plans. This lets users save money by matching their spending to how much they use. Because users can adjust their resources easily, it can be cheaper than buying and maintaining their own hardware.

Here are some reasons why GPU cloud services are cost-effective:

- Pay-as-you-go Pricing: Users pay based on what they use, giving them more control over costs.

- Scalability: Users can easily increase or decrease resources based on their project needs.
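The pay-as-you-go argument comes down to simple arithmetic, sketched below. Every number here is hypothetical and chosen only to make the calculation concrete; real purchase prices and hourly rates vary by provider and GPU model. The comparison also ignores electricity, maintenance, and resale value.

```python
def rental_cost(hours, hourly_rate):
    """Total cost of renting a GPU for a number of hours."""
    return hours * hourly_rate


def break_even_hours(purchase_price, hourly_rate):
    """Hours of rental at which the spend equals the purchase price."""
    if hourly_rate <= 0:
        raise ValueError("hourly_rate must be positive")
    return purchase_price / hourly_rate


if __name__ == "__main__":
    purchase_price = 1600.0  # hypothetical GPU price in USD
    hourly_rate = 0.50       # hypothetical rental rate in USD per hour
    project_hours = 200      # hypothetical GPU hours needed for a project

    print("rental cost for the project:", rental_cost(project_hours, hourly_rate))
    print("break-even hours:", break_even_hours(purchase_price, hourly_rate))
```

Under these made-up figures a project would need thousands of GPU hours before buying the card costs less than renting it, which is the situation pay-as-you-go pricing is meant for.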

- Scalability and Flexibility in GPU Usage

GPU cloud platforms offer cost-effective scalability and flexibility, allowing users to adjust computing resources for their deep learning projects easily. Users can quickly adapt to handle more data or complex models.

Cloud-based GPU instances provide choices in operating systems, software versions, and ready-made setups, streamlining workflows. Users can focus on research or development without the hassle of hardware and software setup.

With the ability to create and remove GPU instances swiftly, users can accelerate their development processes without waiting for physical hardware setup. This speeds up deep learning projects significantly.

- Access to High-Performance GPUs

GPU cloud platforms provide access to high-performance GPUs from brands like NVIDIA. These GPUs excel at handling tasks such as training deep learning models, outperforming CPUs. With these powerful GPUs, users can accelerate their deep learning projects, enabling them to train large language models, develop advanced computer vision tools, and run complex simulations. This accessibility fosters innovation by empowering researchers and developers to explore new ideas.

Additionally, GPU cloud platforms continuously enhance their offerings by incorporating the latest and most efficient GPUs available. This ensures that users always have access to cutting-edge hardware for their deep learning endeavors.

Implementing PyTorch CUDA 12.2 in GPU Cloud Environments

Implementing PyTorch CUDA 12.2 in cloud environments with GPUs requires selecting a suitable cloud provider with the right GPU instances. Setting up the environment involves installing drivers, libraries, and frameworks for PyTorch and CUDA 12.2.
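After setting up the environment, it helps to confirm which PyTorch build is installed and which CUDA version it was built against. The following is a small sketch of such a check; `describe_torch_environment` is a hypothetical helper name, and the function simply reports that PyTorch is missing if it cannot be imported.

```python
import importlib.util


def describe_torch_environment():
    """Report the installed PyTorch build, or note that PyTorch is missing."""
    if importlib.util.find_spec("torch") is None:
        return {"torch_installed": False}

    import torch  # imported lazily so the check also works without PyTorch

    info = {
        "torch_installed": True,
        "torch_version": torch.__version__,
        "built_for_cuda": torch.version.cuda,  # None on CPU-only builds
        "cuda_available": torch.cuda.is_available(),
    }
    if info["cuda_available"]:
        info["gpu_name"] = torch.cuda.get_device_name(0)
    return info


if __name__ == "__main__":
    for key, value in describe_torch_environment().items():
        print(f"{key}: {value}")
```

If `built_for_cuda` shows a version but `cuda_available` is false, the usual suspects are a missing or outdated NVIDIA driver, or an instance without a GPU attached.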

For optimal deep learning performance in the cloud, follow best practices such as optimized data storage and efficient data pipelines to accelerate model training. For large datasets, use data parallelism: distributing training data across multiple GPUs reduces training time, improves resource utilization, and makes cloud spending more cost-effective.
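The core idea of data parallelism is that each GPU works on its own slice of the dataset. The sketch below shows one way to assign sample indices to workers in plain Python. It loosely mirrors the interleaved sharding that distributed samplers perform, but `shard_indices` is a hypothetical helper written for illustration, not a PyTorch API.

```python
import math


def shard_indices(num_samples, world_size, rank):
    """Return the sample indices that worker `rank` out of `world_size` processes."""
    if num_samples <= 0:
        raise ValueError("num_samples must be positive")
    if not 0 <= rank < world_size:
        raise ValueError("rank must be in [0, world_size)")

    # Pad by wrapping around so every worker receives the same number of samples.
    per_worker = math.ceil(num_samples / world_size)
    total = per_worker * world_size
    padded = [i % num_samples for i in range(total)]

    # Interleave: worker 0 takes positions 0, W, 2W, ...; worker 1 takes 1, W+1, ...
    return padded[rank:total:world_size]


if __name__ == "__main__":
    for rank in range(4):
        print(f"worker {rank}:", shard_indices(10, 4, rank))
```

Equal-sized shards keep the workers in step with each other, at the cost of a few samples being seen twice per epoch when the dataset size is not a multiple of the number of GPUs.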

Best Practices for Maximizing Efficiency

There are great ways for developers and researchers to work better with PyTorch CUDA 12.2 in a GPU cloud setting.

One good practice is to use the CUDA profiling tools that NVIDIA provides alongside the CUDA toolkit, such as Nsight Systems and Nsight Compute. These tools help find slow parts of the GPU code, inspect memory use, and tune kernel execution, which improves overall performance.
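For a quick first look from inside Python, PyTorch also ships its own profiler in `torch.profiler`. Note that this is a different tool from the CUDA toolkit profilers. The sketch below profiles a few matrix multiplications and prints the most expensive operators; `profile_matmul` and the matrix size are arbitrary choices for illustration, and the code assumes a working PyTorch install.

```python
import torch
from torch.profiler import ProfilerActivity, profile


def profile_matmul(size=256, steps=5):
    """Profile a few matrix multiplications and return a summary table."""
    device = "cuda" if torch.cuda.is_available() else "cpu"
    activities = [ProfilerActivity.CPU]
    if device == "cuda":
        activities.append(ProfilerActivity.CUDA)  # also record GPU kernels

    a = torch.randn(size, size, device=device)
    b = torch.randn(size, size, device=device)

    with profile(activities=activities) as prof:
        for _ in range(steps):
            torch.matmul(a, b)
        if device == "cuda":
            torch.cuda.synchronize()  # wait for queued GPU work to finish

    # Summarize by operator and keep the five most expensive rows.
    return prof.key_averages().table(sort_by="self_cpu_time_total", row_limit=5)


if __name__ == "__main__":
    print(profile_matmul())
```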

Another important practice focuses on handling data and input pipelines. By loading and preprocessing data well, you can avoid delays and let the GPU work at full speed. Using asynchronous data loading helps, as it gets the next batch ready while the GPU is working on the current one. This can really speed up the training.
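The idea of preparing the next batch while the current one is being processed can be sketched with a background thread and a bounded queue. This is a plain-Python illustration of the pattern, with a hypothetical `prefetch` helper and a simulated loader. In PyTorch, `torch.utils.data.DataLoader` with `num_workers` greater than zero plays this role.

```python
import queue
import threading
import time


def prefetch(batches, buffer_size=2):
    """Yield items from `batches` while a background thread prepares the next ones."""
    buffer = queue.Queue(maxsize=buffer_size)
    done = object()  # sentinel marking the end of the stream

    def producer():
        try:
            for batch in batches:
                buffer.put(batch)  # blocks while the buffer is full
            buffer.put(done)
        except Exception as exc:   # hand loader errors over to the consumer
            buffer.put(exc)

    threading.Thread(target=producer, daemon=True).start()

    while True:
        item = buffer.get()
        if item is done:
            return
        if isinstance(item, Exception):
            raise item
        yield item


def slow_batches(count):
    """Simulated loader: the sleep stands in for disk reads and preprocessing."""
    for i in range(count):
        time.sleep(0.01)
        yield [i] * 4


if __name__ == "__main__":
    for batch in prefetch(slow_batches(5)):
        time.sleep(0.01)  # stands in for the GPU training step
        print(batch)
```

The buffer size bounds how far ahead the loader runs, which keeps memory use predictable when batches are large.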

Running CUDA on Novita AI GPU Instance

As described above, you need an NVIDIA GPU that supports the current CUDA release, along with a recent NVIDIA driver and the CUDA toolkit itself.

Novita AI GPU Instance, a cloud-based solution, stands out as an exemplary service in this domain. This cloud is equipped with high-performance GPUs like NVIDIA A100 SXM and RTX 4090. Novita AI GPU Instance provides access to cutting-edge GPU technology that supports the latest CUDA version, enabling users to leverage the advanced features.

What can you get from renting them in Novita AI GPU Instance?

- Cost efficiency: reduce cloud costs by up to 50%

- Flexible GPU resources that can be accessed on demand

- Instant deployment

- Customizable templates

- Large-capacity storage

- Support for even the most demanding AI models

- Get 100GB free

How to start your journey in Novita AI GPU Instance:

STEP1:

If you are a new subscriber, please register an account first. Then click the GPU Instance button on our webpage.

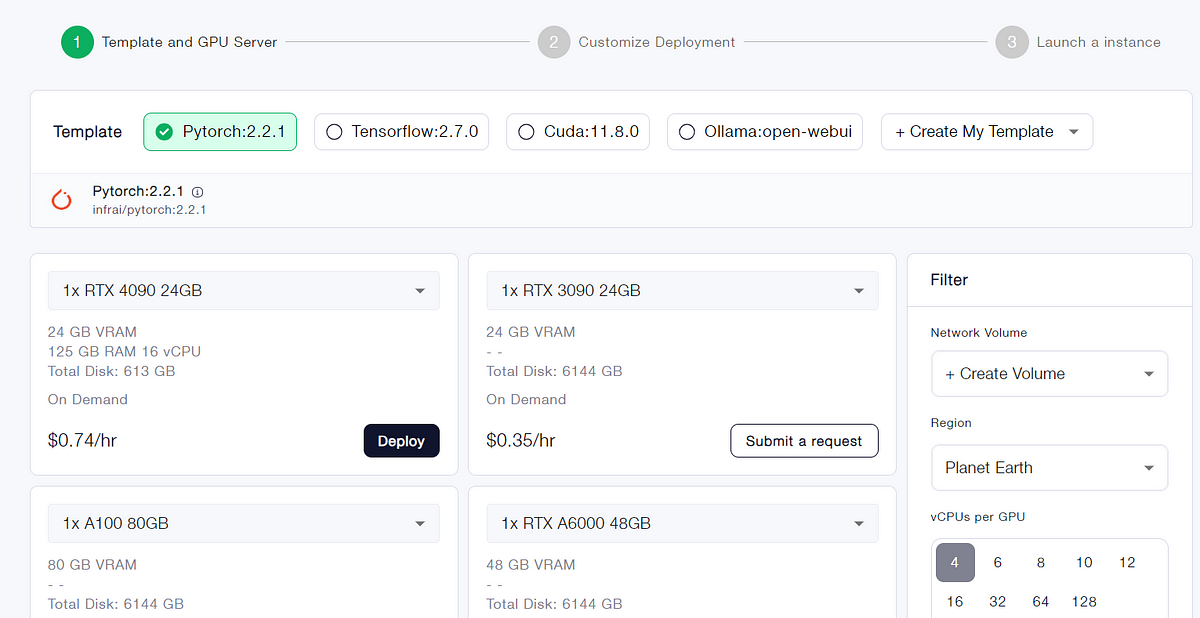

STEP2: Template and GPU Server

You can choose your own template, including PyTorch, TensorFlow, CUDA, and Ollama, according to your specific needs. You can also create your own template data by clicking the final button. The service then provides access to high-performance GPUs such as the NVIDIA RTX 4090 and RTX 3090, each with substantial VRAM and RAM, ensuring that even the most demanding AI models can be trained efficiently. Pick one based on your needs.

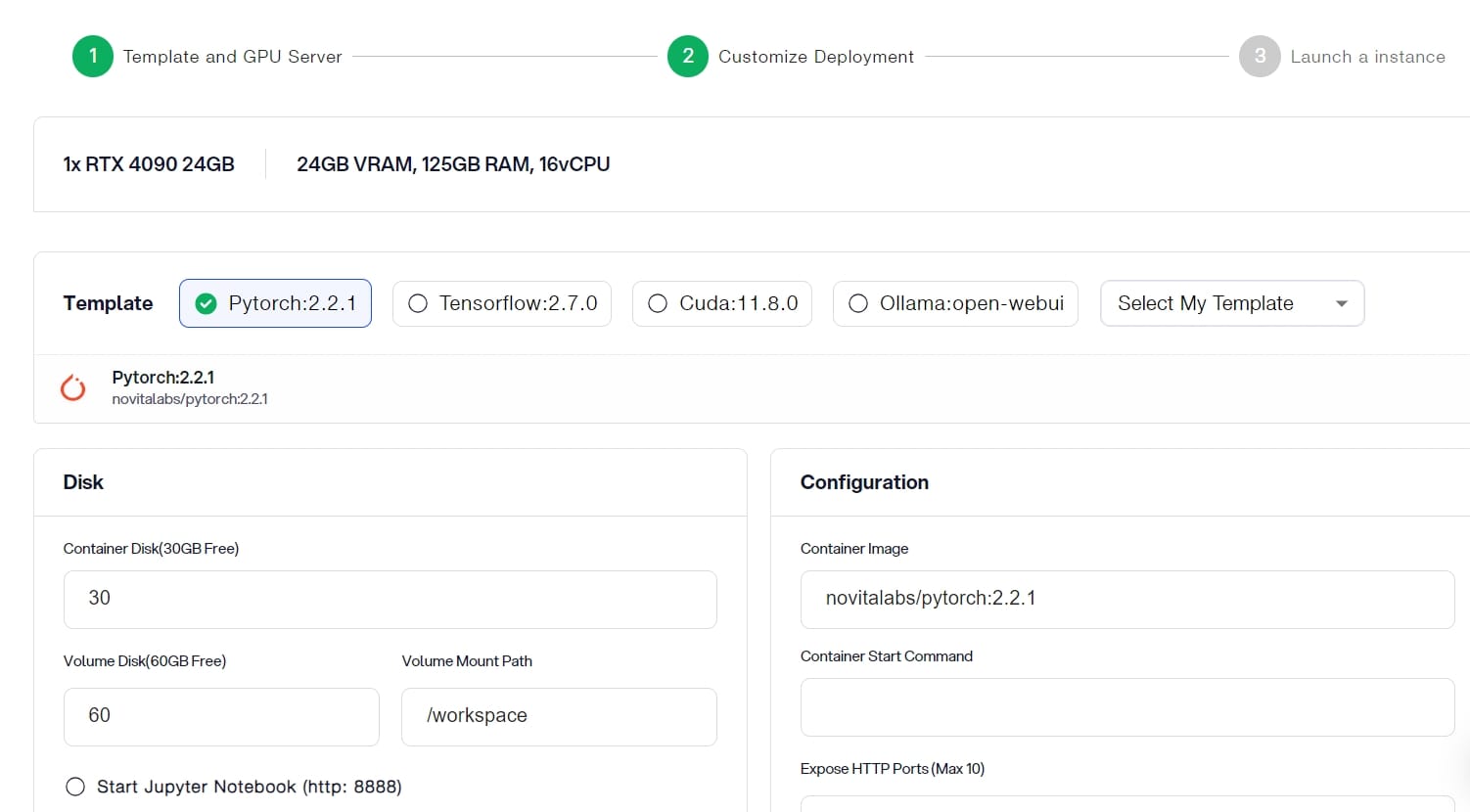

STEP3: Customize Deployment

In this section, you can customize the deployment according to your own needs. The Container Disk includes 30GB free and the Volume Disk includes 60GB free; usage beyond these free limits incurs additional charges.

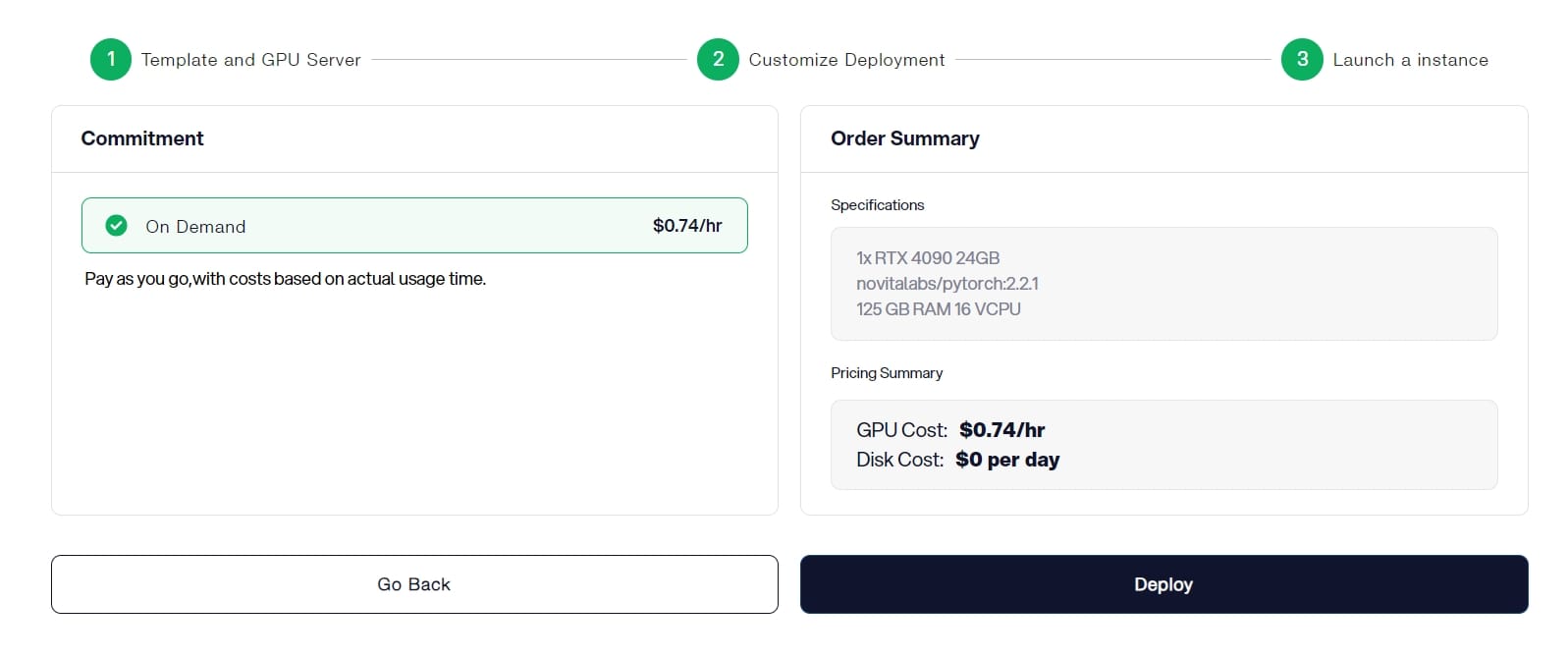

STEP4: Launch an instance

Whether it’s for research, development, or deployment of AI applications, Novita AI GPU Instance equipped with CUDA 12 delivers a powerful and efficient GPU computing experience in the cloud.
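Once an instance is running, a quick check like the following can confirm that the GPU and driver are visible from inside it. This is a sketch that shells out to the standard `nvidia-smi` tool and parses its CSV output; `list_gpus` and `parse_gpu_line` are hypothetical helper names, and the function returns an empty list when `nvidia-smi` is not on the path.

```python
import shutil
import subprocess

QUERY_FIELDS = ["name", "driver_version", "memory.total"]


def parse_gpu_line(line):
    """Parse one CSV line of nvidia-smi output into a dictionary."""
    # Split from the right so a comma inside the GPU name would not break parsing.
    name, driver, memory = [part.strip() for part in line.rsplit(",", 2)]
    return {"name": name, "driver_version": driver, "memory_total": memory}


def list_gpus():
    """Return one dictionary per visible GPU, or an empty list if none are found."""
    if shutil.which("nvidia-smi") is None:
        return []
    result = subprocess.run(
        [
            "nvidia-smi",
            f"--query-gpu={','.join(QUERY_FIELDS)}",
            "--format=csv,noheader",
        ],
        capture_output=True,
        text=True,
        check=False,
    )
    if result.returncode != 0:
        return []
    return [parse_gpu_line(line) for line in result.stdout.splitlines() if line.strip()]


if __name__ == "__main__":
    gpus = list_gpus()
    if not gpus:
        print("No NVIDIA GPU detected")
    for gpu in gpus:
        print(gpu)
```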

Conclusion

In conclusion, using PyTorch CUDA 12.2 and renting GPUs in GPU cloud settings is a smart and affordable way to get more out of GPU technology. The updates in CUDA for PyTorch have raised GPU performance, which makes the combination a strong tool for machine learning projects. By following good practices and setting up PyTorch CUDA 12.2 in these cloud environments, users can work more efficiently and get better results in their machine learning work.

Frequently Asked Questions

What Makes PyTorch CUDA 12.2 Ideal for Deep Learning Projects?

PyTorch with CUDA 12.2 works well with recent NVIDIA drivers and CUDA libraries, which gives strong speed for deep learning projects. It supports the newest NVIDIA GPUs and benefits from the improvements in recent CUDA releases. This makes it a popular option.

What are the advantages of leveraging PyTorch CUDA 12.2 for deep learning projects?

By utilizing PyTorch CUDA 12.2, deep learning projects benefit from enhanced performance due to faster computations on GPUs, improved memory management, and optimized parallel processing capabilities. This leads to accelerated model training and inference, ultimately boosting productivity and efficiency.

Does CUDA 12.4 work with Torch?

Yes. PyTorch binaries are published for several CUDA versions (11.8, 12.1, or 12.4), and you can install any of them as long as your NVIDIA driver is properly installed, recent enough for that CUDA version, and can communicate with your GPU.

Novita AI is the all-in-one cloud platform that empowers your AI ambitions. Integrated APIs, serverless, GPU Instance: the cost-effective tools you need. Eliminate infrastructure, start free, and make your AI vision a reality.
