Introducing OpenHermes 2.5: Comprehending the Power of the Messenger of the Gods

Introduction

Welcome to our exploration of OpenHermes 2.5, a groundbreaking dataset developed by Teknium, and of OpenHermes-2.5-Mistral-7B, the model fine-tuned on it. This blog dives into their features, applications, and advancements.

What Is OpenHermes 2.5?

Basic Background of OpenHermes 2.5

Developed by Teknium, OpenHermes 2.5 extends and improves on the OpenHermes 1 dataset. It is much larger in scale, more diverse, and higher in quality, compiling over 1 million primarily synthetically generated instruction and chat samples.

Key Features of OpenHermes 2.5

- It is a compilation of various open-source datasets and custom-created synthetic datasets.

- The dataset has been integrated with Lilac, a data curation and exploration platform, and is available on Hugging Face through Lilac for exploration, curation, and text-embedding search.

- OpenHermes 2.5 draws on multiple sources, such as Airoboros 2.2, CamelAI Domain Expert Datasets, ChatBot Arena, Collective Cognition, and others, each contributing its own kind of data to the overall mix.

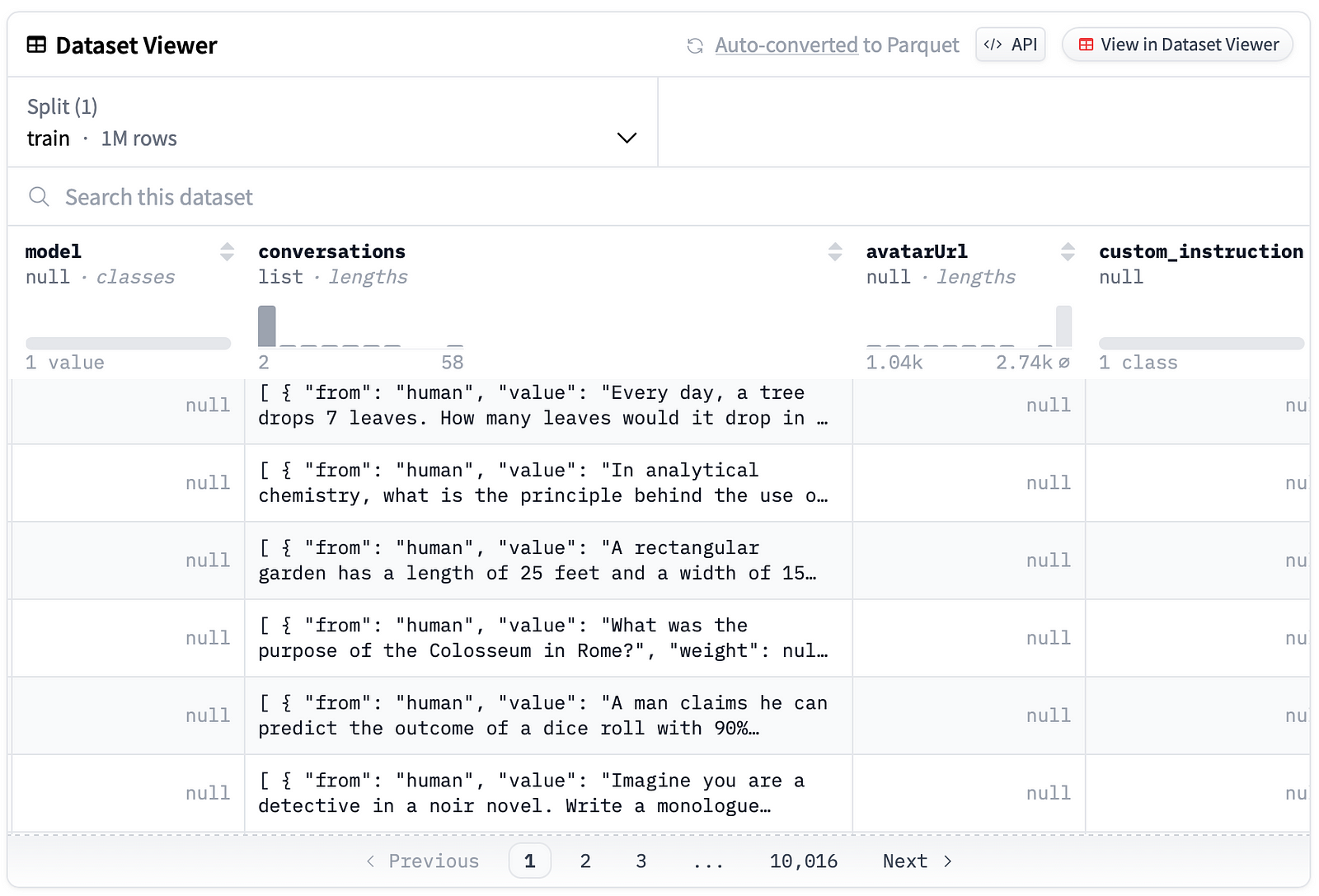

- OpenHermes 2.5 uses the ShareGPT format: a list of dictionaries, one per sample. Each entry has a “conversations” list containing one dictionary per turn, where “from” gives the role (“system”, “human”, or “gpt”) and “value” gives the text of that turn.
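To make that structure concrete, here is a minimal sketch of one ShareGPT-style record as a Python dictionary, plus a small helper that checks the roles and walks the turns. The sample text is invented for illustration, and the names `ALLOWED_ROLES` and `iter_turns` are hypothetical, not part of any library. Real OpenHermes 2.5 entries may also carry extra metadata fields alongside “conversations”.

```python
from typing import Iterator

# Roles used in the ShareGPT "from" field, as described above.
ALLOWED_ROLES = {"system", "human", "gpt"}

# One illustrative record; the text values are invented for this example.
record = {
    "conversations": [
        {"from": "system", "value": "You are a helpful assistant."},
        {"from": "human", "value": "What is 2 + 2?"},
        {"from": "gpt", "value": "2 + 2 equals 4."},
    ]
}


def iter_turns(sample: dict) -> Iterator[tuple[str, str]]:
    """Yield (role, text) pairs, rejecting roles outside ALLOWED_ROLES."""
    for turn in sample["conversations"]:
        role = turn["from"]
        if role not in ALLOWED_ROLES:
            raise ValueError(f"unexpected role: {role!r}")
        yield role, turn["value"]


for role, text in iter_turns(record):
    print(f"{role}: {text}")
```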

Dataset Sources of OpenHermes 2.5

OpenHermes 2.5 incorporates data from a wide range of sources, each contributing to the dataset’s comprehensiveness and utility in training LLMs. Some notable sources include:

- Airoboros 2.2: A dataset by Jon Durbin.

- CamelAI Domain Expert Datasets: Covering Physics, Math, Chemistry, and Biology.

- ChatBot Arena: Conversations from the Chatbot Arena data, limited to GPT-4 responses.

- Collective Cognition: A dataset by Teknium.

- Glaive Code Assistant: A dataset aimed at improving coding skills.

- GPTeacher: A collection of modular datasets for training LLMs.

- SlimOrca 550K: A dataset that contributes to the Orca replication efforts.

What Is OpenHermes-2.5-Mistral-7B?

Explanation

- Continuation of OpenHermes 2: OpenHermes 2.5 Mistral 7B is a state-of-the-art fine-tune of Mistral 7B. It continues the OpenHermes 2 model and was trained on additional code datasets.

- Training on Code Datasets: An estimated 7–14% of the training data (roughly 70,000 to 140,000 of the ~1 million entries) consists of code instructions. This code training improved the model’s performance, including on several non-code benchmarks.

- Training Data: OpenHermes 2.5 was trained on 1 million entries, primarily generated by GPT-4, along with other high-quality data from various open datasets across the AI landscape. This diverse training data likely contributes to the model’s broad capabilities.

- Data Filtering and Format Conversion: Extensive filtering was applied to the public datasets used for training. All data formats were converted to ShareGPT, which was then further transformed by axolotl to use ChatML. This process of standardization and transformation ensures consistency in the training data and may contribute to the model’s improved performance.
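The conversion step can be illustrated with a minimal sketch that renders a ShareGPT conversation as ChatML text, the `<|im_start|>role ... <|im_end|>` layout that OpenHermes-2.5-Mistral-7B uses as its prompt format. This is not axolotl’s actual implementation: the role mapping (human to user, gpt to assistant) follows the usual ChatML convention, and skipping empty system turns is an assumption made for this sketch.

```python
# Map ShareGPT "from" values to ChatML role names.
ROLE_MAP = {"system": "system", "human": "user", "gpt": "assistant"}


def sharegpt_to_chatml(conversations: list[dict], add_generation_prompt: bool = False) -> str:
    """Render ShareGPT turns as ChatML text.

    Empty system turns are skipped (an assumption of this sketch).
    If add_generation_prompt is True, an open assistant header is appended
    so a model can continue from it at inference time.
    """
    parts = []
    for turn in conversations:
        role = ROLE_MAP[turn["from"]]
        text = turn["value"]
        if role == "system" and not text.strip():
            continue
        parts.append(f"<|im_start|>{role}\n{text}<|im_end|>\n")
    if add_generation_prompt:
        parts.append("<|im_start|>assistant\n")
    return "".join(parts)


example = [
    {"from": "system", "value": ""},
    {"from": "human", "value": "Hello, who are you?"},
]
print(sharegpt_to_chatml(example, add_generation_prompt=True))
```

Rendering every sample through one function like this is what makes the training data uniform: the model always sees the same special tokens and role names, regardless of which source dataset a sample came from.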

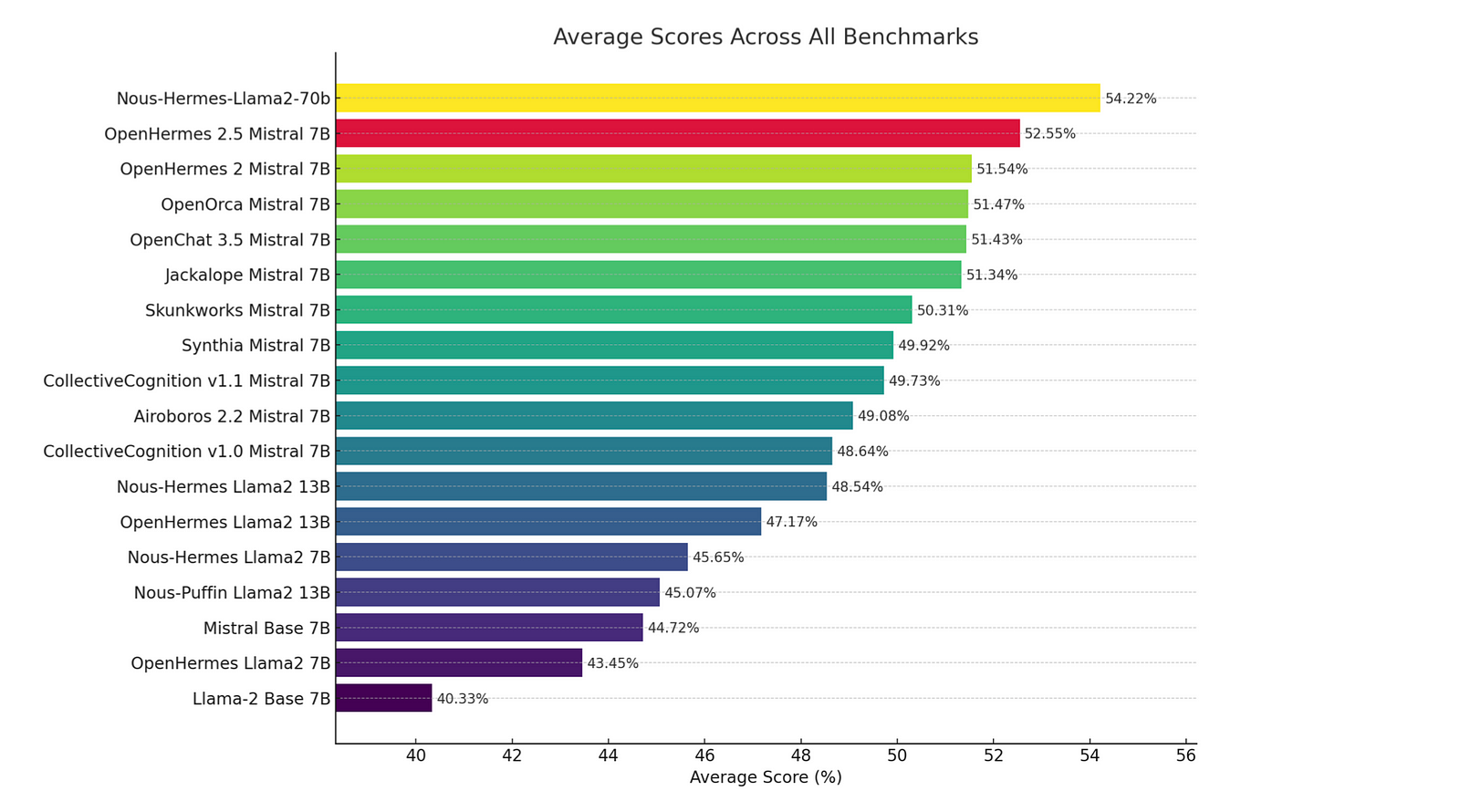

Benchmark Performances of OpenHermes-2.5-Mistral-7B

- TruthfulQA, AGIEval, and GPT4All Suite: The model has seen a boost in performance on these non-code benchmarks, suggesting that training on code datasets has generalized well to other areas.

- BigBench: The model’s BigBench score decreased, but the net gain across the other benchmarks is still significant, so overall capability improved.

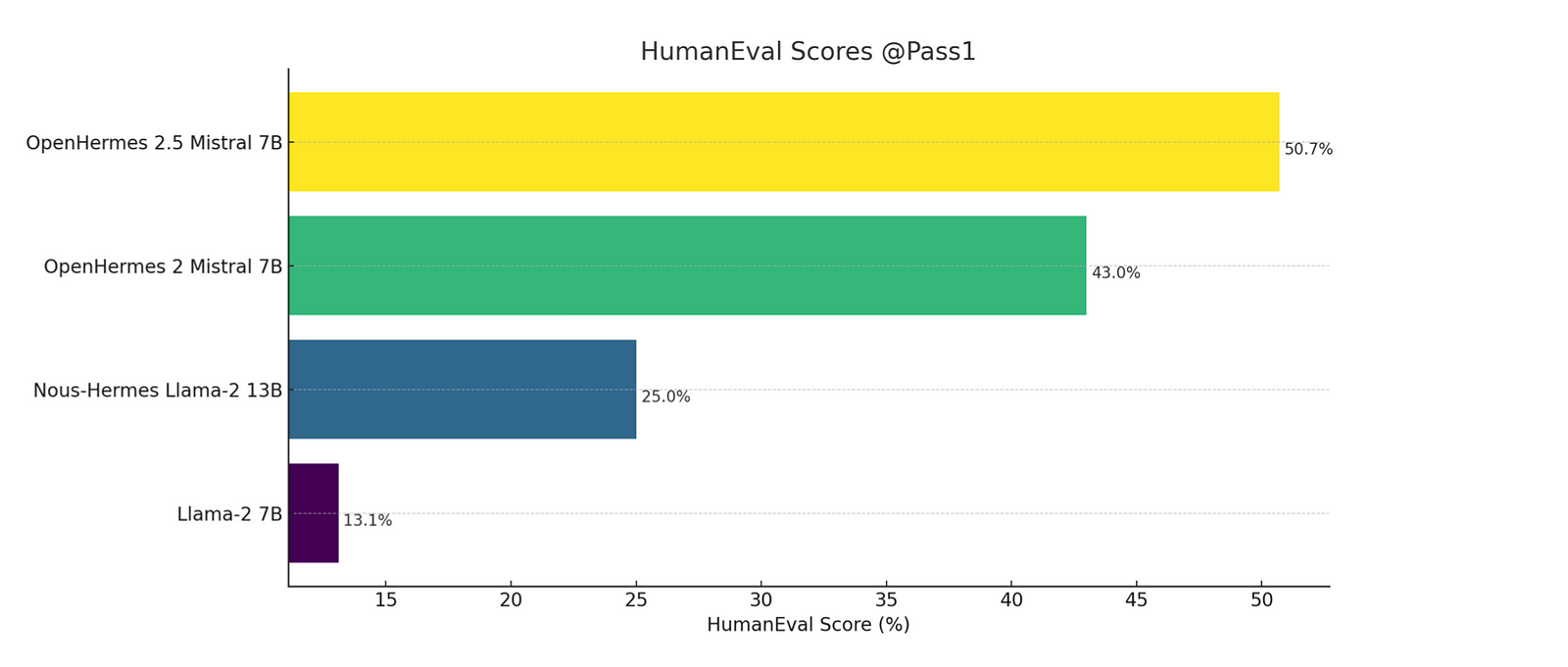

- HumanEval Score Improvement: On HumanEval, which measures whether Python functions generated from docstrings pass their unit tests, the model’s pass@1 score rose from 43% with OpenHermes 2 to 50.7% with OpenHermes 2.5. This gain of 7.7 percentage points reflects a substantially stronger ability to write functionally correct code.
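The “pass@1” figure comes from the pass@k metric introduced with HumanEval: for each problem, draw n samples, count the c that pass the unit tests, estimate the chance that at least one of k samples passes as 1 − C(n−c, k) / C(n, k), and average over problems. The sketch below implements that formula; the per-problem counts in the usage line are invented and are not the real evaluation data for either model.

```python
from math import comb


def pass_at_k(n: int, c: int, k: int) -> float:
    """Unbiased pass@k estimate for one problem with n samples, c of them correct."""
    if n - c < k:
        # Every size-k subset must contain at least one correct sample.
        return 1.0
    return 1.0 - comb(n - c, k) / comb(n, k)


def mean_pass_at_k(results: list[tuple[int, int]], k: int) -> float:
    """Average pass@k over (n, c) pairs, one pair per benchmark problem."""
    return sum(pass_at_k(n, c, k) for n, c in results) / len(results)


# Invented example: 4 problems, one sample each, 2 solved.
print(mean_pass_at_k([(1, 1), (1, 0), (1, 1), (1, 0)], k=1))
```

With a single sample per problem (n = 1), pass@1 reduces to the fraction of problems solved, so moving from 43% to 50.7% means solving 7.7 percentage points more of the benchmark’s problems on the first attempt.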

What Are the Practical Applications of OpenHermes-2.5-Mistral-7B In the Industry?

As developers, you’re at the forefront of innovation, constantly seeking tools that can enhance productivity and create engaging user experiences. OpenHermes 2.5 Mistral 7B, with its advanced capabilities, opens up a realm of possibilities across various domains. Let’s explore some of the practical applications that can benefit from this state-of-the-art model.

AI Companion Chat

Enhanced User Interaction: OpenHermes 2.5 Mistral 7B’s proficiency in natural language understanding and generation makes it an ideal candidate for developing AI companion chats. Whether it’s for customer service bots, virtual assistants, or interactive characters in games, this model can provide more nuanced and human-like conversations.

Personalization: By leveraging the model’s ability to understand context and generate relevant responses, developers can create personalized chat experiences that adapt to individual user preferences and needs.

Multilingual Support: With further training and adaptation, OpenHermes 2.5 Mistral 7B can be extended to support multiple languages, opening up global markets for AI companion applications.
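A common way to give a companion chat context and personality is to resend a persona (system) message plus recent conversation turns with every request. The sketch below keeps a fixed-size window of OpenAI-style `{"role", "content"}` messages; the class name, persona text, and window size are hypothetical choices, and a production app might trim by token count instead of message count so the history fits the model’s context window.

```python
from collections import deque


class CompanionChat:
    """Hypothetical helper: a fixed persona plus a sliding window of recent turns."""

    def __init__(self, persona: str, max_messages: int = 8):
        self.persona = persona
        # deque(maxlen=...) silently drops the oldest message once the window is full.
        self.history: deque = deque(maxlen=max_messages)

    def add(self, role: str, content: str) -> None:
        """Record one user or assistant message."""
        self.history.append({"role": role, "content": content})

    def messages(self) -> list[dict]:
        """Messages for the next request: persona first, then the recent history."""
        return [{"role": "system", "content": self.persona}, *self.history]


chat = CompanionChat("You are Luna, a cheerful companion who remembers the user's hobbies.", max_messages=4)
chat.add("user", "I started learning guitar this week.")
chat.add("assistant", "That's exciting! What song are you practicing first?")
print(chat.messages())
```

The list returned by `messages()` is shaped for an OpenAI-compatible chat completions endpoint, assuming your provider exposes one for this model; keeping the persona outside the window means it is never evicted, even in long conversations.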

AI Novel Generation

Creative Writing: The model’s strength in generating human-like text makes it a powerful tool for AI novel generation. Developers can utilize this capability to create unique storylines, characters, and dialogues for books, scripts, or interactive narratives.

Automated Content Creation: For content creators and digital marketers, OpenHermes 2.5 Mistral 7B can automate the generation of engaging blog posts, articles, or social media content, saving time and resources while maintaining a high level of quality.

Interactive Storytelling: In the gaming industry, this model can be the backbone of interactive storytelling experiences where the narrative adapts in real-time to the player’s choices, creating a deeply immersive environment.

AI Summarization

Efficient Information Processing: OpenHermes 2.5 Mistral 7B’s summarization capabilities are invaluable for processing large volumes of text and extracting key points. This can be applied to news aggregation, research, or business intelligence to provide concise summaries of lengthy documents.

Data Analysis: In data analysis and reporting, this model can turn the findings of complex analyses into concise, easily digestible summaries, aiding decision-making.

Educational Tools: For educational applications, AI-powered summarization can help students and researchers by providing summaries of academic papers, books, or lecture notes, facilitating faster and more effective learning.
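Documents longer than the model’s context window are commonly summarized in two passes: summarize each chunk, then summarize the combined chunk summaries (often called map-reduce summarization). The sketch below covers only the splitting and per-chunk prompt step. It counts words rather than model tokens, and the chunk size, overlap, and prompt wording are illustrative assumptions, not tuned values.

```python
def chunk_words(text: str, chunk_size: int = 300, overlap: int = 50) -> list[str]:
    """Split text into chunks of at most chunk_size words, overlapping by `overlap` words."""
    if not 0 <= overlap < chunk_size:
        raise ValueError("overlap must be >= 0 and smaller than chunk_size")
    words = text.split()
    step = chunk_size - overlap
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + chunk_size]))
        if start + chunk_size >= len(words):
            break  # this chunk already reaches the end of the text
    return chunks


def summary_prompts(text: str, chunk_size: int = 300, overlap: int = 50) -> list[str]:
    """Build one summarization prompt per chunk (the 'map' step)."""
    return [
        f"Summarize the following passage in three bullet points:\n\n{chunk}"
        for chunk in chunk_words(text, chunk_size, overlap)
    ]


print(chunk_words("a b c d e f g", chunk_size=3, overlap=1))
```

Each prompt would then be sent to the model, and the per-chunk summaries joined into one final summarization prompt. The overlap keeps sentences that straddle a chunk boundary visible in both neighboring chunks.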

As developers, you’ll likely want to know how to integrate OpenHermes 2.5 Mistral 7B into your projects. The following section describes two ways to access it.

How to Get Access to OpenHermes-2.5-Mistral-7B?

How to Download and Use This Model in text-generation-webui?

- Update to the Latest Version: Make sure that you are using the most current version of the text-generation-webui.

- Use One-Click Installers: It’s highly recommended to use the one-click installers for text-generation-webui unless you are confident in performing a manual installation.

- Navigate to the Model Tab: Click on the “Model” tab within the interface.

- Enter Model Details: In the section for downloading a custom model or LoRA, type in `TheBloke/OpenHermes-2.5-Mistral-7B-GPTQ`. To download from a specific branch, append the branch name after a colon, for example `TheBloke/OpenHermes-2.5-Mistral-7B-GPTQ:gptq-4bit-32g-actorder_True`; the available branches are listed on the model’s Hugging Face page.

- Initiate the Download: Click the “Download” button to start the model download process. Once it’s complete, the status will change to “Done.”

- Refresh the Model List: Click the refresh icon in the top left to update the list of available models.

- Select the Downloaded Model: From the Model dropdown menu, choose the model you just downloaded: `OpenHermes-2.5-Mistral-7B-GPTQ`.

- Load the Model: The model will load automatically and will be ready for use.

- Custom Settings (If Needed): If you have any custom settings to apply, configure them and then click “Save settings for this model,” followed by “Reload the Model” in the top right corner.

- Note on GPTQ Parameters: You no longer need to set GPTQ parameters manually. They are now configured automatically from the `quantize_config.json` file.

- Start Text Generation: Once everything is set up, click the “Text Generation” tab, enter your prompt, and begin generating text!

All the required files are available in TheBloke’s repository on Hugging Face. By following these steps, you can download and use the OpenHermes-2.5-Mistral-7B-GPTQ model within text-generation-webui.
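If you prefer to fetch the files outside the web UI, the sketch below uses the `huggingface_hub` library’s `snapshot_download` function. It assumes the web UI’s `repo:branch` input maps onto a repository ID plus a git revision, and that `quantize_config.json` uses AutoGPTQ-style keys (`bits`, `group_size`, `desc_act`), read with `.get()` in case any are missing. The helper names and local directory are hypothetical; this is an alternative route, not a description of what the web UI does internally.

```python
import json
from pathlib import Path
from typing import Optional


def parse_model_spec(spec: str) -> tuple[str, Optional[str]]:
    """Split 'owner/repo:branch' into (repo_id, revision); revision is None without a colon."""
    repo_id, sep, branch = spec.partition(":")
    return repo_id, (branch if sep else None)


def describe_quantize_config(path: Path) -> str:
    """Summarize a few common fields of a GPTQ quantize_config.json (key names assumed)."""
    cfg = json.loads(path.read_text())
    return (
        f"bits={cfg.get('bits')}, group_size={cfg.get('group_size')}, "
        f"desc_act={cfg.get('desc_act')}"
    )


def download(spec: str, local_dir: str) -> Path:
    """Download one branch of a Hugging Face repo (requires `pip install huggingface_hub`)."""
    # Imported lazily so the helpers above work without the package installed.
    from huggingface_hub import snapshot_download

    repo_id, revision = parse_model_spec(spec)
    return Path(snapshot_download(repo_id=repo_id, revision=revision, local_dir=local_dir))


# Example usage (needs network access; the local directory is a hypothetical choice):
# target = download(
#     "TheBloke/OpenHermes-2.5-Mistral-7B-GPTQ:gptq-4bit-32g-actorder_True",
#     "models/OpenHermes-2.5-Mistral-7B-GPTQ",
# )
# print(describe_quantize_config(target / "quantize_config.json"))
```

The branch name in the example appears to encode its quantization settings (4 bits, group size 32, act-order enabled), so you would expect its `quantize_config.json` to report matching values; checking the file is a quick way to confirm which variant you downloaded.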

How to Use OpenHermes-2.5-Mistral-7B on Novita AI?

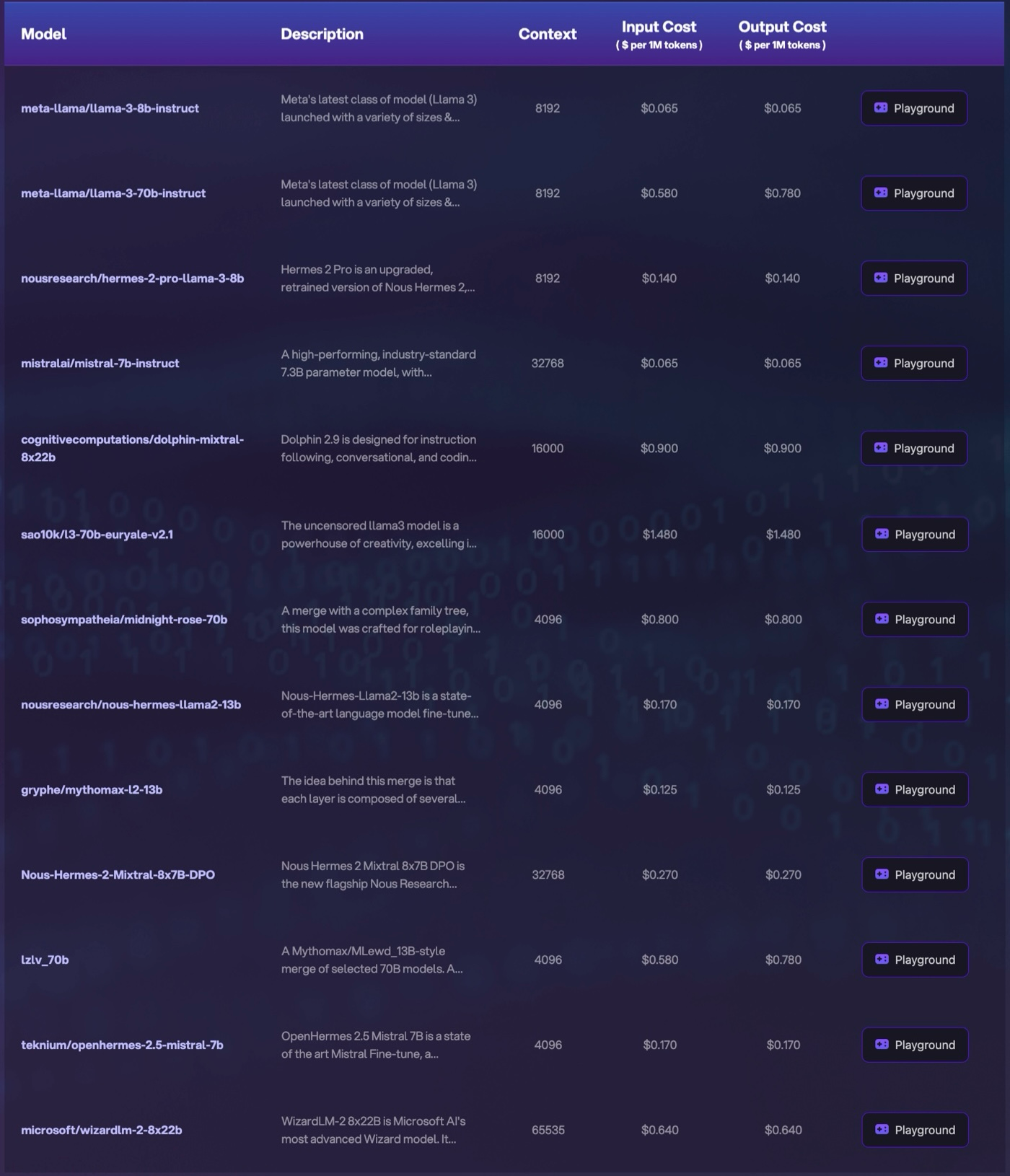

If you find it troublesome to download and run OpenHermes-2.5-Mistral-7B in text-generation-webui, you can access it through the Novita AI LLM API instead, which serves OpenHermes-2.5-Mistral-7B alongside other recent, powerful models such as Llama 3 8B Instruct, Llama 3 70B Instruct, and MythoMax-L2-13B.

With just a few lines of code, you can make an API call and leverage the power of OpenHermes-2.5-Mistral-7B and other models:

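```python
from openai import OpenAI

client = OpenAI(
    base_url="https://api.novita.ai/v3/openai",
    # Get the Novita AI API Key by referring: https://novita.ai/get-started/Quick_Start.html#_3-create-an-api-key
    api_key="<YOUR Novita AI API Key>",
)

model = "teknium/openhermes-2.5-mistral-7b"
stream = True  # or False

completion_res = client.completions.create(
    model=model,
    prompt="A chat between a curious user and an artificial intelligence assistant",
    stream=stream,
    max_tokens=512,
)

if stream:
    # A streamed response arrives as chunks; print each text fragment as it arrives.
    for chunk in completion_res:
        if chunk.choices:
            print(chunk.choices[0].text or "", end="", flush=True)
    print()
else:
    # A non-streamed response contains the full completion at once.
    print(completion_res.choices[0].text)
```

Conclusion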

In conclusion, OpenHermes 2.5 is a pivotal advancement in open AI development, combining extensive data curation with state-of-the-art model training. From its creation by Teknium to its integration with Lilac and availability on Hugging Face, the dataset marks a clear step forward in natural language processing.

Throughout this blog, we’ve explored the multifaceted applications of OpenHermes 2.5. Whether enhancing user interactions through AI companion chats, fostering creativity in AI novel generation, or enabling efficient data summarization, this model empowers developers to innovate across diverse domains.

Novita AI is the all-in-one cloud platform that empowers your AI ambitions. With seamlessly integrated APIs, serverless computing, and GPU acceleration, we provide the cost-effective tools you need to rapidly build and scale your AI-driven business. Eliminate infrastructure headaches and get started for free — Novita AI makes your AI dreams a reality.